DeepClaude

Unleash Next-Level AI! 🚀 💻 Code Generation: DeepSeek r1 + Claude 3.7 Sonnet - Unparalleled Performance! 📝 Content Creation: DeepSeek r1 + Gemini 2.0 - Superior Quality! 🔌 OpenAI-Compatible. 🌊 Streaming & Non-Streaming Support. ✨ Experience the Future of AI – Today! Click to Try Now! ✨

Stars: 2296

DeepClaude is an open-source project inspired by the DeepSeek R1 model, aiming to provide the best results in various tasks by combining different models. It supports OpenAI-compatible input and output formats, integrates with DeepSeek and Claude APIs, and offers special support for other OpenAI-compatible models. Users can run the project locally or deploy it on a server to access a powerful language model service. The project also provides guidance on obtaining necessary APIs and running the project, including using Docker for deployment.

README:

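Special notes:

In the latest version 1.0, we have added a configuration UI, which makes deployment simpler.

1. Programming: the DeepSeek r1 + Claude 3.7 Sonnet combination is recommended for the best results;

2. Content creation: the DeepSeek r1 + Gemini 2.0 Flash or Gemini 2.0 Pro combination is recommended for the best results, and it can be used completely free of charge.

If you are not comfortable with deployment and just want to use the strongest model combinations, you can buy a pay-as-you-go API directly on Erlich's personal website: https://erlich.fun/deepclaude-pricing

You can also contact Erlich directly (WeChat ID: erlichliu1)

Sponsor: Wenxiaobai (问小白) https://www.wenxiaobai.com (smooth access to the full-strength DeepSeek r1, with web search, file and image upload, AI-generated PPT, and more)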

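Changelog:

2025-03-10.1: Changed max_token for the deepseek r1 reasoning part to 5 to save output tokens; added the reasoning_content data field to non-streaming responses; made non-streaming output the default so that tools such as dify can use it easily.

2025-03-05.1: Changed the docker compose configuration to bind the container's configuration file to the local machine through a volume, so that the configuration is not lost when the container restarts. Also enabled automatic restart on failure.

2025-03-02.1: Released version 1.0, with a graphical configuration UI, no more .env configuration, and preconfigured templates, making configuration easier.

2025-02-25.1: Added system message support for Claude 3.5 Sonnet.

2025-02-23.1: Refactored the code to support OpenAI-compatible models; deepgeminiflash and deepgeminipro are easier to configure (please read the README and the notes in .env.example carefully).

2025-02-21.1: Added detailed data-structure safety checks for the Claude stage.

2025-02-16.1: The Claude side now supports a custom model name taken from the request body. (If you use a relay such as oneapi, you can now complete the second half with any model, such as Gemini, by setting an environment variable or by naming the model in the API request. Next, the code will be refactored to support different reasoning-model combinations more cleanly.)

2025-02-08.2: Added support for non-streaming requests and for an OpenAI-compatible models endpoint response.

2025-02-08.1: Added Github Actions, with automatic fork syncing, automatic builds of the latest Docker image, and docker-compose deployment.

2025-02-07.2: Fixed a bug where the Claude temperature parameter could go out of range and cause requests to fail.

2025-02-07.1: Added support for Claude temperature and other parameters; added more detailed notes to .env.example.

2025-02-06.1: Fixed a bug where reasoning content could not be obtained from models without native reasoning support.

2025-02-05.1: Added an environment variable to configure whether the model natively supports the reasoning field; full-strength versions usually do.

2025-02-04.2: Added cross-origin (CORS) configuration, which can be set in .env.

2025-02-04.1: Added support for relay providers such as Openrouter and OneAPI as the supplier for the Claude part.

2025-02-03.3: Added support for OpenRouter as the Claude provider; see the notes in .env.example.

2025-02-03.2: Since deepseek r1 has, to some extent, established a convention, we follow the same reasoning-annotation convention, to better fit software that supports it well, such as Cherry Studio.

2025-02-03.1: Siliconflow changed the response structure of DeepSeek R1; the new structure is now supported.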

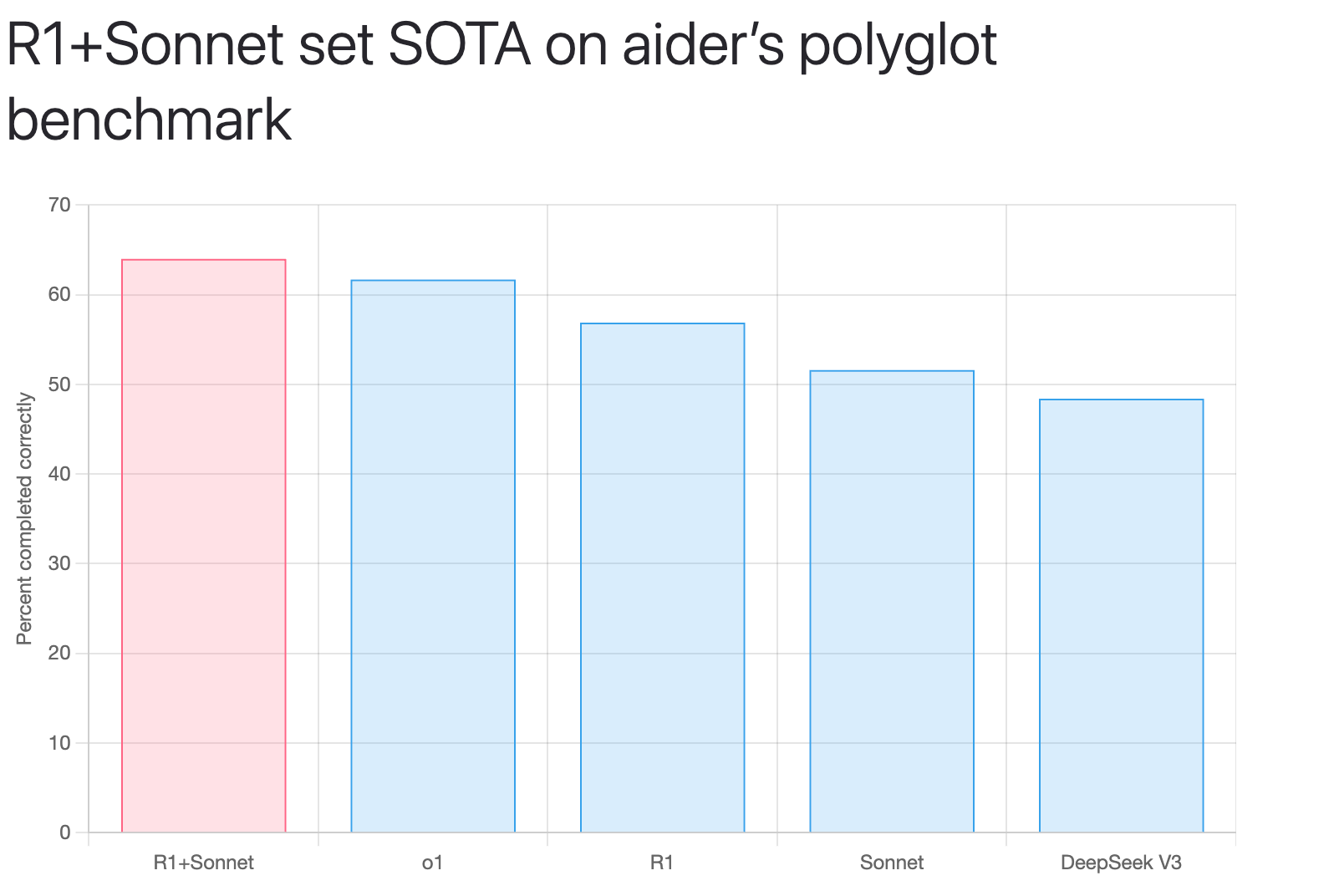

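DeepSeek recently released the DeepSeek R1 model, whose reasoning ability has reached the top tier. However, DeepSeek R1 may still fall short of Claude 3.5 Sonnet on the output of some everyday tasks. The Aider team recently published a study reporting that combining DeepSeek R1 with Claude 3.5 Sonnet can achieve the best results.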

R1 as architect with Sonnet as editor has set a new SOTA of 64.0% on the aider polyglot benchmark. They achieve this at 14X less cost compared to the previous o1 SOTA result.

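This project was inspired by that work and was completely rewritten with fastAPI. After 15 days of extensive real-world testing by community users, we developed several new combination schemes.

1. Programming: deepclaude = deepseek r1 + claude 3.7 sonnet is recommended;
2. Content creation: deepgeminipro = deepseek r1 + gemini 2.0 pro is recommended (this scheme can be used completely free of charge);
3. Everyday experiments: deepgeminiflash = deepseek r1 + gemini 2.0 flash is recommended (this scheme can be used completely free of charge).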
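The three recommended combinations can be pictured as a small lookup table plus a two-stage call. The sketch below is illustrative only: the `COMBINATIONS` table, the `run_combination` helper, the prompt wording, and the stand-in model callables are hypothetical and are not taken from the project's code. Only the three combined model names and their pairings come from the list above.

```python
from typing import Callable, Dict, Tuple

# Pairings from the list above: combined name -> (reasoning model, target model).
COMBINATIONS: Dict[str, Tuple[str, str]] = {
    "deepclaude": ("deepseek r1", "claude 3.7 sonnet"),
    "deepgeminipro": ("deepseek r1", "gemini 2.0 pro"),
    "deepgeminiflash": ("deepseek r1", "gemini 2.0 flash"),
}

# A model call is represented as: (model name, prompt) -> text.
ModelCall = Callable[[str, str], str]


def run_combination(
    name: str, question: str, reason: ModelCall, answer: ModelCall
) -> Dict[str, str]:
    """Hypothetical two-stage flow: reason first, then let the target model answer."""
    reasoner, target = COMBINATIONS[name]
    reasoning = reason(reasoner, question)
    # Assumption: the reasoning is handed to the target model as extra context.
    prompt = f"Question:\n{question}\n\nReasoning from {reasoner}:\n{reasoning}"
    return {"reasoning_content": reasoning, "content": answer(target, prompt)}


if __name__ == "__main__":
    # Stand-in callables so the sketch runs without any API key.
    fake_reason = lambda model, prompt: f"[{model}] thinking about: {prompt}"
    fake_answer = lambda model, prompt: f"[{model}] final answer"
    print(run_combination("deepclaude", "Reverse a linked list", fake_reason, fake_answer))
```

Passing the model calls in as plain callables keeps the pairing logic separate from any particular provider SDK, which is one way to swap the target model without touching the reasoning stage.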

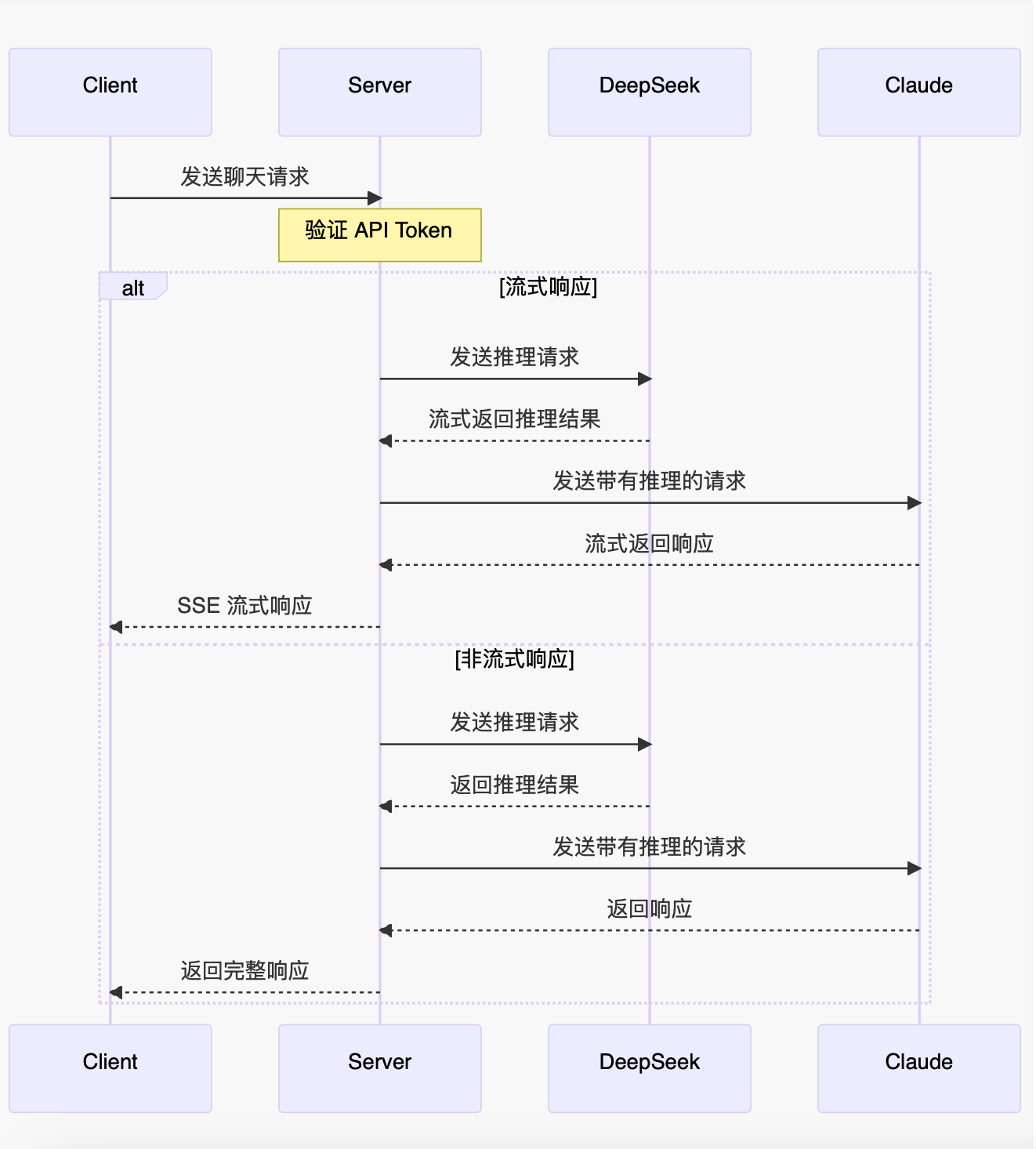

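The project supports OpenAI-compatible input and output formats. It works with the official DeepSeek API as well as third-party hosted APIs; the generation stage works with the official Claude API as well as relay APIs, and other models that use the OpenAI-compatible format get special support.

🔥 Recommended usage:

1. Run it on your own server and expose an open API endpoint, then connect it to OneAPI or a similar service for unified distribution.

2. Connect it to the LLM chat client you use every day.

The project can run locally or on a server. Server deployment is recommended: it gives you the most powerful LLM service, reachable anytime and anywhere, and it can even be used completely free of charge.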

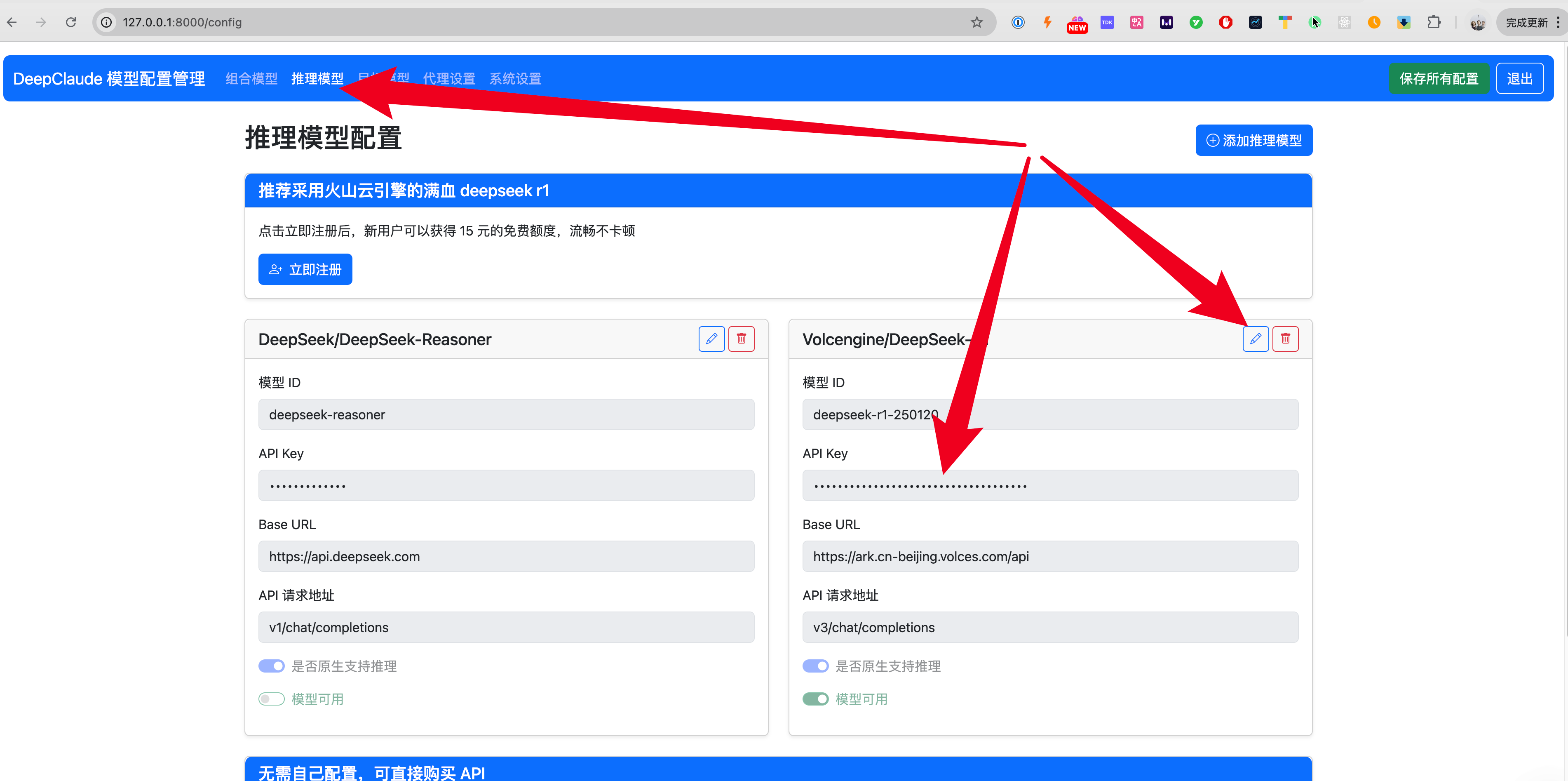

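- Get a DeepSeek API key. The official DeepSeek service has recently been short on capacity and is often unavailable, so it is not recommended. We currently recommend Volcano Engine (火山云引擎) instead (we have preconfigured it, so you only need to register and get an api key). Registering through this link gives you a 15 yuan voucher to use a smooth deepseek r1 for free: https://www.volcengine.com/experience/ark?utm_term=202502dsinvite&ac=DSASUQY5&rc=AK7Q5AEU Invitation code: AK7Q5AEU

- Get a Claude API KEY: https://console.anthropic.com. (You can also use a relay service, for example an API KEY from DMXapi, Openrouter, or another provider)

- Get a Gemini API KEY: https://aistudio.google.com/apikey (there is a free quota that is enough for everyday use)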

Step 1. 克隆本项目到适合的文件夹并进入项目

git clone https://github.com/ErlichLiu/DeepClaude.git

cd DeepClaudeStep 2. 通过 uv 安装依赖(如果你还没有安装 uv,请看下方注解)

# 通过 uv 在本地创建虚拟环境,并安装依赖

uv sync

# macOS 激活虚拟环境

source .venv/bin/activate

# Windows 激活虚拟环境

.venv\Scripts\activateStep 3. 运行

## 本地运行

uvicorn app.main:app --port 8000

## 服务器运行

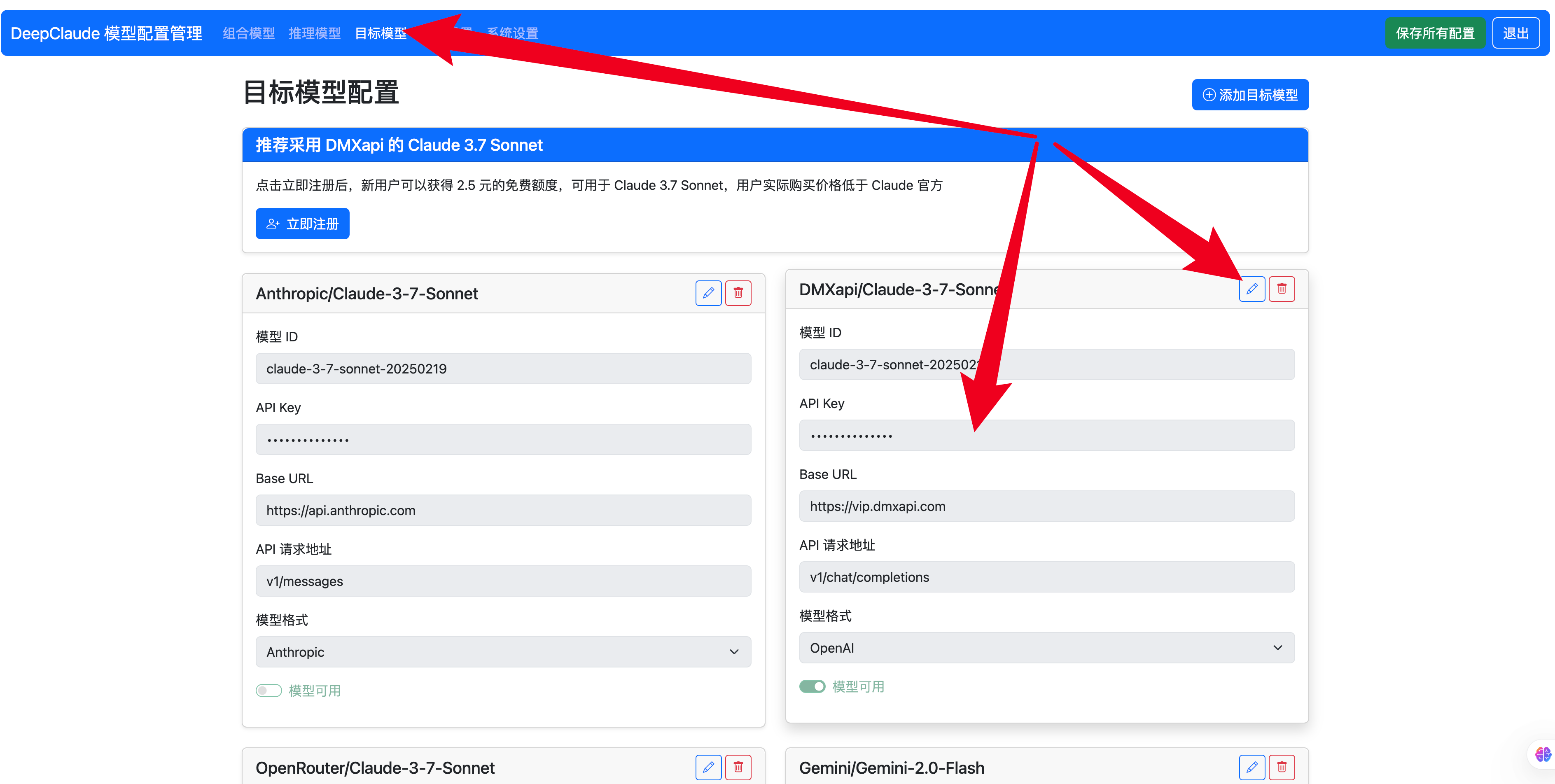

uvicorn app.main:app --host 0.0.0.0 --port 8000Step 4. 打开浏览器访问 http://127.0.0.1:8000/config 输入默认 api key:123456 (如果你运行在云端,请尽快登录后在系统设置内更改,避免被其他人盗用,本地登录则无需更改)

按照提示在“推理模型这一栏”配置一个火山云引擎的 api key,点击编辑,粘贴进去 api key 后点击保存即可

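The "supports native reasoning" option selects which of two schemes is used to read the thinking content returned by the reasoning model.

- Native reasoning supported: the reasoning model returns the reasoning in the reasoning_content field of the response body and the answer in the content field. For example:
  - DeepSeek official: deepseek-reasoner
  - Siliconflow: deepseek-ai/deepseek-r1

- Native reasoning not supported: the reasoning model returns the reasoning inside the content field, wrapped in <think></think> tags. For example:
  - PPIO (派欧算力云): deepseek/deepseek-r1, deepseek/deepseek-r1/community, deepseek/deepseek-r1-turbo
  - AiHubMix: aihubmix-DeepSeek-R1
  - Claude 3.7 Sonnet Thinking

The deepseek-r1 offered by most providers supports native reasoning, so enabling the option by default is recommended. If you are unsure, test the model's response in an external chat framework (Chatbox). If <think></think> tags appear, turn off the "supports native reasoning" option.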
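The two schemes can be sketched as a single helper that separates reasoning from the answer. This is a minimal illustration rather than the project's implementation: `split_reasoning` and `looks_non_native` are hypothetical names, and the message is assumed to be a plain dict with the `reasoning_content` and `content` fields described above.

```python
import re
from typing import Dict, Tuple

# Matches the first <think>...</think> block, including across newlines.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(message: Dict[str, str], native: bool) -> Tuple[str, str]:
    """Return (reasoning, answer) for one assistant message."""
    content = message.get("content") or ""
    if native:
        # Native scheme: reasoning arrives in its own field.
        return message.get("reasoning_content") or "", content
    # Non-native scheme: reasoning is embedded in content inside <think> tags.
    match = THINK_RE.search(content)
    if match is None:
        return "", content.strip()
    answer = content[: match.start()] + content[match.end():]
    return match.group(1).strip(), answer.strip()


def looks_non_native(message: Dict[str, str]) -> bool:
    """Heuristic from the text above: <think> in content suggests turning the option off."""
    return "<think>" in (message.get("content") or "")


if __name__ == "__main__":
    native_msg = {"reasoning_content": "2 + 2 is 4", "content": "4"}
    tagged_msg = {"content": "<think>2 + 2 is 4</think>\n4"}
    print(split_reasoning(native_msg, native=True))
    print(split_reasoning(tagged_msg, native=False))
    print(looks_non_native(tagged_msg))
```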

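A deepseek-r1 without native reasoning support may need a prompt to trigger thinking. If the logs show that the collected reasoning content length is always 0 while the text <think> appears, consider checking this factor: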

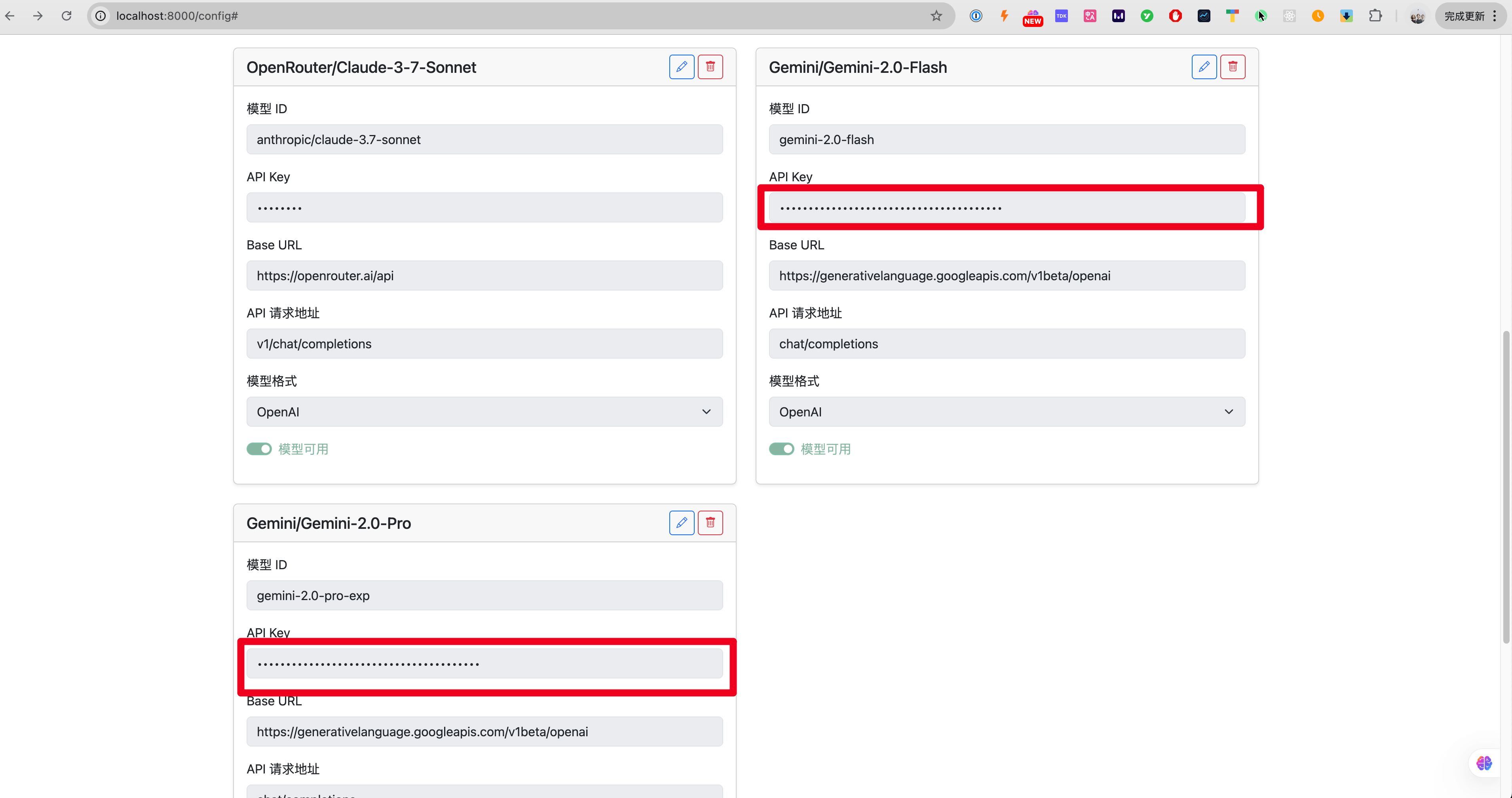

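Following the prompts, configure a Claude 3.7 Sonnet api key and a Gemini api key under "target model". A Gemini api key can be obtained at: https://aistudio.google.com/apikey

Step 5. Configure the program in your chat client (recommended: Cherry Studio, NextChat, ChatBox, LobeChat)

If your client is Cherry Studio or Chatbox (select OpenAI API mode, not OpenAI-compatible mode): the API address is http://127.0.0.1:8000 and the API key is the default 123456 (if you changed it in the system settings, use the new key). You need to add three models manually: deepclaude, deepgeminiflash, and deepgeminipro.

If your client is LobeChat: the API address is http://127.0.0.1:8000/v1 and the API key is the default 123456 (if you changed it in the system settings, use the new key). Fetching the model list is supported, so deepclaude, deepgeminiflash, and deepgeminipro are all retrieved together.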
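For scripts rather than chat clients, the same settings can be used to build a request by hand. The sketch below only constructs the request and does not send it. It assumes the server exposes the usual OpenAI-style `/v1/chat/completions` path and accepts the key as a Bearer token; neither detail is stated above, so check them against your deployment. `build_chat_request` is a hypothetical helper name.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # local address from the steps above
API_KEY = "123456"  # default key; use your own if you changed it


def build_chat_request(model: str, prompt: str, stream: bool = False) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    return urllib.request.Request(
        # Assumption: the standard OpenAI-compatible path is served under /v1.
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: the key is passed as a Bearer token, as OpenAI clients do.
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("deepclaude", "Write a binary search in Python.")
    print(req.full_url)
    # To call a running server, uncomment the next lines:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```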

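Note: this project uses uv as its package manager, a faster and more modern alternative to pip. You can learn more here

The project supports Github Actions for automatically updating forked code, so that your fork stays in sync with the current main branch. To enable it, fork the project and then grant Actions permissions in Settings.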

- Email: [email protected]

- Website: Erlichliu


Alternative AI tools for DeepClaude

Similar Open Source Tools

xiaozhi-esp32

The xiaozhi-esp32 repository is the first hardware project by Xia Ge, focusing on creating an AI chatbot using ESP32, SenseVoice, and Qwen72B. The project aims to help beginners in AI hardware development understand how to apply language models to hardware devices. It supports various functionalities such as Wi-Fi configuration, offline voice wake-up, multilingual speech recognition, voiceprint recognition, TTS using large models, and more. The project encourages participation for learning and improvement, providing resources for hardware and firmware development.

all-api-hub

All API Hub is an open-source browser extension that serves as a centralized management tool for third-party AI aggregation hubs and self-built New APIs. It automatically identifies accounts, checks balances, synchronizes models, manages keys, and supports cross-platform and cloud backups. The extension supports various aggregation hubs like one-api, new-api, Veloera, one-hub, done-hub, Neo-API, Super-API, RIX_API, and VoAPI. It offers features such as intelligent site recognition, multi-account overview panel, automatic check-ins, token and key management, model information and pricing display, model and interface validation, usage analysis and visualization, quick export integration, self-built New API and Veloera management tools, Cloudflare challenge assistant, data backup and synchronization, multi-platform support, and privacy-focused local storage.

MirrorFlow

MirrorFlow is an end-to-end toolchain for dialogue data processing, cleaning/extraction, trainable samples generation, fine-tuning/distillation, and usage with evaluation. It supports two main routes: 'Digital Self' for fine-tuning chat records to mimic user expression habits and 'GPT-4o Style Alignment' for aligning output structures, clarification methods, refusal habits, and tool invocation behavior.

cool-admin-java

Cool-admin-java is an open-source backend permission management system with features like AI coding, flow arrangement, modularity, and plugin support. It is used to quickly build backend applications. The system offers a modern development experience by providing functionalities such as one-click generation of everything from API interfaces to frontend pages, drag-and-drop flow arrangement, modularized code for easy maintenance, and extensibility through plugin installation for features like payments, SMS, and emails.

FeedCraft

FeedCraft is a powerful middleware tool for processing your RSS feeds. Use it to translate a feed, extract full text, emulate a browser to render JS-heavy pages, use an LLM such as Google Gemini to generate a brief for each article, filter a feed with natural language, and more. It is an open-source tool that can be self-deployed and used with any RSS reader. It supports AI-powered processing using OpenAI-compatible LLMs, custom prompts, saved rules that apply to different RSS sources, a portable mode for on-the-go usage, and a dock mode for advanced customization of RSS sources and processing parameters.

AI-Codereview-Gitlab

AI-Codereview-Gitlab is an automated code review tool based on large models, designed to help development teams conduct intelligent code reviews quickly during code merging or submission. It supports multiple large models including DeepSeek, ZhipuAI, OpenAI, and Ollama. The tool can automatically push review results to DingTalk, WeChat Work, and Feishu, generate daily reports based on GitLab commit records, and provide a visual dashboard to display code review records. The tool works by triggering webhook events on GitLab when users submit code, calling third-party large models to review the code, and recording the review results in corresponding Merge Requests or Commit Notes.

gitmesh

GitMesh is an AI-powered Git collaboration network designed to address contributor dropout in open source projects. It offers real-time branch-level insights, intelligent contributor-task matching, and automated workflows. The platform transforms complex codebases into clear contribution journeys, fostering engagement through gamified rewards and integration with open source support programs. GitMesh's mascot, Meshy/Mesh Wolf, symbolizes agility, resilience, and teamwork, reflecting the platform's ethos of efficiency and power through collaboration.

awesome-cuda-tensorrt-fpga

chatgpt-plus

ChatGPT-PLUS is an open-source AI assistant solution based on large language model APIs, with a built-in operational management backend for easy deployment. It integrates multiple large language models from platforms like OpenAI, Azure, ChatGLM, Xunfei Xinghuo, and Wenxin Yiyan. Additionally, it includes MidJourney and Stable Diffusion AI drawing features. The system offers a complete open-source solution with ready-to-use frontend and backend applications, providing a seamless typing experience via Websocket. It comes with various preset role applications such as Xiaohongshu writer, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant to meet various chat and application needs. Users can enjoy features like Suno text-to-music generation, integration with MidJourney/Stable Diffusion AI drawing, personal WeChat QR code for payment, built-in Alipay and WeChat payment functions, support for various membership packages and point card purchases, and plugin API integration for developing powerful plugins using large language model functions.

nonebot-plugin-marshoai

nonebot-plugin-marshoai is a chatbot plugin that utilizes the OpenAI standard format API, such as the GitHub Models API, to enable chat functionalities. The plugin features the character Marsho, a cute cat girl, for engaging conversations. It supports OneBot adapters and GitHub Models API, with limited validation for other adapters. Developed by Melobot.

poco-agent

Poco Agent is a cloud-based tool that provides a secure sandbox environment for running tasks without affecting the host machine. It offers a modern UI with mobile adaptability, easy configuration through Docker, and extensive capabilities with support for MCP protocol and custom skills. Users can run tasks asynchronously and schedule them, even when the web interface is closed. Additional features include a built-in browser for internet research and GitHub repository integration. Poco Agent aims to be a more secure, visually appealing, and user-friendly alternative to OpenClaw.

Code-Review-GPT-Gitlab

A project that utilizes large models to help with Code Review on Gitlab, aimed at improving development efficiency. The project is customized for Gitlab and is developing a Multi-Agent plugin for collaborative review. It integrates various large models for code security issues and stays updated with the latest Code Review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

XianTu

XianTu is an AI-driven immersive cultivation text adventure game that features dynamic storytelling with multiple large models, a complete cultivation system including realm breakthroughs, cultivation of techniques, equipment refining, and NPC interactions, intelligent decision-making system based on multiple dimensions, multiple save file management with cloud sync support, open world exploration with character relationship networks, cross-platform compatibility with dual themes, and compatibility with SillyTavern embedded environment and standalone web version.

magic-resume

Magic Resume is a modern online resume editor that makes creating professional resumes simple and fun. Built on Next.js and Framer Motion, it supports real-time preview and custom themes. Features include Next.js 14+ based construction, smooth animation effects (Framer Motion), custom theme support, responsive design, dark mode, export to PDF, real-time preview, auto-save, and local storage. The technology stack includes Next.js 14+, TypeScript, Framer Motion, Tailwind CSS, Shadcn/ui, and Lucide Icons.

For similar tasks

album-ai

Album AI is an experimental project that uses GPT-4o-mini to automatically identify metadata from image files in the album. It leverages RAG technology to enable conversations with the album, serving as a photo album or image knowledge base to assist in content generation. The tool provides APIs for search and chat functionalities, supports one-click deployment to platforms like Render, and allows for integration and modification under a permissive open-source license.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.