coze-js

The JavaScript SDK for the Coze API

Stars: 90

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

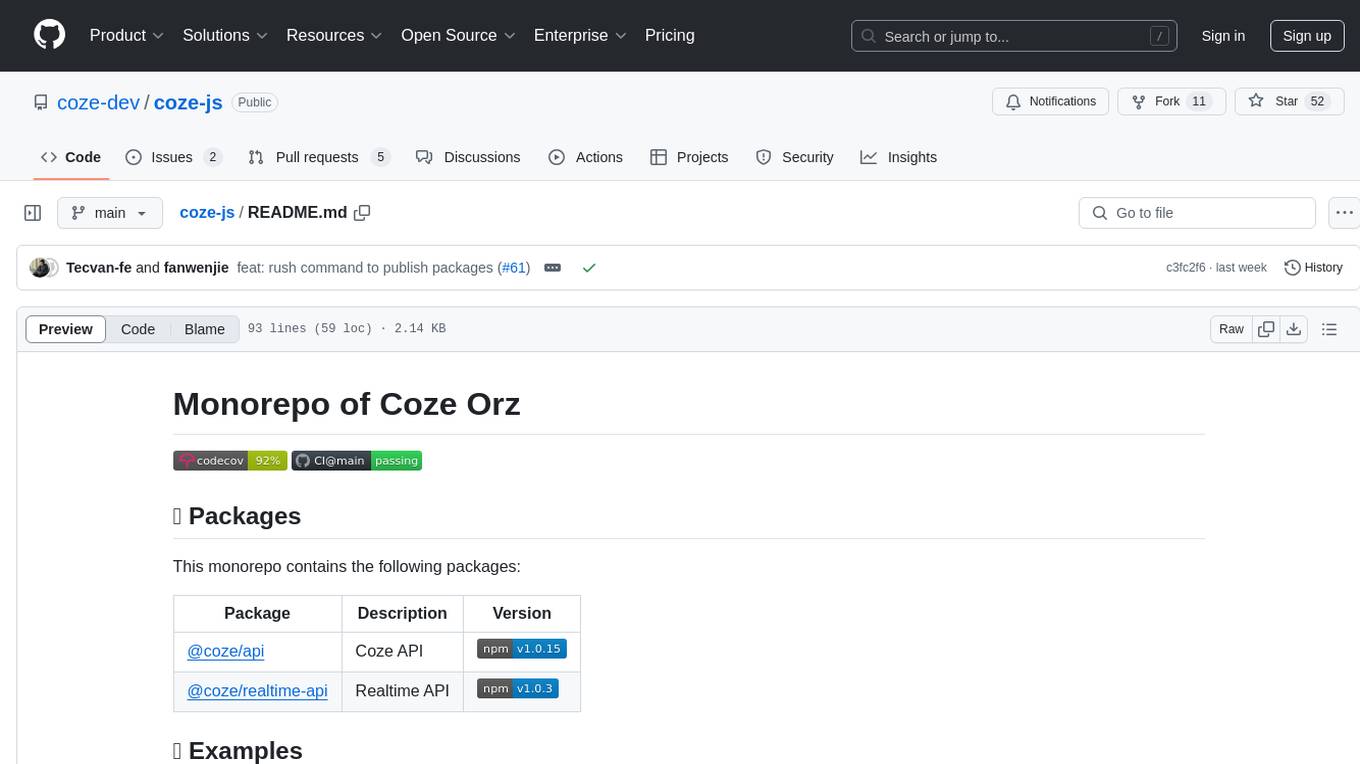

README:

English | 简体中文

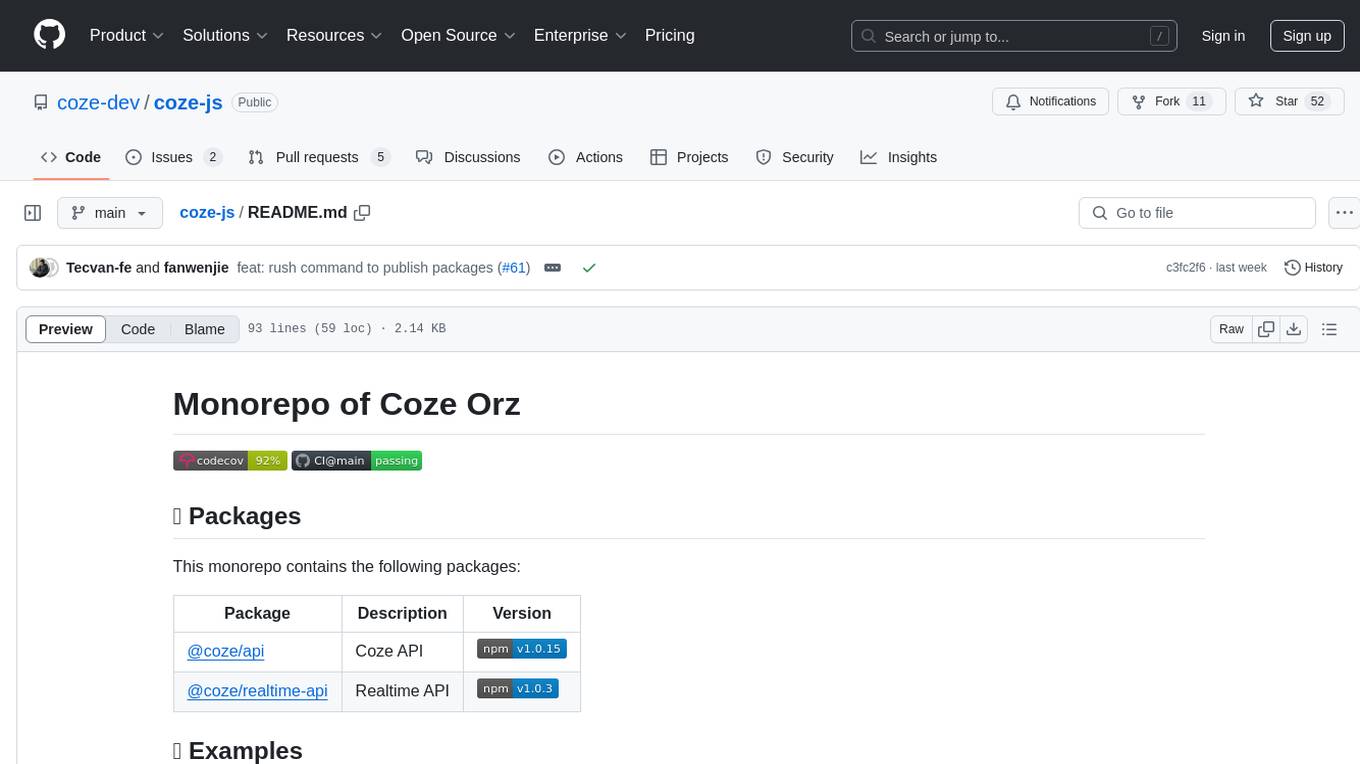

This monorepo contains the following packages:

| Package | Description | Version |

|---|---|---|

| @coze/api | Coze API SDK |  |

| @coze/realtime-api | Realtime API SDK |  |

| @coze/taro-api | Taro Mini Program Coze API SDK |  |

Find usage examples for each package in the examples directory:

- coze-js-node - Node.js Demo for @coze/api

- coze-js-web - React Web Demo for @coze/api, Preview

- coze-js-taro - Taro4 Mini Program Demo for @coze/taro-api

- coze-js-taro3 - Taro3 Mini Program Demo for @coze/taro-api

- realtime-console - Full Console Demo for @coze/realtime-api, Preview

- realtime-call-up - Sample Call Up Demo for @coze/realtime-api

- realtime-quickstart-react - Quickstart React Demo for @coze/realtime-api

- realtime-quickstart-vue - Quickstart Vue Demo for @coze/realtime-api

- quickstart-oauth-server - Quickstart OAuth Server Demo for Coze SDK

- Node.js 18+ (LTS/Hydrogen recommended)

- pnpm 9.12.0

- Rush 5.140.0

- Install Node.js 18+

nvm install lts/hydrogen

nvm alias default lts/hydrogen # set default node version

nvm use lts/hydrogen- Clone the repository

git clone [email protected]:coze-dev/coze-js.git- Install required global dependencies

npm i -g [email protected] @microsoft/[email protected]- Install project dependencies

rush update- Build the project

rush buildAfter that, you can start to develop projects inside this repository.

Enjoy it!

Each package in this monorepo can be developed and published independently. To start developing:

- Navigate to the package directory:

cd packages/<package-name>- Start development:

npm run startFor Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for coze-js

Similar Open Source Tools

coze-js

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

packages

This repository is a monorepo for NPM packages published under the `@elevenlabs` scope. It contains multiple packages in the `packages` folder. The setup allows for easy development, linking packages, creating new packages, and publishing them with GitHub actions.

OpenSandbox

OpenSandbox is a general-purpose sandbox platform for AI applications, offering multi-language SDKs, unified sandbox APIs, and Docker/Kubernetes runtimes for scenarios like Coding Agents, GUI Agents, Agent Evaluation, AI Code Execution, and RL Training. It provides features such as multi-language SDKs, sandbox protocol, sandbox runtime, sandbox environments, and network policy. Users can perform basic sandbox operations like installing and configuring the sandbox server, starting the sandbox server, creating a code interpreter, and executing commands. The platform also offers examples for coding agent integrations, browser and desktop environments, and ML and training tasks.

ChatGPT-API-Faucet

ChatGPT API Faucet is a frontend project for the public platform ChatGPT API Faucet, inspired by the crypto project MultiFaucet. It allows developers in the AI ecosystem to claim $1.00 for free every 24 hours. The program is developed using the Next.js framework and React library, with key components like _app.tsx for initializing pages, index.tsx for main modifications, and Layout.tsx for defining layout components. Users can deploy the project by installing dependencies, building the project, starting the project, configuring reverse proxies or using port:IP access, and running a development server. The tool also supports token balance queries and is related to projects like one-api, ChatGPT-Cost-Calculator, and Poe.Monster. It is licensed under the MIT license.

WilliamButcherBot

WilliamButcherBot is a Telegram Group Manager Bot and Userbot written in Python using Pyrogram. It provides features for managing Telegram groups and users, with ready-to-use methods available. The bot requires Python 3.9, Telegram API Key, Telegram Bot Token, and MongoDB URI. Users can install it locally or on a VPS, run it directly, generate Pyrogram session for Heroku, or use Docker for deployment. Additionally, users can write new modules to extend the bot's functionality by adding them to the wbb/modules/ directory.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

sfdx-hardis

sfdx-hardis is a toolbox for Salesforce DX, developed by Cloudity, that simplifies tasks which would otherwise take minutes or hours to complete manually. It enables users to define complete CI/CD pipelines for Salesforce projects, backup metadata, and monitor any Salesforce org. The tool offers a wide range of commands that can be accessed via the command line interface or through a Visual Studio Code extension. Additionally, sfdx-hardis provides Docker images for easy integration into CI workflows. The tool is designed to be natively compliant with various platforms and tools, making it a versatile solution for Salesforce developers.

inspector

A developer tool for testing and debugging Model Context Protocol (MCP) servers. It allows users to test the compliance of their MCP servers with the latest MCP specs, supports various transports like STDIO, SSE, and Streamable HTTP, features an LLM Playground for testing server behavior against different models, provides comprehensive logging and error reporting for MCP server development, and offers a modern developer experience with multiple server connections and saved configurations. The tool is built using Next.js and integrates MCP capabilities, AI SDKs from OpenAI, Anthropic, and Ollama, and various technologies like Node.js, TypeScript, and Next.js.

Kubeli

Kubeli is a modern, beautiful Kubernetes management desktop application with real-time monitoring, terminal access, and a polished user experience. It offers features like multi-cluster support, real-time updates, resource browser, pod logs streaming, terminal access, port forwarding, metrics dashboard, YAML editor, AI assistant, MCP server, Helm releases management, proxy support, internationalization, and dark/light mode. The tech stack includes Vite, React 19, TypeScript, Tailwind CSS 4 for frontend, Tauri 2.0 (Rust) for desktop, kube-rs with k8s-openapi v1.32 for K8s client, Zustand for state management, Radix UI and Lucide Icons for UI components, Monaco Editor for editing, XTerm.js for terminal, and uPlot for charts.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

free-one-api

Free-one-api is a tool that allows access to all LLM reverse engineering libraries in a standard OpenAI API format. It supports automatic load balancing, Web UI, stream mode, multiple LLM reverse libraries, heartbeat detection mechanism, automatic disabling of unavailable channels, and runtime log recording. The tool is designed to work with the 'one-api' project and 'songquanpeng/one-api' for accessing official interfaces of various LLMs (paid). Contributors are needed to test adapters, find new reverse engineering libraries, and submit PRs.

lean-spec

LeanSpec is a tool for Spec-Driven Development that aims to help users ship faster with higher quality by creating small, focused documents that both humans and AI can understand. It provides features like Kanban board, smart search, dependency tracking, web UI, project stats, and AI integration. The tool is designed to work with various AI coding assistants and offers agent skills to teach AI the Spec-Driven Development methodology. LeanSpec is compatible with tools like VS Code Copilot, Claude Code, GitHub Copilot, and more, and it requires Node.js, pnpm, and Rust for development. The desktop app has a separate repository for development, and the tool supports common development tasks like testing, building, and documentation.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

tiddlywiki-starter-kit

TiddlyWiki Starter Kit is a pre-configured setup for TiddlyWiki, utilizing Tailwind CSS for responsive design and providing multiple wiki support for different purposes. It offers quick operations with keyboard shortcuts, simplified configuration through editing the .env file, and one-click installation using npm create command.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

For similar tasks

alog

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

crabml

Crabml is a llama.cpp compatible AI inference engine written in Rust, designed for efficient inference on various platforms with WebGPU support. It focuses on running inference tasks with SIMD acceleration and minimal memory requirements, supporting multiple models and quantization methods. The project is hackable, embeddable, and aims to provide high-performance AI inference capabilities.

chatllm.cpp

ChatLLM.cpp is a pure C++ implementation tool for real-time chatting with RAG on your computer. It supports inference of various models ranging from less than 1B to more than 300B. The tool provides accelerated memory-efficient CPU inference with quantization, optimized KV cache, and parallel computing. It allows streaming generation with a typewriter effect and continuous chatting with virtually unlimited content length. ChatLLM.cpp also offers features like Retrieval Augmented Generation (RAG), LoRA, Python/JavaScript/C bindings, web demo, and more possibilities. Users can clone the repository, quantize models, build the project using make or CMake, and run quantized models for interactive chatting.

ai-dial-core

AI DIAL Core is an HTTP Proxy that provides a unified API to different chat completion and embedding models, assistants, and applications. It is written in Java 17 and built on Eclipse Vert.x. The core functionality includes handling static and dynamic settings, deployment on Kubernetes using Helm charts, and storing user data in Blob Storage and Redis. It supports various identity providers, storage providers like AWS S3, Google Cloud Storage, and Azure Blob Store, and features like AI DIAL Addons, Interceptors, Assistants, Applications, and Models with customizable parameters and configurations.

coze-js

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

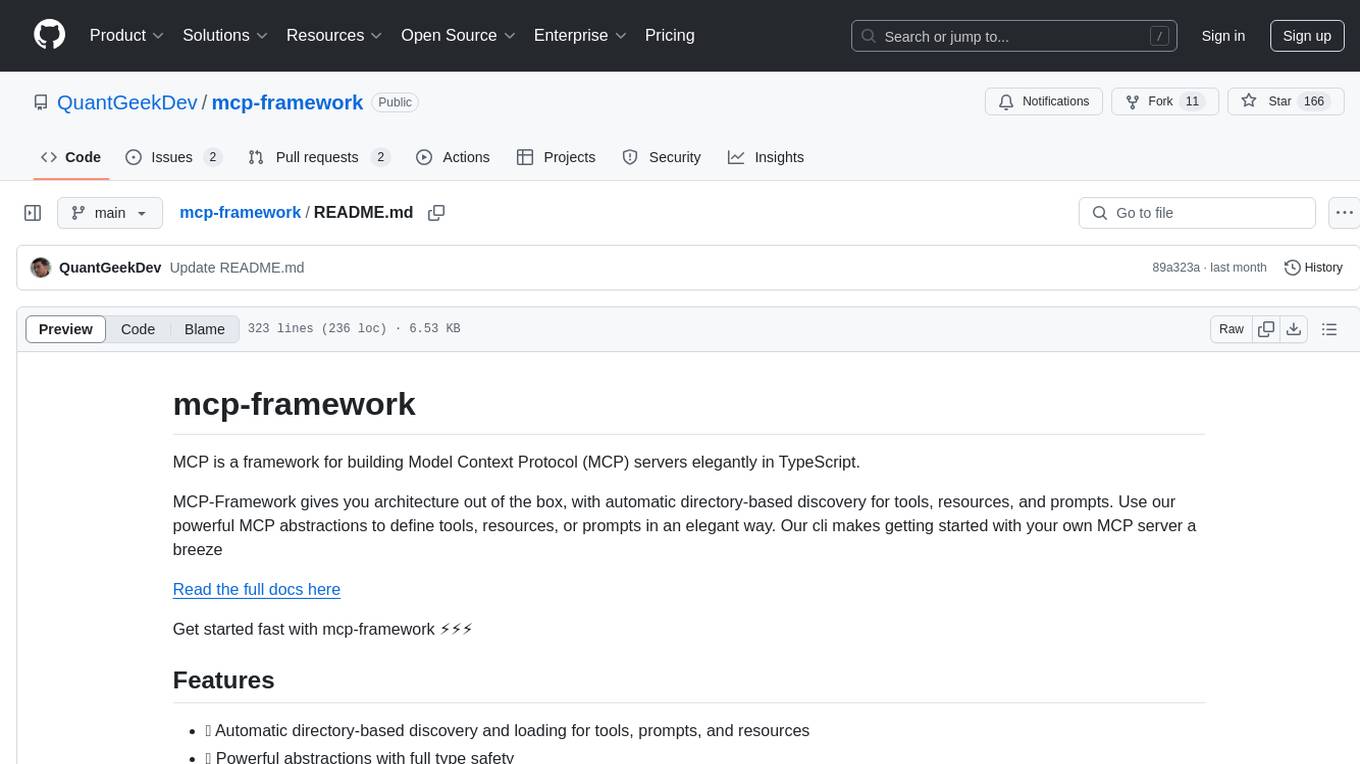

mcp-framework

MCP-Framework is a TypeScript framework for building Model Context Protocol (MCP) servers with automatic directory-based discovery for tools, resources, and prompts. It provides powerful abstractions, simple server setup, and a CLI for rapid development and project scaffolding.

TheNinjaRPG

TheNinja-RPG is the official source code for the game www.TheNinja-RPG.com. It relies on external services for authentication, websockets, database, etc. Users need to sign up for free accounts on services like Clerk, UploadThing, and Replicate. The codebase provides various 'make' commands for setup, building, and database management. The project does not have a specific license and is under exclusive copyright protection.

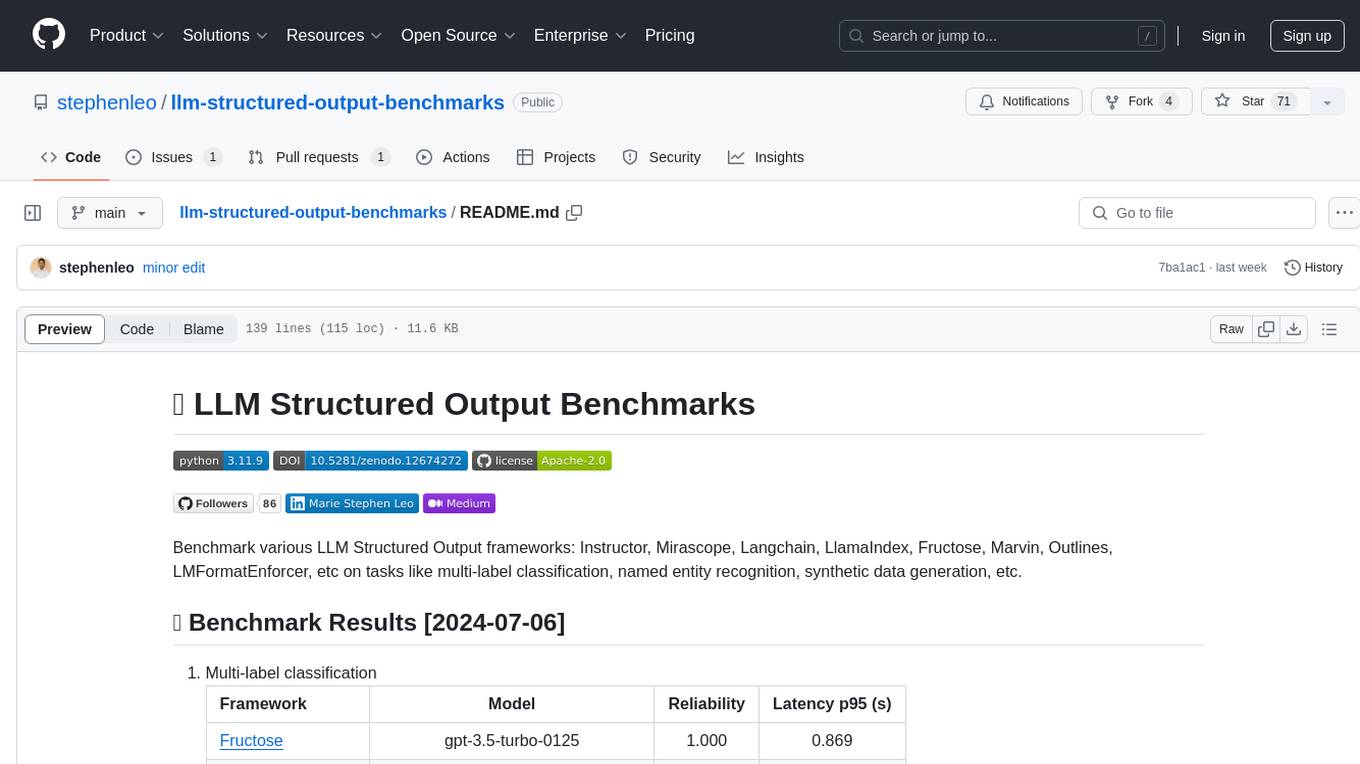

llm-structured-output-benchmarks

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

For similar jobs

google.aip.dev

API Improvement Proposals (AIPs) are design documents that provide high-level, concise documentation for API development at Google. The goal of AIPs is to serve as the source of truth for API-related documentation and to facilitate discussion and consensus among API teams. AIPs are similar to Python's enhancement proposals (PEPs) and are organized into different areas within Google to accommodate historical differences in customs, styles, and guidance.

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.

speakeasy

Speakeasy is a tool that helps developers create production-quality SDKs, Terraform providers, documentation, and more from OpenAPI specifications. It supports a wide range of languages, including Go, Python, TypeScript, Java, and C#, and provides features such as automatic maintenance, type safety, and fault tolerance. Speakeasy also integrates with popular package managers like npm, PyPI, Maven, and Terraform Registry for easy distribution.

apicat

ApiCat is an API documentation management tool that is fully compatible with the OpenAPI specification. With ApiCat, you can freely and efficiently manage your APIs. It integrates the capabilities of LLM, which not only helps you automatically generate API documentation and data models but also creates corresponding test cases based on the API content. Using ApiCat, you can quickly accomplish anything outside of coding, allowing you to focus your energy on the code itself.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

OllamaKit

OllamaKit is a Swift library designed to simplify interactions with the Ollama API. It handles network communication and data processing, offering an efficient interface for Swift applications to communicate with the Ollama API. The library is optimized for use within Ollamac, a macOS app for interacting with Ollama models.

ollama4j

Ollama4j is a Java library that serves as a wrapper or binding for the Ollama server. It facilitates communication with the Ollama server and provides models for deployment. The tool requires Java 11 or higher and can be installed locally or via Docker. Users can integrate Ollama4j into Maven projects by adding the specified dependency. The tool offers API specifications and supports various development tasks such as building, running unit tests, and integration tests. Releases are automated through GitHub Actions CI workflow. Areas of improvement include adhering to Java naming conventions, updating deprecated code, implementing logging, using lombok, and enhancing request body creation. Contributions to the project are encouraged, whether reporting bugs, suggesting enhancements, or contributing code.