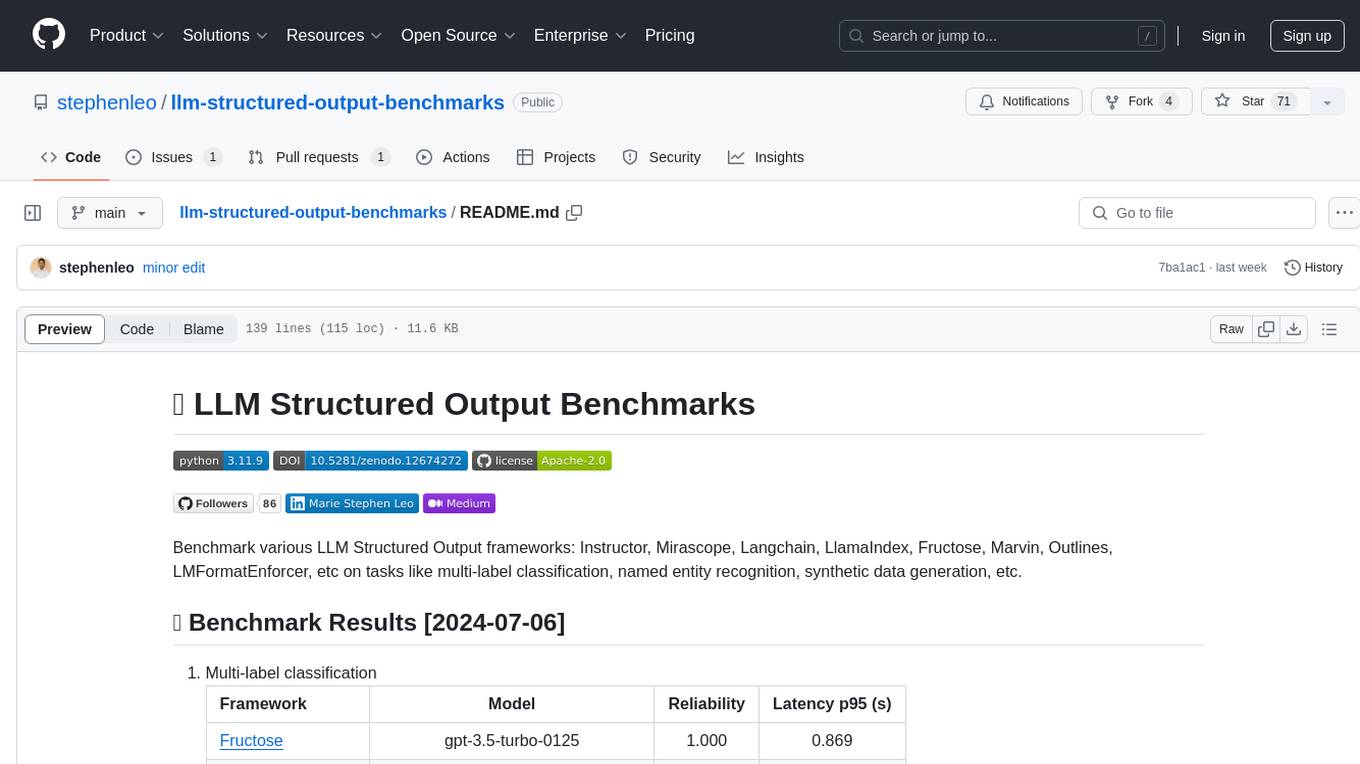

llm-structured-output-benchmarks

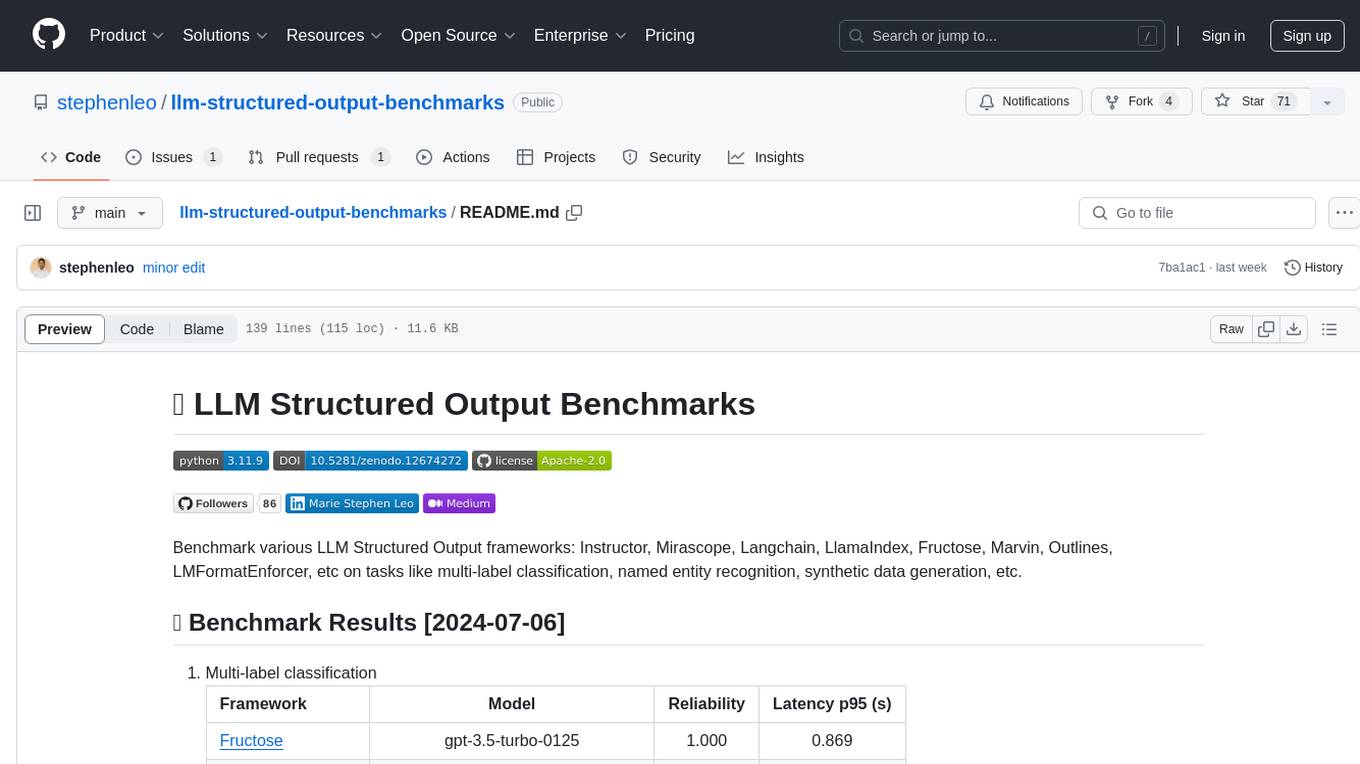

Benchmark various LLM Structured Output frameworks: Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, etc on tasks like multi-label classification, named entity recognition, synthetic data generation, etc.

Stars: 111

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

README:

Benchmark various LLM Structured Output frameworks: Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation, etc.

- Multi-label classification

Framework Model Reliability Latency p95 (s) Fructose gpt-4o-mini-2024-07-18 1.000 1.138 Modelsmith gpt-4o-mini-2024-07-18 1.000 1.184 OpenAI Structured Output gpt-4o-mini-2024-07-18 1.000 1.201 Instructor gpt-4o-mini-2024-07-18 1.000 1.206 Outlines unsloth/llama-3-8b-Instruct-bnb-4bit 1.000 1.804* LMFormatEnforcer unsloth/llama-3-8b-Instruct-bnb-4bit 1.000 3.649* Llamaindex gpt-4o-mini-2024-07-18 0.996 0.853 Marvin gpt-4o-mini-2024-07-18 0.988 1.338 Mirascope gpt-4o-mini-2024-07-18 0.985 1.531 - Named Entity Recognition

Framework Model Reliability Latency p95 (s) Precision Recall F1 Score OpenAI Structured Output gpt-4o-mini-2024-07-18 1.000 3.459 0.834 0.748 0.789 LMFormatEnforcer unsloth/llama-3-8b-Instruct-bnb-4bit 1.000 6.573* 0.701 0.262 0.382 Instructor gpt-4o-mini-2024-07-18 0.998 2.438 0.776 0.768 0.772 Mirascope gpt-4o-mini-2024-07-18 0.989 3.879 0.768 0.738 0.752 Llamaindex gpt-4o-mini-2024-07-18 0.979 5.771 0.792 0.310 0.446 Marvin gpt-4o-mini-2024-07-18 0.979 3.270 0.822 0.776 0.798 - Synthetic Data Generation

Framework Model Reliability Latency p95 (s) Variety Instructor gpt-4o-mini-2024-07-18 1.000 1.923 0.750 Marvin gpt-4o-mini-2024-07-18 1.000 1.496 0.010 Llamaindex gpt-4o-mini-2024-07-18 1.000 1.003 0.020 Modelsmith gpt-4o-mini-2024-07-18 0.970 2.324 0.835 Mirascope gpt-4o-mini-2024-07-18 0.790 3.383 0.886 Outlines unsloth/llama-3-8b-Instruct-bnb-4bit 0.690 2.354* 0.942 OpenAI Structured Output gpt-4o-mini-2024-07-18 0.650 1.431 0.877 LMFormatEnforcer unsloth/llama-3-8b-Instruct-bnb-4bit 0.650 2.561* 0.662

* NVIDIA GeForce RTX 4080 Super GPU

- Install the requirements using

pip install -r requirements.txt - Set the OpenAI api key:

export OPENAI_API_KEY=sk-... - Run the benchmark using

python -m main run-benchmark - Raw results are stored in the

resultsdirectory. - Generate the results using:

- Multilabel classification:

python -m main generate-results - NER:

python -m main generate-results --task ner - Synthetic data generation:

python -m main generate-results --task synthetic_data_generation

- Multilabel classification:

- To get help on the command line arguments, add

--helpafter the command. Eg.,python -m main run-benchmark --help

- Multi-label classification:

- Task: Given a text, predict the labels associated with it.

-

Data:

- Base data: Alexa intent detection dataset

- Benchmarking test is run using synthetic data generated by running:

python -m data_sources.generate_dataset generate-multilabel-data. - The synthetic data is generated by sampling and combining rows from the base data to achieve multiple classes per row according to some distribution for num classes per row. See

python -m data_sources.generate_dataset generate-multilabel-data --helpfor more details.

-

Prompt:

"Classify the following text: {text}" -

Evaluation Metrics:

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

percent_successfulvalues. - Latency: The 95th percentile of the time taken to run the framework on the data.

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

-

Experiment Details: Run each row through the framework

n_runsnumber of times and log the percent of successful runs for each row.

- Named Entity Recognition

- Task: Given a text, extract the entities present in it.

-

Data:

- Base data: Synthetic PII Finance dataset

- Benchmarking test is run using a sampled data generated by running:

python -m data_sources.generate_dataset generate-ner-data. - The data is sampled from the base data to achieve number of entities per row according to some distribution. See

python -m data_sources.generate_dataset generate-ner-data --helpfor more details.

-

Prompt:

Extract and resolve a list of entities from the following text: {text} -

Evaluation Metrics:

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

percent_successfulvalues. - Latency: The 95th percentile of the time taken to run the framework on the data.

- Precision: The micro average of the precision of the framework on the data.

- Recall: The micro average of the recall of the framework on the data.

- F1 Score: The micro average of the F1 score of the framework on the data.

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

-

Experiment Details: Run each row through the framework

n_runsnumber of times and log the percent of successful runs for each row.

- Synthetic Data Generation

- Task: Generate synthetic data similar according to a Pydantic data model schema.

-

Data:

- Two level nested User details Pydantic schema.

-

Prompt:

Generate a random person's information. The name must be chosen at random. Make it something you wouldn't normally choose. -

Evaluation Metrics:

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

percent_successfulvalues. - Latency: The 95th percentile of the time taken to run the framework on the data.

- Variety: The percent of names that are unique compared to all names generated.

- Reliability: The percentage of times the framework returns valid labels without errors. The average of all the rows

-

Experiment Details: Run each row through the framework

n_runsnumber of times and log the percent of successful runs.

- Create a new pandas dataframe pickle file with the following columns:

-

text: The text to be sent to the framework -

labels: List of labels associated with the text - See

data/multilabel_classification.pklfor an example.

-

- Add the path to the new pickle file in the

./config.yamlfile under thesource_data_pickle_pathkey for all the frameworks you want to test. - Run the benchmark using

python -m main run-benchmarkto test the new data on all the frameworks! - Generate the results using

python -m main generate-results

The easiest way to create a new framework is to reference the ./frameworks/instructor_framework.py file. Detailed steps are as follows:

- Create a .py file in frameworks directory with the name of the framework. Eg.,

instructor_framework.pyfor the instructor framework. - In this .py file create a class that inherits

BaseFrameworkfromframeworks.base. - The class should define an

initmethod that initializes the base class. Here are the arguments the base class expects:-

task(str): the task that the framework is being tested on. Obtained from./config.yamlfile. Allowed values are"multilabel_classification"and"ner" -

prompt(str): Prompt template used. Obtained from theinit_kwargsin the./config.yamlfile. -

llm_model(str): LLM model to be used. Obtained from theinit_kwargsin the./config.yamlfile. -

llm_model_family(str): LLM model family to be used. Current supported values as"openai"and"transformers". Obtained from theinit_kwargsin the./config.yamlfile. -

retries(int): Number of retries for the framework. Default is $0$. Obtained from theinit_kwargsin the./config.yamlfile. -

source_data_picke_path(str): Path to the source data pickle file. Obtained from theinit_kwargsin the./config.yamlfile. -

sample_rows(int): Number of rows to sample from the source data. Useful for testing on a smaller subset of data. Default is $0$ which uses all rows in source_data_pickle_path for the benchmarking. Obtained from theinit_kwargsin the./config.yamlfile. -

response_model(Any): The response model to be used. Internally passed by the benchmarking script.

-

- The class should define a

runmethod that takes three arguments:-

task: The task that the framework is being tested on. Obtained from thetaskin the./config.yamlfile. Eg.,"multilabel_classification" -

n_runs: number of times to repeat each text -

expected_response: Output expected from the framework. Use default value ofNone -

inputs: a dictionary of{"text": str}wherestris the text to be sent to the framework. Use default value of empty dictionary{}

-

- This

runmethod should create anotherrun_experimentfunction that takesinputsas argument, runs that input through the framework and returns the output. - The

run_experimentfunction should be annotated with the@experimentdecorator fromframeworks.basewithn_runs,expected_resposneandtaskas arguments. - The

runmethod should call therun_experimentfunction and return the four outputspredictions,percent_successful,metricsandlatencies. - Import this new class in

frameworks/__init__.py. - Add a new entry in the

./config.yamlfile with the name of the class as the key. The yaml entry can have the following fields-

task: the task that the framework is being tested on. Obtained from./config.yamlfile. Allowed values are"multilabel_classification"and"ner" -

n_runs: number of times to repeat each text -

init_kwargs: all the arguments that need to be passed to theinitmethod of the class, including those mentioned in step 3 above.

-

- Framework related tasks:

Framework Multi-label classification Named Entity Recognition Synthetic Data Generation OpenAI Structured Output ✅ OpenAI ✅ OpenAI ✅ OpenAI Instructor ✅ OpenAI ✅ OpenAI ✅ OpenAI Mirascope ✅ OpenAI ✅ OpenAI ✅ OpenAI Fructose ✅ OpenAI 🚧 In Progress 🚧 In Progress Marvin ✅ OpenAI ✅ OpenAI ✅ OpenAI Llamaindex ✅ OpenAI ✅ OpenAI ✅ OpenAI Modelsmith ✅ OpenAI 🚧 In Progress ✅ OpenAI Outlines ✅ HF Transformers 🚧 In Progress ✅ HF Transformers LM format enforcer ✅ HF Transformers ✅ HF Transformers ✅ HF Transformers Jsonformer ❌ No Enum Support 💭 Planning 💭 Planning Strictjson ❌ Non-standard schema ❌ Non-standard schema ❌ Non-standard schema Guidance 💭 Planning 💭 Planning 💭 Planning DsPy 💭 Planning 💭 Planning 💭 Planning Langchain 💭 Planning 💭 Planning 💭 Planning - Others

- [x] Latency metrics

- [ ] CICD pipeline for benchmark run automation

- [ ] Async run

Contributions are welcome! Here are the steps to contribute:

- Please open an issue with any new framework you would like to add. This will help avoid duplication of effort.

- Once the issue is assigned to you, pls submit a PR with the new framework!

To cite LLM Structured Output Benchmarks in your work, please use the following bibtex reference:

@software{marie_stephen_leo_2024_12327267,

author = {Marie Stephen Leo},

title = {{stephenleo/llm-structured-output-benchmarks:

Release for Zenodo}},

month = jun,

year = 2024,

publisher = {Zenodo},

version = {v0.0.1},

doi = {10.5281/zenodo.12327267},

url = {https://doi.org/10.5281/zenodo.12327267}

}If this work helped you in any way, please consider ⭐ this repository to give me feedback so I can spend more time on this project.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llm-structured-output-benchmarks

Similar Open Source Tools

llm-structured-output-benchmarks

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

RouterArena

RouterArena is an open evaluation platform and leaderboard for LLM routers, aiming to provide a standardized evaluation framework for assessing the performance of routers in terms of accuracy, cost, and other metrics. It offers diverse data coverage, comprehensive metrics, automated evaluation, and a live leaderboard to track router performance. Users can evaluate their routers by following setup steps, obtaining routing decisions, running LLM inference, and evaluating router performance. Contributions and collaborations are welcome, and users can submit their routers for evaluation to be included in the leaderboard.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features like Virtual API System, Solvable Queries, and Stable Evaluation System. The benchmark ensures consistency through a caching system and API simulators, filters queries based on solvability using LLMs, and evaluates model performance using GPT-4 with metrics like Solvable Pass Rate and Solvable Win Rate.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

AQLM

AQLM is the official PyTorch implementation for Extreme Compression of Large Language Models via Additive Quantization. It includes prequantized AQLM models without PV-Tuning and PV-Tuned models for LLaMA, Mistral, and Mixtral families. The repository provides inference examples, model details, and quantization setups. Users can run prequantized models using Google Colab examples, work with different model families, and install the necessary inference library. The repository also offers detailed instructions for quantization, fine-tuning, and model evaluation. AQLM quantization involves calibrating models for compression, and users can improve model accuracy through finetuning. Additionally, the repository includes information on preparing models for inference and contributing guidelines.

KwaiAgents

KwaiAgents is a series of Agent-related works open-sourced by the [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). The open-sourced content includes: 1. **KAgentSys-Lite**: a lite version of the KAgentSys in the paper. While retaining some of the original system's functionality, KAgentSys-Lite has certain differences and limitations when compared to its full-featured counterpart, such as: (1) a more limited set of tools; (2) a lack of memory mechanisms; (3) slightly reduced performance capabilities; and (4) a different codebase, as it evolves from open-source projects like BabyAGI and Auto-GPT. Despite these modifications, KAgentSys-Lite still delivers comparable performance among numerous open-source Agent systems available. 2. **KAgentLMs**: a series of large language models with agent capabilities such as planning, reflection, and tool-use, acquired through the Meta-agent tuning proposed in the paper. 3. **KAgentInstruct**: over 200k Agent-related instructions finetuning data (partially human-edited) proposed in the paper. 4. **KAgentBench**: over 3,000 human-edited, automated evaluation data for testing Agent capabilities, with evaluation dimensions including planning, tool-use, reflection, concluding, and profiling.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

DB-GPT-Hub

DB-GPT-Hub is an experimental project leveraging Large Language Models (LLMs) for Text-to-SQL parsing. It includes stages like data collection, preprocessing, model selection, construction, and fine-tuning of model weights. The project aims to enhance Text-to-SQL capabilities, reduce model training costs, and enable developers to contribute to improving Text-to-SQL accuracy. The ultimate goal is to achieve automated question-answering based on databases, allowing users to execute complex database queries using natural language descriptions. The project has successfully integrated multiple large models and established a comprehensive workflow for data processing, SFT model training, prediction output, and evaluation.

Cherry_LLM

Cherry Data Selection project introduces a self-guided methodology for LLMs to autonomously discern and select cherry samples from open-source datasets, minimizing manual curation and cost for instruction tuning. The project focuses on selecting impactful training samples ('cherry data') to enhance LLM instruction tuning by estimating instruction-following difficulty. The method involves phases like 'Learning from Brief Experience', 'Evaluating Based on Experience', and 'Retraining from Self-Guided Experience' to improve LLM performance.

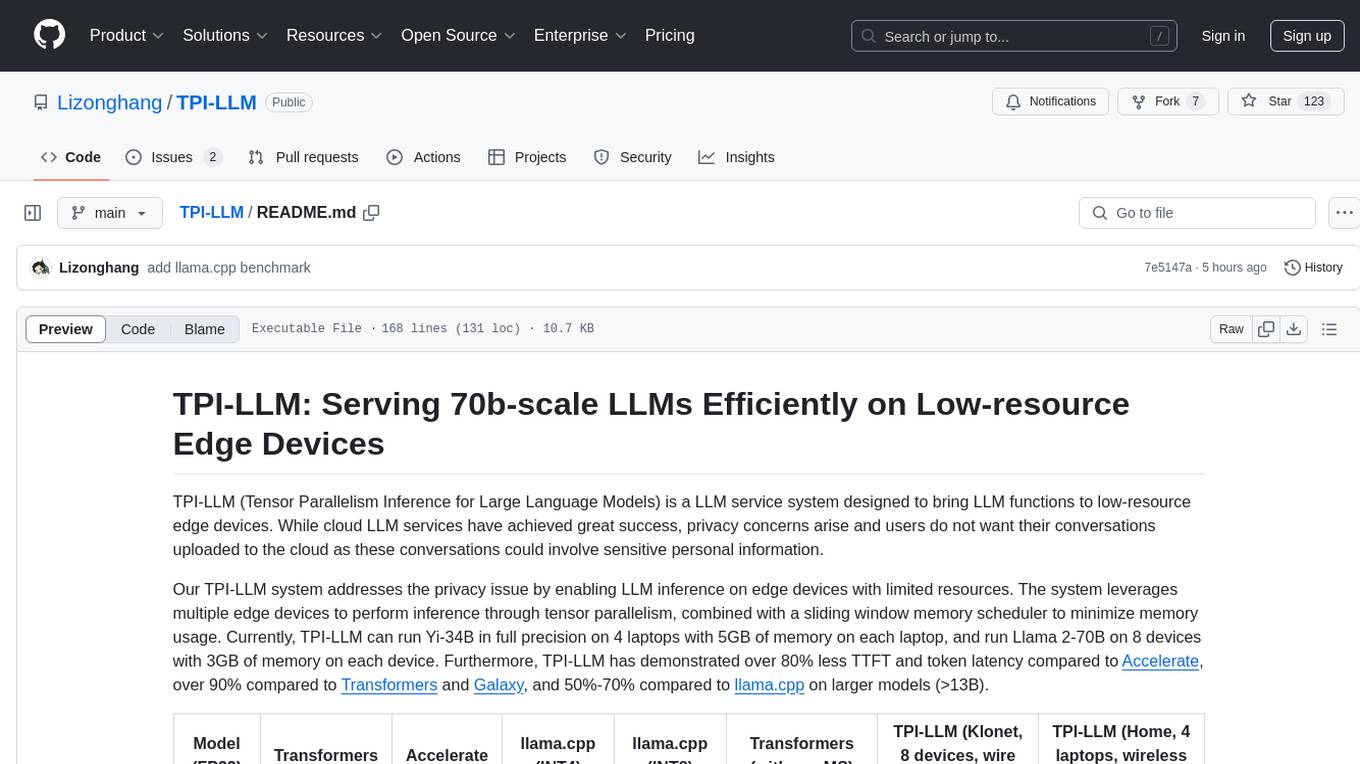

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system designed to bring LLM functions to low-resource edge devices, addressing privacy concerns by enabling LLM inference on edge devices with limited resources. It leverages multiple edge devices for inference through tensor parallelism and a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in TTFT and token latency compared to other models, and plans to support infinitely large models with low token latency in the future.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

manim-generator

The 'manim-generator' repository focuses on automatic video generation using an agentic LLM flow combined with the manim python library. It experiments with automated Manim video creation by delegating code drafting and validation to specific roles, reducing render failures, and improving visual consistency through iterative feedback and vision inputs. The project also includes 'Manim Bench' for comparing AI models on full Manim video generation.

vscode-unify-chat-provider

The 'vscode-unify-chat-provider' repository is a tool that integrates multiple LLM API providers into VS Code's GitHub Copilot Chat using the Language Model API. It offers free tier access to mainstream models, perfect compatibility with major LLM API formats, deep adaptation to API features, best performance with built-in parameters, out-of-the-box configuration, import/export support, great UX, and one-click use of various models. The tool simplifies model setup, migration, and configuration for users, providing a seamless experience within VS Code for utilizing different language models.

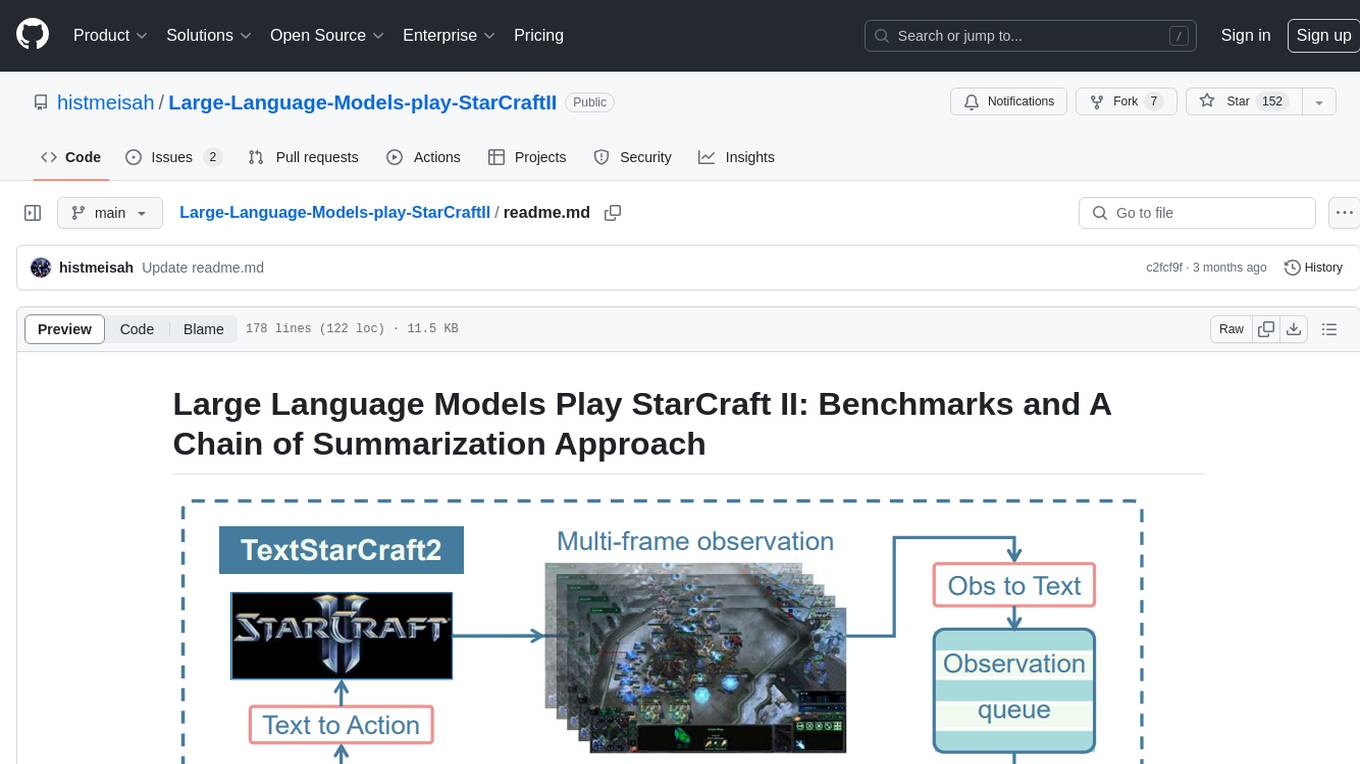

Large-Language-Models-play-StarCraftII

Large Language Models Play StarCraft II is a project that explores the capabilities of large language models (LLMs) in playing the game StarCraft II. The project introduces TextStarCraft II, a textual environment for the game, and a Chain of Summarization method for analyzing game information and making strategic decisions. Through experiments, the project demonstrates that LLM agents can defeat the built-in AI at a challenging difficulty level. The project provides benchmarks and a summarization approach to enhance strategic planning and interpretability in StarCraft II gameplay.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

For similar tasks

llm-structured-output-benchmarks

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

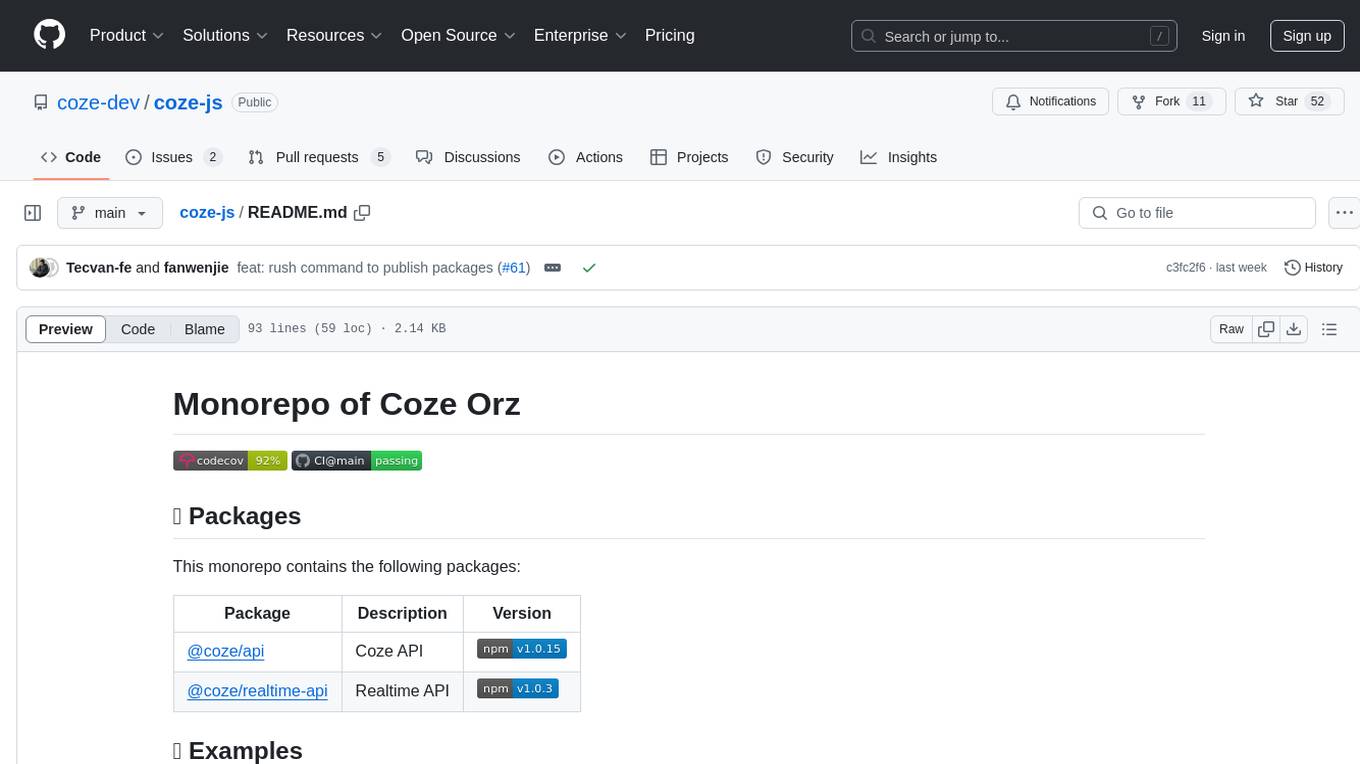

coze-js

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

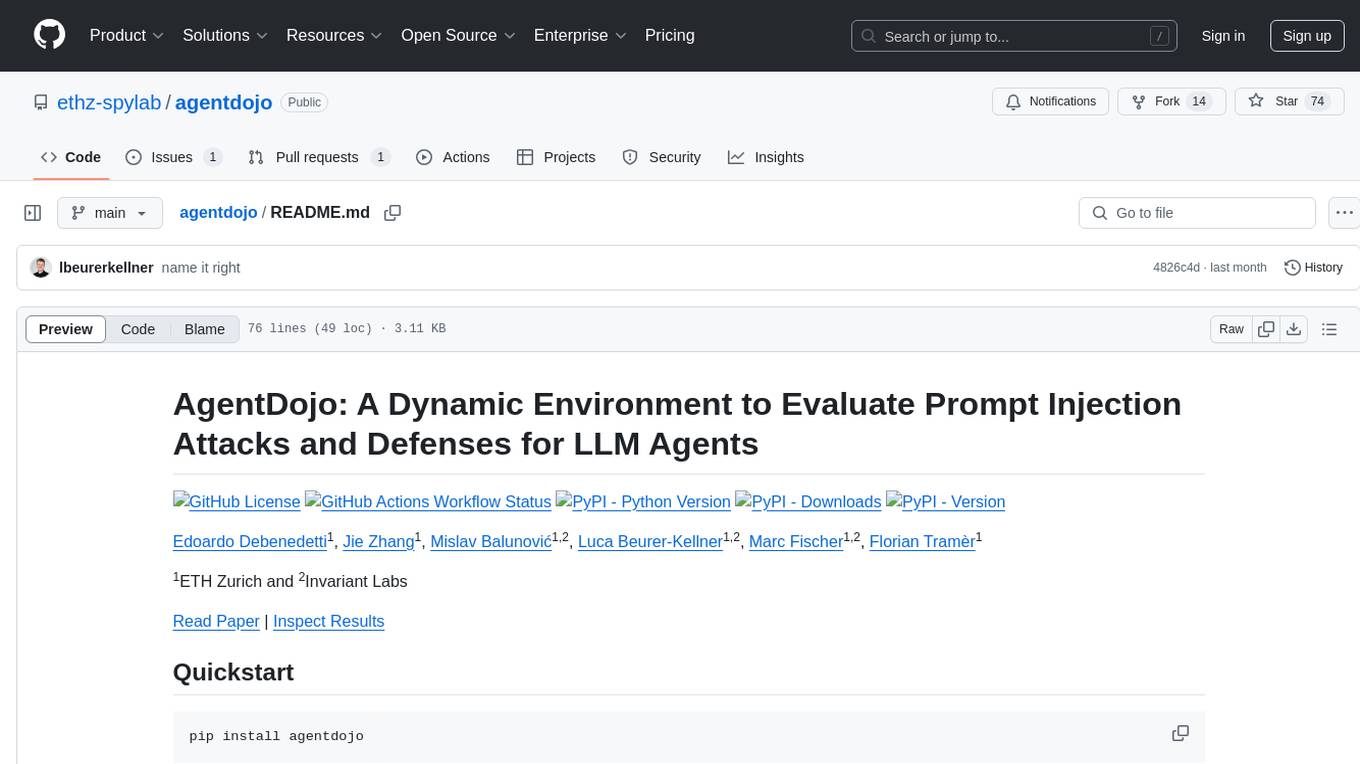

agentdojo

AgentDojo is a dynamic environment designed to evaluate prompt injection attacks and defenses for large language models (LLM) agents. It provides a benchmark script to run different suites and tasks with specified LLM models, defenses, and attacks. The tool is under active development, and users can inspect the results through dedicated documentation pages and the Invariant Benchmark Registry.

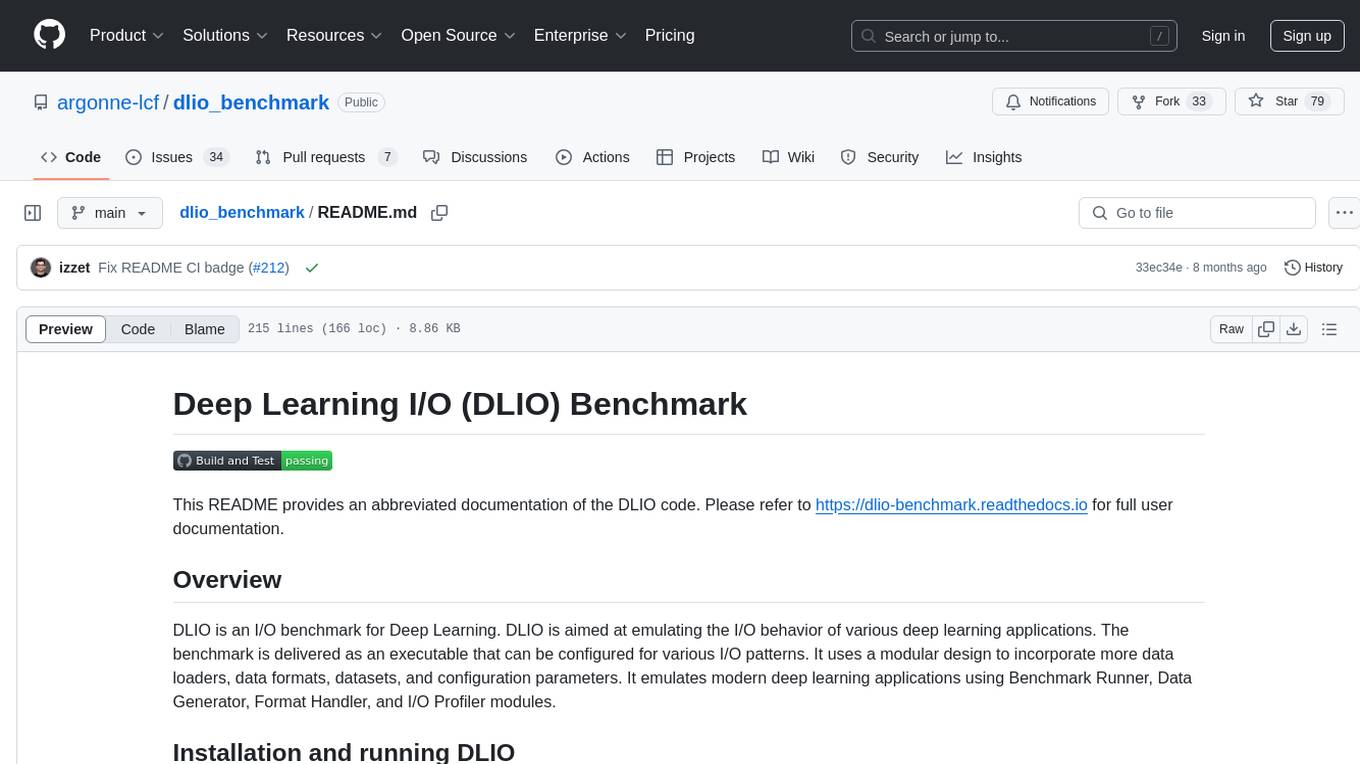

dlio_benchmark

DLIO is an I/O benchmark tool designed for Deep Learning applications. It emulates modern deep learning applications using Benchmark Runner, Data Generator, Format Handler, and I/O Profiler modules. Users can configure various I/O patterns, data loaders, data formats, datasets, and parameters. The tool is aimed at emulating the I/O behavior of deep learning applications and provides a modular design for flexibility and customization.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.