local-deep-research

Local Deep Research achieves ~95% on SimpleQA benchmark (tested with GPT-4.1-mini). Supports local and cloud LLMs (Ollama, Google, Anthropic, ...). Searches 10+ sources - arXiv, PubMed, web, and your private documents. Everything Local & Encrypted.

Stars: 4033

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

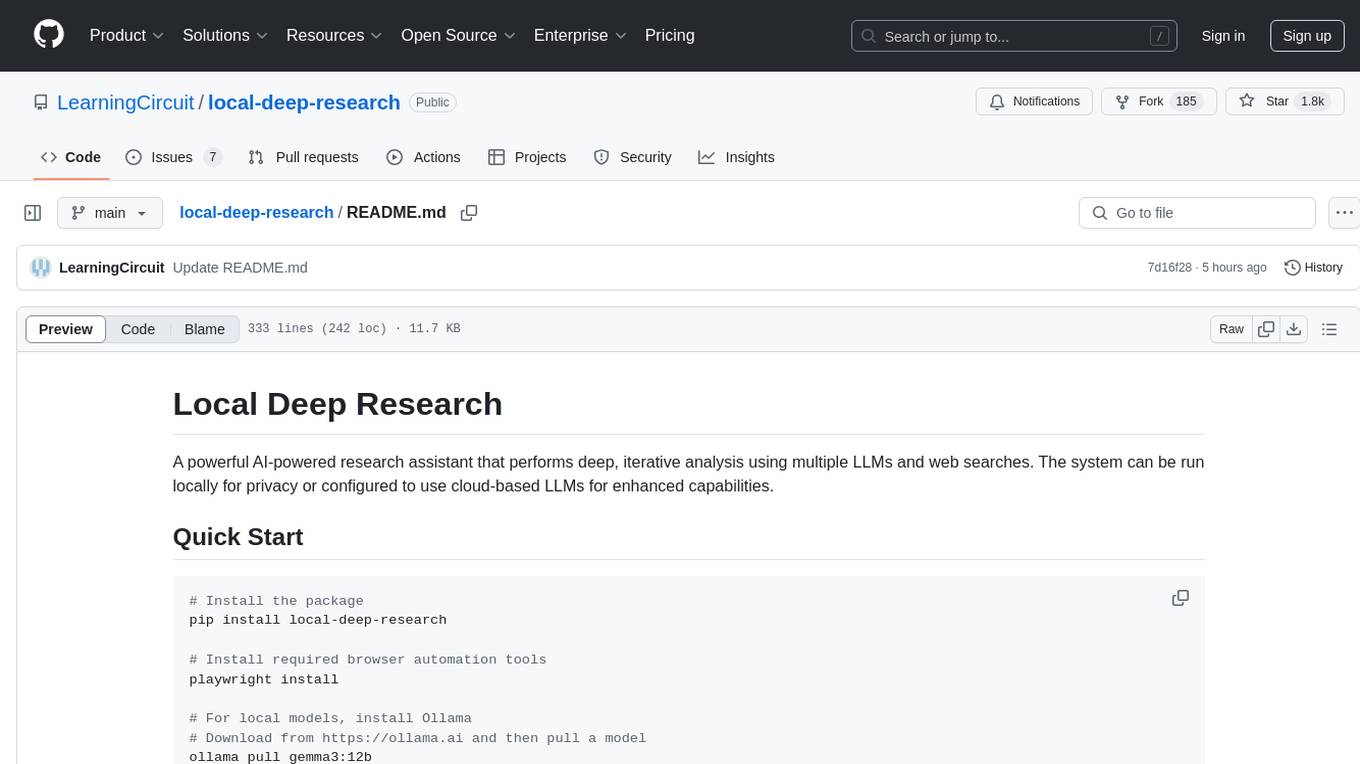

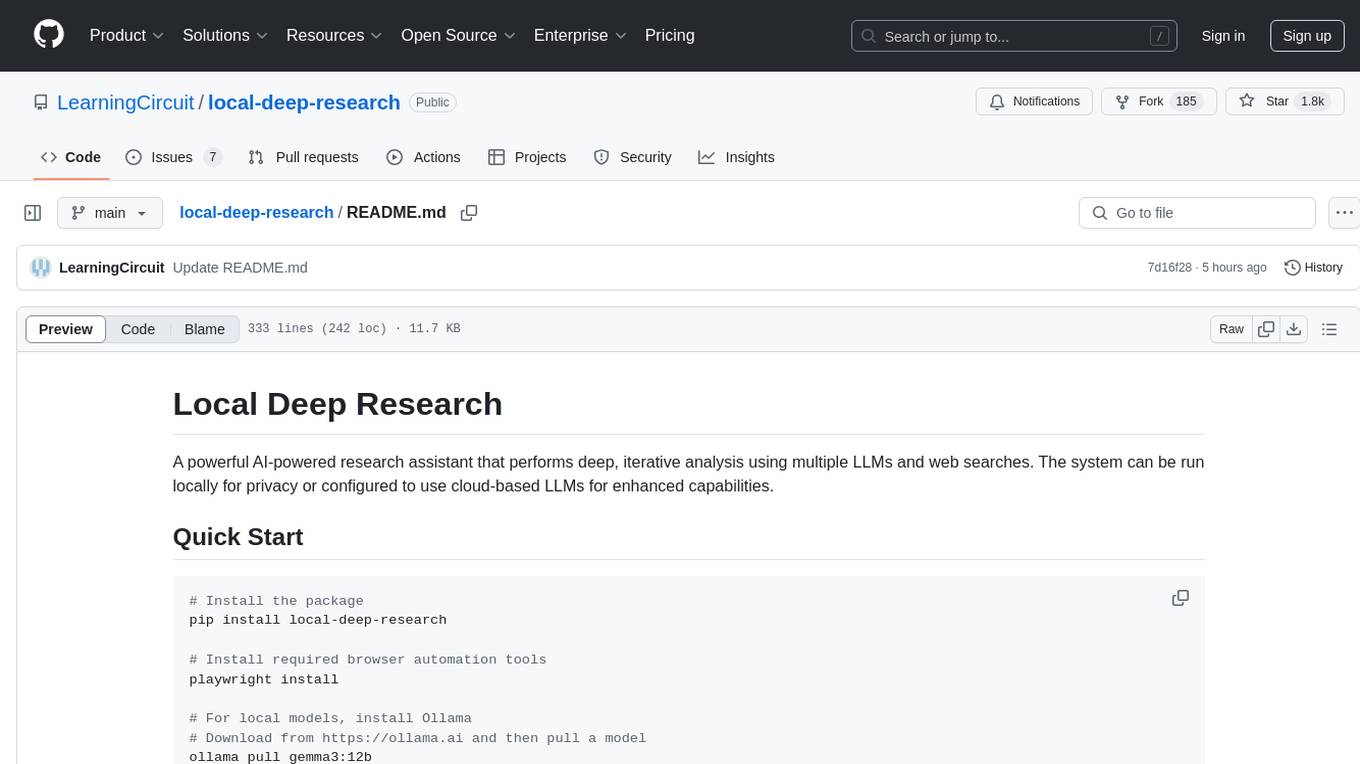

README:

AI-powered research assistant for deep, iterative research

Performs deep, iterative research using multiple LLMs and search engines with proper citations

AI research assistant you control. Run locally for privacy, use any LLM and build your own searchable knowledge base. You own your data and see exactly how it works.

Docker Run (Linux):

# Step 1: Pull and run Ollama

docker run -d -p 11434:11434 --name ollama ollama/ollama

docker exec ollama ollama pull gpt-oss:20b

# Step 2: Pull and run SearXNG for optimal search results

docker run -d -p 8080:8080 --name searxng searxng/searxng

# Step 3: Pull and run Local Deep Research

docker run -d -p 5000:5000 --network host \
  --name local-deep-research \
  --volume 'deep-research:/data' \
  -e LDR_DATA_DIR=/data \
  localdeepresearch/local-deep-research

Example Docker Compose setups:

- Mac or no NVIDIA GPU: Docker Compose File

# Download the compose file and start it in the background
curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml && docker compose up -d

- With NVIDIA GPU (Linux):

# Download both compose files and start them in the background
curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml && \
  curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.gpu.override.yml && \
  docker compose -f docker-compose.yml -f docker-compose.gpu.override.yml up -d

Open http://localhost:5000 after ~30 seconds.

pip install (for developers):

pip install local-deep-research

⚠️ Docker is preferred for most users. SQLCipher installation can be difficult. If you don't need database encryption, run export LDR_ALLOW_UNENCRYPTED=true to skip it; API keys and data will then be stored unencrypted. For encryption setup, see SQLCipher Guide.

You ask a complex question. LDR:

- Does the research for you automatically

- Searches across web, academic papers, and your own documents

- Synthesizes everything into a report with proper citations

Choose from 20+ research strategies for quick facts, deep analysis, or academic research.
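The loop described above (ask, search, synthesize with citations) can be sketched as follows. This is a minimal illustration under stated assumptions, not LDR's implementation. `search` and `next_queries` are hypothetical callables that you would back with a search engine and an LLM. The LLM synthesis step is replaced by collecting snippets with numbered citation markers.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Source:
    title: str
    url: str
    snippet: str


@dataclass
class Report:
    question: str
    findings: List[str] = field(default_factory=list)
    sources: List[Source] = field(default_factory=list)

    def render(self) -> str:
        """Return a plain-text report followed by a numbered source list."""
        lines = [self.question, ""] + self.findings + ["", "Sources:"]
        lines += [f"[{i}] {s.title} - {s.url}" for i, s in enumerate(self.sources, start=1)]
        return "\n".join(lines)


def iterative_research(
    question: str,
    search: Callable[[str], List[Source]],                   # hypothetical: query -> results
    next_queries: Callable[[str, List[Source]], List[str]],  # hypothetical: LLM proposes follow-ups
    max_iterations: int = 3,
) -> Report:
    report = Report(question)
    seen_urls = set()
    queries = [question]
    for _ in range(max_iterations):
        if not queries:
            break  # nothing left to investigate
        for query in queries:
            for src in search(query):
                if src.url in seen_urls:
                    continue  # deduplicate so each source gets exactly one citation number
                seen_urls.add(src.url)
                report.sources.append(src)
                # Stand-in for LLM synthesis: keep the snippet and cite it by number.
                report.findings.append(f"{src.snippet} [{len(report.sources)}]")
        queries = next_queries(question, report.sources)
    return report
```

Deduplicating by URL keeps citation numbers stable across iterations. This matters once follow-up queries start returning sources that were already found.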

flowchart LR

R[Research] --> D[Download Sources]

D --> L[(Library)]

L --> I[Index & Embed]

I --> S[Search Your Docs]

S -.-> R

Every research session finds valuable sources. Download them directly into your encrypted library: academic papers from arXiv, PubMed articles, web pages. LDR extracts text, indexes everything, and makes it searchable. Next time you research, ask questions across your own documents and the live web together. Your knowledge compounds over time.
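To make the Index & Embed → Search Your Docs step concrete, here is a toy sketch. It is not how LDR indexes documents. Real setups use embedding models and a vector store such as FAISS. This sketch instead uses term-frequency vectors and cosine similarity, so it runs on the standard library alone. `TinyLibrary` and its methods are hypothetical names.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyLibrary:
    """Hypothetical in-memory library: term-frequency vectors stand in for embeddings."""

    def __init__(self) -> None:
        self.index: Dict[str, Counter] = {}

    def add(self, doc_id: str, text: str) -> None:
        self.index[doc_id] = Counter(tokenize(text))  # the "Index & Embed" step

    def search(self, query: str, k: int = 3) -> List[Tuple[str, float]]:
        q = Counter(tokenize(query))  # the "Search Your Docs" step
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.index.items()]
        scored = [item for item in scored if item[1] > 0]  # drop documents with no overlap
        scored.sort(key=lambda item: item[1], reverse=True)
        return scored[:k]
```

Swapping `tokenize` and `cosine` for an embedding model and a vector index gives the same add/search shape with semantic rather than lexical matching.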

flowchart LR

U1[User A] --> D1[(Encrypted DB)]

U2[User B] --> D2[(Encrypted DB)]

Your data stays yours. Each user gets their own isolated SQLCipher database encrypted with AES-256 (Signal-level security). No password recovery means true zero-knowledge: even server admins can't read your data. Run fully local with Ollama + SearXNG and nothing ever leaves your machine.
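SQLCipher does not store the AES-256 key. It derives the key from the password with PBKDF2 and a random per-database salt, which is why a lost password cannot be recovered. The sketch below shows that derivation with Python's `hashlib`. The parameters are assumptions modeled on SQLCipher 4's documented defaults (PBKDF2-HMAC-SHA512, 256,000 iterations, 16-byte salt), not values read from LDR's code. `new_salt` and `derive_key` are hypothetical helpers.

```python
import hashlib
import os

# Assumed parameters modeled on SQLCipher 4 defaults; LDR's actual settings may differ.
KDF_ITERATIONS = 256_000
SALT_BYTES = 16
KEY_BYTES = 32  # 256-bit key for AES-256


def new_salt() -> bytes:
    """Random per-database salt. It is not secret; SQLCipher keeps it in the
    first 16 bytes of the database file."""
    return os.urandom(SALT_BYTES)


def derive_key(password: str, salt: bytes, iterations: int = KDF_ITERATIONS) -> bytes:
    """Derive the encryption key from the password. The key itself is never stored,
    so without the password there is nothing to recover it from."""
    return hashlib.pbkdf2_hmac("sha512", password.encode("utf-8"), salt, iterations, dklen=KEY_BYTES)
```

Because each database has its own salt, two users with the same password still end up with different keys.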

In-memory credentials: Like all applications that use secrets at runtime — including password managers, browsers, and API clients — credentials are held in plain text in process memory during active sessions. This is an industry-wide accepted reality, not specific to LDR: if an attacker can read process memory, they can also read any in-process decryption key. We mitigate this with session-scoped credential lifetimes and core dump exclusion. Ideas for further improvements are always welcome via GitHub Issues. See our Security Policy for details.
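The two mitigations named above can be illustrated in a few lines. On Unix, core dump exclusion can be done by setting the core-file size limit to zero. Session-scoped lifetimes amount to forgetting secrets when a session expires or logs out. `SessionCredentials` is a hypothetical class, not LDR's code, and its TTL policy is an assumption. Dropping a reference in Python does not scrub the underlying bytes, because strings are immutable. This matches the caveat above: the window narrows, but it does not disappear.

```python
import resource  # Unix-only standard library module
import time
from typing import Callable, Dict, Optional, Tuple


def disable_core_dumps() -> None:
    """Set soft and hard core-file size limits to 0 so a crash cannot write
    process memory (and any credentials in it) to disk."""
    resource.setrlimit(resource.RLIMIT_CORE, (0, 0))


class SessionCredentials:
    """Hypothetical session-scoped secret store: entries expire after a TTL
    and are removed on logout."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._store: Dict[str, Tuple[str, float]] = {}

    def put(self, session_id: str, secret: str) -> None:
        self._store[session_id] = (secret, self._clock() + self._ttl)

    def get(self, session_id: str) -> Optional[str]:
        entry = self._store.get(session_id)
        if entry is None:
            return None
        secret, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[session_id]  # expired: drop the reference
            return None
        return secret

    def logout(self, session_id: str) -> None:
        self._store.pop(session_id, None)
```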

Supply Chain Security: Docker images are signed with Cosign, include SLSA provenance attestations, and attach SBOMs. Verify with:

cosign verify localdeepresearch/local-deep-research:latest

Detailed Architecture → | Security Policy →

~95% accuracy on SimpleQA benchmark (preliminary results)

- Tested with GPT-4.1-mini + SearXNG + focused-iteration strategy

- Comparable to state-of-the-art AI research systems

- Local models can achieve similar performance with proper configuration

- Join our community benchmarking effort →

- Quick Summary - Get answers in 30 seconds to 3 minutes with citations

- Detailed Research - Comprehensive analysis with structured findings

- Report Generation - Professional reports with sections and table of contents

- Document Analysis - Search your private documents with AI

- LangChain Integration - Use any vector store as a search engine

- REST API - Authenticated HTTP access with per-user databases

- Benchmarking - Test and optimize your configuration

- Analytics Dashboard - Track costs, performance, and usage metrics

- Real-time Updates - WebSocket support for live research progress

- Export Options - Download results as PDF or Markdown

- Research History - Save, search, and revisit past research

- Adaptive Rate Limiting - Intelligent retry system that learns optimal wait times

- Keyboard Shortcuts - Navigate efficiently (ESC, Ctrl+Shift+1-5)

- Per-User Encrypted Databases - Secure, isolated data storage for each user

- Automated Research Digests - Subscribe to topics and receive AI-powered research summaries

- Customizable Frequency - Daily, weekly, or custom schedules for research updates

- Smart Filtering - AI filters and summarizes only the most relevant developments

- Multi-format Delivery - Get updates as markdown reports or structured summaries

- Topic & Query Support - Track specific searches or broad research areas

- Academic: arXiv, PubMed, Semantic Scholar

- General: Wikipedia, SearXNG

- Technical: GitHub, Elasticsearch

- Historical: Wayback Machine

- News: The Guardian, Wikinews

- Tavily - AI-powered search

- Google - Via SerpAPI or Programmable Search Engine

- Brave Search - Privacy-focused web search

- Local Documents - Search your files with AI

- LangChain Retrievers - Any vector store or database

- Meta Search - Combine multiple engines intelligently
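Several of these engines enforce rate limits, which is what the Adaptive Rate Limiting feature addresses. The following is a hypothetical sketch of one common approach, not LDR's algorithm. The per-engine wait doubles after a rate-limited request and decays gradually after successes. `AdaptiveWaitEstimator`, `call_with_backoff`, and all default values are illustrative assumptions.

```python
import time
from typing import Callable, Dict


class AdaptiveWaitEstimator:
    """Hypothetical per-engine wait learner: double the wait after a
    rate-limited request, decay it after a success."""

    def __init__(self, initial: float = 1.0, floor: float = 0.1,
                 cap: float = 60.0, decay: float = 0.9) -> None:
        self.initial, self.floor, self.cap, self.decay = initial, floor, cap, decay
        self.waits: Dict[str, float] = {}

    def wait_for(self, engine: str) -> float:
        return self.waits.get(engine, self.initial)

    def record(self, engine: str, rate_limited: bool) -> None:
        wait = self.wait_for(engine)
        if rate_limited:
            wait = min(wait * 2, self.cap)             # back off quickly
        else:
            wait = max(wait * self.decay, self.floor)  # probe for a shorter wait
        self.waits[engine] = wait


def call_with_backoff(engine: str, request: Callable[[], bool],
                      estimator: AdaptiveWaitEstimator, max_attempts: int = 5,
                      sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call request() until it succeeds, pacing each attempt by the learned wait.
    request() is assumed to return False when the engine rate-limits the caller."""
    for _ in range(max_attempts):
        sleep(estimator.wait_for(engine))
        ok = request()
        estimator.record(engine, rate_limited=not ok)
        if ok:
            return True
    return False
```

Keeping one wait per engine means a strict API (for example a rate-limited academic index) does not slow down requests to a self-hosted SearXNG instance.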

# Step 1: Pull and run SearXNG for optimal search results

docker run -d -p 8080:8080 --name searxng searxng/searxng

# Step 2: Pull and run Local Deep Research

docker run -d -p 5000:5000 --network host \
  --name local-deep-research \
  --volume 'deep-research:/data' \
  -e LDR_DATA_DIR=/data \
  localdeepresearch/local-deep-research

LDR uses Docker Compose to bundle the web app and all its dependencies so you can get up and running quickly.

Default: CPU-only base (works on all platforms)

The base configuration works on macOS (M1/M2/M3/M4 and Intel), Windows, and Linux without requiring any GPU hardware.

Quick Start Command:

Note: curl -O will overwrite existing docker-compose.yml files in the current directory.

Linux/macOS:

curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml && docker compose up -d

Windows (PowerShell required):

curl.exe -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml

if ($?) { docker compose up -d }

Use with a different model:

curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml && MODEL=gpt-oss:20b docker compose up -d

For users with NVIDIA GPUs who want hardware acceleration:

Prerequisites:

Install the NVIDIA Container Toolkit first (Ubuntu/Debian):

# Install NVIDIA Container Toolkit (for GPU support)

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
  sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
  sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

sudo apt-get update

sudo apt-get install nvidia-container-toolkit -y

sudo systemctl restart docker

# Verify installation

nvidia-smi

Verify: The nvidia-smi command should display your GPU information. If it fails, check your NVIDIA driver installation.

Note: For RHEL/CentOS/Fedora, Arch, or other Linux distributions, see the NVIDIA Container Toolkit installation guide.

Quick Start Commands:

Note: curl -O will overwrite existing files in the current directory.

curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.yml && \
  curl -O https://raw.githubusercontent.com/LearningCircuit/local-deep-research/main/docker-compose.gpu.override.yml && \
  docker compose -f docker-compose.yml -f docker-compose.gpu.override.yml up -d

Optional: Create an alias for convenience:

alias docker-compose-gpu='docker compose -f docker-compose.yml -f docker-compose.gpu.override.yml'

# Then simply use: docker-compose-gpu up -d

Open http://localhost:5000 after ~30 seconds. This starts LDR with SearXNG and all dependencies.
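Instead of waiting a fixed ~30 seconds, a script can poll the web UI until it answers. Here is a minimal sketch using only the Python standard library. `wait_for_http` is a hypothetical helper. It treats any HTTP response, including a 4xx, as "up", which is a design assumption.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str, timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll url until the server answers or timeout seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True  # got a successful response (redirects are followed)
        except urllib.error.HTTPError:
            return True  # the server answered with an error status, so it is running
        except (urllib.error.URLError, OSError):
            pass  # not listening yet: connection refused, reset, or timed out
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, `wait_for_http("http://localhost:5000", timeout=120)` returns once LDR is ready to accept a browser connection. `HTTPError` is caught before `URLError` because it is a subclass of it.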

See docker-compose.yml for a Docker Compose file with reasonable defaults for running Ollama, SearXNG, and Local Deep Research together locally.

Things you may want/need to configure:

- Ollama GPU driver

- Ollama context length (depends on available VRAM)

- Ollama keep alive (duration model will stay loaded into VRAM and idle before getting unloaded automatically)

- Deep Research model (depends on available VRAM and preference)

Prerequisites for the Cookiecutter setup:

- Docker

- Docker Compose

- cookiecutter: Run pip install --user cookiecutter

Clone the repository:

git clone https://github.com/LearningCircuit/local-deep-research.git

cd local-deep-research

Cookiecutter will interactively guide you through the process of creating a docker-compose configuration that meets your specific needs. This is the recommended approach if you are not very familiar with Docker.

In the LDR repository, run the following command to generate the compose file:

cookiecutter cookiecutter-docker/

docker compose -f docker-compose.default.yml up

Note: For most users, Docker is preferred as it handles all dependencies automatically. pip install is best suited for developers or users who want to integrate LDR into existing Python projects. SQLCipher installation can be difficult; see the note below for how to skip it.

# Step 1: Install the package

pip install local-deep-research

# Step 2: Setup SearXNG for best results

docker pull searxng/searxng

docker run -d -p 8080:8080 --name searxng searxng/searxng

# Step 3: Install Ollama from https://ollama.ai

# Step 4: Download a model

ollama pull gemma3:12b

# Step 5: Start the web interface

python -m local_deep_research.web.app

⚠️ SQLCipher Note: For database encryption (AES-256), install the system-level SQLCipher libraries (see SQLCipher Guide). If you don't need encryption, run export LDR_ALLOW_UNENCRYPTED=true to use standard SQLite; API keys and data will then be stored unencrypted. Docker includes encryption out of the box.

Note: For development from source, see the Development Guide.

VLLM support (for running transformer models directly):

pip install "local-deep-research[vllm]"

This installs torch, transformers, and vllm for advanced local model hosting. Most users running Ollama or LlamaCpp don't need this.

For Unraid users:

Local Deep Research is fully compatible with Unraid servers!

- Navigate to Docker tab → Docker Repositories

- Add template repository: https://github.com/LearningCircuit/local-deep-research

- Click Add Container → Select LocalDeepResearch from template

- Configure paths (default: /mnt/user/appdata/local-deep-research/)

- Click Apply

If you prefer using Docker Compose on Unraid:

- Install "Docker Compose Manager" from Community Applications

- Create a new stack with the compose file from this repo

- Update volume paths to Unraid format (/mnt/user/appdata/...)

Features on Unraid:

- ✅ Pre-configured template with sensible defaults

- ✅ Automatic SearXNG and Ollama integration

- ✅ NVIDIA GPU passthrough support (optional)

- ✅ Integration with Unraid shares for document search

- ✅ Backup integration with CA Appdata Backup plugin

from local_deep_research.api import LDRClient, quick_query

# Option 1: Simplest - one line research

summary = quick_query("username", "password", "What is quantum computing?")

print(summary)

# Option 2: Client for multiple operations

client = LDRClient()

client.login("username", "password")

result = client.quick_research("What are the latest advances in quantum computing?")

print(result["summary"])

The code example below shows the basic HTTP API structure. For working examples, see the link below it.

import requests

from bs4 import BeautifulSoup

# Create session and authenticate

session = requests.Session()

login_page = session.get("http://localhost:5000/auth/login")

soup = BeautifulSoup(login_page.text, "html.parser")

login_csrf = soup.find("input", {"name": "csrf_token"}).get("value")

# Login and get API CSRF token

session.post("http://localhost:5000/auth/login",

data={"username": "user", "password": "pass", "csrf_token": login_csrf})

csrf = session.get("http://localhost:5000/auth/csrf-token").json()["csrf_token"]

# Make API request

response = session.post("http://localhost:5000/api/start_research",

json={"query": "Your research question"},

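headers={"X-CSRF-Token": csrf})

response.raise_for_status()  # Raise an error if the request was rejected (e.g. bad login or CSRF token)

🚀 Ready-to-use HTTP API Examples → examples/api_usage/http/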

- ✅ Automatic user creation - works out of the box

- ✅ Complete authentication with CSRF handling

- ✅ Result retry logic - waits until research completes

- ✅ Progress monitoring and error handling
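The "Result retry logic" bullet above comes down to polling until the research finishes. Here is a minimal, transport-agnostic sketch. `fetch_status` is any callable that returns the parsed JSON status, for example a `session.get(...).json()` call against the status endpoint used in examples/api_usage/http/. The `"status"` key and the state strings checked here (`"completed"`, `"failed"`, `"error"`, `"suspended"`) are assumptions, so check them against real API responses.

```python
import time
from typing import Any, Callable, Dict


class ResearchFailed(RuntimeError):
    """Raised when the server reports a terminal failure state."""


# Assumed status values; verify against the real API responses.
DONE = "completed"
FAILED = {"failed", "error", "suspended"}


def wait_for_research(
    fetch_status: Callable[[], Dict[str, Any]],
    poll_interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, Any]:
    """Poll fetch_status() until research completes, fails, or times out."""
    deadline = clock() + timeout
    while True:
        status = fetch_status()
        state = status.get("status")  # assumed key name
        if state == DONE:
            return status
        if state in FAILED:
            raise ResearchFailed(f"research ended with status {state!r}")
        if clock() >= deadline:
            raise TimeoutError("research did not finish before the timeout")
        sleep(poll_interval)
```

Injecting `sleep` and `clock` keeps the helper independent of HTTP and easy to test. In real use, the defaults simply sleep between polls.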

# Run benchmarks from CLI

python -m local_deep_research.benchmarks --dataset simpleqa --examples 50

# Manage rate limiting

python -m local_deep_research.web_search_engines.rate_limiting status

python -m local_deep_research.web_search_engines.rate_limiting reset

Connect LDR to your existing knowledge base:

from local_deep_research.api import quick_summary

# Use your existing LangChain retriever

result = quick_summary(

query="What are our deployment procedures?",

retrievers={"company_kb": your_retriever},

search_tool="company_kb"

)

Works with: FAISS, Chroma, Pinecone, Weaviate, Elasticsearch, and any LangChain-compatible retriever.

Early experiments on small SimpleQA dataset samples:

| Configuration | Accuracy | Notes |
|---|---|---|
| gpt-4.1-mini + SearXNG + focused_iteration | 90-95% | Limited sample size |
| gpt-4.1-mini + Tavily + focused_iteration | 90-95% | Limited sample size |
| gemini-2.0-flash-001 + SearXNG | 82% | Single test run |

Note: These are preliminary results from initial testing. Performance varies significantly based on query types, model versions, and configurations. Run your own benchmarks →
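With samples this small, a single accuracy figure hides a lot of uncertainty. One standard way to quantify it is the Wilson score interval for a binomial proportion. The sketch below is not part of LDR's benchmarking tool. The 47-of-50 run is a hypothetical example chosen to match the --examples 50 flag, not a reported result.

```python
import math
from typing import Tuple


def wilson_interval(correct: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion; z=1.96 gives ~95% coverage."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = correct / total
    z2 = z * z
    denom = 1 + z2 / total
    center = (p + z2 / (2 * total)) / denom
    margin = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total)) / denom
    return center - margin, center + margin


# Hypothetical run, not a reported result: 47 of 50 SimpleQA questions correct.
low, high = wilson_interval(47, 50)
print(f"observed 94%, 95% interval {low:.1%} to {high:.1%}")
```

For 47 of 50 correct, the 95% interval spans roughly 84% to 98%. This is why single-run figures like those in the table are best read as ranges. The interval narrows as the number of examples grows.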

Track costs, performance, and usage with detailed metrics. Learn more →

- Llama 3, Mistral, Gemma, DeepSeek

- LLM processing stays local (search queries still go to web)

- No API costs

- OpenAI (GPT-4, GPT-3.5)

- Anthropic (Claude 3)

- Google (Gemini)

- 100+ models via OpenRouter

- Installation Guide

- Frequently Asked Questions

- API Quickstart

- Configuration Guide

- Full Configuration Reference

"Local Deep Research deserves special mention for those who prioritize privacy... tuned to use open-source LLMs that can run on consumer GPUs or even CPUs. Journalists, researchers, or companies with sensitive topics can investigate information without queries ever hitting an external server."

- Korben.info - French tech blog ("Sherlock Holmes numérique", "the digital Sherlock Holmes")

- Roboto.fr - "L'alternative open-source gratuite à Deep Research d'OpenAI" ("The free open-source alternative to OpenAI's Deep Research")

- KDJingPai AI Tools - AI productivity tools coverage

- AI Sharing Circle - AI resources coverage

- Hacker News - 190+ points, community discussion

- LangChain Twitter/X - Official LangChain promotion

- LangChain LinkedIn - 400+ likes

- Juejin (掘金) - Developer community

- Cnblogs (博客园) - Developer blogs

- GitHubDaily (Twitter/X) - Influential tech account

- Zhihu (知乎) - Tech community

- A姐分享 - AI resources

- CSDN - Installation guide

- NetEase (网易) - Tech news portal

- note.com: 調査革命:Local Deep Research徹底活用法 ("Research Revolution: Making Full Use of Local Deep Research") - Comprehensive tutorial

- Qiita: Local Deep Researchを試す ("Trying Out Local Deep Research") - Docker setup guide

- LangChainJP (Twitter/X) - Japanese LangChain community

- PyTorch Korea Forum - Korean ML community

- GeekNews (Hada.io) - Korean tech news

- BSAIL Lab: How useful is Deep Research in Academia? - Academic review by contributor @djpetti

- The Art Of The Terminal: Use Local LLMs Already! - Comprehensive review of local AI tools, featuring LDR's research capabilities (embeddings now work!)

- SearXNG LDR-Academic - Academic-focused SearXNG fork with 12 research engines (arXiv, Google Scholar, PubMed, etc.) designed for LDR

- DeepWiki Documentation - Third-party documentation and guides

Note: Third-party projects and articles are independently maintained. We link to them as useful resources but cannot guarantee their code quality or security.

- Discord - Get help and share research techniques

- Reddit - Updates and showcases

- GitHub Issues - Bug reports

We welcome contributions of all sizes — from typo fixes to new features. The key rule: keep PRs small and atomic (one change per PR). For larger changes, please open an issue or start a discussion first — we want to protect your time and make sure your effort leads to a successful merge rather than a misaligned PR. See our Contributing Guide to get started.

MIT License - see LICENSE file.

Dependencies: All third-party packages use permissive licenses (MIT, Apache-2.0, BSD, etc.) - see allowlist

Built with: LangChain, Ollama, SearXNG, FAISS

Support Free Knowledge: Consider donating to Wikipedia, arXiv, or PubMed.


Alternative AI tools for local-deep-research

Similar Open Source Tools


local-cocoa

Local Cocoa is a privacy-focused tool that runs entirely on your device, turning files into memory to spark insights and power actions. It offers features like fully local privacy, multimodal memory, vector-powered retrieval, intelligent indexing, vision understanding, hardware acceleration, focused user experience, integrated notes, and auto-sync. The tool combines file ingestion, intelligent chunking, and local retrieval to build a private on-device knowledge system. The ultimate goal includes more connectors like Google Drive integration, voice mode for local speech-to-text interaction, and a plugin ecosystem for community tools and agents. Local Cocoa is built using Electron, React, TypeScript, FastAPI, llama.cpp, and Qdrant.

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe—including data, model, training methodology, and evaluation framework for everyone to progress deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.

BubbleLab

Bubble Lab is an open-source agentic workflow automation builder designed for developers seeking full control, transparency, and type safety. It compiles workflows into clean, production-ready TypeScript code that can be debugged and deployed anywhere. With features like natural language prompt to workflow generation, full observability, seamless migration from other platforms, and instant export as TypeScript/API, Bubble Lab offers a flexible and code-centric approach to workflow automation.

open-health

OpenHealth is an AI health assistant that helps users manage their health data by leveraging AI and personal health information. It allows users to consolidate health data, parse it smartly, and engage in contextual conversations with GPT-powered AI. The tool supports various data sources like blood test results, health checkup data, personal physical information, family history, and symptoms. OpenHealth aims to empower users to take control of their health by combining data and intelligence for actionable health management.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. R2R is to LangChain/LlamaIndex what NextJS is to React. A JavaScript client for R2R deployments can be found here.

Key Features:

- 🚀 Deploy: Instantly launch production-ready RAG pipelines with streaming capabilities.

- 🧩 Customize: Tailor your pipeline with intuitive configuration files.

- 🔌 Extend: Enhance your pipeline with custom code integrations.

- ⚖️ Autoscale: Scale your pipeline effortlessly in the cloud using SciPhi.

- 🤖 OSS: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

InsForge

InsForge is a backend development platform designed for AI coding agents and AI code editors. It serves as a semantic layer that enables agents to interact with backend primitives such as databases, authentication, storage, and functions in a meaningful way. The platform allows agents to fetch backend context, configure primitives, and inspect backend state through structured schemas. InsForge facilitates backend context engineering for AI coding agents to understand, operate, and monitor backend systems effectively.

strix

Strix is an open-source AI tool designed to help developers and security teams find and fix vulnerabilities in applications. It offers a full hacker toolkit, teams of autonomous AI agents for collaboration, real validation with proof-of-concepts, a developer-first CLI with actionable reports, and auto-fix & reporting features. Strix can be used for application security testing, rapid penetration testing, bug bounty automation, and CI/CD integration. It provides comprehensive vulnerability detection for various security issues and offers advanced multi-agent orchestration for security testing. The tool supports basic and advanced testing scenarios, headless mode for automated jobs, and CI/CD integration with GitHub Actions. Strix also offers configuration options for optimal performance and recommends specific AI models for best results. Full documentation is available at docs.strix.ai, and contributions to the project are welcome.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

seline

Seline is a local-first AI desktop application that integrates conversational AI, visual generation tools, vector search, and multi-channel connectivity. It allows users to connect WhatsApp, Telegram, or Slack to create always-on bots with full context and background task delivery. The application supports multi-channel connectivity, deep research mode, local web browsing with Puppeteer, local knowledge and privacy features, visual and creative tools, automation and agents, developer experience enhancements, and more. Seline is actively developed with a focus on improving user experience and functionality.

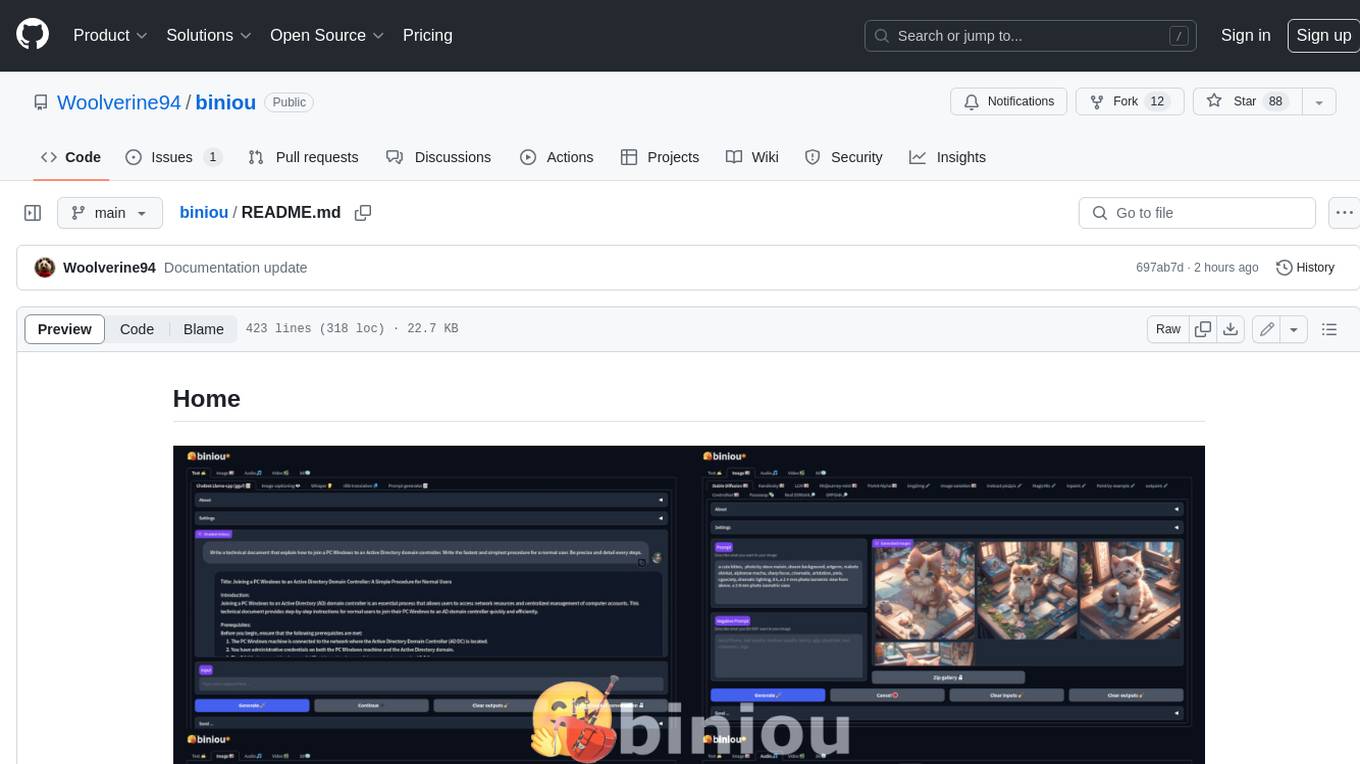

biniou

biniou is a self-hosted webui for various GenAI (generative artificial intelligence) tasks. It allows users to generate multimedia content using AI models and chatbots on their own computer, even without a dedicated GPU. The tool can work offline once deployed and required models are downloaded. It offers a wide range of features for text, image, audio, video, and 3D object generation and modification. Users can easily manage the tool through a control panel within the webui, with support for various operating systems and CUDA optimization. biniou is powered by Huggingface and Gradio, providing a cross-platform solution for AI content generation.

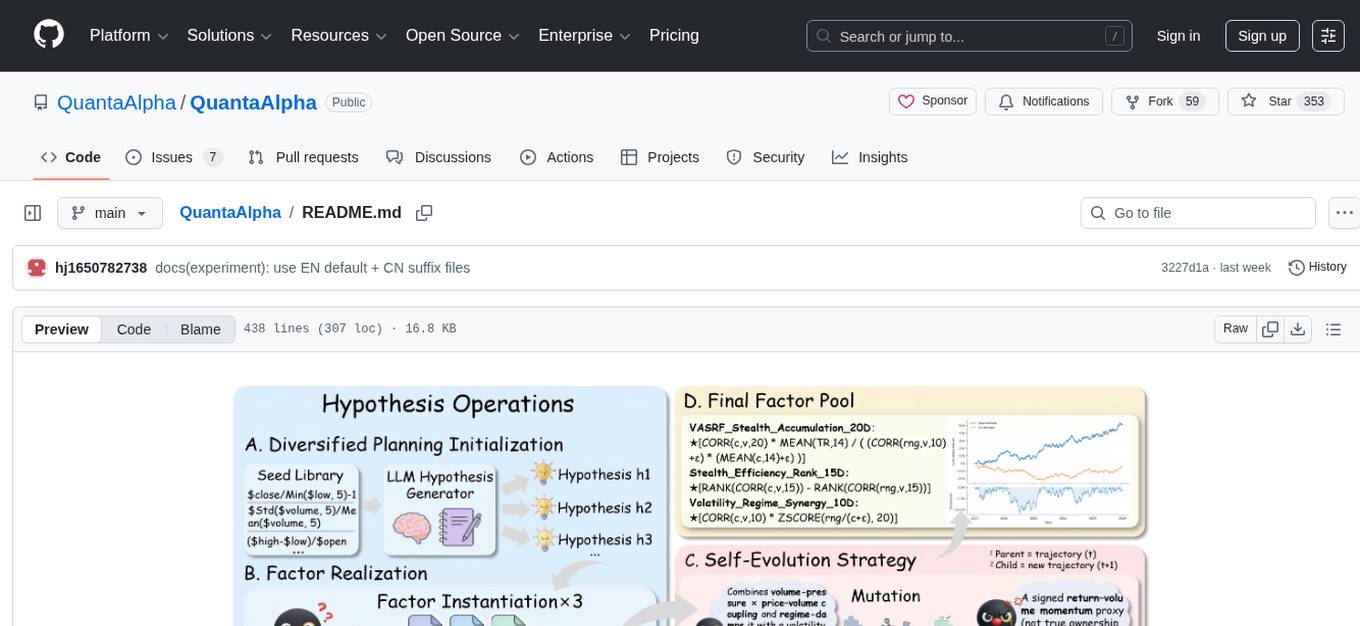

QuantaAlpha

QuantaAlpha is a framework designed for factor mining in quantitative alpha research. It combines LLM intelligence with evolutionary strategies to automatically mine, evolve, and validate alpha factors through self-evolving trajectories. The framework provides a trajectory-based approach with diversified planning initialization and structured hypothesis-code constraint. Users can describe their research direction and observe the automatic factor mining process. QuantaAlpha aims to transform how quantitative alpha factors are discovered by leveraging advanced technologies and self-evolving methodologies.

For similar tasks

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer's subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

mojo

Mojo is a new programming language that bridges the gap between research and production by combining Python syntax and ecosystem with systems programming and metaprogramming features. Mojo is still young, but it is designed to become a superset of Python over time.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions of your data in natural language. It helps you explore, clean, and analyze your data using generative AI.

databend

Databend is an open-source cloud data warehouse that serves as a cost-effective alternative to Snowflake. With its focus on fast query execution and data ingestion, it's designed for complex analysis of the world's largest datasets.

For similar jobs

SLR-FC

This repository provides a comprehensive collection of AI tools and resources to enhance literature reviews. It includes a curated list of AI tools for various tasks, such as identifying research gaps, discovering relevant papers, visualizing paper content, and summarizing text. Additionally, the repository offers materials on generative AI, effective prompts, copywriting, image creation, and showcases of AI capabilities. By leveraging these tools and resources, researchers can streamline their literature review process, gain deeper insights from scholarly literature, and improve the quality of their research outputs.

paper-ai

Paper-ai is a tool that helps you write papers using artificial intelligence. It provides features such as AI writing assistance, reference searching, and editing and formatting tools. With Paper-ai, you can quickly and easily create high-quality papers.

paper-qa

PaperQA is a minimal package for question answering over PDFs or text files that produces answers with in-text citations. It uses OpenAI embeddings to embed and search documents, and follows this process: embed the documents and the query, search for the top passages, summarize those passages, score and select the relevant summaries, put the selected summaries into the prompt, and generate the answer. Users can customize the prompts and choose different models for embeddings and LLMs. The tool can be used asynchronously and supports adding documents from paths, files, or URLs.
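The retrieval pipeline described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not PaperQA's API: a bag-of-words count vector stands in for OpenAI embeddings, a "pick the best-matching sentence" rule stands in for the LLM summarizer, and the names `embed`, `summarize`, `build_prompt` and the `min_score` threshold are hypothetical. The final step, sending the prompt to an LLM, is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for OpenAI embeddings: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def summarize(passage: str, query: str) -> str:
    # Hypothetical summarizer: keep the sentence sharing the most terms with the query.
    q = embed(query)
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", passage) if s.strip()]
    return max(sentences, key=lambda s: sum((embed(s) & q).values()), default=passage)


def build_prompt(docs: dict[str, str], query: str, k: int = 3, min_score: float = 0.1) -> str:
    q = embed(query)
    # Embed docs and query, then keep the top-k most similar passages.
    ranked = sorted(docs.items(), key=lambda kv: cosine(embed(kv[1]), q), reverse=True)[:k]
    # Summarize each retained passage with respect to the query.
    summaries = [(key, summarize(text, query)) for key, text in ranked]
    # Score the summaries and drop those below the (hypothetical) threshold.
    kept = [(key, s) for key, s in summaries if cosine(embed(s), q) >= min_score]
    # Put the surviving summaries, tagged with citation keys, into the prompt.
    context = "\n".join(f"({key}) {s}" for key, s in kept)
    return f"Answer using the context and cite sources.\nContext:\n{context}\nQuestion: {query}\nAnswer:"


docs = {
    "smith2020": "Cats sleep a lot. They purr when happy.",
    "lee2021": "Transformers use attention. Attention weighs tokens.",
    "kim2019": "Dogs bark loudly.",
}
prompt = build_prompt(docs, "How do transformers use attention?", k=2)
print(prompt)
```

Keeping a citation key next to each summary is what lets the generated answer carry in-text citations; a real system would replace each stand-in with an embedding model and LLM calls.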

ChatData

ChatData is a robust chat-with-documents application designed to extract information and provide answers by querying the MyScale free knowledge base or uploaded documents. It leverages the Retrieval Augmented Generation (RAG) framework, millions of Wikipedia pages, and arXiv papers. Features include self-querying retriever, VectorSQL, session management, and building a personalized knowledge base. Users can effortlessly navigate vast data, explore academic papers, and research documents. ChatData empowers researchers, students, and knowledge enthusiasts to unlock the true potential of information retrieval.

noScribe

noScribe is an AI-based software designed for automated audio transcription, specifically tailored for transcribing interviews for qualitative social research or journalistic purposes. It is a free and open-source tool that runs locally on the user's computer, ensuring data privacy. The software can differentiate between speakers and supports transcription in 99 languages. It includes a user-friendly editor for reviewing and correcting transcripts. Developed by Kai Dröge, a PhD in sociology with a background in computer science, noScribe aims to streamline the transcription process and enhance the efficiency of qualitative analysis.

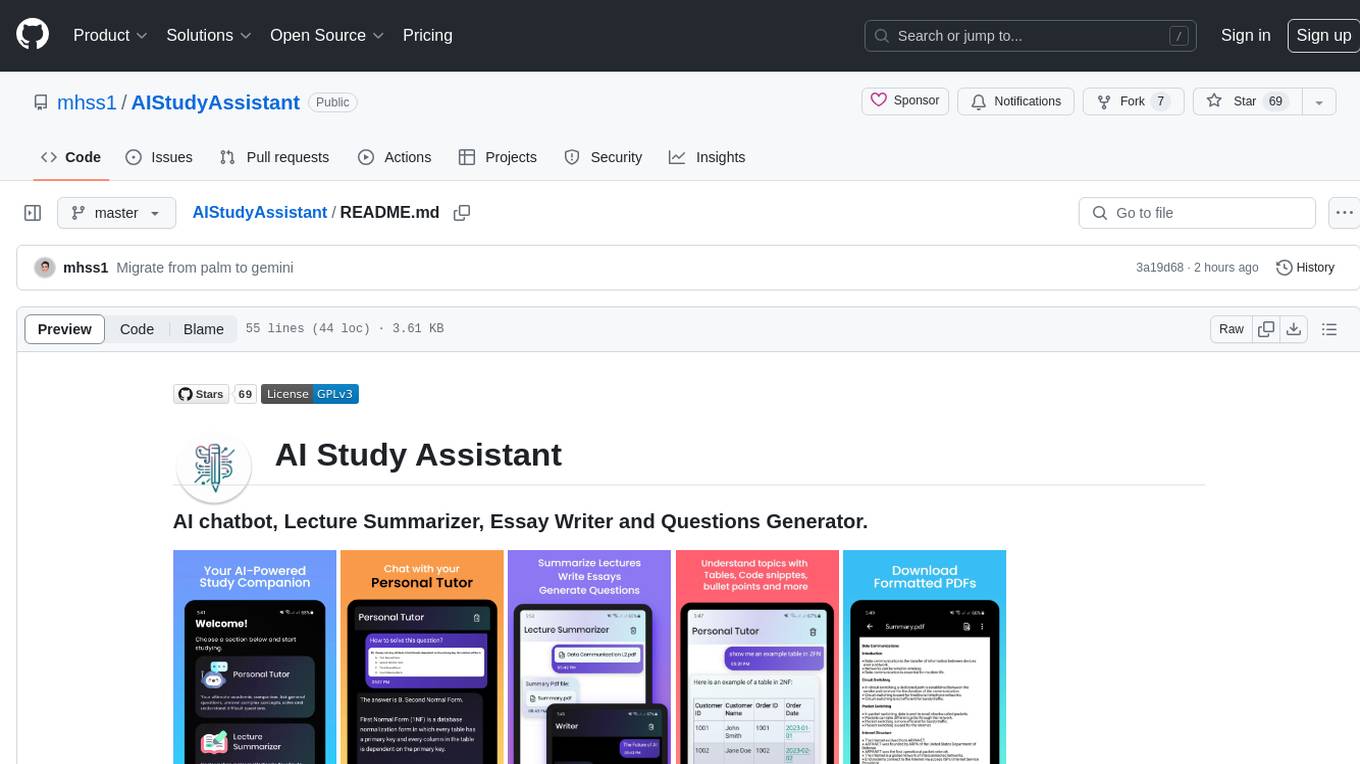

AIStudyAssistant

AI Study Assistant is an app designed to enhance the learning experience and boost academic performance. It serves as a personal tutor, lecture summarizer, writer, and question generator powered by Google PaLM 2. Features include interacting with an AI chatbot, summarizing lectures, generating essays, and creating practice questions. The app is written entirely in Kotlin with Jetpack Compose, follows Clean Architecture and the MVVM design pattern, and uses technologies such as Ktor, Room DB, Hilt, and Kotlin coroutines. AI Study Assistant aims to provide comprehensive AI-powered assistance for students across various academic tasks.

data-to-paper

Data-to-paper is an AI-driven framework designed to guide users through the process of conducting end-to-end scientific research, starting from raw data to the creation of comprehensive and human-verifiable research papers. The framework leverages a combination of LLM and rule-based agents to assist in tasks such as hypothesis generation, literature search, data analysis, result interpretation, and paper writing. It aims to accelerate research while maintaining key scientific values like transparency, traceability, and verifiability. The framework is field-agnostic, supports both open-goal and fixed-goal research, creates data-chained manuscripts, involves human-in-the-loop interaction, and allows for transparent replay of the research process.

k2

K2 (GeoLLaMA) is a large language model for geoscience, trained on geoscience literature and fine-tuned with knowledge-intensive instruction data. It outperforms baseline models on objective and subjective tasks. The repository provides the K2 weights, the core data of GeoSignal, the GeoBench benchmark, and code for further pretraining and instruction tuning. The model is available on Hugging Face. The project aims to create larger and more powerful geoscience language models in the future.