TPI-LLM

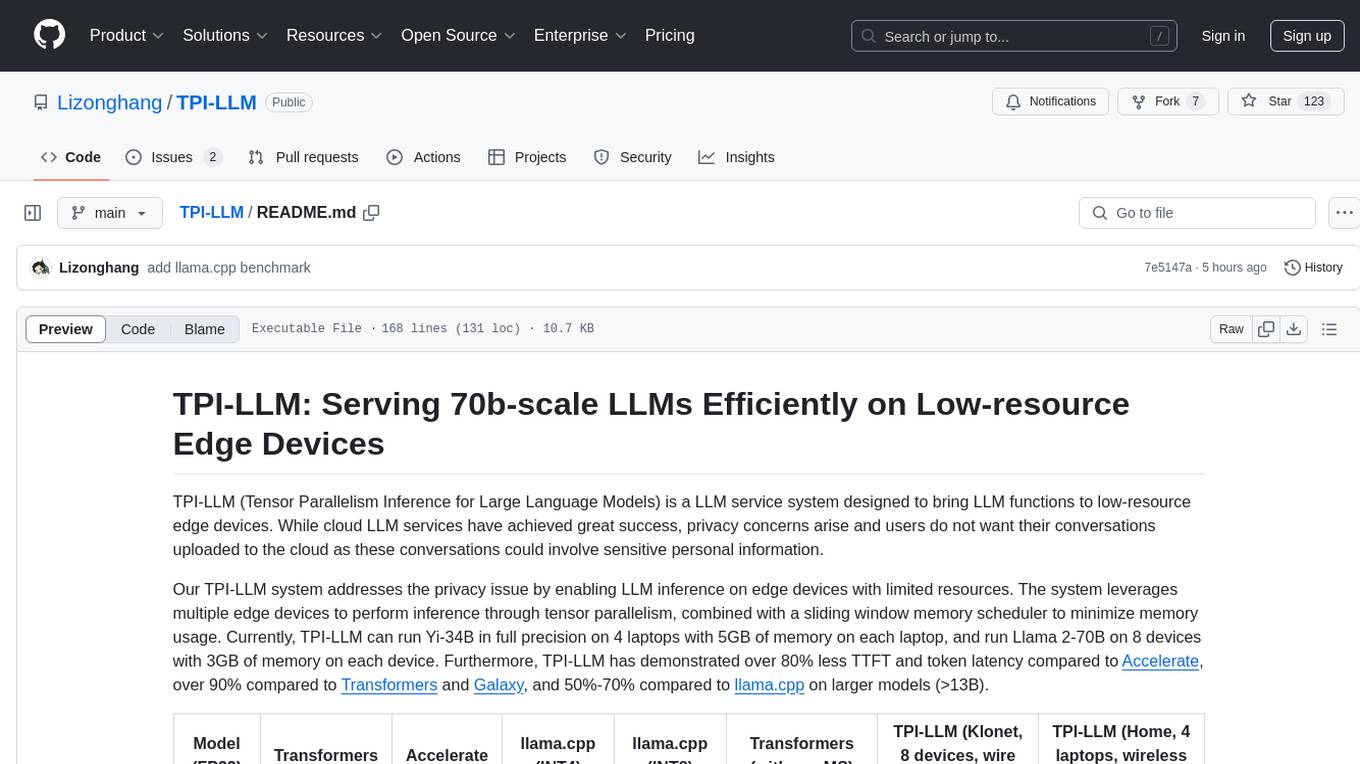

TPI-LLM: A High-Performance Tensor Parallelism Inference System for Edge LLM Services.

Stars: 123

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system designed to bring LLM functions to low-resource edge devices, addressing privacy concerns by enabling LLM inference on edge devices with limited resources. It leverages multiple edge devices for inference through tensor parallelism and a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in time-to-first-token (TTFT) and token latency compared with other inference frameworks, and plans to support infinitely large models with low token latency in the future.

README:

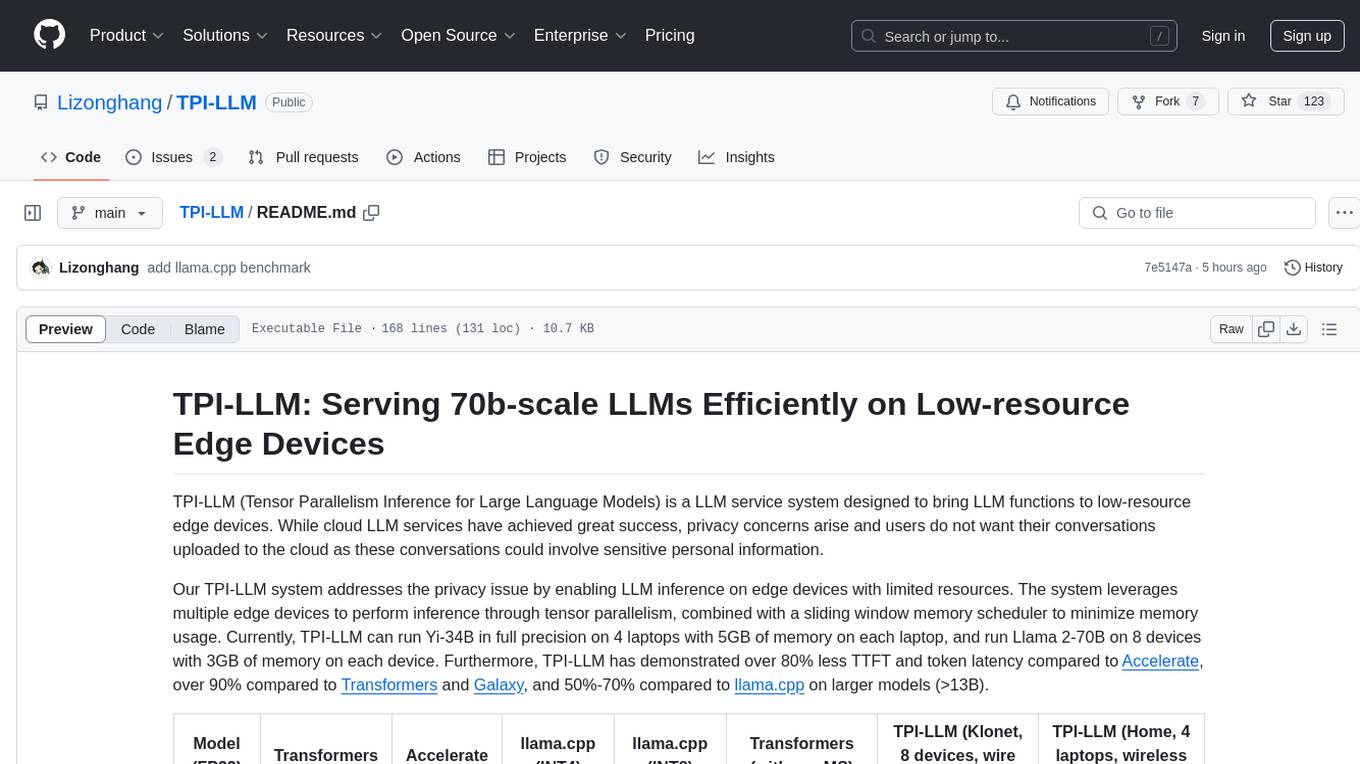

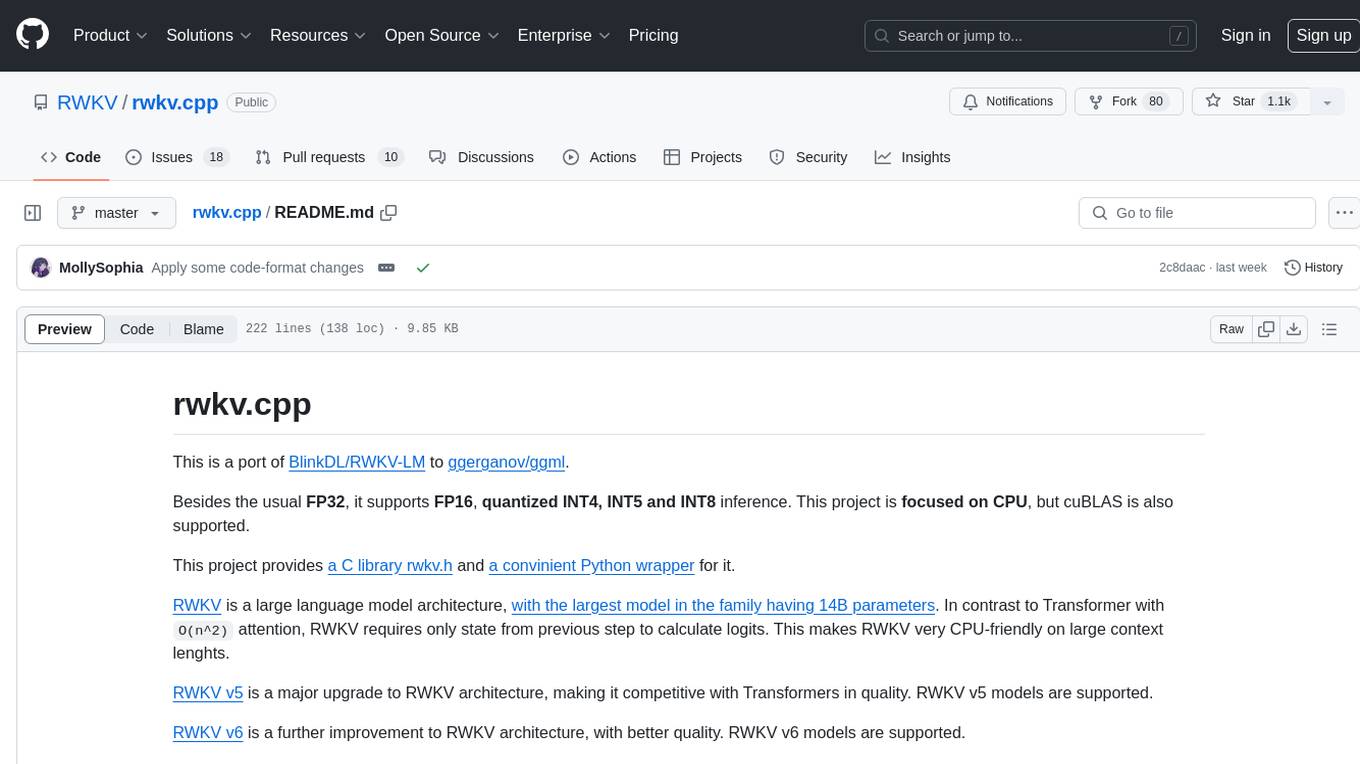

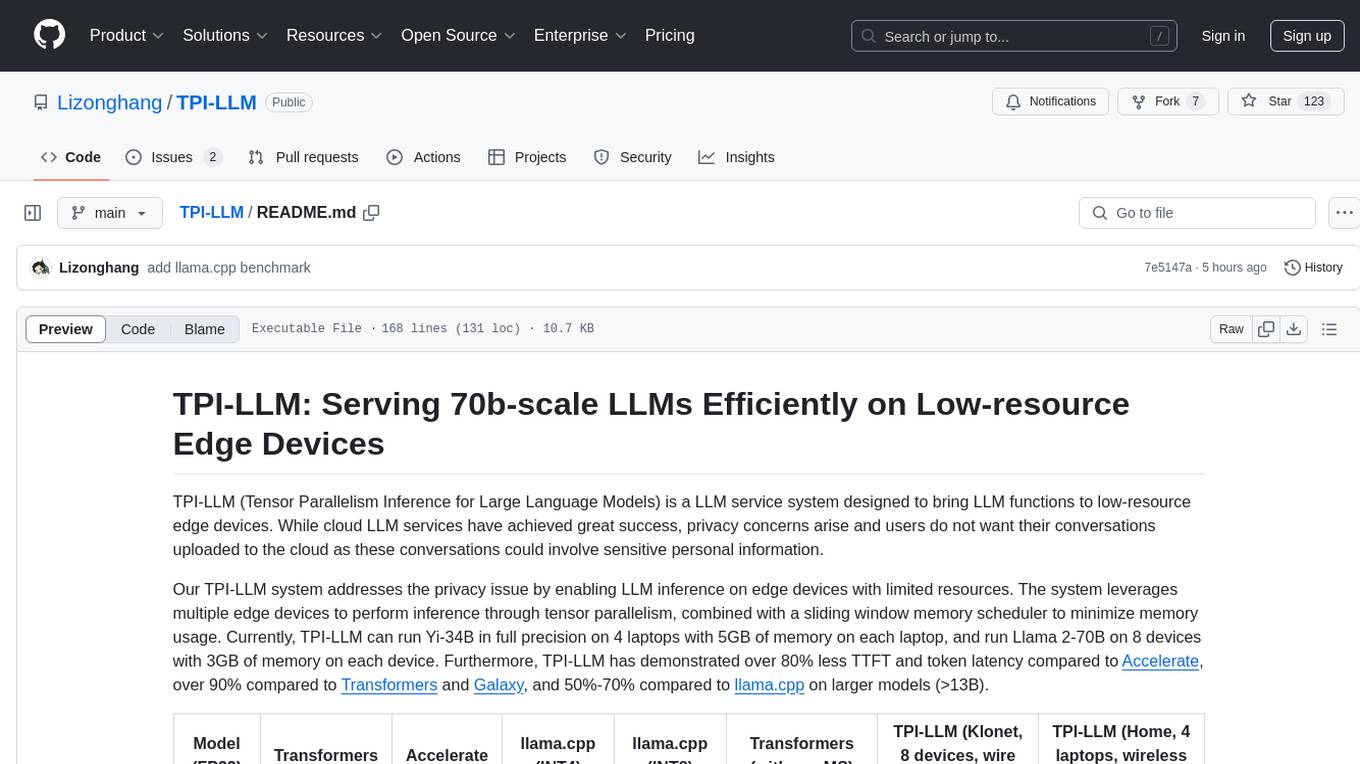

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is an LLM service system designed to bring LLM functions to low-resource edge devices. While cloud LLM services have achieved great success, they raise privacy concerns: users do not want their conversations uploaded to the cloud, as these conversations could involve sensitive personal information.

Our TPI-LLM system addresses the privacy issue by enabling LLM inference on edge devices with limited resources. The system leverages multiple edge devices to perform inference through tensor parallelism, combined with a sliding window memory scheduler to minimize memory usage. Currently, TPI-LLM can run Yi-34B in full precision on 4 laptops with 5GB of memory on each laptop, and run Llama 2-70B on 8 devices with 3GB of memory on each device. Furthermore, TPI-LLM has demonstrated over 80% less TTFT and token latency compared to Accelerate, over 90% compared to Transformers and Galaxy, and 50%-70% compared to llama.cpp on larger models (>13B).
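To make the idea of a sliding window memory scheduler more concrete, here is a minimal sketch. It assumes the scheduler keeps only a small window of consecutive layers' weights in memory, loading the next layer while evicting the oldest one. The class `SlidingWindowCache`, the `load_layer` callback, and `run_layers` are hypothetical names for illustration and are not taken from the TPI-LLM code base; a real scheduler would likely load weights in a background thread rather than synchronously.

```python
from collections import OrderedDict


class SlidingWindowCache:
    """Keep at most `window` layers' weights resident (illustrative only)."""

    def __init__(self, load_layer, window=2):
        if window < 2:
            raise ValueError("memory window should be at least 2")
        self.load_layer = load_layer   # hypothetical loader: layer index -> weights
        self.window = window
        self.resident = OrderedDict()  # layer index -> weights, oldest first
        self.peak = 0                  # largest number of layers held at once

    def get(self, idx):
        if idx not in self.resident:
            # Evict the oldest layers first so the window is never exceeded.
            while len(self.resident) >= self.window:
                self.resident.popitem(last=False)
            self.resident[idx] = self.load_layer(idx)
        self.peak = max(self.peak, len(self.resident))
        return self.resident[idx]


def run_layers(num_layers, window):
    """Walk through all layers once, prefetching one layer ahead."""
    loads = []

    def load_layer(i):
        loads.append(i)
        return [float(i)] * 4          # stand-in for real layer weights

    cache = SlidingWindowCache(load_layer, window)
    out = 0.0
    for i in range(num_layers):
        weights = cache.get(i)         # weights of the current layer
        if i + 1 < num_layers:
            cache.get(i + 1)           # prefetch the next layer (synchronous here)
        out += sum(weights)            # stand-in for the layer's computation
    return out, cache.peak, loads


out, peak, loads = run_layers(num_layers=8, window=2)
print(f"peak resident layers: {peak}, load order: {loads}")
```

Under this reading, a window of 2 is the smallest size that leaves room for the current layer plus one prefetched layer, which is one plausible explanation for why `--memory_window` should be at least 2.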

| Model (FP32) | Transformers | Accelerate | llama.cpp (INT4) | llama.cpp (INT8) | Transformers (with our MS) | TPI-LLM (Klonet, 8 devices, wire connected) | TPI-LLM (Home, 4 laptops, wireless connected) |
|---|---|---|---|---|---|---|---|
| Llama 2-3B | 30 s/token | 16 s/token | 0.05 s/token | 0.07 s/token | 3 s/token | 2 s/token | 2 s/token |
| Llama 2-7B | 56 s/token | 26 s/token | 0.08 s/token | 8 s/token | 8 s/token | 3 s/token | 5 s/token |
| Llama 3.1-8B | 65 s/token | 31 s/token | 1 s/token | 11 s/token | 12 s/token | 4 s/token | 8 s/token |
| Llama 2-13B | OOM | OOM | 10 s/token | 20 s/token | 18 s/token | 3 s/token | 9 s/token |
| Yi-34B | OOM | OOM | 29 s/token | 51 s/token | 55 s/token | 14 s/token | 29 s/token |

Note: We set up two testbeds: the home testbed (4 laptops connected via local Wi-Fi) and the Klonet testbed (8 devices connected via a wired edge network).

Note: Computations were run in full precision solely on CPUs, except for llama.cpp, which used Apple Metal Graphics and INT4/INT8 quantization for acceleration.

Note: Except for TPI-LLM, all other benchmarks were run on a Mac M1 laptop with 8 cores and 8GB memory.
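As a quick check of how the relative figures relate to the table, the short calculation below computes the token-latency reduction of TPI-LLM (Klonet testbed) against llama.cpp (INT4) for the two largest models. The numbers are copied from the table above; the helper name `reduction` is only illustrative.

```python
def reduction(baseline, ours):
    """Percentage by which `ours` is lower than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


# (llama.cpp INT4, TPI-LLM on Klonet) in s/token, copied from the table above
rows = {
    "Llama 2-13B": (10, 3),
    "Yi-34B": (29, 14),
}

for model, (llama_cpp, tpi_llm) in rows.items():
    print(f"{model}: {reduction(llama_cpp, tpi_llm):.0f}% lower token latency")
```

Both values fall within the 50%-70% range quoted for llama.cpp on models larger than 13B.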

In the future, we plan to migrate to llama.cpp, add support for Q4/Q8 quantization and integrated GPUs, and further improve the parallelism paradigm, in order to support infinitely large models with low token latency.

- Clone this repo and enter the project folder.

- Add PYTHONPATH to .bashrc:

> vim ~/.bashrc

export PYTHONPATH=<PATH-TO-TPI-LLM>/src

- Create a new conda environment and install dependencies:

> conda create -n tpi-llm python=3.9

> conda activate tpi-llm

(tpi-llm) > pip install -r requirements.txt

We provide Docker images for TPI-LLM, available on Docker Hub. This is the easiest way to get started, but running inside a container may slow down inference.

If the container is a master node, use docker cp <HOST_MODEL_PATH> master:/root/TPI-LLM/ to copy the pretrained model files to the container of the master node.

If you prefer to build the Docker image yourself, you can modify and use the provided Dockerfile in our repo.

> docker build -t tpi-llm:local .

> docker run -dit --name master tpi-llm:local

To get started, you’ll need to download the pretrained model weights from Hugging Face:

- Llama 2 series, for example, Meta/Llama-2-7b-hf

- Llama 3 series, for example, Meta/Llama-3-8b

- Llama 3.1 series, for example, Meta/Llama-3.1-8b-Instruct

- 01 AI Yi series, for example, chargoddard/Yi-34B-Llama

Please make sure that the downloaded weight files conform to the HuggingFace format.

After downloading, save the model files in a directory of your choice, which we’ll refer to as /root/TPI-LLM/pretrained_models/Llama-2-1.1b-ft.

Run the example script for a trial:

> python examples/run_multiprocess.py --world_size 4 --model_type llama --model_path /root/TPI-LLM/pretrained_models/Llama-2-1.1b-ft --prompt "how are you?" --length 20 --memory_window 4

This command will run 4 processes on a single machine, creating a pseudo-distributed environment that leverages tensor parallelism for Llama inference.
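For readers new to tensor parallelism, the following is a minimal pure-Python sketch of the idea, with each loop iteration standing in for one of the 4 processes. It uses a common partitioning pattern (split the first weight matrix by columns, the second by rows, then sum the partial outputs in an all-reduce step); this is an assumption for illustration and not necessarily how TPI-LLM partitions Llama layers. All function names are hypothetical.

```python
def matvec(x, W):
    """Return x @ W for a vector x (length n) and a matrix W (n rows)."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]


def relu(v):
    return [max(0.0, a) for a in v]


def split_columns(W, world_size):
    """Give each rank an equal block of columns (width must divide evenly)."""
    step = len(W[0]) // world_size
    return [[row[r * step:(r + 1) * step] for row in W] for r in range(world_size)]


def split_rows(W, world_size):
    """Give each rank an equal block of rows (height must divide evenly)."""
    step = len(W) // world_size
    return [W[r * step:(r + 1) * step] for r in range(world_size)]


def mlp_reference(x, W1, W2):
    """Single-device two-layer MLP: relu(x @ W1) @ W2."""
    return matvec(relu(matvec(x, W1)), W2)


def mlp_tensor_parallel(x, W1, W2, world_size):
    """Same MLP, with each rank holding only 1/world_size of the weights."""
    W1_shards = split_columns(W1, world_size)
    W2_shards = split_rows(W2, world_size)
    partials = []
    for rank in range(world_size):                  # one iteration per device
        hidden = relu(matvec(x, W1_shards[rank]))   # local slice of the hidden layer
        partials.append(matvec(hidden, W2_shards[rank]))
    # All-reduce: element-wise sum of the partial outputs from every rank.
    return [sum(vals) for vals in zip(*partials)]


x = [1.0, -2.0, 3.0]
W1 = [[float((i + j) % 5 - 2) for j in range(8)] for i in range(3)]
W2 = [[float((i * j) % 3 - 1) for j in range(2)] for i in range(8)]

print(mlp_tensor_parallel(x, W1, W2, world_size=4) == mlp_reference(x, W1, W2))
```

Each rank stores only a quarter of `W1` and `W2`, and the only communication needed is the final sum, which is why the approach suits devices with little memory.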

First-Time Setup:

If this is your first time running the task, the master node will automatically slice the pretrained weight files. With 4 nodes in total (including the master node), the sliced weight files should look like the following:

> ls <PATH-TO-MODEL-FILES>

|- config.json

|- model-00001-of-00004.safetensors

|- model-00002-of-00004.safetensors

|- model-00003-of-00004.safetensors

|- model-00004-of-00004.safetensors

|- model.safetensors.index.json

|- ...

|- split/

|--- node_0

|--- node_1

|--- node_2

|--- node_3
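The sketch below illustrates one way a weight dimension could be divided across the `node_*` directories shown above. It assumes contiguous, nearly equal slices per node, which is an assumption for illustration; the actual slicing performed by TPI-LLM may differ. The function names and the dimension 4096 are hypothetical.

```python
def shard_ranges(dim, world_size):
    """Split range(dim) into world_size contiguous, nearly equal slices."""
    base, extra = divmod(dim, world_size)
    ranges, start = [], 0
    for rank in range(world_size):
        size = base + (1 if rank < extra else 0)  # first `extra` ranks get one more
        ranges.append((start, start + size))
        start += size
    return ranges


def plan_split(dim, world_size, save_dir="split"):
    """Map each node directory to the [start, end) slice it would hold."""
    return {
        f"{save_dir}/node_{rank}": bounds
        for rank, bounds in enumerate(shard_ranges(dim, world_size))
    }


# Hypothetical weight dimension of 4096 split across 4 nodes
for path, (start, end) in plan_split(4096, 4).items():
    print(f"{path}: columns [{start}, {end})")
```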

Subsequent Runs:

For subsequent runs, the sliced model weight files can be reused. Alternatively, include the --split_bin option to re-split them.

Assume we have 2 laptops with IP addresses as follows:

IP of host 1: 192.168.2.1 (master node)

IP of host 2: 192.168.2.2 (worker node)

The master node acts as the task publisher: it initiates the prompt and displays the generated text to users. It also slices the pretrained weight files and serves as a file server that distributes the sliced files to the other worker nodes.

Step 1: To launch the master node, run the following command on laptop 1:

# Run the master node on laptop 1 (IP: 192.168.2.1, RANK = 0)

> python examples/run_multihost.py --rank 0 --world_size 2 --master_ip 192.168.2.1 --master_port=29500 --model_type llama --model_path /root/TPI-LLM/pretrained_models/Llama-2-1.1b-ft --prompt "how are you?" --length 20 --memory_window 4

NOTE: Please make sure the master node is reachable from all other nodes. The master node also participates in tensor-parallel inference.

Step 2: To launch other worker nodes, use the following command on other laptops (e.g., laptop 2):

# Run the worker node on host 2 (IP: 192.168.2.2, RANK = 1)

> python examples/run_multihost.py --rank 1 --world_size 2 --master_ip 192.168.2.1 --master_port=29500 --model_type llama --model_path /root/TPI-LLM/pretrained_models/sync --memory_window 4

The worker nodes will automatically download their weight files from the master node. If you have downloaded the files before, you can use the option --force_download to force a re-download.
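When more than two hosts are involved, it can help to generate the per-host commands rather than typing them by hand. The helper below is a small sketch that assembles the same `run_multihost.py` command lines shown in Steps 1 and 2, using only flags that appear in this README. The function name `multihost_command` is hypothetical, and the defaults for `prompt` and `length` mirror the documented option defaults.

```python
import shlex


def multihost_command(rank, world_size, master_ip, model_path,
                      master_port=29500, model_type="llama",
                      memory_window=4, prompt="", length=20):
    """Build the launch command for one node (rank 0 is the master)."""
    args = [
        "python", "examples/run_multihost.py",
        "--rank", str(rank),
        "--world_size", str(world_size),
        "--master_ip", master_ip,
        f"--master_port={master_port}",
        "--model_type", model_type,
        "--model_path", model_path,
    ]
    if rank == 0:
        # Only the master publishes the prompt in the commands above.
        args += ["--prompt", prompt, "--length", str(length)]
    args += ["--memory_window", str(memory_window)]
    return shlex.join(args)  # quotes arguments that contain spaces


hosts = ["192.168.2.1", "192.168.2.2"]  # index = rank, first host is the master
for rank, ip in enumerate(hosts):
    path = ("/root/TPI-LLM/pretrained_models/Llama-2-1.1b-ft" if rank == 0
            else "/root/TPI-LLM/pretrained_models/sync")
    print(f"# run on {ip}")
    print(multihost_command(rank, len(hosts), hosts[0], path,
                            prompt="how are you?", length=20))
```

The printed commands can then be copied to each laptop, starting with the master node.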

TPI-LLM provides several optional parameters that you can customize to control various aspects of the inference process. Below is a list of these options:

| Argument | Default | Type | Description |
|---|---|---|---|
| --prompt | "" | str | The input prompt. |
| --length | 20 | int | Maximum length of the generated sequence. |
| --prefix | "" | str | Text added prior to input for context. |
| --split_bin | False | bool | Split the pretrained model file. (available only on the master node) |
| --save_dir | "split" | str | The directory to save split model files. |
| --seed | 42 | int | Random seed for reproducibility. |
| --file_port | 29600 | int | Port number on the master node on which the file server listens. |
| --force_download | False | bool | Force worker nodes to re-download model weight slices. (available only on non-master nodes) |
| --temperature | 1.0 | float | Sampling temperature for text generation. (available only on the master node) |
| --k | 0 | int | Number of highest probability tokens to keep for top-k sampling. (available only on the master node) |
| --p | 0.9 | float | Cumulative probability for nucleus sampling (top-p). (available only on the master node) |
| --disable_memory_schedule | False | bool | Set to True to disable memory window scheduling; this may lead to higher speed. |
| --memory_window | 2 | int | Size of the memory window used during inference. Should be at least 2. |
| --torch_dist | False | bool | Whether to use torch.distributed. |
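To show how `--temperature`, `--k`, and `--p` typically interact, here is a minimal pure-Python sketch of temperature scaling followed by top-k and nucleus (top-p) filtering. It follows the common convention that `k = 0` disables top-k, which is an assumption consistent with the default above; the exact order and details of filtering inside TPI-LLM may differ. The function names are hypothetical.

```python
import math
import random


def filter_probs(logits, temperature=1.0, k=0, p=0.9):
    """Return {token_id: probability} after temperature, top-k and top-p."""
    scaled = [l / temperature for l in logits]      # temperature must be > 0
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]        # numerically stable softmax
    total = sum(exps)
    probs = sorted(((e / total, i) for i, e in enumerate(exps)), reverse=True)

    if k > 0:                                       # top-k: keep the k most likely
        probs = probs[:k]
        z = sum(pr for pr, _ in probs)
        probs = [(pr / z, i) for pr, i in probs]

    kept, cumulative = [], 0.0
    for pr, i in probs:                             # top-p: smallest set with mass >= p
        kept.append((pr, i))
        cumulative += pr
        if cumulative >= p:
            break

    norm = sum(pr for pr, _ in kept)
    return {i: pr / norm for pr, i in kept}


def sample(logits, seed=42, **kwargs):
    """Draw one token id from the filtered distribution."""
    dist = filter_probs(logits, **kwargs)
    ids = list(dist)
    rng = random.Random(seed)                       # seeded for reproducibility
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


logits = [2.0, 1.0, 0.0, -1.0]
print(filter_probs(logits))            # defaults: temperature=1.0, k=0, p=0.9
print(sample(logits, temperature=0.7, k=2))
```

Lower temperatures and smaller `k` or `p` values concentrate probability on the most likely tokens, while higher values make the output more varied.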

Citation details are upcoming; the paper is under review.


Alternative AI tools for TPI-LLM

Similar Open Source Tools

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

factorio-learning-environment

Factorio Learning Environment is an open source framework designed for developing and evaluating LLM agents in the game of Factorio. It provides two settings: Lab-play with structured tasks and Open-play for building large factories. Results show limitations in spatial reasoning and automation strategies. Agents interact with the environment through code synthesis, observation, action, and feedback. Tools are provided for game actions and state representation. Agents operate in episodes with observation, planning, and action execution. Tasks specify agent goals and are implemented in JSON files. The project structure includes directories for agents, environment, cluster, data, docs, eval, and more. A database is used for checkpointing agent steps. Benchmarks show performance metrics for different configurations.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

llm-checker

LLM Checker is an AI-powered CLI tool that analyzes your hardware to recommend optimal LLM models. It features deterministic scoring across 35+ curated models with hardware-calibrated memory estimation. The tool helps users understand memory bandwidth, VRAM limits, and performance characteristics to choose the right LLM for their hardware. It provides actionable recommendations in seconds by scoring compatible models across four dimensions: Quality, Speed, Fit, and Context. LLM Checker is designed to work on any Node.js 16+ system, with optional SQLite search features for advanced functionality.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features like Virtual API System, Solvable Queries, and Stable Evaluation System. The benchmark ensures consistency through a caching system and API simulators, filters queries based on solvability using LLMs, and evaluates model performance using GPT-4 with metrics like Solvable Pass Rate and Solvable Win Rate.

airunner

AI Runner is a multi-modal AI interface that allows users to run open-source large language models and AI image generators on their own hardware. The tool provides features such as voice-based chatbot conversations, text-to-speech, speech-to-text, vision-to-text, text generation with large language models, image generation capabilities, image manipulation tools, utility functions, and more. It aims to provide a stable and user-friendly experience with security updates, a new UI, and a streamlined installation process. The application is designed to run offline on users' hardware without relying on a web server, offering a smooth and responsive user experience.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

skylos

Skylos is a privacy-first SAST tool for Python, TypeScript, and Go that bridges the gap between traditional static analysis and AI agents. It detects dead code, security vulnerabilities (SQLi, SSRF, Secrets), and code quality issues with high precision. Skylos uses a hybrid engine (AST + optional Local/Cloud LLM) to eliminate false positives, verify via runtime, find logic bugs, and provide context-aware audits. It offers automated fixes, end-to-end remediation, and 100% local privacy. The tool supports taint analysis, secrets detection, vulnerability checks, dead code detection and cleanup, agentic AI and hybrid analysis, codebase optimization, operational governance, and runtime verification.

llm_processes

This repository contains code for LLM Processes, which focuses on generating numerical predictive distributions conditioned on natural language. It supports various LLMs through Hugging Face transformer APIs and includes experiments on prompt engineering, 1D synthetic data, comparison to LLMTime, Fashion MNIST, black-box optimization, weather regression, in-context learning, and text conditioning. The code requires Python 3.9+, PyTorch 2.3.0+, and other dependencies for running experiments and reproducing results.

zeptoclaw

ZeptoClaw is an ultra-lightweight personal AI assistant that offers a compact Rust binary with 29 tools, 8 channels, 9 providers, and container isolation. It focuses on integrations, security, and size discipline without compromising on performance. With features like container isolation, prompt injection detection, secret leak scanner, policy engine, input validator, and more, ZeptoClaw ensures secure AI agent execution. It supports migration from OpenClaw, deployment on various platforms, and configuration of LLM providers. ZeptoClaw is designed for efficient AI assistance with minimal resource consumption and maximum security.

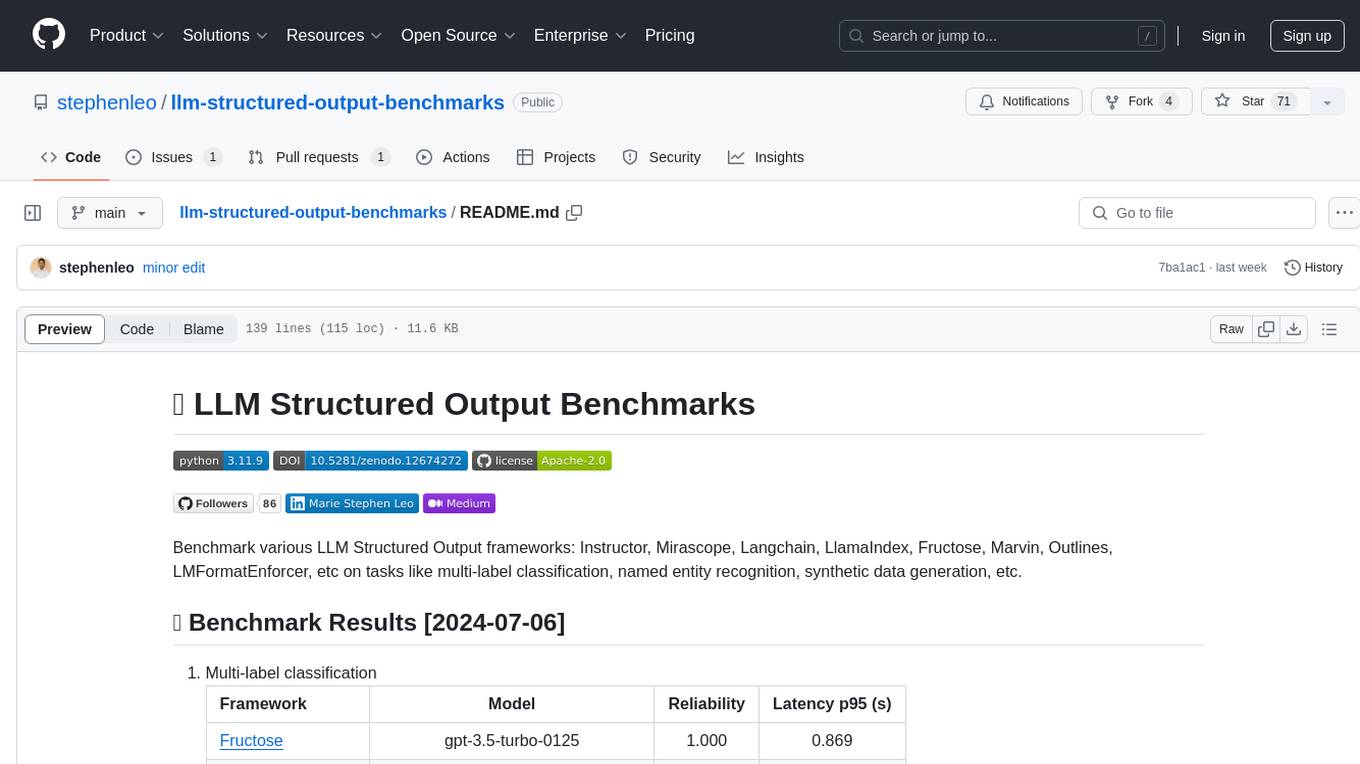

llm-structured-output-benchmarks

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

rwkv.cpp

rwkv.cpp is a port of BlinkDL/RWKV-LM to ggerganov/ggml, supporting FP32, FP16, and quantized INT4, INT5, and INT8 inference. It focuses on CPU but also supports cuBLAS. The project provides a C library rwkv.h and a Python wrapper. RWKV is a large language model architecture with models like RWKV v5 and v6. It requires only state from the previous step for calculations, making it CPU-friendly on large context lengths. Users are advised to test all available formats for perplexity and latency on a representative dataset before serious use.

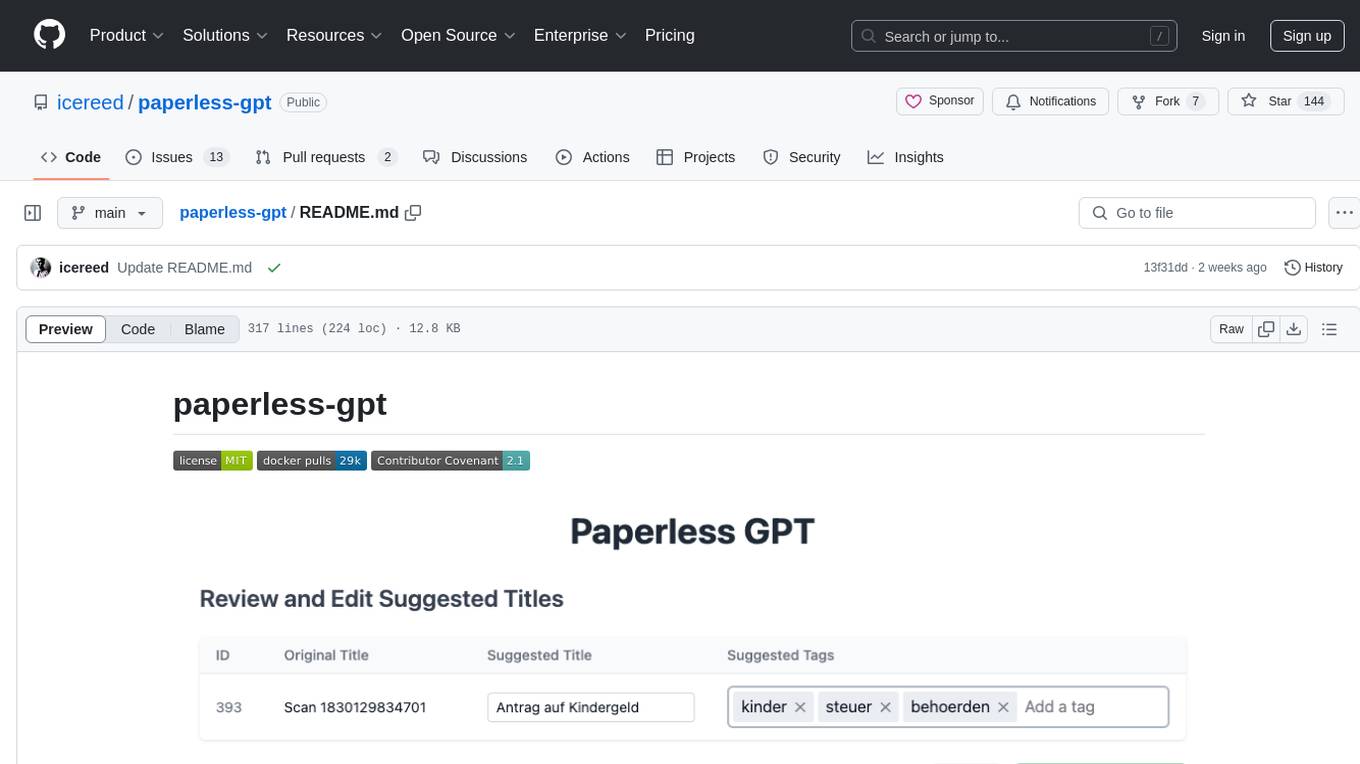

paperless-gpt

paperless-gpt is a tool designed to generate accurate and meaningful document titles and tags for paperless-ngx using Large Language Models (LLMs). It supports multiple LLM providers, including OpenAI and Ollama. With paperless-gpt, you can streamline your document management by automatically suggesting appropriate titles and tags based on the content of your scanned documents. The tool offers features like multiple LLM support, customizable prompts, easy integration with paperless-ngx, user-friendly interface for reviewing and applying suggestions, dockerized deployment, automatic document processing, and an experimental OCR feature.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

For similar tasks

SimpleAICV_pytorch_training_examples

SimpleAICV_pytorch_training_examples is a repository that provides simple training and testing examples for various computer vision tasks such as image classification, object detection, semantic segmentation, instance segmentation, knowledge distillation, contrastive learning, masked image modeling, OCR text detection, OCR text recognition, human matting, salient object detection, interactive segmentation, image inpainting, and diffusion model tasks. The repository includes support for multiple datasets and networks, along with instructions on how to prepare datasets, train and test models, and use gradio demos. It also offers pretrained models and experiment records for download from huggingface or Baidu-Netdisk. The repository requires specific environments and package installations to run effectively.

MooER

MooER (摩耳) is an LLM-based speech recognition and translation model developed by Moore Threads. It allows users to transcribe speech into text (ASR) and translate speech into other languages (AST) in an end-to-end manner. The model was trained using 5K hours of data and is now also available with an 80K hours version. MooER is the first LLM-based speech model trained and inferred using domestic GPUs. The repository includes pretrained models, inference code, and a Gradio demo for a better user experience.

fsdp_qlora

The fsdp_qlora repository provides a script for training Large Language Models (LLMs) with Quantized LoRA and Fully Sharded Data Parallelism (FSDP). It integrates FSDP+QLoRA into the Axolotl platform and offers installation instructions for dependencies like llama-recipes, fastcore, and PyTorch. Users can finetune Llama-2 70B on Dual 24GB GPUs using the provided command. The script supports various training options including full params fine-tuning, LoRA fine-tuning, custom LoRA fine-tuning, quantized LoRA fine-tuning, and more. It also discusses low memory loading, mixed precision training, and comparisons to existing trainers. The repository addresses limitations and provides examples for training with different configurations, including BnB QLoRA and HQQ QLoRA. Additionally, it offers SLURM training support and instructions for adding support for a new model.

Anima

Anima is the first open-source 33B Chinese large language model based on QLoRA, supporting DPO alignment training and open-sourcing a 100k context window model. The latest update includes AirLLM, a library that enables inference of 70B LLM from a single GPU with just 4GB memory. The tool optimizes memory usage for inference, allowing large language models to run on a single 4GB GPU without the need for quantization or other compression techniques. Anima aims to democratize AI by making advanced models accessible to everyone and contributing to the historical process of AI democratization.

pgvecto.rs

pgvecto.rs is a Postgres extension written in Rust that provides vector similarity search functions. It offers ultra-low-latency, high-precision vector search capabilities, including sparse vector search and full-text search. With complete SQL support, async indexing, and easy data management, it simplifies data handling. The extension supports various data types like FP16/INT8, binary vectors, and Matryoshka embeddings. It ensures system performance with production-ready features, high availability, and resource efficiency. Security and permissions are managed through easy access control. The tool allows users to create tables with vector columns, insert vector data, and calculate distances between vectors using different operators. It also supports half-precision floating-point numbers for better performance and memory usage optimization.

KIVI

KIVI is a plug-and-play 2bit KV cache quantization algorithm optimizing memory usage by quantizing key cache per-channel and value cache per-token to 2bit. It enables LLMs to maintain quality while reducing memory usage, allowing larger batch sizes and increasing throughput in real LLM inference workloads.

bitsandbytes

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. It provides features for reducing memory consumption for inference and training by using 8-bit optimizers, LLM.int8() for large language model inference, and QLoRA for large language model training. The library includes quantization primitives for 8-bit & 4-bit operations and 8-bit optimizers.

For similar jobs

KAI-Scheduler

KAI Scheduler is a robust, efficient, and scalable Kubernetes scheduler optimized for GPU resource allocation in AI and machine learning workloads. It supports batch scheduling, bin packing, spread scheduling, workload priority, hierarchical queues, resource distribution, fairness policies, workload consolidation, elastic workloads, dynamic resource allocation, GPU sharing, and works in both cloud and on-premise environments.

ai-containers

This repository contains Dockerfiles, scripts, yaml files, Helm charts, etc. used to scale out AI containers with versions of TensorFlow and PyTorch optimized for Intel platforms. Scaling is done with python, Docker, kubernetes, kubeflow, cnvrg.io, Helm, and other container orchestration frameworks for use in the cloud and on-premise.

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for Azure infrastructure development that transforms how you build Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.

datadreamer

DataDreamer is an advanced toolkit designed to facilitate the development of edge AI models by enabling synthetic data generation, knowledge extraction from pre-trained models, and creation of efficient and potent models. It eliminates the need for extensive datasets by generating synthetic datasets, leverages latent knowledge from pre-trained models, and focuses on creating compact models suitable for integration into any device and performance for specialized tasks. The toolkit offers features like prompt generation, image generation, dataset annotation, and tools for training small-scale neural networks for edge deployment. It provides hardware requirements, usage instructions, available models, and limitations to consider while using the library.

bailing

Bailing is an open-source voice assistant designed for natural conversations with users. It combines Automatic Speech Recognition (ASR), Voice Activity Detection (VAD), Large Language Model (LLM), and Text-to-Speech (TTS) technologies to provide a high-quality voice interaction experience similar to GPT-4o. Bailing aims to achieve GPT-4o-like conversation effects without the need for GPU, making it suitable for various edge devices and low-resource environments. The project features efficient open-source models, modular design allowing for module replacement and upgrades, support for memory function, tool integration for information retrieval and task execution via voice commands, and efficient task management with progress tracking and reminders.

FLAME

FLAME is a lightweight and efficient deep learning framework designed for edge devices. It provides a simple and user-friendly interface for developing and deploying deep learning models on resource-constrained devices. With FLAME, users can easily build and optimize neural networks for tasks such as image classification, object detection, and natural language processing. The framework supports various neural network architectures and optimization techniques, making it suitable for a wide range of applications in the field of edge computing.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.