Best AI tools to Optimize Memory Usage

20 - AI tool Sites

Unsloth

Unsloth is an AI tool designed to make finetuning large language models such as Llama-3, Mistral, Phi-3, and Gemma 2x faster while using 70% less memory, with no degradation in accuracy. Its documentation guides users through training custom models, covering essentials such as installing and updating Unsloth, creating datasets, and running and deploying models. Users can also integrate third-party tools and use platforms like Google Colab.

Chatty

Chatty is an AI-powered chat application that utilizes cutting-edge models to provide efficient and personalized responses to user queries. The application is designed to optimize VRAM usage by employing models with specific suffixes, resulting in reduced memory requirements. Users can expect a slight delay in the initial response due to model downloading. Chatty aims to enhance user experience through its advanced AI capabilities.

Mebot

Mebot is an AI-powered application designed to help users enhance their memory and cognitive skills. By leveraging artificial intelligence technology, Mebot provides personalized memory training exercises and techniques to improve memory retention and recall. Users can track their progress, set reminders, and receive tailored recommendations to optimize their memory performance. With Mebot, users can enjoy a fun and engaging way to boost their memory capabilities and overall cognitive function.

Timely

Timely is an AI-powered time tracking software designed to automate time tracking, bill clients accurately, and enhance productivity. It offers features such as automatic time tracking, memory tracker, timesheets, project dashboard, and efficient task management. Timely is trusted by thousands of users across various industries to provide accurate time data for informed decision-making and improved business operations.

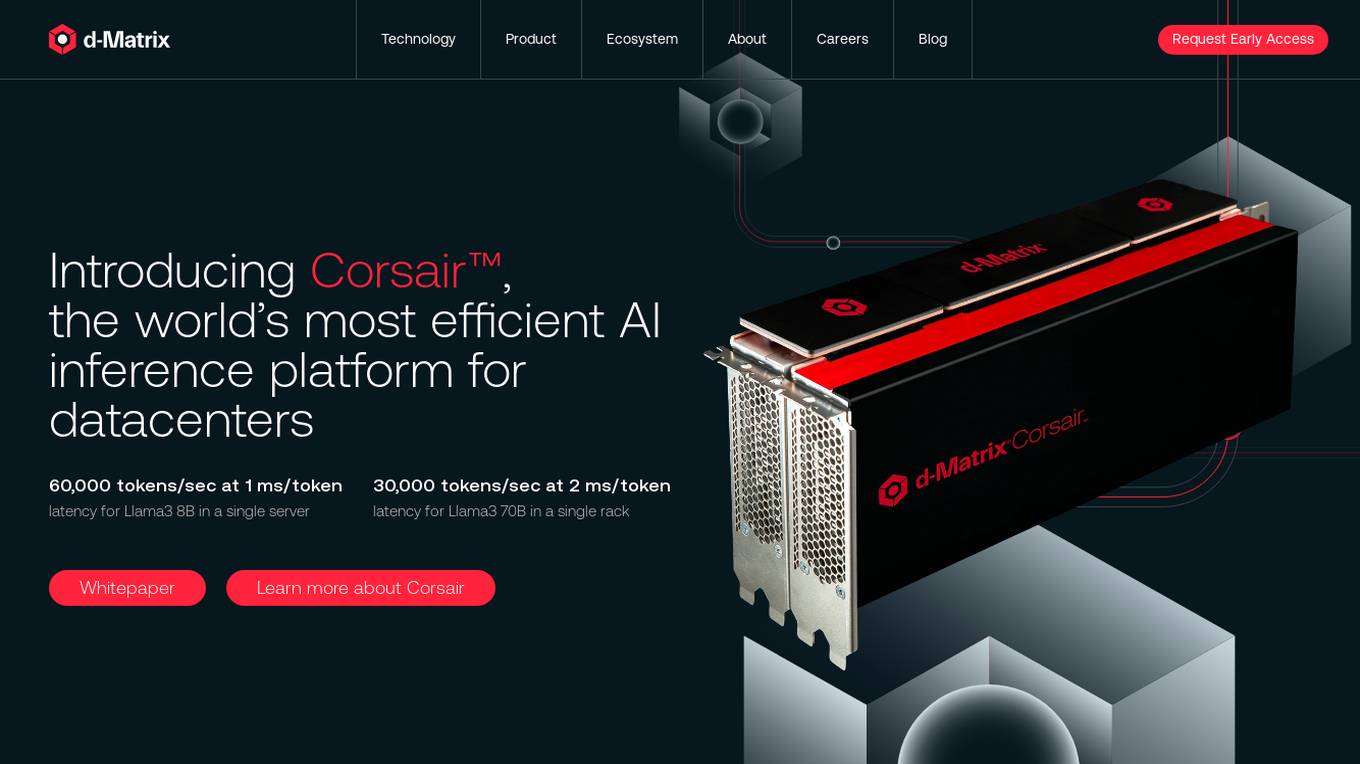

d-Matrix

d-Matrix is an AI tool that offers ultra-low latency batched inference for generative AI technology. It introduces Corsair™, the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The tool aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

Kin

Kin is a personal AI application designed to enhance both your private and work life. It offers personalized coaching, guidance, and emotional support to boost your confidence and impact. Kin helps you piece together mental puzzles, providing clear guidance and support for your professional and personal journey. The application prioritizes privacy and security, ensuring that all data stays on your device and is encrypted. With features like advice, role-playing conversations, generating ideas, and time optimization, Kin aims to nurture connections, prepare for tough situations, and help you manage tasks efficiently.

Memgrain

Memgrain is an AI-powered study tool that offers a range of features to help users create, study, memorize, and learn through flashcards and book summaries. The platform leverages AI technology to generate interactive flashcards from various sources like notes, PDFs, and webpages. Users can utilize spaced repetition algorithms for effective memorization and personalized learning experiences. Memgrain aims to revolutionize the way knowledge is absorbed and retained by combining academic rigor with innovative technology.

Wordjotter

Wordjotter is an AI-powered Anki flashcards application that utilizes artificial intelligence to enhance the learning experience. It helps users create and study flashcards efficiently by leveraging AI algorithms to optimize content and improve retention. With Wordjotter, users can easily create personalized flashcards, receive intelligent recommendations, track their progress, and collaborate with others in a seamless manner. The application aims to revolutionize the traditional flashcard learning method by incorporating AI technology to make learning more effective and engaging.

Keebo

Keebo is an AI tool designed for Snowflake optimization, offering automated query, cost, and tuning optimization. It is the only fully-automated Snowflake optimizer that dynamically adjusts to save customers 25% or more. Keebo's patented technology, based on cutting-edge research, optimizes warehouse size, clustering, and memory without impacting performance. It learns and adjusts to workload changes in real time, can be set up in just 30 minutes, and delivers savings within 24 hours. The tool uses telemetry metadata for optimizations, providing full visibility and adjustability for complex scenarios and schedules.

Timely

Timely is an AI-powered automatic time tracking solution designed for consultancies, agencies, and software companies. It helps users automate time tracking, bill clients accurately, and focus on important tasks. With features like memory tracking, timesheets, project dashboard, and task management, Timely streamlines workflow and enhances team collaboration. The application offers benefits such as 100% accurate time data, optimized utilization, and improved margins. Manual time tracking, by contrast, can lead to inaccurate data, lost efficiency, and employee dissatisfaction. Timely's AI technology eliminates manual input, provides comprehensive reporting, and ensures team-wide transparency, making time tracking and reporting pain-free.

PodPulse

PodPulse is an AI-powered tool that transforms lengthy podcasts into concise and captivating summaries, providing users with essential key takeaways and valuable insights. It offers a streamlined way to access a rich variety of podcast topics and creators, delivering bite-sized wisdom tailored for the modern listener. With AI-generated summaries, users can trust in a fair and comprehensive grasp of each podcast episode, free from bias. PodPulse also revolutionizes the reading experience with Bionic Reading®, making learning engaging and accessible for all users, including those with dyslexia and ADHD. Users can effortlessly save, sort, and revisit their favorite podcast moments, creating a personalized audio library at their fingertips. Additionally, PodPulse boosts memory power with brain-boosting emails that help retain key highlights. The tool aims to optimize time and amplify knowledge for users at an affordable price.

Mentals.ai

Mentals.ai is an operating system for AI agents that enables users to design and deploy complex AI agents using natural language instructions. It focuses on creativity and logic rather than programming, allowing for multi-agent collaboration, advanced features like memory and code execution, and various use cases such as business automation, personal automation, and marketing automation. The platform also supports the creation of intelligent chatbots, virtual employees, and multi-agent systems, revolutionizing productivity and problem-solving in various domains.

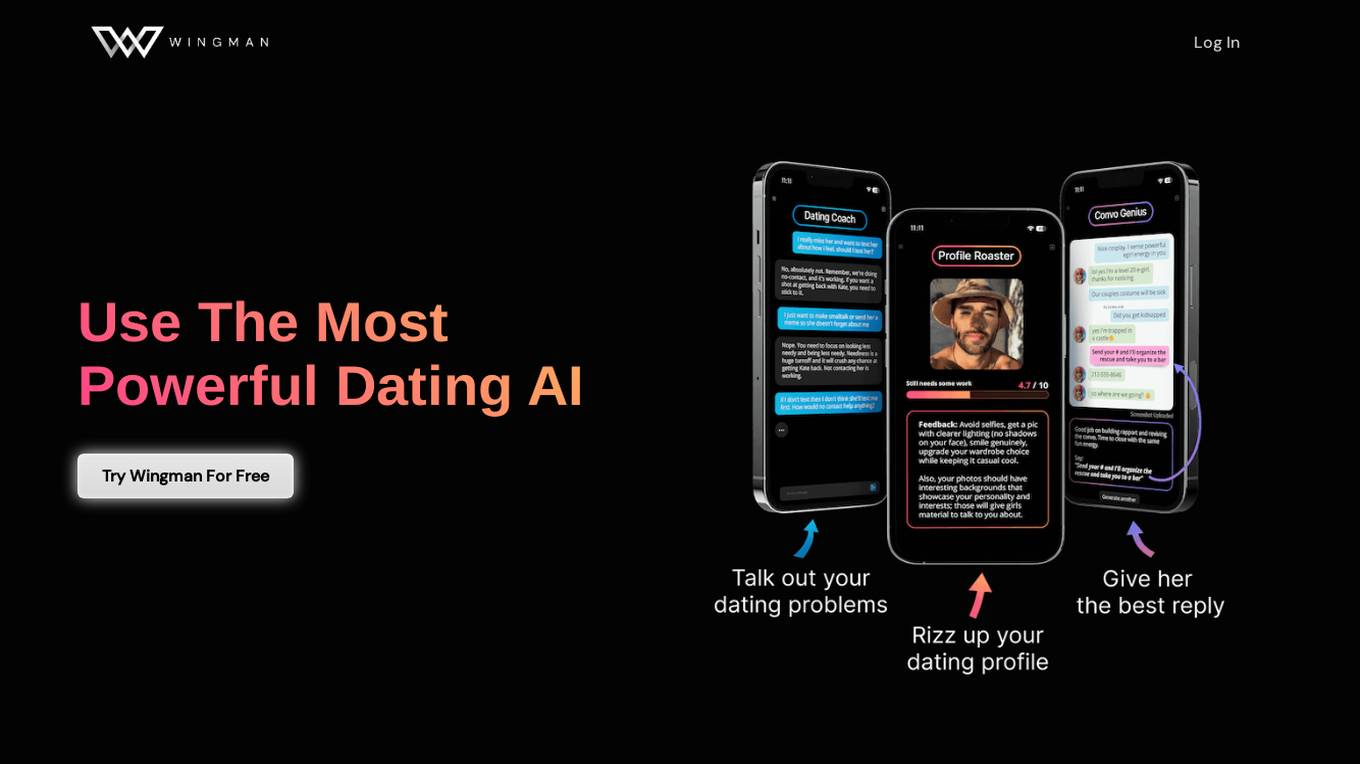

Wingman

Wingman is an AI dating coach application that offers personalized dating advice to straight men. It provides services such as chatbot coaching, profile optimization, and conversation feedback to help users improve their dating game and increase their chances of finding meaningful connections. Wingman prioritizes user privacy by ensuring all interactions are fully anonymized, and it continuously updates its memory bank to provide tailored advice. The application is currently in beta phase and offers complimentary access to invited users, with plans to introduce a free trial version upon official launch.

Resha

The website Resha offers a comprehensive collection of artificial intelligence and software tools in one place. Users can explore various categories such as artificial intelligence, coding, art, audio editing, e-commerce, developer tools, email assistants, search engine optimization tools, social media marketing, storytelling, design assistants, image editing, logo creation, data tables, SQL codes, music, text-to-speech conversion, voice cloning, video creation, video editing, 3D video creation, customer service support tools, educational tools, fashion, finance management, human resources management, legal assistance, presentations, productivity management, real estate management, sales management, startup tools, scheduling, fitness, entertainment tools, games, gift ideas, healthcare, memory, religion, research, and auditing.

Timely

Timely is an automatic time tracking tool designed for consultancies, agencies, and SaaS businesses. It eliminates manual timers, provides accurate time data, and offers insights to make data-backed decisions. With features like AI timesheets, project dashboard, and integrations with popular tools, Timely aims to optimize business workflows and drive profitability. The tool is privacy-focused, ensuring user data remains secure and private.

Google Chrome

Google Chrome is a fast and secure web browser developed by Google. It is designed to provide a smooth browsing experience across different platforms. The browser offers features like Energy Saver and Memory Saver to optimize performance, tab management tools for organization, and automatic updates every four weeks. Additionally, Chrome integrates AI innovations such as Google Lens for visual search, AI-powered writing assistance, tab organization suggestions, and generative themes for personalized browsing. It also prioritizes safety with features like Password Manager, Enhanced Safe Browsing, Safety Check, and Privacy Guide.

Memorly.AI

Memorly.AI is an AI tool designed to help businesses grow by leveraging AI agents to manage sales and support operations seamlessly. The tool offers personalized engagement and optimized processes through autonomous workflows, efficient customer interactions across multiple channels, and 24/7 human-like interactions. With Memorly.AI, businesses can handle high volumes without increasing costs, leading to improved conversion rates, customer engagement, and cost reduction. The tool is pay-per-use and allows businesses to deploy AI agents on various channels like WhatsApp, websites, VoIP, and more.

GoCharlie

GoCharlie is a leading Generative AI company specializing in developing cognitive agents and models optimized for businesses. Its AI technology enables professionals and businesses to amplify their productivity and create high-performing content tailored to their needs. GoCharlie's AI assistant, Charlie, automates repetitive tasks, allowing teams to focus on more strategic and creative work. It offers a suite of proprietary LLM and multimodal models, a Memory Vault to build an AI Brain for businesses, and Agent AI to deliver the full power of AI to operations. GoCharlie can automate mundane tasks, drive complex workflows, and facilitate instant, precise data retrieval.

Brainglue

Brainglue is a conversational AI tool designed for creative professionals to supercharge their strategic thinking and communication. It offers a suite of AI workflows optimized for writing, analysis, research, illustration, and more. Users can chat with AI advisors, access frontier models like GPT-4 and Claude 3, save recurring context in memory docs, and generate images with state-of-the-art models. Brainglue aims to enhance productivity and provide a thoughtful AI experience tailored for knowledge professionals and creative minds.

Webflow Optimize

Webflow Optimize is a website optimization and personalization tool that empowers users to create high-performing sites, analyze site performance, and maximize conversions through testing and personalization. It offers features such as real-time collaboration, shared libraries, CMS management, hosting, security, SEO controls, and integration with various apps. Users can create custom site experiences, track performance, and harness AI for faster results and smarter insights. The tool allows for easy setup of tests, personalized messaging, and monitoring of results to enhance user engagement and boost conversion rates.

7 - Open Source AI Tools

fsdp_qlora

The fsdp_qlora repository provides a script for training Large Language Models (LLMs) with Quantized LoRA and Fully Sharded Data Parallelism (FSDP). It integrates FSDP+QLoRA into the Axolotl platform and offers installation instructions for dependencies like llama-recipes, fastcore, and PyTorch. Users can finetune Llama-2 70B on Dual 24GB GPUs using the provided command. The script supports various training options including full params fine-tuning, LoRA fine-tuning, custom LoRA fine-tuning, quantized LoRA fine-tuning, and more. It also discusses low memory loading, mixed precision training, and comparisons to existing trainers. The repository addresses limitations and provides examples for training with different configurations, including BnB QLoRA and HQQ QLoRA. Additionally, it offers SLURM training support and instructions for adding support for a new model.
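The claim about finetuning a 70B model on two 24GB GPUs can be checked with rough arithmetic. The sketch below is a back-of-the-envelope estimate, not part of the repository: it counts only the base model weights and assumes they are split evenly across the GPUs. It ignores quantization metadata, LoRA adapter weights, gradients, optimizer state, and activations, and it treats a 24GB card as 24 GiB. The helper names `weight_gib` and `fits_when_sharded` are hypothetical.

```python
def weight_gib(n_params: float, bits_per_param: int) -> float:
    """Size of the raw weights in GiB for a given storage precision."""
    return n_params * bits_per_param / 8 / 2**30


def fits_when_sharded(n_params: float, bits_per_param: int,
                      n_gpus: int, gpu_gib: float) -> bool:
    """True if an even shard of the weights is smaller than one GPU's memory."""
    return weight_gib(n_params, bits_per_param) / n_gpus < gpu_gib


N_PARAMS = 70e9   # Llama-2 70B, parameter count rounded
N_GPUS = 2
GPU_GIB = 24.0    # assumption: a "24GB" card offers roughly 24 GiB

for bits in (16, 4):
    per_gpu = weight_gib(N_PARAMS, bits) / N_GPUS
    ok = fits_when_sharded(N_PARAMS, bits, N_GPUS, GPU_GIB)
    verdict = "fits" if ok else "does not fit"
    print(f"{bits}-bit weights: {per_gpu:.1f} GiB per GPU, {verdict} in {GPU_GIB:.0f} GiB")
```

Under these assumptions, 16-bit weights alone exceed the memory of both cards, while 4-bit weights sharded across two cards leave headroom for the training state that this estimate leaves out.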

Anima

Anima is the first open-source 33B Chinese large language model based on QLoRA. It supports DPO alignment training, and the project has also open-sourced a model with a 100k context window. The latest update includes AirLLM, a library that enables inference of a 70B LLM on a single GPU with just 4GB of memory, without the need for quantization or other compression techniques. Anima aims to democratize AI by making advanced models accessible to everyone.

pgvecto.rs

pgvecto.rs is a Postgres extension written in Rust that provides vector similarity search functions. It offers ultra-low-latency, high-precision vector search capabilities, including sparse vector search and full-text search. With complete SQL support, async indexing, and easy data management, it simplifies data handling. The extension supports various data types like FP16/INT8, binary vectors, and Matryoshka embeddings. It ensures system performance with production-ready features, high availability, and resource efficiency. Security and permissions are managed through easy access control. The tool allows users to create tables with vector columns, insert vector data, and calculate distances between vectors using different operators. It also supports half-precision floating-point numbers for better performance and memory usage optimization.
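The memory benefit of half-precision vectors can be illustrated outside the database. The NumPy sketch below is an assumption-laden illustration, not pgvecto.rs code: it stores the same random embeddings as FP32 and FP16, then compares three common distance measures (squared Euclidean, negative dot product, cosine distance) computed from each copy. The function names and the 256-dimensional random data are hypothetical.

```python
import numpy as np


def squared_l2(a: np.ndarray, b: np.ndarray) -> float:
    # Accumulate in float32 so FP16 storage does not also limit the arithmetic.
    d = a.astype(np.float32) - b.astype(np.float32)
    return float(d @ d)


def negative_dot(a: np.ndarray, b: np.ndarray) -> float:
    return -float(a.astype(np.float32) @ b.astype(np.float32))


def cosine_distance(a: np.ndarray, b: np.ndarray) -> float:
    a32, b32 = a.astype(np.float32), b.astype(np.float32)
    return 1.0 - float(a32 @ b32) / float(np.linalg.norm(a32) * np.linalg.norm(b32))


rng = np.random.default_rng(0)
vectors_fp32 = rng.standard_normal((1000, 256)).astype(np.float32)
vectors_fp16 = vectors_fp32.astype(np.float16)  # half the bytes per element

print("FP32 bytes:", vectors_fp32.nbytes)
print("FP16 bytes:", vectors_fp16.nbytes)

exact = squared_l2(vectors_fp32[0], vectors_fp32[1])
approx = squared_l2(vectors_fp16[0], vectors_fp16[1])
relative_error = abs(exact - approx) / exact
print("relative error of squared L2 from FP16 storage:", relative_error)
```

Storage halves exactly, while the distance computed from the FP16 copy stays close to the FP32 result for data of this scale. How much precision loss is acceptable depends on the embeddings and the recall target.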

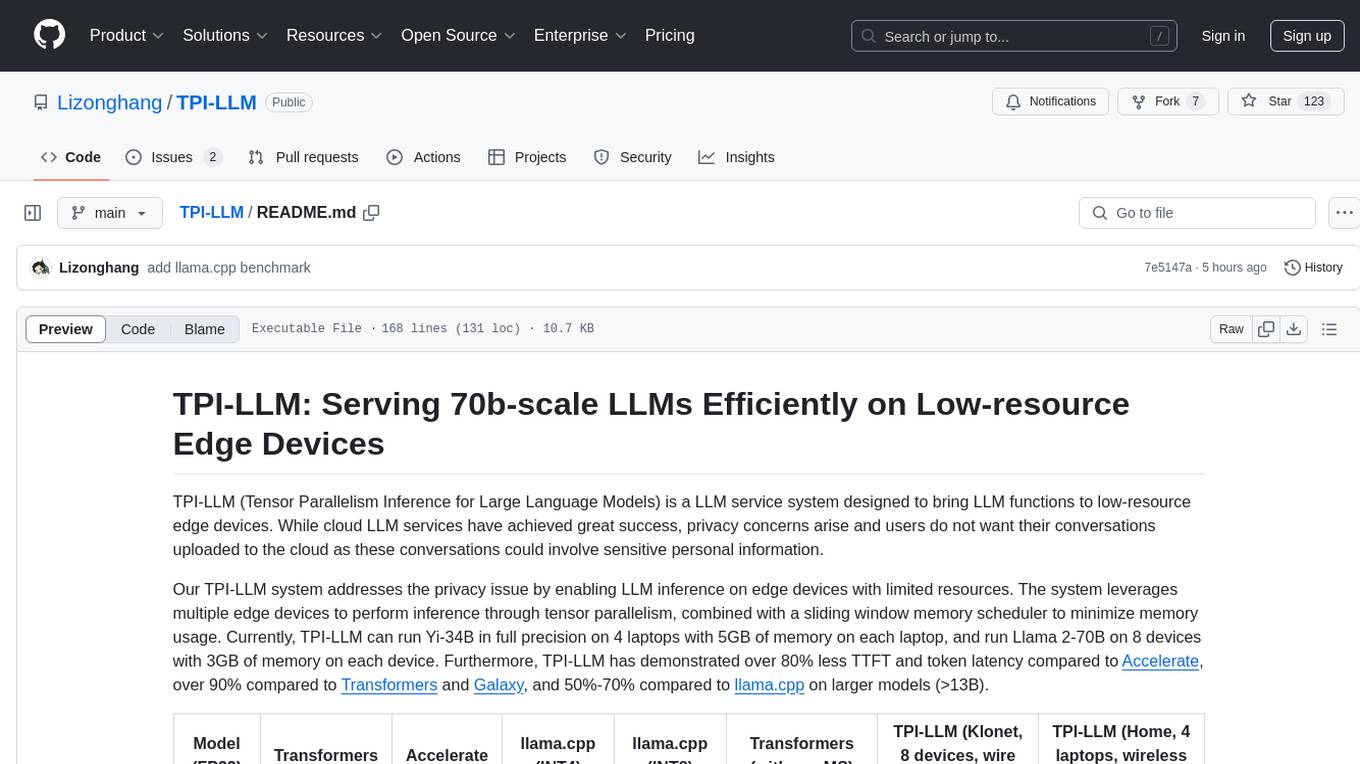

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system that addresses privacy concerns by enabling LLM inference on low-resource edge devices. It distributes inference across multiple edge devices through tensor parallelism and uses a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in time-to-first-token (TTFT) and token latency compared to other models, and plans to support infinitely large models with low token latency in the future.

KIVI

KIVI is a plug-and-play 2-bit KV cache quantization algorithm that reduces memory usage by quantizing the key cache per-channel and the value cache per-token to 2 bits. It enables LLMs to maintain quality while using less memory, which allows larger batch sizes and higher throughput in real LLM inference workloads.
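The difference between per-channel and per-token quantization comes down to which axis the scale and offset are computed over. The NumPy sketch below is a simplified illustration under stated assumptions, not KIVI's implementation: it uses plain asymmetric min/max quantization to four levels, a tiny `(tokens, channels)` cache, and no grouping, bit packing, or full-precision residual window. The function names are hypothetical.

```python
import numpy as np


def quantize_2bit(x: np.ndarray, axis: int):
    """Asymmetric 2-bit quantization with min/max statistics reduced over `axis`."""
    lo = x.min(axis=axis, keepdims=True)
    hi = x.max(axis=axis, keepdims=True)
    scale = (hi - lo) / 3.0                   # 2 bits give 4 levels: 0, 1, 2, 3
    scale = np.where(scale == 0, 1.0, scale)  # guard against constant slices
    codes = np.clip(np.round((x - lo) / scale), 0, 3).astype(np.uint8)
    return codes, scale, lo


def dequantize(codes: np.ndarray, scale: np.ndarray, lo: np.ndarray) -> np.ndarray:
    return codes.astype(np.float32) * scale + lo


rng = np.random.default_rng(0)
tokens, channels = 8, 4
keys = rng.standard_normal((tokens, channels)).astype(np.float32)
values = rng.standard_normal((tokens, channels)).astype(np.float32)

# Key cache, per-channel: one scale/offset per channel, shared across tokens.
k_codes, k_scale, k_lo = quantize_2bit(keys, axis=0)
# Value cache, per-token: one scale/offset per token, shared across channels.
v_codes, v_scale, v_lo = quantize_2bit(values, axis=1)

k_err = np.abs(dequantize(k_codes, k_scale, k_lo) - keys)
v_err = np.abs(dequantize(v_codes, v_scale, v_lo) - values)
print("key scale shape:", k_scale.shape, "value scale shape:", v_scale.shape)
print("max key error:", float(k_err.max()), "max value error:", float(v_err.max()))
```

In this sketch the codes are stored one per byte for readability. A real implementation would pack four 2-bit codes into each byte to realize the memory savings.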

bitsandbytes

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. It provides features for reducing memory consumption for inference and training by using 8-bit optimizers, LLM.int8() for large language model inference, and QLoRA for large language model training. The library includes quantization primitives for 8-bit & 4-bit operations and 8-bit optimizers.
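To show why 8-bit storage reduces memory, the NumPy sketch below quantizes a weight matrix block by block using a simple linear absmax scheme. It is only an illustration of the general idea and does not call or reproduce bitsandbytes: the library's actual quantization formats, outlier handling, and kernels are different and more sophisticated. The function names, the block size of 64, and the requirement that the tensor size be divisible by the block size are assumptions of this sketch.

```python
import numpy as np


def quantize_absmax_int8(x: np.ndarray, block_size: int = 64):
    """Quantize to int8 per block, scaling each block by its largest magnitude."""
    blocks = x.reshape(-1, block_size)  # assumes x.size % block_size == 0
    absmax = np.abs(blocks).max(axis=1, keepdims=True)
    absmax = np.where(absmax == 0, 1.0, absmax).astype(np.float32)
    codes = np.round(blocks / absmax * 127).astype(np.int8)
    return codes, absmax


def dequantize_absmax_int8(codes: np.ndarray, absmax: np.ndarray, shape) -> np.ndarray:
    return (codes.astype(np.float32) / 127 * absmax).reshape(shape)


rng = np.random.default_rng(0)
weights = rng.standard_normal((128, 64)).astype(np.float32)

codes, absmax = quantize_absmax_int8(weights)
restored = dequantize_absmax_int8(codes, absmax, weights.shape)

quantized_bytes = codes.nbytes + absmax.nbytes  # int8 codes plus one scale per block
max_error = float(np.abs(restored - weights).max())
print("FP32 bytes:", weights.nbytes, "quantized bytes:", quantized_bytes)
print("max reconstruction error:", max_error)
```

Keeping one scale per block rather than one per tensor limits the damage a single large value can do to the precision of everything else, at the cost of a small amount of extra storage for the scales.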

pointer

Pointer is a lightweight and efficient tool for analyzing and visualizing data structures in C and C++ programs. It provides a user-friendly interface to track memory allocations, pointer references, and data structures, helping developers to identify memory leaks, pointer errors, and optimize memory usage. With Pointer, users can easily navigate through complex data structures, visualize memory layouts, and debug pointer-related issues in their codebase. The tool offers interactive features such as memory snapshots, pointer tracking, and memory visualization, making it a valuable asset for C and C++ developers working on memory-intensive applications.

20 - OpenAI GPTs

CV & Resume ATS Optimize + 🔴Match-JOB🔴

Professional Resume & CV Assistant 📝 Optimize for ATS 🤖 Tailor to Job Descriptions 🎯 Compelling Content ✨ Interview Tips 💡

Website Conversion by B12

I'll help you optimize your website for more conversions, and compare your site's CRO potential to competitors’.

Thermodynamics Advisor

Advises on thermodynamics processes to optimize system efficiency.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

International Tax Advisor

Advises on international tax matters to optimize a company's global tax position.

Investment Management Advisor

Provides strategic financial guidance for investment behavior to optimize an organization's wealth.

ESG Strategy Navigator 🌱🧭

Optimize your business with sustainable practices! ESG Strategy Navigator helps integrate Environmental, Social, Governance (ESG) factors into corporate strategy, ensuring compliance, ethical impact, and value creation. 🌟

Floor Plan Optimization Assistant

Helps optimize floor plans. For a better experience, please visit collov.ai

AI Business Transformer

Top AI for business automation, data analytics, content creation. Optimize efficiency, gain insights, and innovate with AI Business Transformer.

Business Pricing Strategies & Plans Toolkit

A variety of business pricing tools and strategies! Optimize your price strategy and tactics with AI-driven insights. Critical pricing tools for businesses of all sizes looking to strategically navigate the market.