bitsandbytes

Accessible large language models via k-bit quantization for PyTorch.

Stars: 7950

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. It provides features for reducing memory consumption for inference and training by using 8-bit optimizers, LLM.int8() for large language model inference, and QLoRA for large language model training. The library includes quantization primitives for 8-bit & 4-bit operations and 8-bit optimizers.

README:

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. We provide three main features for dramatically reducing memory consumption for inference and training:

- 8-bit optimizers uses block-wise quantization to maintain 32-bit performance at a small fraction of the memory cost.

- LLM.int8() or 8-bit quantization enables large language model inference with only half the required memory and without any performance degradation. This method is based on vector-wise quantization to quantize most features to 8-bits and separately treating outliers with 16-bit matrix multiplication.

- QLoRA or 4-bit quantization enables large language model training with several memory-saving techniques that don't compromise performance. This method quantizes a model to 4-bits and inserts a small set of trainable low-rank adaptation (LoRA) weights to allow training.

The library includes quantization primitives for 8-bit & 4-bit operations, through bitsandbytes.nn.Linear8bitLt and bitsandbytes.nn.Linear4bit and 8-bit optimizers through bitsandbytes.optim module.

bitsandbytes has the following minimum requirements for all platforms:

- Python 3.10+

-

PyTorch 2.3+

- Note: While we aim to provide wide backwards compatibility, we recommend using the latest version of PyTorch for the best experience.

Note: this table reflects the status of the current development branch. For the latest stable release, see the document in the 0.49.0 tag.

🚧 = In Development, 〰️ = Partially Supported, ✅ = Supported, 🐢 = Slow Implementation Supported, ❌ = Not Supported

| Platform | Accelerator | Hardware Requirements | LLM.int8() | QLoRA 4-bit | 8-bit Optimizers |

|---|---|---|---|---|---|

| 🐧 Linux, glibc >= 2.24 | |||||

| x86-64 | ◻️ CPU | Minimum: AVX2 Optimized: AVX512F, AVX512BF16 |

✅ | ✅ | ❌ |

🟩 NVIDIA GPU cuda

|

SM60+ minimum SM75+ recommended |

✅ | ✅ | ✅ | |

🟥 AMD GPU cuda

|

CDNA: gfx90a, gfx942, gfx950 RDNA: gfx1100, gfx1101, gfx1150, gfx1151, gfx1200, gfx1201 |

✅ | 〰️ | ✅ | |

🟦 Intel GPU xpu

|

Data Center GPU Max Series Arc A-Series (Alchemist) Arc B-Series (Battlemage) |

✅ | ✅ | 〰️ | |

🟪 Intel Gaudi hpu

|

Gaudi2, Gaudi3 | ✅ | 〰️ | ❌ | |

| aarch64 | ◻️ CPU | ✅ | ✅ | ❌ | |

🟩 NVIDIA GPU cuda

|

SM75+ | ✅ | ✅ | ✅ | |

| 🪟 Windows 11 / Windows Server 2022+ | |||||

| x86-64 | ◻️ CPU | AVX2 | ✅ | ✅ | ❌ |

🟩 NVIDIA GPU cuda

|

SM60+ minimum SM75+ recommended |

✅ | ✅ | ✅ | |

🟦 Intel GPU xpu

|

Arc A-Series (Alchemist) Arc B-Series (Battlemage) |

✅ | ✅ | 〰️ | |

| 🍎 macOS 14+ | |||||

| arm64 | ◻️ CPU | Apple M1+ | ✅ | ✅ | ❌ |

⬜ Metal mps

|

Apple M1+ | 🐢 | 🐢 | ❌ | |

The continued maintenance and development of bitsandbytes is made possible thanks to the generous support of our sponsors. Their contributions help ensure that we can keep improving the project and delivering valuable updates to the community.

bitsandbytes is MIT licensed.

If you found this library useful, please consider citing our work:

@article{dettmers2023qlora,

title={Qlora: Efficient finetuning of quantized llms},

author={Dettmers, Tim and Pagnoni, Artidoro and Holtzman, Ari and Zettlemoyer, Luke},

journal={arXiv preprint arXiv:2305.14314},

year={2023}

}@article{dettmers2022llmint8,

title={LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale},

author={Dettmers, Tim and Lewis, Mike and Belkada, Younes and Zettlemoyer, Luke},

journal={arXiv preprint arXiv:2208.07339},

year={2022}

}@article{dettmers2022optimizers,

title={8-bit Optimizers via Block-wise Quantization},

author={Dettmers, Tim and Lewis, Mike and Shleifer, Sam and Zettlemoyer, Luke},

journal={9th International Conference on Learning Representations, ICLR},

year={2022}

}For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for bitsandbytes

Similar Open Source Tools

bitsandbytes

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. It provides features for reducing memory consumption for inference and training by using 8-bit optimizers, LLM.int8() for large language model inference, and QLoRA for large language model training. The library includes quantization primitives for 8-bit & 4-bit operations and 8-bit optimizers.

ms-swift

ms-swift is an official framework provided by the ModelScope community for fine-tuning and deploying large language models and multi-modal large models. It supports training, inference, evaluation, quantization, and deployment of over 400 large models and 100+ multi-modal large models. The framework includes various training technologies and accelerates inference, evaluation, and deployment modules. It offers a Gradio-based Web-UI interface and best practices for easy application of large models. ms-swift supports a wide range of model types, dataset types, hardware support, lightweight training methods, distributed training techniques, quantization training, RLHF training, multi-modal training, interface training, plugin and extension support, inference acceleration engines, model evaluation, and model quantization.

Fast-dLLM

Fast-DLLM is a diffusion-based Large Language Model (LLM) inference acceleration framework that supports efficient inference for models like Dream and LLaDA. It offers fast inference support, multiple optimization strategies, code generation, evaluation capabilities, and an interactive chat interface. Key features include Key-Value Cache for Block-Wise Decoding, Confidence-Aware Parallel Decoding, and overall performance improvements. The project structure includes directories for Dream and LLaDA model-related code, with installation and usage instructions provided for using the LLaDA and Dream models.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP. * Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from Pypi, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

PaddleNLP

PaddleNLP is an easy-to-use and high-performance NLP library. It aggregates high-quality pre-trained models in the industry and provides out-of-the-box development experience, covering a model library for multiple NLP scenarios with industry practice examples to meet developers' flexible customization needs.

verl

veRL is a flexible and efficient reinforcement learning training framework designed for large language models (LLMs). It allows easy extension of diverse RL algorithms, seamless integration with existing LLM infrastructures, and flexible device mapping. The framework achieves state-of-the-art throughput and efficient actor model resharding with 3D-HybridEngine. It supports popular HuggingFace models and is suitable for users working with PyTorch FSDP, Megatron-LM, and vLLM backends.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

llm_recipes

This repository showcases the author's experiments with Large Language Models (LLMs) for text generation tasks. It includes dataset preparation, preprocessing, model fine-tuning using libraries such as Axolotl and HuggingFace, and model evaluation.

VLMEvalKit

VLMEvalKit is an open-source evaluation toolkit of large vision-language models (LVLMs). It enables one-command evaluation of LVLMs on various benchmarks, without the heavy workload of data preparation under multiple repositories. In VLMEvalKit, we adopt generation-based evaluation for all LVLMs, and provide the evaluation results obtained with both exact matching and LLM-based answer extraction.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

llm-compressor

llm-compressor is an easy-to-use library for optimizing models for deployment with vllm. It provides a comprehensive set of quantization algorithms, seamless integration with Hugging Face models and repositories, and supports mixed precision, activation quantization, and sparsity. Supported algorithms include PTQ, GPTQ, SmoothQuant, and SparseGPT. Installation can be done via git clone and local pip install. Compression can be easily applied by selecting an algorithm and calling the oneshot API. The library also offers end-to-end examples for model compression. Contributions to the code, examples, integrations, and documentation are appreciated.

DelhiLM

DelhiLM is a natural language processing tool for building and training language models. It provides a user-friendly interface for text processing tasks such as tokenization, lemmatization, and language model training. With DelhiLM, users can easily preprocess text data and train custom language models for various NLP applications. The tool supports different languages and allows for fine-tuning pre-trained models to suit specific needs. DelhiLM is designed to be flexible, efficient, and easy to use for both beginners and experienced NLP practitioners.

airllm

AirLLM is a tool that optimizes inference memory usage, enabling large language models to run on low-end GPUs without quantization, distillation, or pruning. It supports models like Llama3.1 on 8GB VRAM. The tool offers model compression for up to 3x inference speedup with minimal accuracy loss. Users can specify compression levels, profiling modes, and other configurations when initializing models. AirLLM also supports prefetching and disk space management. It provides examples and notebooks for easy implementation and usage.

deeppowers

Deeppowers is a powerful Python library for deep learning applications. It provides a wide range of tools and utilities to simplify the process of building and training deep neural networks. With Deeppowers, users can easily create complex neural network architectures, perform efficient training and optimization, and deploy models for various tasks. The library is designed to be user-friendly and flexible, making it suitable for both beginners and experienced deep learning practitioners.

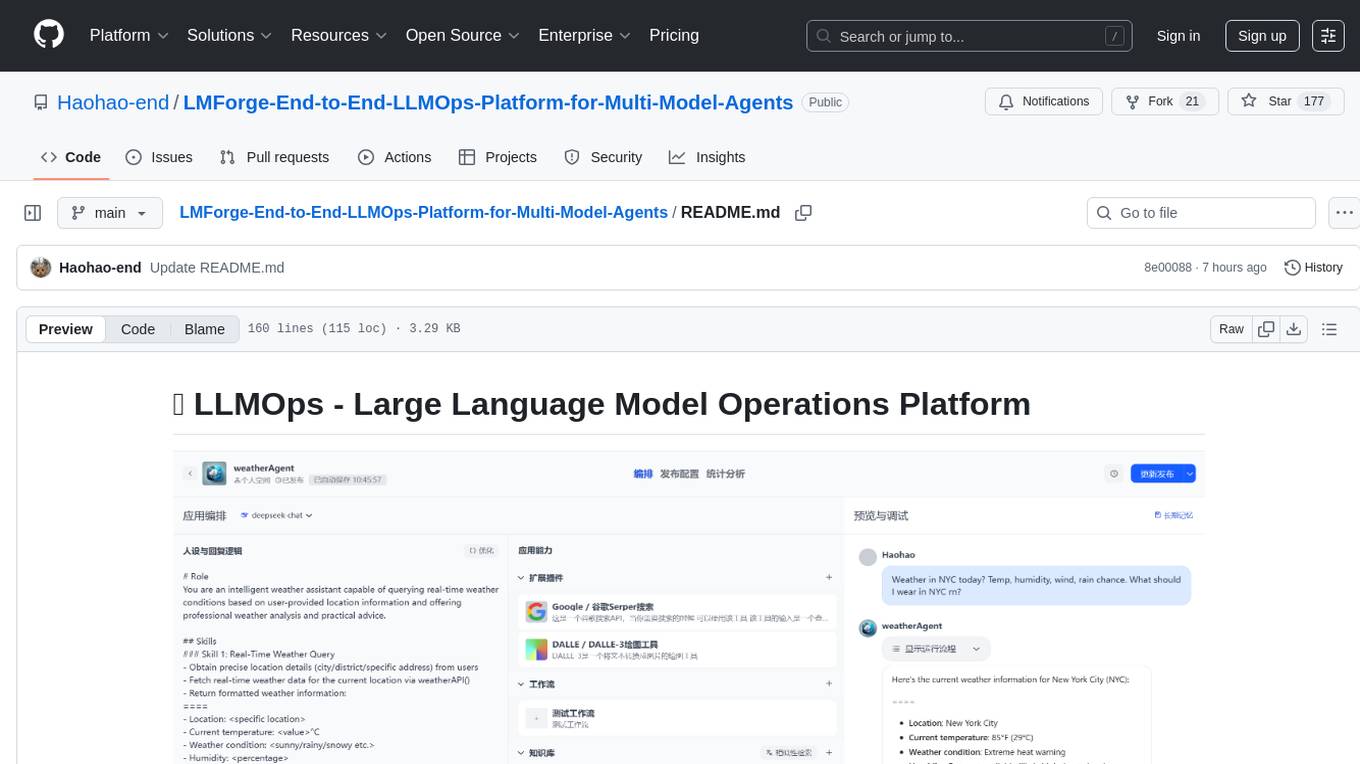

LMForge-End-to-End-LLMOps-Platform-for-Multi-Model-Agents

LMForge is an end-to-end LLMOps platform designed for multi-model agents. It provides a comprehensive solution for managing and deploying large language models efficiently. The platform offers tools for training, fine-tuning, and deploying various types of language models, enabling users to streamline the development and deployment process. With LMForge, users can easily experiment with different model architectures, optimize hyperparameters, and scale their models to meet specific requirements. The platform also includes features for monitoring model performance, managing datasets, and collaborating with team members, making it a versatile tool for researchers and developers working with language models.

For similar tasks

bitsandbytes

bitsandbytes enables accessible large language models via k-bit quantization for PyTorch. It provides features for reducing memory consumption for inference and training by using 8-bit optimizers, LLM.int8() for large language model inference, and QLoRA for large language model training. The library includes quantization primitives for 8-bit & 4-bit operations and 8-bit optimizers.

fsdp_qlora

The fsdp_qlora repository provides a script for training Large Language Models (LLMs) with Quantized LoRA and Fully Sharded Data Parallelism (FSDP). It integrates FSDP+QLoRA into the Axolotl platform and offers installation instructions for dependencies like llama-recipes, fastcore, and PyTorch. Users can finetune Llama-2 70B on Dual 24GB GPUs using the provided command. The script supports various training options including full params fine-tuning, LoRA fine-tuning, custom LoRA fine-tuning, quantized LoRA fine-tuning, and more. It also discusses low memory loading, mixed precision training, and comparisons to existing trainers. The repository addresses limitations and provides examples for training with different configurations, including BnB QLoRA and HQQ QLoRA. Additionally, it offers SLURM training support and instructions for adding support for a new model.

Anima

Anima is the first open-source 33B Chinese large language model based on QLoRA, supporting DPO alignment training and open-sourcing a 100k context window model. The latest update includes AirLLM, a library that enables inference of 70B LLM from a single GPU with just 4GB memory. The tool optimizes memory usage for inference, allowing large language models to run on a single 4GB GPU without the need for quantization or other compression techniques. Anima aims to democratize AI by making advanced models accessible to everyone and contributing to the historical process of AI democratization.

pgvecto.rs

pgvecto.rs is a Postgres extension written in Rust that provides vector similarity search functions. It offers ultra-low-latency, high-precision vector search capabilities, including sparse vector search and full-text search. With complete SQL support, async indexing, and easy data management, it simplifies data handling. The extension supports various data types like FP16/INT8, binary vectors, and Matryoshka embeddings. It ensures system performance with production-ready features, high availability, and resource efficiency. Security and permissions are managed through easy access control. The tool allows users to create tables with vector columns, insert vector data, and calculate distances between vectors using different operators. It also supports half-precision floating-point numbers for better performance and memory usage optimization.

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system designed to bring LLM functions to low-resource edge devices, addressing privacy concerns by enabling LLM inference on edge devices with limited resources. It leverages multiple edge devices for inference through tensor parallelism and a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in TTFT and token latency compared to other models, and plans to support infinitely large models with low token latency in the future.

KIVI

KIVI is a plug-and-play 2bit KV cache quantization algorithm optimizing memory usage by quantizing key cache per-channel and value cache per-token to 2bit. It enables LLMs to maintain quality while reducing memory usage, allowing larger batch sizes and increasing throughput in real LLM inference workloads.

pointer

Pointer is a lightweight and efficient tool for analyzing and visualizing data structures in C and C++ programs. It provides a user-friendly interface to track memory allocations, pointer references, and data structures, helping developers to identify memory leaks, pointer errors, and optimize memory usage. With Pointer, users can easily navigate through complex data structures, visualize memory layouts, and debug pointer-related issues in their codebase. The tool offers interactive features such as memory snapshots, pointer tracking, and memory visualization, making it a valuable asset for C and C++ developers working on memory-intensive applications.

llm-awq

AWQ (Activation-aware Weight Quantization) is a tool designed for efficient and accurate low-bit weight quantization (INT3/4) for Large Language Models (LLMs). It supports instruction-tuned models and multi-modal LMs, providing features such as AWQ search for accurate quantization, pre-computed AWQ model zoo for various LLMs, memory-efficient 4-bit linear in PyTorch, and efficient CUDA kernel implementation for fast inference. The tool enables users to run large models on resource-constrained edge platforms, delivering more efficient responses with LLM/VLM chatbots through 4-bit inference.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.