awesome-llm-json

Resource list for generating JSON using LLMs via function calling, tools, CFG. Libraries, Models, Notebooks, etc.

Stars: 1895

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

README:

This awesome list is dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs.

- Terminology

- Hosted Models

- Local Models

- Python Libraries

- Blog Articles

- Videos

- Jupyter Notebooks

- Leaderboards

Unfortunately, generating JSON goes by a few different names that roughly mean the same thing:

- Structured Outputs: Using an LLM to generate any structured output including JSON, XML, or YAML regardless of technique (e.g. function calling, guided generation).

- Function Calling: Providing an LLM a hypothetical (or actual) function definition for it to "call" in it's chat or completion response. The LLM doesn't actually call the function, it just provides an indication that one should be called via a JSON message.

- JSON Mode: Specifying that an LLM must generate valid JSON. Depending on the provider, a schema may or may not be specified and the LLM may create an unexpected schema.

- Tool Usage: Giving an LLM a choice of tools such as image generation, web search, and "function calling". The functional calling parameter in the API request is now called "tools".

- Guided Generation: For constraining an LLM model to generate text that follows a prescribed specification such as a Context-Free Grammar.

- GPT Actions: ChatGPT invokes actions (i.e. API calls) based on the endpoints and parameters specified in an OpenAPI specification. Unlike the capability called "Function Calling", this capability will indeed call your function hosted by an API server.

None of these names are great, that's why I named this list just "Awesome LLM JSON".

| Provider | Models | Links |

|---|---|---|

| Anthropic | claude-3-opus-20240229 claude-3-sonnet-20240229 claude-3-haiku-20240307 |

API Docs Pricing |

| AnyScale | Mistral-7B-Instruct-v0.1 Mixtral-8x7B-Instruct-v0.1 |

Function Calling JSON Mode Pricing Announcement (2023) |

| Azure | gpt-4 gpt-4-turbo gpt-35-turbo mistral-large-latest mistral-large-2402 |

Function Calling OpenAI Pricing Mistral Pricing |

| Cohere | Command-R Command-R+ |

Function Calling Pricing Command-R (2024-03-11) Command-R+ (2024-04-04) |

| Fireworks.ai | firefunction-v1 |

Function Calling JSON Mode Grammar mode Pricing Announcement (2023-12-20) |

| gemini-1.0-pro |

Function Calling Pricing |

|

| Groq | llama2-70b mixtral-8x7b gemma-7b-it |

Function Calling Pricing |

| Hugging Face TGI | many open-source models |

Grammars, JSON mode, Function Calling and Tools For free locally, or via dedicated or serverless endpoints. |

| Mistral | mistral-large-latest |

Function Calling Pricing |

| OpenAI | gpt-4 gpt-4-turbo gpt-35-turbo |

Function Calling JSON Mode Pricing Announcement (2023-06-13) |

| Rysana | inversion-sm |

API Docs Pricing Announcement (2024-03-18) |

| Together AI | Mixtral-8x7B-Instruct-v0.1 Mistral-7B-Instruct-v0.1 CodeLlama-34b-Instruct |

Function Calling JSON Mode Pricing Announcement 2024-01-31 |

Parallel Function Calling

Below is a list of hosted API models that support multiple parallel function calls. This could include checking the weather in multiple cities or first finding the location of a hotel and then checking the weather at it's location.

- anthropic

- claude-3-opus-20240229

- claude-3-sonnet-20240229

- claude-3-haiku-20240307

- azure/openai

- gpt-4-turbo-preview

- gpt-4-1106-preview

- gpt-4-0125-preview

- gpt-3.5-turbo-1106

- gpt-3.5-turbo-0125

- cohere

- command-r

- together_ai

- Mixtral-8x7B-Instruct-v0.1

- Mistral-7B-Instruct-v0.1

- CodeLlama-34b-Instruct

Mistral 7B Instruct v0.3 (2024-05-22, Apache 2.0) an instruct fine-tuned version of Mistral with added function calling support.

C4AI Command R+ (2024-03-20, CC-BY-NC, Cohere) is a 104B parameter multilingual model with advanced Retrieval Augmented Generation (RAG) and tool use capabilities, optimized for reasoning, summarization, and question answering across 10 languages. Supports quantization for efficient use and demonstrates unique multi-step tool integration for complex task execution.

Hermes 2 Pro - Mistral 7B (2024-03-13, Nous Research) is a 7B parameter model that excels at function calling, JSON structured outputs, and general tasks. Trained on an updated OpenHermes 2.5 Dataset and a new function calling dataset, it uses a special system prompt and multi-turn structure. Achieves 91% on function calling and 84% on JSON mode evaluations.

Gorilla OpenFunctions v2 (2024-02-27, Apache 2.0 license, Charlie Cheng-Jie Ji et al.) interprets and executes functions based on JSON Schema Objects, supporting multiple languages and detecting function relevance.

NexusRaven-V2 (2023-12-05, Nexusflow) is a 13B model outperforming GPT-4 in zero-shot function calling by up to 7%, enabling effective use of software tools. Further instruction-tuned on CodeLlama-13B-instruct.

Functionary (2023-08-04, MeetKai) interprets and executes functions based on JSON Schema Objects, supporting various compute requirements and call types. Compatible with OpenAI-python and llama-cpp-python for efficient function execution in JSON generation tasks.

Hugging Face TGI enables JSON outputs and function calling for a variety of local models.

DSPy (MIT) is a framework for algorithmically optimizing LM prompts and weights. DSPy introduced typed predictor and signatures to leverage Pydantic for enforcing type constraints on inputs and outputs, improving upon string-based fields.

FuzzTypes (MIT) extends Pydantic with autocorrecting annotation types for enhanced data normalization and handling of complex types like emails, dates, and custom entities.

guidance (Apache-2.0) enables constrained generation, interleaving Python logic with LLM calls, reusable functions, and calling external tools. Optimizes prompts for faster generation.

Instructor (MIT) simplifies generating structured data from LLMs using Function Calling, Tool Calling, and constrained sampling modes. Built on Pydantic for validation and supports various LLMs.

LangChain (MIT) provides an interface for chains, integrations with other tools, and chains for applications. LangChain offers chains for structured outputs and function calling across models.

LiteLLM (MIT) simplifies calling 100+ LLMs in the OpenAI format, supporting function calling, tool calling, and JSON mode.

LlamaIndex (MIT) provides modules for structured outputs at different levels of abstraction, including output parsers for text completion endpoints, Pydantic programs for mapping prompts to structured outputs using function calling or output parsing, and pre-defined Pydantic programs for specific output types.

Marvin (Apache-2.0) is a lightweight toolkit for building reliable natural language interfaces with self-documenting tools for tasks like entity extraction and multi-modal support.

Outlines (Apache-2.0) facilitates structured text generation using multiple models, Jinja templating, and support for regex patterns, JSON schemas, Pydantic models, and context-free grammars.

Pydantic (MIT) simplifies working with data structures and JSON through data model definition, validation, JSON schema generation, and seamless parsing and serialization.

SGLang (MPL-2.0) allows specifying JSON schemas using regular expressions or Pydantic models for constrained decoding. Its high-performance runtime accelerates JSON decoding.

SynCode (MIT) is a framework for the grammar-guided generation of Large Language Models (LLMs). It supports CFG for Python, Go, Java, JSON, YAML, and many more.

Mirascope (MIT) is an LLM toolkit that supports structured extraction with an intuitive python API.

Magnetic (MIT) call LLMs from Python using 3 lines of code. Simply use the @prompt decorator to create functions that return structured output from the LLM, powered by Pydantic.

Formatron (MIT) is an efficient and scalable constrained decoding library that enables controlling over language model output format using f-string templates that support regular expressions, context-free grammars, JSON schemas, and Pydantic models. Formatron integrates seamlessly with various model inference libraries.

How fast can grammar-structured generation be? (2024-04-12, .txt Engineering) demonstrates an almost cost-free method to generate text that follows a grammar. It is shown to outperform llama.cpp by a factor of 50x on the C grammar.

Structured Generation Improves LLM performance: GSM8K Benchmark (2024-03-15, .txt Engineering) demonstrates consistent improvements across 8 models, highlighting benefits like "prompt consistency" and "thought-control."

LoRAX + Outlines: Better JSON Extraction with Structured Generation and LoRA (2024-03-03, Predibase Blog) combines Outlines with LoRAX v0.8 to enhance extraction accuracy and schema fidelity through structured generation, fine-tuning, and LoRA adapters.

FU, Show Me The Prompt. Quickly understand inscrutable LLM frameworks by intercepting API calls (2023-02-14, Hamel Husain) provides a practical guide to intercepting API calls using mitmproxy, gaining insights into tool functionality, and assessing necessity. Emphasizes minimizing complexity and maintaining close connection with underlying LLMs.

Coalescence: making LLM inference 5x faster (2024-02-02, .txt Engineering) shows how structured generation can be made faster than unstructured generation using a technique called "coalescence", with a caveat regarding how it may affect the quality of the generation.

Why Pydantic became indispensable for LLMs (2024-01-19, Adam Azzam) explains Pydantic's emergence as a critical tool, enabling sharing data models via JSON schemas and reasoning between unstructured and structured data. Highlights the importance of quantizing the decision space and potential issues with LLMs overfitting to older schema versions.

Getting Started with Function Calling (2024-01-11, Elvis Saravia) introduces function calling for connecting LLMs with external tools and APIs, providing an example using OpenAI's API and highlighting potential applications.

Pushing ChatGPT's Structured Data Support To Its Limits (2023-12-21, Max Woolf) delves into leveraging ChatGPT's capabilities using paid API, JSON schemas, and Pydantic. Highlights techniques for improving output quality and the benefits of structured data support.

Why use Instructor? (2023-11-18, Jason Liu) explains the benefits of the library, offering a readable approach, support for partial extraction and various types, and a self-correcting mechanism. Recommends additional resources on the Instructor website.

Using grammars to constrain llama.cpp output (2023-09-06, Ian Maurer) integrates context-free grammars with llama.cpp for more accurate and schema-compliant responses, particularly for biomedical data.

Using OpenAI functions and their Python library for data extraction (2023-07-09, Simon Willison) demonstrates extracting structured data using OpenAI Python library and function calling in a single API call, with a code example and suggestions for handling streaming limitations.

GPT Extracting Unstructured Data with Datasette and GPT-4 Turbo (2024-04-09, Simon Willison) showcases the datasette-extract plugin's ability to populate database tables from unstructured text and images, leveraging GPT-4 Turbo's API for data extraction.

LLM Structured Output for Function Calling with Ollama (2024-03-25, Andrej Baranovskij) demonstrates function calling-based data extraction using Ollama, Instructor and Sparrow agent.

Hermes 2 Pro Overview (2024-03-18, Prompt Engineer) introduces Hermes 2 Pro, a 7B parameter model excelling at function calling and structured JSON output. Demonstrates 90% accuracy in function calling and 84% in JSON mode, outperforming other models.

Mistral AI Function Calling (2024-02-24, Sophia Yang) demonstrates connecting LLMs to external tools, generating function arguments, and executing functions. Could be extended to generate or manipulate JSON data.

Function Calling in Ollama vs OpenAI (2024-02-13, Matt Williams) clarifies that models generate structured output for parsing and invoking functions. Compares implementations, highlighting Ollama's simpler approach and using few-shot prompts for consistency.

LLM Engineering: Structured Outputs (2024-02-12, Jason Liu, Weights & Biases Course) offers a concise course on handling structured JSON output, function calling, and validations using Pydantic, covering essentials for robust pipelines and efficient production integration.

Pydantic is all you need (2023-10-10, Jason Liu, AI Engineer Conference) discusses the importance of Pydantic for structured prompting and output validation, introducing the Instructor library and showcasing advanced applications for reliable and maintainable LLM-powered applications.

Function Calling with llama-cpp-python and OpenAI Python Client demonstrates integration, including setup using the Instructor library, with examples of retrieving weather information and extracting user details.

Function Calling with Mistral Models demonstrates connecting Mistral models with external tools through a simple example involving a payment transactions dataframe.

chatgpt-structured-data by Max Woolf provides demos showcasing ChatGPT's function calling and structured data support, covering various use cases and schemas.## Leaderboards

Berkeley Function-Calling Leaderboard (BFCL) is an evaluation framework for LLMs' function-calling capabilities including over 2k question-function-answer pairs across languages like Python, Java, JavaScript, SQL, and REST API, focusing on simple, multiple, and parallel function calls, as well as function relevance detection.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for awesome-llm-json

Similar Open Source Tools

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

llm-search

pyLLMSearch is an advanced RAG system that offers a convenient question-answering system with a simple YAML-based configuration. It enables interaction with multiple collections of local documents, with improvements in document parsing, hybrid search, chat history, deep linking, re-ranking, customizable embeddings, and more. The package is designed to work with custom Large Language Models (LLMs) from OpenAI or installed locally. It supports various document formats, incremental embedding updates, dense and sparse embeddings, multiple embedding models, 'Retrieve and Re-rank' strategy, HyDE (Hypothetical Document Embeddings), multi-querying, chat history, and interaction with embedded documents using different models. It also offers simple CLI and web interfaces, deep linking, offline response saving, and an experimental API.

fenic

fenic is an opinionated DataFrame framework from typedef.ai for building AI and agentic applications. It transforms unstructured and structured data into insights using familiar DataFrame operations enhanced with semantic intelligence. With support for markdown, transcripts, and semantic operators, plus efficient batch inference across various model providers. fenic is purpose-built for LLM inference, providing a query engine designed for AI workloads, semantic operators as first-class citizens, native unstructured data support, production-ready infrastructure, and a familiar DataFrame API.

MemoryBear

MemoryBear is a next-generation AI memory system developed by RedBear AI, focusing on overcoming limitations in knowledge storage and multi-agent collaboration. It empowers AI with human-like memory capabilities, enabling deep knowledge understanding and cognitive collaboration. The system addresses challenges such as knowledge forgetting, memory gaps in multi-agent collaboration, and semantic ambiguity during reasoning. MemoryBear's core features include memory extraction engine, graph storage, hybrid search, memory forgetting engine, self-reflection engine, and FastAPI services. It offers a standardized service architecture for efficient integration and invocation across applications.

RAG-FiT

RAG-FiT is a library designed to improve Language Models' ability to use external information by fine-tuning models on specially created RAG-augmented datasets. The library assists in creating training data, training models using parameter-efficient finetuning (PEFT), and evaluating performance using RAG-specific metrics. It is modular, customizable via configuration files, and facilitates fast prototyping and experimentation with various RAG settings and configurations.

doris

Doris is a lightweight and user-friendly data visualization tool designed for quick and easy exploration of datasets. It provides a simple interface for users to upload their data and generate interactive visualizations without the need for coding. With Doris, users can easily create charts, graphs, and dashboards to analyze and present their data in a visually appealing way. The tool supports various data formats and offers customization options to tailor visualizations to specific needs. Whether you are a data analyst, researcher, or student, Doris simplifies the process of data exploration and presentation.

RAGFoundry

RAG Foundry is a library designed to enhance Large Language Models (LLMs) by fine-tuning models on RAG-augmented datasets. It helps create training data, train models using parameter-efficient finetuning (PEFT), and measure performance using RAG-specific metrics. The library is modular, customizable using configuration files, and facilitates prototyping with various RAG settings and configurations for tasks like data processing, retrieval, training, inference, and evaluation.

llm-course

The LLM course is divided into three parts: 1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks. 2. 🧑🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques. 3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them. For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way: * 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B. * 🤖 **ChatGPT Assistant**: Requires a premium account. ## 📝 Notebooks A list of notebooks and articles related to large language models. ### Tools | Notebook | Description | Notebook | |----------|-------------|----------| | 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |  | | 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |  | | 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |  | | ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |  | | 🌳 Model Family Tree | Visualize the family tree of merged models. |  | | 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |  |

postgresml

PostgresML is a powerful Postgres extension that seamlessly combines data storage and machine learning inference within your database. It enables running machine learning and AI operations directly within PostgreSQL, leveraging GPU acceleration for faster computations, integrating state-of-the-art large language models, providing built-in functions for text processing, enabling efficient similarity search, offering diverse ML algorithms, ensuring high performance, scalability, and security, supporting a wide range of NLP tasks, and seamlessly integrating with existing PostgreSQL tools and client libraries.

supersonic

SuperSonic is a next-generation BI platform that integrates Chat BI (powered by LLM) and Headless BI (powered by semantic layer) paradigms. This integration ensures that Chat BI has access to the same curated and governed semantic data models as traditional BI. Furthermore, the implementation of both paradigms benefits from the integration: * Chat BI's Text2SQL gets augmented with context-retrieval from semantic models. * Headless BI's query interface gets extended with natural language API. SuperSonic provides a Chat BI interface that empowers users to query data using natural language and visualize the results with suitable charts. To enable such experience, the only thing necessary is to build logical semantic models (definition of metric/dimension/tag, along with their meaning and relationships) through a Headless BI interface. Meanwhile, SuperSonic is designed to be extensible and composable, allowing custom implementations to be added and configured with Java SPI. The integration of Chat BI and Headless BI has the potential to enhance the Text2SQL generation in two dimensions: 1. Incorporate data semantics (such as business terms, column values, etc.) into the prompt, enabling LLM to better understand the semantics and reduce hallucination. 2. Offload the generation of advanced SQL syntax (such as join, formula, etc.) from LLM to the semantic layer to reduce complexity. With these ideas in mind, we develop SuperSonic as a practical reference implementation and use it to power our real-world products. Additionally, to facilitate further development we decide to open source SuperSonic as an extensible framework.

CodeFuse-muAgent

CodeFuse-muAgent is a Multi-Agent framework designed to streamline Standard Operating Procedure (SOP) orchestration for agents. It integrates toolkits, code libraries, knowledge bases, and sandbox environments for rapid construction of complex Multi-Agent interactive applications. The framework enables efficient execution and handling of multi-layered and multi-dimensional tasks.

arthur-engine

The Arthur Engine is a comprehensive tool for monitoring and governing AI/ML workloads. It provides evaluation and benchmarking of machine learning models, guardrails enforcement, and extensibility for fitting into various application architectures. With support for a wide range of evaluation metrics and customizable features, the tool aims to improve model understanding, optimize generative AI outputs, and prevent data-security and compliance risks. Key features include real-time guardrails, model performance monitoring, feature importance visualization, error breakdowns, and support for custom metrics and models integration.

llumnix

Llumnix is a cross-instance request scheduling layer built on top of LLM inference engines such as vLLM, providing optimized multi-instance serving performance with low latency, reduced time-to-first-token (TTFT) and queuing delays, reduced time-between-tokens (TBT) and preemption stalls, and high throughput. It achieves this through dynamic, fine-grained, KV-cache-aware scheduling, continuous rescheduling across instances, KV cache migration mechanism, and seamless integration with existing multi-instance deployment platforms. Llumnix is easy to use, fault-tolerant, elastic, and extensible to more inference engines and scheduling policies.

ReaLHF

ReaLHF is a distributed system designed for efficient RLHF training with Large Language Models (LLMs). It introduces a novel approach called parameter reallocation to dynamically redistribute LLM parameters across the cluster, optimizing allocations and parallelism for each computation workload. ReaL minimizes redundant communication while maximizing GPU utilization, achieving significantly higher Proximal Policy Optimization (PPO) training throughput compared to other systems. It supports large-scale training with various parallelism strategies and enables memory-efficient training with parameter and optimizer offloading. The system seamlessly integrates with HuggingFace checkpoints and inference frameworks, allowing for easy launching of local or distributed experiments. ReaLHF offers flexibility through versatile configuration customization and supports various RLHF algorithms, including DPO, PPO, RAFT, and more, while allowing the addition of custom algorithms for high efficiency.

For similar tasks

gorilla

Gorilla is a tool that enables LLMs to use tools by invoking APIs. Given a natural language query, Gorilla comes up with the semantically- and syntactically- correct API to invoke. With Gorilla, you can use LLMs to invoke 1,600+ (and growing) API calls accurately while reducing hallucination. Gorilla also releases APIBench, the largest collection of APIs, curated and easy to be trained on!

one-click-llms

The one-click-llms repository provides templates for quickly setting up an API for language models. It includes advanced inferencing scripts for function calling and offers various models for text generation and fine-tuning tasks. Users can choose between Runpod and Vast.AI for different GPU configurations, with recommendations for optimal performance. The repository also supports Trelis Research and offers templates for different model sizes and types, including multi-modal APIs and chat models.

awesome-llm-json

This repository is an awesome list dedicated to resources for using Large Language Models (LLMs) to generate JSON or other structured outputs. It includes terminology explanations, hosted and local models, Python libraries, blog articles, videos, Jupyter notebooks, and leaderboards related to LLMs and JSON generation. The repository covers various aspects such as function calling, JSON mode, guided generation, and tool usage with different providers and models.

ai-devices

AI Devices Template is a project that serves as an AI-powered voice assistant utilizing various AI models and services to provide intelligent responses to user queries. It supports voice input, transcription, text-to-speech, image processing, and function calling with conditionally rendered UI components. The project includes customizable UI settings, optional rate limiting using Upstash, and optional tracing with Langchain's LangSmith for function execution. Users can clone the repository, install dependencies, add API keys, start the development server, and deploy the application. Configuration settings can be modified in `app/config.tsx` to adjust settings and configurations for the AI-powered voice assistant.

ragtacts

Ragtacts is a Clojure library that allows users to easily interact with Large Language Models (LLMs) such as OpenAI's GPT-4. Users can ask questions to LLMs, create question templates, call Clojure functions in natural language, and utilize vector databases for more accurate answers. Ragtacts also supports RAG (Retrieval-Augmented Generation) method for enhancing LLM output by incorporating external data. Users can use Ragtacts as a CLI tool, API server, or through a RAG Playground for interactive querying.

DelphiOpenAI

Delphi OpenAI API is an unofficial library providing Delphi implementation over OpenAI public API. It allows users to access various models, make completions, chat conversations, generate images, and call functions using OpenAI service. The library aims to facilitate tasks such as content generation, semantic search, and classification through AI models. Users can fine-tune models, work with natural language processing, and apply reinforcement learning methods for diverse applications.

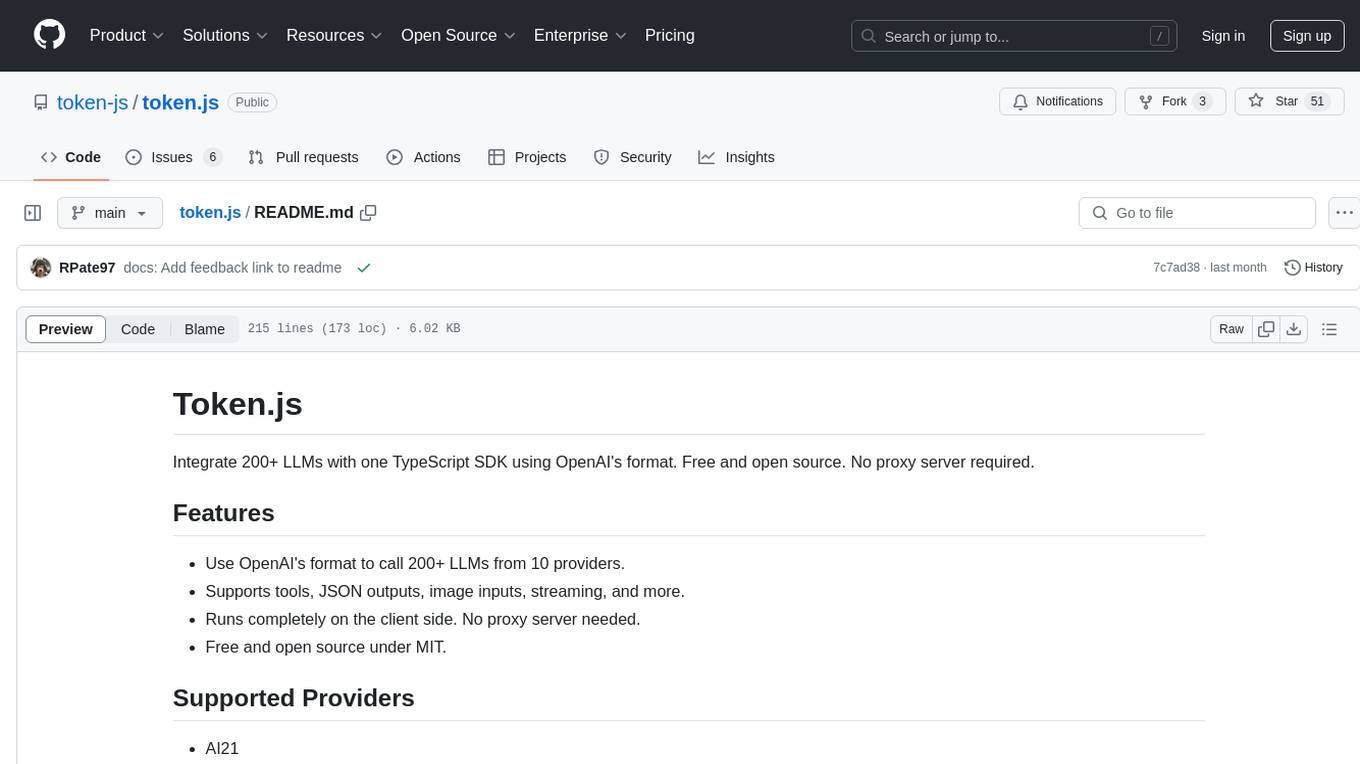

token.js

Token.js is a TypeScript SDK that integrates with over 200 LLMs from 10 providers using OpenAI's format. It allows users to call LLMs, supports tools, JSON outputs, image inputs, and streaming, all running on the client side without the need for a proxy server. The tool is free and open source under the MIT license.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.