awesome-llms-fine-tuning

Explore a comprehensive collection of resources, tutorials, papers, tools, and best practices for fine-tuning Large Language Models (LLMs). Perfect for ML practitioners and researchers!

Stars: 119

This repository is a curated collection of resources for fine-tuning Large Language Models (LLMs) like GPT, BERT, RoBERTa, and their variants. It includes tutorials, papers, tools, frameworks, and best practices to aid researchers, data scientists, and machine learning practitioners in adapting pre-trained models to specific tasks and domains. The resources cover a wide range of topics related to fine-tuning LLMs, providing valuable insights and guidelines to streamline the process and enhance model performance.

README:

Welcome to the curated collection of resources for fine-tuning Large Language Models (LLMs) like GPT, BERT, RoBERTa, and their numerous variants! In this era of artificial intelligence, the ability to adapt pre-trained models to specific tasks and domains has become an indispensable skill for researchers, data scientists, and machine learning practitioners.

Large Language Models, trained on massive datasets, capture an extensive range of knowledge and linguistic nuances. However, to unleash their full potential in specific applications, fine-tuning them on targeted datasets is paramount. This process not only enhances the models’ performance but also ensures that they align with the particular context, terminology, and requirements of the task at hand.

In this awesome list, we have meticulously compiled a range of resources, including tutorials, papers, tools, frameworks, and best practices, to aid you in your fine-tuning journey. Whether you are a seasoned practitioner looking to expand your expertise or a beginner eager to step into the world of LLMs, this repository is designed to provide valuable insights and guidelines to streamline your endeavors.

- GitHub projects

- Articles & Blogs

- Online Courses

- Books

- Research Papers

- Videos

- Tools & Software

- Conferences & Events

- Slides & Presentations

- Podcasts

- LlamaIndex 🦙: A data framework for your LLM applications. (23010 stars)

- Petals 🌸: Run LLMs at home, BitTorrent-style. Fine-tuning and inference up to 10x faster than offloading. (7768 stars)

- LLaMA-Factory: An easy-to-use LLM fine-tuning framework (LLaMA-2, BLOOM, Falcon, Baichuan, Qwen, ChatGLM3). (5532 stars)

- lit-gpt: Hackable implementation of state-of-the-art open-source LLMs based on nanoGPT. Supports flash attention, 4-bit and 8-bit quantization, LoRA and LLaMA-Adapter fine-tuning, pre-training. Apache 2.0-licensed. (3469 stars)

- H2O LLM Studio: A framework and no-code GUI for fine-tuning LLMs. Documentation: https://h2oai.github.io/h2o-llmstudio/ (2880 stars)

- Phoenix: AI Observability & Evaluation - Evaluate, troubleshoot, and fine tune your LLM, CV, and NLP models in a notebook. (1596 stars)

- LLM-Adapters: Code for the EMNLP 2023 Paper: "LLM-Adapters: An Adapter Family for Parameter-Efficient Fine-Tuning of Large Language Models". (769 stars)

- Platypus: Code for fine-tuning the Platypus family of LLMs using LoRA. (589 stars)

- xtuner: A toolkit for efficiently fine-tuning LLM (InternLM, Llama, Baichuan, QWen, ChatGLM2). (540 stars)

- DB-GPT-Hub: A repository of models, datasets, and fine-tuning techniques for DB-GPT, aimed at improving model performance, especially on Text-to-SQL. A 13B LLM fine-tuned with this project achieved higher execution accuracy than GPT-4 on the Spider evaluation. (422 stars)

- LLM-Finetuning-Hub : Repository that contains LLM fine-tuning and deployment scripts along with our research findings. ⭐ 416

- Finetune_LLMs : Repo for fine-tuning Causal LLMs. ⭐ 391

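- MFTCoder : High-accuracy, high-efficiency multi-task fine-tuning framework for Code LLMs, described by its authors as the industry's first code fine-tuning framework to combine high accuracy, high efficiency, multi-task training, multi-model support, and multiple training algorithms. ⭐ 337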

- llmware : Providing enterprise-grade LLM-based development framework, tools, and fine-tuned models. ⭐ 289

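- LLM-Kit : 🚀 WebUI integrated platform for the latest LLMs, packaging end-to-end tools for major language models. Supports mainstream LLM API interfaces and open-source models, along with knowledge bases, databases, role-play, Midjourney text-to-image, LoRA and full-parameter fine-tuning, dataset creation, live2d, and other end-to-end application tools. ⭐ 232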

- h2o-wizardlm : Open-Source Implementation of WizardLM to turn documents into Q:A pairs for LLM fine-tuning. ⭐ 228

- hcgf : Humanable Chat Generative-model Fine-tuning | LLM fine-tuning. ⭐ 196

- llm_qlora : Fine-tuning LLMs using QLoRA. ⭐ 136

- awesome-llm-human-preference-datasets : A curated list of Human Preference Datasets for LLM fine-tuning, RLHF, and eval. ⭐ 124

- llm_finetuning : Convenient wrapper for fine-tuning and inference of Large Language Models (LLMs) with several quantization techniques (GPTQ, bitsandbytes). ⭐ 114

- Fine-Tune LLMs in 2024 with Hugging Face: TRL and Flash Attention 🤗: This blog post provides a comprehensive guide to fine-tuning LLMs (e.g., Llama 2) using Hugging Face TRL and Flash Attention on consumer-grade GPUs (24GB).

- Complete Guide to LLM Fine Tuning for Beginners 📚: A comprehensive guide that explains the process of fine-tuning a pre-trained model for new tasks, covering key concepts and providing a concrete example.

- Fine-Tuning Large Language Models (LLMs) 📖: This blog post presents an overview of fine-tuning pre-trained LLMs, discussing important concepts and providing a practical example with Python code.

- Creating a Domain Expert LLM: A Guide to Fine-Tuning 📝: An article that dives into the concept of fine-tuning using OpenAI's API, showcasing an example of fine-tuning a large language model for understanding the plot of a Handel opera.

- A Beginner's Guide to LLM Fine-Tuning 🌱: A guide that covers the process of fine-tuning LLMs, including the use of tools like QLoRA for configuring and fine-tuning models.

- Knowledge Graphs & LLMs: Fine-Tuning Vs. Retrieval-Augmented Generation 📖: This blog post explores the limitations of LLMs and provides insights into fine-tuning them in conjunction with knowledge graphs.

- Fine-tune an LLM on your personal data: create a “The Lord of the Rings” storyteller ✏️: An article that demonstrates how to train your own LLM on personal data, offering control over personal information without relying on OpenAI's GPT-4.

- Fine-tuning an LLM model with H2O LLM Studio to generate Cypher statements 🧪: This blog post provides an example of fine-tuning an LLM model using H2O LLM Studio for generating Cypher statements, enabling chatbot applications with knowledge graphs.

- Fine-Tune Your Own Llama 2 Model in a Colab Notebook 📝: A practical introduction to LLM fine-tuning, demonstrating how to implement it in a Google Colab notebook to create your own Llama 2 model.

- Thinking about fine-tuning a LLM? Here's 3 considerations before you get started 💡: This article discusses three ideas to consider when fine-tuning LLMs, including ways to improve GPT beyond PEFT and LoRA, and the importance of investing resources wisely.

- Introduction to LLMs and the generative AI : Part 3—Fine Tuning LLM with instruction 📚: This article explores the role of LLMs in artificial intelligence applications and provides an overview of fine-tuning them.

- RAG vs Finetuning — Which Is the Best Tool to Boost Your LLM Application - A blog post discussing the aspects to consider when building LLM applications and choosing the right method for your use case. 👨‍💻

- Finetuning an LLM: RLHF and alternatives (Part I) - An article showcasing alternative methods to RLHF, specifically Direct Preference Optimization (DPO). 🔄

- When Should You Fine-Tune LLMs? - Exploring the comparison between fine-tuning open-source LLMs and using a closed API for LLM Queries at Scale. 🤔

- Fine-Tuning Large Language Models - Considering the fine-tuning of large language models and comparing it to zero and few shot approaches. 🎯

- Private GPT: Fine-Tune LLM on Enterprise Data - Exploring training techniques that allow fine-tuning LLMs on smaller GPUs. 🖥️

- Fine-tune Google PaLM 2 with Scikit-LLM - Demonstrating how to fine-tune Google PaLM 2, the most advanced LLM from Google, using Scikit-LLM. 📈

- A Deep-Dive into Fine-Tuning of Large Language Models - A comprehensive blog on fine-tuning LLMs like GPT-4 & BERT, providing insights, trends, and benefits. 🚀

- Pre-training, fine-tuning and in-context learning in Large Language Models - Discussing the concepts of pre-training, fine-tuning, and in-context learning in LLMs. 📚

- List of Open Sourced Fine-Tuned Large Language Models - A curated list of open-sourced fine-tuned LLMs that can be run locally on your computer. 📋

- Practitioners guide to fine-tune LLMs for domain-specific use case - A guide covering key learnings and conclusions on fine-tuning LLMs for domain-specific use cases. 📝

- Finetune Llama 3.1 with a production stack on AWS, GCP or Azure - A guide and tutorial on finetuning Llama 3.1 (or Phi 3.5) in a production setup designed for MLOps best practices. 📓

- Fine-Tuning Fundamentals: Unlocking the Potential of LLMs | Udemy: A practical course for beginners on building ChatGPT-style models and adapting them for specific use cases.

- Generative AI with Large Language Models | Coursera: Learn the fundamentals of generative AI with LLMs and how to deploy them in practical applications. Enroll for free.

- Large Language Models: Application through Production | edX: An advanced course for developers, data scientists, and engineers to build LLM-centric applications using popular frameworks and achieve end-to-end production readiness.

- Finetuning Large Language Models | Coursera Guided Project: A short guided project that covers essential finetuning concepts and training of large language models.

- OpenAI & ChatGPT API's: Expert Fine-tuning for Developers | Udemy: Discover the power of GPT-3 in creating conversational AI solutions, including topics like prompt engineering, fine-tuning, integration, and deploying ChatGPT models.

- Large Language Models Professional Certificate | edX: Learn how to build and productionize Large Language Model (LLM) based applications using the latest frameworks, techniques, and theory behind foundation models.

- Improving the Performance of Your LLM Beyond Fine Tuning | Udemy: A course designed for business leaders and developers interested in fine-tuning LLM models and exploring techniques for improving their performance.

- Introduction to Large Language Models | Coursera: An introductory level micro-learning course offered by Google Cloud, explaining the basics of Large Language Models (LLMs) and their use cases. Enroll for free.

- Syllabus | LLM101x | edX: Learn how to use data embeddings, vector databases, and fine-tune LLMs with domain-specific data to augment LLM pipelines.

- Performance Tuning Deep Learning Models Master Class | Udemy: A master class on tuning deep learning models, covering techniques to accelerate learning and optimize performance.

- Best Large Language Models (LLMs) Courses & Certifications: Curated from top educational institutions and industry leaders, this selection of LLMs courses aims to provide quality training for individuals and corporate teams looking to learn or improve their skills in fine-tuning LLMs.

- Mastering Language Models: Unleashing the Power of LLMs: A comprehensive course on advanced NLP and LLMs that covers the fundamental principles of NLP and explores how LLMs have reshaped the landscape of AI applications.

- LLMs Mastery: Complete Guide to Transformers & Generative AI: This course provides a great overview of AI history and covers fine-tuning the three major LLM models: BERT, GPT, and T5. Suitable for those interested in generative AI, LLMs, and production-level applications.

- Exploring The Technologies Behind ChatGPT, GPT4 & LLMs: A course on large language models such as ChatGPT, GPT-4, BERT, and more, with insights into the technologies behind them.

- Non-technical Introduction to Large Language Models: An overview of large language models for non-technical individuals, explaining the existing challenges and providing simple explanations without complex jargon.

- Large Language Models: Foundation Models from the Ground Up: Delve into the details of foundation models in LLMs, such as BERT, GPT, and T5. Gain an understanding of the latest advances that enhance LLM functionality.

- Generative AI with Large Language Models — New Hands-on Course by Deeplearning.ai and AWS

- A hands-on course that teaches how to fine-tune Large Language Models (LLMs) using reward models and reinforcement learning, with a focus on generative AI.

- From Data Selection To Fine Tuning: The Technical Guide To Constructing LLM Models

- A technical guide that covers the process of constructing LLM models, from data selection to fine-tuning.

- The LLM Knowledge Cookbook: From, RAG, to QLoRA, to Fine Tuning, and all the Recipes In Between!

- A comprehensive cookbook that explores various LLM techniques, including Retrieval-Augmented Generation (RAG) and Quantized Low-Rank Adaptation (QLoRA), as well as the fine-tuning process.

- Principles for Fine-tuning LLMs

- An article that demystifies the process of fine-tuning LLMs and explores different techniques, such as in-context learning, classic fine-tuning methods, parameter-efficient fine-tuning, and Reinforcement Learning from Human Feedback (RLHF).

- From Data Selection To Fine Tuning: The Technical Guide To Constructing LLM Models

- A technical guide that provides insights into building and training large language models (LLMs).

- Hands-On Large Language Models

- A book that covers the advancements in language AI systems driven by deep learning, focusing on large language models.

- Fine-tune Llama 2 for text generation on Amazon SageMaker JumpStart

- Learn how to fine-tune Llama 2 models using Amazon SageMaker JumpStart for optimized dialogue generation.

- Fast and cost-effective LLaMA 2 fine-tuning with AWS Trainium

- A blog post that explains how to achieve fast and cost-effective fine-tuning of LLaMA 2 models using AWS Trainium.

- Fine-tuning - Advanced Deep Learning with Python [Book] 💡: A book that explores the fine-tuning task following the pretraining task in advanced deep learning with Python.

- The LLM Knowledge Cookbook: From, RAG, to QLoRA, to Fine ... 💡: A comprehensive guide to using large language models (LLMs) for various tasks, covering everything from the basics to advanced fine-tuning techniques.

- Quick Start Guide to Large Language Models: Strategies and Best ... 💡: A guide focusing on strategies and best practices for large language models (LLMs) like BERT, T5, and ChatGPT, showcasing their unprecedented performance in various NLP tasks.

- 4. Advanced GPT-4 and ChatGPT Techniques - Developing Apps ... 💡: A chapter that dives into advanced techniques for GPT-4 and ChatGPT, including prompt engineering, zero-shot learning, few-shot learning, and task-specific fine-tuning.

- What are Large Language Models? - LLM AI Explained - AWS 💡: An explanation of large language models (LLMs), discussing the concepts of few-shot learning and fine-tuning to improve model performance.

- LLM-Adapters: An Adapter Family for Parameter-Efficient Fine-Tuning 📄: This paper presents LLM-Adapters, an easy-to-use framework that integrates various adapters into LLMs for parameter-efficient fine-tuning (PEFT) on different tasks.

- Two-stage LLM Fine-tuning with Less Specialization 📄: ProMoT, a two-stage fine-tuning framework, addresses the issue of format specialization in LLMs through Prompt Tuning with MOdel Tuning, improving their general in-context learning performance.

- Fine-tuning Large Enterprise Language Models via Ontological Reasoning 📄: This paper proposes a neurosymbolic architecture that combines Large Language Models (LLMs) with Enterprise Knowledge Graphs (EKGs) to achieve domain-specific fine-tuning of LLMs.

- QLoRA: Efficient Finetuning of Quantized LLMs 📄: QLoRA is an efficient finetuning approach that reduces memory usage while preserving task performance, offering insights on quantized pretrained language models.

- Full Parameter Fine-tuning for Large Language Models with Limited Resources 📄: This work introduces LOMO, a low-memory optimization technique, enabling the full parameter fine-tuning of large LLMs with limited GPU resources.

- LoRA: Low-Rank Adaptation of Large Language Models 📄: LoRA proposes a methodology to adapt large pre-trained models to specific tasks by injecting trainable rank decomposition matrices into each layer, reducing the number of trainable parameters while maintaining model quality.

- Enhancing LLM with Evolutionary Fine Tuning for News Summary Generation 📄: This paper presents a new paradigm for news summary generation using LLMs, incorporating genetic algorithms and powerful natural language understanding capabilities.

- How do languages influence each other? Studying cross-lingual data sharing during LLM fine-tuning 📄: This study investigates cross-lingual data sharing during fine-tuning of multilingual large language models (MLLMs) and analyzes the influence of different languages on model performance.

- Fine-Tuning Language Models with Just Forward Passes 📄: MeZO, a memory-efficient zeroth-order optimizer, enables fine-tuning of large language models while significantly reducing the memory requirements.

- Learning to Reason over Scene Graphs: A Case Study of Finetuning LLMs 📄: This work explores the applicability of GPT-2 LLMs in robotic task planning, demonstrating the potential for using LLMs in long-horizon task planning scenarios.

- Privately Fine-Tuning Large Language Models with: This paper explores the application of differential privacy to add privacy guarantees to fine-tuning large language models (LLMs).

- DISC-LawLLM: Fine-tuning Large Language Models for Intelligent Legal Systems: This paper presents DISC-LawLLM, an intelligent legal system that utilizes fine-tuned LLMs with legal reasoning capability to provide a wide range of legal services.

- Multi-Task Instruction Tuning of LLaMa for Specific Scenarios: A: The paper investigates the effectiveness of fine-tuning LLaMa, a foundational LLM, on specific writing tasks, demonstrating significant improvement in writing abilities.

- Training language models to follow instructions with human feedback: This paper proposes a method to align language models with user intent by fine-tuning them using human feedback, resulting in models preferred over larger models in human evaluations.

- Large Language Models Can Self-Improve: The paper demonstrates that LLMs can self-improve their reasoning abilities by fine-tuning using self-generated solutions, achieving state-of-the-art performance without ground truth labels.

- Embracing Large Language Models for Medical Applications: This paper highlights the potential of fine-tuned LLMs in medical applications, improving diagnostic accuracy and supporting clinical decision-making.

- Scaling Instruction-Finetuned Language Models: The paper explores instruction fine-tuning on LLMs, demonstrating significant improvements in performance and generalization to unseen tasks.

- Federated Fine-tuning of Billion-Sized Language Models across: This work introduces FwdLLM, a federated learning protocol designed to enhance the efficiency of fine-tuning large LLMs on mobile devices, improving memory and time efficiency.

- A Comprehensive Overview of Large Language Models: This paper provides an overview of the development and applications of large language models and their transfer learning capabilities.

- Fine-tuning language models to find agreement among humans with: The paper explores the fine-tuning of a large LLM to generate consensus statements that maximize approval for a group of people with diverse opinions.

- Intro to Large Language Models by Andrej Karpathy: This is a 1 hour introduction to Large Language Models. What they are, where they are headed, comparisons and analogies to present-day operating systems, and some of the security-related challenges of this new computing paradigm.

- Fine-tuning Llama 2 on Your Own Dataset | Train an LLM for Your ...: Learn how to fine-tune Llama 2 model on a custom dataset.

- Fine-tuning LLM with QLoRA on Single GPU: Training Falcon-7b on ...: This video demonstrates the fine-tuning process of the Falcon 7b LLM using QLoRA.

- Fine-tuning an LLM using PEFT | Introduction to Large Language ...: Discover how to fine-tune an LLM using PEFT, a technique that requires fewer resources.

- LLAMA-2 Open-Source LLM: Custom Fine-tuning Made Easy on a ...: A step-by-step guide on how to fine-tune the Llama 2 model on your custom dataset.

- New Course: Finetuning Large Language Models - YouTube: This video introduces a course on fine-tuning LLMs, covering model selection, data preparation, training, and evaluation.

- Q: How to create an Instruction Dataset for Fine-tuning my LLM ...: In this tutorial, beginners learn about fine-tuning LLMs, including when, how, and why to do it.

- LLM Module 4: Fine-tuning and Evaluating LLMs | 4.13.1 Notebook ...: A notebook demo on fine-tuning and evaluating LLMs.

- Google LLM Fine-Tuning/Adapting/Customizing - Getting Started ...: Get started with fine-tuning Google's PaLM 2 large language model through a step-by-step guide.

- Pretraining vs Fine-tuning vs In-context Learning of LLM (GPT-x ...: An ultimate guide explaining pretraining, fine-tuning, and in-context learning of LLMs like GPT-x.

- How to Fine-Tune an LLM with a PDF - Langchain Tutorial - YouTube: Learn how to fine-tune OpenAI's GPT LLM to process PDF documents using Langchain and PDF libraries.

- EasyTune Walkthrough - YouTube - A walkthrough of fine-tuning LLM with QLoRA on a single GPU using Falcon-7b.

- Unlocking the Potential of ChatGPT Lessons in Training and Fine ... - THE STUDENT presents the instruction fine-tuning and in-context learning of LLMs with symbols.

- AI News: Creating LLMs without code! - YouTube - Maya Akim discusses the top 5 LLM fine-tuning use cases you need to know.

- Top 5 LLM Fine-Tuning Use Cases You Need to Know - YouTube - An in-depth video highlighting the top 5 LLM fine-tuning use cases with additional links for further exploration.

- clip2 llm emory - YouTube - Learn how to fine-tune Llama 2 on your own dataset and train an LLM for your specific use case.

- The EASIEST way to finetune LLAMA-v2 on a local machine! - YouTube - A step-by-step video guide demonstrating the easiest, simplest, and fastest way to fine-tune LLAMA-v2 on your local machine for a custom dataset.

- Training & Fine-Tuning LLMs: Introduction - YouTube - An introduction to training and fine-tuning LLMs, including important concepts and the NeurIPS LLM Efficiency Challenge.

- Fine-tuning LLMs with PEFT and LoRA - YouTube - A comprehensive video exploring how to use PEFT to fine-tune any decoder-style GPT model, including the basics of LoRA fine-tuning and uploading.

- Building and Curating Datasets for RLHF and LLM Fine-tuning ... - Learn about building and curating datasets for RLHF (Reinforcement Learning from Human Feedback) and LLM (Large Language Model) fine-tuning, with sponsorship by Argilla.

- Fine Tuning LLM (OpenAI GPT) with Custom Data in Python - YouTube - Explore how to extend LLM (OpenAI GPT) by fine-tuning it with a custom dataset to provide Q&A, summary, and other ChatGPT-like functions.

- LLaMA Efficient Tuning 🛠️: Easy-to-use LLM fine-tuning framework (LLaMA-2, BLOOM, Falcon).

- H2O LLM Studio 🛠️: Framework and no-code GUI for fine-tuning LLMs.

- PEFT 🛠️: Parameter-Efficient Fine-Tuning (PEFT) methods for efficient adaptation of pre-trained language models to downstream applications.

- ChatGPT-like model 🛠️: Run a fast ChatGPT-like model locally on your device.

- Petals: Run large language models like BLOOM-176B collaboratively, allowing you to load a small part of the model and team up with others for inference or fine-tuning. 🌸

- NVIDIA NeMo: A toolkit for building state-of-the-art conversational AI models, designed for Linux. 🚀

- H2O LLM Studio: A framework and no-code GUI tool for fine-tuning large language models on Windows. 🎛️

- Ludwig AI: A low-code framework for building custom LLMs and other deep neural networks. Easily train state-of-the-art LLMs with a declarative YAML configuration file. 🤖

- bert4torch: An elegant PyTorch implementation of transformers. Load various open-source large model weights for inference and fine-tuning. 🔥

- Alpaca.cpp: Run a fast ChatGPT-like model locally on your device. A combination of the LLaMA foundation model and an open reproduction of Stanford Alpaca for instruction fine-tuning. 🦙

- promptfoo: Evaluate and compare LLM outputs, catch regressions, and improve prompts using automatic evaluations and representative user inputs. 📊

- ML/AI Conversation: Neuro-Symbolic AI - an Alternative to LLM - This meetup will discuss the experience with fine-tuning LLMs and explore neuro-symbolic AI as an alternative.

- AI Dev Day - Seattle, Mon, Oct 30, 2023, 5:00 PM - A tech talk on effective LLM observability and fine-tuning opportunities using vector similarity search.

- DeepLearning.AI Events - A series of events including mitigating LLM hallucinations, fine-tuning LLMs with PyTorch 2.0 and ChatGPT, and AI education programs.

- AI Dev Day - New York, Thu, Oct 26, 2023, 5:30 PM - Tech talks on best practices in GenAI applications and using LLMs for real-time, personalized notifications.

- Chat LLMs & AI Agents - Use Gen AI to Build AI Systems and Agents - An event focusing on LLMs, AI agents, and chain data, with opportunities for interaction through event chat.

- NYC AI/LLM/ChatGPT Developers Group - Regular tech talks/workshops for developers interested in AI, LLMs, ChatGPT, NLP, ML, Data, etc.

- Leveraging LLMs for Enterprise Data, Tue, Nov 14, 2023, 2:00 PM - Dive into essential LLM strategies tailored for non-public data applications, including prompt engineering and retrieval.

- Bellevue Applied Machine Learning Meetup - A meetup focusing on applied machine learning techniques and improving the skills of data scientists and ML practitioners.

- AI & Prompt Engineering Meetup Munich, Thu, Oct 5, 2023, 6:15 PM - Introduces H2O LLM Studio for fine-tuning LLMs and brings together AI enthusiasts from various backgrounds.

- Seattle AI/ML/Data Developers Group - Tech talks on evaluating LLM agents and learning AI/ML/Data through practice.

- Data Science Dojo - DC | Meetup: This is a DC-based meetup group for business professionals interested in teaching, learning, and sharing knowledge and understanding of data science.

- Find Data Science Events & Groups in Dubai, AE: Discover data science events and groups in Dubai, AE, to connect with people who share your interests.

- AI Meetup (in-person): Generative AI and LLMs - Halloween Edition: Join this AI meetup for a tech talk about generative AI and Large Language Models (LLMs), including open-source tools and best practices.

- ChatGPT Unleashed: Live Demo and Best Practices for NLP: This online event explores fine-tuning hacks for Large Language Models and showcases the practical applications of ChatGPT and LLMs.

- Find Data Science Events & Groups in Pune, IN: Explore online or in-person events and groups related to data science in Pune, IN.

- DC AI/ML/Data Developers Group | Meetup: This group aims to bring together AI enthusiasts in the D.C. area to learn and practice AI technologies, including machine learning, deep learning, and data science.

- Boston AI/LLMs/ChatGPT Developers Group | Meetup: Join this group in Boston to learn and practice AI technologies like LLMs, ChatGPT, machine learning, deep learning, and data science.

- Paris NLP | Meetup: This meetup focuses on applications of natural language processing (NLP) in various fields, discussing techniques, research, and applications of both traditional and modern NLP approaches.

- SF AI/LLMs/ChatGPT Developers Group | Meetup: Connect with AI enthusiasts in the San Francisco/Bay area to learn and practice AI tech, including LLMs, ChatGPT, NLP, machine learning, deep learning, and data science.

- AI meetup (In-person): GenAI and LLMs for Health: Attend this tech talk about the application of LLMs in healthcare and learn about quick wins in using LLMs for health-related tasks.

- Fine tuning large LMs: Presentation discussing the process of fine-tuning large language models like GPT, BERT, and RoBERTa.

- LLaMa 2.pptx: Slides introducing LLaMa 2, the successor to the LLaMA large language model developed by Meta AI.

- LLM.pdf: Presentation exploring the role of Transformers in NLP, from BERT to GPT-3.

- Large Language Models Bootcamp: Bootcamp slides covering various aspects of large language models, including training from scratch and fine-tuning.

- The LHC Explained by CNN: Slides explaining the LHC (Large Hadron Collider) using CNN and fine-tuning image models.

- Using Large Language Models in 10 Lines of Code: Presentation demonstrating how to use large language models in just 10 lines of code.

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention.pdf: Slides discussing LLaMA-Adapter, an efficient technique for fine-tuning language models with zero-init attention.

- Intro to LLMs: Presentation providing an introduction to large language models, including base models and fine-tuning with prompt-completion pairs.

- LLM Fine-Tuning (東大松尾研LLM講座 Day5資料) - Speaker Deck: Day 5 materials from the University of Tokyo Matsuo Lab LLM course, a lecture on fine-tuning large language models given at the Matsuo Lab Summer School 2023 (東大松尾研サマースクール2023).

- Automate your Job and Business with ChatGPT #3: Presentation discussing the fundamentals of ChatGPT and its applications for job automation and business tasks.

- Unlocking the Power of Generative AI An Executive's Guide.pdf - A guide that explains the process of fine-tuning Large Language Models (LLMs) to tailor them to an organization's needs.

- Fine tune and deploy Hugging Face NLP models | PPT - A presentation that provides insights on how to build and deploy LLM models using Hugging Face NLP.

- 大規模言語モデル時代のHuman-in-the-Loop機械学習 (Human-in-the-Loop Machine Learning in the Era of Large Language Models) - Speaker Deck - A slide deck discussing the process of fine-tuning Language Models to find agreement among humans with diverse preferences.

- AI and ML Series - Introduction to Generative AI and LLMs | PPT - A presentation introducing Generative AI and LLMs, including their usage in specific applications.

- Retrieval Augmented Generation in Practice: Scalable GenAI ... - A presentation discussing use cases for Generative AI, limitations of Large Language Models, and the use of Retrieval Augmented Generation (RAG) and fine-tuning techniques.

- LLM presentation final | PPT - A presentation covering the Child & Family Agency Act 2013 and the Best Interest Principle in the context of LLMs.

- LLM Paradigm Adaptations in Recommender Systems.pdf - A PDF explaining the fine-tuning process and objective adaptations in LLM-based recommender systems.

- Conversational AI with Transformer Models | PPT - A presentation highlighting the use of Transformer Models in Conversational AI applications.

- Llama-index | PPT - A presentation on the rise of LLMs and building LLM-powered applications.

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention.pdf - A PDF discussing the efficient fine-tuning of Language Models with zero-init attention using LLaMA-Adapter.

- Practical AI: Machine Learning, Data Science 🎧 - Making artificial intelligence practical, productive, and accessible to everyone. Engage in lively discussions about AI, machine learning, deep learning, neural networks, and more. Accessible insights and real-world scenarios for both beginners and seasoned practitioners.

- Gradient Dissent: Exploring Machine Learning, AI, Deep Learning 🎧 - Go behind the scenes to learn from industry leaders about how they are implementing deep learning in real-world scenarios. Gain insights into the machine learning industry and stay updated with the latest trends.

- Weaviate Podcast 🎧 - Join Connor Shorten for the Weaviate Podcast series, featuring interviews with experts and discussions on AI-related topics.

- Latent Space: The AI Engineer Podcast — CodeGen, Agents, Computer Vision, Data Science, AI UX and all things Software 3.0 🎧 - Dive into the world of AI engineering, covering topics like code generation, computer vision, data science, and the latest advancements in AI UX.

- Unsupervised Learning 🎧 - Gain insights into the rapidly developing AI landscape and its impact on businesses and the world. Explore discussions on LLM applications, trends, and disrupting technologies.

- The TWIML AI Podcast (formerly This Week in Machine Learning) 🎧 - Dive deep into fine-tuning approaches used in AI, LLM capabilities and limitations, and learn from experts in the field.

- AI and the Future of Work on Apple Podcasts - A podcast hosted by SC Moatti discussing the impact of AI on the future of work.

- Practical AI: Machine Learning, Data Science: Fine-tuning vs RAG - This episode explores the comparison between fine-tuning and retrieval augmented generation in machine learning and data science.

- Unsupervised Learning on Apple Podcasts - Episode 20 features an interview with Anthropic CEO Dario Amodei on the future of AGI and AI.

- Papers Read on AI | Podcast on Spotify - This podcast keeps you updated with the latest trends and best performing architectures in the field of computer science.

- This Day in AI Podcast on Apple Podcasts - Covering various AI-related topics, this podcast offers exciting insights into the world of AI.

- All About Evaluating LLM Applications // Shahul Es // #179 MLOps - In this episode, Shahul Es shares his expertise on evaluation in open source models, including insights on debugging, troubleshooting, and benchmarks.

- AI Daily on Apple Podcasts - Hosted by Conner, Ethan, and Farb, this podcast explores fascinating AI-related stories.

- Yannic Kilcher Videos (Audio Only) | Podcast on Spotify - Yannic Kilcher discusses machine learning research papers, programming, and the broader impact of AI in society.

- LessWrong Curated Podcast | Podcast on Spotify - Audio version of the posts shared in the LessWrong Curated newsletter.

- SAI: The Security and AI Podcast on Apple Podcasts - An episode focused on OpenAI's cybersecurity grant program.

This initial version of the Awesome List was generated with the help of the Awesome List Generator. It's an open-source Python package that uses the power of GPT models to automatically curate and generate starting points for resource lists related to a specific topic.

Alternative AI tools for awesome-llms-fine-tuning

Similar Open Source Tools

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

awesome-generative-ai-guide

This repository serves as a comprehensive hub for updates on generative AI research, interview materials, notebooks, and more. It includes monthly best GenAI papers list, interview resources, free courses, and code repositories/notebooks for developing generative AI applications. The repository is regularly updated with the latest additions to keep users informed and engaged in the field of generative AI.

oreilly-hands-on-gpt-llm

This repository contains code for the O'Reilly Live Online Training for Deploying GPT & LLMs. Learn how to use GPT-4, ChatGPT, OpenAI embeddings, and other large language models to build applications for experimenting and production. Gain practical experience in building applications like text generation, summarization, question answering, and more. Explore alternative generative models such as Cohere and GPT-J. Understand prompt engineering, context stuffing, and few-shot learning to maximize the potential of GPT-like models. Focus on deploying models in production with best practices and debugging techniques. By the end of the training, you will have the skills to start building applications with GPT and other large language models.

llms-tools

The 'llms-tools' repository is a comprehensive collection of AI tools, open-source projects, and research related to Large Language Models (LLMs) and Chatbots. It covers a wide range of topics such as AI in various domains, open-source models, chats & assistants, visual language models, evaluation tools, libraries, devices, income models, text-to-image, computer vision, audio & speech, code & math, games, robotics, typography, bio & med, military, climate, finance, and presentation. The repository provides valuable resources for researchers, developers, and enthusiasts interested in exploring the capabilities of LLMs and related technologies.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

## 📝 Notebooks

A list of notebooks and articles related to large language models.

### Tools

| Notebook | Description |
|----------|-------------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.

MediaAI

MediaAI is a repository containing lectures and materials for Aalto University's AI for Media, Art & Design course. The course is a hands-on, project-based crash course focusing on deep learning and AI techniques for artists and designers. It covers common AI algorithms & tools, their applications in art, media, and design, and provides hands-on practice in designing, implementing, and using these tools. The course includes lectures, exercises, and a final project based on students' interests. Students can complete the course without programming by creatively utilizing existing tools like ChatGPT and DALL-E. The course emphasizes collaboration, peer-to-peer tutoring, and project-based learning. It covers topics such as text generation, image generation, optimization, and game AI.

AI-Engineer-Headquarters

AI Engineer Headquarters is a comprehensive learning resource designed to help individuals master scientific methods, processes, algorithms, and systems to build stories and models in the field of Data and AI. The repository provides in-depth content through video sessions and text materials, catering to individuals aspiring to be in the top 1% of Data and AI experts. It covers various topics such as AI engineering foundations, large language models, retrieval-augmented generation, fine-tuning LLMs, reinforcement learning, ethical AI, agentic workflows, and career acceleration. The learning approach emphasizes action-oriented drills and routines, encouraging consistent effort and dedication to excel in the AI field.

Mastering-NLP-from-Foundations-to-LLMs

This code repository is for the book 'Mastering NLP from Foundations to LLMs', which provides an in-depth introduction to Natural Language Processing (NLP) techniques. It covers mathematical foundations of machine learning, advanced NLP applications such as large language models (LLMs) and AI applications, as well as practical skills for working on real-world NLP business problems. The book includes Python code samples and expert insights into current and future trends in NLP.

awesome-RLAIF

Reinforcement Learning from AI Feedback (RLAIF) is a concept that describes a type of machine learning approach where **an AI agent learns by receiving feedback or guidance from another AI system**. This concept is closely related to the field of Reinforcement Learning (RL), which is a type of machine learning where an agent learns to make a sequence of decisions in an environment to maximize a cumulative reward.

In traditional RL, an agent interacts with an environment and receives feedback in the form of rewards or penalties based on the actions it takes. It learns to improve its decision-making over time to achieve its goals. In the context of Reinforcement Learning from AI Feedback, the AI agent still aims to learn optimal behavior through interactions, but **the feedback comes from another AI system rather than from the environment or human evaluators**. This can be **particularly useful in situations where it may be challenging to define clear reward functions or when it is more efficient to use another AI system to provide guidance**.

The feedback from the AI system can take various forms, such as:

- **Demonstrations**: The AI system provides demonstrations of desired behavior, and the learning agent tries to imitate these demonstrations.
- **Comparison Data**: The AI system ranks or compares different actions taken by the learning agent, helping it to understand which actions are better or worse.
- **Reward Shaping**: The AI system provides additional reward signals to guide the learning agent's behavior, supplementing the rewards from the environment.

This approach is often used in scenarios where the RL agent needs to learn from **limited human or expert feedback or when the reward signal from the environment is sparse or unclear**. It can also be used to **accelerate the learning process and make RL more sample-efficient**.

Reinforcement Learning from AI Feedback is an area of ongoing research and has applications in various domains, including robotics, autonomous vehicles, and game playing, among others.
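
The comparison-data form of AI feedback described in this entry can be illustrated with a small sketch. Everything here is an assumption made for illustration: `ai_labeler` is a hypothetical stand-in for a judge model (it simply prefers the shorter response), and the Bradley-Terry model is one common way to turn pairwise preferences into scalar scores, not something the awesome-RLAIF repository prescribes.

```python
import math
from itertools import combinations


def ai_labeler(response_a: str, response_b: str) -> int:
    """Hypothetical stand-in for a judge model.

    Returns 0 if response_a is preferred, 1 otherwise. The rule used here
    (prefer the shorter answer) is a toy assumption, not a real judge.
    """
    return 0 if len(response_a) <= len(response_b) else 1


def collect_comparisons(responses):
    """Ask the AI labeler about every pair; return (winner, loser) index pairs."""
    pairs = []
    for i, j in combinations(range(len(responses)), 2):
        preferred = ai_labeler(responses[i], responses[j])
        pairs.append((i, j) if preferred == 0 else (j, i))
    return pairs


def fit_bradley_terry(n_items, pairs, lr=0.1, steps=500):
    """Fit one scalar score per item by gradient ascent on the
    Bradley-Terry log-likelihood, where P(w beats l) = sigmoid(s_w - s_l)."""
    scores = [0.0] * n_items
    for _ in range(steps):
        grads = [0.0] * n_items
        for winner, loser in pairs:
            p_win = 1.0 / (1.0 + math.exp(-(scores[winner] - scores[loser])))
            # Gradient of log(p_win) with respect to each score.
            grads[winner] += 1.0 - p_win
            grads[loser] -= 1.0 - p_win
        scores = [s + lr * g for s, g in zip(scores, grads)]
    return scores


# Toy candidate responses; the labeler above ranks them by length.
responses = [
    "Paris.",
    "The capital of France is Paris.",
    "That is a long story, but in the end the answer is Paris.",
]
pairs = collect_comparisons(responses)
scores = fit_bradley_terry(len(responses), pairs)
for text, score in sorted(zip(responses, scores), key=lambda t: -t[1]):
    print(f"{score:+.2f}  {text}")
```

In a real RLAIF setup the fitted scores would typically be replaced by a learned reward model over text, and that reward would then drive a policy-optimization step. The sketch only shows how AI-generated comparisons become a reward-like signal.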

god-level-ai

A drill of scientific methods, processes, algorithms, and systems to build stories & models. An in-depth learning resource for humans. This is a drill for people who aim to be in the top 1% of Data and AI experts. The repository provides a routine for deep and shallow work sessions, covering topics from Python to AI/ML System Design and Personal Branding & Portfolio. It emphasizes the importance of continuous effort and action in the tech field.

start-machine-learning

Start Machine Learning in 2024 is a comprehensive guide for beginners to advance in machine learning and artificial intelligence without any prior background. The guide covers various resources such as free online courses, articles, books, and practical tips to become an expert in the field. It emphasizes self-paced learning and provides recommendations for learning paths, including videos, podcasts, and online communities. The guide also includes information on building language models and applications, practicing through Kaggle competitions, and staying updated with the latest news and developments in AI. The goal is to empower individuals with the knowledge and resources to excel in machine learning and AI.

ai_gallery

AI Gallery is a showcase site built using React and Next.js for static site generation, featuring interactive visualizations of classic algorithms, implementations of classic games, and various interesting widgets. The project utilizes AI assistance from Claude 3.5 and GPT-4 to create components and enhance the development process. It aims to continually add more components with AI assistance, providing a platform for contributors to leverage AI in frontend development.

SuperKnowa

SuperKnowa is a fast framework to build Enterprise RAG (Retrieval-Augmented Generation) Pipelines at Scale, powered by watsonx. It accelerates Enterprise Generative AI applications to get prod-ready solutions quickly on private data. The framework provides pluggable components for tackling various Generative AI use cases using Large Language Models (LLMs), allowing users to assemble building blocks to address challenges in AI-driven text generation. SuperKnowa is battle-tested from 1M to 200M private knowledge base & scaled to billions of retriever tokens.

awesome-ai

Awesome AI is a curated list of artificial intelligence resources including courses, tools, apps, and open-source projects. It covers a wide range of topics such as machine learning, deep learning, natural language processing, robotics, conversational interfaces, data science, and more. The repository serves as a comprehensive guide for individuals interested in exploring the field of artificial intelligence and its applications across various domains.

For similar tasks

Gemini

Gemini is an open-source model designed to handle multiple modalities such as text, audio, images, and videos. It utilizes a transformer architecture with special decoders for text and image generation. The model processes input sequences by transforming them into tokens and then decoding them to generate image outputs. Gemini differs from other models by directly feeding image embeddings into the transformer instead of using a visual transformer encoder. The model also includes a component called Codi for conditional generation. Gemini aims to effectively integrate image, audio, and video embeddings to enhance its performance.

aligner

Aligner is a model-agnostic alignment tool that learns correctional residuals between preferred and dispreferred answers using a small model. It can be directly applied to various open-source and API-based models with only one-off training, suitable for rapid iteration and improving model performance. Aligner has shown significant improvements in helpfulness, harmlessness, and honesty dimensions across different large language models.

prompt-tuning-playbook

The LLM Prompt Tuning Playbook is a comprehensive guide for improving the performance of post-trained Language Models (LLMs) through effective prompting strategies. It covers topics such as pre-training vs. post-training, considerations for prompting, a rudimentary style guide for prompts, and a procedure for iterating on new system instructions. The playbook emphasizes the importance of clear, concise, and explicit instructions to guide LLMs in generating desired outputs. It also highlights the iterative nature of prompt development and the need for systematic evaluation of model responses.

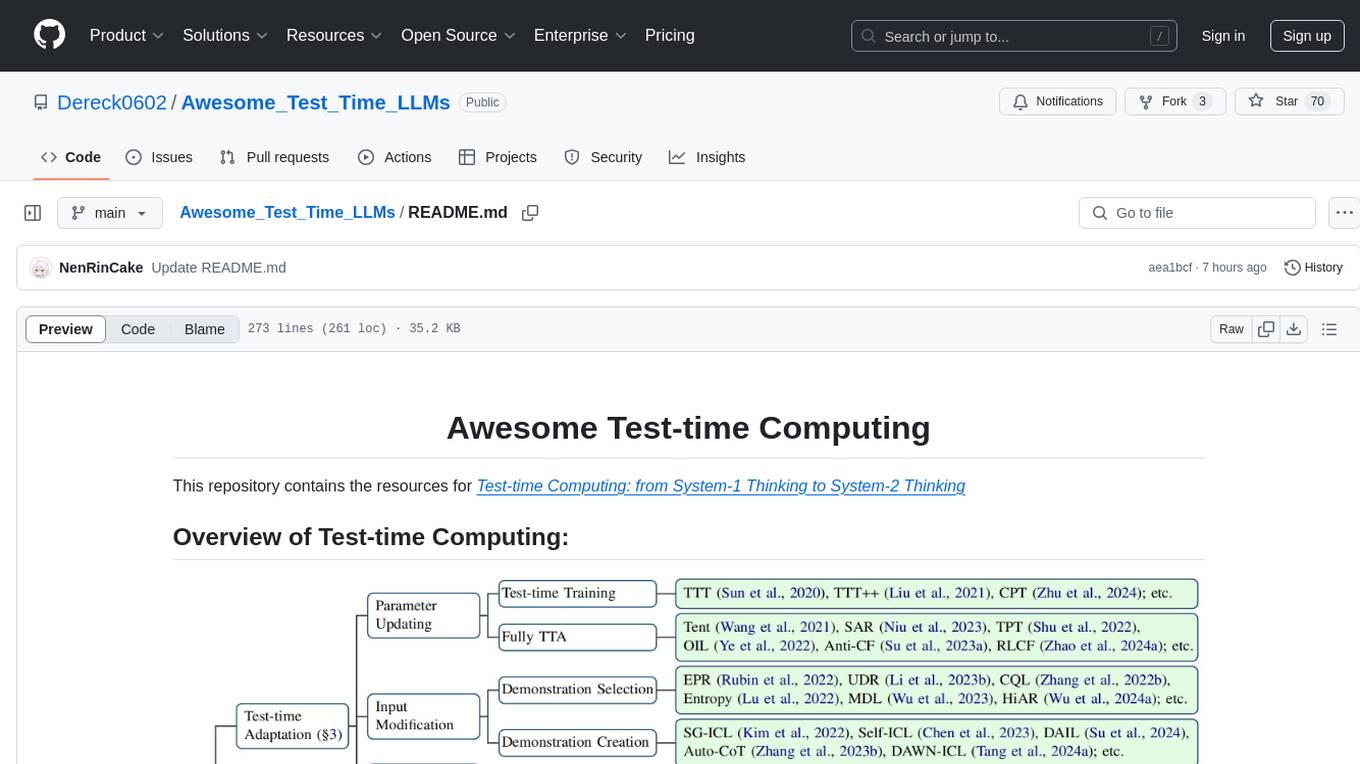

Awesome_Test_Time_LLMs

This repository focuses on test-time computing, exploring various strategies such as test-time adaptation, modifying the input, editing the representation, calibrating the output, test-time reasoning, and search strategies. It covers topics like self-supervised test-time training, in-context learning, activation steering, nearest neighbor models, reward modeling, and multimodal reasoning. The repository provides resources including papers and code for researchers and practitioners interested in enhancing the reasoning capabilities of large language models.

HuaTuoAI

HuaTuoAI is an artificial intelligence image classification system specifically designed for traditional Chinese medicine. It utilizes deep learning techniques, such as Convolutional Neural Networks (CNN), to accurately classify Chinese herbs and ingredients based on input images. The project aims to unlock the secrets of plants, depict the unknown realm of Chinese medicine using technology and intelligence, and perpetuate ancient cultural heritage.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

training-operator

Kubeflow Training Operator is a Kubernetes-native project for fine-tuning and scalable distributed training of machine learning (ML) models created with various ML frameworks such as PyTorch, TensorFlow, XGBoost, MPI, Paddle and others. Training Operator allows you to use Kubernetes workloads to effectively train your large models via Kubernetes Custom Resources APIs or using the Training Operator Python SDK.

> Note: Before the v1.2 release, Kubeflow Training Operator only supported TFJob on Kubernetes.

* For a complete reference of the custom resource definitions, please refer to the API Definition.
  * TensorFlow API Definition
  * PyTorch API Definition
  * Apache MXNet API Definition
  * XGBoost API Definition
  * MPI API Definition
  * PaddlePaddle API Definition
* For details of the all-in-one operator design, please refer to the All-in-one Kubeflow Training Operator.
* For details on its observability, please refer to the monitoring design doc.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in an NVIDIA GPU ("N card") version. It supports knowledge-base chat via fastgpt as part of a complete LLM stack ([fastgpt] + [one-api] + [Xinference]); replying to bilibili live-stream bullet comments (danmaku) and greeting viewers who enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through Vtuber Studio; image generation with stable-diffusion-webui, output to the OBS live room; NSFW screening of generated images with public-NSFW-y-distinguish; search and image search through duckduckgo (requires a proxy to get around network restrictions) and image search through Baidu (no proxy required); an AI reply chat box [html plug-in]; AI singing with Auto-Convert-Music; a playlist [html plug-in]; dancing, expression video playback, head-patting actions, and gift-throwing actions; automatic dancing when singing starts and automatically looping sway motions during chat and singing; multi-scene switching, background music switching, and automatic day/night scene switching; and open-ended singing and drawing requests, with the AI judging the content automatically.