d-Matrix

Transforming AI Economics with Ultra-Low Latency Inference

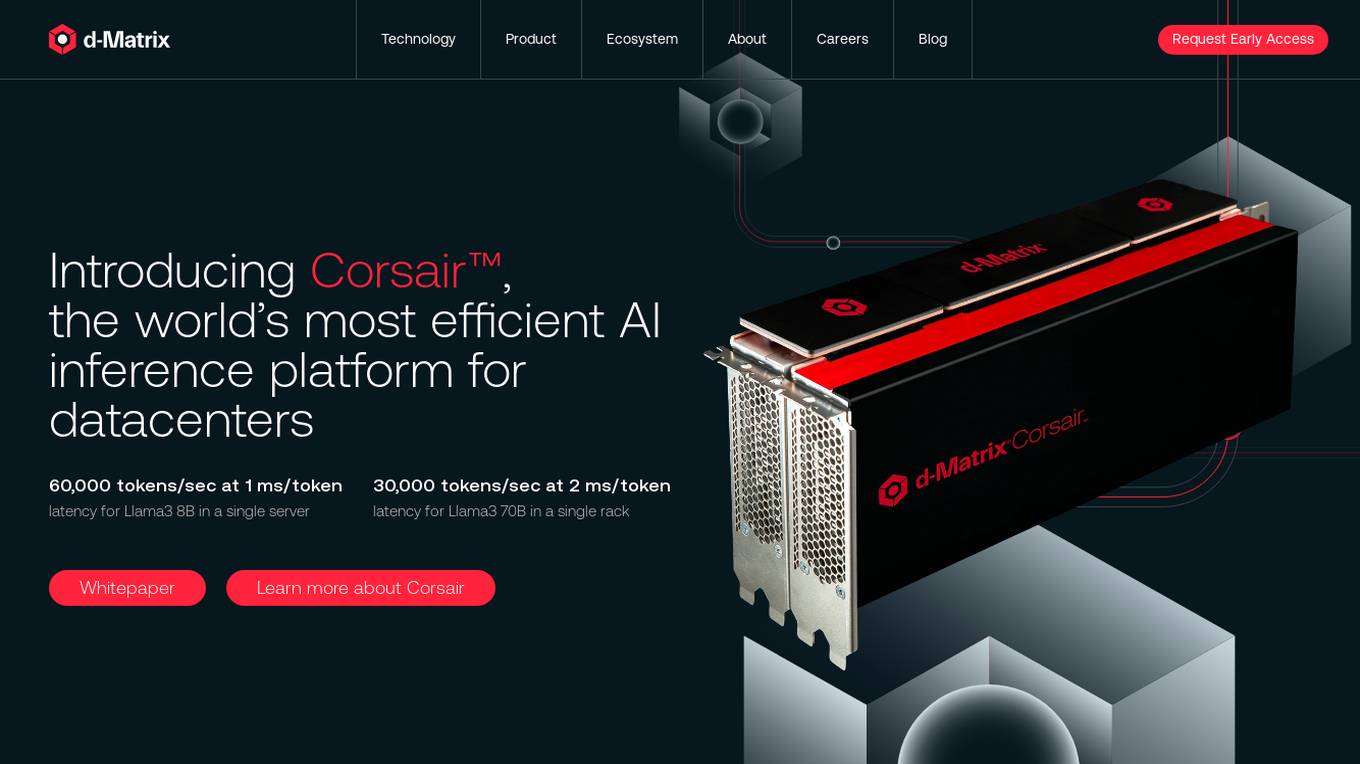

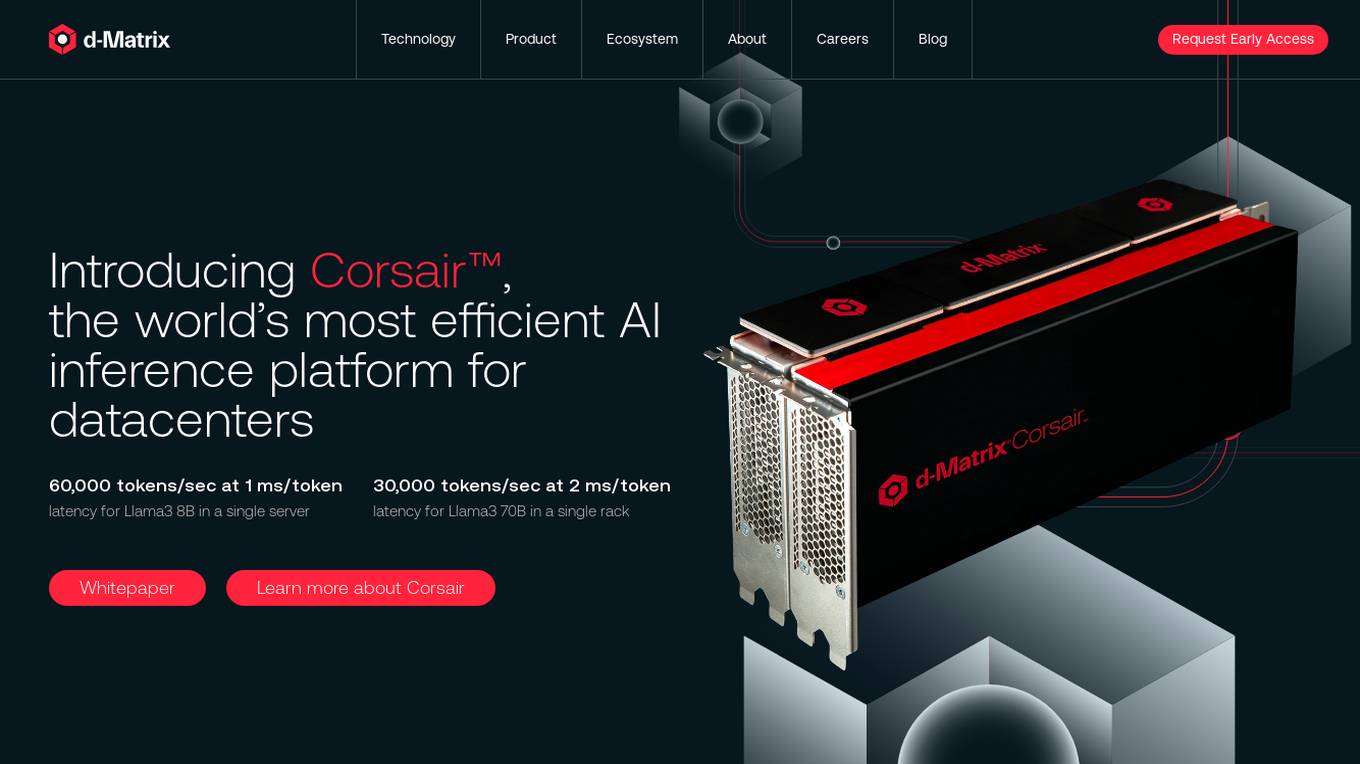

d-Matrix is an AI hardware company that offers ultra-low latency batched inference for generative AI. It introduces Corsair™, which it describes as the world's most efficient AI inference platform for datacenters, providing high performance, efficiency, and scalability for large-scale inference tasks. The company aims to transform the economics of AI inference by delivering fast, sustainable, and scalable AI solutions without compromising on speed or usability.

Alternative AI tools for d-Matrix

Similar sites

Groq

Groq is a fast AI inference provider that offers the GroqCloud™ Platform and GroqRack™ Cluster for developers to build and deploy AI models with ultra-low-latency inference. It serves openly available models such as Llama 3.1, Mixtral, Gemma, and Whisper, and is compatible with other providers like OpenAI. Groq cites independent benchmarks in support of its speed claims. The company has gained recognition in the AI chip industry and has received significant funding, positioning itself as a challenger to established players like Nvidia.

Wallaroo.AI

Wallaroo.AI is an AI inference platform that offers production-grade AI inference microservices optimized on OpenVINO for cloud and Edge AI application deployments on CPUs and GPUs. It provides hassle-free AI inferencing for any model, any hardware, anywhere, with ultrafast turnkey inference microservices. The platform enables users to deploy, manage, observe, and scale AI models effortlessly, reducing deployment costs and time-to-value significantly.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

Anycores

Anycores is an AI tool designed to optimize the performance of deep neural networks and reduce the cost of running AI models in the cloud. It offers automated tuning, inference consultation, a zoo of optimized networks, and a platform for reducing AI model cost. Anycores focuses on faster execution, claiming to reduce inference time by more than 10x, and on footprint reduction during model deployment. It is device agnostic, supporting Nvidia and AMD GPUs, Intel, ARM, and AMD CPUs, servers, and edge devices. The tool aims to provide highly optimized, low-footprint networks tailored to specific deployment scenarios.

Modular

Modular is a fast, scalable Gen AI inference platform that offers a comprehensive suite of tools and resources for AI development and deployment. It provides solutions for AI model development, deployment options, AI inference, research, and resources like documentation, models, tutorials, and step-by-step guides. Modular supports GPU and CPU performance, intelligent scaling to any cluster, and offers deployment options for various editions. The platform enables users to build agent workflows, utilize AI retrieval and controlled generation, develop chatbots, engage in code generation, and improve resource utilization through batch processing.

Nscale

Nscale is a full-stack, scalable, and sustainable AI cloud platform that offers a wide range of AI services and solutions. It provides services for developing, training, tuning, and deploying AI models using on-demand services. Nscale also offers serverless inference API endpoints, fine-tuning capabilities, private cloud solutions, and various GPU clusters engineered for AI. The platform aims to simplify the journey from AI model development to production, offering a marketplace for AI/ML tools and resources. Nscale's infrastructure includes data centers powered by renewable energy, high-performance GPU nodes, and optimized networking and storage solutions.

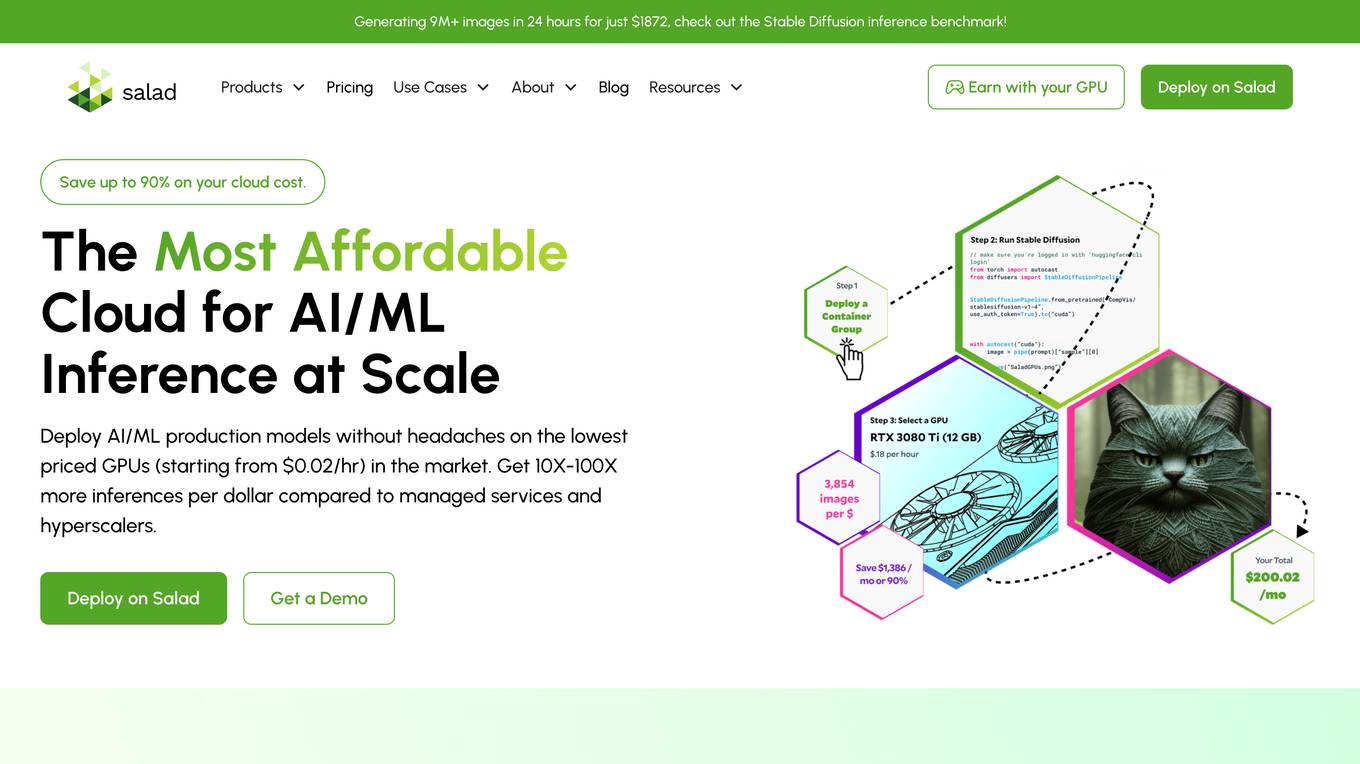

Salad

Salad is a distributed GPU cloud platform that offers fully managed and massively scalable services for AI applications. It advertises the lowest-priced AI transcription on the market and supports workloads such as image generation, voice AI, computer vision, data collection, and batch processing. Salad democratizes cloud computing by leveraging consumer GPUs to deliver cost-effective AI/ML inference at scale. The platform is trusted by hundreds of machine learning and data science teams for its affordability, scalability, and ease of deployment.

Embedl

Embedl is an AI tool that specializes in developing advanced solutions for efficient AI deployment in embedded systems. With a focus on deep learning optimization, Embedl offers a cost-effective solution that reduces energy consumption and accelerates product development cycles. The platform caters to industries such as automotive, aerospace, and IoT, providing cutting-edge AI products that drive innovation and competitive advantage.

SambaNova Systems

SambaNova Systems offers an enterprise-grade full stack platform, from chips to models, purpose-built for generative AI in the enterprise. It provides state-of-the-art AI and deep learning capabilities to help customers outcompete their peers. The platform includes the SN40L Full Stack Platform with 1T+ parameter models, Composition of Experts, and Samba Apps. SambaNova also offers resources to accelerate AI journeys and solutions for industries such as financial services, healthcare, and manufacturing.

Galileo AI

Galileo AI is a platform that offers automated evaluations for AI applications so that teams can ship reliably and with confidence. It claims to eliminate 80% of evaluation time by replacing manual reviews with high-accuracy metrics, enabling rapid iteration, real-time protection, and end-to-end visibility into agent completions. Galileo also allows developers to take control of AI complexity, de-risk AI in production, and deploy AI applications flexibly across different environments. The platform is trusted by enterprises and loved by developers for its accuracy, low latency, and ability to run on L4 GPUs.

NeuReality

NeuReality is an AI-centric solution designed to democratize AI adoption by providing purpose-built tools for deploying and scaling inference workflows. Their innovative AI-centric architecture combines hardware and software components to optimize performance and scalability. The platform offers a one-stop shop for AI inference, addressing barriers to AI adoption and streamlining computational processes. NeuReality's tools enable users to deploy, afford, use, and manage AI more efficiently, making AI easy and accessible for a wide range of applications.

NetMind

NetMind is an AI tool that offers a Model Library, Enterprise AI Solutions, and AI Consulting services. It provides cutting-edge inference capabilities, model APIs for various data types, and GPU clusters for accelerated performance. The platform allows rapid deployment of models with flexible scaling options. NetMind caters to a wide range of industries, offering solutions that enhance accuracy, cut costs, and accelerate decision-making processes.

Novita AI

Novita AI is an AI cloud platform offering Model APIs, Serverless, and GPU Instance services in a cost-effective and integrated manner to accelerate AI businesses. It provides optimized models for high-quality dialogue use cases, full spectrum AI APIs for image, video, audio, and LLM applications, serverless auto-scaling based on demand, and customizable GPU solutions for complex AI tasks. The platform also includes a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable services.

For similar tasks

DARROW

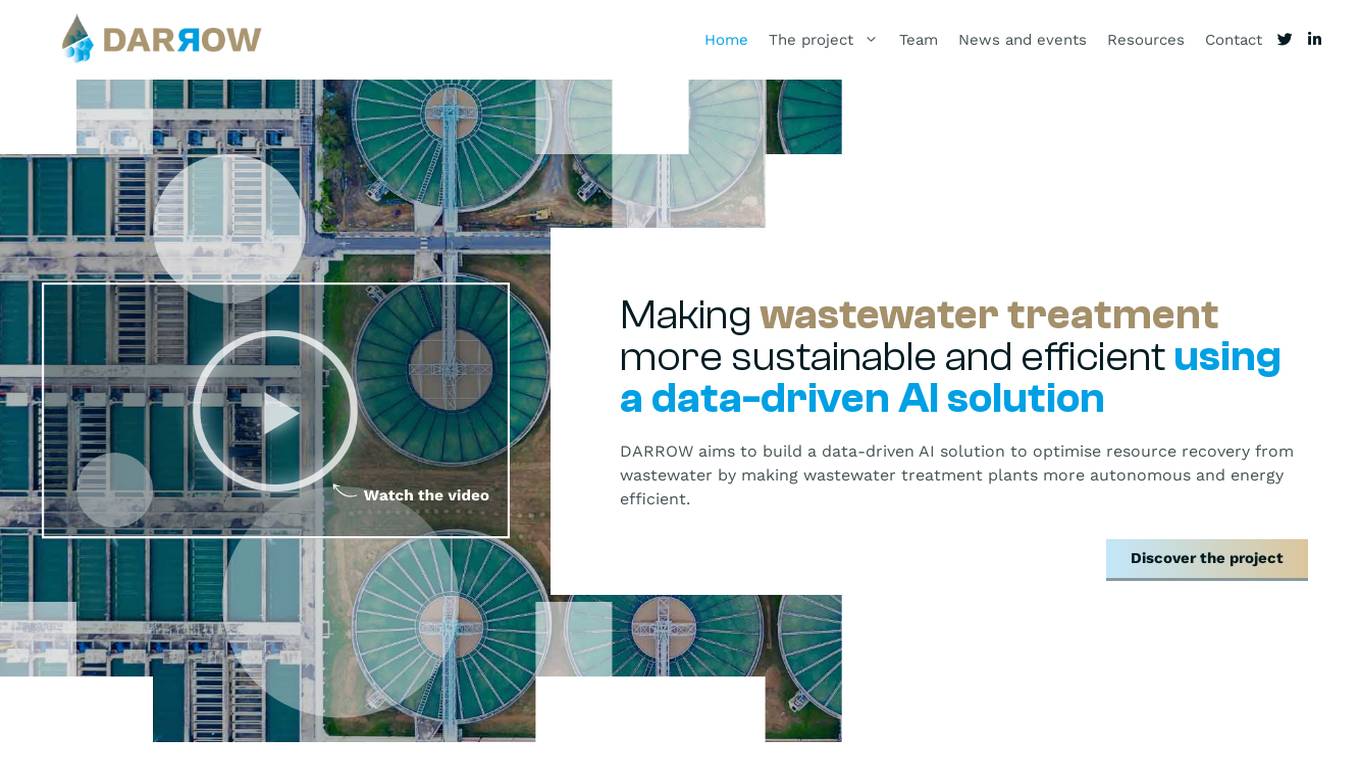

The website focuses on the DARROW project, which aims to make wastewater treatment more sustainable and efficient using a data-driven AI solution. It brings together experts from various disciplines to optimize resource recovery from wastewater, reduce energy consumption, and contribute to a circular economy. The project utilizes AI to enhance operational efficiency in wastewater treatment plants and explores innovative solutions for cleaner water management.

For similar jobs

Neurelo

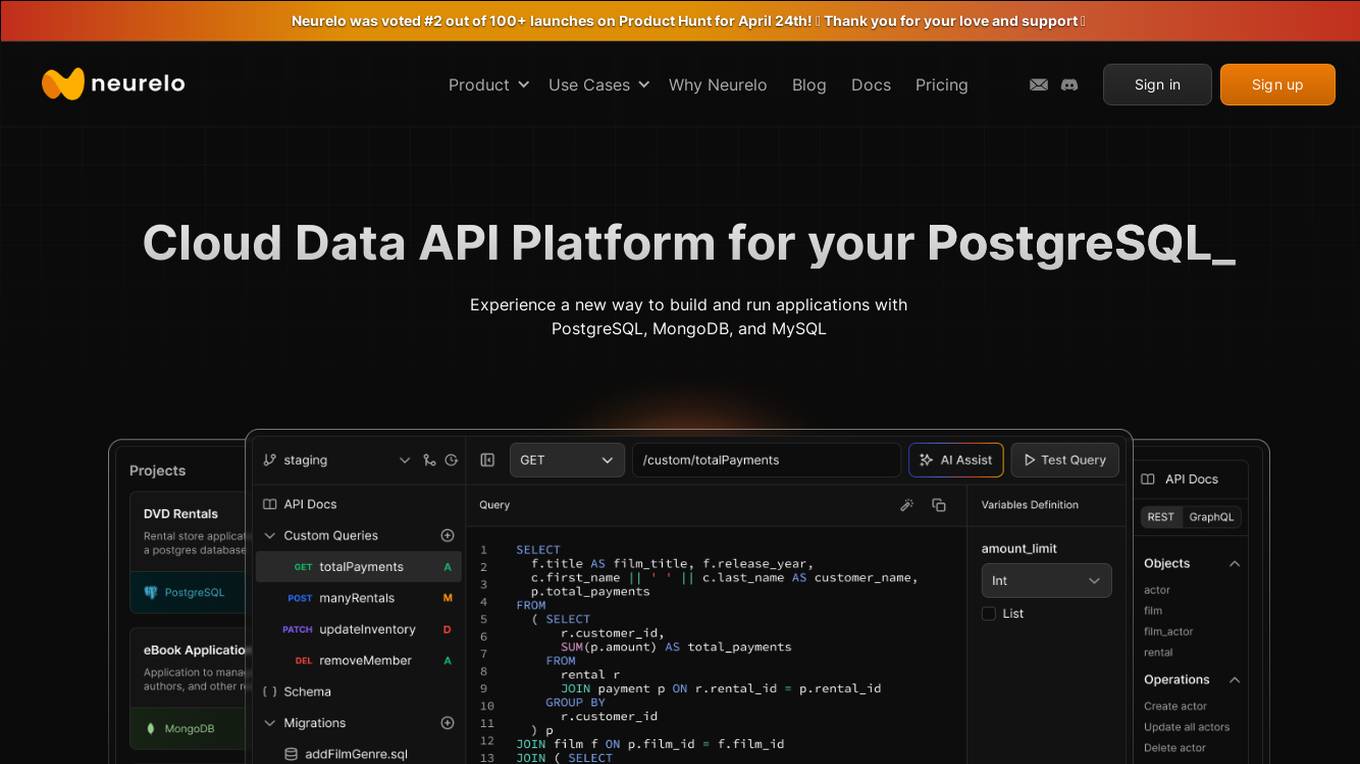

Neurelo is a cloud API platform that offers services for PostgreSQL, MongoDB, and MySQL. It provides features such as auto-generated APIs, custom query APIs with AI assistance, query observability, schema as code, and the ability to build full-stack applications in minutes. Neurelo aims to empower developers by simplifying database programming complexities and enhancing productivity. The platform leverages the power of cloud technology, APIs, and AI to offer a seamless and efficient way to build and run applications.

STELLARWITS

STELLARWITS is an AI solutions and software platform that empowers users to explore cutting-edge technology and innovation. The platform offers AI models with versatile capabilities, ranging from content generation to data analysis to problem-solving. Users can engage directly with the technology, experiencing its power in real-time. With a focus on transforming ideas into technology, STELLARWITS provides tailored solutions in software and AI development, delivering intelligent systems and machine learning models for innovative and efficient solutions. The platform also features a download hub with a curated selection of solutions to enhance the digital experience. Through blogs and company information, users can delve deeper into the narrative of STELLARWITS, exploring its mission, vision, and commitment to reshaping the tech landscape.

FPrime AI

FPrime AI is an AI application that aims to redefine Artificial Intelligence for enterprises by bridging the AI gap and empowering organizations of all sizes to leverage the transformative potential of AI. The application addresses common challenges such as the lack of AI vision, difficulty in finding and retaining AI talent, and data dilemmas. FPrime AI follows a customer-centric approach to tailor AI solutions, provide continuous support, and democratize access to AI knowledge and applications. The solution includes advanced AI technologies, automation tools, and analytics capabilities, with a team of AI experts and domain specialists collaborating closely with clients to address industry-specific needs and goals.

Cohere

Cohere is the leading AI platform for enterprise, offering products optimized for generative AI, search and discovery, and advanced retrieval. Their models are designed to enhance the global workforce, enabling businesses to thrive in the AI era. Cohere provides Command R+, Cohere Command, Cohere Embed, and Cohere Rerank for building efficient AI-powered applications. The platform also offers deployment options for enterprise-grade AI on any cloud or on-premises, along with developer resources like Playground, LLM University, and Developer Docs.

Provectus AI Solutions

Provectus is an Artificial Intelligence consultancy and solutions provider that helps businesses achieve their objectives through AI. They offer AI solutions that can be integrated into organizations through use case and platform approaches. Their solutions differentiate themselves by offering no license fees, open and certified architecture, cloud-native but vendor-agnostic solutions, turnkey solutions, and AI consulting and customization services. Provectus has successfully transformed industries such as insurance underwriting, digital banking, retail, healthcare, and manufacturing through their Generative AI technology.

AI Startup Ventures

The website focuses on investing in startups, leading rounds from seed to growth, and sponsoring an accelerator for seed-stage AI companies. It also runs a supercomputer for AI startups and has made investments in various companies. The team includes Nat Friedman, Daniel Gross, Hersh Desai, and Lenny Bogdonoff. In addition to investments, the team gives grants to open source AI projects and is involved in other activities such as reading the Herculaneum papyri and building a city in North America.

Maven

Maven is an AI-powered platform offering live, online courses for professionals in various fields such as product design, engineering, marketing, business, leadership, and investing. The platform provides interactive cohorts with high student engagement, hands-on projects, and up-to-date case studies to help professionals acquire practical skills for immediate application in their jobs. Maven's curated frameworks and expert feedback aim to accelerate career growth by condensing years of operating experience into weeks. With a focus on AI-related courses, Maven offers certifications and training programs to help individuals confidently build AI products and lead AI organizations.

Pinecone

Pinecone is a vector database designed to build knowledgeable AI applications. It offers a serverless platform with high capacity and low cost, enabling users to perform low-latency vector search for various AI tasks. Pinecone is easy to start and scale, allowing users to create an account, upload vector embeddings, and retrieve relevant data quickly. The platform combines vector search with metadata filters and keyword boosting for better application performance. Pinecone is secure, reliable, and cloud-native, making it suitable for powering mission-critical AI applications.

SingleStore

SingleStore is a real-time data platform designed for apps, analytics, and gen AI. It offers faster hybrid vector + full-text search, fast-scaling integrations, and a free tier. SingleStore can read, write, and reason on petabyte-scale data in milliseconds. It supports streaming ingestion, high concurrency, first-class vector support, record lookups, and more.

LangChain

LangChain is an AI tool that offers a suite of products supporting developers in the LLM application lifecycle. It provides a framework to construct LLM-powered apps easily, visibility into app performance, and a turnkey solution for serving APIs. LangChain enables developers to build context-aware, reasoning applications and future-proof their applications by incorporating vendor optionality. LangSmith, a part of LangChain, helps teams improve accuracy and performance, iterate faster, and ship new AI features efficiently. The tool is designed to drive operational efficiency, increase discovery & personalization, and deliver premium products that generate revenue.

Vapi

Vapi is a Voice AI tool designed specifically for developers. It enables developers to interact with their code using voice commands, making the coding process more efficient and hands-free. With Vapi, developers can perform various tasks such as writing code, debugging, and running tests simply by speaking. The tool is equipped with advanced natural language processing capabilities to accurately interpret and execute voice commands. Vapi aims to revolutionize the way developers work by providing a seamless and intuitive coding experience.

Senior AI

Senior AI is a platform that leverages Artificial Intelligence to help individuals and companies develop and manage software products more efficiently and securely. It offers codebase awareness, bug analysis, security optimization, and productivity enhancements, making software development faster and more reliable. The platform provides different pricing tiers suitable for individuals, power users, small teams, growing teams, and large teams, with the option for enterprise solutions. Senior AI aims to supercharge software development with an AI-first approach, guiding users through the development process and providing tailored code suggestions and security insights.

System1

System1 is an industry-leading responsive acquisition marketing platform powered by AI and machine learning. Their Responsive Acquisition Marketing Platform (RAMP) is omni-channel and omni-vertical, designed for a privacy-centric world. It enables building powerful brands, developing privacy-focused products, and delivering high-intent customers to advertising partners. System1 is a technology company with a team of engineers, product managers, data scientists, and experts in the field. They focus on Best-In-Class hiring and business acquisition to achieve their ambitious goals.

Stack Overflow Blog

The Stack Overflow Blog is a platform that provides insights, updates, and discussions on various topics related to software development, technology, AI/ML, and career advice. It offers a space for developers and technologists to collaborate, share knowledge, and engage with the community. The blog covers a wide range of subjects, including product releases, podcast episodes, and industry trends. Users can explore articles, podcasts, and announcements to stay informed and connected with the tech community.

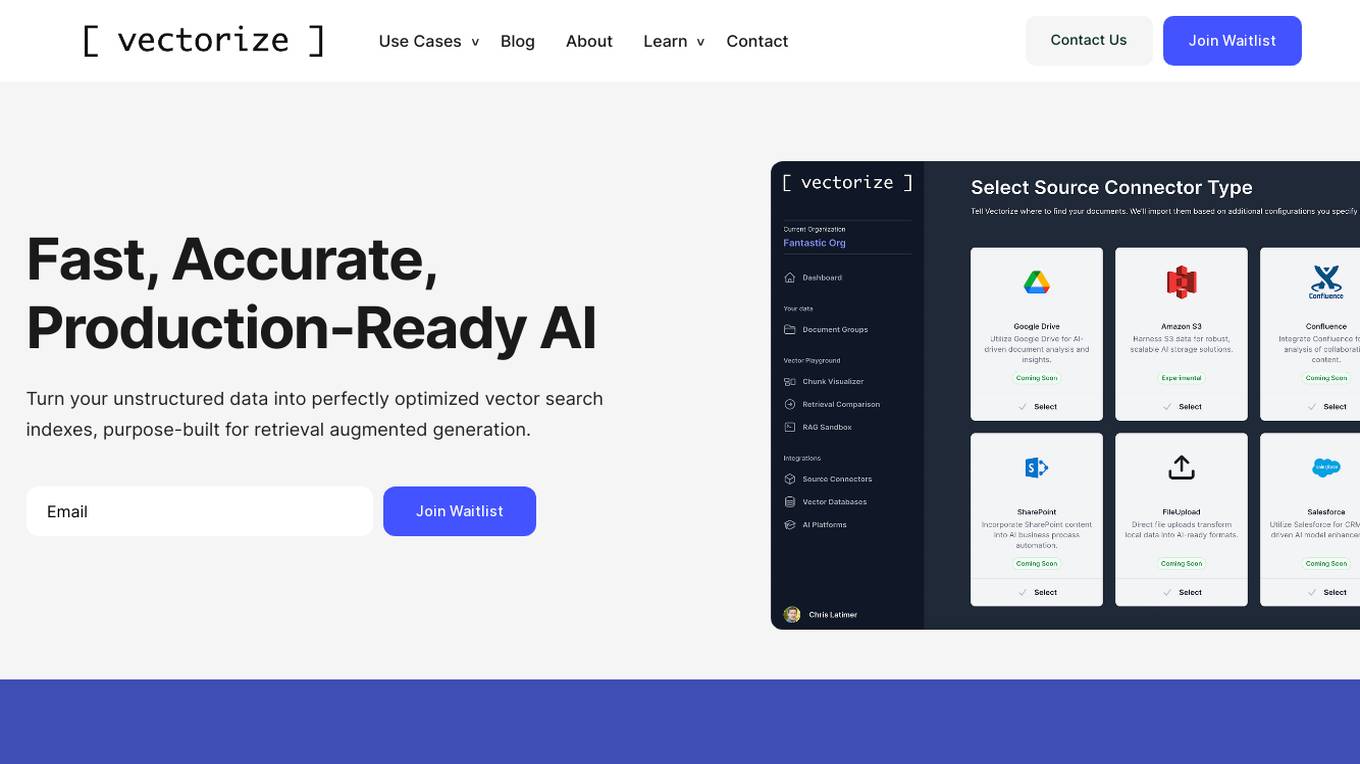

Vectorize

Vectorize is a fast, accurate, and production-ready AI tool that helps users turn unstructured data into optimized vector search indexes. It leverages Large Language Models (LLMs) to create copilots and enhance customer experiences by extracting natural language from various sources. With built-in support for top AI platforms and a variety of embedding models and chunking strategies, Vectorize enables users to deploy real-time vector pipelines for accurate search results. The tool also offers out-of-the-box connectors to popular knowledge repositories and collaboration platforms, making it easy to transform knowledge into AI-generated content.

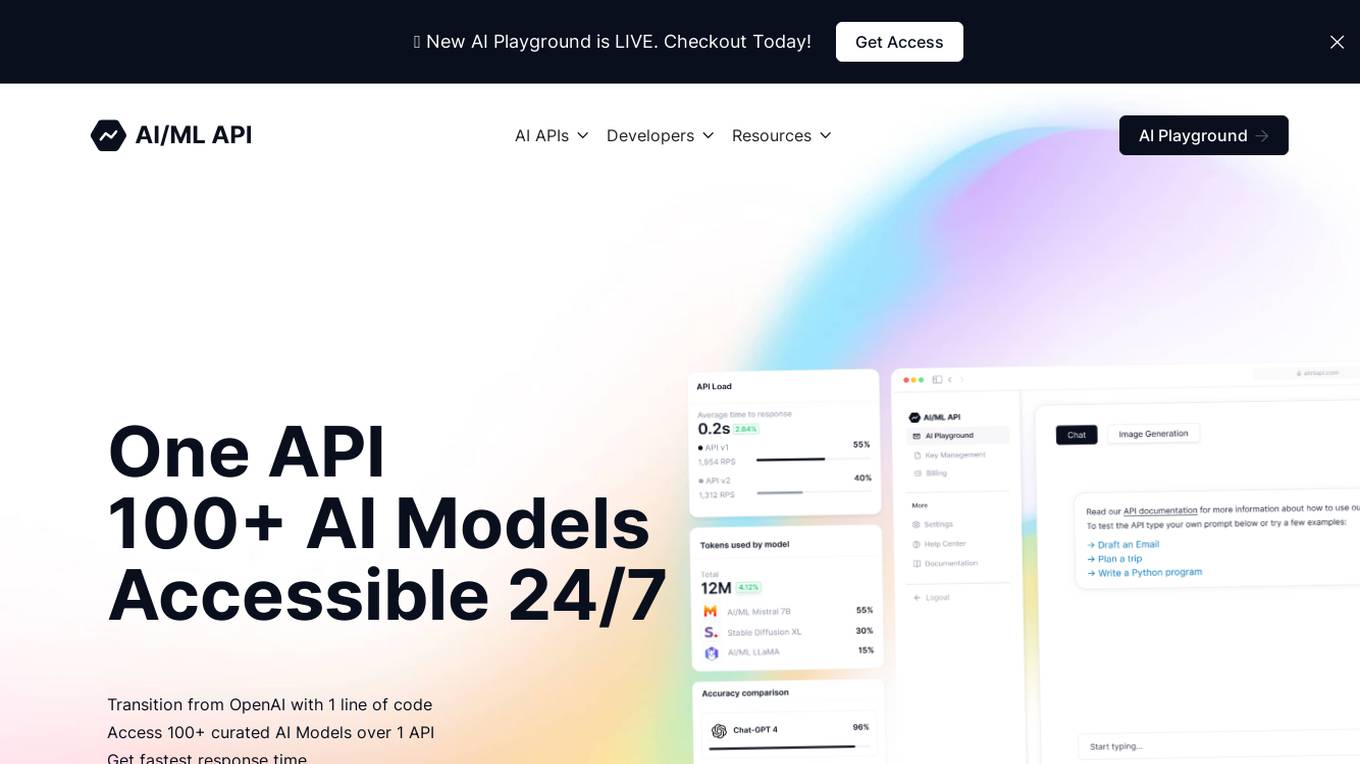

aimlapi.com

aimlapi.com is an AI tool that offers 100+ AI models accessible via one API. It provides developers with a wide range of AI models for various tasks such as chat, language, image generation, and code processing. The platform is designed to be user-friendly, cost-efficient, and scalable, making it suitable for developers of all levels. With a focus on transparency, affordability, and compatibility with OpenAI, aimlapi.com aims to provide high-quality AI solutions to its users.

Databricks

Databricks is a data and AI company that offers a Data Intelligence Platform to help users succeed with AI by developing generative AI applications, democratizing insights, and driving down costs. The platform maintains data lineage, quality, control, and privacy across the entire AI workflow, enabling users to create, tune, and deploy generative AI models. Databricks caters to industry leaders, providing tools and integrations to speed up success in data and AI. The company offers resources such as support, training, and community engagement to help users succeed in their data and AI journey.

Grok-1.5

The website features Grok-1.5, an AI application that bridges the gap between the digital and physical worlds through its multimodal model. Grok-1.5 boasts enhanced reasoning capabilities and a context length of 128,000 tokens. Additionally, the platform offers PromptIDE, an IDE for prompt engineering and interpretability research, allowing users to create and share complex prompts in Python. Grok, an AI modeled after the Hitchhiker’s Guide to the Galaxy, is also available on the site, providing answers to a wide range of questions and even suggesting relevant queries. The platform aims to facilitate knowledge sharing and exploration through advanced AI technologies.

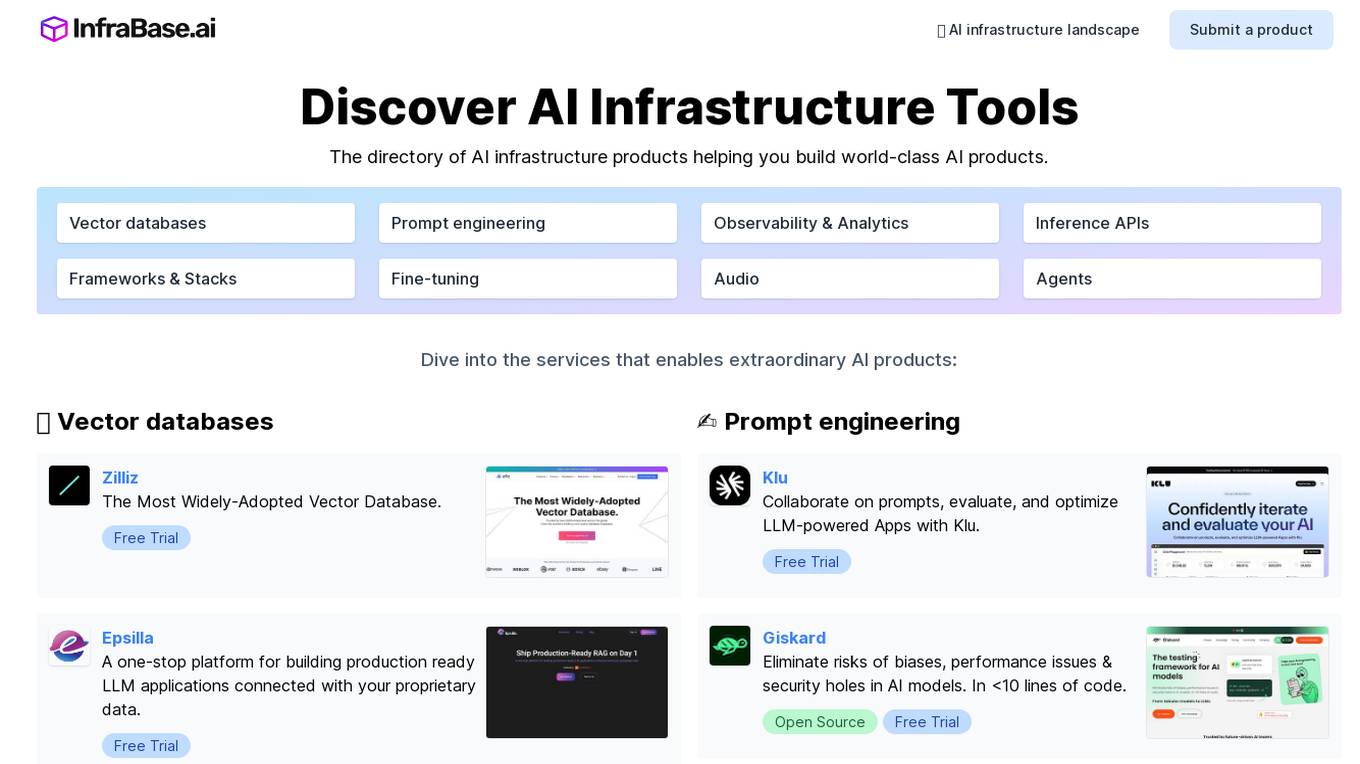

Infrabase.ai

Infrabase.ai is a directory of AI infrastructure products that helps users discover and explore a wide range of tools for building world-class AI products. The platform offers a comprehensive directory of products in categories such as Vector databases, Prompt engineering, Observability & Analytics, Inference APIs, Frameworks & Stacks, Fine-tuning, Audio, and Agents. Users can find tools for tasks like data storage, model development, performance monitoring, and more, making it a valuable resource for AI projects.

Derwen

Derwen is an open-source integration platform for production machine learning in the enterprise, specializing in natural language processing, graph technologies, and decision support. It offers expertise in developing knowledge graph applications and domain-specific authoring. Derwen collaborates closely with Hugging Face and provides strong data privacy guarantees, a low carbon footprint, and no cloud vendor involvement. Since 2017, the platform has aimed to give AI engineers and domain experts quality, time-to-value, and ownership.

Union.ai

Union.ai is an infrastructure platform designed for AI, ML, and data workloads. It offers a scalable MLOps platform that optimizes resources, reduces costs, and fosters collaboration among team members. Union.ai provides features such as declarative infrastructure, data lineage tracking, accelerated datasets, and more to streamline AI orchestration on Kubernetes. It aims to simplify the management of AI, ML, and data workflows in production environments by addressing complexities and offering cost-effective strategies.

SoundHound AI

SoundHound AI is a global leader in conversational intelligence, providing voice AI solutions for businesses to offer exceptional conversational experiences to their customers. Their proprietary technology enables best-in-class speed and accuracy in multiple languages across automotive, TV, IoT, and customer service industries. SoundHound offers innovative AI-driven products like Smart Answering, Smart Ordering, and Dynamic Interaction™, a real-time customer service interface. With SoundHound Chat AI, a powerful voice assistant integrated with Generative AI, the company powers millions of products and services, handling billions of interactions annually for top-tier businesses.

Attri

Attri is a leading Generative AI application specialized in custom AI solutions for enterprises. It harnesses the power of Generative AI and Foundation Models to drive innovation and accelerate digital transformation. Attri offers a range of AI solutions for various industries, focusing on responsible AI deployment and ethical innovation.

ALIAgents.ai

ALIAgents.ai is a platform that enables users to create and monetize AI agents on the blockchain. Users can design and deploy their own AI agents for various tasks such as customer service, data analysis, and more. The platform provides tools and resources to facilitate the development and deployment of AI agents, allowing users to tap into the potential of AI technology in a decentralized and secure manner.