evalchemy

Automatic evals for LLMs

Stars: 317

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

README:

A unified and easy-to-use toolkit for evaluating post-trained language models

Evalchemy is developed by the DataComp community and Bespoke Labs and builds on the LM-Eval-Harness.

- AIME25 and Alice in Wonderland have been added to available benchmarks.

-

API models via Curator: With

--model curatoryou can now evaluate with even more API based models via Curator, including all those supported by LiteLLM

python -m eval.eval \

--model curator \

--tasks AIME24,MATH500,GPQADiamond \

--model_name "gemini/gemini-2.0-flash-thinking-exp-01-21" \

--apply_chat_template False \

--model_args 'tokenized_requests=False' \

--output_path logs

- AIME24, AMC23, MATH500, LiveCodeBench, GPQADiamond, HumanEvalPlus, MBPPPlus, BigCodeBench, MultiPL-E, and CRUXEval have been added to our growing list of available benchmarks. This is part of the effort in the Open Thoughts project. See the our blog post on using Evalchemy for measuring reasoning models.

- vLLM models: High-performance inference and serving engine with PagedAttention technology

python -m eval.eval \

--model vllm \

--tasks alpaca_eval \

--model_args "pretrained=meta-llama/Meta-Llama-3-8B-Instruct" \

--batch_size 16 \

--output_path logs- OpenAI models: Full support for OpenAI's model lineup

python -m eval.eval \

--model openai-chat-completions \

--tasks alpaca_eval \

--model_args "model=gpt-4o-mini-2024-07-18,num_concurrent=32" \

--batch_size 16 \

--output_path logs - Unified Installation: One-step setup for all benchmarks, eliminating dependency conflicts

-

Parallel Evaluation:

- Data-Parallel: Distribute evaluations across multiple GPUs for faster results

- Model-Parallel: Handle large models that don't fit on a single GPU

- Simplified Usage: Run any benchmark with a consistent command-line interface

-

Results Management:

- Local results tracking with standardized output format

- Optional database integration for systematic tracking

- Leaderboard submission capability (requires database setup)

We suggest using conda (installation instructions).

# Create and activate conda environment

conda create --name evalchemy python=3.10

conda activate evalchemy

# Clone the repo

git clone [email protected]:mlfoundations/evalchemy.git

cd evalchemy

# Install dependencies

pip install -e ".[eval]"

pip install -e eval/chat_benchmarks/alpaca_eval

# Log into HuggingFace for datasets and models.

huggingface-cli login- All tasks from LM Evaluation Harness

- Custom instruction-based tasks (found in

eval/chat_benchmarks/):-

WildBench: Real-world task evaluation

-

AlpacaEval: Instruction following evaluation

-

HumanEval: Code generation and problem solving

-

HumanEvalPlus: HumanEval with more test cases

-

ZeroEval: Logical reasoning and problem solving

-

MBPPPlus: MBPP with more test cases

-

BigCodeBench: Benchmarking Code Generation with Diverse Function Calls and Complex Instructions

🚨 Warning: for BigCodeBench evaluation, we strongly recommend using a Docker container since the execution of LLM generated code on a machine can lead to destructive outcomes. More info is here.

-

MultiPL-E: Multi-Programming Language Evaluation of Large Language Models of Code

-

CRUXEval: Code Reasoning, Understanding, and Execution Evaluation

-

AIME24: Math Reasoning Dataset

-

AIME25: Math Reasoning Dataset

-

AMC23: Math Reasoning Dataset

-

MATH500: Math Reasoning Dataset split from Let's Verify Step by Step

-

LiveCodeBench: Benchmark of LLMs for code

-

LiveBench: A benchmark for LLMs designed with test set contamination and objective evaluation in mind

-

GPQA Diamond: A Graduate-Level Google-Proof Q&A Benchmark

-

Alice in Wonderland: Simple Tasks Showing Complete Reasoning Breakdown in LLMs

-

Arena-Hard-Auto (Coming soon): Automatic evaluation tool for instruction-tuned LLMs

-

SWE-Bench (Coming soon): Evaluating large language models on real-world software issues

-

SafetyBench (Coming soon): Evaluating the safety of LLMs

-

SciCode Bench (Coming soon): Evaluate language models in generating code for solving realistic scientific research problems

-

Berkeley Function Calling Leaderboard (Coming soon): Evaluating ability of LLMs to use APIs

We have recorded reproduced results against published numbers for these benchmarks in reproduced_benchmarks.md.

Make sure your OPENAI_API_KEY is set in your environment before running evaluations.

python -m eval.eval \

--model hf \

--tasks HumanEval,mmlu \

--model_args "pretrained=mistralai/Mistral-7B-Instruct-v0.3" \

--batch_size 2 \

--output_path logsThe results will be written out in output_path. If you have jq installed, you can view the results easily after evaluation. Example: jq '.results' logs/Qwen__Qwen2.5-7B-Instruct/results_2024-11-17T17-12-28.668908.json

Args:

-

--model: Which model type or provider is evaluated (example: hf) -

--tasks: Comma-separated list of tasks to be evaluated. -

--model_args: Model path and parameters. Comma-separated list of parameters passed to the model constructor. Accepts a string of the format"arg1=val1,arg2=val2,...". You can find the list supported arguments here. -

--batch_size: Batch size for inference -

--output_path: Directory to save evaluation results

Example running multiple benchmarks:

python -m eval.eval \

--model hf \

--tasks MTBench,WildBench,alpaca_eval \

--model_args "pretrained=mistralai/Mistral-7B-Instruct-v0.3" \

--batch_size 2 \

--output_path logsConfig shortcuts:

To be able to reuse commonly used settings without having to manually supply full arguments every time, we support reading eval configs from YAML files. These configs replace the --batch_size, --tasks, and --annoator_model arguments. Some example config files can be found in ./configs. To use these configs, you can use the --config flag as shown below:

python -m eval.eval \

--model hf \

--model_args "pretrained=mistralai/Mistral-7B-Instruct-v0.3" \

--output_path logs \

--config configs/light_gpt4omini0718.yamlWe add several more command examples in eval/examples to help you start using Evalchemy.

Through LM-Eval-Harness, we support all HuggingFace models and are currently adding support for all LM-Eval-Harness models, such as OpenAI and VLLM. For more information on such models, please check out the models page.

To choose a model, simply set 'pretrained=' where the model name can either be a HuggingFace model name or a path to a local model.

For faster evaluation using data parallelism (recommended):

accelerate launch --num-processes <num-gpus> --num-machines <num-nodes> \

--multi-gpu -m eval.eval \

--model hf \

--tasks MTBench,alpaca_eval \

--model_args 'pretrained=mistralai/Mistral-7B-Instruct-v0.3' \

--batch_size 2 \

--output_path logsFor models that don't fit on a single GPU, use model parallelism:

python -m eval.eval \

--model hf \

--tasks MTBench,alpaca_eval \

--model_args 'pretrained=mistralai/Mistral-7B-Instruct-v0.3,parallelize=True' \

--batch_size 2 \

--output_path logs💡 Note: While "auto" batch size is supported, we recommend manually tuning the batch size for optimal performance. The optimal batch size depends on the model size, GPU memory, and the specific benchmark. We used a maximum of 32 and a minimum of 4 (for RepoBench) to evaluate Llama-3-8B-Instruct on 8xH100 GPUs.

Our generated logs include critical information about each evaluation to help inform your experiments. We highlight important items in our generated logs.

-

Model Configuration

-

model: Model framework used -

model_args: Model arguments for the model framework -

batch_size: Size of processing batches -

device: Computing device specification -

annotator_model: Model used for annotation ("gpt-4o-mini-2024-07-18")

-

-

Seed Configuration

-

random_seed: General random seed -

numpy_seed: NumPy-specific seed -

torch_seed: PyTorch-specific seed -

fewshot_seed: Seed for few-shot examples

-

-

Model Details

-

model_num_parameters: Number of model parameters -

model_dtype: Model data type -

model_revision: Model version -

model_sha: Model commit hash

-

-

Version Control

-

git_hash: Repository commit hash -

date: Unix timestamp of evaluation -

transformers_version: Hugging Face Transformers version

-

-

Tokenizer Configuration

-

tokenizer_pad_token: Padding token details -

tokenizer_eos_token: End of sequence token -

tokenizer_bos_token: Beginning of sequence token -

eot_token_id: End of text token ID -

max_length: Maximum sequence length

-

-

Model Settings

-

model_source: Model source platform -

model_name: Full model identifier -

model_name_sanitized: Sanitized model name for file system usage -

chat_template: Conversation template -

chat_template_sha: Template hash

-

-

Timing Information

-

start_time: Evaluation start timestamp -

end_time: Evaluation end timestamp -

total_evaluation_time_seconds: Total duration

-

-

Hardware Environment

- PyTorch version and build configuration

- Operating system details

- GPU configuration

- CPU specifications

- CUDA and driver versions

- Relevant library versions

As part of Evalchemy, we want to make swapping in different Language Model Judges for standard benchmarks easy. Currently, we support two judge settings. The first is the default setting, where we use a benchmark's default judge. To activate this, you can either do nothing or pass in

--annotator_model autoIn addition to the default assignments, we support using gpt-4o-mini-2024-07-18 as a judge:

--annotator_model gpt-4o-mini-2024-07-18We are planning on adding support for different judges in the future!

Evalchemy makes running common benchmarks simple, fast, and versatile! We list the speeds and costs for each benchmark we achieve with Evalchemy for Meta-Llama-3-8B-Instruct on 8xH100 GPUs.

| Benchmark | Runtime (8xH100) | Batch Size | Total Tokens | Default Judge Cost ($) | GPT-4o-mini Judge Cost ($) | Notes |

|---|---|---|---|---|---|---|

| MTBench | 14:00 | 32 | ~196K | 6.40 | 0.05 | |

| WildBench | 38:00 | 32 | ~2.2M | 30.00 | 0.43 | Using GPT-4-mini judge |

| RepoBench | 46:00 | 4 | - | - | - | Lower batch size due to memory |

| MixEval | 13:00 | 32 | ~4-6M | 3.36 | 0.76 | Varies by judge model |

| AlpacaEval | 16:00 | 32 | ~936K | 9.40 | 0.14 | |

| HumanEval | 4:00 | 32 | - | - | - | No API costs |

| IFEval | 1:30 | 32 | - | - | - | No API costs |

| ZeroEval | 1:44:00 | 32 | - | - | - | Longest runtime |

| MBPP | 6:00 | 32 | - | - | - | No API costs |

| MMLU | 7:00 | 32 | - | - | - | No API costs |

| ARC | 4:00 | 32 | - | - | - | No API costs |

| DROP | 20:00 | 32 | - | - | - | No API costs |

Notes:

- Runtimes measured using 8x H100 GPUs with Meta-Llama-3-8B-Instruct model

- Batch sizes optimized for memory and speed

- API costs vary based on judge model choice

Cost-Saving Tips:

- Use gpt-4o-mini-2024-07-18 judge when possible for significant cost savings

- Adjust batch size based on available memory

- Consider using data-parallel evaluation for faster results

To run ZeroEval benchmarks, you need to:

- Request access to the ZebraLogicBench-private dataset on Hugging Face

- Accept the terms and conditions

- Log in to your Hugging Face account when running evaluations

To add a new evaluation system:

- Create a new directory under

eval/chat_benchmarks/ - Implement

eval_instruct.pywith two required functions:-

eval_instruct(model): Takes an LM Eval Model, returns results dict -

evaluate(results): Takes results dictionary, returns evaluation metrics

-

Use git subtree to manage external evaluation code:

# Add external repository

git subtree add --prefix=eval/chat_benchmarks/new_eval https://github.com/original/repo.git main --squash

# Pull updates

git subtree pull --prefix=eval/chat_benchmarks/new_eval https://github.com/original/repo.git main --squash

# Push contributions back

git subtree push --prefix=eval/chat_benchmarks/new_eval https://github.com/original/repo.git contribution-branchTo run evaluations in debug mode, add the --debug flag:

python -m eval.eval \

--model hf \

--tasks MTBench \

--model_args "pretrained=mistralai/Mistral-7B-Instruct-v0.3" \

--batch_size 2 \

--output_path logs \

--debugThis is particularly useful when testing new evaluation implementations, debugging model configurations, verifying dataset access, and testing database connectivity.

- Utilize batch processing for faster evaluation:

all_instances.append(

Instance(

"generate_until",

example,

(

inputs,

{

"max_new_tokens": 1024,

"do_sample": False,

},

),

idx,

)

)

outputs = self.compute(model, all_instances)- Use the LM-eval logger for consistent logging across evaluations

Evalchemy has been tested on CUDA 12.4. If you run into issues like this: undefined symbol: __nvJitLinkComplete_12_4, version libnvJitLink.so.12, try updating your CUDA version:

wget https://developer.download.nvidia.com/compute/cuda/repos/debian11/x86_64/cuda-keyring_1.1-1_all.deb

sudo dpkg -i cuda-keyring_1.1-1_all.deb

sudo add-apt-repository contrib

sudo apt-get update

sudo apt-get -y install cuda-toolkit-12-4To track experiments and evaluations, we support logging results to a PostgreSQL database. Details on the entry schemas and database setup can be found in database/.

Thank you to all the contributors for making this project possible! Please follow these instructions on how to contribute.

If you find Evalchemy useful, please consider citing us!

@software{Evalchemy,

author = {Guha, Etash and Raoof, Negin and Mercat, Jean and Frankel, Eric and Keh, Sedrick and Grover, Sachin and Smyrnis, George and Vu, Trung and Marten, Ryan and Saad-Falcon, Jon and Choi, Caroline and Arora, Kushal and Merrill, Mike and Deng, Yichuan and Suvarna, Ashima and Bansal, Hritik and Nezhurina, Marianna and Choi, Yejin and Heckel, Reinhard and Oh, Seewong and Hashimoto, Tatsunori and Jitsev, Jenia and Shankar, Vaishaal and Dimakis, Alex and Sathiamoorthy, Mahesh and Schmidt, Ludwig},

month = nov,

title = {{Evalchemy}},

year = {2024}

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for evalchemy

Similar Open Source Tools

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

vibeship-spark-intelligence

Spark Intelligence is a self-evolving AI companion that runs 100% on your machine as a local AI companion. It captures, distills, transforms, and delivers advisory context to help you act with better context. It is designed to convert experience into adaptive operational behavior, not just stored memory. The tool is beyond a learning loop, continuously learning and growing smarter through use. It provides a distillation pipeline, transformation layer, advisory delivery, EIDOS loop, domain chips, observability surfaces, and a CLI for easy interaction. The architecture involves event capture, queue, bridge worker, pipeline, quality gate, cognitive learner, and more. The Obsidian Observatory integration allows users to browse, search, and query insights, decisions, and quality verdicts in a human-readable vault.

RepairAgent

RepairAgent is an autonomous LLM-based agent for automated program repair targeting the Defects4J benchmark. It uses an LLM-driven loop to localize, analyze, and fix Java bugs. The tool requires Docker, VS Code with Dev Containers extension, OpenAI API key, disk space of ~40 GB, and internet access. Users can get started with RepairAgent using either VS Code Dev Container or Docker Image. Running RepairAgent involves checking out the buggy project version, autonomous bug analysis, fix candidate generation, and testing against the project's test suite. Users can configure hyperparameters for budget control, repetition handling, commands limit, and external fix strategy. The tool provides output structure, experiment overview, individual analysis scripts, and data on fixed bugs from the Defects4J dataset.

factorio-learning-environment

Factorio Learning Environment is an open source framework designed for developing and evaluating LLM agents in the game of Factorio. It provides two settings: Lab-play with structured tasks and Open-play for building large factories. Results show limitations in spatial reasoning and automation strategies. Agents interact with the environment through code synthesis, observation, action, and feedback. Tools are provided for game actions and state representation. Agents operate in episodes with observation, planning, and action execution. Tasks specify agent goals and are implemented in JSON files. The project structure includes directories for agents, environment, cluster, data, docs, eval, and more. A database is used for checkpointing agent steps. Benchmarks show performance metrics for different configurations.

metis

Metis is an open-source, AI-driven tool for deep security code review, created by Arm's Product Security Team. It helps engineers detect subtle vulnerabilities, improve secure coding practices, and reduce review fatigue. Metis uses LLMs for semantic understanding and reasoning, RAG for context-aware reviews, and supports multiple languages and vector store backends. It provides a plugin-friendly and extensible architecture, named after the Greek goddess of wisdom, Metis. The tool is designed for large, complex, or legacy codebases where traditional tooling falls short.

sec-code-bench

SecCodeBench is a benchmark suite for evaluating the security of AI-generated code, specifically designed for modern Agentic Coding Tools. It addresses challenges in existing security benchmarks by ensuring test case quality, employing precise evaluation methods, and covering Agentic Coding Tools. The suite includes 98 test cases across 5 programming languages, focusing on functionality-first evaluation and dynamic execution-based validation. It offers a highly extensible testing framework for end-to-end automated evaluation of agentic coding tools, generating comprehensive reports and logs for analysis and improvement.

MassGen

MassGen is a cutting-edge multi-agent system that leverages the power of collaborative AI to solve complex tasks. It assigns a task to multiple AI agents who work in parallel, observe each other's progress, and refine their approaches to converge on the best solution to deliver a comprehensive and high-quality result. The system operates through an architecture designed for seamless multi-agent collaboration, with key features including cross-model/agent synergy, parallel processing, intelligence sharing, consensus building, and live visualization. Users can install the system, configure API settings, and run MassGen for various tasks such as question answering, creative writing, research, development & coding tasks, and web automation & browser tasks. The roadmap includes plans for advanced agent collaboration, expanded model, tool & agent integration, improved performance & scalability, enhanced developer experience, and a web interface.

postgresai

PostgresAI is an AI-native PostgreSQL observability tool designed for monitoring, health checks, and root cause analysis. It provides structured reports and metrics for AI consumption, tracks problems from detection to resolution, offers over 45 health checks including bloat, indexes, queries, settings, and security, and features Active Session History similar to Oracle ASH. PostgresAI is part of the Self-Driving Postgres initiative, aiming to make Postgres autonomous. It includes expert dashboards following the Four Golden Signals methodology and is battle-tested with companies like GitLab, Miro, Chewy, and more.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

ps-fuzz

The Prompt Fuzzer is an open-source tool that helps you assess the security of your GenAI application's system prompt against various dynamic LLM-based attacks. It provides a security evaluation based on the outcome of these attack simulations, enabling you to strengthen your system prompt as needed. The Prompt Fuzzer dynamically tailors its tests to your application's unique configuration and domain. The Fuzzer also includes a Playground chat interface, giving you the chance to iteratively improve your system prompt, hardening it against a wide spectrum of generative AI attacks.

mcp-apache-spark-history-server

The MCP Server for Apache Spark History Server is a tool that connects AI agents to Apache Spark History Server for intelligent job analysis and performance monitoring. It enables AI agents to analyze job performance, identify bottlenecks, and provide insights from Spark History Server data. The server bridges AI agents with existing Apache Spark infrastructure, allowing users to query job details, analyze performance metrics, compare multiple jobs, investigate failures, and generate insights from historical execution data.

EasyInstruct

EasyInstruct is a Python package proposed as an easy-to-use instruction processing framework for Large Language Models (LLMs) like GPT-4, LLaMA, ChatGLM in your research experiments. EasyInstruct modularizes instruction generation, selection, and prompting, while also considering their combination and interaction.

iloom-cli

iloom is a tool designed to streamline AI-assisted development by focusing on maintaining alignment between human developers and AI agents. It treats context as a first-class concern, persisting AI reasoning in issue comments rather than temporary chats. The tool allows users to collaborate with AI agents in an isolated environment, switch between complex features without losing context, document AI decisions publicly, and capture key insights and lessons learned from AI sessions. iloom is not just a tool for managing git worktrees, but a control plane for maintaining alignment between users and their AI assistants.

paperless-gpt

paperless-gpt is a tool designed to generate accurate and meaningful document titles and tags for paperless-ngx using Large Language Models (LLMs). It supports multiple LLM providers, including OpenAI and Ollama. With paperless-gpt, you can streamline your document management by automatically suggesting appropriate titles and tags based on the content of your scanned documents. The tool offers features like multiple LLM support, customizable prompts, easy integration with paperless-ngx, user-friendly interface for reviewing and applying suggestions, dockerized deployment, automatic document processing, and an experimental OCR feature.

llm-leaderboard

Nejumi Leaderboard 3 is a comprehensive evaluation platform for large language models, assessing general language capabilities and alignment aspects. The evaluation framework includes metrics for language processing, translation, summarization, information extraction, reasoning, mathematical reasoning, entity extraction, knowledge/question answering, English, semantic analysis, syntactic analysis, alignment, ethics/moral, toxicity, bias, truthfulness, and robustness. The repository provides an implementation guide for environment setup, dataset preparation, configuration, model configurations, and chat template creation. Users can run evaluation processes using specified configuration files and log results to the Weights & Biases project.

For similar tasks

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

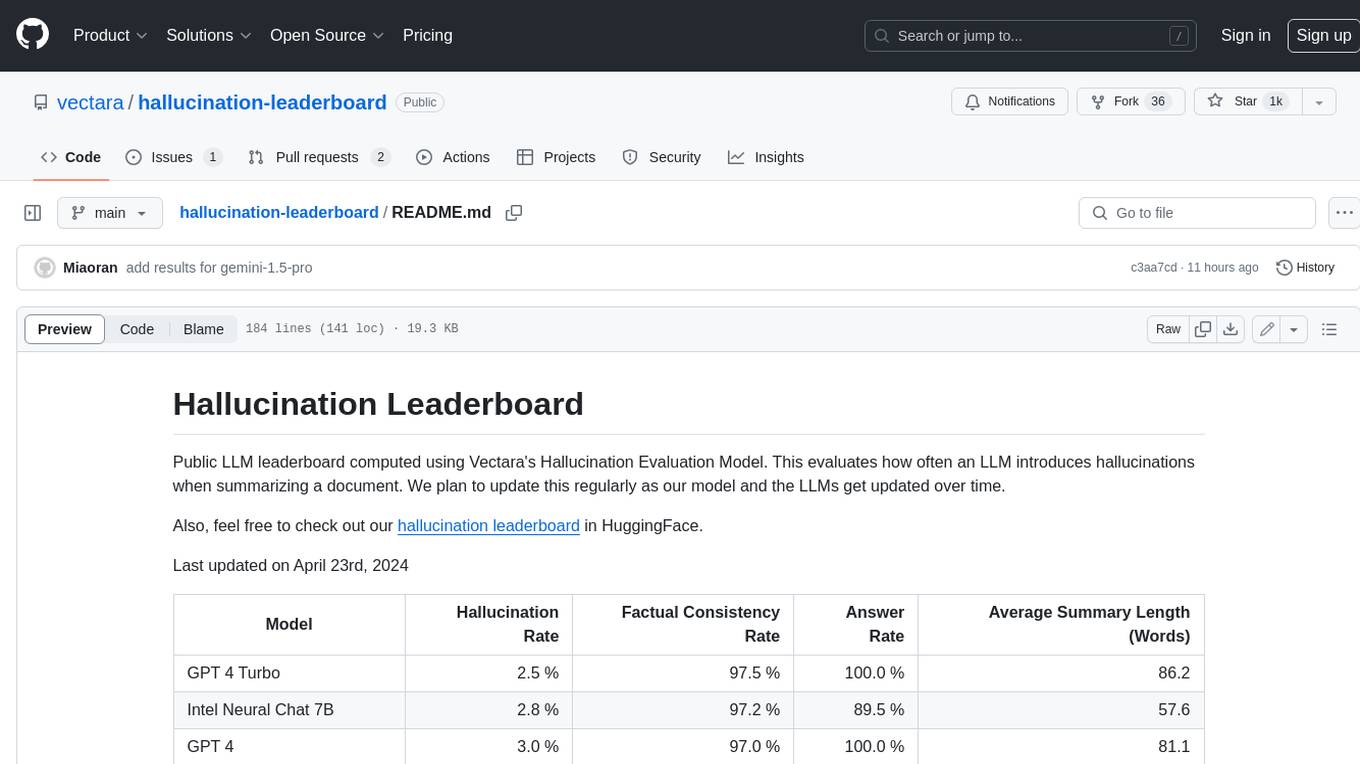

hallucination-leaderboard

This leaderboard evaluates the hallucination rate of various Large Language Models (LLMs) when summarizing documents. It uses a model trained by Vectara to detect hallucinations in LLM outputs. The leaderboard includes models from OpenAI, Anthropic, Google, Microsoft, Amazon, and others. The evaluation is based on 831 documents that were summarized by all the models. The leaderboard shows the hallucination rate, factual consistency rate, answer rate, and average summary length for each model.

h2o-llmstudio

H2O LLM Studio is a framework and no-code GUI designed for fine-tuning state-of-the-art large language models (LLMs). With H2O LLM Studio, you can easily and effectively fine-tune LLMs without the need for any coding experience. The GUI is specially designed for large language models, and you can finetune any LLM using a large variety of hyperparameters. You can also use recent finetuning techniques such as Low-Rank Adaptation (LoRA) and 8-bit model training with a low memory footprint. Additionally, you can use Reinforcement Learning (RL) to finetune your model (experimental), use advanced evaluation metrics to judge generated answers by the model, track and compare your model performance visually, and easily export your model to the Hugging Face Hub and share it with the community.

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

Awesome-LLM

Awesome-LLM is a curated list of resources related to large language models, focusing on papers, projects, frameworks, tools, tutorials, courses, opinions, and other useful resources in the field. It covers trending LLM projects, milestone papers, other papers, open LLM projects, LLM training frameworks, LLM evaluation frameworks, tools for deploying LLM, prompting libraries & tools, tutorials, courses, books, and opinions. The repository provides a comprehensive overview of the latest advancements and resources in the field of large language models.

bocoel

BoCoEL is a tool that leverages Bayesian Optimization to efficiently evaluate large language models by selecting a subset of the corpus for evaluation. It encodes individual entries into embeddings, uses Bayesian optimization to select queries, retrieves from the corpus, and provides easily managed evaluations. The tool aims to reduce computation costs during evaluation with a dynamic budget, supporting models like GPT2, Pythia, and LLAMA through integration with Hugging Face transformers and datasets. BoCoEL offers a modular design and efficient representation of the corpus to enhance evaluation quality.

cladder

CLadder is a repository containing the CLadder dataset for evaluating causal reasoning in language models. The dataset consists of yes/no questions in natural language that require statistical and causal inference to answer. It includes fields such as question_id, given_info, question, answer, reasoning, and metadata like query_type and rung. The dataset also provides prompts for evaluating language models and example questions with associated reasoning steps. Additionally, it offers dataset statistics, data variants, and code setup instructions for using the repository.

uncheatable_eval

Uncheatable Eval is a tool designed to assess the language modeling capabilities of LLMs on real-time, newly generated data from the internet. It aims to provide a reliable evaluation method that is immune to data leaks and cannot be gamed. The tool supports the evaluation of Hugging Face AutoModelForCausalLM models and RWKV models by calculating the sum of negative log probabilities on new texts from various sources such as recent papers on arXiv, new projects on GitHub, news articles, and more. Uncheatable Eval ensures that the evaluation data is not included in the training sets of publicly released models, thus offering a fair assessment of the models' performance.

For similar jobs

llm-jp-eval

LLM-jp-eval is a tool designed to automatically evaluate Japanese large language models across multiple datasets. It provides functionalities such as converting existing Japanese evaluation data to text generation task evaluation datasets, executing evaluations of large language models across multiple datasets, and generating instruction data (jaster) in the format of evaluation data prompts. Users can manage the evaluation settings through a config file and use Hydra to load them. The tool supports saving evaluation results and logs using wandb. Users can add new evaluation datasets by following specific steps and guidelines provided in the tool's documentation. It is important to note that using jaster for instruction tuning can lead to artificially high evaluation scores, so caution is advised when interpreting the results.

AlignBench

AlignBench is the first comprehensive evaluation benchmark for assessing the alignment level of Chinese large models across multiple dimensions. It includes introduction information, data, and code related to AlignBench. The benchmark aims to evaluate the alignment performance of Chinese large language models through a multi-dimensional and rule-calibrated evaluation method, enhancing reliability and interpretability.

LiveBench

LiveBench is a benchmark tool designed for Language Model Models (LLMs) with a focus on limiting contamination through monthly new questions based on recent datasets, arXiv papers, news articles, and IMDb movie synopses. It provides verifiable, objective ground-truth answers for accurate scoring without an LLM judge. The tool offers 18 diverse tasks across 6 categories and promises to release more challenging tasks over time. LiveBench is built on FastChat's llm_judge module and incorporates code from LiveCodeBench and IFEval.

evalchemy

Evalchemy is a unified and easy-to-use toolkit for evaluating language models, focusing on post-trained models. It integrates multiple existing benchmarks such as RepoBench, AlpacaEval, and ZeroEval. Key features include unified installation, parallel evaluation, simplified usage, and results management. Users can run various benchmarks with a consistent command-line interface and track results locally or integrate with a database for systematic tracking and leaderboard submission.

home-assistant-datasets

This package provides a collection of datasets for evaluating AI Models in the context of Home Assistant. It includes synthetic data generation, loading data into Home Assistant, model evaluation with different conversation agents, human annotation of results, and visualization of improvements over time. The datasets cover home descriptions, area descriptions, device descriptions, and summaries that can be performed on a home. The tool aims to build datasets for future training purposes.

Evaluator

NeMo Evaluator SDK is an open-source platform for robust, reproducible, and scalable evaluation of Large Language Models. It enables running hundreds of benchmarks across popular evaluation harnesses against any OpenAI-compatible model API. The platform ensures auditable and trustworthy results by executing evaluations in open-source Docker containers. NeMo Evaluator SDK is built on four core principles: Reproducibility by Default, Scale Anywhere, State-of-the-Art Benchmarking, and Extensible and Customizable.

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.