Best AI tools for Research Scientist in Natural Language Processing

20 - AI tool Sites

Derwen

Derwen is an open-source integration platform for production machine learning in the enterprise, specializing in natural language processing, graph technologies, and decision support. It offers expertise in developing knowledge graph applications and domain-specific authoring. Derwen collaborates closely with Hugging Face and provides strong data privacy guarantees, a low carbon footprint, and no cloud vendor involvement. Since 2017, the platform has aimed to give AI engineers and domain experts quality, time-to-value, and ownership.

NLTK

NLTK (Natural Language Toolkit) is a leading platform for building Python programs to work with human language data. It provides easy-to-use interfaces to over 50 corpora and lexical resources such as WordNet, along with a suite of text processing libraries for classification, tokenization, stemming, tagging, parsing, and semantic reasoning, wrappers for industrial-strength NLP libraries, and an active discussion forum. Thanks to a hands-on guide introducing programming fundamentals alongside topics in computational linguistics, plus comprehensive API documentation, NLTK is suitable for linguists, engineers, students, educators, researchers, and industry users alike.

Amazon Science

Amazon Science is a research and development organization within Amazon that focuses on developing new technologies and products in the fields of artificial intelligence, machine learning, and computer science. The organization is home to a team of world-renowned scientists and engineers who are working on a wide range of projects, including developing new algorithms for machine learning, building new computer vision systems, and creating new natural language processing tools. Amazon Science is also responsible for developing new products and services that use these technologies, such as the Amazon Echo and the Amazon Fire TV.

Anthropic

Anthropic is a research and deployment company founded in 2021 by a group of former OpenAI employees, including siblings Dario Amodei and Daniela Amodei. The company develops large language models, including Claude, a multimodal AI model that can perform a variety of language-related tasks, such as answering questions, generating text, and translating languages.

Google Research

Google Research is a team of scientists and engineers working on a wide range of topics in computer science, including artificial intelligence, machine learning, and quantum computing. Its mission is to advance the state of the art in these fields and to develop new technologies that can benefit society. The team publishes hundreds of research papers each year and collaborates with researchers from around the world. Its work has led to the development of many new products and services, including Google Search, Google Translate, and Google Maps.

Association for the Advancement of Artificial Intelligence

The Association for the Advancement of Artificial Intelligence (AAAI) is a scientific society dedicated to advancing the scientific understanding of the mechanisms underlying thought and intelligent behavior and their embodiment in machines. AAAI's mission is to promote research in AI and to promote the use of AI technology for the benefit of humanity.

Keras

Keras is an open-source deep learning API written in Python, designed to make building and training deep learning models easier. It provides a user-friendly interface and a wide range of features and tools to help developers create and deploy machine learning applications. Keras 3 runs on multiple backends, namely TensorFlow, JAX, and PyTorch (earlier versions supported TensorFlow, Theano, and CNTK), and can be used for a variety of tasks, including image classification, natural language processing, and time series analysis.

CogPrints

CogPrints is an electronic archive for self-archived papers in any area of Psychology, Neuroscience, and Linguistics, and many areas of Computer Science (e.g., artificial intelligence, robotics, vision, learning, speech, neural networks), Philosophy (e.g., mind, language, knowledge, science, logic), Biology (e.g., ethology, behavioral ecology, sociobiology, behavior genetics, evolutionary theory), Medicine (e.g., Psychiatry, Neurology, human genetics, Imaging), Anthropology (e.g., primatology, cognitive ethnology, archeology, paleontology), as well as any other portions of the physical, social and mathematical sciences that are pertinent to the study of cognition.

PyTorch

PyTorch is an open-source machine learning library based on the Torch library. It is used for applications such as computer vision, natural language processing, and reinforcement learning. PyTorch is known for its flexibility and ease of use, making it a popular choice for researchers and developers in the field of artificial intelligence.

TensorFlow

TensorFlow is an end-to-end platform for machine learning. It provides a wide range of tools and resources to help developers build, train, and deploy ML models. TensorFlow is used by researchers and developers all over the world to solve real-world problems in a variety of domains, including computer vision, natural language processing, and robotics.

OAI UI

OAI UI is an all-in-one AI platform designed to streamline various AI-related tasks. It offers a user-friendly interface that allows users to easily interact with AI technologies. The platform integrates multiple AI capabilities, such as natural language processing, machine learning, and computer vision, to provide a comprehensive solution for businesses and individuals looking to leverage AI in their workflows.

Krater.ai

Krater.ai is an AI SuperApp that offers a wide range of artificial intelligence tools and applications to enhance productivity and efficiency. It provides users with a comprehensive suite of AI-powered solutions for various tasks, from data analysis to natural language processing. With its user-friendly interface and advanced algorithms, Krater.ai simplifies complex processes and empowers users to make data-driven decisions with ease.

UseCasesFor.ai

UseCasesFor.ai is an AI application that offers a collection of over 250 use cases for artificial intelligence across various industries and disciplines. It provides insights into how different types of AI, such as computer vision, generative AI, machine learning, and natural language processing, are utilized in fields like agriculture, automotive, e-commerce, education, energy, entertainment, finance, healthcare, human resources, insurance, IT, law enforcement, legal, logistics, manufacturing, marketing, product development, public services, property, retail, science, sport, telecommunications, transport, tourism, and wildlife. The platform also allows users to sign up to receive a PDF containing all the use cases and stay updated with the latest AI trends and news.

Google Research Blog

The Google Research Blog is a platform for researchers at Google to share their latest work in artificial intelligence, machine learning, and other related fields. The blog covers a wide range of topics, from theoretical research to practical applications. The goal of the blog is to provide a forum for researchers to share their ideas and findings, and to foster collaboration between researchers at Google and around the world.

Mistral AI

Mistral AI is a cutting-edge AI technology provider for developers and businesses. Their open and portable generative AI models offer unmatched performance, flexibility, and customization. Mistral AI's mission is to accelerate AI innovation by providing powerful tools that can be easily integrated into various applications and systems.

Stanford Artificial Intelligence Laboratory

The Stanford Artificial Intelligence Laboratory (SAIL) has been a center of excellence for Artificial Intelligence research, teaching, theory, and practice since its founding in 1963. SAIL faculty and students are committed to developing the theoretical foundations of AI, advancing the state of the art in AI technologies, and applying AI to address real-world problems. SAIL is a vibrant and collaborative community of researchers, students, and staff who are passionate about AI and its potential to make the world a better place.

Elicit

Elicit is a research tool that uses artificial intelligence to help researchers analyze research papers more efficiently. It can summarize papers, extract data, and synthesize findings, saving researchers time and effort. Elicit is used by over 800,000 researchers worldwide and has been featured in publications such as Nature and Science. It is a powerful tool that can help researchers stay up-to-date on the latest research and make new discoveries.

Summarize Paper .com

Summarize Paper .com is an open-source AI tool that provides concise, understandable, and insightful summaries of the latest research articles on arXiv. The tool uses AI to generate key points and layman's summaries of research papers, making it easy for users to keep up with developments in their field. In addition to its summary service, Summarize Paper .com also offers an AI assistant that can answer questions about arXiv papers. The tool is aimed at researchers, students, journalists, and anyone else who wants to access and understand new findings.

C&EN

C&EN, a publication of the American Chemical Society, provides the latest news and insights on the chemical industry, including research, technology, business, and policy. It covers a wide range of topics, including analytical chemistry, biological chemistry, business, careers, education, energy, environment, food, materials, people, pharmaceuticals, physical chemistry, policy, research integrity, safety, and synthesis.

AI Tools Hub

AI Tools Hub is a platform that provides a curated collection of the best AI tools available. It serves as a one-stop destination for individuals and businesses looking to explore and utilize cutting-edge AI technologies. The platform offers a wide range of tools across various categories such as machine learning, natural language processing, computer vision, and more. Users can discover, compare, and choose the most suitable AI tools for their specific needs, helping them enhance productivity and efficiency in their projects and workflows.

1 - Open Source Tools

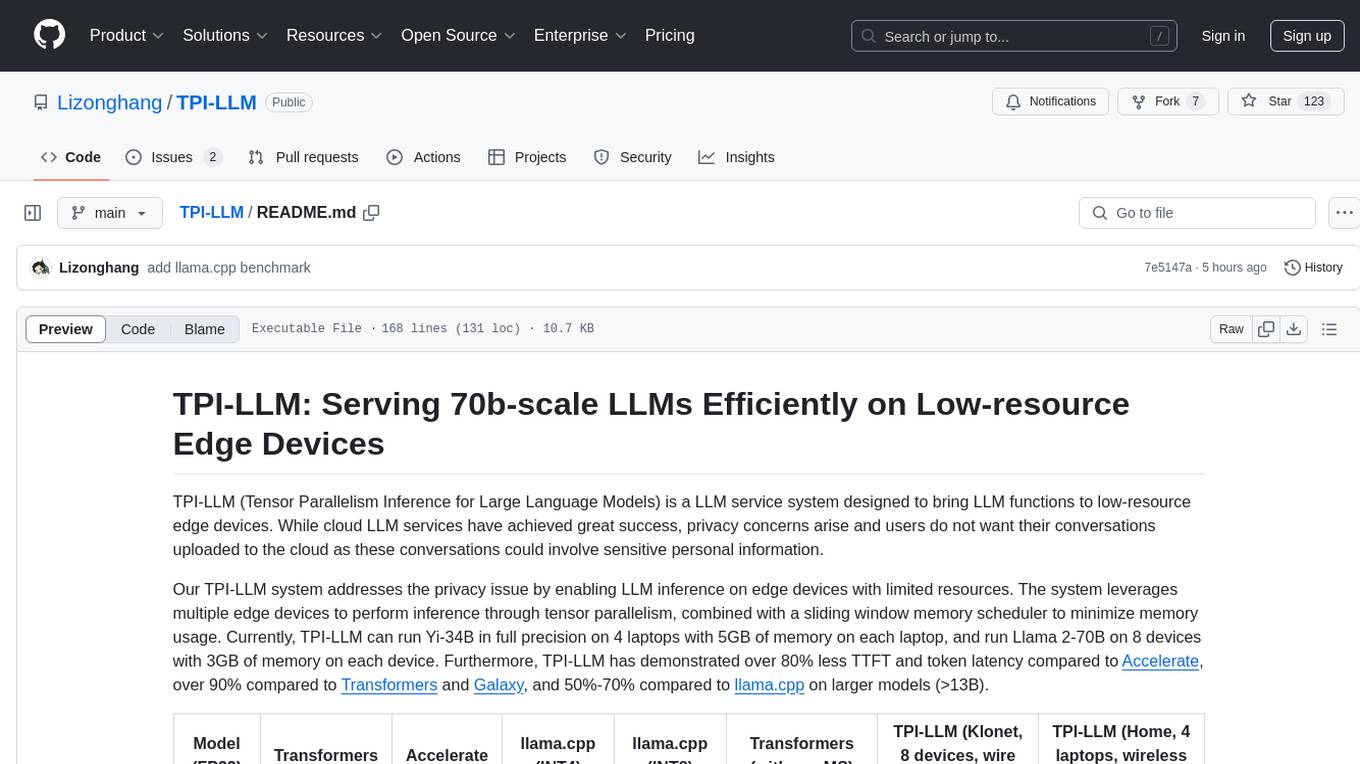

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system for running LLM inference on low-resource edge devices, addressing privacy concerns by keeping inference on the edge devices themselves. It distributes inference across multiple edge devices through tensor parallelism and uses a sliding window memory scheduler to minimize memory usage. TPI-LLM reports significant improvements in time-to-first-token (TTFT) and token latency compared to other inference systems, and plans to support infinitely large models with low token latency in the future.
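
To make the idea of tensor parallelism concrete, here is a minimal pure-Python sketch. It is not TPI-LLM's code: the function names (`matvec`, `split_rows`, `tensor_parallel_matvec`) and the toy matrix are hypothetical, and the "devices" are simulated as list shards in one process. It assumes the simplest split, where each device holds a slice of a layer's output rows and the partial results are concatenated afterwards.

```python
# Illustrative sketch of tensor parallelism; NOT TPI-LLM's actual implementation.
# "Devices" are simulated as shards of a weight matrix inside one process.

def matvec(weights, x):
    """Multiply a row-major matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def split_rows(weights, num_devices):
    """Split the output rows of a weight matrix into contiguous shards.

    May return fewer shards than num_devices when the rows do not divide evenly.
    """
    shard_size = -(-len(weights) // num_devices)  # ceiling division
    return [weights[i:i + shard_size] for i in range(0, len(weights), shard_size)]

def tensor_parallel_matvec(weights, x, num_devices):
    """Each simulated device computes its slice of the output vector.

    The slices are then concatenated, which plays the role of an all-gather.
    """
    shards = split_rows(weights, num_devices)
    partial_outputs = [matvec(shard, x) for shard in shards]  # one per device
    return [y for part in partial_outputs for y in part]

# Toy example: a 4x2 weight matrix applied to a 2-element input.
W = [[1, 2], [3, 4], [5, 6], [7, 8]]
x = [1, 1]

full = matvec(W, x)                                      # single-device result
parallel = tensor_parallel_matvec(W, x, num_devices=2)   # sharded result
print(full == parallel)
```

Each shard only needs its own slice of the weights in memory, which is why this kind of split suits devices with limited resources. A real system would also have to handle communication between devices and, in TPI-LLM's case, the memory scheduling described above.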

20 - OpenAI GPTs

Graphene Explorer AI

Leading AI in graphene research, offering innovative insights and solutions, powered by OpenAI.

Bio Abstract Expert

Generate a structured abstract for academic papers, primarily in the field of biology, adhering to a specified word count range. Simply upload your manuscript file (without the abstract) and specify the word count (for example, '200-250') to GPT.

CTMU Sage

Bot that guides users in understanding the Cognitive-Theoretic Model of the Universe.

OphtalmoNewsIA

Summaries of ophthalmology articles from PubMed published since 2020 (by default), or earlier on request.

AI-Driven Lab

Recommends recent AI research in Japanese, drawing on AI-Driven Lab articles.

Data Extractor Pro

Expert in data extraction and context-driven analysis. Can read most file types, including PDF, XLSX, Word, TXT, CSV, EML, etc.

Data Analysis Prompt Engineer

Specializes in creating, refining, and testing data analysis prompts based on user queries.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

Scientific Writing

Specializes in clear, precise academic writing in the natural sciences. Corrects text provided by the user and does not write original text.

Therocial Scientist

I am a digital scientist skilled in Python, here to assist with scientific and data analysis tasks.