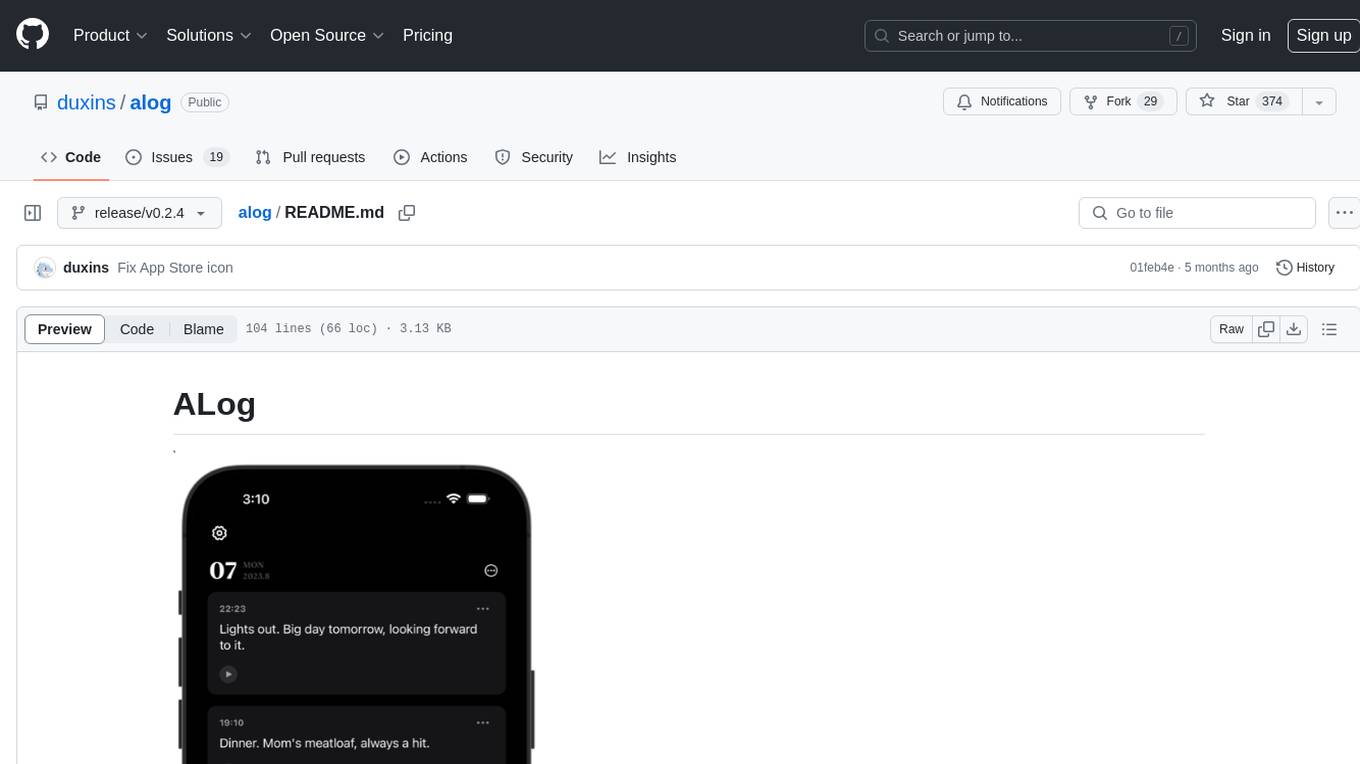

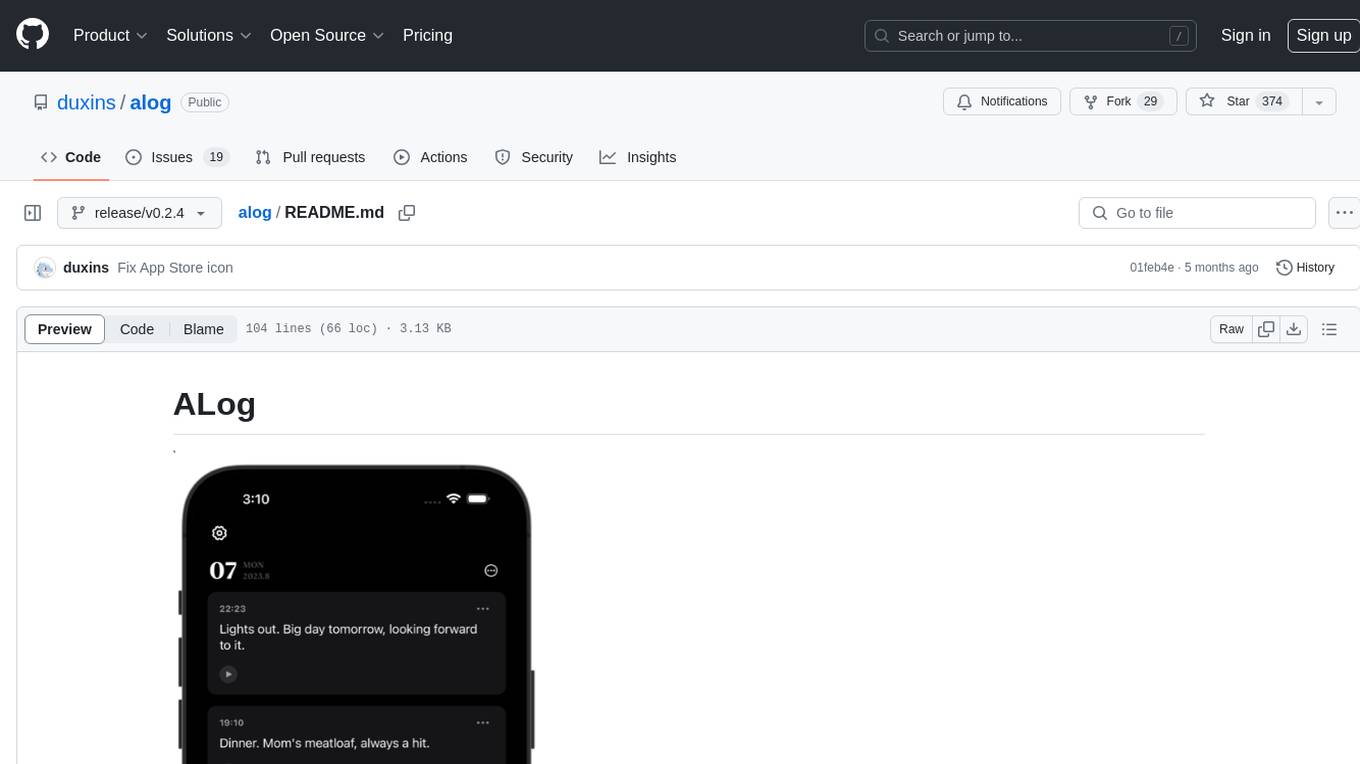

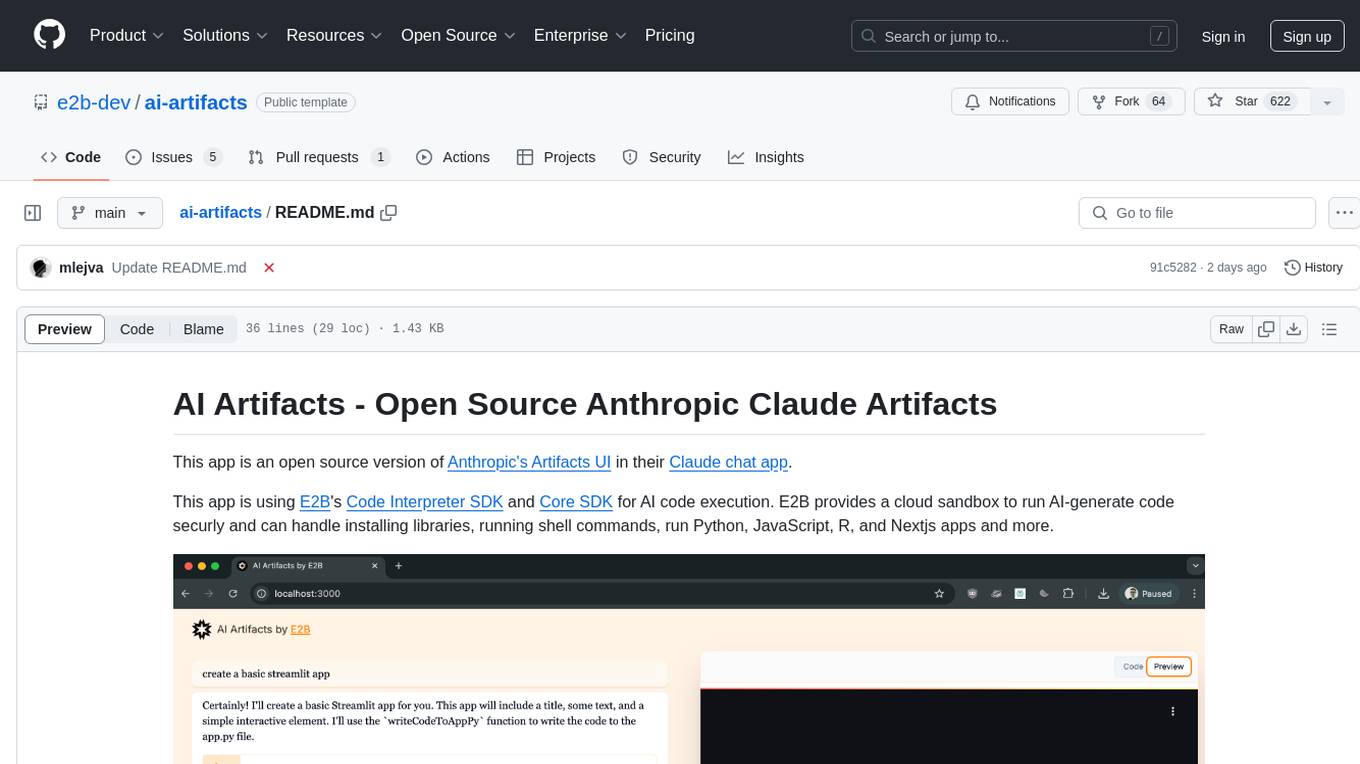

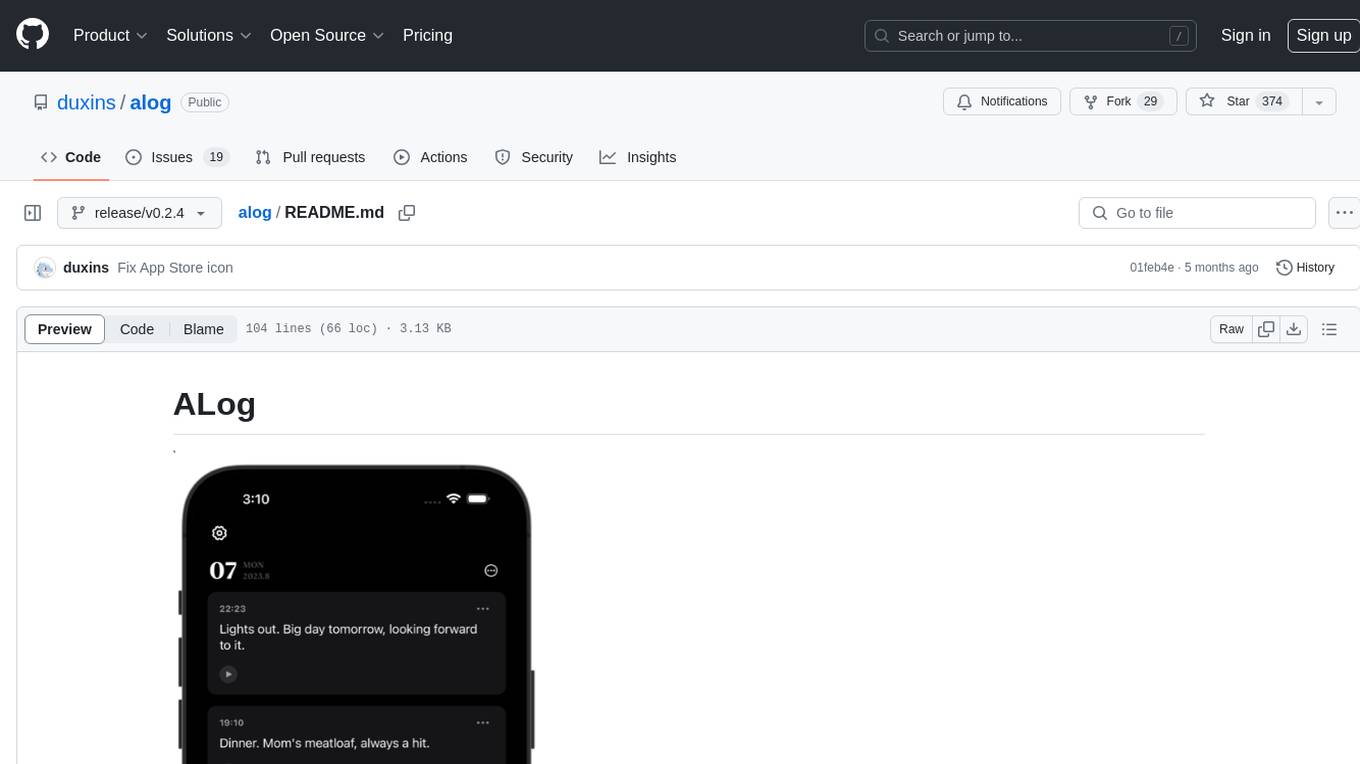

alog

AI-Powered Audio Diary

Stars: 416

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

README:

Follow these steps to build the project:

git clone https://github.com/duxins/alogbrew install xcodegenbundle installTo setup up the environment variables, copy the .env.example file and rename it as .env.

cp .env.example .envUpdate the keys as needed within the .env file.

bundle exec bin/arkanaFinally, generate the project by running:

xcodegenOnce you've followed these steps, you should have a fully built project ready for development. If you encounter any issues, please open an issue in the repository.

- Once logged in, navigate to the "Workers & Pages" section.

- Click on "Create Application" → "Create Worker".

- Rename the Worker as per your requirements.

- Click on "Deploy".

- After deploying, you'll see a "Quick Edit" button. Click on it.

- Paste the contents of Server/src/worker.js into the Cloudflare Worker editor.

- Click on "Save and deploy"

- On the Worker's Settings tab, navigate to the Variables section.

- Set the following variables:

| Variable | Description | |

|---|---|---|

OPENAI_KEY |

Required | Your OpenAI API key. |

HMAC_KEY |

Optional | This should be consistent with the key used on the client side. If this variable is not set, HMAC validation will not be performed. |

AI_MODEL |

Optional | Represents the default model. If not set, the model specified by the client will be used. |

- Open

Constants.swiftfile. - Update the

api_base_urlconstant to point to the URL of your deployed Cloudflare Worker.

struct Constants {

static let api_base_url = URL(string: "https://your-worker-name.workers.dev/")!

}Distributed under the GNU General Public License v2.0. See LICENSE for more information.

Important Note: This open-source license does not prevent anyone from renaming and repackaging this app for distribution. However, doing so is in direct violation of App Store Review Guidelines, specifically Guideline 4.1 (Copycats) and Guideline 4.3 (Spam). Any attempt to simply rename and repackage this app for submission to the App Store is explicitly prohibited.

Thanks to @onenewbite for his inspiring video "为什么你应该开始用ChatGPT写日记|做笔记", which has greatly influenced this project.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for alog

Similar Open Source Tools

alog

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

BuildCLI

BuildCLI is a command-line interface (CLI) tool designed for managing and automating common tasks in Java project development. It simplifies the development process by allowing users to create, compile, manage dependencies, run projects, generate documentation, manage configuration profiles, dockerize projects, integrate CI/CD tools, and generate structured changelogs. The tool aims to enhance productivity and streamline Java project management by providing a range of functionalities accessible directly from the terminal.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

AirCasting

AirCasting is a platform for gathering, visualizing, and sharing environmental data. It aims to provide a central hub for environmental data, making it easier for people to access and use this information to make informed decisions about their environment.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

fast-mcp

Fast MCP is a Ruby gem that simplifies the integration of AI models with your Ruby applications. It provides a clean implementation of the Model Context Protocol, eliminating complex communication protocols, integration challenges, and compatibility issues. With Fast MCP, you can easily connect AI models to your servers, share data resources, choose from multiple transports, integrate with frameworks like Rails and Sinatra, and secure your AI-powered endpoints. The gem also offers real-time updates and authentication support, making AI integration a seamless experience for developers.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

mobile-use

Mobile-use is an open-source AI agent that controls Android or IOS devices using natural language. It understands commands to perform tasks like sending messages and navigating apps. Features include natural language control, UI-aware automation, data scraping, and extensibility. Users can automate their mobile experience by setting up environment variables, customizing LLM configurations, and launching the tool via Docker or manually for development. The tool supports physical Android phones, Android simulators, and iOS simulators. Contributions are welcome, and the project is licensed under MIT.

ai-artifacts

AI Artifacts is an open source tool that replicates Anthropic's Artifacts UI in the Claude chat app. It utilizes E2B's Code Interpreter SDK and Core SDK for secure AI code execution in a cloud sandbox environment. Users can run AI-generated code in various languages such as Python, JavaScript, R, and Nextjs apps. The tool also supports running AI-generated Python in Jupyter notebook, Next.js apps, and Streamlit apps. Additionally, it offers integration with Vercel AI SDK for tool calling and streaming responses from the model.

recommendarr

Recommendarr is a tool that generates personalized TV show and movie recommendations based on your Sonarr, Radarr, Plex, and Jellyfin libraries using AI. It offers AI-powered recommendations, media server integration, flexible AI support, watch history analysis, customization options, and dark/light mode toggle. Users can connect their media libraries and watch history services, configure AI service settings, and get personalized recommendations based on genre, language, and mood/vibe preferences. The tool works with any OpenAI-compatible API and offers various recommended models for different cost options and performance levels. It provides personalized suggestions, detailed information, filter options, watch history analysis, and one-click adding of recommended content to Sonarr/Radarr.

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

For similar tasks

alog

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

crabml

Crabml is a llama.cpp compatible AI inference engine written in Rust, designed for efficient inference on various platforms with WebGPU support. It focuses on running inference tasks with SIMD acceleration and minimal memory requirements, supporting multiple models and quantization methods. The project is hackable, embeddable, and aims to provide high-performance AI inference capabilities.

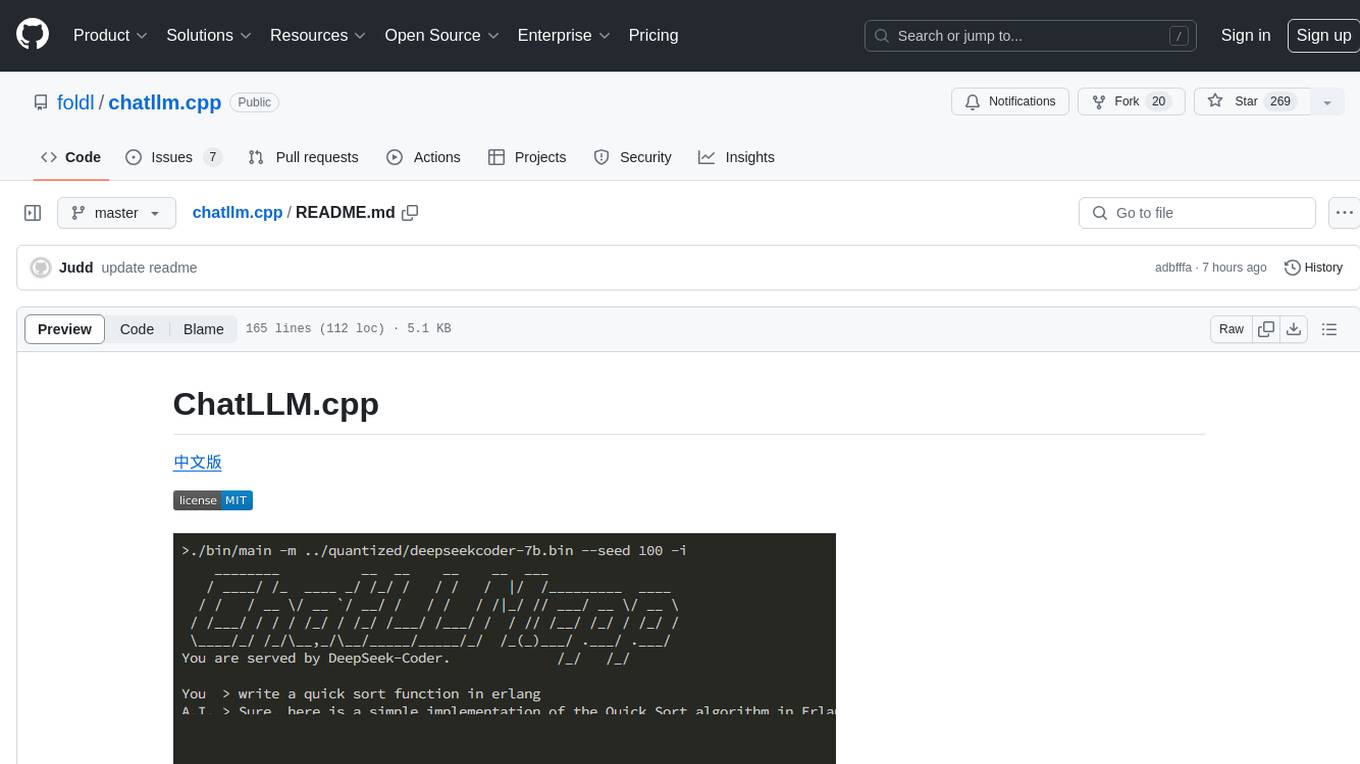

chatllm.cpp

ChatLLM.cpp is a pure C++ implementation tool for real-time chatting with RAG on your computer. It supports inference of various models ranging from less than 1B to more than 300B. The tool provides accelerated memory-efficient CPU inference with quantization, optimized KV cache, and parallel computing. It allows streaming generation with a typewriter effect and continuous chatting with virtually unlimited content length. ChatLLM.cpp also offers features like Retrieval Augmented Generation (RAG), LoRA, Python/JavaScript/C bindings, web demo, and more possibilities. Users can clone the repository, quantize models, build the project using make or CMake, and run quantized models for interactive chatting.

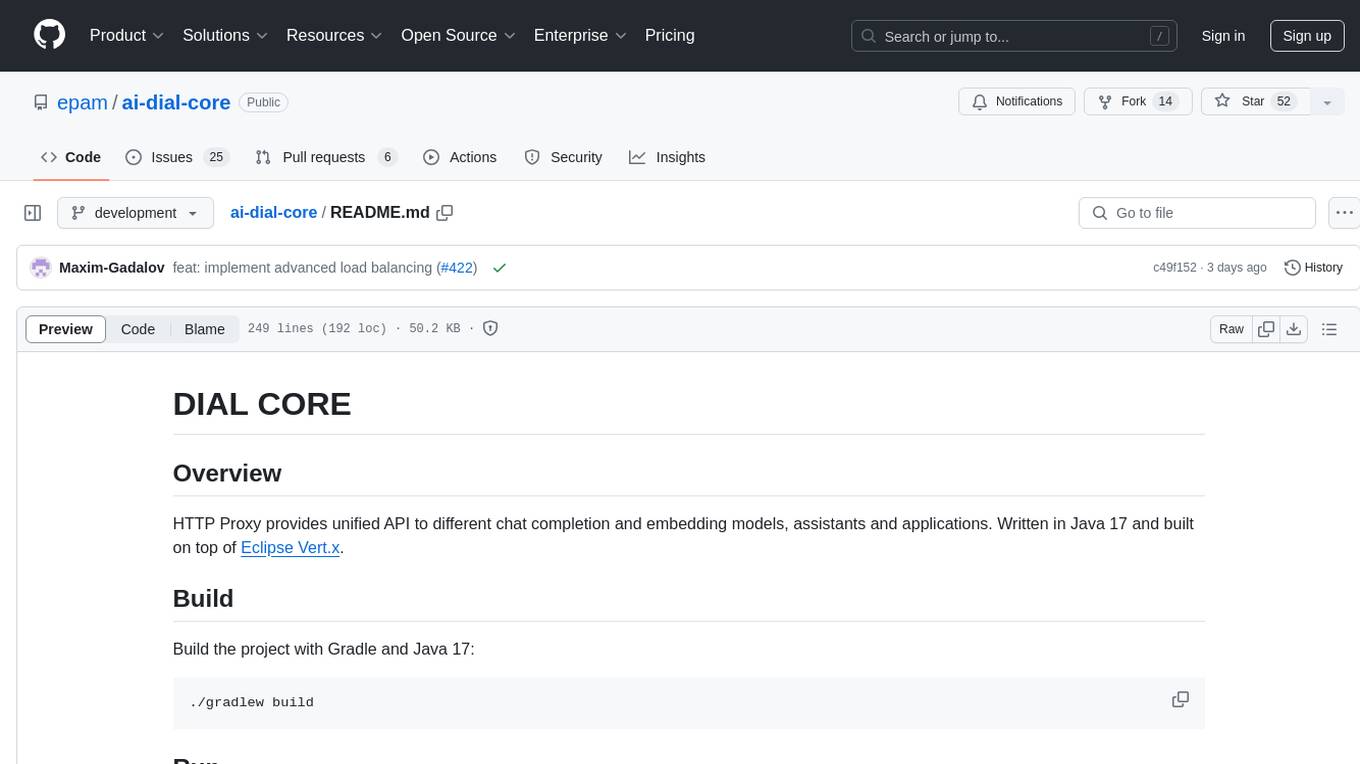

ai-dial-core

AI DIAL Core is an HTTP Proxy that provides a unified API to different chat completion and embedding models, assistants, and applications. It is written in Java 17 and built on Eclipse Vert.x. The core functionality includes handling static and dynamic settings, deployment on Kubernetes using Helm charts, and storing user data in Blob Storage and Redis. It supports various identity providers, storage providers like AWS S3, Google Cloud Storage, and Azure Blob Store, and features like AI DIAL Addons, Interceptors, Assistants, Applications, and Models with customizable parameters and configurations.

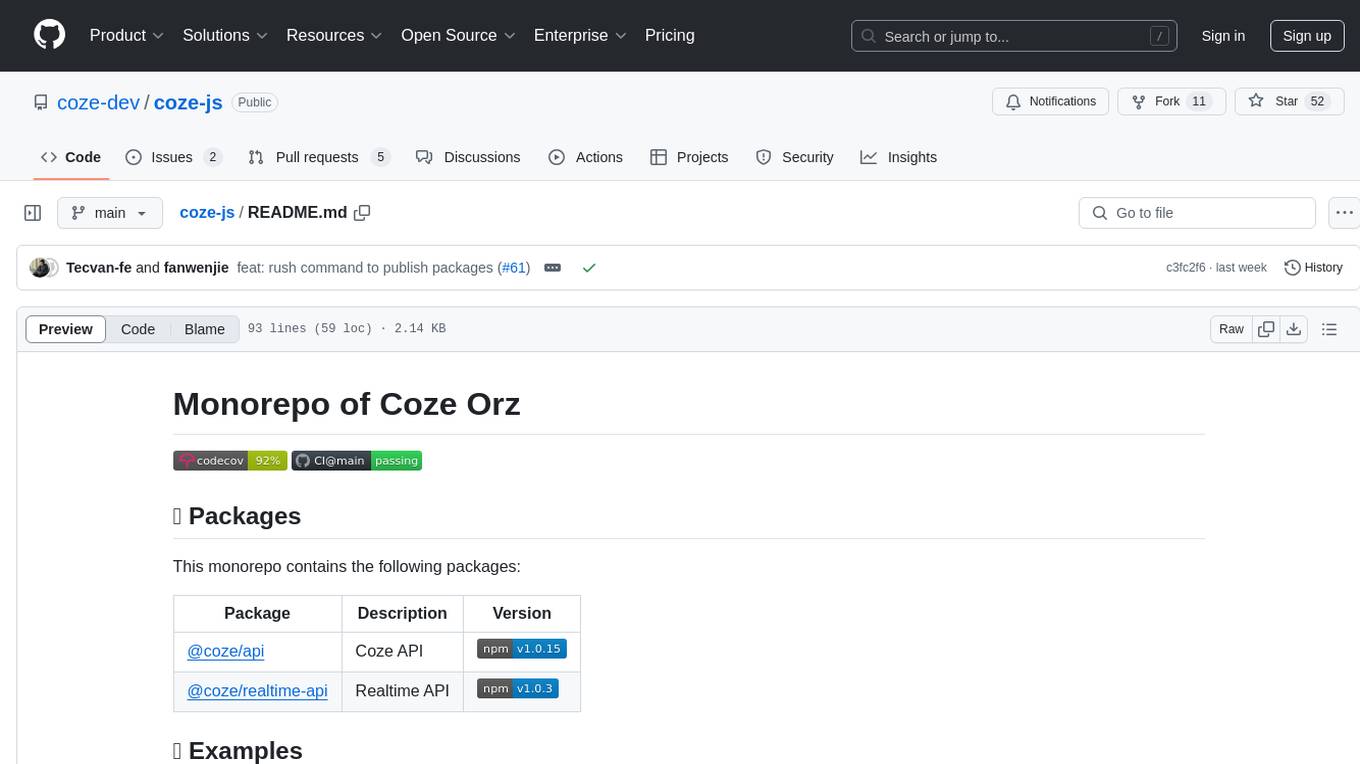

coze-js

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

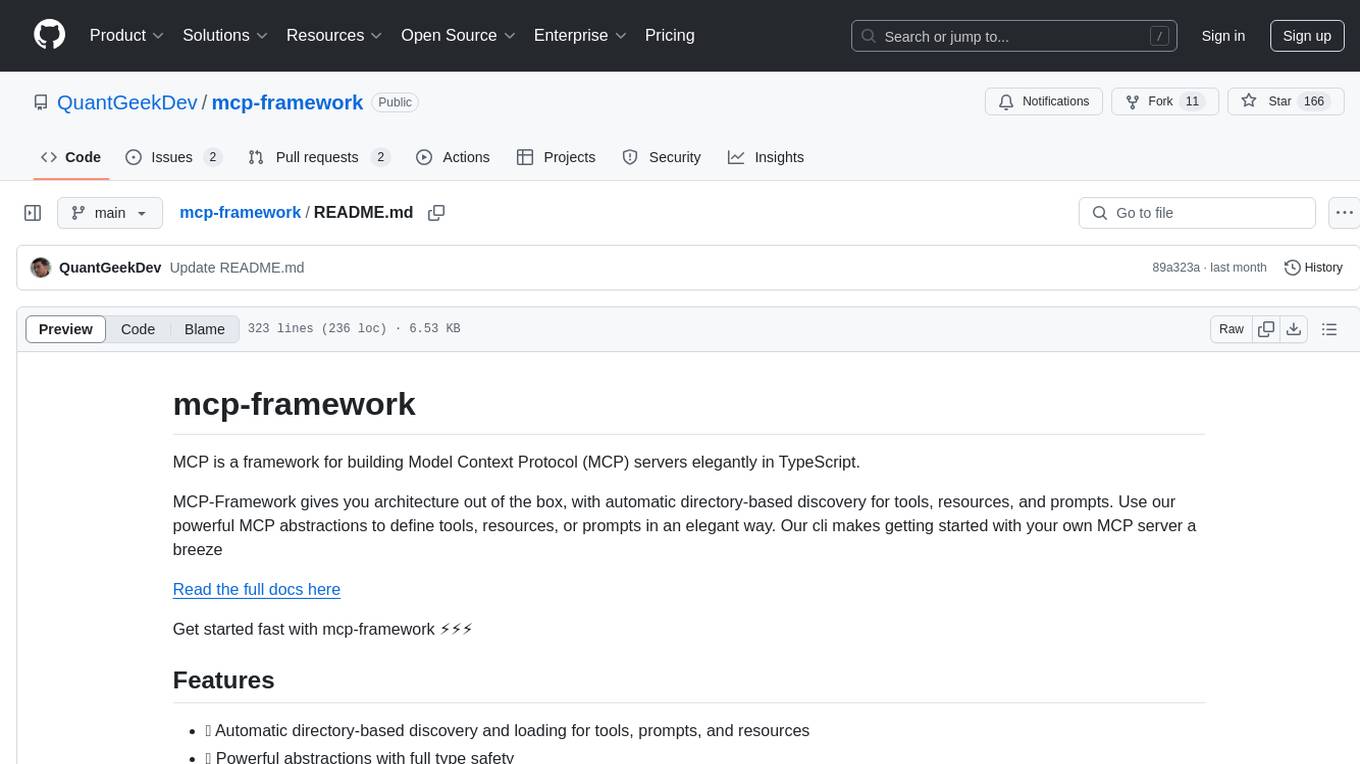

mcp-framework

MCP-Framework is a TypeScript framework for building Model Context Protocol (MCP) servers with automatic directory-based discovery for tools, resources, and prompts. It provides powerful abstractions, simple server setup, and a CLI for rapid development and project scaffolding.

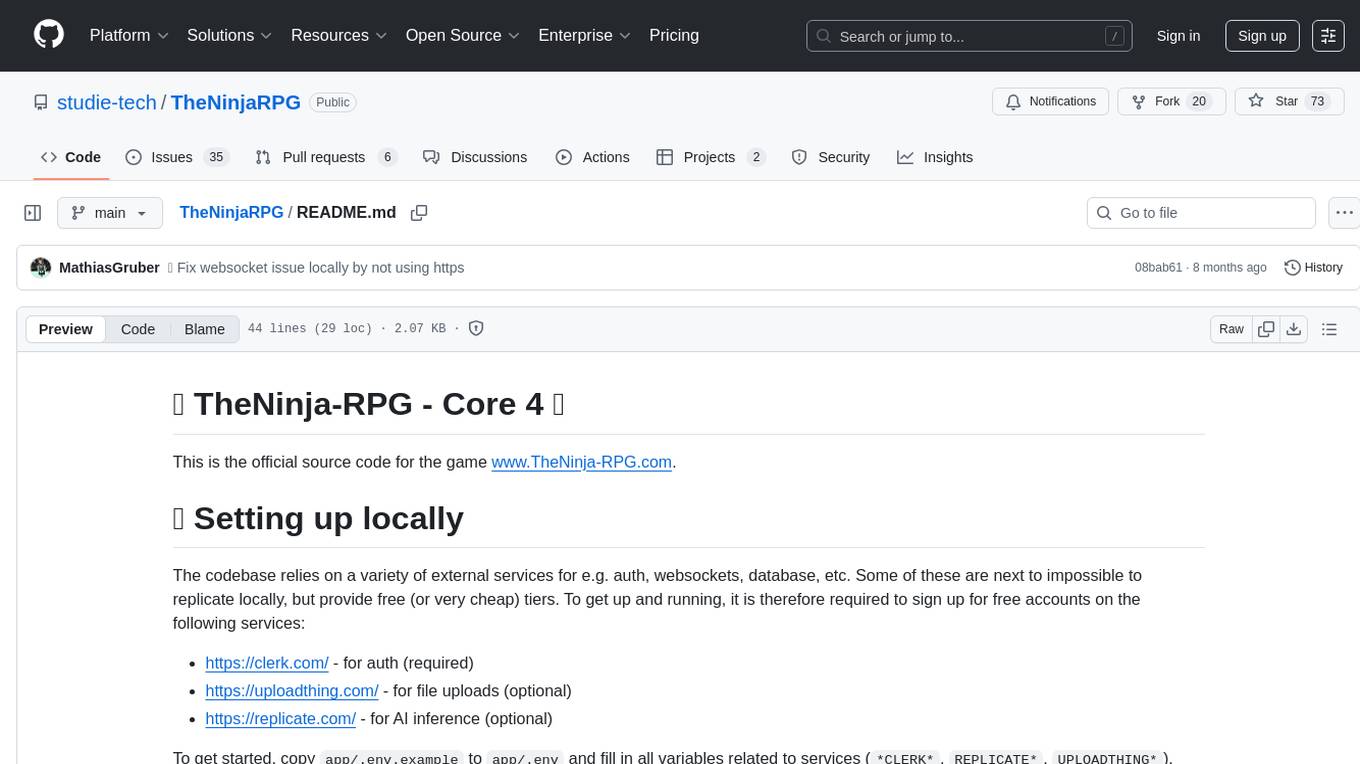

TheNinjaRPG

TheNinja-RPG is the official source code for the game www.TheNinja-RPG.com. It relies on external services for authentication, websockets, database, etc. Users need to sign up for free accounts on services like Clerk, UploadThing, and Replicate. The codebase provides various 'make' commands for setup, building, and database management. The project does not have a specific license and is under exclusive copyright protection.

AI-Horde-Worker

AI-Horde-Worker is a repository containing the original reference implementation for a worker that turns your graphics card(s) into a worker for the AI Horde. It allows users to generate or alchemize images for others. The repository provides instructions for setting up the worker on Windows and Linux, updating the worker code, running with multiple GPUs, and stopping the worker. Users can configure the worker using a WebUI to connect to the horde with their username and API key. The repository also includes information on model usage and running the Docker container with specified environment variables.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can: * Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action. * Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed. * Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment. * Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules. * Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks. * GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently** , resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript** , **directly defining and utilizing the cloud resources necessary for the application within their code base** , such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact, an interactive loop running the asynchronous Python console, customization and running of command line interfaces using argparse, stream support to serve interfaces instead of using standard streams, and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.