ai-dial-core

The main component of AI DIAL, which provides a unified API to different chat completion and embedding models, assistants, and applications.

Stars: 640

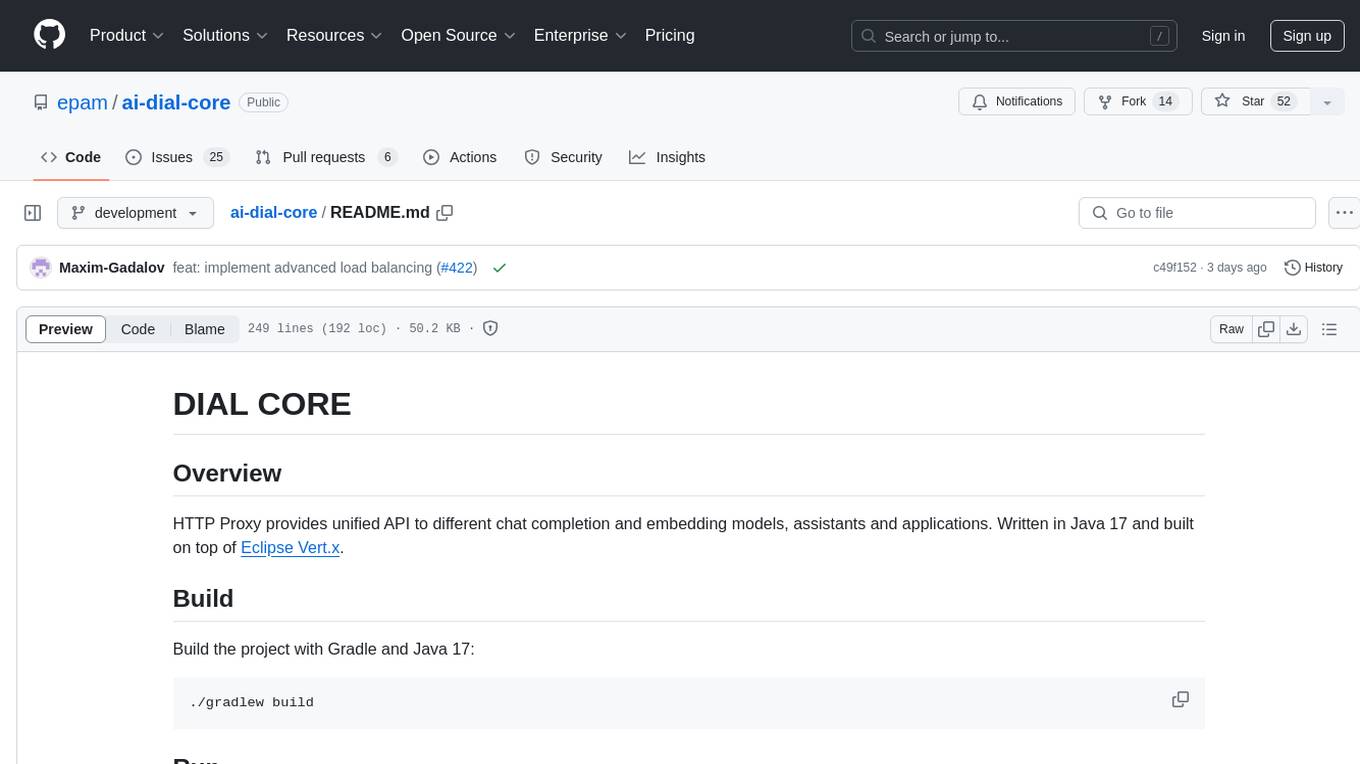

AI DIAL Core is an HTTP proxy that provides a unified API to different chat completion and embedding models, assistants, and applications. It is written in Java 21 and built on Eclipse Vert.x. The core functionality includes handling static and dynamic settings, deployment on Kubernetes using Helm charts, and storing user data in Blob Storage and Redis. It supports various identity providers, storage providers like AWS S3, Google Cloud Storage, and Azure Blob Store, and features like AI DIAL Addons, Interceptors, Assistants, Applications, and Models with customizable parameters and configurations.

README:

[!NOTE] An HTTP proxy that provides a unified API to different chat completion and embedding models and applications. Written in Java 21 and built on top of Eclipse Vert.x.

DIAL Core depends on JClouds packages published to GitHub Packages. GitHub does not provide anonymous access to packages.

You therefore need to pass GitHub credentials to access the published JClouds packages. See the code snippet below:

    repositories {
        maven {
            url = uri("https://maven.pkg.github.com/epam/jclouds")
            credentials {
                username = project.findProperty("gpr.user") ?: System.getenv("GPR_USERNAME")
                password = project.findProperty("gpr.key") ?: System.getenv("GPR_PASSWORD")
            }
        }
        mavenCentral()
    }

[!IMPORTANT] You should set the env variables GPR_USERNAME and GPR_PASSWORD to valid values, where GPR_USERNAME is the GitHub username and GPR_PASSWORD is a GitHub personal access token.

[!IMPORTANT] The access token requires the read:packages permission. See more details here on how to generate a personal access token in GitHub.

Build the project with Gradle and Java 21:

./gradlew build

Run the project with Gradle:

./gradlew :server:run

Or run com.epam.aidial.core.server.AiDial class from your favorite IDE.

You can deploy DIAL Core on a Kubernetes cluster with the umbrella dial Helm chart, which also deploys the other DIAL components. Alternatively, you can use the dial-core Helm chart to deploy only Core.

[!NOTE] Refer to Examples for guidelines.

In either case, your Helm values file must provide the application configuration described in the Configuration section.

[!NOTE] Static settings are used on startup and cannot be changed while the application is running. Refer to example to view the example configuration file.

Priority order:

- Environment variables with extra "aidial." prefix. E.g. "aidial.server.port", "aidial.config.files".

- File specified in "AIDIAL_SETTINGS" environment variable.

- Default resource file: src/main/resources/aidial.settings.json.
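The priority order above can be pictured as a lookup that walks the sources from highest to lowest priority and returns the first value found. The following is only a minimal sketch of that idea: `SettingsResolver` is a hypothetical helper, the three maps stand in for the environment variables, the `AIDIAL_SETTINGS` file, and the default resource file, and the values in them are made up. How DIAL Core actually loads and combines these sources is not shown in this document.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical helper: looks a setting up in sources ordered from highest to lowest priority. */
final class SettingsResolver {
    private SettingsResolver() {}

    /** Returns the value from the first source that defines the key. */
    static Optional<String> resolve(String key, List<Map<String, String>> sourcesByPriority) {
        for (Map<String, String> source : sourcesByPriority) {
            String value = source.get(key);
            if (value != null) {
                return Optional.of(value);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Made-up values standing in for the three sources listed above.
        Map<String, String> environment = Map.of("server.port", "9090");
        Map<String, String> settingsFile = Map.of("server.port", "8081", "config.reload", "30000");
        Map<String, String> defaults = Map.of("server.port", "8080", "config.reload", "60000");

        List<Map<String, String>> sources = List.of(environment, settingsFile, defaults);
        System.out.println(resolve("server.port", sources).orElse("unset"));   // 9090
        System.out.println(resolve("config.reload", sources).orElse("unset")); // 30000
    }
}
```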

| Setting | Default | Required | Description |

|---|---|---|---|

| config.files | aidial.config.json | No | List of paths to dynamic settings. Refer to example of the file with dynamic settings. |

| config.reload | 60000 | No | Config reload interval in milliseconds. |

| vertx.* | - | No | Vertx settings. Refer to vertx.io to learn more. |

| server.* | - | No | Vertx HTTP server settings for incoming requests. Refer to HTTP server options to learn more. |

| client.* | - | No | Vertx HTTP client settings for outbound requests. Refer to HTTP client options to learn more. |

| webSocketClient.* | - | No | Vertx web socket client settings for outbound requests. Refer to WebSocket client options to learn more. |

| invitations.ttlInSeconds | 259200 | No | Invitation time to live in seconds. |

| perRequestApiKey.ttl | 1800 | No | The TTL of a per-request API key, in seconds. |

| asyncTaskExecutor.useVirtualThreads | true | No | The flag determines if virtual threads are used to run blocking tasks or platform threads. |

| config.jsonMergeStrategy.overwriteArrays | false | No | Specifies a merging strategy for JSON arrays. If it's set to true, arrays will be overwritten. Otherwise, they will be concatenated. |

| apiKeyValidation.proxyCount | 0 | No | The count of trusted proxies between the client and DIAL Core server. See selecting an IP address in HTTP header X-Forwarded-For for more details. The default value means there are no proxies. |
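To illustrate the two behaviours that `config.jsonMergeStrategy.overwriteArrays` selects between, here is a minimal sketch of a deep merge over JSON-like values modelled as `Map` and `List`. `JsonMergeSketch` is a hypothetical class written for this example and is not DIAL Core code; treating nested objects as recursively merged is an assumption, only the array behaviour is taken from the table.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of a JSON merge with a switchable array strategy. */
final class JsonMergeSketch {
    private JsonMergeSketch() {}

    @SuppressWarnings("unchecked")
    static Object merge(Object base, Object override, boolean overwriteArrays) {
        if (base instanceof Map && override instanceof Map) {
            // Objects: merge key by key (assumed behaviour).
            Map<String, Object> result = new LinkedHashMap<>((Map<String, Object>) base);
            for (Map.Entry<String, Object> entry : ((Map<String, Object>) override).entrySet()) {
                Object existing = result.get(entry.getKey());
                Object merged = existing == null
                        ? entry.getValue()
                        : merge(existing, entry.getValue(), overwriteArrays);
                result.put(entry.getKey(), merged);
            }
            return result;
        }
        if (base instanceof List && override instanceof List && !overwriteArrays) {
            // Arrays: concatenate when overwriteArrays is false.
            List<Object> result = new ArrayList<>((List<Object>) base);
            result.addAll((List<Object>) override);
            return result;
        }
        // Scalars, mismatched types, and arrays with overwriteArrays=true: the override wins.
        return override;
    }

    public static void main(String[] args) {
        Map<String, Object> first = Map.of("retriableErrorCodes", List.of(500));
        Map<String, Object> second = Map.of("retriableErrorCodes", List.of(501));
        System.out.println(merge(first, second, false)); // {retriableErrorCodes=[500, 501]}
        System.out.println(merge(first, second, true));  // {retriableErrorCodes=[501]}
    }
}
```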

Identity Providers Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| identityProviders | - | Yes | Map of identity providers. Note: At least one identity provider must be provided. Refer to examples to view available providers. Refer to IDP Configuration to view guidelines for configuring supported providers. |

| identityProviders.*.jwksUrl | - | Optional | URL of the JWKS provider. Required if disableJwtVerification is set to false. Note: Either jwksUrl or userInfoEndpoint must be provided. |

| identityProviders.*.userInfoEndpoint | - | Optional | URL of the user info endpoint. Note: Either jwksUrl or userInfoEndpoint must be provided when JWT verification is not disabled. Refer to Google example. |

| identityProviders.*.rolePath | - | Yes | Path(s) to the user roles claim in the JWT token or user info response, e.g. resource_access.chatbot-ui.roles or just roles. Can be a single String or an Array of Strings. Refer to IDP Configuration to view guidelines for configuring supported providers. |

| identityProviders.*.projectPath | - | No | Path(s) to the claim in JWT token or user info response, e.g. azp, aud or some.path.client from which project name can be taken. Can be single String. Refer to IDP Configuration to view guidelines for configuring supported providers. |

| identityProviders.*.rolesDelimiter | - | No | Delimiter used to split roles into an array when the list of roles is presented as a single String, e.g. "rolesDelimiter": " " |

| identityProviders.*.loggingKey | - | No | User information to search in claims of JWT token. email or sub should be sufficient in most cases. Note: email might be unavailable for some IDPs. Please check your IDP documentation in this case. |

| identityProviders.*.loggingSalt | - | No | Salt to hash user information for logging. |

| identityProviders.*.positiveCacheExpirationMs | 600000 | No | How long, in milliseconds, to retain the JWKS response in the cache after a successful response. |

| identityProviders.*.negativeCacheExpirationMs | 10000 | No | How long, in milliseconds, to retain the JWKS response in the cache after a failed response. |

| identityProviders.*.issuerPattern | - | No | Regexp to match the claim "iss" to identity provider. |

| identityProviders.*.disableJwtVerification | false | No | The flag disables JWT verification. Note: userInfoEndpoint must be unset if the flag is set to true. |

| identityProviders.*.audience | - | No | If set, the value is validated against the aud claim in the JWT. |

| identityProviders.*.userDisplayName | - | No | Path to the claim in JWT token or user info response where user display name can be taken. |

| identityProviders.*.userIdPath | sub | No | Path to the claim in JWT token or user info response where user ID can be taken. Can differ based on each IDP. E.g. Microsoft Entra ID uses oid. |
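The interplay of `rolePath` and `rolesDelimiter` can be sketched as follows: walk the dotted path through the claims, then accept either an array of roles or a single delimited String. `RoleExtractor` is a hypothetical class for illustration only; splitting the path on dots and dropping empty fragments are assumptions of this sketch, not documented DIAL Core behaviour.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical sketch: resolves user roles from JWT claims or a user info response. */
final class RoleExtractor {
    private RoleExtractor() {}

    static List<String> extractRoles(Map<String, Object> claims, String rolePath, String rolesDelimiter) {
        // Walk the dotted path, e.g. resource_access.chatbot-ui.roles (assumed path syntax).
        Object node = claims;
        for (String segment : rolePath.split("\\.")) {
            if (!(node instanceof Map)) {
                return List.of();
            }
            node = ((Map<?, ?>) node).get(segment);
        }
        List<String> roles = new ArrayList<>();
        if (node instanceof List) {
            // Roles already presented as an array.
            for (Object item : (List<?>) node) {
                roles.add(String.valueOf(item));
            }
        } else if (node instanceof String) {
            // Roles presented as a single String; split only if a delimiter is configured.
            String value = (String) node;
            String[] parts = rolesDelimiter == null
                    ? new String[] {value}
                    : value.split(Pattern.quote(rolesDelimiter));
            for (String part : parts) {
                if (!part.isEmpty()) {
                    roles.add(part);
                }
            }
        }
        return roles;
    }

    public static void main(String[] args) {
        Map<String, Object> claims = Map.of(
                "resource_access", Map.of("chatbot-ui", Map.of("roles", List.of("admin", "user"))),
                "roles", "basic premium");
        System.out.println(extractRoles(claims, "resource_access.chatbot-ui.roles", null)); // [admin, user]
        System.out.println(extractRoles(claims, "roles", " "));                             // [basic, premium]
    }
}
```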

Toolsets Security Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| toolsets.security.authorizationServers | - | No | Path(s) to the authorization server URLs trusted to issue access tokens for MCP clients. |

| toolsets.security.resourceSchema | https | No | URL scheme of the resource server. It is used to construct the resource identifier for token validation, as defined in RFC 9728. If not specified, the default value is applied. |

| toolsets.security.resourceHost | - | No | The public, fully-qualified hostname of this resource server (e.g., api.example.com). This is used to construct the resource identifier for token validation per RFC 9728. If not set, the host is derived from the incoming request. |

| toolsets.security.scopesSupported | - | No | List of scope values, as defined in OAuth 2.0 [RFC6749], that are used in authorization requests to request access to this protected resource. |

| toolsets.security.kms.provider | unencrypted | No | Specifies KMS provider. Supported providers: aws, azure, gcp, unencrypted |

| toolsets.security.kms.keyId | - | No | Identifies the KMS key to use in the encryption operation. |

| toolsets.security.kms.region | - | No | Geo region where the KMS is located. Required if provider is set to aws. |

| toolsets.security.kms.encryptionAlgorithm | - | No | Encryption algorithm. Required if provider is set to azure. Note: Refer to aws, azure to get the list of supported algorithms. The default value for aws is SYMMETRIC_DEFAULT. |

| toolsets.security.kms.cache.enabled | true | No | The flag determines if CEK cache is enabled. |

| toolsets.security.kms.cache.maxSize | 10000 | No | Maximum number of cached CEK. |

| toolsets.security.kms.cache.expiration | 600000 | No | Expiration in milliseconds for cached CEK. |

| toolsets.security.encryption.algorithm | AES | No | The encryption algorithm to use for content encryption operations. Commonly "AES", but may be changed to support other algorithms supported by the JCE provider. |

| toolsets.security.encryption.keySize | 256 | No | Key size in bits for the encryption algorithm. For AES, valid values are 128, 192, or 256, depending on the algorithm and provider policy. |

| toolsets.security.encryption.cipherTransformation | AES/GCM/NoPadding | No | The cipher transformation specifying the algorithm, mode, and padding (e.g., "AES/GCM/NoPadding"). Must be compatible with the selected algorithm. |

| toolsets.security.encryption.ivLengthBytes | 12 | No | Length of the initialization vector (IV) in bytes. For AES-GCM, 12 bytes (96 bits) is recommended by NIST. |

| toolsets.security.encryption.gcmTagLengthBits | 128 | No | Length of the authentication tag in bits when using GCM mode. NIST recommends 128 bits for maximum integrity protection. |
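The `toolsets.security.encryption.*` defaults map directly onto the standard Java cryptography API. The sketch below shows one way those values fit together, using only `javax.crypto`. `ContentEncryptionSketch` is a hypothetical class, the "IV followed by ciphertext" layout is an assumption made for the example, and the KMS step that would protect the key is left out entirely.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

/** Hypothetical sketch of content encryption with the default settings from the table above. */
final class ContentEncryptionSketch {
    static final String ALGORITHM = "AES";                    // encryption.algorithm
    static final int KEY_SIZE_BITS = 256;                     // encryption.keySize
    static final String TRANSFORMATION = "AES/GCM/NoPadding"; // encryption.cipherTransformation
    static final int IV_LENGTH_BYTES = 12;                    // encryption.ivLengthBytes
    static final int GCM_TAG_LENGTH_BITS = 128;               // encryption.gcmTagLengthBits

    private ContentEncryptionSketch() {}

    static SecretKey newKey() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance(ALGORITHM);
            generator.init(KEY_SIZE_BITS);
            return generator.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Returns a fresh random IV followed by ciphertext and tag (layout assumed for this sketch). */
    static byte[] encrypt(SecretKey key, byte[] plaintext) {
        try {
            byte[] iv = new byte[IV_LENGTH_BYTES];
            new SecureRandom().nextBytes(iv);
            Cipher cipher = Cipher.getInstance(TRANSFORMATION);
            cipher.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(GCM_TAG_LENGTH_BITS, iv));
            byte[] ciphertext = cipher.doFinal(plaintext);
            byte[] out = new byte[iv.length + ciphertext.length];
            System.arraycopy(iv, 0, out, 0, iv.length);
            System.arraycopy(ciphertext, 0, out, iv.length, ciphertext.length);
            return out;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Reads the IV from the first bytes, then decrypts and verifies the authentication tag. */
    static byte[] decrypt(SecretKey key, byte[] ivAndCiphertext) {
        try {
            Cipher cipher = Cipher.getInstance(TRANSFORMATION);
            cipher.init(Cipher.DECRYPT_MODE, key,
                    new GCMParameterSpec(GCM_TAG_LENGTH_BITS, ivAndCiphertext, 0, IV_LENGTH_BYTES));
            return cipher.doFinal(ivAndCiphertext, IV_LENGTH_BYTES, ivAndCiphertext.length - IV_LENGTH_BYTES);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        SecretKey key = newKey();
        byte[] sealed = encrypt(key, "toolset credentials".getBytes(StandardCharsets.UTF_8));
        System.out.println(new String(decrypt(key, sealed), StandardCharsets.UTF_8));
    }
}
```

A new IV is generated for every call to `encrypt`, because reusing an IV with the same key breaks the guarantees of GCM.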

Storage Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| storage.provider | filesystem | Yes | Specifies blob storage provider. Supported providers: s3, aws-s3, azureblob, google-cloud-storage, filesystem. See examples in the sections below. |

| storage.endpoint | - | Optional | Specifies endpoint url for s3 compatible storages. Note: The setting might be required. That depends on a concrete provider. |

| storage.identity | - | Optional | Blob storage access key. Can be optional for filesystem, aws-s3, google-cloud-storage providers. Refer to sections in this document dedicated to specific storage providers. |

| storage.credential | - | Optional | Blob storage secret key. Can be optional for filesystem, aws-s3, google-cloud-storage providers. |

| storage.bucket | - | No | Blob storage bucket. |

| storage.overrides.* | - | No | Key-value pairs to override storage settings. * might be any specific blob storage setting to be overridden. Refer to examples in the sections below. |

| storage.createBucket | false | No | Indicates whether bucket should be created on start-up. |

| storage.prefix | - | No | Base prefix for all stored resources. Its purpose is to allow the same bucket to be used for different environments, e.g. dev, prod, pre-prod. Must not contain path separators or any invalid chars. |

| storage.maxUploadedFileSize | 536870912 | No | Maximum size in bytes of an uploaded file. If the size of an uploaded file exceeds the limit, the server returns HTTP code 413. |

Encryption Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| encryption.secret | - | No | Secret used for AES encryption of a prefix to the bucket blob storage. The value should be a randomly generated string. |

| encryption.key | - | No | Key used for AES encryption of a prefix to the bucket blob storage. The value should be a randomly generated string. |

Resources Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| resources.maxSize | 67108864 | No | Max allowed size in bytes for a resource. |

| resources.maxSizeToCache | 1048576 | No | Max size in bytes for a resource to cache in Redis. |

| resources.syncPeriod | 60000 | No | How frequently, in milliseconds, to check for resources to sync. |

| resources.syncDelay | 120000 | No | Delay in milliseconds for a resource to be written back in object storage after last modification. |

| resources.syncBatch | 4096 | No | How many resources to sync in one go. |

| resources.cacheExpiration | 300000 | No | Expiration in milliseconds for synced resources in Redis. |

| resources.compressionMinSize | 256 | No | Compress a resource with gzip if its size in bytes is greater than or equal to this value. |

| resources.resourceTypesExpiration | - | No | Define expiration time per resource type in milliseconds. |

| resources.resourceTypesExpiration.FILE | 300000 | No | Define expiration time for files. |

| resources.resourceTypesExpiration.CONVERSATION | 300000 | No | Define expiration time for conversations. |

| resources.resourceTypesExpiration.PROMPT | 300000 | No | Define expiration time for prompts. |

| resources.resourceTypesExpiration.APPLICATION | Infinity | No | Define expiration time for applications. |

| resources.resourceTypesExpiration.TOOL_SET | Infinity | No | Define expiration time for toolsets. |
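The `resources.compressionMinSize` rule is a simple size threshold. A minimal sketch with the standard `java.util.zip` classes might look like this. `ResourceCompressionSketch` is a hypothetical class, and recognising compressed bodies by the gzip magic bytes is an assumption made to keep the example short; a real store would more likely record an explicit flag next to the resource.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.zip.GZIPOutputStream;

/** Hypothetical sketch: gzip a resource body only when it reaches the configured minimum size. */
final class ResourceCompressionSketch {
    static final int COMPRESSION_MIN_SIZE = 256; // default of resources.compressionMinSize

    private ResourceCompressionSketch() {}

    static byte[] maybeCompress(byte[] body, int minSize) {
        if (body.length < minSize) {
            return body; // too small, stored as-is
        }
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        try (GZIPOutputStream gzip = new GZIPOutputStream(buffer)) {
            gzip.write(body);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return buffer.toByteArray();
    }

    /** Checks for the two-byte gzip header (0x1F 0x8B). */
    static boolean isGzip(byte[] data) {
        return data.length >= 2 && (data[0] & 0xFF) == 0x1F && (data[1] & 0xFF) == 0x8B;
    }

    public static void main(String[] args) {
        byte[] small = new byte[255];
        byte[] large = new byte[256];
        System.out.println(isGzip(maybeCompress(small, COMPRESSION_MIN_SIZE))); // false
        System.out.println(isGzip(maybeCompress(large, COMPRESSION_MIN_SIZE))); // true
    }
}
```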

Redis Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| redis.singleServerConfig.address | - | Yes | Redis single server addresses, e.g. "redis://host:port". Either singleServerConfig or clusterServersConfig must be provided. |

| redis.clusterServersConfig.nodeAddresses | - | Yes | Json array with Redis cluster server addresses, e.g. ["redis://host1:port1","redis://host2:port2"]. Either singleServerConfig or clusterServersConfig must be provided. |

| redis.provider.* | - | No | Provider specific settings |

| redis.provider.name | - | Yes | Provider name. The valid values are aws-elasti-cache (see instructions), gcp-memory-store (see instructions), azure-redis-cache (see instructions). |

| redis.provider.userId | - | Yes | IAM-enabled user ID. Note: Applies to aws-elasti-cache. |

| redis.provider.accountName | - | Yes | The resource name of the service account for which the credentials are requested, in the following format: projects/-/serviceAccounts/{ACCOUNT_EMAIL_OR_UNIQUEID}. The - wildcard character is required; replacing it with a project ID is invalid. Note: Applies to gcp-memory-store. |

| redis.provider.region | - | Yes | Geo region where the cache is located. Note: Applies to aws-elasti-cache. |

| redis.provider.clusterName | - | Yes | Redis cluster name. Note: Applies to aws-elasti-cache. |

| redis.provider.serverless | - | Yes | The flag indicates if the cache is serverless. Note: Applies to aws-elasti-cache. |

Access Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| access.admin.rules | - | No | Matches claims from identity providers against the rules to determine whether a user is allowed to perform admin actions (READ and WRITE access to any resource, approving publication requests from DIAL users). Configuration example for DIAL Core: "access": {"admin": {"rules": [{"function": "EQUAL","source": "roles","targets": ["admin"]}]}} where function is a matching function, one of TRUE (any user is admin), FALSE (no one is admin), EQUAL, CONTAIN, REGEX; source is the path to the claim in the JWT token payload that is evaluated against the targets; targets is an array of values that the system checks for in the source claim. |

| access.createCodeAppRoles | - | No | The list of user roles allowed to create custom code applications or run the code interpreter. Note: Calls by per-request key are permitted even if the originator doesn't have permissions. |
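One possible reading of an `access.admin.rules` entry is sketched below. `AdminRuleSketch` is a hypothetical class; the function names come from the table, but their exact semantics are assumptions here: EQUAL is treated as "some claim value equals some target", CONTAIN as a substring check, and REGEX as a full-string regular expression match.

```java
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical sketch of evaluating one admin rule against the values of the source claim. */
final class AdminRuleSketch {
    enum Function { TRUE, FALSE, EQUAL, CONTAIN, REGEX }

    private AdminRuleSketch() {}

    static boolean matches(Function function, List<String> claimValues, List<String> targets) {
        switch (function) {
            case TRUE:
                return true;  // any user is admin
            case FALSE:
                return false; // no one is admin
            case EQUAL:
                // Assumed: some claim value equals some target.
                return claimValues.stream().anyMatch(targets::contains);
            case CONTAIN:
                // Assumed: some claim value contains some target as a substring.
                return claimValues.stream()
                        .anyMatch(value -> targets.stream().anyMatch(value::contains));
            case REGEX:
                // Assumed: some claim value fully matches some target pattern.
                return claimValues.stream()
                        .anyMatch(value -> targets.stream().anyMatch(target -> Pattern.matches(target, value)));
            default:
                return false;
        }
    }

    public static void main(String[] args) {
        // Mirrors the configuration example: function EQUAL, source roles, targets ["admin"].
        List<String> rolesClaim = List.of("user", "admin");
        System.out.println(matches(Function.EQUAL, rolesClaim, List.of("admin"))); // true
    }
}
```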

Applications Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| applications.includeCustomApps | false | No | The flag indicates whether applications should be included into openai listing (required for Code Apps, Custom Apps, Quick Apps, etc) |

| applications.controllerEndpoint | - | No | The endpoint to Application Controller Web Service that manages deployments for applications with functions |

| applications.controllerTimeout | 240000 | No | The timeout of operations to Application Controller Web Service |

Code Interpreter Configurations

| Setting | Default | Required | Description |

|---|---|---|---|

| codeInterpreter.sessionImage | - | No | The code interpreter session image to use |

| codeInterpreter.sessionProxyUrl | - | No | The code interpreter will be deployed as a pod instead of knative deployment and all requests will be proxied through nginx proxy |

| codeInterpreter.sessionTtl | 600000 | No | The session time to live after the last API call |

| codeInterpreter.checkPeriod | 10000 | No | The interval at which to check active sessions for expiration |

| codeInterpreter.checkSize | 256 | No | The maximum number of active sessions to check in a single check |

DIAL Core stores user data in the following storages:

- Blob Storage keeps permanent data.

- Redis keeps volatile in-memory data for fast access.

[!NOTE] Refer to Storage Requirements to learn more.

[!NOTE] Dynamic settings are stored in JSON files, specified via "config.files" static setting, and reloaded at interval, specified via "config.reload" static setting. Refer to example.

Dynamic settings can include the following parameters:

| Parameter | Description |

|---|---|

| routes | A list of registered routes in DIAL Core. Refer to Routes to see dynamic settings. |

| interceptors | A list of deployed DIAL Interceptors and their parameters. Refer to Interceptors to see dynamic settings. |

| globalInterceptors | A list of interceptors to be executed for any deployment on chat completion request. Refer to Interceptors to learn more. |

| applications | A list of deployed applications and their parameters. Refer to Applications to see dynamic settings. |

| models | A list of deployed models and their parameters. Refer to Models to see dynamic settings. |

| toolsets | A list of available toolsets and their parameters. Refer to Toolsets to see dynamic settings. |

| roles | API key or JWT roles and their parameters. Refer to Roles to see dynamic settings. |

| keys | API keys and their parameters. Refer to API Keys to see dynamic settings. |

| retriableErrorCodes | List of Retriable Error Codes for handling outages at LLM Providers. This list extends the existing error codes (429, 502, 503, 504) but doesn't override them. |

| applicationTypeSchemas | Map of application schemas where the key is the schema ID and the value is the schema itself in JSON format. All schemas must conform to the root schema https://dial.epam.com/application_type_schemas/schema#. See link |
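The `retriableErrorCodes` row says that configured codes extend the built-in ones rather than replace them. As a minimal sketch, that amounts to a set union. `RetriableCodesSketch` is a hypothetical class, and the configured value in `main` is made up.

```java
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical sketch: configured retriable codes extend the built-in ones, never replace them. */
final class RetriableCodesSketch {
    static final Set<Integer> DEFAULT_CODES = Set.of(429, 502, 503, 504);

    private RetriableCodesSketch() {}

    static Set<Integer> effectiveCodes(Set<Integer> configured) {
        Set<Integer> result = new TreeSet<>(DEFAULT_CODES);
        result.addAll(configured);
        return result;
    }

    public static void main(String[] args) {
        System.out.println(effectiveCodes(Set.of(500))); // [429, 500, 502, 503, 504]
    }
}
```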

Copyright (C) 2024 EPAM Systems

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-dial-core

Similar Open Source Tools

ai-dial-core

AI DIAL Core is an HTTP Proxy that provides a unified API to different chat completion and embedding models, assistants, and applications. It is written in Java 17 and built on Eclipse Vert.x. The core functionality includes handling static and dynamic settings, deployment on Kubernetes using Helm charts, and storing user data in Blob Storage and Redis. It supports various identity providers, storage providers like AWS S3, Google Cloud Storage, and Azure Blob Store, and features like AI DIAL Addons, Interceptors, Assistants, Applications, and Models with customizable parameters and configurations.

vscode-unify-chat-provider

The 'vscode-unify-chat-provider' repository is a tool that integrates multiple LLM API providers into VS Code's GitHub Copilot Chat using the Language Model API. It offers free tier access to mainstream models, perfect compatibility with major LLM API formats, deep adaptation to API features, best performance with built-in parameters, out-of-the-box configuration, import/export support, great UX, and one-click use of various models. The tool simplifies model setup, migration, and configuration for users, providing a seamless experience within VS Code for utilizing different language models.

llm-graph-builder

Knowledge Graph Builder App is a tool designed to convert PDF documents into a structured knowledge graph stored in Neo4j. It utilizes OpenAI's GPT/Diffbot LLM to extract nodes, relationships, and properties from PDF text content. Users can upload files from local machine or S3 bucket, choose LLM model, and create a knowledge graph. The app integrates with Neo4j for easy visualization and querying of extracted information.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

RouterArena

RouterArena is an open evaluation platform and leaderboard for LLM routers, aiming to provide a standardized evaluation framework for assessing the performance of routers in terms of accuracy, cost, and other metrics. It offers diverse data coverage, comprehensive metrics, automated evaluation, and a live leaderboard to track router performance. Users can evaluate their routers by following setup steps, obtaining routing decisions, running LLM inference, and evaluating router performance. Contributions and collaborations are welcome, and users can submit their routers for evaluation to be included in the leaderboard.

PredictorLLM

PredictorLLM is an advanced trading agent framework that utilizes large language models to automate trading in financial markets. It includes a profiling module to establish agent characteristics, a layered memory module for retaining and prioritizing financial data, and a decision-making module to convert insights into trading strategies. The framework mimics professional traders' behavior, surpassing human limitations in data processing and continuously evolving to adapt to market conditions for superior investment outcomes.

DB-GPT-Hub

DB-GPT-Hub is an experimental project leveraging Large Language Models (LLMs) for Text-to-SQL parsing. It includes stages like data collection, preprocessing, model selection, construction, and fine-tuning of model weights. The project aims to enhance Text-to-SQL capabilities, reduce model training costs, and enable developers to contribute to improving Text-to-SQL accuracy. The ultimate goal is to achieve automated question-answering based on databases, allowing users to execute complex database queries using natural language descriptions. The project has successfully integrated multiple large models and established a comprehensive workflow for data processing, SFT model training, prediction output, and evaluation.

GenAIComps

GenAIComps is an initiative aimed at building enterprise-grade Generative AI applications using a microservice architecture. It simplifies the scaling and deployment process for production, abstracting away infrastructure complexities. GenAIComps provides a suite of containerized microservices that can be assembled into a mega-service tailored for real-world Enterprise AI applications. The modular approach of microservices allows for independent development, deployment, and scaling of individual components, promoting modularity, flexibility, and scalability. The mega-service orchestrates multiple microservices to deliver comprehensive solutions, encapsulating complex business logic and workflow orchestration. The gateway serves as the interface for users to access the mega-service, providing customized access based on user requirements.

TinyLLM

TinyLLM is a project that helps build a small locally hosted language model with a web interface using consumer-grade hardware. It supports multiple language models, builds a local OpenAI API web service, and serves a Chatbot web interface with customizable prompts. The project requires specific hardware and software configurations for optimal performance. Users can run a local language model using inference servers like vLLM, llama-cpp-python, and Ollama. The Chatbot feature allows users to interact with the language model through a web-based interface, supporting features like summarizing websites, displaying news headlines, stock prices, weather conditions, and using vector databases for queries.

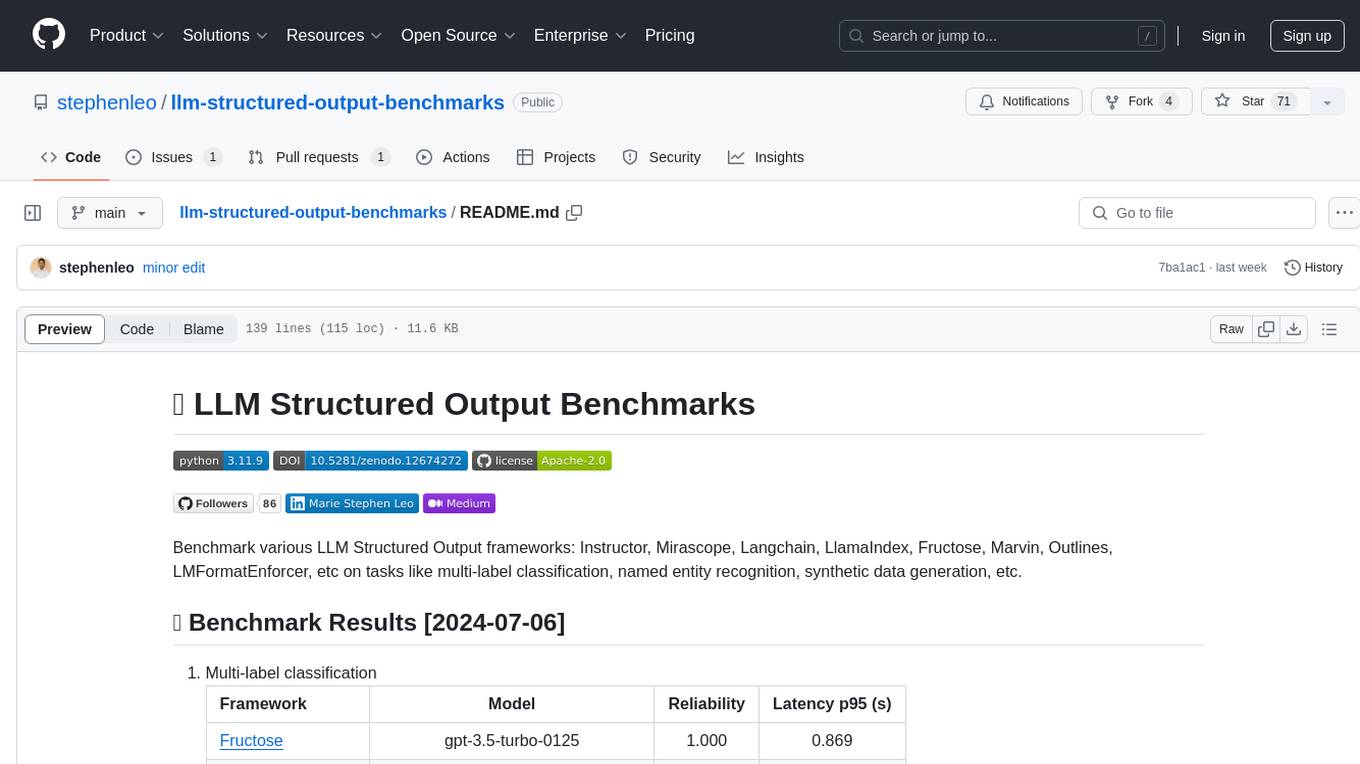

llm-structured-output-benchmarks

Benchmark various LLM Structured Output frameworks like Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, LMFormatEnforcer, etc on tasks like multi-label classification, named entity recognition, synthetic data generation. The tool provides benchmark results, methodology, instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and feedback request.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

cambrian

Cambrian-1 is a fully open project focused on exploring multimodal Large Language Models (LLMs) with a vision-centric approach. It offers competitive performance across various benchmarks with models at different parameter levels. The project includes training configurations, model weights, instruction tuning data, and evaluation details. Users can interact with Cambrian-1 through a Gradio web interface for inference. The project is inspired by LLaVA and incorporates contributions from Vicuna, LLaMA, and Yi. Cambrian-1 is licensed under Apache 2.0 and utilizes datasets and checkpoints subject to their respective original licenses.

openkore

OpenKore is a custom client and intelligent automated assistant for Ragnarok Online. It is a free, open source, and cross-platform program (Linux, Windows, and MacOS are supported). To run OpenKore, you need to download and extract it or clone the repository using Git. Configure OpenKore according to the documentation and run openkore.pl to start. The tool provides a FAQ section for troubleshooting, guidelines for reporting issues, and information about botting status on official servers. OpenKore is developed by a global team, and contributions are welcome through pull requests. Various community resources are available for support and communication. Users are advised to comply with the GNU General Public License when using and distributing the software.

llm-compression-intelligence

This repository presents the findings of the paper "Compression Represents Intelligence Linearly". The study reveals a strong linear correlation between the intelligence of LLMs, as measured by benchmark scores, and their ability to compress external text corpora. Compression efficiency, derived from raw text corpora, serves as a reliable evaluation metric that is linearly associated with model capabilities. The repository includes the compression corpora used in the paper, code for computing compression efficiency, and data collection and processing pipelines.

For similar tasks

alog

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

crabml

Crabml is a llama.cpp compatible AI inference engine written in Rust, designed for efficient inference on various platforms with WebGPU support. It focuses on running inference tasks with SIMD acceleration and minimal memory requirements, supporting multiple models and quantization methods. The project is hackable, embeddable, and aims to provide high-performance AI inference capabilities.

chatllm.cpp

ChatLLM.cpp is a pure C++ implementation tool for real-time chatting with RAG on your computer. It supports inference of various models ranging from less than 1B to more than 300B. The tool provides accelerated memory-efficient CPU inference with quantization, optimized KV cache, and parallel computing. It allows streaming generation with a typewriter effect and continuous chatting with virtually unlimited content length. ChatLLM.cpp also offers features like Retrieval Augmented Generation (RAG), LoRA, Python/JavaScript/C bindings, web demo, and more possibilities. Users can clone the repository, quantize models, build the project using make or CMake, and run quantized models for interactive chatting.

ai-dial-core

AI DIAL Core is an HTTP Proxy that provides a unified API to different chat completion and embedding models, assistants, and applications. It is written in Java 17 and built on Eclipse Vert.x. The core functionality includes handling static and dynamic settings, deployment on Kubernetes using Helm charts, and storing user data in Blob Storage and Redis. It supports various identity providers, storage providers like AWS S3, Google Cloud Storage, and Azure Blob Store, and features like AI DIAL Addons, Interceptors, Assistants, Applications, and Models with customizable parameters and configurations.

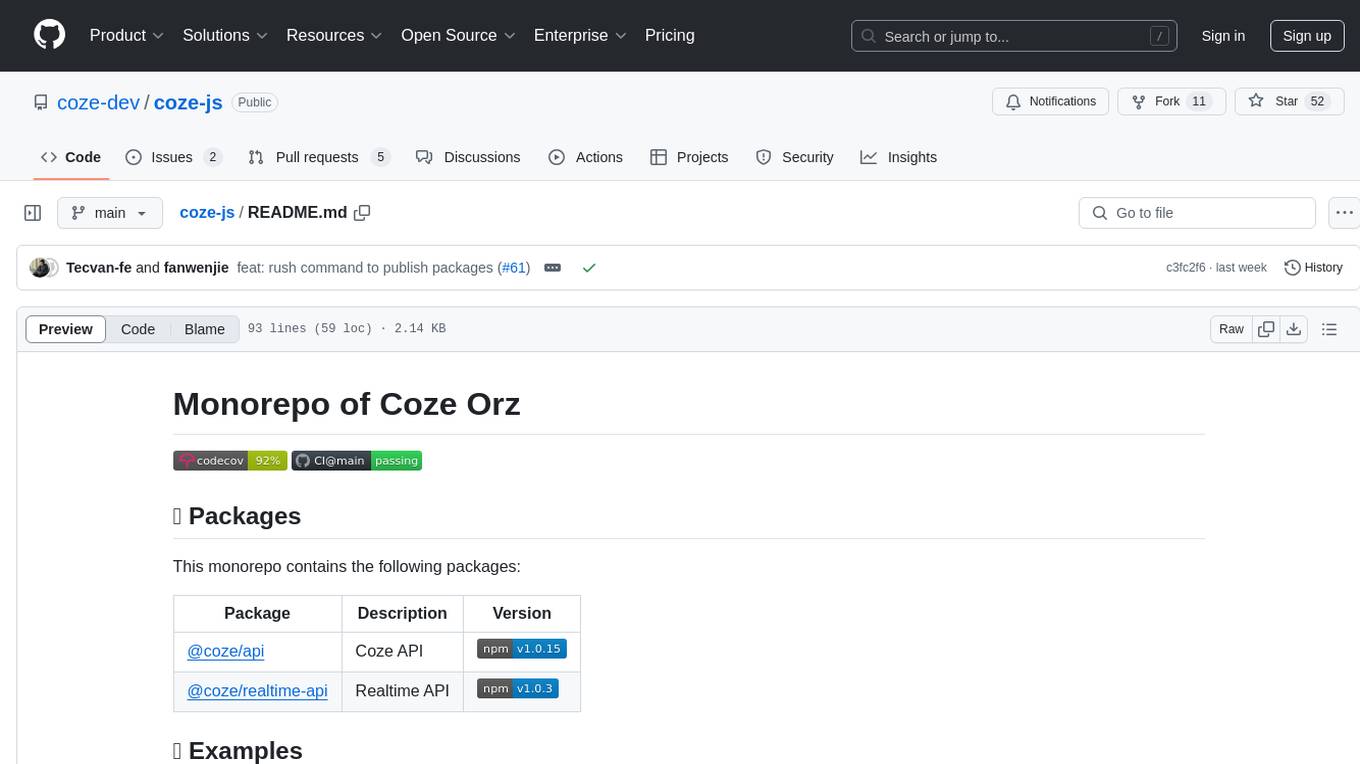

coze-js

Coze-js is a monorepo containing packages for Coze API and Realtime API. It provides usage examples for Node.js and React Web, as well as full console and sample call up demos. The tool requires Node.js 18+, pnpm 9.12.0, and Rush 5.140.0 for installation. Developers can start developing projects within the repository by following the provided steps. Each package in the monorepo can be developed and published independently, with documentation on contributing guidelines and publishing. The tool is licensed under MIT.

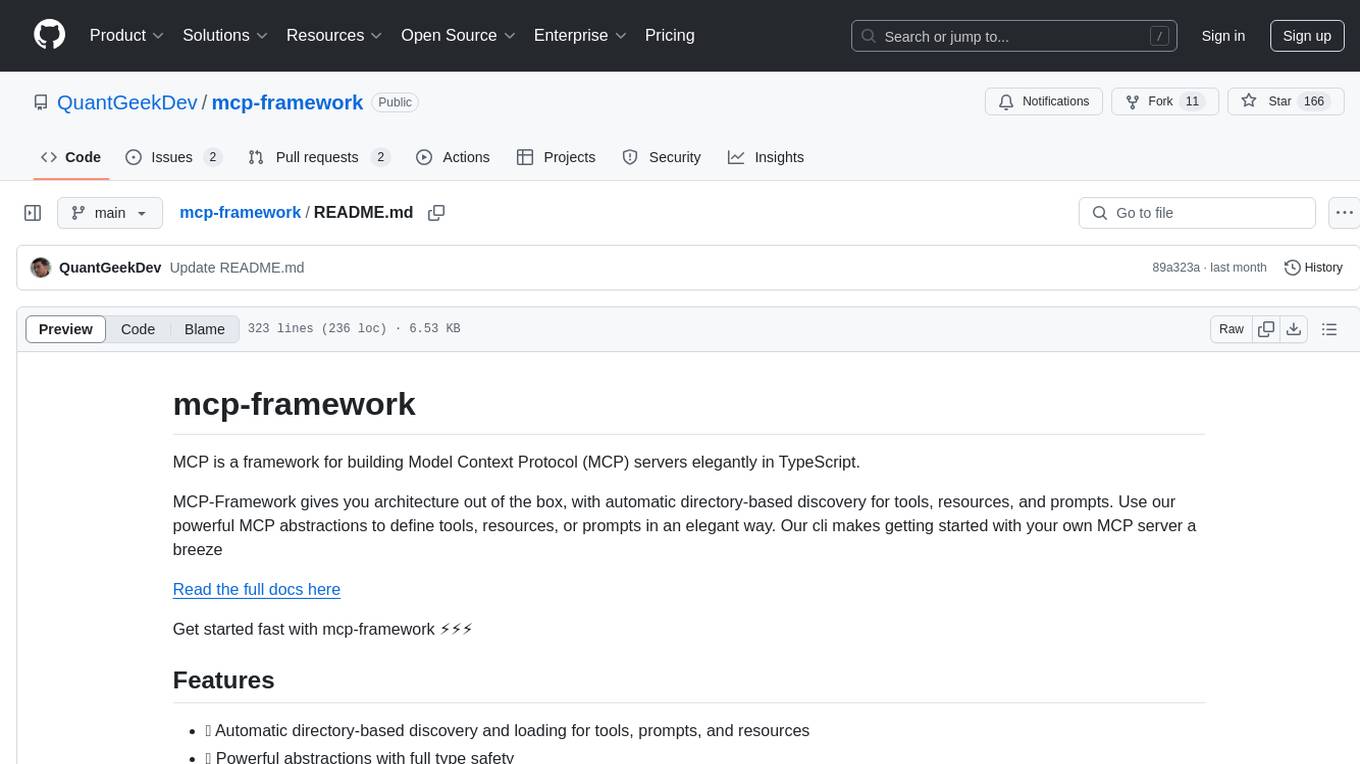

mcp-framework

MCP-Framework is a TypeScript framework for building Model Context Protocol (MCP) servers with automatic directory-based discovery for tools, resources, and prompts. It provides powerful abstractions, simple server setup, and a CLI for rapid development and project scaffolding.

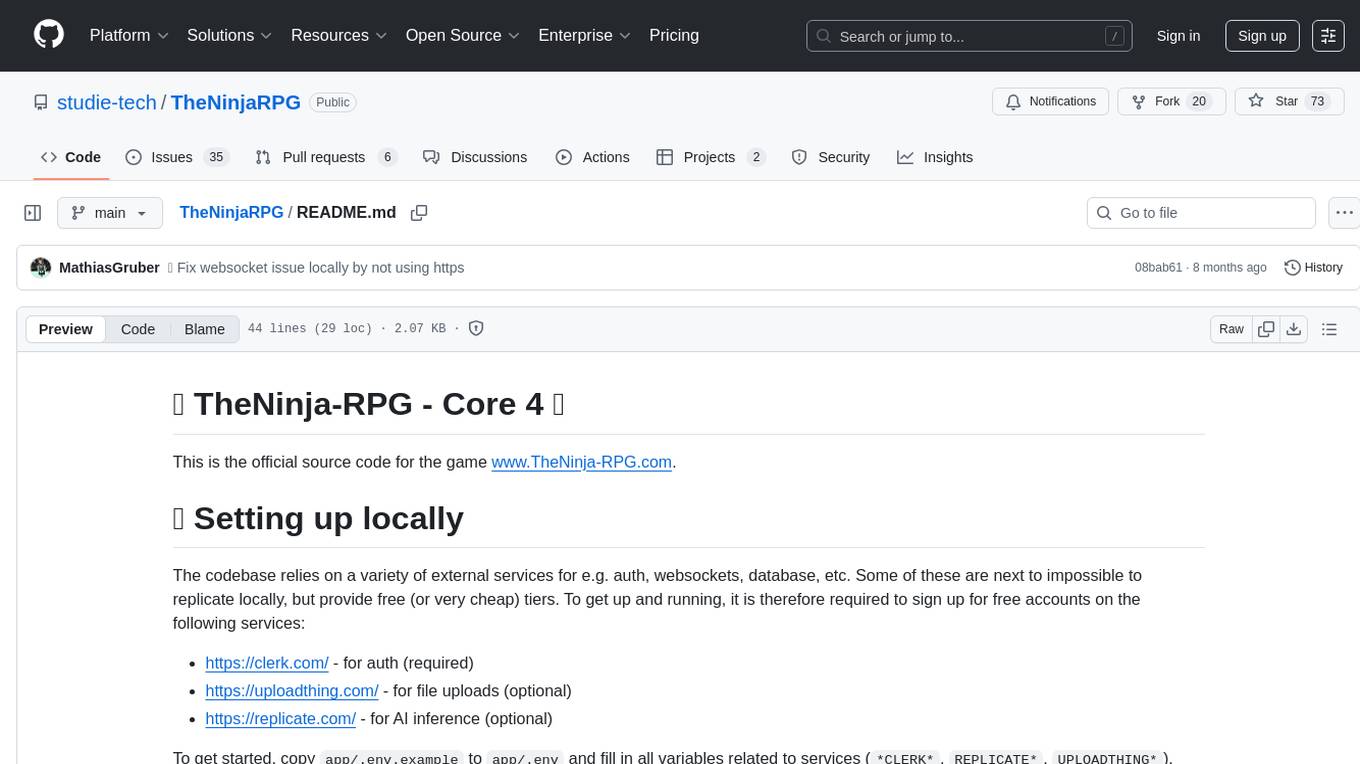

TheNinjaRPG

TheNinja-RPG is the official source code for the game www.TheNinja-RPG.com. It relies on external services for authentication, websockets, database, etc. Users need to sign up for free accounts on services like Clerk, UploadThing, and Replicate. The codebase provides various 'make' commands for setup, building, and database management. The project does not have a specific license and is under exclusive copyright protection.

langstream

LangStream is a tool for natural language processing tasks, providing a CLI for easy installation and usage. Users can try sample applications like Chat Completions and create their own applications using the developer documentation. It runs on Kubernetes for production-ready deployment, supporting various Kubernetes distributions and external components such as an Apache Kafka or Apache Pulsar cluster. Users can deploy LangStream locally using minikube and manage the cluster with mini-langstream. Development requirements include Docker, Java 17, Git, Python 3.11+, and pip, with the option to test local code changes using mini-langstream.

For similar jobs

resonance

Resonance is a framework designed to facilitate interoperability and messaging between services in your infrastructure and beyond. It provides AI capabilities and takes full advantage of asynchronous PHP, built on top of Swoole. With Resonance, you can:

* Chat with Open-Source LLMs: Create prompt controllers to directly answer user's prompts. LLM takes care of determining user's intention, so you can focus on taking appropriate action.
* Asynchronous Where it Matters: Respond asynchronously to incoming RPC or WebSocket messages (or both combined) with little overhead. You can set up all the asynchronous features using attributes. No elaborate configuration is needed.
* Simple Things Remain Simple: Writing HTTP controllers is similar to how it's done in the synchronous code. Controllers have new exciting features that take advantage of the asynchronous environment.
* Consistency is Key: You can keep the same approach to writing software no matter the size of your project. There are no growing central configuration files or service dependencies registries. Every relation between code modules is local to those modules.
* Promises in PHP: Resonance provides a partial implementation of Promise/A+ spec to handle various asynchronous tasks.
* GraphQL Out of the Box: You can build elaborate GraphQL schemas by using just the PHP attributes. Resonance takes care of reusing SQL queries and optimizing the resources' usage. All fields can be resolved asynchronously.

aiogram_bot_template

Aiogram bot template is a boilerplate for creating Telegram bots using Aiogram framework. It provides a solid foundation for building robust and scalable bots with a focus on code organization, database integration, and localization.

pluto

Pluto is a development tool dedicated to helping developers **build cloud and AI applications more conveniently**, resolving issues such as the challenging deployment of AI applications and open-source models. Developers are able to write applications in familiar programming languages like **Python and TypeScript**, **directly defining and utilizing the cloud resources necessary for the application within their code base**, such as AWS SageMaker, DynamoDB, and more. Pluto automatically deduces the infrastructure resource needs of the app through **static program analysis** and proceeds to create these resources on the specified cloud platform, **simplifying the resources creation and application deployment process**.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

gcloud-aio

This repository contains the shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

aioconsole

aioconsole is a Python package that provides an asynchronous console and interfaces for asyncio. It offers asynchronous equivalents to input, print, exec, and code.interact; an interactive loop running the asynchronous Python console; customization and running of command line interfaces using argparse; stream support to serve interfaces instead of using standard streams; and the apython script to access asyncio code at runtime without modifying the sources. The package requires Python version 3.8 or higher and can be installed from PyPI or GitHub. It allows users to run Python files or modules with a modified asyncio policy, replacing the default event loop with an interactive loop. aioconsole is useful for scenarios where users need to interact with asyncio code in a console environment.

aiosqlite

aiosqlite is a Python library that provides a friendly, async interface to SQLite databases. It replicates the standard sqlite3 module but with async versions of all the standard connection and cursor methods, along with context managers for automatically closing connections and cursors. It allows interaction with SQLite databases on the main AsyncIO event loop without blocking execution of other coroutines while waiting for queries or data fetches. The library also replicates most of the advanced features of sqlite3, such as row factories and total changes tracking.
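
The non-blocking behavior described above can be illustrated with a rough sketch that uses only the Python standard library. This is not aiosqlite's API: it swaps in `asyncio.to_thread` with the built-in `sqlite3` module to show the general idea of keeping blocking database work off the event loop. The `run_query` helper and the `notes` table are hypothetical names chosen for illustration.

```python
import asyncio
import sqlite3


def run_query(db_path: str) -> list[tuple]:
    """Hypothetical helper: do all blocking SQLite work in one worker thread."""
    # Open, use, and close the connection inside the same thread, because
    # sqlite3 connections are bound to their creating thread by default.
    conn = sqlite3.connect(db_path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS notes (id INTEGER PRIMARY KEY, body TEXT)"
        )
        conn.execute("INSERT INTO notes (body) VALUES (?)", ("hello",))
        conn.commit()
        return conn.execute("SELECT id, body FROM notes").fetchall()
    finally:
        conn.close()


async def main() -> list[tuple]:
    # asyncio.to_thread runs the blocking function in a worker thread, so
    # other coroutines on the event loop can keep running in the meantime.
    return await asyncio.to_thread(run_query, ":memory:")


rows = asyncio.run(main())
print(rows)
```

A library such as aiosqlite wraps this kind of hand-off behind awaitable connection and cursor methods, so application code does not have to manage the worker thread itself.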