openlit

Open source platform for AI Engineering: OpenTelemetry-native LLM Observability, GPU Monitoring, Guardrails, Evaluations, Prompt Management, Vault, Playground. 🚀💻 Integrates with 50+ LLM Providers, VectorDBs, Agent Frameworks and GPUs.

Stars: 2246

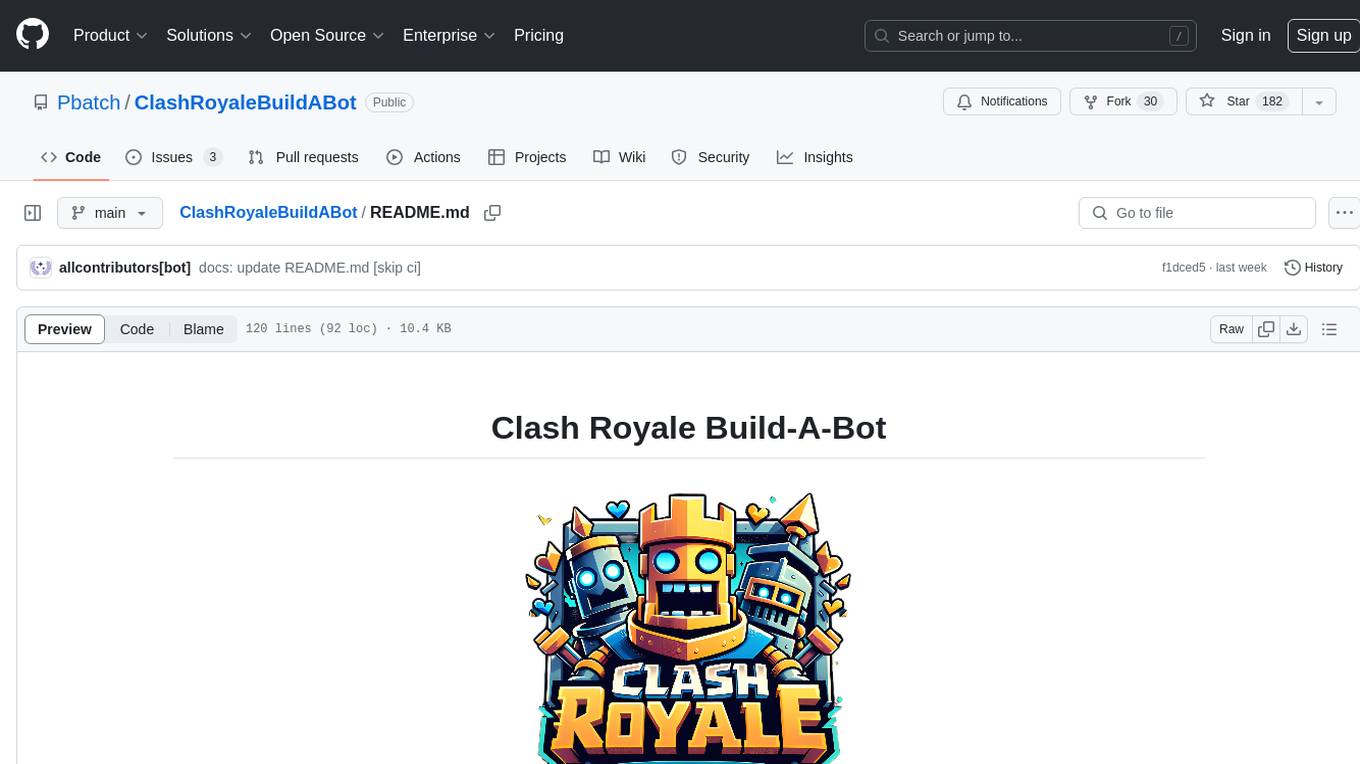

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

README:

https://github.com/user-attachments/assets/6909bf4a-f5b4-4060-bde3-95e91fa36168

OpenLIT allows you to simplify your AI development workflow, especially for Generative AI and LLMs. It streamlines essential tasks like experimenting with LLMs, organizing and versioning prompts, and securely handling API keys. With just one line of code, you can enable OpenTelemetry-native observability, offering full-stack monitoring that includes LLMs, vector databases, and GPUs. This enables developers to confidently build AI features and applications, transitioning smoothly from testing to production.

This project proudly follows and maintains the Semantic Conventions with the OpenTelemetry community, consistently updating to align with the latest standards in Observability.

- 📈 Analytics Dashboard: Monitor your AI application's health and performance with detailed dashboards that track metrics, costs, and user interactions, providing a clear view of overall efficiency.
- 🔌 OpenTelemetry-native Observability SDKs: Vendor-neutral SDKs to send traces and metrics to your existing observability tools.
- 💲 Cost Tracking for Custom and Fine-Tuned Models: Tailor cost estimations for specific models using custom pricing files for precise budgeting.
- 🐛 Exceptions Monitoring Dashboard: Quickly spot and resolve issues by tracking common exceptions and errors with a dedicated monitoring dashboard.
- 💭 Prompt Management: Manage and version prompts using Prompt Hub for consistent and easy access across applications.
- 🔑 API Keys and Secrets Management: Securely handle your API keys and secrets centrally, avoiding insecure practices.
- 🎮 Experiment with different LLMs: Use OpenGround to explore, test and compare various LLMs side by side.
- 🚀 Fleet Hub for OpAMP Management: Centrally manage and monitor OpenTelemetry Collectors across your infrastructure using the OpAMP (Open Agent Management Protocol) with secure TLS communication.
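As a rough illustration of the cost-tracking feature listed above, the sketch below estimates the cost of one request from its token counts and a per-model pricing table. The table layout, the model name `my-fine-tuned-model`, the rates, and the `estimate_cost` helper are all hypothetical; they are not OpenLIT's pricing-file format or API.

```python
from decimal import Decimal

# Hypothetical pricing table: USD per 1,000 tokens (illustrative values only).
PRICING = {
    "my-fine-tuned-model": {
        "input": Decimal("0.0030"),
        "output": Decimal("0.0060"),
    },
}


def estimate_cost(model, input_tokens, output_tokens, pricing=PRICING):
    """Return the estimated USD cost of one request as a Decimal."""
    try:
        rates = pricing[model]
    except KeyError:
        raise ValueError(f"no pricing entry for model {model!r}") from None
    # Rates are per 1,000 tokens, so scale the weighted token sum down.
    total = rates["input"] * input_tokens + rates["output"] * output_tokens
    return total / 1000


cost = estimate_cost("my-fine-tuned-model", input_tokens=1200, output_tokens=300)
print(f"Estimated cost: ${cost}")
```

`Decimal` is used instead of floats so that small per-token rates do not accumulate rounding error when many requests are summed.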

    flowchart TB;
    subgraph " "
        direction LR;
        subgraph " "
            direction LR;
            OpenLIT_SDK[OpenLIT SDK] -->|Sends Traces & Metrics| OTC[OpenTelemetry Collector];
            OTC -->|Stores Data| ClickHouseDB[ClickHouse];
        end
        subgraph " "
            direction RL;
            OpenLIT_UI[OpenLIT] -->|Pulls Data| ClickHouseDB;
        end
    end

- Git Clone OpenLIT Repository

  Open your command line or terminal and run:

      git clone git@github.com:openlit/openlit.git

- Self-host using Docker

  Deploy and run OpenLIT with the following command:

      docker compose up -d

For instructions on installing in Kubernetes using Helm, refer to the Kubernetes Helm installation guide.

Open your command line or terminal and run:

    pip install openlit

For instructions on using the TypeScript SDK, visit the TypeScript SDK Installation guide.

Integrate OpenLIT into your AI applications by adding the following lines to your code.

    import openlit

    openlit.init()

Configure the telemetry data destination as follows:

| Purpose | Parameter/Environment Variable | For Sending to OpenLIT |
|---|---|---|
| Send data to an HTTP OTLP endpoint | `otlp_endpoint` or `OTEL_EXPORTER_OTLP_ENDPOINT` | `"http://127.0.0.1:4318"` |
| Authenticate telemetry backends | `otlp_headers` or `OTEL_EXPORTER_OTLP_HEADERS` | Not required by default |

💡 Info: If the `otlp_endpoint` or `OTEL_EXPORTER_OTLP_ENDPOINT` is not provided, the OpenLIT SDK will output traces directly to your console, which is recommended during the development phase.
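The lookup described above can be sketched in plain Python. This is a minimal illustration, not OpenLIT's implementation: the `resolve_telemetry_config` and `parse_otlp_headers` helpers are hypothetical, and the precedence (function argument first, then environment variable) is an assumption. The `key=value,key=value` header format follows the OpenTelemetry convention for `OTEL_EXPORTER_OTLP_HEADERS`.

```python
import os


def parse_otlp_headers(raw):
    """Parse 'key1=value1,key2=value2' into a dict, skipping malformed pairs."""
    headers = {}
    for pair in raw.split(","):
        # Split on the first '=' only, so values may themselves contain '='.
        key, sep, value = pair.partition("=")
        if sep and key.strip():
            headers[key.strip()] = value.strip()
    return headers


def resolve_telemetry_config(otlp_endpoint=None, otlp_headers=None, env=None):
    """Pick the exporter settings from arguments, then environment variables.

    Assumption: an explicit argument wins over the environment variable.
    With no endpoint from either source, fall back to console output.
    """
    env = os.environ if env is None else env
    endpoint = otlp_endpoint or env.get("OTEL_EXPORTER_OTLP_ENDPOINT")
    if otlp_headers is None:
        headers = parse_otlp_headers(env.get("OTEL_EXPORTER_OTLP_HEADERS", ""))
    elif isinstance(otlp_headers, str):
        headers = parse_otlp_headers(otlp_headers)
    else:
        headers = dict(otlp_headers)
    return {
        "exporter": "otlp" if endpoint else "console",
        "endpoint": endpoint,
        "headers": headers,
    }


# No endpoint anywhere: traces would go to the console.
print(resolve_telemetry_config(env={}))
# Endpoint taken from the environment variable.
print(resolve_telemetry_config(env={"OTEL_EXPORTER_OTLP_ENDPOINT": "http://127.0.0.1:4318"}))
```

Passing `env` explicitly keeps the helper easy to test without touching the real process environment.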

Initialize using Function Arguments

Add the following two lines to your application code:

    import openlit

    openlit.init(
        otlp_endpoint="http://127.0.0.1:4318",
    )

Initialize using Environment Variables

Add the following two lines to your application code:

    import openlit

    openlit.init()

Then, configure your OTLP endpoint using an environment variable:

    export OTEL_EXPORTER_OTLP_ENDPOINT="http://127.0.0.1:4318"

With the observability data now being collected and sent to OpenLIT, the next step is to visualize and analyze it to gain insights into your AI application's performance and behavior and to identify areas for improvement.

Just head over to OpenLIT at 127.0.0.1:3000 in your browser to start exploring. You can log in using the default credentials:

- Email: [email protected]
- Password: openlituser
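If the dashboard does not load, it can help to confirm that the services are actually listening. The sketch below is a hypothetical helper (`is_listening` is not part of OpenLIT) that attempts a plain TCP connection to the two local addresses used in this README. It only checks that the port accepts connections, not that the service behind it is healthy.

```python
import socket
from urllib.parse import urlsplit


def is_listening(url, timeout=1.0):
    """Return True if a TCP connection to the URL's host and port succeeds."""
    # Accept bare "host:port" strings by assuming an http scheme.
    parts = urlsplit(url if "://" in url else f"http://{url}")
    port = parts.port or (443 if parts.scheme == "https" else 80)
    try:
        with socket.create_connection((parts.hostname, port), timeout=timeout):
            return True
    except OSError:
        return False


# Addresses taken from this README: the OTLP HTTP endpoint and the OpenLIT UI.
targets = {
    "OTLP endpoint": "http://127.0.0.1:4318",
    "OpenLIT UI": "http://127.0.0.1:3000",
}
for name, url in targets.items():
    state = "reachable" if is_listening(url) else "not reachable"
    print(f"{name} ({url}): {state}")
```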

OpenLIT auto-instruments 44+ LLM providers, AI frameworks, and vector databases with a single line of code. Each integration produces OpenTelemetry-native traces and metrics. Click any card to view the integration docs.

| | | | |
|---|---|---|---|
| OpenAI | Anthropic | Cohere | Mistral AI |
| Groq | Google AI Studio | Together AI | Ollama |
| AWS Bedrock | Azure AI Inference | Vertex AI | vLLM |
| Reka | LiteLLM | Hugging Face | AI21 |
| GPT4All | PremAI | Sarvam AI | Julep |
| MultiOn |
| LangChain | LlamaIndex | CrewAI | Pydantic AI |
| Agno | Browser Use | Haystack | Letta |
| Mem0 | AG2 (AutoGen) | Controlflow | Crawl4AI |
| Dynamiq | OpenAI Agents | Firecrawl |
| Pinecone | ChromaDB | Qdrant | Milvus |
| Astra DB | PostgreSQL |
| ElevenLabs | AssemblyAI |

We are dedicated to continuously improving OpenLIT. Here's a look at what's been accomplished and what's on the horizon:

| Feature | Status |
|---|---|
| OpenTelemetry-native Observability SDK for Tracing and Metrics | ✅ Completed |
| OpenTelemetry-native GPU Monitoring | ✅ Completed |
| Exceptions and Error Monitoring | ✅ Completed |
| Prompt Hub for Managing and Versioning Prompts | ✅ Completed |
| OpenGround for Testing and Comparing LLMs | ✅ Completed |
| Vault for Central Management of LLM API Keys and Secrets | ✅ Completed |
| Cost Tracking for Custom Models | ✅ Completed |
| Real-Time Guardrails Implementation | ✅ Completed |
| Programmatic Evaluation for LLM Response | ✅ Completed |
| Fleet Hub for OpAMP Management | ✅ Completed |
| Auto-Evaluation Metrics Based on Usage | 🔜 Coming Soon |
| Human Feedback for LLM Events | 🔜 Coming Soon |
| Dataset Generation Based on LLM Events | 🔜 Coming Soon |
| Search over Traces | 🔜 Coming Soon |

Whether it's big or small, we love contributions 💚. Check out our Contribution guide to get started.

Unsure where to start? Here are a few ways to get involved:

- Join our Slack or Discord community to discuss ideas, share feedback, and connect with both our team and the wider OpenLIT community.

Your input helps us grow and improve, and we're here to support you every step of the way.

Connect with the OpenLIT community and maintainers for support, discussions, and updates:

- 🌟 If you like it, leave a star on our GitHub.

- 🌍 Join our Slack or Discord community for live interactions and questions.

- 🐞 Report bugs on our GitHub Issues to help us improve OpenLIT.

- 𝕏 Follow us on X for the latest updates and news.

OpenLIT is available under the Apache-2.0 license.

This project is proudly supported by:

Similar Open Source Tools

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

AI-HealthCare-Assistant

NearestDoctor is a full-stack, AI-powered healthcare web application that bridges the gap between patients and medical professionals. It combines machine learning, blockchain, facial recognition, and natural language processing into a seamless platform that covers the entire patient journey from first symptom to secured medical record. The platform offers features like AI symptom detection & chatbot, location-based doctor search, smart appointment scheduling, blockchain medical records, X-ray lung diagnosis, mental health test, doctor identity verification, multi-mode authentication, blogs & web scraping search, paramedical e-shop, AI voice assistant, and integrated payments. The architecture follows a microservices-inspired MERN architecture with React.js frontend, Node/Express REST API, Python Flask AI/ML microservices, MongoDB database, Ethereum smart contracts, TensorFlow for face recognition and X-ray diagnosis, Nanonets AI OCR API, Dialogflow for chatbot, Google Maps API, ALAN SDK for voice assistant, and Stripe API for payments.

ClashRoyaleBuildABot

Clash Royale Build-A-Bot is a project that allows users to build their own bot to play Clash Royale. It provides an advanced state generator that accurately returns detailed information using cutting-edge technologies. The project includes tutorials for setting up the environment, building a basic bot, and understanding state generation. It also offers updates such as replacing YOLOv5 with YOLOv8 unit model and enhancing performance features like placement and elixir management. The future roadmap includes plans to label more images of diverse cards, add a tracking layer for unit predictions, publish tutorials on Q-learning and imitation learning, release the YOLOv5 training notebook, implement chest opening and card upgrading features, and create a leaderboard for the best bots developed with this repository.

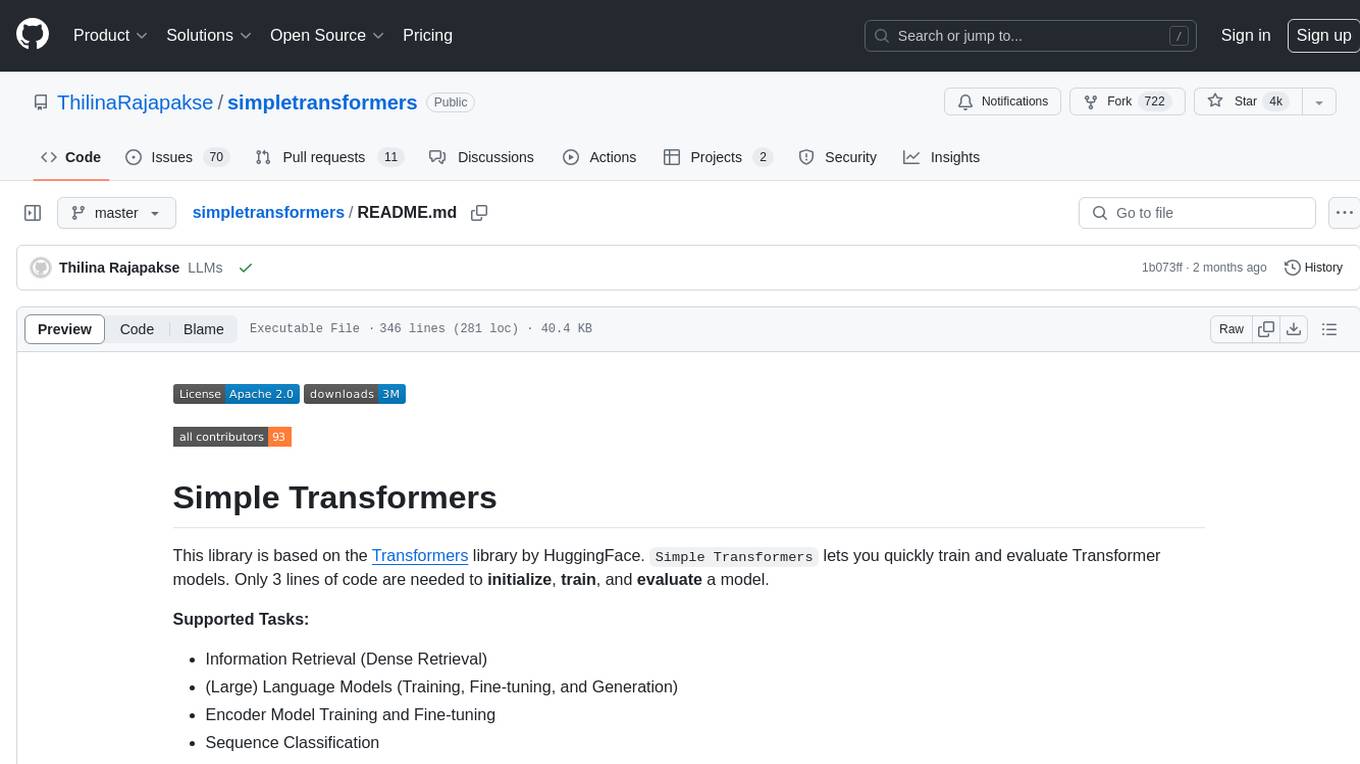

simpletransformers

Simple Transformers is a library based on the Transformers library by HuggingFace, allowing users to quickly train and evaluate Transformer models with only 3 lines of code. It supports various tasks such as Information Retrieval, Language Models, Encoder Model Training, Sequence Classification, Token Classification, Question Answering, Language Generation, T5 Model, Seq2Seq Tasks, Multi-Modal Classification, and Conversational AI.

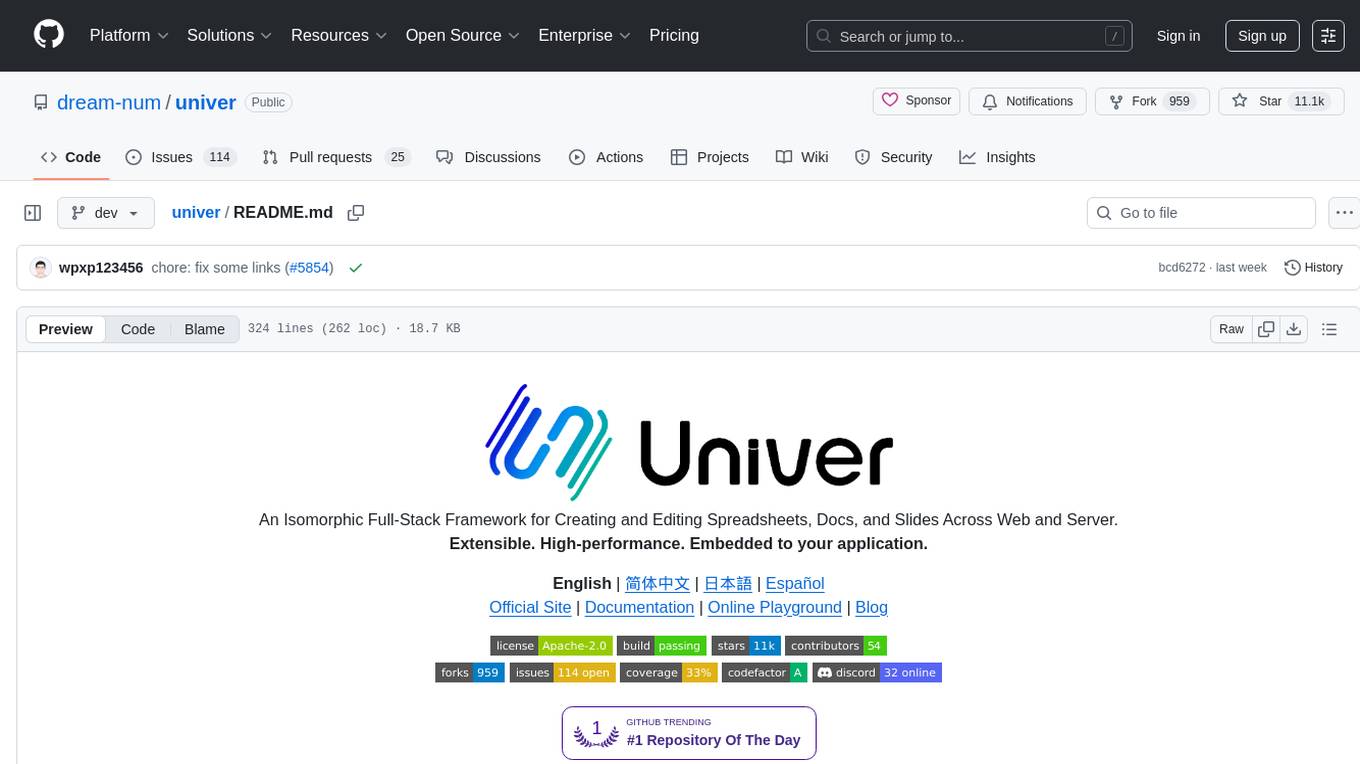

univer

Univer is an isomorphic full-stack framework designed for creating and editing spreadsheets, documents, and slides across web and server. It is highly extensible, high-performance, and can be embedded into applications. Univer offers a wide range of features including formulas, conditional formatting, data validation, collaborative editing, printing, import & export, and more. It supports multiple languages and provides a distraction-free editing experience with a clean interface. Univer is suitable for data analysts, software developers, project managers, content creators, and educators.

Jarvis

Jarvis is a powerful virtual AI assistant designed to simplify daily tasks through voice command integration. It features automation, device management, and personalized interactions, transforming technology engagement. Built using Python and AI models, it serves personal and administrative needs efficiently, making processes seamless and productive.

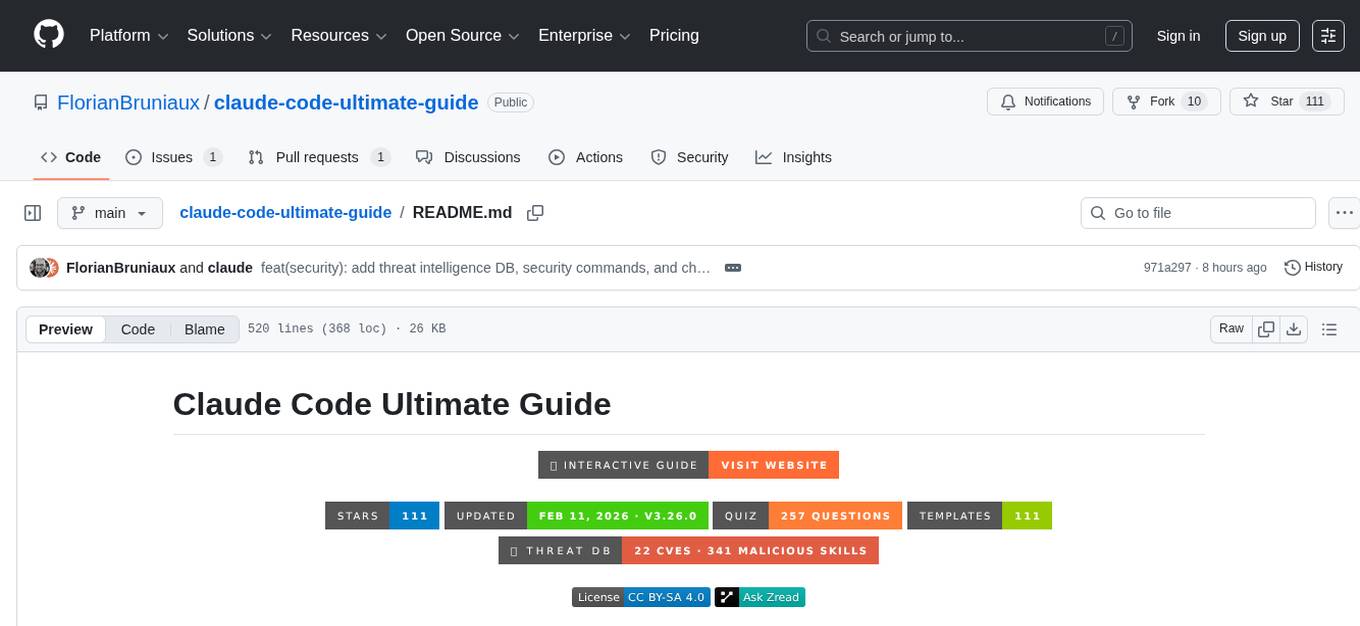

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

anylabeling

AnyLabeling is a tool for effortless data labeling with AI support from YOLO and Segment Anything. It combines features from LabelImg and Labelme with an improved UI and auto-labeling capabilities. Users can annotate images with polygons, rectangles, circles, lines, and points, as well as perform auto-labeling using YOLOv5 and Segment Anything. The tool also supports text detection, recognition, and Key Information Extraction (KIE) labeling, with multiple language options available such as English, Vietnamese, and Chinese.

pro-chat

ProChat is a components library focused on quickly building large language model chat interfaces. It empowers developers to create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. The tool also includes design evolution plans such as customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP. * Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from Pypi, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

sdk

The Kubeflow SDK is a set of unified Pythonic APIs that simplify running AI workloads at any scale without needing to learn Kubernetes. It offers consistent APIs across the Kubeflow ecosystem, enabling users to focus on building AI applications rather than managing complex infrastructure. The SDK provides a unified experience, simplifies AI workloads, is built for scale, allows rapid iteration, and supports local development without a Kubernetes cluster.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

smriti-ai

Smriti AI is an intelligent learning assistant that helps users organize, understand, and retain study materials. It transforms passive content into active learning tools by capturing resources, converting them into summaries and quizzes, providing spaced revision with reminders, tracking progress, and offering a multimodal interface. Suitable for students, self-learners, professionals, educators, and coaching institutes.

gateway

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

latentbox

Latent Box is a curated collection of resources for AI, creativity, and art. It aims to bridge the information gap with high-quality content, promote diversity and interdisciplinary collaboration, and maintain updates through community co-creation. The website features a wide range of resources, including articles, tutorials, tools, and datasets, covering various topics such as machine learning, computer vision, natural language processing, generative art, and creative coding.

For similar tasks

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

doku

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just a single line of code. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

openllmetry

OpenLLMetry is a set of extensions built on top of OpenTelemetry that gives you complete observability over your LLM application. Because it uses OpenTelemetry under the hood, it can be connected to your existing observability solutions - Datadog, Honeycomb, and others. It's built and maintained by Traceloop under the Apache 2.0 license. The repo contains standard OpenTelemetry instrumentations for LLM providers and Vector DBs, as well as a Traceloop SDK that makes it easy to get started with OpenLLMetry, while still outputting standard OpenTelemetry data that can be connected to your observability stack. If you already have OpenTelemetry instrumented, you can just add any of our instrumentations directly.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.