opik

Debug, evaluate, and monitor your LLM applications, RAG systems, and agentic workflows with comprehensive tracing, automated evaluations, and production-ready dashboards.

Stars: 14270

Comet Opik is a repository containing two main services: a frontend and a backend. It provides a Python SDK for easy installation. Users can run the full application locally with minikube, following specific installation prerequisites. The repository structure includes directories for applications like Opik backend, with detailed instructions available in the README files. Users can manage the installation using simple k8s commands and interact with the application via URLs for checking the running application and API documentation. The repository aims to facilitate local development and testing of Opik using Kubernetes technology.

README:

Opik helps you build, evaluate, and optimize LLM systems that run better, faster, and cheaper. From RAG chatbots to code assistants to complex agentic pipelines, Opik provides comprehensive tracing, evaluations, dashboards, and powerful features like Opik Agent Optimizer and Opik Guardrails to improve and secure your LLM powered applications in production.

Website • Slack Community • Twitter • Changelog • Documentation

🧑⚖️ LLM as a Judge • 🔍 Evaluating your Application • ⭐ Star Us • 🤝 Contributing

Opik (built by Comet) is an open-source platform designed to streamline the entire lifecycle of LLM applications. It empowers developers to evaluate, test, monitor, and optimize their models and agentic systems. Key offerings include:

- Comprehensive Observability: Deep tracing of LLM calls, conversation logging, and agent activity.

- Advanced Evaluation: Robust prompt evaluation, LLM-as-a-judge, and experiment management.

- Production-Ready: Scalable monitoring dashboards and online evaluation rules for production.

- Opik Agent Optimizer: Dedicated SDK and set of optimizers to enhance prompts and agents.

- Opik Guardrails: Features to help you implement safe and responsible AI practices.

Key capabilities include:

Development & Tracing:

- Track all LLM calls and traces with detailed context during development and in production (Quickstart).

- Extensive 3rd-party integrations for easy observability: Seamlessly integrate with a growing list of frameworks, supporting many of the largest and most popular ones natively (including recent additions like Google ADK, Autogen, and Flowise AI). (Integrations)

- Annotate traces and spans with feedback scores via the Python SDK or the UI.

- Experiment with prompts and models in the Prompt Playground.

Evaluation & Testing:

- Automate your LLM application evaluation with Datasets and Experiments.

- Leverage powerful LLM-as-a-judge metrics for complex tasks like hallucination detection, moderation, and RAG assessment (Answer Relevance, Context Precision).

- Integrate evaluations into your CI/CD pipeline with our PyTest integration.

Production Monitoring & Optimization:

- Log high volumes of production traces: Opik is designed for scale (40M+ traces/day).

- Monitor feedback scores, trace counts, and token usage over time in the Opik Dashboard.

- Utilize Online Evaluation Rules with LLM-as-a-Judge metrics to identify production issues.

- Leverage Opik Agent Optimizer and Opik Guardrails to continuously improve and secure your LLM applications in production.

[!TIP] If you are looking for features that Opik doesn't have today, please raise a new Feature request 🚀

Get your Opik server running in minutes. Choose the option that best suits your needs:

Option 1: Comet.com cloud. Access Opik instantly without any setup. Ideal for quick starts and hassle-free maintenance.

👉 Create your free Comet account

Option 2: Self-hosting. Deploy Opik in your own environment. Choose between Docker for local setups or Kubernetes for scalability.

Docker is the simplest way to get a local Opik instance running. Note the new ./opik.sh installation script:

On a Linux or Mac environment:

    # Clone the Opik repository
    git clone https://github.com/comet-ml/opik.git

    # Navigate to the repository
    cd opik

    # Start the Opik platform
    ./opik.sh

On a Windows environment:

    # Clone the Opik repository
    git clone https://github.com/comet-ml/opik.git

    # Navigate to the repository
    cd opik

    # Start the Opik platform
    powershell -ExecutionPolicy ByPass -c ".\\opik.ps1"

Service Profiles for Development

The Opik installation scripts now support service profiles for different development scenarios:

    # Start full Opik suite (default behavior)
    ./opik.sh

    # Start only infrastructure services (databases, caches etc.)
    ./opik.sh --infra

    # Start infrastructure + backend services
    ./opik.sh --backend

    # Enable guardrails with any profile
    ./opik.sh --guardrails # Guardrails with full Opik suite
    ./opik.sh --backend --guardrails # Guardrails with infrastructure + backend

Use the --help or --info options to troubleshoot issues. Dockerfiles now ensure containers run as non-root users for enhanced security. Once everything is up and running, you can visit localhost:5173 in your browser. For detailed instructions, see the Local Deployment Guide.
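The profile flags above can also be assembled programmatically, for example from a development script. The following is a minimal sketch: the flags come from the list above, while the helper name `build_opik_command` and the profile labels `full`, `infra`, and `backend` are hypothetical and not part of Opik.

```python
from typing import List

# Flags are taken from the profile list above; the profile labels used as
# dictionary keys and the helper below are hypothetical, not part of Opik.
PROFILE_FLAGS = {
    "full": [],               # default behavior: full Opik suite
    "infra": ["--infra"],     # infrastructure services only
    "backend": ["--backend"], # infrastructure + backend services
}


def build_opik_command(profile: str = "full", guardrails: bool = False) -> List[str]:
    """Return the argument list for ./opik.sh with the chosen profile."""
    if profile not in PROFILE_FLAGS:
        raise ValueError(f"unknown profile: {profile!r}")
    command = ["./opik.sh", *PROFILE_FLAGS[profile]]
    if guardrails:
        # Guardrails can be combined with any profile.
        command.append("--guardrails")
    return command


print(" ".join(build_opik_command("backend", guardrails=True)))
```

The returned list can be passed to `subprocess.run` from the repository root if you want the script to start the services itself.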

For production or larger-scale self-hosted deployments, Opik can be installed on a Kubernetes cluster using our Helm chart. Click the badge for the full Kubernetes Installation Guide using Helm.

[!IMPORTANT] Version 1.7.0 Changes: Please check the changelog for important updates and breaking changes.

Opik provides a suite of client libraries and a REST API to interact with the Opik server. This includes SDKs for Python, TypeScript, and Ruby (via OpenTelemetry), allowing for seamless integration into your workflows. For detailed API and SDK references, see the Opik Client Reference Documentation.

To get started with the Python SDK:

Install the package:

    # install using pip
    pip install opik

    # or install with uv
    uv pip install opik

Configure the Python SDK by running the opik configure command, which will prompt you for your Opik server address (for self-hosted instances) or your API key and workspace (for Comet.com):

    opik configure

[!TIP] You can also call opik.configure(use_local=True) from your Python code to configure the SDK to run on a local self-hosted installation, or provide API key and workspace details directly for Comet.com. Refer to the Python SDK documentation for more configuration options.
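One way to switch between the two configuration modes is to derive the arguments from the environment. This is a sketch under stated assumptions: the keyword names `api_key` and `workspace` and the environment variable names `OPIK_API_KEY` and `OPIK_WORKSPACE` are assumptions here and should be checked against the Python SDK documentation; only `use_local=True` appears in the text above.

```python
import os
from typing import Dict, Mapping, Union


def configure_kwargs(env: Mapping[str, str] = os.environ) -> Dict[str, Union[str, bool]]:
    """Pick keyword arguments for opik.configure() from environment variables.

    The variable names and the api_key/workspace keywords are assumptions.
    """
    api_key = env.get("OPIK_API_KEY")
    if not api_key:
        # No API key available: assume a local self-hosted installation.
        return {"use_local": True}
    kwargs: Dict[str, Union[str, bool]] = {"api_key": api_key}
    workspace = env.get("OPIK_WORKSPACE")
    if workspace:
        kwargs["workspace"] = workspace
    return kwargs


print(configure_kwargs({}))
# To apply the result: import opik; opik.configure(**configure_kwargs())
```

Keeping the decision in a small pure function makes it easy to test without contacting a server.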

You are now ready to start logging traces using the Python SDK.

The easiest way to log traces is to use one of our direct integrations. Opik supports a wide array of frameworks, including recent additions like Google ADK, Autogen, AG2, and Flowise AI:

| Integration | Description | Documentation |

|---|---|---|

| ADK | Log traces for Google Agent Development Kit (ADK) | Documentation |

| AG2 | Log traces for AG2 LLM calls | Documentation |

| AIsuite | Log traces for aisuite LLM calls | Documentation |

| Agno | Log traces for Agno agent orchestration framework calls | Documentation |

| Anthropic | Log traces for Anthropic LLM calls | Documentation |

| Autogen | Log traces for Autogen agentic workflows | Documentation |

| Bedrock | Log traces for Amazon Bedrock LLM calls | Documentation |

| BeeAI | Log traces for BeeAI agent framework calls | Documentation |

| BytePlus | Log traces for BytePlus LLM calls | Documentation |

| Cloudflare Workers AI | Log traces for Cloudflare Workers AI calls | Documentation |

| Cohere | Log traces for Cohere LLM calls | Documentation |

| CrewAI | Log traces for CrewAI calls | Documentation |

| Cursor | Log traces for Cursor conversations | Documentation |

| DeepSeek | Log traces for DeepSeek LLM calls | Documentation |

| Dify | Log traces for Dify agent runs | Documentation |

| DSPy | Log traces for DSPy runs | Documentation |

| Fireworks AI | Log traces for Fireworks AI LLM calls | Documentation |

| Flowise AI | Log traces for Flowise AI visual LLM builder | Documentation |

| Gemini | Log traces for Google Gemini LLM calls | Documentation |

| Groq | Log traces for Groq LLM calls | Documentation |

| Guardrails | Log traces for Guardrails AI validations | Documentation |

| Haystack | Log traces for Haystack calls | Documentation |

| Instructor | Log traces for LLM calls made with Instructor | Documentation |

| LangChain (Python) | Log traces for LangChain LLM calls | Documentation |

| LangChain (JS/TS) | Log traces for LangChain JavaScript/TypeScript calls | Documentation |

| LangGraph | Log traces for LangGraph executions | Documentation |

| LiteLLM | Log traces for LiteLLM model calls | Documentation |

| LiveKit Agents | Log traces for LiveKit Agents AI agent framework calls | Documentation |

| LlamaIndex | Log traces for LlamaIndex LLM calls | Documentation |

| Mastra | Log traces for Mastra AI workflow framework calls | Documentation |

| Mistral AI | Log traces for Mistral AI LLM calls | Documentation |

| Novita AI | Log traces for Novita AI LLM calls | Documentation |

| Ollama | Log traces for Ollama LLM calls | Documentation |

| OpenAI (Python) | Log traces for OpenAI LLM calls | Documentation |

| OpenAI (JS/TS) | Log traces for OpenAI JavaScript/TypeScript calls | Documentation |

| OpenAI Agents | Log traces for OpenAI Agents SDK calls | Documentation |

| OpenRouter | Log traces for OpenRouter LLM calls | Documentation |

| OpenTelemetry | Log traces for OpenTelemetry supported calls | Documentation |

| Pipecat | Log traces for Pipecat real-time voice agent calls | Documentation |

| Predibase | Log traces for Predibase LLM calls | Documentation |

| Pydantic AI | Log traces for PydanticAI agent calls | Documentation |

| Ragas | Log traces for Ragas evaluations | Documentation |

| Semantic Kernel | Log traces for Microsoft Semantic Kernel calls | Documentation |

| Smolagents | Log traces for Smolagents agents | Documentation |

| Spring AI | Log traces for Spring AI framework calls | Documentation |

| Strands Agents | Log traces for Strands agents calls | Documentation |

| Together AI | Log traces for Together AI LLM calls | Documentation |

| Vercel AI SDK | Log traces for Vercel AI SDK calls | Documentation |

| VoltAgent | Log traces for VoltAgent agent framework calls | Documentation |

| WatsonX | Log traces for IBM watsonx LLM calls | Documentation |

| xAI Grok | Log traces for xAI Grok LLM calls | Documentation |

[!TIP] If the framework you are using is not listed above, feel free to open an issue or submit a PR with the integration.

If you are not using any of the frameworks above, you can also use the track function decorator to log traces:

    import opik

    opik.configure(use_local=True)  # Run locally

    @opik.track
    def my_llm_function(user_question: str) -> str:
        # Your LLM code here
        return "Hello"

[!TIP] The track decorator can be used in conjunction with any of our integrations and can also be used to track nested function calls.

The Opik Python SDK includes a number of LLM-as-a-judge metrics to help you evaluate your LLM application. Learn more about them in the metrics documentation.

To use them, import the relevant metric and call its score function:

    from opik.evaluation.metrics import Hallucination

    metric = Hallucination()
    score = metric.score(
        input="What is the capital of France?",
        output="Paris",
        context=["France is a country in Europe."]
    )
    print(score)

Opik also includes a number of pre-built heuristic metrics as well as the ability to create your own. Learn more about them in the metrics documentation.
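As an illustration of what a custom heuristic metric can look like, the sketch below defines a keyword check with a `score` method shaped like the one in the example above. The classes `ScoreResult` and `ContainsAny` are hypothetical stand-ins written with the standard library only; they are not Opik's base classes, so consult the metrics documentation for the actual interface to subclass.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class ScoreResult:
    """Hypothetical result container; Opik's own result type may differ."""
    name: str
    value: float
    reason: str = ""


class ContainsAny:
    """Heuristic metric: 1.0 if the output mentions any keyword, else 0.0."""

    def __init__(self, keywords: Iterable[str], name: str = "contains_any"):
        self.keywords = [keyword.lower() for keyword in keywords]
        self.name = name

    def score(self, output: str, **ignored_kwargs) -> ScoreResult:
        text = output.lower()
        hits = [keyword for keyword in self.keywords if keyword in text]
        return ScoreResult(self.name, 1.0 if hits else 0.0, reason=f"matched: {hits}")


metric = ContainsAny(["paris"])
print(metric.score(output="The capital of France is Paris."))
```

Unlike an LLM-as-a-judge metric, a heuristic like this is deterministic and needs no model call, which makes it cheap to run on every trace.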

Opik allows you to evaluate your LLM application during development through Datasets and Experiments. The Opik Dashboard offers enhanced charts for experiments and better handling of large traces. You can also run evaluations as part of your CI/CD pipeline using our PyTest integration.
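Conceptually, an experiment runs your application over every item in a dataset and scores each output. The following standard-library sketch shows that loop and a PyTest-style threshold check; it does not use the Opik SDK, and `run_experiment`, `my_llm_app`, `exact_match`, and the dataset fields are all hypothetical names chosen for illustration.

```python
from statistics import mean
from typing import Any, Callable, Dict, List

# Hypothetical dataset; field names are illustrative.
dataset = [
    {"input": "What is the capital of France?", "expected_output": "Paris"},
    {"input": "What is the capital of Germany?", "expected_output": "Berlin"},
]


def my_llm_app(question: str) -> str:
    # Placeholder for a real LLM call.
    canned = {"What is the capital of France?": "Paris"}
    return canned.get(question, "I don't know")


def exact_match(output: str, expected: str) -> float:
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def run_experiment(
    items: List[Dict[str, str]],
    task: Callable[[str], str],
    metric: Callable[[str, str], float],
) -> Dict[str, Any]:
    """Run the task on every dataset item and score each output."""
    rows = []
    for item in items:
        output = task(item["input"])
        rows.append({**item, "output": output, "score": metric(output, item["expected_output"])})
    return {"rows": rows, "mean_score": mean(row["score"] for row in rows)}


result = run_experiment(dataset, my_llm_app, exact_match)
print(result["mean_score"])


def test_mean_score_meets_threshold():
    # A check like this can gate a CI/CD pipeline when collected by pytest.
    assert result["mean_score"] >= 0.5
```

Failing the build when the mean score drops below a threshold is one simple way to catch regressions before they reach production.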

If you find Opik useful, please consider giving us a star! Your support helps us grow our community and continue improving the product.

There are many ways to contribute to Opik:

- Submit bug reports and feature requests

- Review the documentation and submit Pull Requests to improve it

- Speak or write about Opik and let us know

- Upvote popular feature requests to show your support

To learn more about how to contribute to Opik, please see our contributing guidelines.

Alternative AI tools for opik

Similar Open Source Tools

llm-graph-builder

Knowledge Graph Builder App is a tool designed to convert PDF documents into a structured knowledge graph stored in Neo4j. It utilizes OpenAI's GPT/Diffbot LLM to extract nodes, relationships, and properties from PDF text content. Users can upload files from local machine or S3 bucket, choose LLM model, and create a knowledge graph. The app integrates with Neo4j for easy visualization and querying of extracted information.

agentql

AgentQL is a suite of tools for extracting data and automating workflows on live web sites featuring an AI-powered query language, Python and JavaScript SDKs, a browser-based debugger, and a REST API endpoint. It uses natural language queries to pinpoint data and elements on any web page, including authenticated and dynamically generated content. Users can define structured data output and apply transforms within queries. AgentQL's natural language selectors find elements intuitively based on the content of the web page and work across similar web sites, self-healing as UI changes over time.

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

last_layer

last_layer is a security library designed to protect LLM applications from prompt injection attacks, jailbreaks, and exploits. It acts as a robust filtering layer to scrutinize prompts before they are processed by LLMs, ensuring that only safe and appropriate content is allowed through. The tool offers ultra-fast scanning with low latency, privacy-focused operation without tracking or network calls, compatibility with serverless platforms, advanced threat detection mechanisms, and regular updates to adapt to evolving security challenges. It significantly reduces the risk of prompt-based attacks and exploits but cannot guarantee complete protection against all possible threats.

portkey-python-sdk

The Portkey Python SDK is a control panel for AI apps that allows seamless integration of Portkey's advanced features with OpenAI methods. It provides features such as AI gateway for unified API signature, interoperability, automated fallbacks & retries, load balancing, semantic caching, virtual keys, request timeouts, observability with logging, requests tracing, custom metadata, feedback collection, and analytics. Users can make requests to OpenAI using Portkey SDK and also use async functionality. The SDK is compatible with OpenAI SDK methods and offers Portkey-specific methods like feedback and prompts. It supports various providers and encourages contributions through Github issues or direct contact via email or Discord.

findto

Findto is a decentralized search tool for the Web and AI that puts people in control of algorithms. It aims to provide a better search experience by offering diverse sources, privacy and carbon level information, trends exploration, autosuggest, voice search, and more. Findto encourages a free search experience and promotes a healthier internet by empowering users with democratic choices.

FFAIVideo

FFAIVideo is a lightweight node.js project that utilizes popular AI LLM to intelligently generate short videos. It supports multiple AI LLM models such as OpenAI, Moonshot, Azure, g4f, Google Gemini, etc. Users can input text to automatically synthesize exciting video content with subtitles, background music, and customizable settings. The project integrates Microsoft Edge's online text-to-speech service for voice options and uses Pexels website for video resources. Installation of FFmpeg is essential for smooth operation. Inspired by MoneyPrinterTurbo, MoneyPrinter, and MsEdgeTTS, FFAIVideo is designed for front-end developers with minimal dependencies and simple usage.

LLaVA-MORE

LLaVA-MORE is a new family of Multimodal Language Models (MLLMs) that integrates recent language models with diverse visual backbones. The repository provides a unified training protocol for fair comparisons across all architectures and releases training code and scripts for distributed training. It aims to enhance Multimodal LLM performance and offers various models for different tasks. Users can explore different visual backbones like SigLIP and methods for managing image resolutions (S2) to improve the connection between images and language. The repository is a starting point for expanding the study of Multimodal LLMs and enhancing new features in the field.

EasyEdit

EasyEdit is a Python package for editing Large Language Models (LLMs) such as `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, and `T5` (supporting models from **1B** to **65B**). Its objective is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

dl_model_infer

This project is a C++ AI inference library that supports inference of TensorRT models. It provides accelerated deployment examples for popular deep learning CV models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and target detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

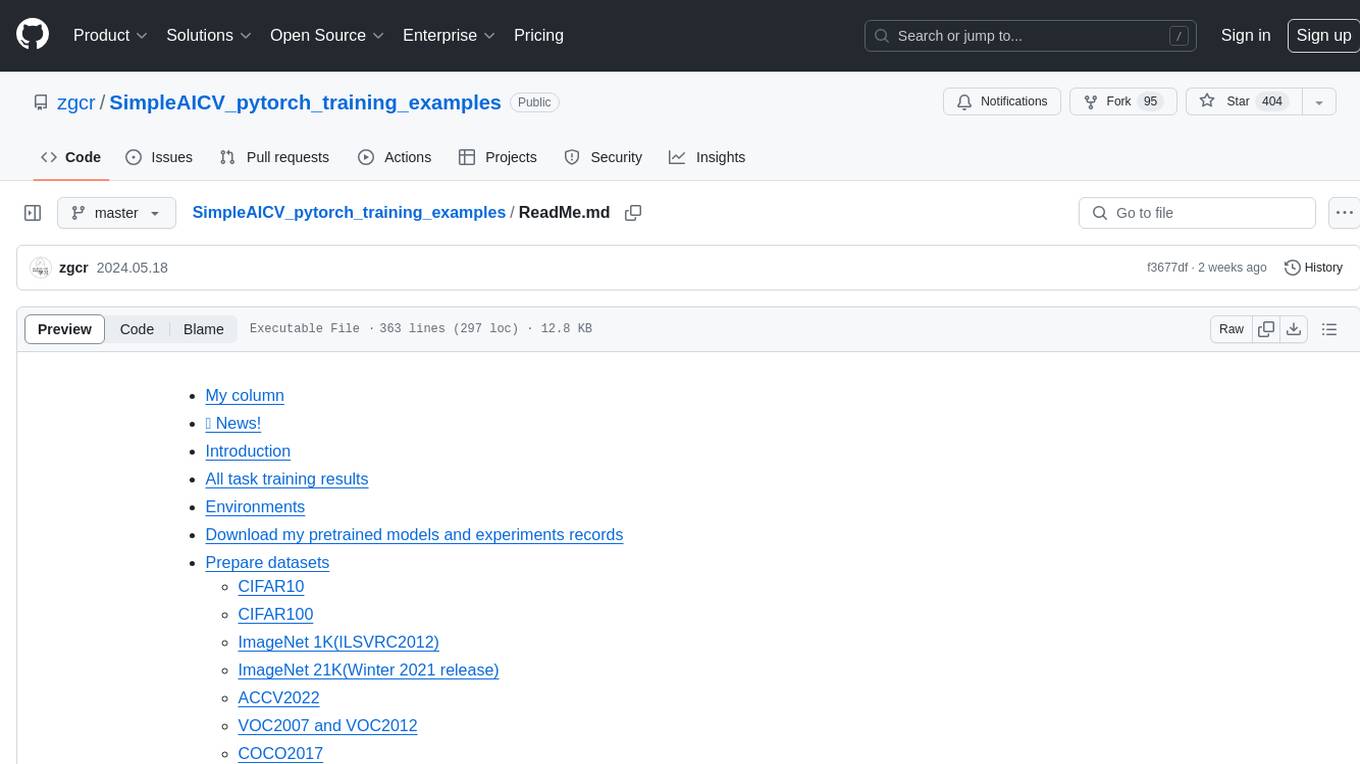

SimpleAICV_pytorch_training_examples

SimpleAICV_pytorch_training_examples is a repository that provides simple training and testing examples for various computer vision tasks such as image classification, object detection, semantic segmentation, instance segmentation, knowledge distillation, contrastive learning, masked image modeling, OCR text detection, OCR text recognition, human matting, salient object detection, interactive segmentation, image inpainting, and diffusion model tasks. The repository includes support for multiple datasets and networks, along with instructions on how to prepare datasets, train and test models, and use gradio demos. It also offers pretrained models and experiment records for download from huggingface or Baidu-Netdisk. The repository requires specific environments and package installations to run effectively.

boost

Laravel Boost accelerates AI-assisted development by providing essential context and structure for generating high-quality, Laravel-specific code. It includes an MCP server with specialized tools, AI guidelines, and a Documentation API. Boost is designed to streamline AI-assisted coding workflows by offering precise, context-aware results and extensive Laravel-specific information.

For similar jobs

AirGo

AirGo is a simple, easy-to-use, multi-user, multi-protocol proxy service management system with a separated frontend and backend. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O
- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing
- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back
- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads
- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems
- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.