LLaVA-MORE

LLaVA-MORE: A Comparative Study of LLMs and Visual Backbones for Enhanced Visual Instruction Tuning

Stars: 109

LLaVA-MORE is a new family of Multimodal Large Language Models (MLLMs) that integrates recent language models with diverse visual backbones. The repository applies a unified training protocol across all architectures for fair comparisons, and releases the training code and scripts for distributed training. It aims to improve Multimodal LLM performance and provides several model variants. Users can explore different visual backbones, such as SigLIP, and methods for managing image resolution (S2) to improve the connection between images and language. The repository is a starting point for expanding the study of Multimodal LLMs and adding new features in the field.

README:

If you make use of our work, please cite our repo:

@inproceedings{cocchi2025llava,
  title={{LLaVA-MORE: A Comparative Study of LLMs and Visual Backbones for Enhanced Visual Instruction Tuning}},
  author={Cocchi, Federico and Moratelli, Nicholas and Caffagni, Davide and Sarto, Sara and Baraldi, Lorenzo and Cornia, Marcella and Cucchiara, Rita},
  booktitle={arxiv},
  year={2025}
}

- [2025/03/21] 🔜 Training and release of our LLaVA-MORE checkpoints with different LLMs and Visual Backbones

- [2025/03/21] 📚 Check out our latest paper

- [2025/03/18] 🔥 LLaVA-MORE 8B is now available on Ollama!

- [2024/08/16] 📌 Improved LLaVA-MORE 8B model, considering advanced image backbones.

- [2024/08/01] 🔥 First release of our LLaVA-MORE 8B, based on LLaMA 3.1.

- [2024/08/01] 🔎 If you are interested in this area of research, check out our survey on the revolution of Multimodal LLMs, recently published in ACL (Findings).

- [2024/08/01] 📚 Check out the latest research from AImageLab.

LLaVA-MORE is a new family of MLLMs that integrates recent language models with diverse visual backbones. To ensure fair comparisons, we employ a unified training protocol applied consistently across all architectures.

To further support the research community in enhancing Multimodal LLM performance, we are also releasing the training code and scripts for distributed training.

Remember to star the repository to stay updated on future releases 🤗!

In this section, we present the performance of our model compared to other versions of LLaVA across different multimodal datasets.

| Model Name | Text-VQA* | Science-QA | AI2D | SEED-vid | SEED-all | SEED-img | MMMU | MMBench-Cn | MMBench-En | POPE | GQA | MME-P | MME-C |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LLaVA-v1.5-7B | 58.2 | 69.0 | 56.4 | 42.0 | 61.6 | 66.8 | 34.2 | 56.5 | 65.3 | 85.6 | 62.4 | 1474.3 | 314.6 |
| LLaVA-v1.5-LLaMA3-8B | 57.6 | 74.2 | 60.7 | 42.0 | 64.3 | 70.1 | 37.3 | 65.4 | 70.3 | 85.4 | 63.5 | 1544.4 | 330.3 |
| LLaVA-MORE-8B | 58.4 | 76.3 | 61.8 | 42.4 | 64.1 | 69.8 | 39.4 | 68.2 | 72.4 | 85.1 | 63.6 | 1531.5 | 353.3 |
| LLaVA-MORE-8B-S2 | 60.9 | 76.7 | 62.2 | 42.3 | 64.2 | 69.9 | 38.7 | 65.8 | 71.1 | 86.5 | 64.5 | 1563.8 | 293.2 |
| LLaVA-MORE-8B-siglip | 62.1 | 77.5 | 63.6 | 46.1 | 65.8 | 71.0 | 39.8 | 68.2 | 73.1 | 86.1 | 64.6 | 1531.0 | 315.4 |
| LLaVA-MORE-8B-S2-siglip | 63.5 | 77.1 | 62.7 | 44.7 | 65.5 | 71.0 | 40.0 | 68.0 | 71.8 | 86.0 | 64.9 | 1541.4 | 336.4 |

* TextVQA results are computed with OCR tokens included in the input prompt.
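
To make the comparison easier to read, the sketch below copies a subset of the columns from the table above into a Python dictionary, then reports the best model per benchmark and the gain of LLaVA-MORE-8B over LLaVA-v1.5-7B. The helper names (`best_per_benchmark`, `delta`) are illustrative and are not part of this repo.

```python
# Scores copied from the table above (subset of columns, for brevity).
BENCHMARKS = ["Text-VQA", "Science-QA", "AI2D", "MMMU", "POPE", "GQA"]
ROWS = {
    "LLaVA-v1.5-7B":           [58.2, 69.0, 56.4, 34.2, 85.6, 62.4],
    "LLaVA-v1.5-LLaMA3-8B":    [57.6, 74.2, 60.7, 37.3, 85.4, 63.5],
    "LLaVA-MORE-8B":           [58.4, 76.3, 61.8, 39.4, 85.1, 63.6],
    "LLaVA-MORE-8B-S2":        [60.9, 76.7, 62.2, 38.7, 86.5, 64.5],
    "LLaVA-MORE-8B-siglip":    [62.1, 77.5, 63.6, 39.8, 86.1, 64.6],
    "LLaVA-MORE-8B-S2-siglip": [63.5, 77.1, 62.7, 40.0, 86.0, 64.9],
}
SCORES = {model: dict(zip(BENCHMARKS, values)) for model, values in ROWS.items()}


def best_per_benchmark(scores):
    """Return {benchmark: model with the highest score}."""
    return {b: max(scores, key=lambda m: scores[m][b]) for b in BENCHMARKS}


def delta(scores, model, baseline):
    """Return per-benchmark score differences (model minus baseline)."""
    return {b: round(scores[model][b] - scores[baseline][b], 1) for b in BENCHMARKS}


for benchmark, model in best_per_benchmark(SCORES).items():
    print(f"{benchmark:<11} best: {model} ({SCORES[model][benchmark]})")
print(delta(SCORES, "LLaVA-MORE-8B", "LLaVA-v1.5-7B"))
```

On this subset, the SigLIP-based variants rank first on five of the six benchmarks, while the S2 variant leads on POPE.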

In the table below, you can find links to our 🤗 Hugging Face models.

| Model Name | 🤗 Hugging Face | Summary |
|---|---|---|
| LLaVA_MORE-llama_3_1-8B-pretrain | Hugging Face Model | Pretrained on LCS-558K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-finetuning | Hugging Face Model | Finetuned on LLaVA-Instruct-665K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-S2-pretrain | Hugging Face Model | Pretrained on LCS-558K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-S2-finetuning | Hugging Face Model | Finetuned on LLaVA-Instruct-665K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-siglip-pretrain | Hugging Face Model | Pretrained on LCS-558K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-siglip-finetuning | Hugging Face Model | Finetuned on LLaVA-Instruct-665K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-S2-siglip-pretrain | Hugging Face Model | Pretrained on LCS-558K and using LLaMA 3.1 8B Instruct as LLM backbone |
| LLaVA_MORE-llama_3_1-8B-S2-siglip-finetuning | Hugging Face Model | Finetuned on LLaVA-Instruct-665K and using LLaMA 3.1 8B Instruct as LLM backbone |
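
The checkpoint names in the table follow a regular pattern: a common base, an optional visual variant (`S2`, `siglip`, or `S2-siglip`), and a training stage (`pretrain` or `finetuning`). The sketch below enumerates them programmatically. The `aimagelab/` organization prefix is taken from the inference script in this README, where it is used for the finetuning checkpoint; applying it to every row is an assumption, and `checkpoint_id` is a hypothetical helper.

```python
from itertools import product

ORG = "aimagelab"  # assumption: confirmed here only for the plain finetuning checkpoint
BASE = "LLaVA_MORE-llama_3_1-8B"
VARIANTS = ["", "S2", "siglip", "S2-siglip"]  # "" means the default visual setup
STAGES = ["pretrain", "finetuning"]


def checkpoint_id(variant, stage):
    """Build a repo-style identifier from the naming pattern in the table."""
    parts = [BASE] + ([variant] if variant else []) + [stage]
    return f"{ORG}/" + "-".join(parts)


ALL_CHECKPOINTS = [checkpoint_id(v, s) for v, s in product(VARIANTS, STAGES)]

for name in ALL_CHECKPOINTS:
    print(name)
```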

Use the following commands to create a conda environment named `more`, which contains all the packages needed to run the code in this repo.

conda create -n more python==3.8.16

conda activate more

pip install -r requirements.txt

Note that the requirements are heavily inspired by the original LLaVA repo.

To help the community in training complex systems in distributed scenarios, we are publicly releasing not only the source code but also the bash scripts needed to train LLaVA-MORE on HPC facilities with a SLURM scheduler.

To further extend the reproducibility of our approach, we are also releasing the wandb logs of the training runs.

Pretraining

sbatch scripts/more/11_pretrain_llama_31_acc_st_1.sh

Finetuning

sbatch scripts/more/12_finetuning_llama_31_acc_st_1.sh

As mentioned before, LLaVA-MORE introduces the use of LLaMA 3.1 within the LLaVA architecture for the first time. However, this repository goes beyond that single enhancement.

We have also incorporated the ability to use different visual backbones, such as SigLIP, and various methods for managing image resolutions (S2).

With that in mind, you can view this repo as an effort to expand the study of Multimodal LLMs in multiple directions, and as a starting point for adding new features that improve the connection between images and language.

You can find more references in this folder: scripts/more.
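
If you want finetuning to start only after pretraining has completed successfully, the two `sbatch` submissions can be chained with a SLURM job dependency. The sketch below is one possible way to do this from Python. It relies on the standard `sbatch` options `--parsable` (print only the job ID) and `--dependency=afterok:<jobid>`. The helper functions are hypothetical and not part of this repo, and whether your finetuning script actually consumes the pretraining output depends on how the scripts are configured.

```python
import subprocess

PRETRAIN = "scripts/more/11_pretrain_llama_31_acc_st_1.sh"
FINETUNE = "scripts/more/12_finetuning_llama_31_acc_st_1.sh"


def sbatch_command(script, after_ok=None):
    """Build the sbatch argument list, optionally waiting on another job."""
    cmd = ["sbatch", "--parsable"]
    if after_ok is not None:
        # Start only if the given job finished with exit code 0.
        cmd.append(f"--dependency=afterok:{after_ok}")
    cmd.append(script)
    return cmd


def parse_job_id(output):
    """With --parsable, sbatch prints 'jobid' or 'jobid;cluster'."""
    return output.strip().split(";")[0]


def submit(script, after_ok=None):
    """Submit a script with sbatch and return its job ID as a string."""
    result = subprocess.run(
        sbatch_command(script, after_ok),
        check=True, capture_output=True, text=True,
    )
    return parse_job_id(result.stdout)


def submit_pipeline():
    """Queue pretraining, then finetuning conditioned on its success."""
    pretrain_id = submit(PRETRAIN)
    finetune_id = submit(FINETUNE, after_ok=pretrain_id)
    return pretrain_id, finetune_id
```

Calling `submit_pipeline()` on a login node of a SLURM cluster queues both jobs at once; the scheduler holds the finetuning job until pretraining exits cleanly.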

You can try our LLaVA-MORE with LLaMA 3.1 in the Image-To-Text task using the following script.

source activate more

cd local/path/LLaVA-MORE

export PYTHONPATH=.

# tokenizer_model_path

export HF_TOKEN=hf_read_token

export TOKENIZER_PATH=aimagelab/LLaVA_MORE-llama_3_1-8B-finetuning

python -u llava/eval/run_llava.py

If you get out-of-memory problems, consider loading the model weights in 8-bit (load_in_8bit=True).
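
As a rough guide to why 8-bit loading helps, the following back-of-the-envelope sketch estimates the memory taken by the LLM weights alone at different precisions. It assumes a nominal 8 billion parameters and ignores the visual backbone, activations, the KV cache, and any quantization overhead, so real usage will be higher. `weight_memory_gib` is an illustrative helper, not a function from this repo.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory for the weights only, in GiB."""
    return num_params * bits_per_param / 8 / 1024**3


LLM_PARAMS = 8e9  # nominal size of an "8B" language model (assumption)

for bits in (16, 8):
    print(f"{bits}-bit weights: ~{weight_memory_gib(LLM_PARAMS, bits):.1f} GiB")
```

Under these assumptions the weights shrink from roughly 15 GiB in 16-bit precision to about 7.5 GiB in 8-bit.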

We thank the LLaVA team for open-sourcing a modular codebase to extend and train different models within the LLaVA family. We are also happy users of the lmms-eval library, which has significantly reduced the evaluation time of our checkpoints across different datasets.

We also thank CINECA for the availability of high-performance computing resources used to train LLaVA-MORE. This work is supported by the PNRR-M4C2 project FAIR - Future Artificial Intelligence Research and by the PNRR project ITSERR - Italian Strengthening of Esfri RI Resilience.

In case you face any issues or have any questions, please feel free to create an issue. Additionally, we welcome you to open a pull request to integrate new features and contribute to our project.

Alternative AI tools for LLaVA-MORE

Similar Open Source Tools

qserve

QServe is a serving system designed for efficient and accurate Large Language Models (LLM) on GPUs with W4A8KV4 quantization. It achieves higher throughput compared to leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weight, 8-bit activation, and 4-bit KV cache, addressing runtime overhead challenges. QServe improves serving throughput for various LLM models by implementing compute-aware weight reordering, register-level parallelism, and fused attention memory-bound techniques.

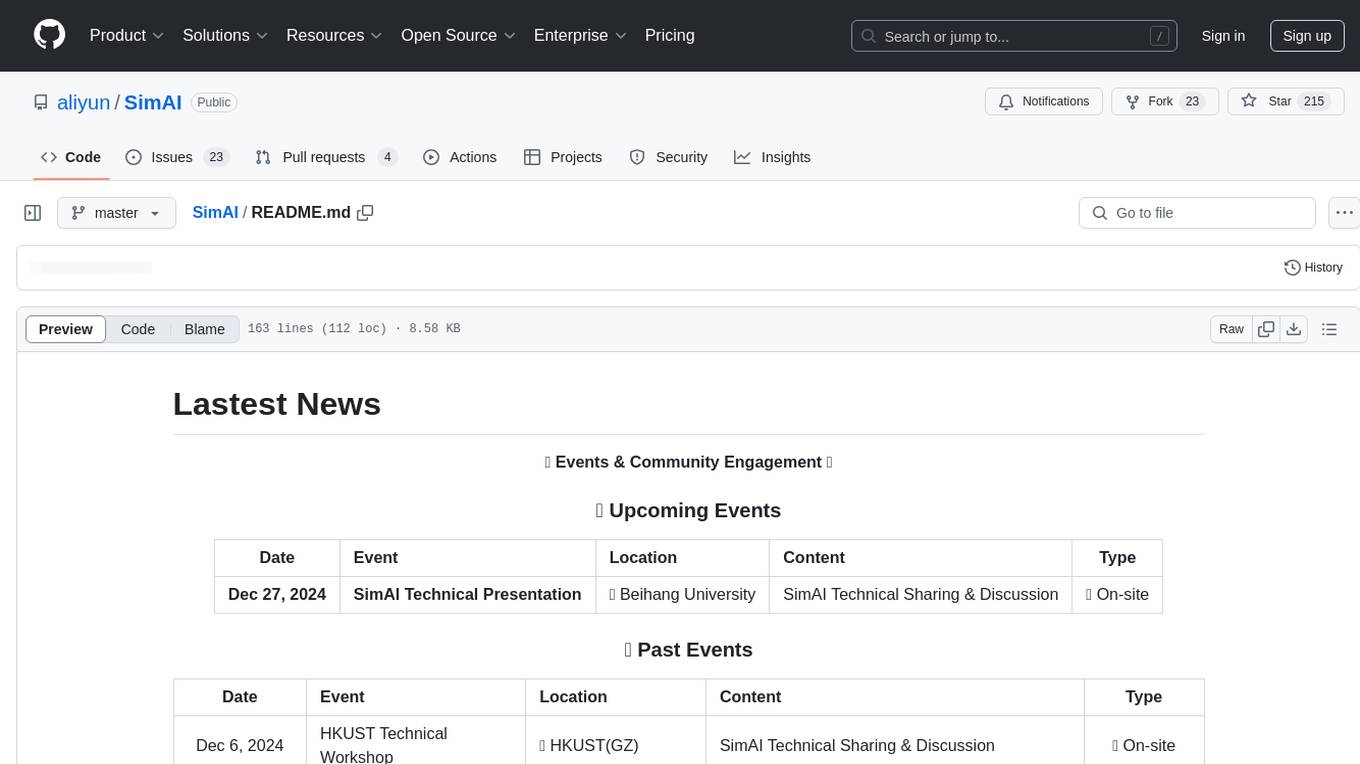

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

buffer-of-thought-llm

Buffer of Thoughts (BoT) is a thought-augmented reasoning framework designed to enhance the accuracy, efficiency, and robustness of large language models (LLMs). It introduces a meta-buffer to store high-level thought-templates distilled from problem-solving processes, enabling adaptive reasoning for efficient problem-solving. The framework includes a buffer-manager to dynamically update the meta-buffer, ensuring scalability and stability. BoT achieves significant performance improvements on reasoning-intensive tasks and demonstrates superior generalization ability and robustness while being cost-effective compared to other methods.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

HuatuoGPT-II

HuatuoGPT2 is an innovative domain-adapted medical large language model that excels in medical knowledge and dialogue proficiency. It showcases state-of-the-art performance in various medical benchmarks, surpassing GPT-4 in expert evaluations and fresh medical licensing exams. The open-source release includes HuatuoGPT2 models in 7B, 13B, and 34B versions, training code for one-stage adaptation, partial pre-training and fine-tuning instructions, and evaluation methods for medical response capabilities and professional pharmacist exams. The tool aims to enhance LLM capabilities in the Chinese medical field through open-source principles.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

MathEval

MathEval is a benchmark designed for evaluating the mathematical capabilities of large models. It includes over 20 evaluation datasets covering various mathematical domains with more than 30,000 math problems. The goal is to assess the performance of large models across different difficulty levels and mathematical subfields. MathEval serves as a reliable reference for comparing mathematical abilities among large models and offers guidance on enhancing their mathematical capabilities in the future.

ReST-MCTS

ReST-MCTS is a reinforced self-training approach that integrates process reward guidance with tree search MCTS to collect higher-quality reasoning traces and per-step value for training policy and reward models. It eliminates the need for manual per-step annotation by estimating the probability of steps leading to correct answers. The inferred rewards refine the process reward model and aid in selecting high-quality traces for policy model self-training.

dl_model_infer

This project is a C++ AI inference library that supports inference with TensorRT models. It provides accelerated deployment examples for popular deep learning CV models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model export. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and object detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

UniCoT

Uni-CoT is a unified reasoning framework that extends Chain-of-Thought (CoT) principles to the multimodal domain, enabling Multimodal Large Language Models (MLLMs) to perform interpretable, step-by-step reasoning across both text and vision. It decomposes complex multimodal tasks into structured, manageable steps that can be executed sequentially or in parallel, allowing for more scalable and systematic reasoning.

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your own or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

MiniCPM-V-CookBook

MiniCPM-V & o Cookbook is a comprehensive repository for building multimodal AI applications effortlessly. It provides easy-to-use documentation, supports a wide range of users, and offers versatile deployment scenarios. The repository includes live demonstrations, inference recipes for vision and audio capabilities, fine-tuning recipes, serving recipes, quantization recipes, and a framework support matrix. Users can customize models, deploy them efficiently, and compress models to improve efficiency. The repository also showcases awesome works using MiniCPM-V & o and encourages community contributions.

EasyEdit

EasyEdit is a Python package for editing Large Language Models (LLMs) such as `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, and `T5` (supporting models from **1B** to **65B**). Its objective is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

For similar tasks

Tokenizer

This repository contains implementations of a byte pair encoding (BPE) tokenizer in TypeScript and C# for OpenAI LLMs. The implementations are based on the open-source Rust implementation in OpenAI's tiktoken. They are useful for prompt tokenization in Node.js and .NET environments before feeding prompts into an LLM.

Co-LLM-Agents

This repository contains code for building cooperative embodied agents modularly with large language models. The agents are trained to perform tasks in two different environments: ThreeDWorld Multi-Agent Transport (TDW-MAT) and Communicative Watch-And-Help (C-WAH). TDW-MAT is a multi-agent environment where agents must transport objects to a goal position using containers. C-WAH is an extension of the Watch-And-Help challenge, which enables agents to send messages to each other. The code in this repository can be used to train agents to perform tasks in both of these environments.

GPT4Point

GPT4Point is a unified framework for point-language understanding and generation. It aligns 3D point clouds with language, providing a comprehensive solution for tasks such as 3D captioning and controlled 3D generation. The project includes an automated point-language dataset annotation engine, a novel object-level point cloud benchmark, and a 3D multi-modality model. Users can train and evaluate models using the provided code and datasets, with a focus on improving models' understanding capabilities and facilitating the generation of 3D objects.

asreview

The ASReview project implements active learning for systematic reviews, utilizing AI-aided pipelines to assist in finding relevant texts for search tasks. It accelerates the screening of textual data with minimal human input, saving time and increasing output quality. The software offers three modes: Oracle for interactive screening, Exploration for teaching purposes, and Simulation for evaluating active learning models. ASReview LAB is designed to support decision-making in any discipline or industry by improving efficiency and transparency in screening large amounts of textual data.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

amber-train

Amber is the first model in the LLM360 family, an initiative for comprehensive and fully open-sourced LLMs. It is a 7B English language model with the same architecture as LLaMA-7B, licensed under Apache 2.0. The resources available include training code, data preparation, metrics, and fully processed Amber pretraining data. The model has been trained on various datasets like Arxiv, Book, C4, Refined-Web, StarCoder, StackExchange, and Wikipedia. The hyperparameters include a total of 6.7B parameters, hidden size of 4096, intermediate size of 11008, 32 attention heads, 32 hidden layers, RMSNorm ε of 1e-6, max sequence length of 2048, and a vocabulary size of 32000.

kan-gpt

The KAN-GPT repository is a PyTorch implementation of Generative Pre-trained Transformers (GPTs) using Kolmogorov-Arnold Networks (KANs) for language modeling. It provides a model for generating text based on prompts, with a focus on improving performance compared to traditional MLP-GPT models. The repository includes scripts for training the model, downloading datasets, and evaluating model performance. Development tasks include integrating with other libraries, testing, and documentation.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, ranging from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.