Tokenizer

Typescript and .NET implementation of BPE tokenizer for OpenAI LLMs.

Stars: 119

This repository contains implementations of byte pair encoding (BPE) tokenizer in Typescript and C# for OpenAI LLMs. The implementations are based on an open-sourced rust implementation in the OpenAI tiktoken. These implementations are valuable for prompt tokenization in Nodejs and .NET environments before feeding prompts into a LLM.

README:

This repo contains Typescript and C# implementation of byte pair encoding(BPE) tokenizer for OpenAI LLMs, it's based on open sourced rust implementation in the OpenAI tiktoken. Both implementation are valuable to run prompt tokenization in Nodejs and .NET environment before feeding prompt into a LLM.

Please follow README.

[!IMPORTANT] Users of

Microsoft.DeepDev.TokenizerLibshould migrate toMicrosoft.ML.Tokenizers. The functionality inMicrosoft.DeepDev.TokenizerLibhas been added toMicrosoft.ML.Tokenizers.Microsoft.ML.Tokenizersis a tokenizer library being developed by the .NET team and going forward, the central place for tokenizer development in .NET. By usingMicrosoft.ML.Tokenizers, you should see improved performance over existing tokenizer library implementations, includingMicrosoft.DeepDev.TokenizerLib. A stable release ofMicrosoft.ML.Tokenizersis expected alongside the .NET 9.0 release (November 2024). Instructions for migration can be found at https://github.com/dotnet/machinelearning/blob/main/docs/code/microsoft-ml-tokenizers-migration-guide.md.

We welcome contributions. Please follow this guideline.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Tokenizer

Similar Open Source Tools

Tokenizer

This repository contains implementations of byte pair encoding (BPE) tokenizer in Typescript and C# for OpenAI LLMs. The implementations are based on an open-sourced rust implementation in the OpenAI tiktoken. These implementations are valuable for prompt tokenization in Nodejs and .NET environments before feeding prompts into a LLM.

multilspy

Multilspy is a Python library developed for research purposes to facilitate the creation of language server clients for querying and obtaining results of static analyses from various language servers. It simplifies the process by handling server setup, communication, and configuration parameters, providing a common interface for different languages. The library supports features like finding function/class definitions, callers, completions, hover information, and document symbols. It is designed to work with AI systems like Large Language Models (LLMs) for tasks such as Monitor-Guided Decoding to ensure code generation correctness and boost compilability.

hi-ml

The Microsoft Health Intelligence Machine Learning Toolbox is a repository that provides low-level and high-level building blocks for Machine Learning / AI researchers and practitioners. It simplifies and streamlines work on deep learning models for healthcare and life sciences by offering tested components such as data loaders, pre-processing tools, deep learning models, and cloud integration utilities. The repository includes two Python packages, 'hi-ml-azure' for helper functions in AzureML, 'hi-ml' for ML components, and 'hi-ml-cpath' for models and workflows related to histopathology images.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

aiocoap

aiocoap is a Python library that implements the Constrained Application Protocol (CoAP) using native asyncio methods in Python 3. It supports various CoAP standards such as RFC7252, RFC7641, RFC7959, RFC8323, RFC7967, RFC8132, RFC9176, RFC8613, and draft-ietf-core-oscore-groupcomm-17. The library provides features for clients and servers, including multicast support, blockwise transfer, CoAP over TCP, TLS, and WebSockets, No-Response, PATCH/FETCH, OSCORE, and Group OSCORE. It offers an easy-to-use interface for concurrent operations and is suitable for IoT applications.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

aisuite

Aisuite is a simple, unified interface to multiple Generative AI providers. It allows developers to easily interact with various Language Model (LLM) providers like OpenAI, Anthropic, Azure, Google, AWS, and more through a standardized interface. The library focuses on chat completions and provides a thin wrapper around python client libraries, enabling creators to test responses from different LLM providers without changing their code. Aisuite maximizes stability by using HTTP endpoints or SDKs for making calls to the providers. Users can install the base package or specific provider packages, set up API keys, and utilize the library to generate chat completion responses from different models.

dlio_benchmark

DLIO is an I/O benchmark tool designed for Deep Learning applications. It emulates modern deep learning applications using Benchmark Runner, Data Generator, Format Handler, and I/O Profiler modules. Users can configure various I/O patterns, data loaders, data formats, datasets, and parameters. The tool is aimed at emulating the I/O behavior of deep learning applications and provides a modular design for flexibility and customization.

GhostOS

GhostOS is an AI Agent framework designed to replace JSON Schema with a Turing-complete code interaction interface (Moss Protocol). It aims to create intelligent entities capable of continuous learning and growth through code generation and project management. The framework supports various capabilities such as turning Python files into web agents, real-time voice conversation, body movements control, and emotion expression. GhostOS is still in early experimental development and focuses on out-of-the-box capabilities for AI agents.

air-script

AirScript is a domain-specific language for expressing AIR constraints for STARKs, with the goal of enabling writing and auditing constraints without the need to learn a specific programming language. It also aims to perform automated optimizations and output constraint evaluator code in multiple target languages. The project is organized into several crates including Parser, MIR, AIR, Winterfell code generator, ACE code generator, and AirScript CLI for transpiling AIRs to target languages.

llm-random

This repository contains code for research conducted by the LLM-Random research group at IDEAS NCBR in Warsaw, Poland. The group focuses on developing and using this repository to conduct research. For more information about the group and its research, refer to their blog, llm-random.github.io.

monitors4codegen

This repository hosts the official code and data artifact for the paper 'Monitor-Guided Decoding of Code LMs with Static Analysis of Repository Context'. It introduces Monitor-Guided Decoding (MGD) for code generation using Language Models, where a monitor uses static analysis to guide the decoding. The repository contains datasets, evaluation scripts, inference results, a language server client 'multilspy' for static analyses, and implementation of various monitors monitoring for different properties in 3 programming languages. The monitors guide Language Models to adhere to properties like valid identifier dereferences, correct number of arguments to method calls, typestate validity of method call sequences, and more.

LLMs-in-Production

LLMs in Production is a repository for the book with Manning Publications, containing chapter listings and setup instructions. It provides environments for each chapter, linters, formatters, and tests. The scripts are designed to be run from the project root.

2p-kt

2P-Kt is a Kotlin-based and multi-platform reboot of tuProlog (2P), a multi-paradigm logic programming framework written in Java. It consists of an open ecosystem for Symbolic Artificial Intelligence (AI) with modules supporting logic terms, unification, indexing, resolution of logic queries, probabilistic logic programming, binary decision diagrams, OR-concurrent resolution, DSL for logic programming, parsing modules, serialisation modules, command-line interface, and graphical user interface. The tool is designed to support knowledge representation and automatic reasoning through logic programming in an extensible and flexible way, encouraging extensions towards other symbolic AI systems than Prolog. It is a pure, multi-platform Kotlin project supporting JVM, JS, Android, and Native platforms, with a lightweight library leveraging the Kotlin common library.

For similar tasks

Tokenizer

This repository contains implementations of byte pair encoding (BPE) tokenizer in Typescript and C# for OpenAI LLMs. The implementations are based on an open-sourced rust implementation in the OpenAI tiktoken. These implementations are valuable for prompt tokenization in Nodejs and .NET environments before feeding prompts into a LLM.

LLaVA-MORE

LLaVA-MORE is a new family of Multimodal Language Models (MLLMs) that integrates recent language models with diverse visual backbones. The repository provides a unified training protocol for fair comparisons across all architectures and releases training code and scripts for distributed training. It aims to enhance Multimodal LLM performance and offers various models for different tasks. Users can explore different visual backbones like SigLIP and methods for managing image resolutions (S2) to improve the connection between images and language. The repository is a starting point for expanding the study of Multimodal LLMs and enhancing new features in the field.

langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience. It offers features such as easy deployment of LangChain models and pipelines, ready-to-use authentication functionality, high-performance FastAPI framework for serving requests, scalability and robustness for language processing applications, support for custom pipelines and processing, well-documented RESTful API endpoints, and asynchronous processing for faster response times.

wllama

Wllama is a WebAssembly binding for llama.cpp, a high-performance and lightweight language model library. It enables you to run inference directly on the browser without the need for a backend or GPU. Wllama provides both high-level and low-level APIs, allowing you to perform various tasks such as completions, embeddings, tokenization, and more. It also supports model splitting, enabling you to load large models in parallel for faster download. With its Typescript support and pre-built npm package, Wllama is easy to integrate into your React Typescript projects.

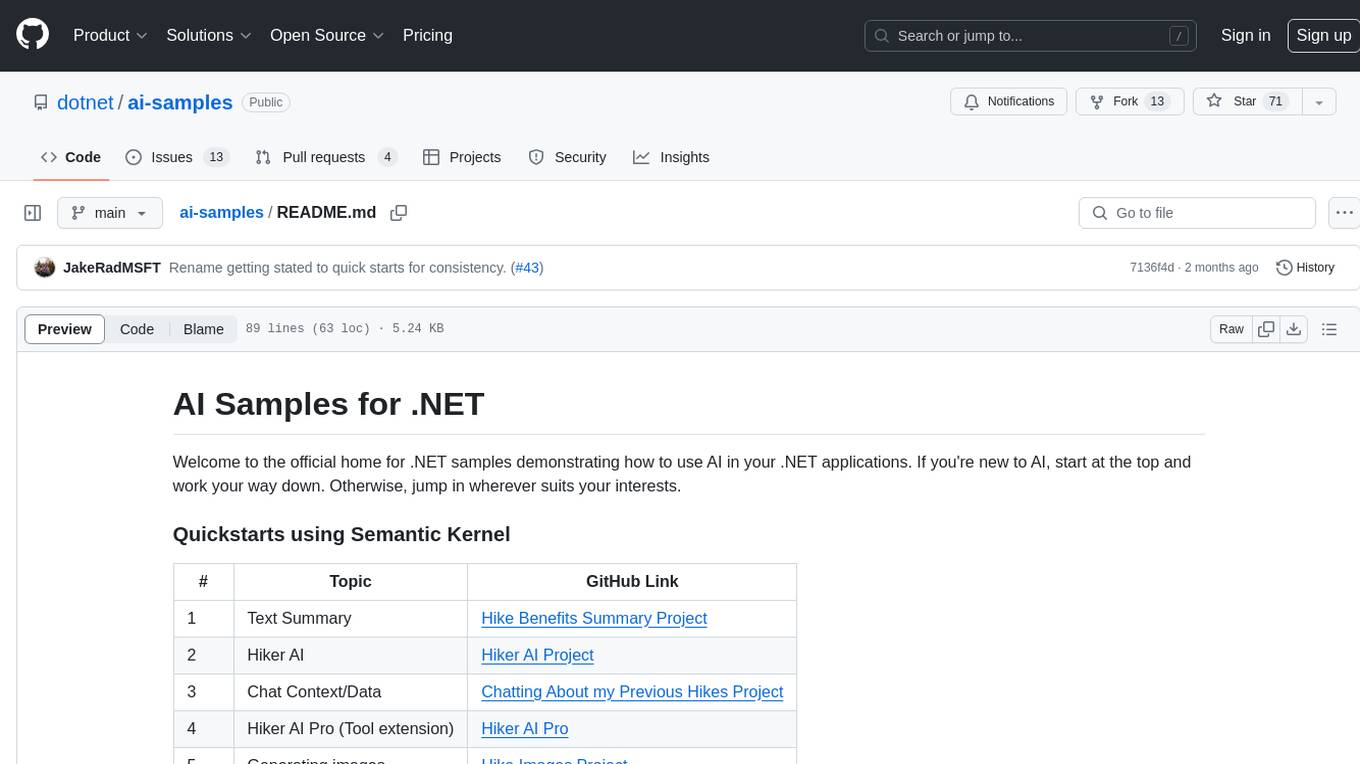

ai-samples

AI Samples for .NET is a repository containing various samples demonstrating how to use AI in .NET applications. It provides quickstarts using Semantic Kernel and Azure OpenAI SDK, covers LLM Core Concepts, End to End Examples, Local Models, Local Embedding Models, Tokenizers, Vector Databases, and Reference Examples. The repository showcases different AI-related projects and tools for developers to explore and learn from.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

chonkie

Chonkie is a lightweight and fast RAG chunking library designed to efficiently split text for RAG (Retrieval-Augmented Generation) applications. It offers various chunking methods like TokenChunker, WordChunker, SentenceChunker, SemanticChunker, SDPMChunker, and an experimental LateChunker. Chonkie is feature-rich, easy to use, fast, supports multiple tokenizers, and comes with a cute pygmy hippo mascot. It aims to provide a no-nonsense solution for chunking text without the need to worry about dependencies or bloat.

flash-tokenizer

FlashTokenizer is a high-performance CPU tokenizer library implemented in C++ for LLM inference serving. It is 10 times faster than BertTokenizerFast in transformers, offering the highest speed and accuracy. Developed to be faster, more accurate, and easier to use than existing tokenizers like BertTokenizerFast, FlashTokenizer is implemented in C++ for straightforward maintenance. It supports parallel processing at the C++ level for batch encoding, delivering outstanding speed. The tokenizer is based on the LinMax Tokenizer proposed in Fast WordPiece Tokenization, enabling tokenization in linear time.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.