aici

AICI: Prompts as (Wasm) Programs

Stars: 1849

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

README:

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

The purpose of AICI is to make it easy to build and experiment with both existing and entirely new Controller strategies for improving LLM generations. By abstracting away implementation details of the underlying LLM inference and serving engine, AICI aims to simplify the development of Controllers, make it easier to write fast Controllers, and ease compatibility across LLM inference and serving engines.

AICI is designed for both local and cloud execution, including (eventually) multi-tenant LLM deployments. Controllers are implemented as light-weight WebAssembly (Wasm) modules which run on the same machine as the LLM inference engine, utilizing the CPU while the GPU is busy with token generation. AICI is one layer in the inference stack, and is designed to allow control libraries such as Guidance, LMQL, and others to run on top of it and gain both efficiency and performance improvements, as well as portability across LLM inference and serving engines.

AICI currently integrates with llama.cpp, HuggingFace Transformers, and rLLM (custom tch-based LLM inference engine), with vLLM in the works.

AICI is:

- Flexible: Controllers can be written in any language that can compile to Wasm (Rust, C, C++, ...), or be interpreted inside Wasm (Python, JavaScript, ...)

- Secure: Controllers are sandboxed and cannot access the filesystem, network, or any other resources

- Fast: Wasm modules are compiled to native code and run in parallel with the LLM inference engine, inducing only a minimal overhead to the generation process

AICI is a prototype, designed and built at Microsoft Research.

- Artificial Intelligence Controller Interface (AICI)

- QuickStart: Example Walkthrough

- Comprehensive Guide: Exploring Further

- Architecture

- Security

- Performance

- Flexibility

- Acknowledgements

- Contributing

- Trademarks

In this quickstart, we'll guide you through the following steps:

- Set up rLLM Server and AICI Runtime.

- Build and deploy a Controller.

- Use AICI to control LLM output, so you can customize a LLM to follow specific rules when generating text.

To compile AICI components, you need to set up your development environment for Rust. For this quickstart you also need Python 3.11 or later to create a controller.

[!NOTE] Windows users: please use WSL2 or the included devcontainer. Adding native Windows support is tracked here.

MacOS users: please make sure you have XCode command line tools installed by running

xcode-select -pand, if not installed, runxcode-select --install.CUDA: the CUDA build relies on specific libtorch installation. It's highly recommended you use the included devcontainer.

If you're using devcontainer, you can skip to the next section.

Using the system package manager, install the necessary tools for building code in the repository, including git, cmake and ccache.

For instance in WSL / Ubuntu using apt:

sudo apt-get install --assume-yes --no-install-recommends \

build-essential cmake ccache pkg-config libssl-dev libclang-dev clang llvm-dev git-lfs

or using Homebrew on macOS:

brew install git cmake ccache

Then install Rust, Rustup and Cargo, following the instructions provided here and here:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

After installation, verify that the rustup --version command is accessible by running it from the terminal. If the command isn't recognized, try opening a new terminal session.

Next install wasm32-wasi Rust component:

rustup target add wasm32-wasi

If you already had Rust installed, or are getting complaints from Cargo about outdated versions, run:

rustup update

Last, to work with Python controllers and scripts (like this tutorial), run this command to install the required packages:

pip install pytest pytest-forked ujson posix_ipc numpy requests

The rLLM server has two backends, one based on libtorch and CUDA

(rllm-cuda), and the other based on llama.cpp (rllm-llamacpp).

The rllm-cuda backend only works with NVidia GPUs with compute capability 8.0 or later

(A100 and later; RTX 30x0 and later) and requires a fiddly setup of libtorch

-- it's strongly recommended to use the included devcontainer.

While this guide focuses on the rllm-llamacpp backend,

the build steps are the same for rllm-cuda, modulo the folder name.

After dev env setup above, clone the AICI repository and proceed with the next steps outlined below.

Use the following command to build and run aicirt and rllm-llamacpp:

cd rllm/rllm-llamacpp

./server.sh phi2

You can pass other model names as argument (run ./server.sh without arguments to see available models).

You can also use a HuggingFace URL to .gguf file or a local path to a .gguf file.

(For rllm-cuda use HuggingFace model id or path to folder).

./server.sh orca

You can find more details about rllm-llamacpp here.

The rLLM server provides a HTTP interface, utilized for configuration tasks and processing requests. You can also use this interface to promptly verify its status. For instance, if you open http://127.0.0.1:4242/v1/models, you should see:

{

"object": "list",

"data": [

{

"object": "model",

"id": "TheBloke/phi-2-GGUF",

"created": 946810800,

"owned_by": "owner"

}

]

}confirming that the selected model is loaded.

AICI allows hosting custom logic, called Controllers, that initiate, terminate, and interact with LLMs token generation. Controllers take input arguments, process them, and return a result with logs, LLM tokens, and variables.

The repository includes some examples, in particular:

- jsctrl: a controller that accepts JavaScript code as input for execution. This code can interact with the model to generate text and tokens.

- pyctrl: a controller that accepts Python code as input for execution. This code can also interact with the model to generate text and tokens.

In this example we'll utilize pyctrl to manage token generation using a simple Python script. If you want, you can build and upload pyctrl, however by default the server will automatically download the latest release of pyctrl from GitHub.

In general, controllers require building and deployment, while scripts (Python or JavaScript) are sent with each request.

The following illustrates the relationship between the rLLM server, the AICI runtime, and the controller:

erDiagram

Host ||--|{ CPU : ""

Host ||--|{ GPU : ""

CPU ||--|| "rLLM Server" : execute

CPU ||--|{ "AICI Runtime" : execute

"AICI Runtime" ||--|| "Controller" : instantiate

GPU ||--|{ "LLM token generation" : executeSuppose we aim for a model to generate a list, adhering to a specific format and containing only five items.

Typically, achieving this involves prompt engineering, crafting the prompt precisely with clear instructions, such as:

What are the five most popular types of vehicles?

Return the result as a numbered list.

Do not add explanations, only the list.

The prompt would also vary depending on the model in use, given that each model tends to add explanations and understands instructions in different ways.

With AICI, we shift control back to code, and we can simplify the prompt to:

What are the most popular types of vehicles?

using code to:

- Limit the list to 5 items

- Prevent the model from adding some initial explanation

- Format to a numbered list

- Stop the model from adding some text after the list.

Let's create a list-of-five.py python file with the following content:

import pyaici.server as aici

# Force the model to generate a well formatted list of 5 items, e.g.

# 1. name 1

# 2. name 2

# 3. name 3

# 4. name 4

# 5. name 5

async def main():

# This is the prompt we want to run.

# Note how the prompt doesn't mention a number of vehicles or how to format the result.

prompt = "What are the most popular types of vehicles?\n"

# Tell the model to generate the prompt string, ie. let's start with the prompt "to complete"

await aici.FixedTokens(prompt)

# Store the current position in the token generation process

marker = aici.Label()

for i in range(1,6):

# Tell the model to generate the list number

await aici.FixedTokens(f"{i}.")

# Wait for the model to generate a vehicle name and end with a new line

await aici.gen_text(stop_at = "\n")

await aici.FixedTokens("\n")

# Store the tokens generated in a result variable

aici.set_var("result", marker.text_since())

aici.start(main())Running the script is not too different from sending a prompt. In this case, we're sending control logic and instructions all together.

To see the final result, execute the following command:

./aici.sh run list-of-five.py

Result:

Running with tagged AICI Controller: gh:microsoft/aici/pyctrl

[0]: FIXED 'What are the most popular types of vehicles?\n'

[0]: FIXED '1.'

[0]: GEN ' Cars\n'

[0]: FIXED '2.'

[0]: GEN ' Motorcycles\n'

[0]: FIXED '3.'

[0]: GEN ' Bicycles\n'

[0]: FIXED '4.'

[0]: GEN ' Trucks\n'

[0]: FIXED '5.'

[0]: GEN ' Boats\n'

[0]: FIXED '\n'

[DONE]

[Response] What are the most popular types of vehicles?

1. Cars

2. Motorcycles

3. Bicycles

4. Trucks

5. Boats

response saved to tmp/response.json

Usage: {'sampled_tokens': 16, 'ff_tokens': 37, 'cost': 69}

Timing: {'http_response': 0.05193686485290527, 'data0': 0.05199289321899414, 'first_token': 0.0658726692199707, 'last_token': 0.1784682273864746}

Tokens/sec: {'prompt': 861.0913072488067, 'sampling': 89.65181217019571}

Storage: {'result': '1. Cars\n2. Motorcycles\n3. Bicycles\n4. Trucks\n5. Boats\n\n'}

This repository contains a number of components, and which ones you need depends on your use case.

You can use an existing controller module.

We provide PyCtrl and JsCtrl

that let you script controllers using server-side Python and JavaScript, respectively.

The pyaici package contains aici command line tool that lets you

upload and run scripts with any controller

(we also provide REST API definition for the curious).

🧑💻Python code samples for scripting PyCtrl and a JavaScript Hello World for JSCtrl

We anticipate libraries will be built on top of controllers. We provide an example in promptlib - a client-side Python library that generates interacts with DeclCtrl via the pyaici package.

🧑💻 Example notebook that uses PromptLib to interact with DeclCtrl.

The controllers can be run in a cloud or local AICI-enabled LLM inference engine. You can run the provided reference engine (rLLM) locally with either libtorch+CUDA or llama.cpp backend.

To develop a new controller, use a Rust starter project that shows usage of aici_abi library, which simplifies implementing the low-level AICI interface.

🧑💻Sample code for a minimal new controller to get you started

To add AICI support to a new LLM inference engine, you will need to implement LLM-side of the protocol that talks to AICI runtime.

Finally, you may want to modify any of the provided components - PRs are most welcome!

AICI abstracts LLM inference engine from the controller and vice-versa, as in the picture below. The rounded nodes are aspirational. Additional layers can be built on top - we provide promptlib, but we strongly believe that Guidance, LMQL, SGLang, Outlines, jsonformer, LMFE, etc. can also run on top of AICI (either with custom controllers or utilizing PyCtrl or JsCtrl).

graph TD

PyCtrl -- AICI --> aicirt[AICI-runtime]

JsCtrl -- AICI --> aicirt

guidance([GuidanceCtrl]) -- AICI --> aicirt

lmql([LMQL Ctrl]) -- AICI --> aicirt

aicirt -- POSIX SHM --> rLLM

aicirt -- POSIX SHM --> llama[llama.cpp]

aicirt -- POSIX SHM --> pyaici

pyaici -- Python --> vLLM(vLLM)

pyaici -- Python --> hf[HF Transformers]The pyaici package makes it easier to integrate AICI with Python-based LLM inference engines. Take a look at integration with HuggingFace Transformers, though note that it doesn't support forking (generation of multiple sequences in parallel). The vLLM REST server is currently out of date. Please use the rLLM-cuda or rLLM-llama.cpp for now.

-

aicirtruns in a separate process, and can run under a different user than the LLM engine - Wasm modules are sandboxed by Wasmtime

- Wasm only have access to

aici_host_*functions, implemented in hostimpl.rs -

aicirtalso exposes a partial WASI interface; however almost all the functions are no-op, except forfd_writewhich shims file descriptors 1 and 2 (stdout and stderr) to print debug messages - each Wasm module runs in a separate process, helping with Spectre/Meltdown mitigation and allowing limits on CPU usage

In particular, Wasm modules cannot access the filesystem, network, or any other resources. They also cannot spin threads or access any timers (this is relevant for Spectre/Meltdown attacks).

Most of computation in AICI Controllers occurs on the CPU, in parallel with the logit generation on the GPU. The generation occurs in steps, where logits are generated in parallel for a new token for each sequence in a batch (typically between 1 and 50). This involves reading the whole model and KV caches for sequences in the batch from the GPU memory. For optimal batch throughput, the model and KV caches should utilize a major fraction of the GPU memory, and reading the whole memory takes about 40ms on A100 GPU (80GB).

Thus, each step of generation takes on the order of 20-50ms. With careful engineering, this is more than enough to compute the set of allowed tokens in Rust compiled to Wasm. These can be combined either natively in Rust, or via Python or JavaScript interpreters we provide.

For example, computing allowed token set in the 32000-strong vocabulary of Llama model takes:

- about 2.0ms for Yacc grammar of the C programming language

- about 0.3ms for a regular expression

- about 0.2ms for a substring constraint, from 4kB string

The above numbers are for a single sequence, however each sequence is processed in separate process, and thus if there is more cores than sequences (which is typical), they do not change. They also include overhead of calling into Python interpreter implemented in Wasm, and then back into Rust-generated Wasm code for the constraint itself. They are all well within the 20-50ms budget, so do not affect the generation time at all.

There is also some overhead in the critical path of sampling. It comes down to about 0.3ms per generation step when executing 10 sequences in parallel (this is irrespective of the constraint used). The overhead goes up to around 0.7ms for 40 sequences (though it has not been fully optimized yet).

WebAssembly is designed to have minimal overhead, compared to native code. In our experience, highly optimized Rust code is less than 2x slower when run in Wasmtime than native. This is 10-100x better than JavaScript or Python.

All measurements done on AMD EPYC 7V13 with nVidia A100 GPU with 80GB of VRAM.

The low-level interface that AICI runtime provides allows for:

- interaction with the LLM inference engine before, during, and after every generated token

- constraining decoding to a set of tokens

- backtracking KV-cache to a previous state

- fast-forwarding several tokens at a time (if they are known)

- forking generation into multiple branches

- communication between forks via shared variables

- utility functions for converting between tokens and byte strings

It can be utilized from any language that compiles to Wasm.

This repository provides a Rust library that makes it easy to implement controllers in Rust, and provides efficient implementations of specific constraints (regular expressions, yacc grammars, substrings). We also provide Python and JavaScript interpreters that allow to glue these constraints together. All of these can be easily extended.

- Flash Attention kernels are copied from flash-attention repo; see BSD LICENSE

- Paged Attention kernels are copied from vLLM repo; see Apache LICENSE

- OpenAI API definitions are copied and modified from candle-vllm; see MIT LICENSE

- cache_engine.rs, config.rs, and scheduler.rs are loosely based on vLLM

- llama.rs, phi.rs and logits.rs are based on candle-transformers

- specific Python library files are copied from RustPython (as we only use a subset of them)

- the example ANSI C grammar is based on https://www.lysator.liu.se/c/ANSI-C-grammar-y.html by Jeff Lee (from 1985)

If you find the AI Controller Interface and its ideas for defining a new layer in the LLM inference stack useful, please cite the package using the following reference:

- Michal Moskal, Madan Musuvathi, Emre Kıcıman. AI Controller Interface, (2024), GitHub repository. https://github.com/microsoft/aici

Bibtex:

@misc{Moskal2024,

author = {Moskal, Michal and Musuvathi, Madan and {K\i c\i man}, Emre},

title = {{AI Controller Interface}},

year = {2024},

publisher = {{GitHub}},

journal = {{GitHub} repository},

howpublished = {\url{https://github.com/microsoft/aici/}}

}This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact [email protected] with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aici

Similar Open Source Tools

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

ezkl

EZKL is a library and command-line tool for doing inference for deep learning models and other computational graphs in a zk-snark (ZKML). It enables the following workflow: 1. Define a computational graph, for instance a neural network (but really any arbitrary set of operations), as you would normally in pytorch or tensorflow. 2. Export the final graph of operations as an .onnx file and some sample inputs to a .json file. 3. Point ezkl to the .onnx and .json files to generate a ZK-SNARK circuit with which you can prove statements such as: > "I ran this publicly available neural network on some private data and it produced this output" > "I ran my private neural network on some public data and it produced this output" > "I correctly ran this publicly available neural network on some public data and it produced this output" In the backend we use the collaboratively-developed Halo2 as a proof system. The generated proofs can then be verified with much less computational resources, including on-chain (with the Ethereum Virtual Machine), in a browser, or on a device.

llama-on-lambda

This project provides a proof of concept for deploying a scalable, serverless LLM Generative AI inference engine on AWS Lambda. It leverages the llama.cpp project to enable the usage of more accessible CPU and RAM configurations instead of limited and expensive GPU capabilities. By deploying a container with the llama.cpp converted models onto AWS Lambda, this project offers the advantages of scale, minimizing cost, and maximizing compute availability. The project includes AWS CDK code to create and deploy a Lambda function leveraging your model of choice, with a FastAPI frontend accessible from a Lambda URL. It is important to note that you will need ggml quantized versions of your model and model sizes under 6GB, as your inference RAM requirements cannot exceed 9GB or your Lambda function will fail.

jaison-core

J.A.I.son is a Python project designed for generating responses using various components and applications. It requires specific plugins like STT, T2T, TTSG, and TTSC to function properly. Users can customize responses, voice, and configurations. The project provides a Discord bot, Twitch events and chat integration, and VTube Studio Animation Hotkeyer. It also offers features for managing conversation history, training AI models, and monitoring conversations.

pgai

pgai simplifies the process of building search and Retrieval Augmented Generation (RAG) AI applications with PostgreSQL. It brings embedding and generation AI models closer to the database, allowing users to create embeddings, retrieve LLM chat completions, reason over data for classification, summarization, and data enrichment directly from within PostgreSQL in a SQL query. The tool requires an OpenAI API key and a PostgreSQL client to enable AI functionality in the database. Users can install pgai from source, run it in a pre-built Docker container, or enable it in a Timescale Cloud service. The tool provides functions to handle API keys using psql or Python, and offers various AI functionalities like tokenizing, detokenizing, embedding, chat completion, and content moderation.

kork

Kork is an experimental Langchain chain that helps build natural language APIs powered by LLMs. It allows assembling a natural language API from python functions, generating a prompt for correct program writing, executing programs safely, and controlling the kind of programs LLMs can generate. The language is limited to variable declarations, function invocations, and arithmetic operations, ensuring predictability and safety in production settings.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

llm-subtrans

LLM-Subtrans is an open source subtitle translator that utilizes LLMs as a translation service. It supports translating subtitles between any language pairs supported by the language model. The application offers multiple subtitle formats support through a pluggable system, including .srt, .ssa/.ass, and .vtt files. Users can choose to use the packaged release for easy usage or install from source for more control over the setup. The tool requires an active internet connection as subtitles are sent to translation service providers' servers for translation.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.

llm.c

LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) is ~1,000 lines of clean code in a single file. It compiles and runs instantly, and exactly matches the PyTorch reference implementation. I chose GPT-2 as the first working example because it is the grand-daddy of LLMs, the first time the modern stack was put together.

mscclpp

MSCCL++ is a GPU-driven communication stack for scalable AI applications. It provides a highly efficient and customizable communication stack for distributed GPU applications. MSCCL++ redefines inter-GPU communication interfaces, delivering a highly efficient and customizable communication stack for distributed GPU applications. Its design is specifically tailored to accommodate diverse performance optimization scenarios often encountered in state-of-the-art AI applications. MSCCL++ provides communication abstractions at the lowest level close to hardware and at the highest level close to application API. The lowest level of abstraction is ultra light weight which enables a user to implement logics of data movement for a collective operation such as AllReduce inside a GPU kernel extremely efficiently without worrying about memory ordering of different ops. The modularity of MSCCL++ enables a user to construct the building blocks of MSCCL++ in a high level abstraction in Python and feed them to a CUDA kernel in order to facilitate the user's productivity. MSCCL++ provides fine-grained synchronous and asynchronous 0-copy 1-sided abstracts for communication primitives such as `put()`, `get()`, `signal()`, `flush()`, and `wait()`. The 1-sided abstractions allows a user to asynchronously `put()` their data on the remote GPU as soon as it is ready without requiring the remote side to issue any receive instruction. This enables users to easily implement flexible communication logics, such as overlapping communication with computation, or implementing customized collective communication algorithms without worrying about potential deadlocks. Additionally, the 0-copy capability enables MSCCL++ to directly transfer data between user's buffers without using intermediate internal buffers which saves GPU bandwidth and memory capacity. MSCCL++ provides consistent abstractions regardless of the location of the remote GPU (either on the local node or on a remote node) or the underlying link (either NVLink/xGMI or InfiniBand). This simplifies the code for inter-GPU communication, which is often complex due to memory ordering of GPU/CPU read/writes and therefore, is error-prone.

For similar tasks

aici

The Artificial Intelligence Controller Interface (AICI) lets you build Controllers that constrain and direct output of a Large Language Model (LLM) in real time. Controllers are flexible programs capable of implementing constrained decoding, dynamic editing of prompts and generated text, and coordinating execution across multiple, parallel generations. Controllers incorporate custom logic during the token-by-token decoding and maintain state during an LLM request. This allows diverse Controller strategies, from programmatic or query-based decoding to multi-agent conversations to execute efficiently in tight integration with the LLM itself.

tenere

Tenere is a TUI interface for Language Model Libraries (LLMs) written in Rust. It provides syntax highlighting, chat history, saving chats to files, Vim keybindings, copying text from/to clipboard, and supports multiple backends. Users can configure Tenere using a TOML configuration file, set key bindings, and use different LLMs such as ChatGPT, llama.cpp, and ollama. Tenere offers default key bindings for global and prompt modes, with features like starting a new chat, saving chats, scrolling, showing chat history, and quitting the app. Users can interact with the prompt in different modes like Normal, Visual, and Insert, with various key bindings for navigation, editing, and text manipulation.

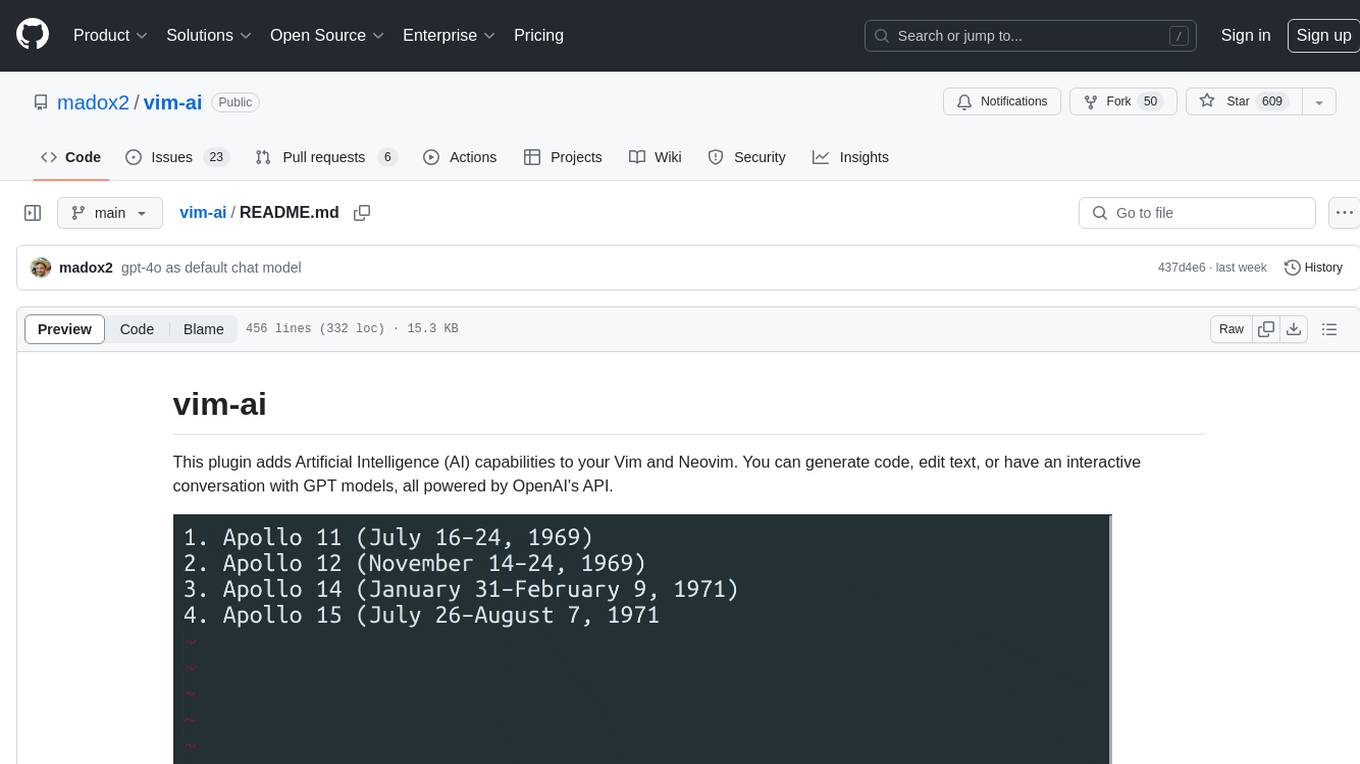

vim-ai

vim-ai is a plugin that adds Artificial Intelligence (AI) capabilities to Vim and Neovim. It allows users to generate code, edit text, and have interactive conversations with GPT models powered by OpenAI's API. The plugin uses OpenAI's API to generate responses, requiring users to set up an account and obtain an API key. It supports various commands for text generation, editing, and chat interactions, providing a seamless integration of AI features into the Vim text editor environment.

parrot.nvim

Parrot.nvim is a Neovim plugin that prioritizes a seamless out-of-the-box experience for text generation. It simplifies functionality and focuses solely on text generation, excluding integration of DALLE and Whisper. It supports persistent conversations as markdown files, custom hooks for inline text editing, multiple providers like Anthropic API, perplexity.ai API, OpenAI API, Mistral API, and local/offline serving via ollama. It allows custom agent definitions, flexible API credential support, and repository-specific instructions with a `.parrot.md` file. It does not have autocompletion or hidden requests in the background to analyze files.

talon-ai-tools

Control large language models and AI tools through voice commands using the Talon Voice dictation engine. This tool is designed to help users quickly edit text, code by voice, reduce keyboard use for those with health issues, and speed up workflow by using AI commands across the desktop. It prompts and extends tools like Github Copilot and OpenAI API for text and image generation. Users can set up the tool by downloading the repo, obtaining an OpenAI API key, and customizing the endpoint URL for preferred models. The tool can be used without an OpenAI key and can be exclusively used with Copilot for those not needing LLM integration.

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.