LLMeBench

Benchmarking Large Language Models

Stars: 94

LLMeBench is a flexible framework designed for accelerating benchmarking of Large Language Models (LLMs) in the field of Natural Language Processing (NLP). It supports evaluation of various NLP tasks using model providers like OpenAI, HuggingFace Inference API, and Petals. The framework is customizable for different NLP tasks, LLM models, and datasets across multiple languages. It features extensive caching capabilities, supports zero- and few-shot learning paradigms, and allows on-the-fly dataset download and caching. LLMeBench is open-source and continuously expanding to support new models accessible through APIs.

README:

This repository contains code for the LLMeBench framework (described in this paper). The framework currently supports evaluation of a variety of NLP tasks using different model providers such as OpenAI (e.g., GPT), HuggingFace Inference API, Azure, and Petals (e.g., BLOOMZ); it can be seamlessly customized for any NLP task, LLM model and dataset, regardless of language.

- 26 February, 2025 -- New features added: Assets for Spoken Native QA, Multilingual Native QA, and Propagandistic Content Classification, along with datasets for hateful and propagandistic memes.

- 20 January, 2025 -- New assets added. Updated versions for openai, anthropic and sentence_transformers.

- 21 July, 2024 -- Multimodal capabilities have been added. Assets now include support for GPT-4 (OpenAI) and Sonnet (Anthropic).

Developing LLMeBench is an ongoing effort and it will be continuously expanded. Currently, the framework features the following:

- Supports 34 tasks featuring 7 model providers. Tested with 80 datasets associated with 16 languages, resulting in ~800 benchmarking assets ready to run.

- Support for text, speech, and multimodality

- Easily extensible to new models accessible through APIs.

- Extensive caching capabilities, to avoid costly API re-calls for repeated experiments.

- Supports zero- and few-shot learning paradigms.

- On-the-fly dataset download and caching.

- Open-source.

- Install LLMeBench:

pip install 'llmebench[fewshot]'

- Download the current assets:

python -m llmebench assets download

This will fetch assets and place them in the current working directory.

- Download one of the datasets, e.g. ArSAS:

python -m llmebench data download ArSAS

This will download the data to the current working directory inside the data folder.

- Evaluate!

For example, to evaluate the performance of a random baseline for sentiment analysis on the ArSAS dataset, you can run:

python -m llmebench --filter 'sentiment/ArSAS_Random*' assets/ results/

which uses the ArSAS_Random "asset": a file that specifies the dataset, model and task to evaluate. Here, ArSAS_Random is the asset name referring to the ArSAS dataset name and the Random model, and assets/ar/sentiment_emotion_others/sentiment/ is the directory where the benchmarking asset for the sentiment analysis task on the Arabic ArSAS dataset can be found. Results will be saved in a directory called results.
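To make the role of the --filter pattern concrete, here is a minimal sketch of wildcard matching over asset paths using Python's standard fnmatch module. The asset file names are hypothetical, and wrapping the pattern in extra wildcards is an assumption made for illustration; the framework's own matching logic may differ.

```python
from fnmatch import fnmatch

# Hypothetical asset paths, relative to the assets/ directory.
ASSET_PATHS = [
    "ar/sentiment_emotion_others/sentiment/ArSAS_Random.py",
    "ar/sentiment_emotion_others/sentiment/ArSAS_GPT4_ZeroShot.py",
    "ar/sentiment_emotion_others/emotion/Emotion_Random.py",
]


def select_assets(paths, pattern):
    """Return the paths that contain a match for a shell-style pattern."""
    # Assumption: the pattern may match anywhere in the path, so it is
    # wrapped in leading and trailing wildcards before matching.
    wrapped = f"*{pattern}*"
    return [p for p in paths if fnmatch(p, wrapped)]


selected = select_assets(ASSET_PATHS, "sentiment/ArSAS_Random*")
print(selected)
```

With these made-up paths, only the ArSAS_Random entry is selected, which mirrors how a narrow filter restricts a run to a single asset.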

In addition to supporting the user to implement their own LLM evaluation and benchmarking experiments, the framework comes equipped with benchmarking assets over a large variety of datasets and NLP tasks. To benchmark models on the same datasets, the framework automatically downloads the datasets when possible. Manually downloading them (for example to explore the data before running any assets) can be done as follows:

python -m llmebench data download <DatasetName>

Voilà! All ready to start evaluation...

Note: Some datasets and associated assets are implemented in LLMeBench, but the dataset files can't be redistributed; it is the responsibility of the framework user to acquire them from their original sources. The metadata for each Dataset includes a link to the primary page for the dataset, which can be used to obtain the data. The data should be downloaded and placed in a folder under data/<DatasetName>, where <DatasetName> is the same as the implementation under llmebench.datasets. For instance, the ADIDataset should have its data under data/ADI/.

Disclaimer: The datasets associated with the current version of LLMeBench are either existing datasets or processed versions of them. We refer users to the original license accompanying each dataset as provided in the metadata for each dataset script. It is our understanding that these licenses allow for dataset use and redistribution for research or non-commercial purposes.

To run the benchmark:

python -m llmebench --filter '*benchmarking_asset*' --limit <k> --n_shots <n> --ignore_cache <benchmark-dir> <results-dir>

- --filter '*benchmarking_asset*': (Optional) This flag indicates specific tasks in the benchmark to run. The framework will run a wildcard search using 'benchmarking_asset' in the assets directory specified by <benchmark-dir>. If not set, the framework will run the entire benchmark.

- --limit <k>: (Optional) Specify the number of samples from input data to run through the pipeline, to allow efficient testing. If not set, all the samples in a dataset will be evaluated.

- --n_shots <n>: (Optional) If defined, the framework will expect a few-shot asset and will run the few-shot learning paradigm, with n as the number of shots. If not set, zero-shot will be assumed.

- --ignore_cache: (Optional) A flag to ignore loading and saving intermediate model responses from/to cache.

- <benchmark-dir>: Path of the directory where the benchmarking assets can be found.

- <results-dir>: Path of the directory where to save output results, along with intermediate cached values.

You might need to also define environment variables (like access tokens and API URLs, e.g. AZURE_API_URL and AZURE_API_KEY) depending on the benchmark you are running. This can be done by either:

- export AZURE_API_KEY="..." before running the above command, or

- prepending AZURE_API_URL="..." AZURE_API_KEY="..." to the above command, or

- supplying a dotenv file using the --env flag. Sample dotenv files are provided in the env/ folder.

Each model provider's documentation specifies what environment variables are expected at runtime.
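For scripted runs, the command line and environment described above can be assembled programmatically. The following is a minimal sketch: the helper names build_command and build_env are hypothetical, and only the flags documented above are used. It builds the argument list without launching anything.

```python
import os
import sys


def build_command(benchmark_dir, results_dir, asset_filter=None,
                  limit=None, n_shots=None, ignore_cache=False):
    """Assemble the argument list for `python -m llmebench`."""
    cmd = [sys.executable, "-m", "llmebench"]
    if asset_filter is not None:
        cmd += ["--filter", asset_filter]
    if limit is not None:
        cmd += ["--limit", str(limit)]
    if n_shots is not None:
        cmd += ["--n_shots", str(n_shots)]
    if ignore_cache:
        cmd.append("--ignore_cache")
    # The two positional arguments come last, as in the command above.
    cmd += [benchmark_dir, results_dir]
    return cmd


def build_env(extra):
    """Copy the current environment and add provider credentials."""
    env = dict(os.environ)
    env.update(extra)
    return env


cmd = build_command("assets/", "results/",
                    asset_filter="sentiment/ArSAS_Random*", limit=10)
env = build_env({"AZURE_API_URL": "...", "AZURE_API_KEY": "..."})
print(" ".join(cmd[1:]))
# To launch the run: subprocess.run(cmd, env=env, check=True)
```

Passing env to subprocess.run has the same effect as prepending the variables to the command in a shell.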

<results-dir>: This folder will contain the outputs resulting from running assets. It follows this structure:

- all_results.json: A file that presents summarized output of all assets that were run where <results-dir> was specified as the output directory.

- The framework will create a sub-folder per benchmarking asset in this directory. A sub-folder will contain:

- n.json: A file per dataset sample, where n indicates sample order in the dataset input file. This file contains input sample, full prompt sent to the model, full model response, and the model output after post-processing as defined in the asset file.

- summary.jsonl: Lists all input samples, and for each, a summarized model prediction, and the post-processed model prediction.

- summary_failed.jsonl: Lists all input samples that didn't get a successful response from the model, in addition to output model's reason behind failure.

- results.json: Contains a summary on number of processed and failed input samples, and evaluation results.

- For few-shot experiments, all results are stored in a sub-folder named like 3_shot, where the number signifies the number of few-shot samples provided in that particular experiment.
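The layout above lends itself to simple post-hoc aggregation. Below is a minimal sketch that gathers every results.json under a results directory; the function name collect_results is hypothetical, and the sketch makes no assumption about the fields stored inside each file.

```python
import json
from pathlib import Path


def collect_results(results_dir):
    """Map each sub-folder that holds a results.json to its parsed content."""
    root = Path(results_dir)
    collected = {}
    # rglob also finds results.json inside few-shot sub-folders such as 3_shot.
    for path in sorted(root.rglob("results.json")):
        key = path.parent.relative_to(root).as_posix()
        with path.open(encoding="utf-8") as handle:
            collected[key] = json.load(handle)
    return collected


if __name__ == "__main__":
    # Prints nothing if the results directory is empty or missing.
    for asset, result in collect_results("results").items():
        print(asset, result)
```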

jq is a helpful command line utility to analyze the resulting json files. The simplest usage is jq . summary.jsonl, which will print a summary of all samples and model responses in a readable form.
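Where jq is not available, a rough equivalent of that pretty-printing can be sketched with Python's standard json module. The helper name and the sample records below are hypothetical; the real fields in summary.jsonl may differ.

```python
import json


def pretty_jsonl(text):
    """Re-indent every non-empty JSON Lines record for easier reading."""
    records = []
    for line in text.splitlines():
        if line.strip():
            record = json.loads(line)
            records.append(json.dumps(record, indent=2, ensure_ascii=False))
    return "\n".join(records)


# Hypothetical records, one JSON object per line.
sample = (
    '{"input": "text 1", "label": "Positive"}\n'
    '{"input": "text 2", "label": "Negative"}\n'
)
print(pretty_jsonl(sample))
```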

The framework provides caching (if --ignore_cache isn't passed), to enable the following:

- Allowing users to bypass making API calls for items that have already been successfully processed.

- Enhancing the post-processing of the models’ output, as post-processing can be performed repeatedly without having to call the API every time.
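The two benefits listed above can be illustrated with a small sketch of response caching. This is not the framework's implementation: the ResponseCache class, the file layout, and the fake_model stand-in are all assumptions chosen to show the idea that a stored raw response can be post-processed repeatedly without another API call.

```python
import json
import tempfile
from pathlib import Path


class ResponseCache:
    """Store one raw model response per sample index as a JSON file."""

    def __init__(self, cache_dir):
        self.cache_dir = Path(cache_dir)
        self.cache_dir.mkdir(parents=True, exist_ok=True)

    def get_or_call(self, index, prompt, call_model):
        path = self.cache_dir / f"{index}.json"
        if path.exists():
            # Cache hit: reuse the stored response, no API call is made.
            return json.loads(path.read_text(encoding="utf-8"))["response"]
        # Only reached on a miss; a failing call raises and stores nothing.
        response = call_model(prompt)
        payload = {"prompt": prompt, "response": response}
        path.write_text(json.dumps(payload), encoding="utf-8")
        return response


calls = []


def fake_model(prompt):
    """Stand-in for a paid API call; records each invocation."""
    calls.append(prompt)
    return f"raw answer to: {prompt}"


with tempfile.TemporaryDirectory() as tmp:
    cache = ResponseCache(tmp)
    first = cache.get_or_call(0, "Is this positive?", fake_model)
    # A second pass, e.g. after changing post-processing, hits the cache.
    second = cache.get_or_call(0, "Is this positive?", fake_model)

print(len(calls))  # the stand-in model was invoked once
```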

The framework has some preliminary support to automatically select n examples per test sample based on a maximal marginal relevance-based approach (using langchain's implementation). This will be expanded in the future to have more few-shot example selection mechanisms (e.g., random, class-based, etc.).
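As an illustration of the idea behind maximal marginal relevance (MMR), here is a minimal pure-Python sketch. It is not langchain's implementation; the function names, the toy 2-D vectors standing in for embeddings, and the lambda_mult trade-off parameter are assumptions for this example. Each step picks the candidate that is most similar to the query while being least similar to the examples already chosen.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr_select(query, candidates, n, lambda_mult=0.5):
    """Return indices of up to n candidates chosen by MMR."""
    selected = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < n:
        def score(i):
            relevance = cosine(query, candidates[i])
            # Redundancy is the highest similarity to anything already chosen.
            redundancy = max(
                (cosine(candidates[i], candidates[j]) for j in selected),
                default=0.0,
            )
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.0]
pool = [[0.9, 0.1], [0.9, 0.12], [0.5, 0.5]]
print(mmr_select(query, pool, 2, lambda_mult=0.3))  # favours diversity: [0, 2]
print(mmr_select(query, pool, 2, lambda_mult=1.0))  # pure relevance: [0, 1]
```

A lower lambda_mult weights diversity more heavily, which is why the near-duplicate second vector is skipped in the first call.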

To run few-shot assets, supply the --n_shots <n> option to the benchmarking script. This is set to 0 by default, in which case only zero-shot assets are run. If --n_shots is greater than zero, only few-shot assets are run.

The tutorials directory provides tutorials on the following: updating an existing asset, advanced usage commands to run different benchmarking use cases, and extending the framework by at least one of these components:

- Model Provider

- Task

- Dataset

- Asset

Please cite our papers when referring to this framework:

@inproceedings{abdelali-2024-larabench,

title = "{{LAraBench}: Benchmarking Arabic AI with Large Language Models}",

author ={Ahmed Abdelali and Hamdy Mubarak and Shammur Absar Chowdhury and Maram Hasanain and Basel Mousi and Sabri Boughorbel and Samir Abdaljalil and Yassine El Kheir and Daniel Izham and Fahim Dalvi and Majd Hawasly and Nizi Nazar and Yousseif Elshahawy and Ahmed Ali and Nadir Durrani and Natasa Milic-Frayling and Firoj Alam},

booktitle = {Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers},

month = mar,

year = {2024},

address = {Malta},

publisher = {Association for Computational Linguistics},

}

@inproceedings{dalvi2023llmebench,

title={{LLMeBench}: A Flexible Framework for Accelerating LLMs Benchmarking},

author={Fahim Dalvi and Maram Hasanain and Sabri Boughorbel and Basel Mousi and Samir Abdaljalil and Nizi Nazar and Ahmed Abdelali and Shammur Absar Chowdhury and Hamdy Mubarak and Ahmed Ali and Majd Hawasly and Nadir Durrani and Firoj Alam},

booktitle = {Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations},

month = mar,

year = {2024},

address = {Malta},

publisher = {Association for Computational Linguistics},

}

Please consider citing the following papers if you use the assets derived from them.

@inproceedings{kmainasi2024native,

title={Native vs non-native language prompting: A comparative analysis},

author={Kmainasi, Mohamed Bayan and Khan, Rakif and Shahroor, Ali Ezzat and Bendou, Boushra and Hasanain, Maram and Alam, Firoj},

booktitle={International Conference on Web Information Systems Engineering},

pages={406--420},

year={2024},

organization={Springer}

}

@article{hasan2024nativqa,

title={{NativQA}: Multilingual culturally-aligned natural query for {LLMs}},

author={Hasan, Md Arid and Hasanain, Maram and Ahmad, Fatema and Laskar, Sahinur Rahman and Upadhyay, Sunaya and Sukhadia, Vrunda N and Kutlu, Mucahid and Chowdhury, Shammur Absar and Alam, Firoj},

journal={arXiv preprint arXiv:2407.09823},

year={2024}

}


Similar Open Source Tools

LLMeBench

LLMeBench is a flexible framework designed for accelerating benchmarking of Large Language Models (LLMs) in the field of Natural Language Processing (NLP). It supports evaluation of various NLP tasks using model providers like OpenAI, HuggingFace Inference API, and Petals. The framework is customizable for different NLP tasks, LLM models, and datasets across multiple languages. It features extensive caching capabilities, supports zero- and few-shot learning paradigms, and allows on-the-fly dataset download and caching. LLMeBench is open-source and continuously expanding to support new models accessible through APIs.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

fuse-med-ml

FuseMedML is a Python framework designed to accelerate machine learning-based discovery in the medical field by promoting code reuse. It provides a flexible design concept where data is stored in a nested dictionary, allowing easy handling of multi-modality information. The framework includes components for creating custom models, loss functions, metrics, and data processing operators. Additionally, FuseMedML offers 'batteries included' key components such as fuse.data for data processing, fuse.eval for model evaluation, and fuse.dl for reusable deep learning components. It supports PyTorch and PyTorch Lightning libraries and encourages the creation of domain extensions for specific medical domains.

BambooAI

BambooAI is a lightweight library utilizing Large Language Models (LLMs) to provide natural language interaction capabilities, much like a research and data analysis assistant enabling conversation with your data. You can either provide your own data sets, or allow the library to locate and fetch data for you. It supports Internet searches and external API interactions.

BurstGPT

This repository provides a real-world trace dataset of LLM serving workloads for research and academic purposes. The dataset includes two files, BurstGPT.csv with trace data for 2 months including some failures, and BurstGPT_without_fails.csv without any failures. Users can scale the RPS in the trace, model patterns, and leverage the trace for various evaluations. Future plans include updating the time range of the trace, adding request end times, updating conversation logs, and open-sourcing a benchmark suite for LLM inference. The dataset covers 61 consecutive days, contains 1.4 million lines, and is approximately 50MB in size.

SheetCopilot

SheetCopilot is an assistant agent that manipulates spreadsheets by following user commands. It leverages Large Language Models (LLMs) to interact with spreadsheets like a human expert, enabling non-expert users to complete tasks on complex software such as Google Sheets and Excel via a language interface. The tool observes spreadsheet states, polishes generated solutions based on external action documents and error feedback, and aims to improve success rate and efficiency. SheetCopilot offers a dataset with diverse task categories and operations, supporting operations like entry & manipulation, management, formatting, charts, and pivot tables. Users can interact with SheetCopilot in Excel or Google Sheets, executing tasks like calculating revenue, creating pivot tables, and plotting charts. The tool's evaluation includes performance comparisons with leading LLMs and VBA-based methods on specific datasets, showcasing its capabilities in controlling various aspects of a spreadsheet.

matsciml

The Open MatSci ML Toolkit is a flexible framework for machine learning in materials science. It provides a unified interface to a variety of materials science datasets, as well as a set of tools for data preprocessing, model training, and evaluation. The toolkit is designed to be easy to use for both beginners and experienced researchers, and it can be used to train models for a wide range of tasks, including property prediction, materials discovery, and materials design.

cuvs

cuVS is a library that contains state-of-the-art implementations of several algorithms for running approximate nearest neighbors and clustering on the GPU. It can be used directly or through the various databases and other libraries that have integrated it. The primary goal of cuVS is to simplify the use of GPUs for vector similarity search and clustering.

zshot

Zshot is a highly customizable framework for performing Zero and Few shot named entity and relationships recognition. It can be used for mentions extraction, wikification, zero and few shot named entity recognition, zero and few shot named relationship recognition, and visualization of zero-shot NER and RE extraction. The framework consists of two main components: the mentions extractor and the linker. There are multiple mentions extractors and linkers available, each serving a specific purpose. Zshot also includes a relations extractor and a knowledge extractor for extracting relations among entities and performing entity classification. The tool requires Python 3.6+ and dependencies like spacy, torch, transformers, evaluate, and datasets for evaluation over datasets like OntoNotes. Optional dependencies include flair and blink for additional functionalities. Zshot provides examples, tutorials, and evaluation methods to assess the performance of the components.

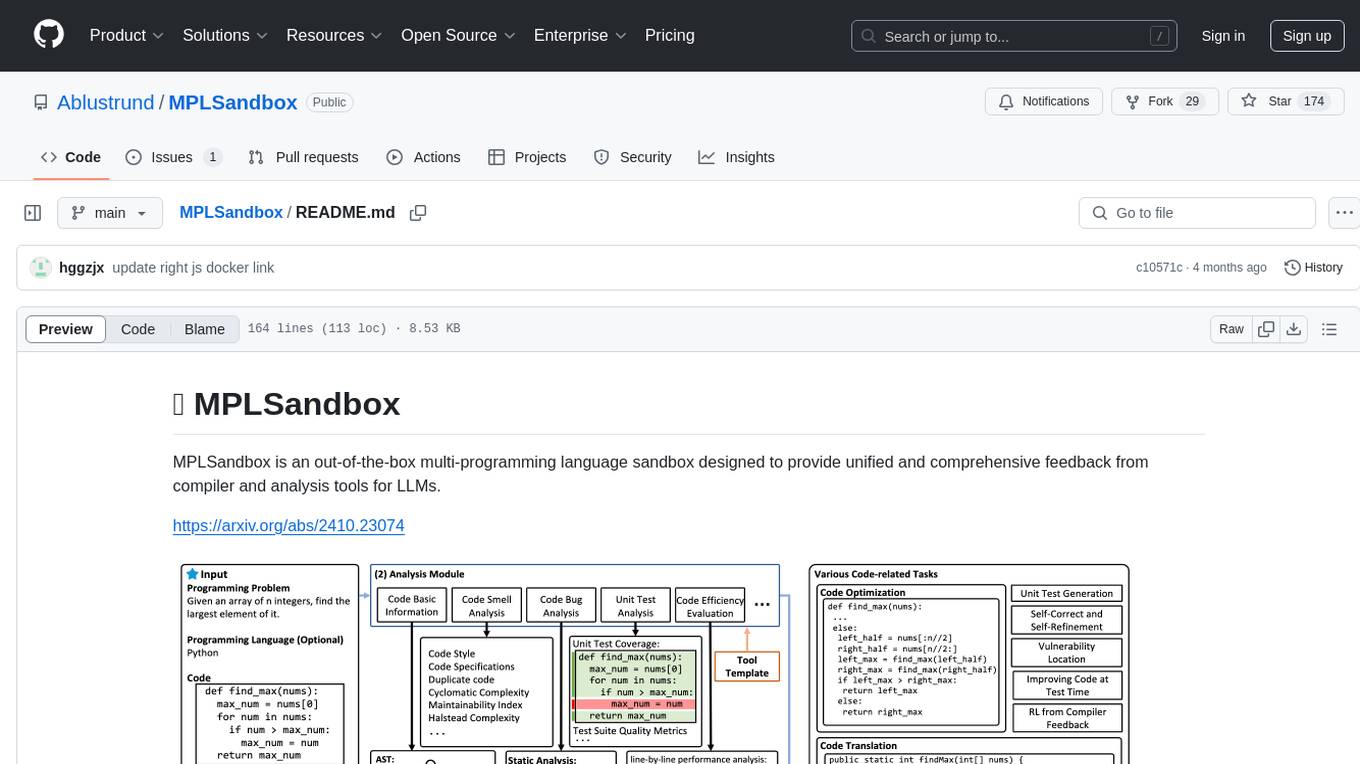

MPLSandbox

MPLSandbox is an out-of-the-box multi-programming language sandbox designed to provide unified and comprehensive feedback from compiler and analysis tools for LLMs. It simplifies code analysis for researchers and can be seamlessly integrated into LLM training and application processes to enhance performance in a range of code-related tasks. The sandbox environment ensures safe code execution, the code analysis module offers comprehensive analysis reports, and the information integration module combines compilation feedback and analysis results for complex code-related tasks.

graphrag-local-ollama

GraphRAG Local Ollama is a repository that offers an adaptation of Microsoft's GraphRAG, customized to support local models downloaded using Ollama. It enables users to leverage local models with Ollama for large language models (LLMs) and embeddings, eliminating the need for costly OpenAPI models. The repository provides a simple setup process and allows users to perform question answering over private text corpora by building a graph-based text index and generating community summaries for closely-related entities. GraphRAG Local Ollama aims to improve the comprehensiveness and diversity of generated answers for global sensemaking questions over datasets.

guidellm

GuideLLM is a powerful tool for evaluating and optimizing the deployment of large language models (LLMs). By simulating real-world inference workloads, GuideLLM helps users gauge the performance, resource needs, and cost implications of deploying LLMs on various hardware configurations. This approach ensures efficient, scalable, and cost-effective LLM inference serving while maintaining high service quality. Key features include performance evaluation, resource optimization, cost estimation, and scalability testing.

semlib

Semlib is a Python library for building data processing and data analysis pipelines that leverage the power of large language models (LLMs). It provides functional programming primitives like map, reduce, sort, and filter, programmed with natural language descriptions. Semlib handles complexities such as prompting, parsing, concurrency control, caching, and cost tracking. The library breaks down sophisticated data processing tasks into simpler steps to improve quality, feasibility, latency, cost, security, and flexibility of data processing tasks.

llms

The 'llms' repository is a comprehensive guide on Large Language Models (LLMs), covering topics such as language modeling, applications of LLMs, statistical language modeling, neural language models, conditional language models, evaluation methods, transformer-based language models, practical LLMs like GPT and BERT, prompt engineering, fine-tuning LLMs, retrieval augmented generation, AI agents, and LLMs for computer vision. The repository provides detailed explanations, examples, and tools for working with LLMs.

CoLLM

CoLLM is a novel method that integrates collaborative information into Large Language Models (LLMs) for recommendation. It converts recommendation data into language prompts, encodes them with both textual and collaborative information, and uses a two-step tuning method to train the model. The method incorporates user/item ID fields in prompts and employs a conventional collaborative model to generate user/item representations. CoLLM is built upon MiniGPT-4 and utilizes pretrained Vicuna weights for training.

BTGenBot

BTGenBot is a tool that generates behavior trees for robots using lightweight large language models (LLMs) with a maximum of 7 billion parameters. It fine-tunes on a specific dataset, compares multiple LLMs, and evaluates generated behavior trees using various methods. The tool demonstrates the potential of LLMs with a limited number of parameters in creating effective and efficient robot behaviors.

For similar tasks

LLMeBench

LLMeBench is a flexible framework designed for accelerating benchmarking of Large Language Models (LLMs) in the field of Natural Language Processing (NLP). It supports evaluation of various NLP tasks using model providers like OpenAI, HuggingFace Inference API, and Petals. The framework is customizable for different NLP tasks, LLM models, and datasets across multiple languages. It features extensive caching capabilities, supports zero- and few-shot learning paradigms, and allows on-the-fly dataset download and caching. LLMeBench is open-source and continuously expanding to support new models accessible through APIs.
