camel

🐫 CAMEL: The first and the best multi-agent framework. Finding the Scaling Law of Agents. https://www.camel-ai.org

Stars: 14303

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

README:


🐫 CAMEL is an open-source community dedicated to finding the scaling laws of agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

Join us on Discord or WeChat and help push the boundaries of research into the scaling laws of agents.

🌟 Star CAMEL on GitHub and be instantly notified of new releases.

Table of contents

- CAMEL Framework Design Principles

- Why Use CAMEL for Your Research?

- What Can You Build With CAMEL?

- Quick Start

- Tech Stack

- Research

- Synthetic Datasets

- Cookbooks (Usecases)

- Real-World Usecases

- 🧱 Built with CAMEL (Real-world Products & Research)

- 🗓️ Events

- Contributing to CAMEL

- Community & Contact

- Citation

- Acknowledgment

- License

The framework enables multi-agent systems to continuously evolve by generating data and interacting with environments. This evolution can be driven by reinforcement learning with verifiable rewards or supervised learning.
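"Verifiable rewards" are rewards computed by a programmatic checker rather than by a learned reward model or a human judge. The sketch below is a minimal illustration, assuming a math-style task whose final answer is wrapped in `\boxed{}`; `extract_boxed_answer` and `verifiable_reward` are hypothetical names, not part of CAMEL's API.

```python
import re
from typing import Optional


def extract_boxed_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in a completion, if any."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, ground_truth: str) -> float:
    """Binary reward: 1.0 if the extracted answer matches the reference, else 0.0.

    Because the check is a deterministic program, anyone can re-run it
    to verify the reward an agent received.
    """
    answer = extract_boxed_answer(completion)
    if answer is None:
        return 0.0  # no final answer means no reward
    return 1.0 if answer == ground_truth.strip() else 0.0


if __name__ == "__main__":
    print(verifiable_reward(r"2 + 2 = \boxed{4}", "4"))
    print(verifiable_reward(r"I think it is \boxed{5}", "4"))
```

Exact string matching is the simplest possible checker; real tasks usually normalize answers (numbers, units, whitespace) before comparing.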

The framework is designed to support systems with millions of agents, ensuring efficient coordination, communication, and resource management at scale.
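One common way to keep resource use bounded when many agents share a model backend is to cap the number of in-flight requests. The following is a minimal asyncio sketch, assuming I/O-bound agent steps; `agent_step` and `run_agents` are hypothetical, and this is not how CAMEL itself schedules agents.

```python
import asyncio


async def agent_step(agent_id: int, limiter: asyncio.Semaphore) -> str:
    """Hypothetical agent step; a real agent would call a model here."""
    async with limiter:  # at most `limit` agents hold a model slot at once
        await asyncio.sleep(0)  # stand-in for an I/O-bound model request
        return f"agent-{agent_id}: done"


async def run_agents(num_agents: int, limit: int) -> list[str]:
    """Run many agent steps concurrently with a shared concurrency cap."""
    limiter = asyncio.Semaphore(limit)
    tasks = [agent_step(i, limiter) for i in range(num_agents)]
    # gather returns results in the same order as the input awaitables
    return await asyncio.gather(*tasks)


if __name__ == "__main__":
    results = asyncio.run(run_agents(num_agents=10_000, limit=64))
    print(len(results), results[0])
```

The semaphore decouples the number of agents from the number of concurrent backend calls, so adding agents increases queueing rather than overloading the model server.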

Agents maintain stateful memory, enabling them to perform multi-step interactions with environments and efficiently tackle sophisticated tasks.
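As a rough illustration of stateful memory, an agent can keep a sliding window of recent messages and replay it as context on each step. This is a minimal sketch; `WindowMemory` and `Message` are hypothetical classes, not CAMEL's own memory implementations (see the Memory module for those).

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # e.g. "user" or "assistant"
    content: str


class WindowMemory:
    """Keeps the most recent `window_size` messages as agent context."""

    def __init__(self, window_size: int) -> None:
        # A deque with maxlen silently drops the oldest message when full.
        self._messages: deque[Message] = deque(maxlen=window_size)

    def write(self, message: Message) -> None:
        self._messages.append(message)

    def context(self) -> list[Message]:
        """Messages to send with the next model call, oldest first."""
        return list(self._messages)

    def clear(self) -> None:
        self._messages.clear()


if __name__ == "__main__":
    memory = WindowMemory(window_size=2)
    memory.write(Message("user", "What is CAMEL-AI?"))
    memory.write(Message("assistant", "A multi-agent framework."))
    memory.write(Message("user", "Where is its GitHub repo?"))
    print([m.content for m in memory.context()])
```

A fixed window is the simplest policy; it trades long-range recall for a bounded prompt size.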

Every line of code and comment serves as a prompt for agents. Code should be written clearly and readably, ensuring both humans and agents can interpret it effectively.
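To make the code-as-prompt idea concrete: when a function is exposed to an agent as a tool, its name, docstring and type hints typically become the description the model reads. The sketch below assumes a simplified, JSON-like schema; `describe_tool` is hypothetical and is not the schema CAMEL generates for its tools.

```python
import inspect
from typing import Callable, get_type_hints


def add(a: int, b: int) -> int:
    """Add two integers and return their sum.

    Args:
        a: The first integer.
        b: The second integer.
    """
    return a + b


def describe_tool(func: Callable) -> dict:
    """Build a simple tool description from a function's code and docs.

    The name, docstring summary and parameter types are what the model
    sees, so clear code and comments directly shape the prompt.
    """
    hints = get_type_hints(func)
    params = {
        # Fall back to "str" when a parameter has no annotation.
        name: hints.get(name, str).__name__
        for name in inspect.signature(func).parameters
    }
    doc = inspect.getdoc(func) or ""
    summary = doc.splitlines()[0] if doc else ""
    return {"name": func.__name__, "description": summary, "parameters": params}


if __name__ == "__main__":
    print(describe_tool(add))
```

A vague name or missing docstring here would produce an equally vague tool description for the agent.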

We are a community-driven research collective comprising over 100 researchers dedicated to advancing frontier research in Multi-Agent Systems. Researchers worldwide choose CAMEL for their studies for the following reasons.

| | Feature | Description |
|---|---|---|
| ✅ | Large-Scale Agent System | Simulate up to 1M agents to study emergent behaviors and scaling laws in complex, multi-agent environments. |
| ✅ | Dynamic Communication | Enable real-time interactions among agents, fostering seamless collaboration for tackling intricate tasks. |
| ✅ | Stateful Memory | Equip agents with the ability to retain and leverage historical context, improving decision-making over extended interactions. |
| ✅ | Support for Multiple Benchmarks | Utilize standardized benchmarks to rigorously evaluate agent performance, ensuring reproducibility and reliable comparisons. |
| ✅ | Support for Different Agent Types | Work with a variety of agent roles, tasks, models, and environments, supporting interdisciplinary experiments and diverse research applications. |
| ✅ | Data Generation and Tool Integration | Automate the creation of large-scale, structured datasets while seamlessly integrating with multiple tools, streamlining synthetic data generation and research workflows. |

Installing CAMEL is a breeze thanks to its availability on PyPI. Simply open your terminal and run:

```bash
pip install camel-ai
```

This example demonstrates how to create a ChatAgent using the CAMEL framework and perform a search query using DuckDuckGo.

- Install the tools package:

```bash
pip install 'camel-ai[web_tools]'
```

- Set up your OpenAI API key:

```bash
export OPENAI_API_KEY='your_openai_api_key'
```

Alternatively, use a .env file:

```bash
cp .env.example .env
# then edit .env and add your keys
```

- Run the following Python code:

```python
from camel.models import ModelFactory
from camel.types import ModelPlatformType, ModelType
from camel.agents import ChatAgent
from camel.toolkits import SearchToolkit

model = ModelFactory.create(
    model_platform=ModelPlatformType.OPENAI,
    model_type=ModelType.GPT_4O,
    model_config_dict={"temperature": 0.0},
)

search_tool = SearchToolkit().search_duckduckgo

agent = ChatAgent(model=model, tools=[search_tool])

response_1 = agent.step("What is CAMEL-AI?")
print(response_1.msgs[0].content)
# CAMEL-AI is the first LLM (Large Language Model) multi-agent framework
# and an open-source community focused on finding the scaling laws of agents.
# ...

response_2 = agent.step("What is the Github link to CAMEL framework?")
print(response_2.msgs[0].content)
# The GitHub link to the CAMEL framework is
# [https://github.com/camel-ai/camel](https://github.com/camel-ai/camel).
```

For more detailed instructions and additional configuration options, check out the installation section.

After running, you can explore our CAMEL Tech Stack and Cookbooks at docs.camel-ai.org to build powerful multi-agent systems.

We provide a demo showcasing a conversation between two ChatGPT agents, one playing a Python programmer and the other a stock trader, collaborating on developing a trading bot for the stock market.
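The role-playing idea behind this demo can be sketched as a turn-taking loop: a user role issues instructions toward a task and an assistant role responds until the user signals completion. This is a simplified illustration with stub roles standing in for model-backed agents and a hypothetical `TASK_DONE` marker; it is not CAMEL's RolePlaying implementation.

```python
from typing import Callable

# A "role" maps the partner's last message to a reply.
Role = Callable[[str], str]


def role_play(user: Role, assistant: Role, task: str, max_turns: int) -> list[tuple[str, str]]:
    """Alternate turns between a user role and an assistant role.

    Stops early when the user's instruction contains "TASK_DONE",
    otherwise after `max_turns` user turns.
    """
    transcript: list[tuple[str, str]] = []
    last_reply = task  # the task description seeds the first user turn
    for _ in range(max_turns):
        instruction = user(last_reply)
        transcript.append(("user", instruction))
        if "TASK_DONE" in instruction:
            break
        last_reply = assistant(instruction)
        transcript.append(("assistant", last_reply))
    return transcript


def make_trader() -> Role:
    """Stub stock-trader role that issues a fixed list of instructions."""
    steps = iter(["Fetch daily prices.", "Add a moving-average signal.", "TASK_DONE"])
    return lambda _reply: next(steps)


def programmer(instruction: str) -> str:
    """Stub Python-programmer role that acknowledges each instruction."""
    return f"Implemented: {instruction}"


if __name__ == "__main__":
    for role, text in role_play(make_trader(), programmer, "Develop a trading bot", max_turns=5):
        print(f"{role}: {text}")
```

Keeping the termination check on the user side mirrors the idea that the instructing role decides when the task is finished; `max_turns` guards against conversations that never converge.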

Explore different types of agents, their roles, and their applications.

Please reach out to us on the CAMEL Discord if you encounter any issues setting up CAMEL.

Core components and utilities to build, operate, and enhance CAMEL-AI agents and societies.

| Module | Description |
|---|---|
| Agents | Core agent architectures and behaviors for autonomous operation. |
| Agent Societies | Components for building and managing multi-agent systems and collaboration. |
| Data Generation | Tools and methods for synthetic data creation and augmentation. |
| Models | Model architectures and customization options for agent intelligence. |
| Tools | Tools integration for specialized agent tasks. |
| Memory | Memory storage and retrieval mechanisms for agent state management. |
| Storage | Persistent storage solutions for agent data and states. |
| Benchmarks | Performance evaluation and testing frameworks. |
| Interpreters | Code and command interpretation capabilities. |
| Data Loaders | Data ingestion and preprocessing tools. |
| Retrievers | Knowledge retrieval and RAG components. |
| Runtime | Execution environment and process management. |
| Human-in-the-Loop | Interactive components for human oversight and intervention. |

We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks.

Explore our research projects:

We warmly invite you to use CAMEL for your impactful research.

Rigorous research takes time and resources. We are a community-driven research collective with 100+ researchers exploring frontier research in Multi-agent Systems. Join our ongoing projects or test new ideas with us; reach out via email for more information.

For more details, please see our Models Documentation.

Data (Hosted on Hugging Face)

| Dataset | Chat format | Instruction format | Chat format (translated) |
|---|---|---|---|
| AI Society | Chat format | Instruction format | Chat format (translated) |
| Code | Chat format | Instruction format | x |
| Math | Chat format | x | x |
| Physics | Chat format | x | x |
| Chemistry | Chat format | x | x |
| Biology | Chat format | x | x |

| Dataset | Instructions | Tasks |
|---|---|---|
| AI Society | Instructions | Tasks |
| Code | Instructions | Tasks |
| Misalignment | Instructions | Tasks |

Practical guides and tutorials for implementing specific functionalities in CAMEL-AI agents and societies.

| Cookbook | Description |
|---|---|
| Creating Your First Agent | A step-by-step guide to building your first agent. |
| Creating Your First Agent Society | Learn to build a collaborative society of agents. |
| Message Cookbook | Best practices for message handling in agents. |

| Cookbook | Description |
|---|---|
| Tools Cookbook | Integrating tools for enhanced functionality. |
| Memory Cookbook | Implementing memory systems in agents. |
| RAG Cookbook | Recipes for Retrieval-Augmented Generation. |
| Graph RAG Cookbook | Leveraging knowledge graphs with RAG. |
| Track CAMEL Agents with AgentOps | Tools for tracking and managing agents in operations. |

| Cookbook | Description |
|---|---|
| Data Generation with CAMEL and Finetuning with Unsloth | Learn how to generate data with CAMEL and fine-tune models effectively with Unsloth. |
| Data Gen with Real Function Calls and Hermes Format | Explore how to generate data with real function calls and the Hermes format. |
| CoT Data Generation and Upload Data to Huggingface | Uncover how to generate CoT data with CAMEL and seamlessly upload it to Hugging Face. |
| CoT Data Generation and SFT Qwen with Unsloth | Discover how to generate CoT data using CAMEL and SFT Qwen with Unsloth, and seamlessly upload your data and model to Hugging Face. |

| Cookbook | Description |
|---|---|
| Role-Playing Scraper for Report & Knowledge Graph Generation | Create role-playing agents for data scraping and reporting. |
| Create A Hackathon Judge Committee with Workforce | Building a team of agents for collaborative judging. |
| Dynamic Knowledge Graph Role-Playing: Multi-Agent System with dynamic, temporally-aware knowledge graphs | Builds dynamic, temporally-aware knowledge graphs for financial applications using a multi-agent system. It processes financial reports, news articles, and research papers to help traders analyze data, identify relationships, and uncover market insights. The system also utilizes diverse and optional element node deduplication techniques to ensure data integrity and optimize graph structure for financial decision-making. |
| Customer Service Discord Bot with Agentic RAG | Learn how to build a robust customer service bot for Discord using Agentic RAG. |
| Customer Service Discord Bot with Local Model | Learn how to build a robust customer service bot for Discord using Agentic RAG which supports local deployment. |

| Cookbook | Description |
|---|---|
| Video Analysis | Techniques for agents in video data analysis. |
| 3 Ways to Ingest Data from Websites with Firecrawl | Explore three methods for extracting and processing data from websites using Firecrawl. |
| Create AI Agents that work with your PDFs | Learn how to create AI agents that work with your PDFs using Chunkr and Mistral AI. |

These real-world use cases demonstrate how CAMEL’s multi-agent framework delivers business value across infrastructure automation, productivity workflows, retrieval-augmented conversations, intelligent document/video analysis, and collaborative research.

| Usecase | Description |
|---|---|
| ACI MCP | Real-world usecases demonstrating how CAMEL’s multi-agent framework enables real business value across infrastructure automation, productivity workflows, retrieval-augmented conversations, intelligent document/video analysis, and collaborative research. |
| Cloudflare MCP CAMEL | Intelligent agents manage Cloudflare resources dynamically, enabling scalable and efficient cloud security and performance tuning. |

| Usecase | Description |
|---|---|
| Airbnb MCP | Coordinate agents to optimize and manage Airbnb listings and host operations. |
| PPTX Toolkit Usecase | Analyze PowerPoint documents and extract structured insights through multi-agent collaboration. |

| Usecase | Description |
|---|---|
| Chat with GitHub | Query and understand GitHub codebases through CAMEL agents leveraging RAG-style workflows, accelerating developer onboarding and codebase navigation. |
| Chat with YouTube | Conversational agents extract and summarize video transcripts, enabling faster content understanding and repurposing. |

| Usecase | Description |
|---|---|
| YouTube OCR | Agents perform OCR on video screenshots to summarize visual content, supporting media monitoring and compliance. |
| Mistral OCR | CAMEL agents use OCR with Mistral to analyze documents, reducing manual effort in document understanding workflows. |

| Usecase | Description |
|---|---|
| Multi-Agent Research Assistant | Simulates a team of research agents collaborating on literature review, improving efficiency in exploratory analysis and reporting. |

| Name | Description |
|---|---|
| ChatDev | Communicative Agents for software Development |
| Paper2Poster | Multimodal poster automation from scientific papers |

| Name | Description |
|---|---|
| Eigent | The World's First Multi-agent Workforce |
| EigentBot | One EigentBot, Every Code Answer |
| Matrix | Social Media Simulation |
| AI Geometric | AI-powered interview copilot |
| Log10 | AI accuracy, delivered |

We are actively involved in community events including:

- 🎙️ Community Meetings — Weekly virtual syncs with the CAMEL team

- 🏆 Competitions — Hackathons, Bounty Tasks and coding challenges hosted by CAMEL

- 🤝 Volunteer Activities — Contributions, documentation drives, and mentorship

- 🌍 Ambassador Programs — Represent CAMEL in your university or local tech groups

Want to host or participate in a CAMEL event? Join our Discord, or become part of our Ambassador Program.

For those who'd like to contribute code, we appreciate your interest in contributing to our open-source initiative. Please take a moment to review our contributing guidelines to get started on a smooth collaboration journey. 🚀

We also welcome you to help CAMEL grow by sharing it on social media, at events, or during conferences. Your support makes a big difference!

For more information please contact [email protected]

- GitHub Issues: Report bugs, request features, and track development. Submit an issue

- Discord: Get real-time support, chat with the community, and stay updated. Join us

- X (Twitter): Follow for updates, AI insights, and key announcements. Follow us

- Ambassador Project: Advocate for CAMEL-AI, host events, and contribute content. Learn more

- WeChat Community: Scan the QR code below to join our WeChat community.

```bibtex
@inproceedings{li2023camel,
  title={CAMEL: Communicative Agents for "Mind" Exploration of Large Language Model Society},
  author={Li, Guohao and Hammoud, Hasan Abed Al Kader and Itani, Hani and Khizbullin, Dmitrii and Ghanem, Bernard},
  booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
  year={2023}
}
```

Special thanks to Nomic AI for giving us extended access to their data set exploration tool (Atlas).

We would also like to thank Haya Hammoud for designing the initial logo of our project.

We implemented amazing research ideas from other works for you to build, compare and customize your agents. If you use any of these modules, please kindly cite the original works:

- TaskCreationAgent, TaskPrioritizationAgent and BabyAGI from Nakajima et al.: Task-Driven Autonomous Agent. [Example]

- PersonaHub from Tao Ge et al.: Scaling Synthetic Data Creation with 1,000,000,000 Personas. [Example]

- Self-Instruct from Yizhong Wang et al.: SELF-INSTRUCT: Aligning Language Models with Self-Generated Instructions. [Example]
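Of the ideas listed above, Self-Instruct's filtering step is easy to illustrate: the paper adds a newly generated instruction to the pool only if its ROUGE-L similarity with every existing instruction is below 0.7. The sketch below uses lowercased whitespace tokenization; the function names are hypothetical and this is not CAMEL's Self-Instruct module.

```python
def lcs_length(a: list[str], b: list[str]) -> int:
    """Length of the longest common subsequence of two token lists (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for token_a in a:
        curr = [0]
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F-measure over lowercased whitespace tokens."""
    c, r = candidate.lower().split(), reference.lower().split()
    if not c or not r:
        return 0.0
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)


def filter_new_instructions(pool: list[str], candidates: list[str], threshold: float = 0.7) -> list[str]:
    """Return the candidates accepted into the pool.

    A candidate is accepted only if its ROUGE-L with every instruction
    already in the pool (including earlier accepted candidates) is below
    `threshold`.
    """
    kept = list(pool)
    for cand in candidates:
        if all(rouge_l_f1(cand, existing) < threshold for existing in kept):
            kept.append(cand)
    return kept[len(pool):]


if __name__ == "__main__":
    pool = ["Write a poem about the sea."]
    new = ["Write a poem about the ocean.", "Summarize this news article in two sentences."]
    print(filter_new_instructions(pool, new))
```

Here the first candidate shares five of six tokens with the pooled instruction (ROUGE-L of about 0.83), so it is rejected as a near-duplicate, while the second is accepted.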

The source code is licensed under Apache 2.0.


Alternative AI tools for camel

Similar Open Source Tools


EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

beeai-framework

BeeAI Framework is a versatile tool for building production-ready multi-agent systems. It offers flexibility in orchestrating agents, seamless integration with various models and tools, and production-grade controls for scaling. The framework supports Python and TypeScript libraries, enabling users to implement simple to complex multi-agent patterns, connect with AI services, and optimize token usage and resource management.

llm4ad

LLM4AD is an open-source Python-based platform leveraging Large Language Models (LLMs) for Automatic Algorithm Design (AD). It provides unified interfaces for methods, tasks, and LLMs, along with features like evaluation acceleration, secure evaluation, logs, GUI support, and more. The platform was originally developed for optimization tasks but is versatile enough to be used in other areas such as machine learning, science discovery, game theory, and engineering design. It offers various search methods and algorithm design tasks across different domains. LLM4AD supports remote LLM API, local HuggingFace LLM deployment, and custom LLM interfaces. The project is licensed under the MIT License and welcomes contributions, collaborations, and issue reports.

runtime

Exosphere is a lightweight runtime designed to make AI agents resilient to failure and enable infinite scaling across distributed compute. It provides a powerful foundation for building and orchestrating AI applications with features such as lightweight runtime, inbuilt failure handling, infinite parallel agents, dynamic execution graphs, native state persistence, and observability. Whether you're working on data pipelines, AI agents, or complex workflow orchestrations, Exosphere offers the infrastructure backbone to make your AI applications production-ready and scalable.

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

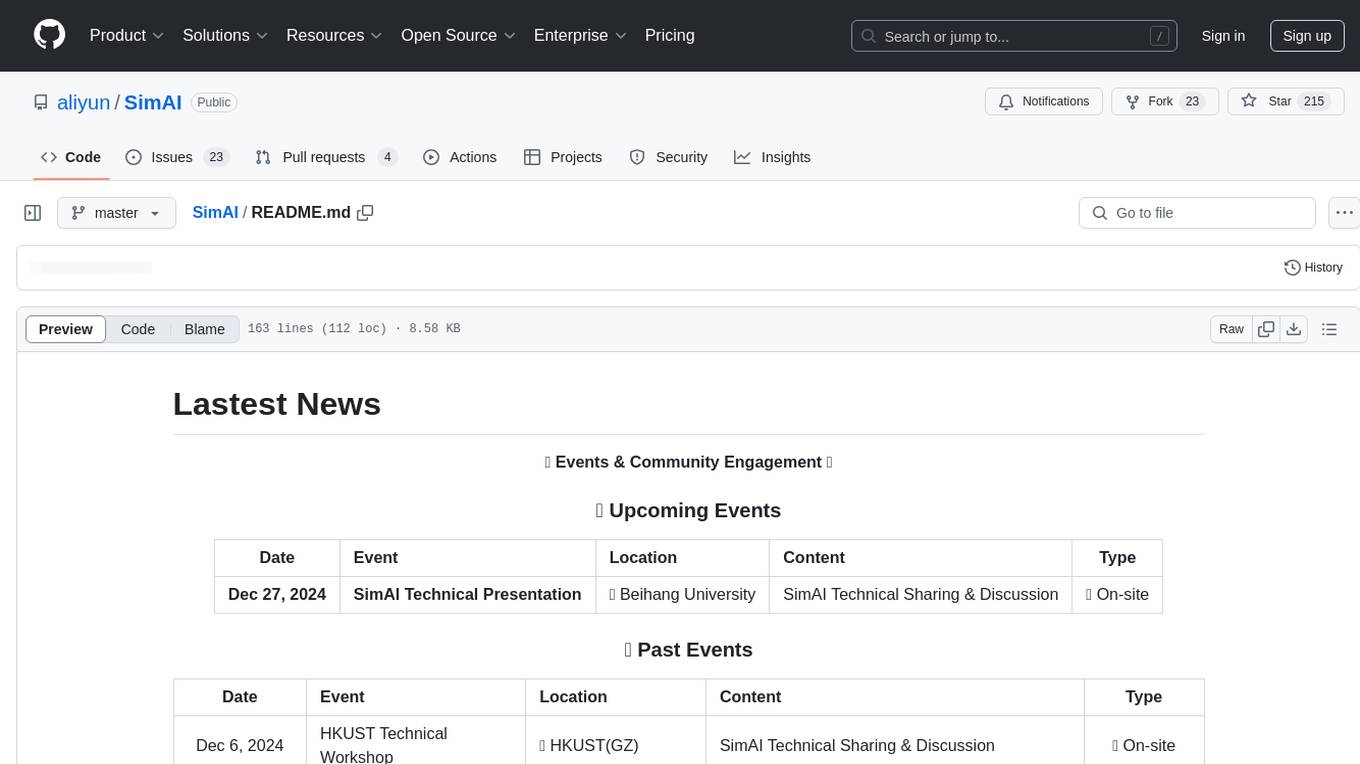

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference and scalable model training/fine-tuning. Build, deploy and manage any AI application without the need for complex pipelines, infrastructure as well as specialized vector databases, and moving your data there, by integrating AI at your data's source: - Generative AI, LLMs, RAG, vector search - Standard machine learning use-cases (classification, segmentation, regression, forecasting recommendation etc.) - Custom AI use-cases involving specialized models - Even the most complex applications/workflows in which different models work together SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

BharatMLStack

BharatMLStack is a comprehensive, production-ready machine learning infrastructure platform designed to democratize ML capabilities across India and beyond. It provides a robust, scalable, and accessible ML stack empowering organizations to build, deploy, and manage machine learning solutions at massive scale. It includes core components like Horizon, Trufflebox UI, Online Feature Store, Go SDK, Python SDK, and Numerix, offering features such as control plane, ML management console, real-time features, mathematical compute engine, and more. The platform is production-ready, cloud agnostic, and offers observability through built-in monitoring and logging.

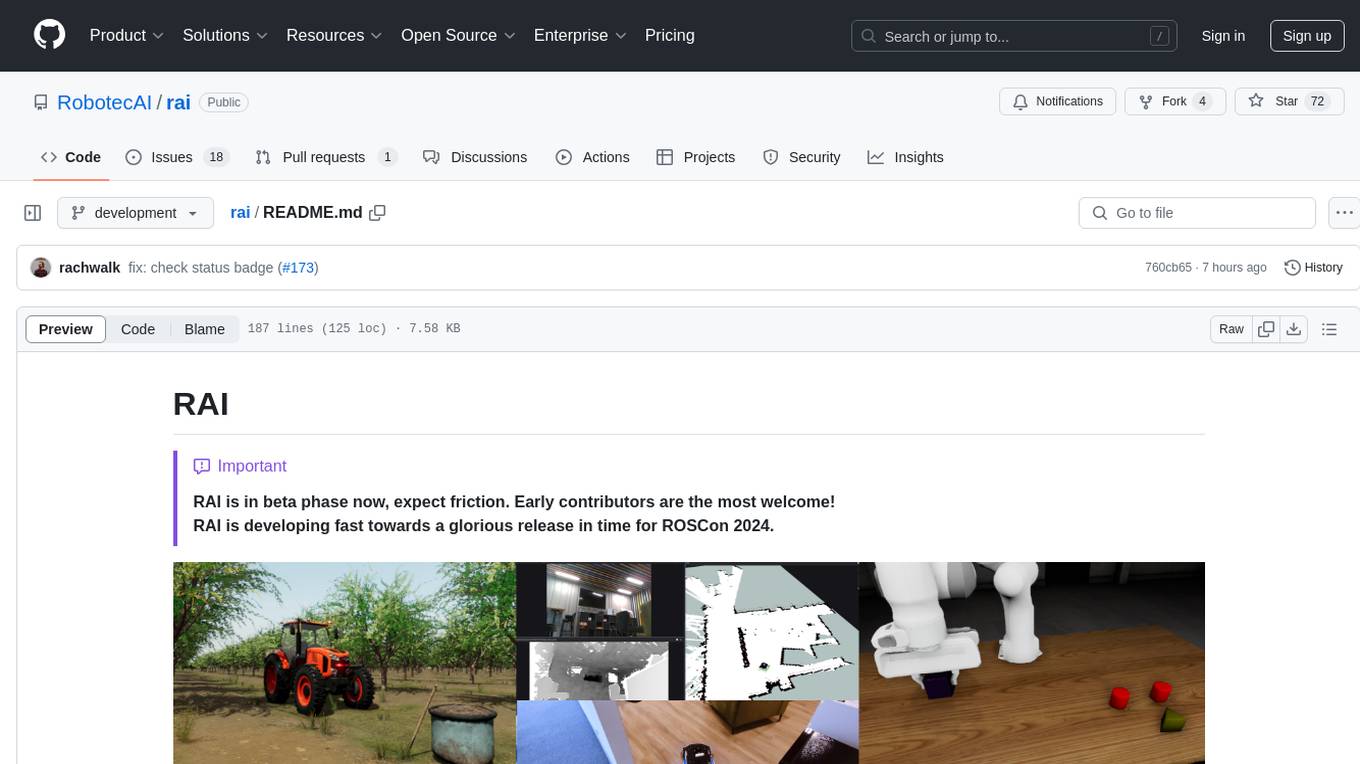

rai

RAI is a framework designed to bring general multi-agent system capabilities to robots, enhancing human interactivity, flexibility in problem-solving, and out-of-the-box AI features. It supports multi-modalities, incorporates an advanced database for agent memory, provides ROS 2-oriented tooling, and offers a comprehensive task/mission orchestrator. The framework includes features such as voice interaction, customizable robot identity, camera sensor access, reasoning through ROS logs, and integration with LangChain for AI tools. RAI aims to support various AI vendors, improve human-robot interaction, provide an SDK for developers, and offer a user interface for configuration.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your own or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

agents-towards-production

Agents Towards Production is an open-source playbook for building production-ready GenAI agents that scale from prototype to enterprise. Tutorials cover stateful workflows, vector memory, real-time web search APIs, Docker deployment, FastAPI endpoints, security guardrails, GPU scaling, browser automation, fine-tuning, multi-agent coordination, observability, evaluation, and UI development.

Vision-Agents

Vision Agents is an open-source project by Stream that provides building blocks for creating intelligent, low-latency video experiences powered by custom models and infrastructure. It offers multi-modal AI agents that watch, listen, and understand video in real-time. The project includes SDKs for various platforms and integrates with popular AI services like Gemini and OpenAI. Vision Agents can be used for tasks such as sports coaching, security camera systems with package theft detection, and building invisible assistants for various applications. The project aims to simplify the development of real-time vision AI applications by providing a range of processors, integrations, and out-of-the-box features.

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

holmesgpt

HolmesGPT is an AI agent designed for troubleshooting and investigating issues in cloud environments. It utilizes AI models to analyze data from various sources, identify root causes, and provide remediation suggestions. The tool offers integrations with popular cloud providers, observability tools, and on-call systems, enabling users to streamline the troubleshooting process. HolmesGPT can automate the investigation of alerts and tickets from external systems, providing insights back to the source or communication platforms like Slack. It supports end-to-end automation and offers a CLI for interacting with the AI agent. Users can customize HolmesGPT by adding custom data sources and runbooks to enhance investigation capabilities. The tool prioritizes data privacy, ensuring read-only access and respecting RBAC permissions. HolmesGPT is a CNCF Sandbox Project and is distributed under the Apache 2.0 License.


For similar jobs

LLM-FineTuning-Large-Language-Models

This repository contains projects and notes on common practical techniques for fine-tuning Large Language Models (LLMs). It includes fine-tuning LLM notebooks, Colab links, LLM techniques and utils, and other smaller language models. The repository also provides links to YouTube videos explaining the concepts and techniques discussed in the notebooks.

lloco

LLoCO is a technique that learns documents offline through context compression and in-domain parameter-efficient finetuning using LoRA, which enables LLMs to handle long context efficiently.


llm-baselines

LLM-baselines is a modular codebase to experiment with transformers, inspired by NanoGPT. It provides a quick and easy way to train and evaluate transformer models on a variety of datasets. The codebase is well-documented and easy to use, making it a great resource for researchers and practitioners alike.

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

EvalAI

EvalAI is an open-source platform for evaluating and comparing machine learning (ML) and artificial intelligence (AI) algorithms at scale. It provides a central leaderboard and submission interface, making it easier for researchers to reproduce results mentioned in papers and perform reliable & accurate quantitative analysis. EvalAI also offers features such as custom evaluation protocols and phases, remote evaluation, evaluation inside environments, CLI support, portability, and faster evaluation.

Weekly-Top-LLM-Papers

This repository provides a curated list of weekly published Large Language Model (LLM) papers. It includes top important LLM papers for each week, organized by month and year. The papers are categorized into different time periods, making it easy to find the most recent and relevant research in the field of LLM.

self-llm

This project is a Chinese tutorial for domestic beginners based on the AutoDL platform, providing full-process guidance for various open-source large models, including environment configuration, local deployment, and efficient fine-tuning. It simplifies the deployment, use, and application process of open-source large models, enabling more ordinary students and researchers to better use open-source large models and helping open and free large models integrate into the lives of ordinary learners faster.