nuitrack-sdk

Nuitrack™ is a 3D tracking middleware developed by 3DiVi Inc.

Stars: 489

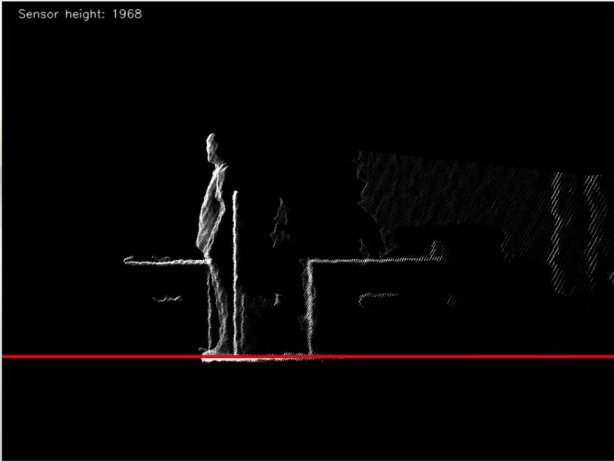

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widely used depth sensor and hardware platform, supporting applications that range from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines, a classical engine for embedded hardware and an AI engine for complex poses, giving applications a human-centric spatial understanding tool for natural and intelligent user engagement.

README:

- 🔜 Holistic skeletal tracking with multiple sensors

- 🔜 Major update on tracking accuracy

- 🆕 TouchDesigner - the official release is now available.

Now you can activate Nuitrack AI Subscription and start using the plugin without restrictions. 🎆

- 🆕 Write CSV files using Nuitrack App. No knowledge of C++ is required!

- 🆕 Nuitrack now supports Docker

- 🆕 Unreal Engine 5 (Beta) 🚀 is now available!

- ✅ Jul'23 - 0.36.13 - Orbbec Persee+ and Femto (W) are now supported!

- ✅ Oct'22 - 0.36.7 - 🎥 Multisensor tracking

This opens up huge opportunities, such as room-scale tracking with increased accuracy. Please stay tuned to get firsthand experience of the upcoming Nuitrack Holistic.

- ✅ Sep'22 - 0.36.4 - ⚙️ Failure cases recorder

- ✅ Sep'22 - 0.36.2 - Nuitrack Daemon [Beta]

- ✅ Aug'22 - 0.36.1 - 📝 Person re-identification

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc.

It enables body motion analytics applications for virtually any widely used:

- depth sensors - Orbbec Astra/Persee/Femto, Kinect v1/v2, Kinect Azure, Intel Realsense, Asus Xtion, LIPS, Structure Sensor, etc.

- hardware platforms - x64, x86, ARMv7, ARMv8

- OSes - Windows, Linux, Android, iOS

Inspired initially by Microsoft Kinect, Nuitrack™'s mission is to provide a strong skeletal tracking baseline for the next generation of immersive and analytical applications, independent of any specific platform or hardware. Think of it as a "Kinect for anything".

With performance and flexibility resulting from 10 years of development, Nuitrack™ can support a wide range of applications:

- from real-time gesture recognition on embedded platforms like Raspberry Pi 4

- to large-scale multisensor analytical systems

Now it's all yours - try it, use it, challenge it!

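After being launched with any supported depth sensor, Nuitrack provides 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control.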

Nuitrack has two Skeletal Tracking engines:

- "classical" - fast, stable and lightweight, highly optimized for embedded hardware and limited CPU usage

- AI - a new deep-learning-based engine, which provides greater coverage for complex poses

Essentially, Nuitrack provides a human-centric spatial understanding tool that lets your applications engage with users in a natural and intelligent way.
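To give a sense of what an application might do with 3D skeletal data, here is a minimal Python sketch that computes the angle at a joint from three 3D joint positions. It does not call the Nuitrack API: the `joint_angle` helper and the joint coordinates are hypothetical, made up for illustration, and the units are arbitrary.

```python
import math


def joint_angle(a, b, c):
    """Return the angle at joint b, in degrees, formed by the points a-b-c."""
    # Vectors pointing from the middle joint to its two neighbours.
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm_ba = math.sqrt(sum(v * v for v in ba))
    norm_bc = math.sqrt(sum(v * v for v in bc))
    if norm_ba == 0 or norm_bc == 0:
        raise ValueError("joints must not coincide")
    cos_angle = sum(x * y for x, y in zip(ba, bc)) / (norm_ba * norm_bc)
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))


# Hypothetical joint positions (x, y, z); not real sensor output.
shoulder = (0.0, 400.0, 2000.0)
elbow = (0.0, 100.0, 2000.0)
wrist = (300.0, 100.0, 2000.0)

elbow_angle = joint_angle(shoulder, elbow, wrist)
print(f"elbow angle: {elbow_angle:.1f} degrees")
```

In a real application the three positions would come from whichever skeleton data the SDK exposes for a tracked user; see the C++/C# API reference for the actual types and calls.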

It's as quick and simple as 1-2-3:

- Download the Nuitrack Runtime package for your Platform of choice

- Install it. If you run into any issues, please follow the Installation Instructions

- Plug in your sensor and launch the Nuitrack executable from the Start menu

- Nuitrack can also be embedded into your application, so it does not need to be installed on your customers' PCs. For more information, see here

| C++ | C# | Python |
|---|---|---|

| Unity | Unreal Engine 4.20 | 🆕 UE 5 Blueprints (beta) | 🆕 Touch Designer | 🆕 Docker |
|---|---|---|---|---|

If you have any questions, issues, or feature ideas, feel free to engage with the Nuitrack Team at our Community Forum.

| Resource | Description |
|---|---|
| Nuitrack.com | general information and license purchasing |
| Licensing Dashboard | licenses/subscriptions management |
| Troubleshooting page | known issues with resolution |
| Community Forum | troubleshooting, feature discussions |
| Documentation | documentation index |
| Runtime Components | packages for all supported platforms |
| C++/C# API | auto-generated API reference (Doxygen) |
| C++/C# Examples | basic examples demonstrating how to use Nuitrack SDK |
| iOS [beta] | getting started with Nuitrack for iOS |

- Games and Training (Fitness, Dance Lessons)

- Medical Rehabilitation

- Smart Home

- Natural/Gesture-based User Interface (NUI)

- Full Body Tracking for AR / VR

- Audience Analytics

- Robot Vision

Nuitrack is widely used in research. Here are just a few selected references:

- Detecting Short Distance Throwing Motions Using RGB-D Camera

- Planning Collision-Free Robot Motions in a Human–Robot Shared Workspace via Mixed Reality and Sensor-Fusion Skeleton Tracking

- Skeleton Driven Action Recognition Using an Image-Based Spatial-Temporal Representation and Convolution Neural Network

- Towards a Live Feedback Training System: Interchangeability of Orbbec Persee and Microsoft Kinect for Exercise Monitoring

- ROBOGait: A Mobile Robotic Platform for Human Gait Analysis in Clinical Environments

- Robot-Assisted Gait Self-Training: Assessing the Level Achieved

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nuitrack-sdk

Similar Open Source Tools

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

netdata

Netdata is an open-source, real-time infrastructure monitoring platform that provides instant insights, zero configuration deployment, ML-powered anomaly detection, efficient monitoring with minimal resource usage, and secure & distributed data storage. It offers real-time, per-second updates and clear insights at a glance. Netdata's origin story involves addressing the limitations of existing monitoring tools and led to a fundamental shift in infrastructure monitoring. It is recognized as the most energy-efficient tool for monitoring Docker-based systems according to a study by the University of Amsterdam.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

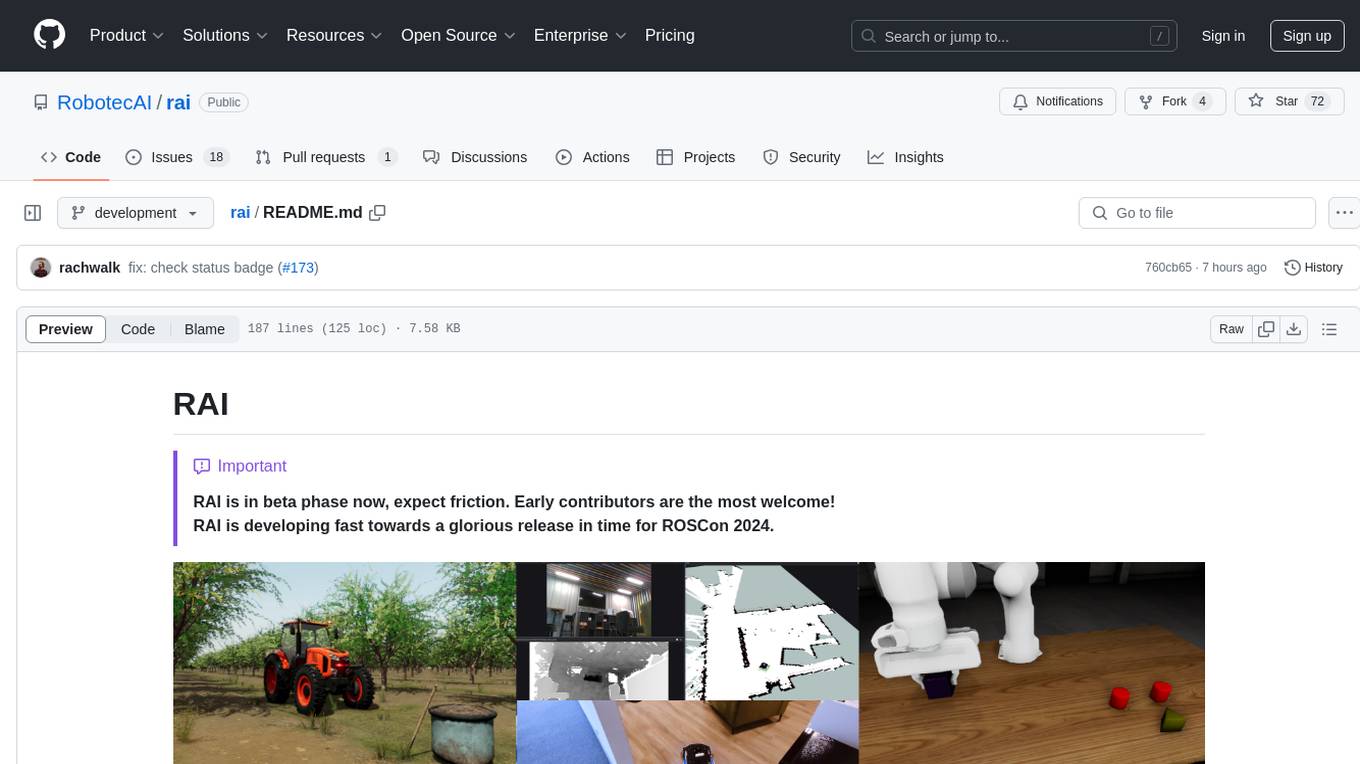

rai

RAI is a framework designed to bring general multi-agent system capabilities to robots, enhancing human interactivity, flexibility in problem-solving, and out-of-the-box AI features. It supports multi-modalities, incorporates an advanced database for agent memory, provides ROS 2-oriented tooling, and offers a comprehensive task/mission orchestrator. The framework includes features such as voice interaction, customizable robot identity, camera sensor access, reasoning through ROS logs, and integration with LangChain for AI tools. RAI aims to support various AI vendors, improve human-robot interaction, provide an SDK for developers, and offer a user interface for configuration.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

runtime

Exosphere is a lightweight runtime designed to make AI agents resilient to failure and enable infinite scaling across distributed compute. It provides a powerful foundation for building and orchestrating AI applications with features such as lightweight runtime, inbuilt failure handling, infinite parallel agents, dynamic execution graphs, native state persistence, and observability. Whether you're working on data pipelines, AI agents, or complex workflow orchestrations, Exosphere offers the infrastructure backbone to make your AI applications production-ready and scalable.

parallax

Parallax is a fully decentralized inference engine developed by Gradient. It allows users to build their own AI cluster for model inference across distributed nodes with varying configurations and physical locations. Core features include hosting local LLM on personal devices, cross-platform support, pipeline parallel model sharding, paged KV cache management, continuous batching for Mac, dynamic request scheduling, and routing for high performance. The backend architecture includes P2P communication powered by Lattica, GPU backend powered by SGLang and vLLM, and MAC backend powered by MLX LM.

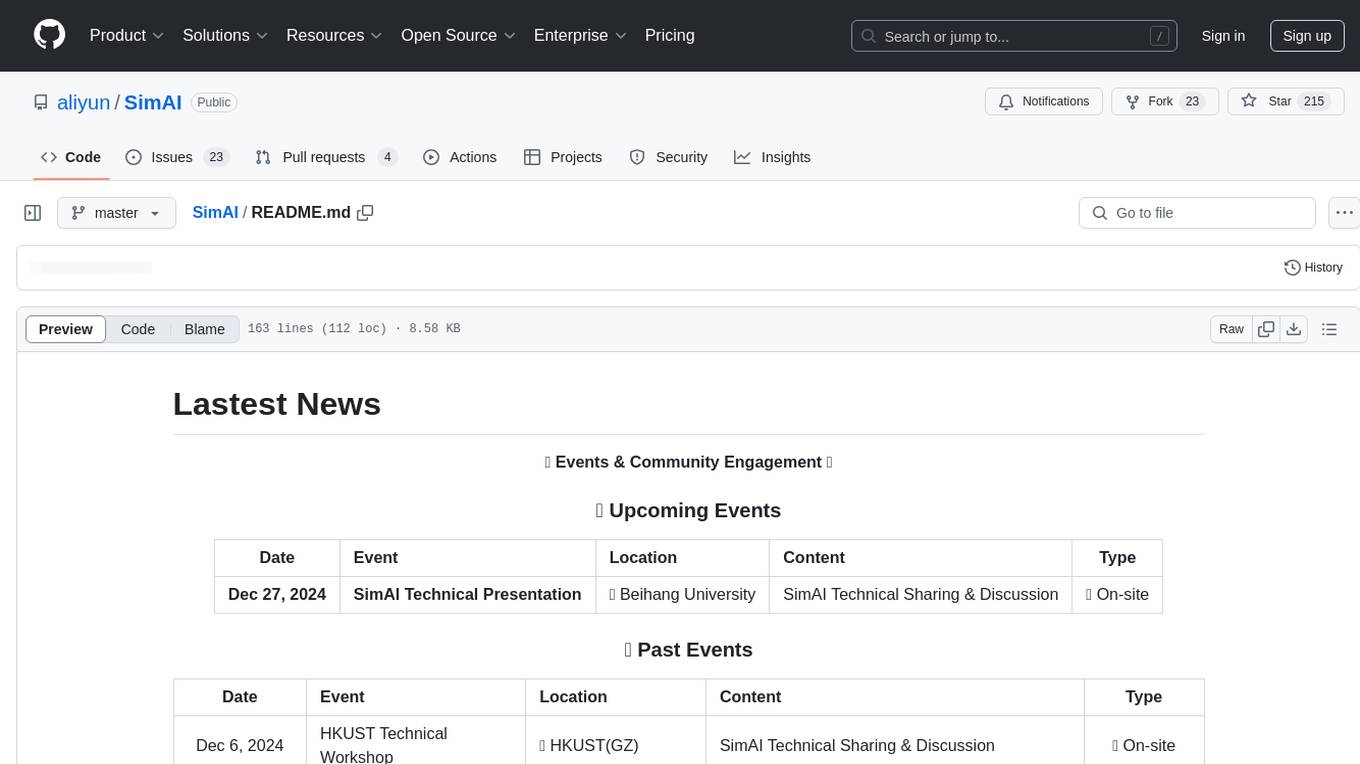

SimAI

SimAI is the industry's first full-stack, high-precision simulator for AI large-scale training. It provides detailed modeling and simulation of the entire LLM training process, encompassing framework, collective communication, network layers, and more. This comprehensive approach offers end-to-end performance data, enabling researchers to analyze training process details, evaluate time consumption of AI tasks under specific conditions, and assess performance gains from various algorithmic optimizations.

inference

Xorbits Inference (Xinference) is a powerful and versatile library designed to serve language, speech recognition, and multimodal models. With Xorbits Inference, you can effortlessly deploy and serve your or state-of-the-art built-in models using just a single command. Whether you are a researcher, developer, or data scientist, Xorbits Inference empowers you to unleash the full potential of cutting-edge AI models.

unoplat-code-confluence

Unoplat-CodeConfluence is a universal code context engine that aims to extract, understand, and provide precise code context across repositories tied through domains. It combines deterministic code grammar with state-of-the-art LLM pipelines to achieve human-like understanding of codebases in minutes. The tool offers smart summarization, graph-based embedding, enhanced onboarding, graph-based intelligence, deep dependency insights, and seamless integration with existing development tools and workflows. It provides a precise context API for knowledge engine and AI coding assistants, enabling reliable code understanding through bottom-up code summarization, graph-based querying, and deep package and dependency analysis.

rhesis

Rhesis is a comprehensive test management platform designed for Gen AI teams, offering tools to create, manage, and execute test cases for generative AI applications. It ensures the robustness, reliability, and compliance of AI systems through features like test set management, automated test generation, edge case discovery, compliance validation, integration capabilities, and performance tracking. The platform is open source, emphasizing community-driven development, transparency, extensible architecture, and democratizing AI safety. It includes components such as backend services, frontend applications, SDK for developers, worker services, chatbot applications, and Polyphemus for uncensored LLM service. Rhesis enables users to address challenges unique to testing generative AI applications, such as non-deterministic outputs, hallucinations, edge cases, ethical concerns, and compliance requirements.

RAG-Retrieval

RAG-Retrieval provides full-chain RAG retrieval fine-tuning and inference code. It supports fine-tuning any open-source RAG retrieval models, including vector (embedding, graph a), delayed interactive models (ColBERT, graph d), interactive models (cross encoder, graph c). For inference, RAG-Retrieval focuses on ranking (reranker) and has developed a lightweight Python library rag-retrieval, providing a unified way to call any different RAG ranking models.

RAG-Retrieval

RAG-Retrieval is an end-to-end code repository that provides training, inference, and distillation capabilities for the RAG retrieval model. It supports fine-tuning of various open-source RAG retrieval models, including embedding models, late interactive models, and reranker models. The repository offers a lightweight Python library for calling different RAG ranking models and allows distillation of LLM-based reranker models into bert-based reranker models. It includes features such as support for end-to-end fine-tuning, distillation of large models, advanced algorithms like MRL, multi-GPU training strategy, and a simple code structure for easy modifications.

buffer-of-thought-llm

Buffer of Thoughts (BoT) is a thought-augmented reasoning framework designed to enhance the accuracy, efficiency, and robustness of large language models (LLMs). It introduces a meta-buffer to store high-level thought-templates distilled from problem-solving processes, enabling adaptive reasoning for efficient problem-solving. The framework includes a buffer-manager to dynamically update the meta-buffer, ensuring scalability and stability. BoT achieves significant performance improvements on reasoning-intensive tasks and demonstrates superior generalization ability and robustness while being cost-effective compared to other methods.

xtuner

XTuner is an efficient, flexible, and full-featured toolkit for fine-tuning large models. It supports various LLMs (InternLM, Mixtral-8x7B, Llama 2, ChatGLM, Qwen, Baichuan, ...), VLMs (LLaVA), and various training algorithms (QLoRA, LoRA, full-parameter fine-tune). XTuner also provides tools for chatting with pretrained / fine-tuned LLMs and deploying fine-tuned LLMs with any other framework, such as LMDeploy.

MNN

MNN is a highly efficient and lightweight deep learning framework that supports inference and training of deep learning models. It has industry-leading performance for on-device inference and training. MNN has been integrated into various Alibaba Inc. apps and is used in scenarios like live broadcast, short video capture, search recommendation, and product searching by image. It is also utilized on embedded devices such as IoT. MNN-LLM and MNN-Diffusion are specific runtime solutions developed based on the MNN engine for deploying language models and diffusion models locally on different platforms. The framework is optimized for devices, supports various neural networks, and offers high performance with optimized assembly code and GPU support. MNN is versatile, easy to use, and supports hybrid computing on multiple devices.

For similar tasks

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

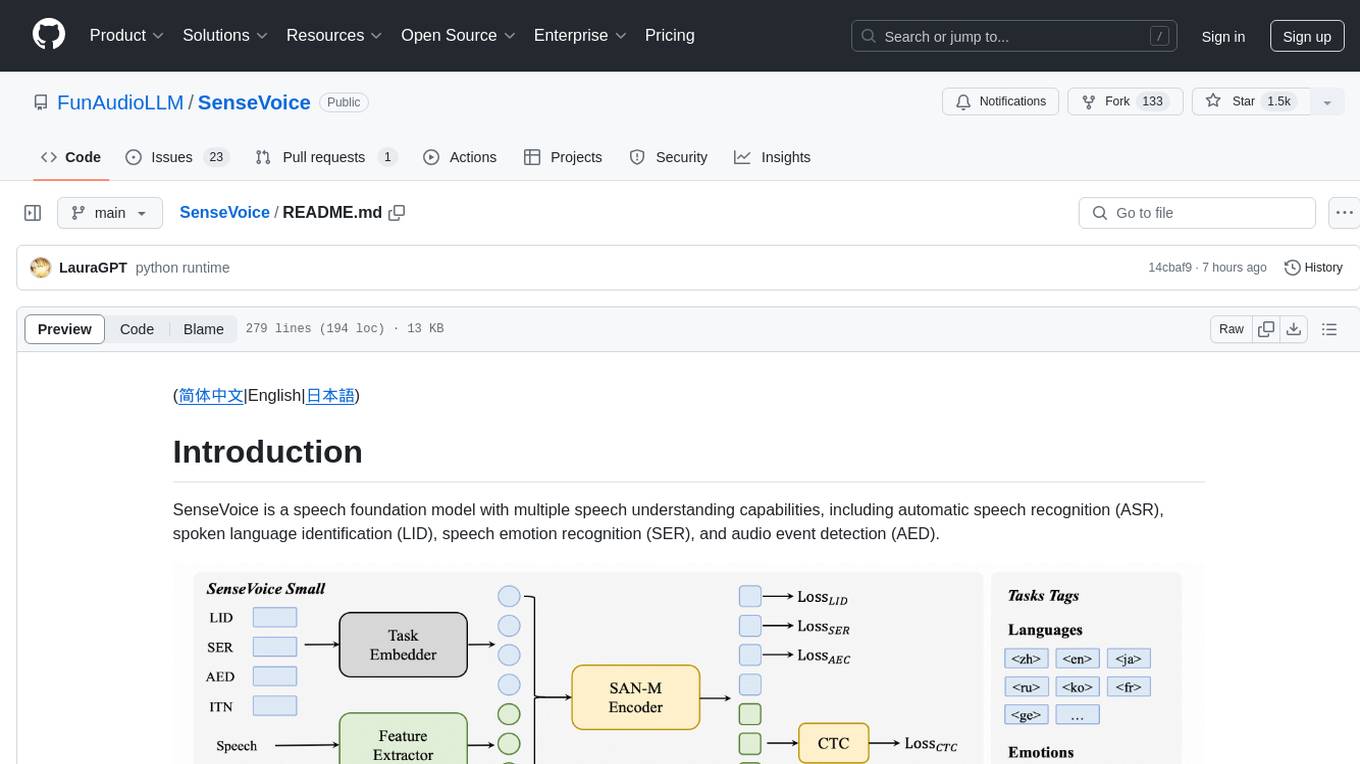

SenseVoice

SenseVoice is a speech foundation model focusing on high-accuracy multilingual speech recognition, speech emotion recognition, and audio event detection. Trained with over 400,000 hours of data, it supports more than 50 languages and excels in emotion recognition and sound event detection. The model offers efficient inference with low latency and convenient finetuning scripts. It can be deployed for service with support for multiple client-side languages. SenseVoice-Small model is open-sourced and provides capabilities for Mandarin, Cantonese, English, Japanese, and Korean. The tool also includes features for natural speech generation and fundamental speech recognition tasks.

multimodal_cognitive_ai

The multimodal cognitive AI repository focuses on research work related to multimodal cognitive artificial intelligence. It explores the integration of multiple modes of data such as text, images, and audio to enhance AI systems' cognitive capabilities. The repository likely contains code, datasets, and research papers related to multimodal AI applications, including natural language processing, computer vision, and audio processing. Researchers and developers interested in advancing AI systems' understanding of multimodal data can find valuable resources and insights in this repository.

For similar jobs

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

videokit

VideoKit is a full-featured user-generated content solution for Unity Engine, enabling video recording, camera streaming, microphone streaming, social sharing, and conversational interfaces. It is cross-platform, with C# source code available for inspection. Users can share media, save to camera roll, pick from camera roll, stream camera preview, record videos, remove background, caption audio, and convert text commands. VideoKit requires Unity 2022.3+ and supports Android, iOS, macOS, Windows, and WebGL platforms.

AirAPI_Windows

AirAPI_Windows is a work in progress driver for the Nreal Air glasses, providing native windows support without nebula. The project aims to address slight drift in fusion configuration and is a collaborative effort by the community to offer open access to the glasses. Users can access precompiled AirAPI_Windows.dll along with hidapi.dll for usage. The tool allows building from source, including getting hidapi, cloning the project, and building Fusion and AirAPI_Windows DLL. The project is supported by contributors like abls, edwatt, and jakedowns, and users can show appreciation by buying a coffee for msmithdev.

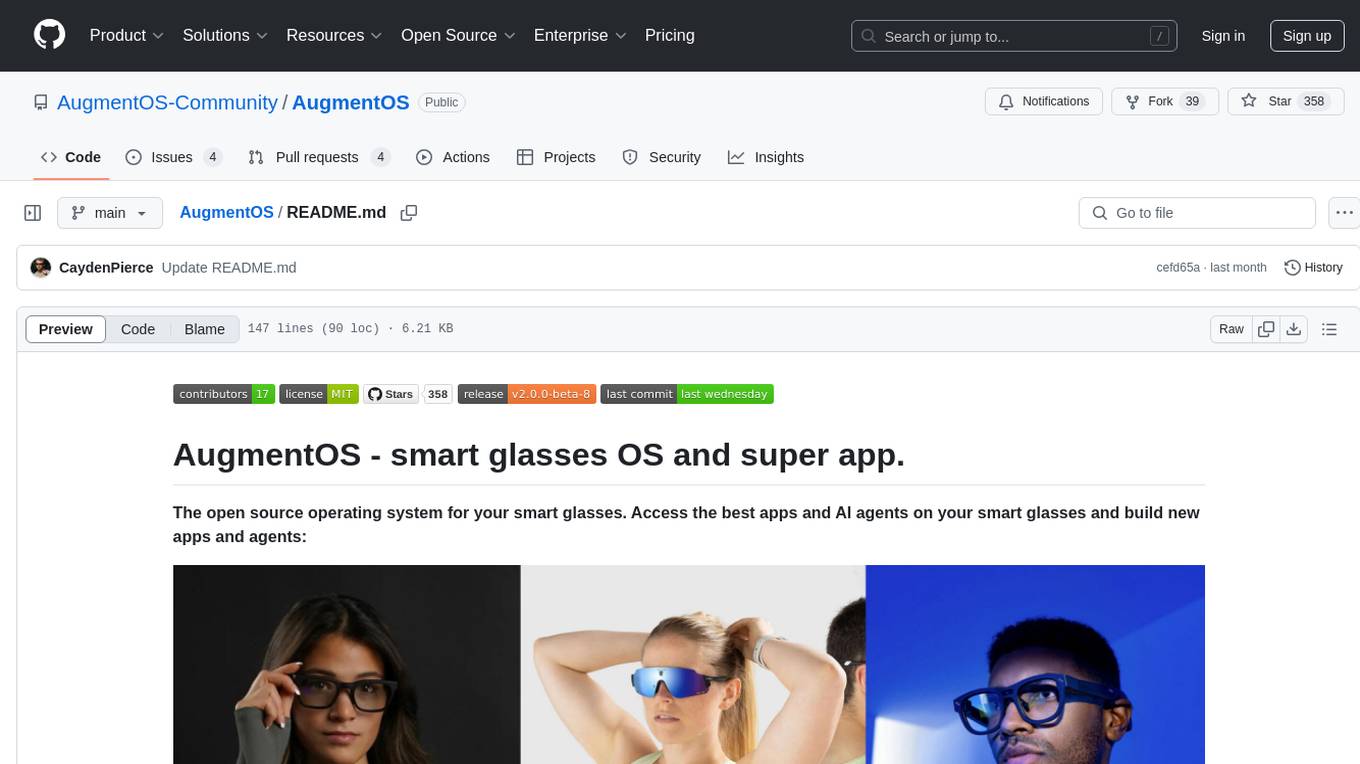

AugmentOS

AugmentOS is an open source operating system for smart glasses that allows users to access various apps and AI agents. It enables developers to easily build and run apps on smart glasses, run multiple apps simultaneously, and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the user experience of smart glasses by providing a seamless and proactive interaction with AI-first wearables apps.

stable-pi-core

Stable-Pi-Core is a next-generation decentralized ecosystem integrating blockchain, quantum AI, IoT, edge computing, and AR/VR for secure, scalable, and personalized solutions in payments, governance, and real-world applications. It features a Dual-Value System, cross-chain interoperability, AI-powered security, and a self-healing network. The platform empowers seamless payments, decentralized governance via DAO, and real-world applications across industries, bridging digital and physical worlds with innovative features like robotic process automation, machine learning personalization, and a dynamic cross-chain bridge framework.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.