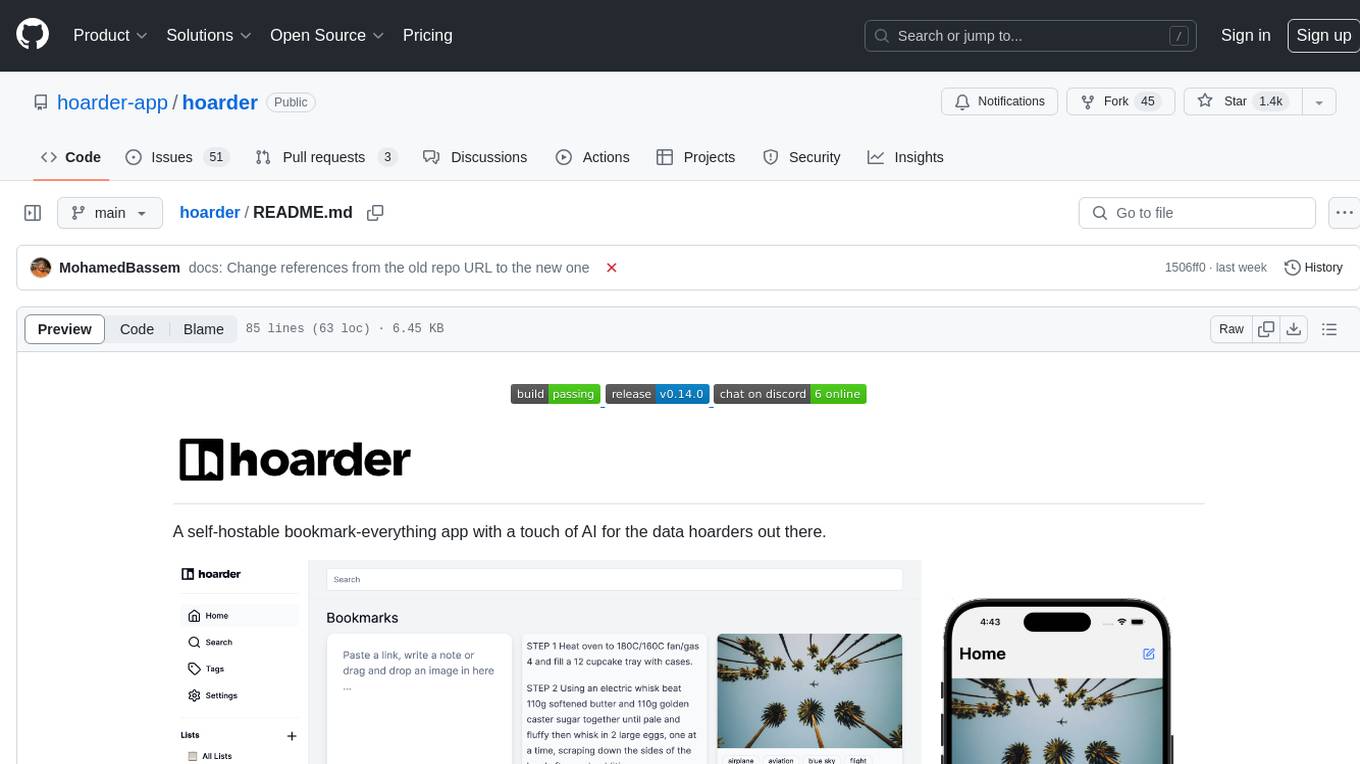

AugmentOS

Smart glasses OS, with dozens of built-in apps. Users get AI assistant, notifications, translation, screen mirror, captions, and more. Devs get to write 1 app that runs on any pair of smart glases.

Stars: 418

AugmentOS is an open source operating system for smart glasses that allows users to access various apps and AI agents. It enables developers to easily build and run apps on smart glasses, run multiple apps simultaneously, and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the user experience of smart glasses by providing a seamless and proactive interaction with AI-first wearables apps.

README:

The open source operating system for your smart glasses. Access the best apps and AI agents on your smart glasses and build new apps and agents:

- AI Assistant

- Translation

- Live Captions

- Screen Mirror (Teleprompter, Karaoke/Lyrics, video captions, etc.)

- Convoscope

- Language Learning

- ADHD Tools

AugmentOS is the the way to build apps for smart glasses. For developers, it's a no-brainer, because AugmentOS-SDK enables:

- Your app to immediately runs on any pair of smart glasses.

- Simple + fast dev - focus on building a great experience.

- Continuously access smart glasses I/O.

- Run always - run your app for days straight, guaranteed.

- Get seen - get your app in front of everyone using smart glasses.

AugmentOS enables you to run multiple apps at the same time so you can truly take advantage of AI-first wearables apps. Apps that run proactively based on context. That's the power of a smart glasses operating system.

Some of the apps running on AugmentOS...

Smart and fast AI assistant with access to Google search. Say "Hey Mira" and then ask a question/say a command.

- "hey Mira, how long is a direct flight from Toronto to Hong Kong?"

- "hey Mira, what's the weather like this weekend in Cambridge?"

- "hey Mira, how much does YC invest in each company and what do they take?"

Mirror anything on your screen to your smart glasses. We use a lightweight, novel approach, which makes it very fast and makes text easy to read.

Convoscope is a suite of proactive AI agents to augment conversations. Imagine a council of superintelligent assistants listening in to your conversation and helping you solve problems, have new ideas, and better connect with those you're speaking with

- Someone mention a company you've never heard of? A proactice AI agent instantly shows you info on that company

- Your friend is suggesting you have a BBQ tomrrow. A proactive AI agent searches tomorrow's forecast and overlays the rainy forecast on your vision

- Groupthink happening? A devil's advocate agent presents an alternative viewpiont to stimulate thought

- Someone makes a shaky claim? A fact checker agent provides a source to back it up or show it's false

- Can't remember the website your coworker reccomended? Proactive agents review your past conversations and pull up the url.

Learn a new language 10x faster with smart glasses. Partial translation, AI foreign language conversations, word/phrase suggestions, immersive AR language annotations, and more.

Artificial Immersion demo video: https://www.youtube.com/watch?v=UFBEG1s27uU

TEDxMIT Talk on "Can Smart Glasses Revolutionize How We Learn Languages?" by Cayden Pierce: https://www.youtube.com/watch?v=7XuBVY3nVbA

See live captions of everything that is said. 100s of languages supported with high accuracy and low latency.

A 10 minute short term memory buffer to help get back on track during conversations after a zone-out.

Live translate languages - when someone speaks a foreign language, instantly see it translated on your vision. Supports 100s of language.

The community is working on many more apps - fully open source - join us and help build! Check out our roadmap here!

Documentation: docs.AugmentOS.org.

For developers,

AugmentOS is the best way to write a smart glasses app because it enables:

- Your single app runs on any pair of smart glasses.

- Access to smart glasses I/O continously

- Running multiple apps running at the same time

AugmentOS is a fully open source OS for wearables. The AugmentOS SDK is a lightweight wrapper (Python, C++, Javascript, more) that allows any existing app to run as an AugmentOS app, in the cloud or on the edge.

To get started, check out our Example App.

Current Support:

Future Support:

Android:

iPhone / iOS

Coming February/March 2025

AugmentOS is made by a decentralized community of contributors, and managed by Mentra.

- Cayden Pierce

- Alex Israelov

- Nicolo Micheletti

Contributions welcome! Our team is growing and we have a lot to do! Join our Discord and reach out!

The AugmentOS Community is a team building open-source smart glasses tech towards an open, self-empowered, intercognitive, augmented future. Our industry partners include companies like Vuzix, Activelook, TCL, and others. To get involved, check out our website https://AugmentOS.org and join our Discord server.

MIT License Copyright 2025 AugmentOS Community

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AugmentOS

Similar Open Source Tools

AugmentOS

AugmentOS is an open source operating system for smart glasses that allows users to access various apps and AI agents. It enables developers to easily build and run apps on smart glasses, run multiple apps simultaneously, and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the user experience of smart glasses by providing a seamless and proactive interaction with AI-first wearables apps.

AugmentOS

Convoscope is a suite of smart glasses and web tools designed to augment conversations by providing live proactive agents that answer questions, offer definitions, insights, and alternative viewpoints. It includes features like 'Mira' AI Assistant, Convoscope Proactive AI Agents, Language Learning app, Screen Mirror functionality, and upcoming features such as Live Captions, ADHD Glasses, and Live Language Translation. The tool supports various smart glasses models and Android 12+ phones, offering a unique experience for real-life conversations, meetings, and video calls.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

FreeChat

FreeChat is a native LLM appliance for macOS that runs completely locally. Download it and ask your LLM a question without doing any configuration. A local/llama version of OpenAI's chat without login or tracking. You should be able to install from the Mac App Store and use it immediately.

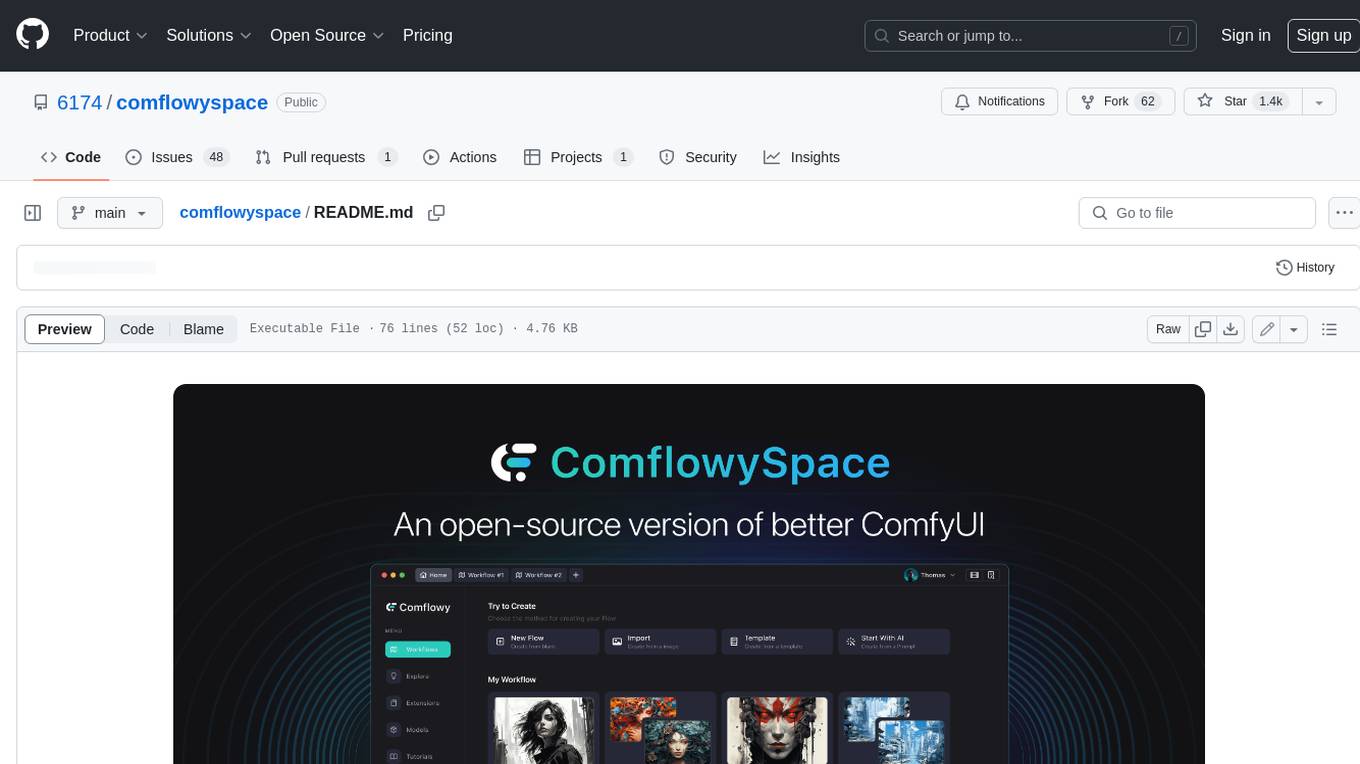

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

Second-Me

Second Me is an open-source prototype that allows users to craft their own AI self, preserving their identity, context, and interests. It is locally trained and hosted, yet globally connected, scaling intelligence across an AI network. It serves as an AI identity interface, fostering collaboration among AI selves and enabling the development of native AI apps. The tool prioritizes individuality and privacy, ensuring that user information and intelligence remain local and completely private.

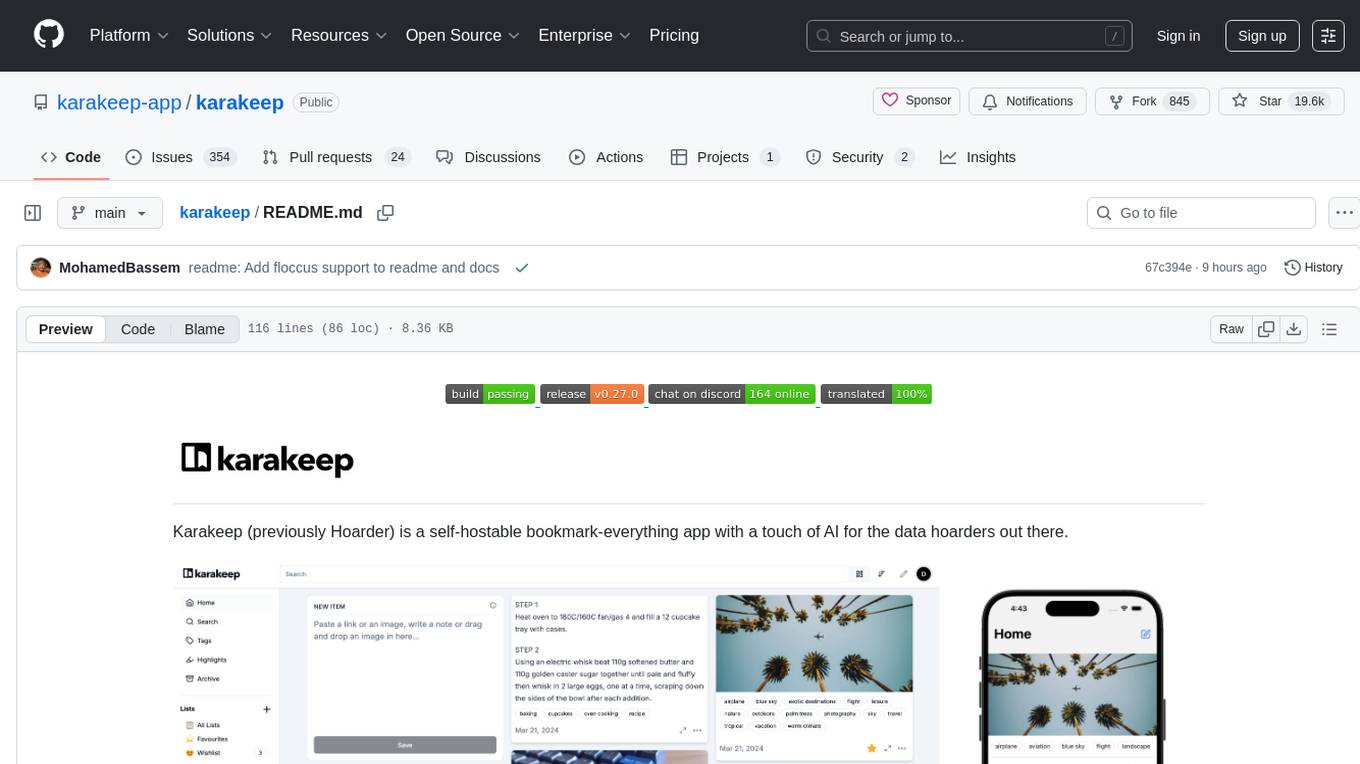

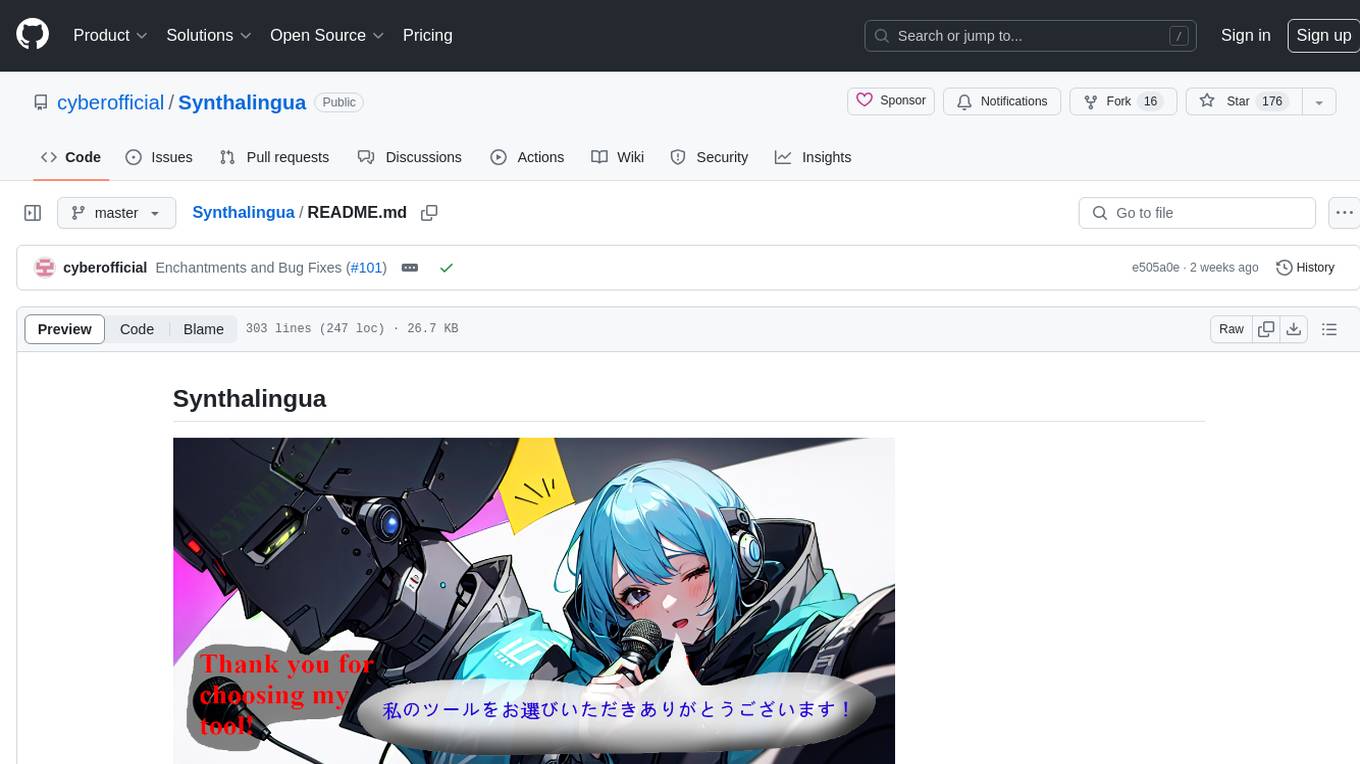

karakeep

Karakeep is a self-hostable bookmark-everything app with a touch of AI for data hoarders. It allows users to bookmark links, take notes, store images and pdfs, and offers features like automatic fetching, full-text search, AI-based tagging, OCR, rule-based engine, Chrome plugin, Firefox addon, iOS and Android apps, auto hoarding from RSS feeds, REST API, multi-language support, and more. The app is under heavy development and aims to provide a self-hosting first solution for managing bookmarks and content.

commanddash

Dash AI is an open-source coding assistant for Flutter developers. It is designed to not only write code but also run and debug it, allowing it to assist beyond code completion and automate routine tasks. Dash AI is powered by Gemini, integrated with the Dart Analyzer, and specifically tailored for Flutter engineers. The vision for Dash AI is to create a single-command assistant that can automate tedious development tasks, enabling developers to focus on creativity and innovation. It aims to assist with the entire process of engineering a feature for an app, from breaking down the task into steps to generating exploratory tests and iterating on the code until the feature is complete. To achieve this vision, Dash AI is working on providing LLMs with the same access and information that human developers have, including full contextual knowledge, the latest syntax and dependencies data, and the ability to write, run, and debug code. Dash AI welcomes contributions from the community, including feature requests, issue fixes, and participation in discussions. The project is committed to building a coding assistant that empowers all Flutter developers.

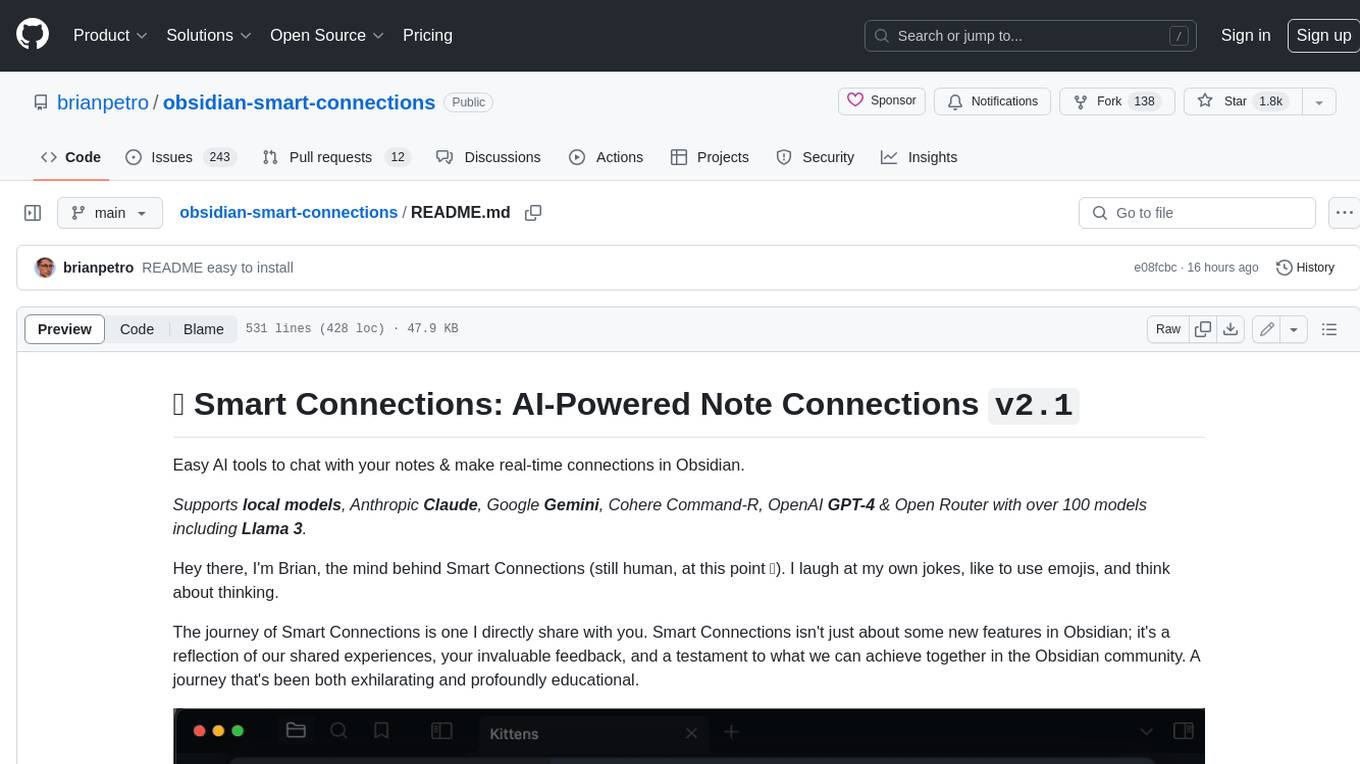

obsidian-smart-connections

Smart Connections is an AI-powered plugin for Obsidian that helps you discover hidden connections and insights in your notes. With features like Smart View for real-time relevant note suggestions and Smart Chat for chatting with your notes, Smart Connections makes it easier than ever to stay organized and uncover hidden connections between your notes. Its intuitive interface and customizable settings ensure a seamless experience, tailored to your unique needs and preferences.

xyne

Xyne is an AI-first Search & Answer Engine for work, serving as an OSS alternative to Glean, Gemini, and MS Copilot. It securely indexes data from various applications like Google Workspace, Atlassian suite, Slack, and Github, providing a Google + ChatGPT-like experience to find information and get up-to-date answers. Users can easily locate files, triage issues, inquire about customers/deals/features/tickets, and discover relevant contacts. Xyne enhances AI models by providing contextual information in a secure, private, and responsible manner, making it the most secure and future-proof solution for integrating AI into work environments.

Virtual_Avatar_ChatBot

Virtual_Avatar_ChatBot is a free AI Chatbot with visual movement that runs on your local computer with minimal GPU requirement. It supports various features like Oogbabooga, betacharacter.ai, and Locall LLM. The tool requires Windows 7 or above, Python, C++ Compiler, Git, and other dependencies. Users can contribute to the open-source project by reporting bugs, creating pull requests, or suggesting new features. The goal is to enhance Voicevox functionality, support local LLM inference, and give the waifu access to the internet. The project references various tools like desktop-waifu, CharacterAI, Whisper, PYVTS, COQUI-AI, VOICEVOX, and VOICEVOX API.

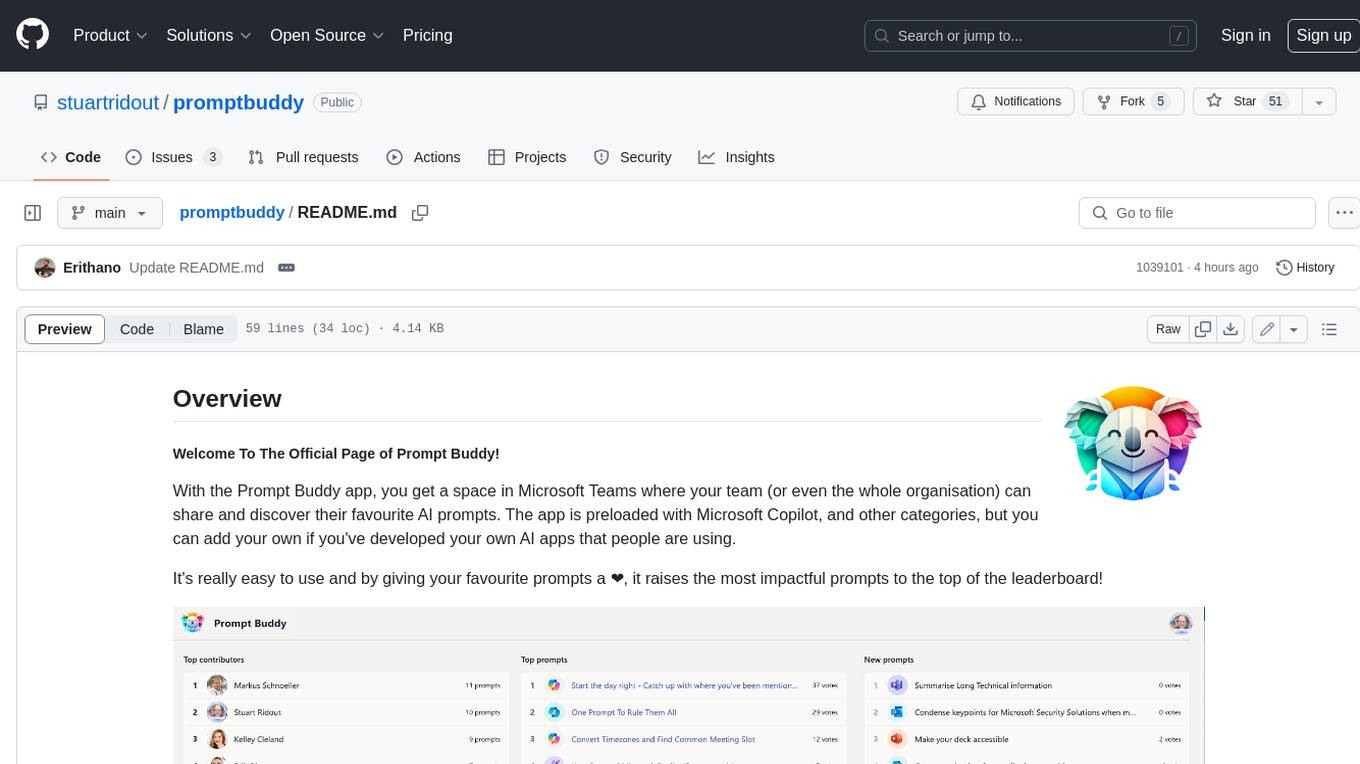

promptbuddy

Prompt Buddy is a Microsoft Teams app that provides a central location for teams to share and discover their favorite AI prompts. It comes preloaded with Microsoft Copilot and other categories, but users can also add their own custom prompts. The app is easy to use and allows users to upvote their favorite prompts, which raises them to the top of the leaderboard. Prompt Buddy also supports dark mode and offers a mobile layout for use on phones. It is built on the Power Platform and can be customized and extended by the installer.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

OpenHands

OpenHands is a community focused on AI-driven development, offering a Software Agent SDK, CLI, Local GUI, Cloud deployment, and Enterprise solutions. The SDK is a Python library for defining and running agents, the CLI provides an easy way to start using OpenHands, the Local GUI allows running agents on a laptop with REST API, the Cloud deployment offers hosted infrastructure with integrations, and the Enterprise solution enables self-hosting via Kubernetes with extended support and access to the research team. OpenHands is available under the MIT license.

hoarder

A self-hostable bookmark-everything app with a touch of AI for data hoarders. Features include bookmarking links, taking notes, storing images, automatic fetching for link details, full-text search, AI-based automatic tagging, Chrome and Firefox plugins, iOS and Android apps, dark mode support, and self-hosting. Built to address the need for archiving and previewing links with automatic tagging. Developed by a systems engineer to stay connected with web development and cater to personal use cases.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could: * **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice. * Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control. * Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_. * Automate tasks on your computer * improve accessibility * ... and much more

For similar tasks

AugmentOS

AugmentOS is an open source operating system for smart glasses that allows users to access various apps and AI agents. It enables developers to easily build and run apps on smart glasses, run multiple apps simultaneously, and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the user experience of smart glasses by providing a seamless and proactive interaction with AI-first wearables apps.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

WeeaBlind

Weeablind is a program that uses modern AI speech synthesis, diarization, language identification, and voice cloning to dub multi-lingual media and anime. It aims to create a pleasant alternative for folks facing accessibility hurdles such as blindness, dyslexia, learning disabilities, or simply those that don't enjoy reading subtitles. The program relies on state-of-the-art technologies such as ffmpeg, pydub, Coqui TTS, speechbrain, and pyannote.audio to analyze and synthesize speech that stays in-line with the source video file. Users have the option of dubbing every subtitle in the video, setting the start and end times, dubbing only foreign-language content, or full-blown multi-speaker dubbing with speaking rate and volume matching.

Synthalingua

Synthalingua is an advanced, self-hosted tool that leverages artificial intelligence to translate audio from various languages into English in near real time. It offers multilingual outputs and utilizes GPU and CPU resources for optimized performance. Although currently in beta, it is actively developed with regular updates to enhance capabilities. The tool is not intended for professional use but for fun, language learning, and enjoying content at a reasonable pace. Users must ensure speakers speak clearly for accurate translations. It is not a replacement for human translators and users assume their own risk and liability when using the tool.

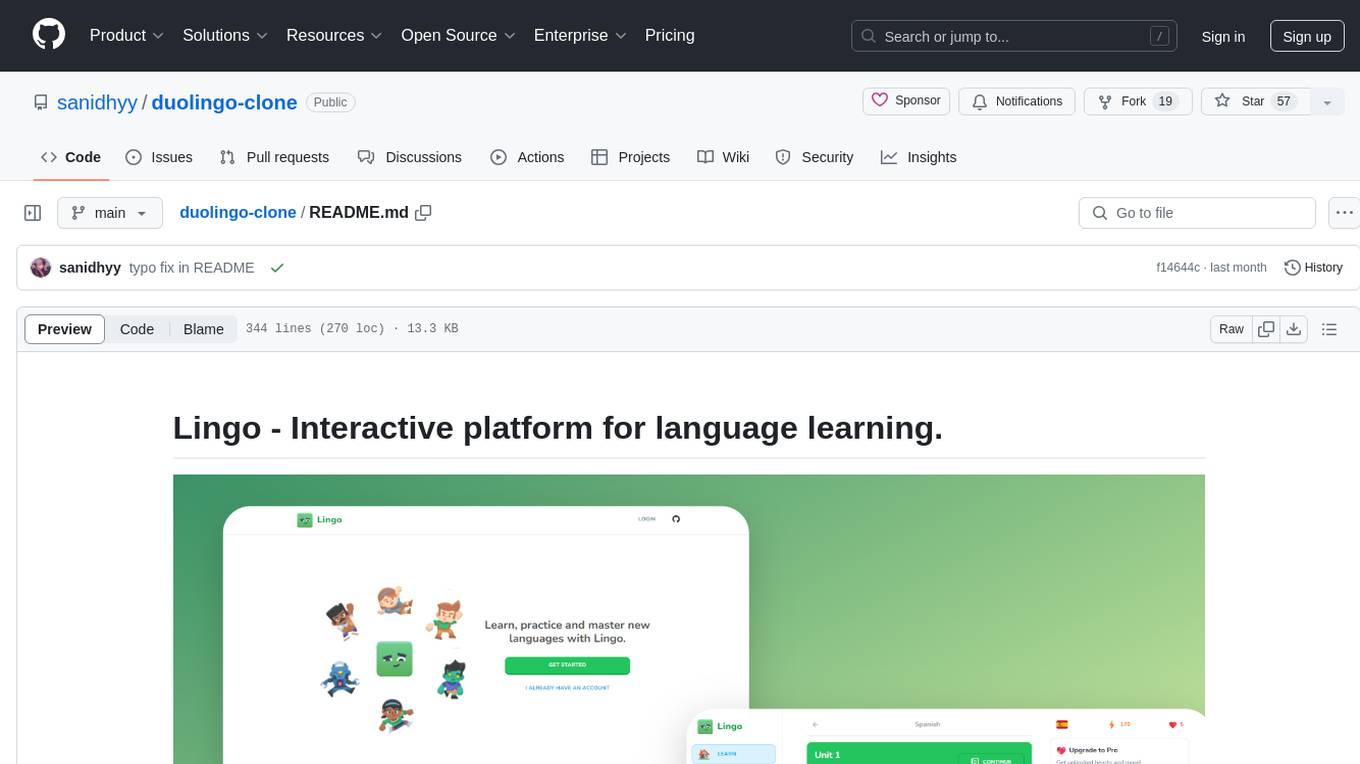

duolingo-clone

Lingo is an interactive platform for language learning that provides a modern UI/UX experience. It offers features like courses, quests, and a shop for users to engage with. The tech stack includes React JS, Next JS, Typescript, Tailwind CSS, Vercel, and Postgresql. Users can contribute to the project by submitting changes via pull requests. The platform utilizes resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, @radix-ui/react-avatar, and more. Users can follow the project creator on GitHub and Twitter, as well as subscribe to their YouTube channel for updates. To learn more about Next.js, users can refer to the Next.js documentation and interactive tutorial.

dinopal

DinoPal is an AI voice assistant residing in the Mac menu bar, offering real-time voice and video chat, screen sharing, online search, and multilingual support. It provides various AI assistants with unique strengths and characteristics to meet different conversational needs. Users can easily install DinoPal and access different communication modes, with a call time limit of 30 minutes. User feedback can be shared in the Discord community. DinoPal is powered by Google Gemini & Pipecat.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.