AugmentOS

Smart glasses OS, with dozens of built-in apps. Users get AI assistant, notifications, translation, screen mirror, captions, and more. Devs get to write 1 app that runs on any pair of smart glases.

Stars: 118

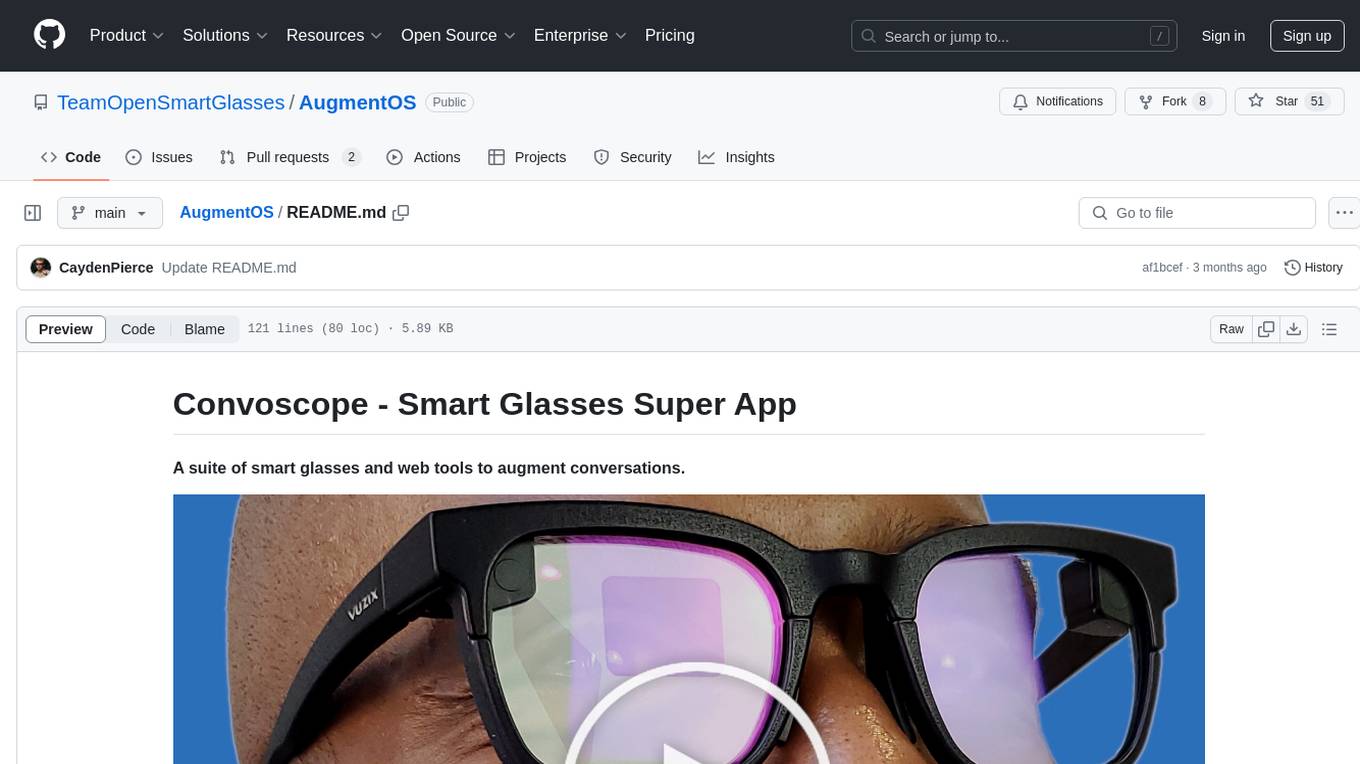

Convoscope is a suite of smart glasses and web tools designed to augment conversations by providing live proactive agents that answer questions, offer definitions, insights, and alternative viewpoints. It includes features like 'Mira' AI Assistant, Convoscope Proactive AI Agents, Language Learning app, Screen Mirror functionality, and upcoming features such as Live Captions, ADHD Glasses, and Live Language Translation. The tool supports various smart glasses models and Android 12+ phones, offering a unique experience for real-life conversations, meetings, and video calls.

README:

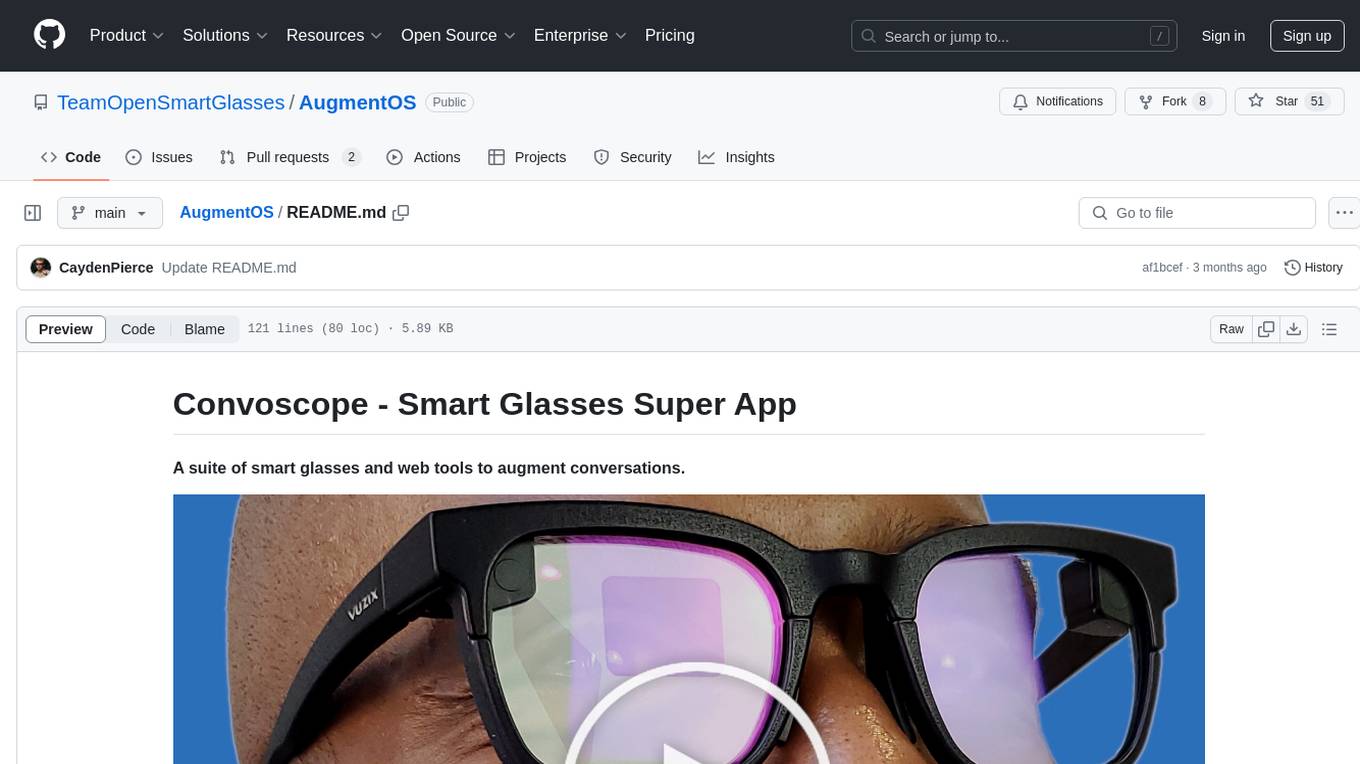

AugmentOS is the home base for your smart glasses, where you gain instant access to dozens of your favourite apps, like:

- AI Assistant

- Translation

- Live Captions

- Screen Mirror (Teleprompter, Karaoke/Lyrics, video captions, etc.)

- Convoscope

- Language Learning

- ADHD Tools

AugmentOS enables you to run multiple apps at the same time - enabling you to truly take advantage of AI-first wearables apps that run proactively based on context.

Available on iOS and Android 2024, supporting all common smart glasses.

add Google Play and iOS App Store Logos and links here

For developers,

AugmentOS SDK is the best way to write a smart glasses app because it enables:

- Your single app runs on any pair of smart glasses.

- Access to smart glasses I/O continously - alongside other apps running at the same time

AugmentOS is a fully open source OS for wearables. The AugmentOS SDK is a lightweight wrapper (Python, C++, Javascript, more) that allows any existing app to run as an AugmentOS app, in the cloud or on the edge.

add images/screenshots/through the lens images of every app

Smart and fast AI assistant with access to Google search. Say "Hey Mira" and then ask a question/say a command.

- "hey Mira, how long is a direct flight from Toronto to Hong Kong?"

- "hey Mira, what's the weather like this weekend in Cambridge?"

- "hey Mira, how much does YC invest in each company and what do they take?"

Convoscope is a suite of proactive AI agents to augment conversations.

TO ADD: Link to videos os stories of real life using it - South Korea AR glasses, chocolate, what is this opensource license?

- Someone mention a company you've never heard of? A proactice AI agent instantly shows you info on that company

- Your friend is suggesting you have a BBQ tomrrow. A proactive AI agent searhces tomorrow's forecast and overlays the rainy forecast on your vision

- Groupthink happening? A devil's advocate agent presents an alternative viewpiont to stimulate thought

- Someone makes a shaky claim? A fact checker agent provides a source to back it up or show it's false

- Can't remember the website your coworker reccomended? Proactive agents review your past conversations and pull up the url.

An app to learn a new language 10x faster with smart glasses. Partial translation, AI foreign language conversations, word/phrase suggestions, immersive AR language annotations, etc.

Artificial Immersion Language Learning Smart Glasses demos video: https://www.youtube.com/watch?v=UFBEG1s27uU

TEDxMIT Talk - "Can Smart Glasses Revolutionize How We Learn Languages?" - Cayden Pierce: https://www.youtube.com/watch?v=7XuBVY3nVbA

Mirror anything on your screen to your smart glasses. We use a lightweight, novel approach, which makes it very fast and makes text easy to read. Some examples of what you can do with it:

- Listen to podcasts and stream the video captions to your glasses from YouTube

- Watch your Uber arrival status while waiting with your friends.

- Be the ultimate karaoke master, streaming lyrics to your glasses from Spotify

- Stream Strava/fitness data to your glasses while exercising

- Pull up recipes on your phone and read them on your glasses.

- Stream your grocery list while at the store instead of pulling out your phone every 3 minutes.

- Stream your phone camera viewfinder to your glasses to get the perfect pose while taking a group shot

- Watch tutorials/lessons with your phone in your pocket - when you're walking, working out, running, etc.

See live captions of everything that is said. 100s of languges supported with high accuracy and low latency.

A 10 minute short term memory buffer to help get back on track during conversations after a zone-out.

Live translate languages - when someone speaks a foreign language, instantly see it translated on your vision. Supports 100s of language.

The community is working on many more apps - fully open source - join us and help build!

Glasses

- Vuzix Z100

- Vuzix Shield

- Inmo Air

- TCL RayNeo X2

- Most other Android smart glasses

- Coming soon: Frames by Brilliant Labs, Meizu Myvu, Even Realities G1

Smart Phones

Any Android 12+ phone will work. We do NOT support Android 11 or below.

- Install the Y app from Google Play or from the Github release.

- Accept all permissions (will not work without permissions).

- Sign in with Google.

- Glasses auto-connect (ensure glasses are connected to host app, if needed).

- Select apps you want to run on the glasses from within Y.

Coming soon: how to write an AugmentOS app.

AugmentOS is made by a decentralized community of people, and headed up by TeamOpenSmartGlasses.

- Cayden Pierce

- Alex Israelov

- Nicolo Micheletti

Contributions welcome! Our team is growing and we have a lot to do! Join our Discord and reach out!

TeamOpenSmartGlasses is a team building open-source smart glasses tech towards an open, self-empowered, intercognitive, augmented future. Our industry partners include companies like Vuzix, Activelook, TCL, and others. To get involved, check out our website https://teamopensmartglasses.com and join our Discord server.

MIT License Copyright 2024 TeamOpenSmartGlasses

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AugmentOS

Similar Open Source Tools

AugmentOS

Convoscope is a suite of smart glasses and web tools designed to augment conversations by providing live proactive agents that answer questions, offer definitions, insights, and alternative viewpoints. It includes features like 'Mira' AI Assistant, Convoscope Proactive AI Agents, Language Learning app, Screen Mirror functionality, and upcoming features such as Live Captions, ADHD Glasses, and Live Language Translation. The tool supports various smart glasses models and Android 12+ phones, offering a unique experience for real-life conversations, meetings, and video calls.

AugmentOS

AugmentOS is an open source operating system for smart glasses that allows users to access various apps and AI agents. It enables developers to easily build and run apps on smart glasses, run multiple apps simultaneously, and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the user experience of smart glasses by providing a seamless and proactive interaction with AI-first wearables apps.

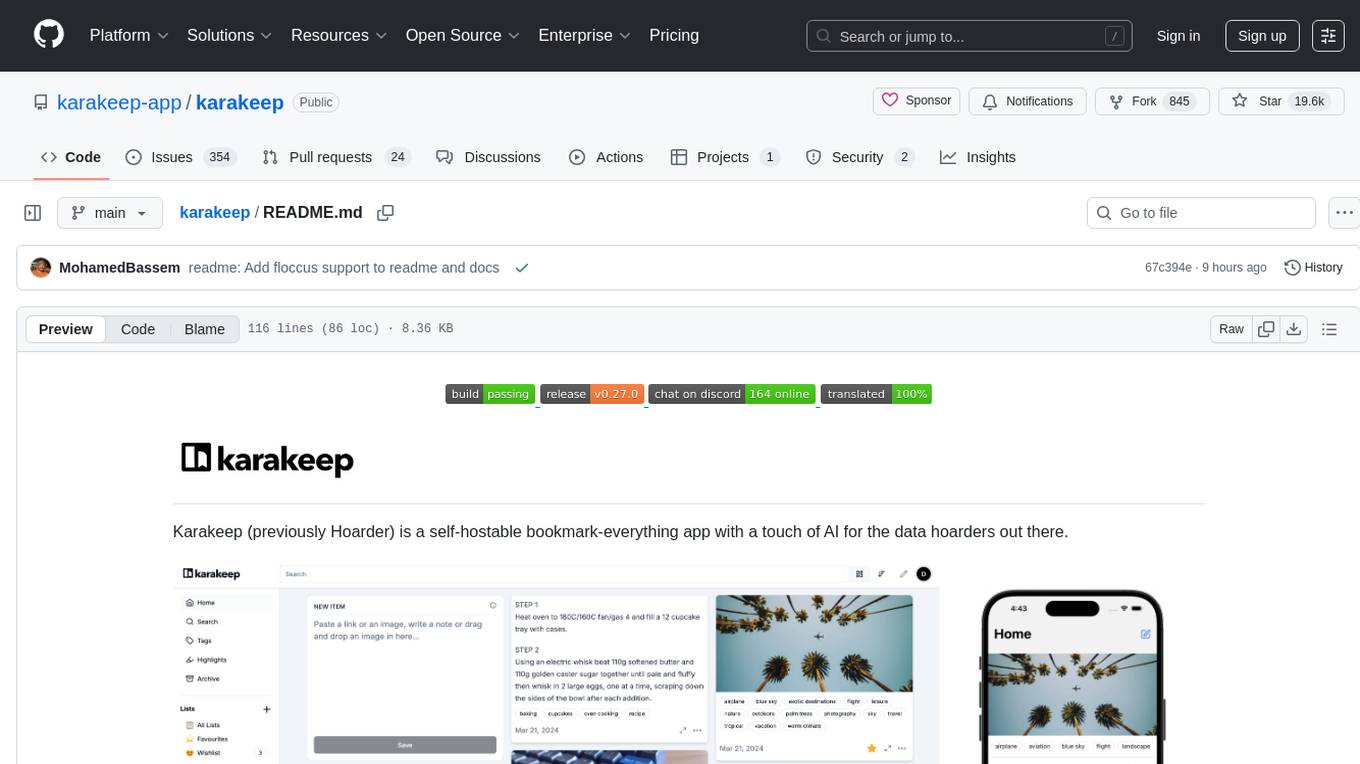

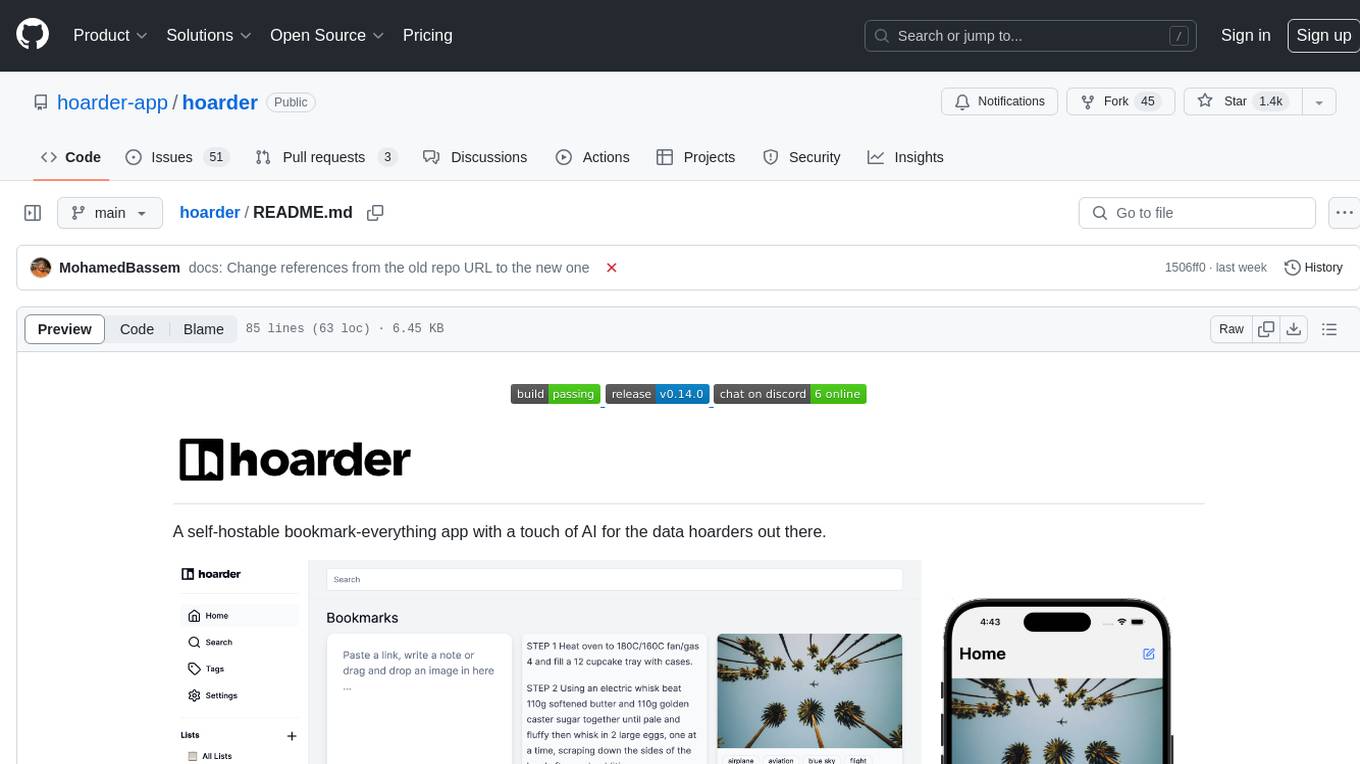

karakeep

Karakeep is a self-hostable bookmark-everything app with a touch of AI for data hoarders. It allows users to bookmark links, take notes, store images and pdfs, and offers features like automatic fetching, full-text search, AI-based tagging, OCR, rule-based engine, Chrome plugin, Firefox addon, iOS and Android apps, auto hoarding from RSS feeds, REST API, multi-language support, and more. The app is under heavy development and aims to provide a self-hosting first solution for managing bookmarks and content.

FreeChat

FreeChat is a native LLM appliance for macOS that runs completely locally. Download it and ask your LLM a question without doing any configuration. A local/llama version of OpenAI's chat without login or tracking. You should be able to install from the Mac App Store and use it immediately.

CodeProject.AI-Server

CodeProject.AI Server is a standalone, self-hosted, fast, free, and open-source Artificial Intelligence microserver designed for any platform and language. It can be installed locally without the need for off-device or out-of-network data transfer, providing an easy-to-use solution for developers interested in AI programming. The server includes a HTTP REST API server, backend analysis services, and the source code, enabling users to perform various AI tasks locally without relying on external services or cloud computing. Current capabilities include object detection, face detection, scene recognition, sentiment analysis, and more, with ongoing feature expansions planned. The project aims to promote AI development, simplify AI implementation, focus on core use-cases, and leverage the expertise of the developer community.

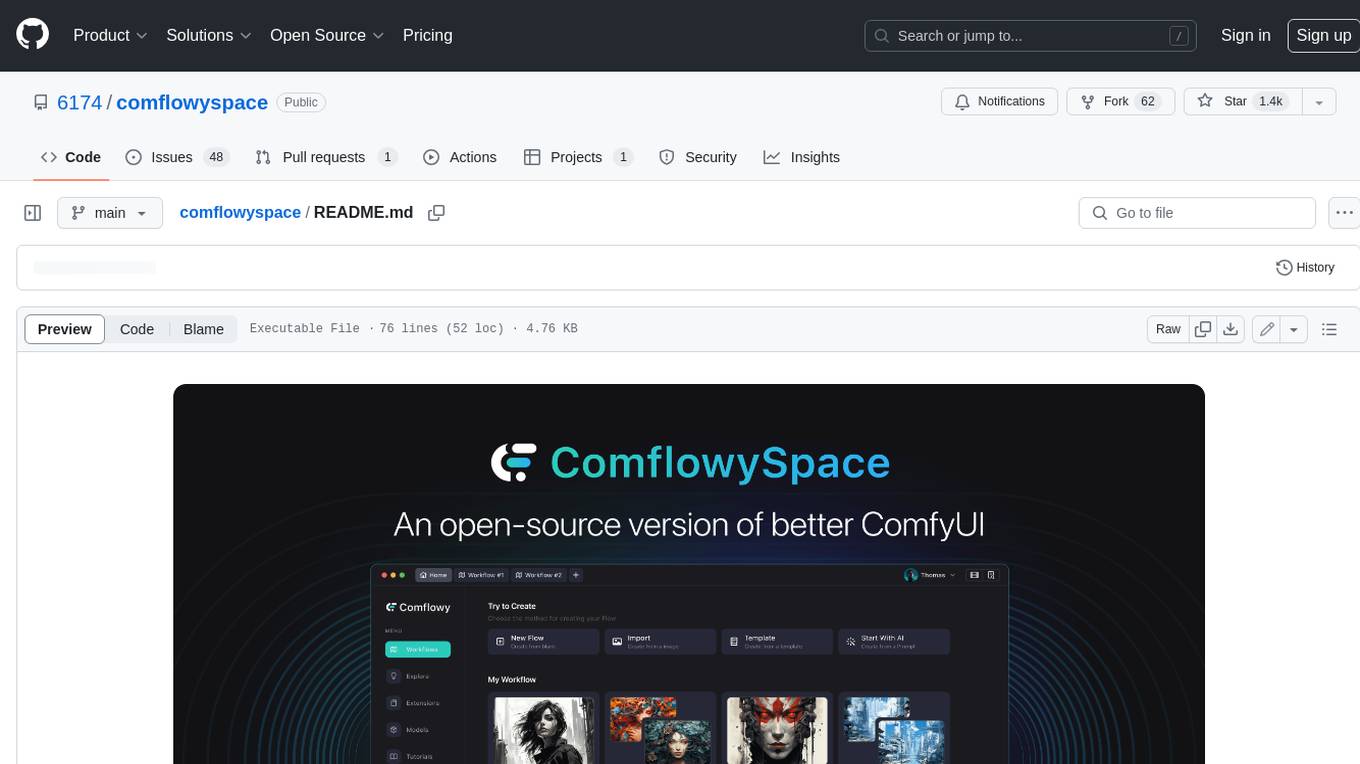

comflowyspace

Comflowyspace is an open-source AI image and video generation tool that aims to provide a more user-friendly and accessible experience than existing tools like SDWebUI and ComfyUI. It simplifies the installation, usage, and workflow management of AI image and video generation, making it easier for users to create and explore AI-generated content. Comflowyspace offers features such as one-click installation, workflow management, multi-tab functionality, workflow templates, and an improved user interface. It also provides tutorials and documentation to lower the learning curve for users. The tool is designed to make AI image and video generation more accessible and enjoyable for a wider range of users.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

obsidian-smart-connections

Smart Connections is an AI-powered plugin for Obsidian that helps you discover hidden connections and insights in your notes. With features like Smart View for real-time relevant note suggestions and Smart Chat for chatting with your notes, Smart Connections makes it easier than ever to stay organized and uncover hidden connections between your notes. Its intuitive interface and customizable settings ensure a seamless experience, tailored to your unique needs and preferences.

wingman-ai

Wingman AI allows you to use your voice to talk to various AI providers and LLMs, process your conversations, and ultimately trigger actions such as pressing buttons or reading answers. Our _Wingmen_ are like characters and your interface to this world, and you can easily control their behavior and characteristics, even if you're not a developer. AI is complex and it scares people. It's also **not just ChatGPT**. We want to make it as easy as possible for you to get started. That's what _Wingman AI_ is all about. It's a **framework** that allows you to build your own Wingmen and use them in your games and programs. The idea is simple, but the possibilities are endless. For example, you could: * **Role play** with an AI while playing for more immersion. Have air traffic control (ATC) in _Star Citizen_ or _Flight Simulator_. Talk to Shadowheart in Baldur's Gate 3 and have her respond in her own (cloned) voice. * Get live data such as trade information, build guides, or wiki content and have it read to you in-game by a _character_ and voice you control. * Execute keystrokes in games/applications and create complex macros. Trigger them in natural conversations with **no need for exact phrases.** The AI understands the context of your dialog and is quite _smart_ in recognizing your intent. Say _"It's raining! I can't see a thing!"_ and have it trigger a command you simply named _WipeVisors_. * Automate tasks on your computer * improve accessibility * ... and much more

hoarder

A self-hostable bookmark-everything app with a touch of AI for data hoarders. Features include bookmarking links, taking notes, storing images, automatic fetching for link details, full-text search, AI-based automatic tagging, Chrome and Firefox plugins, iOS and Android apps, dark mode support, and self-hosting. Built to address the need for archiving and previewing links with automatic tagging. Developed by a systems engineer to stay connected with web development and cater to personal use cases.

xyne

Xyne is an AI-first Search & Answer Engine for work, serving as an OSS alternative to Glean, Gemini, and MS Copilot. It securely indexes data from various applications like Google Workspace, Atlassian suite, Slack, and Github, providing a Google + ChatGPT-like experience to find information and get up-to-date answers. Users can easily locate files, triage issues, inquire about customers/deals/features/tickets, and discover relevant contacts. Xyne enhances AI models by providing contextual information in a secure, private, and responsible manner, making it the most secure and future-proof solution for integrating AI into work environments.

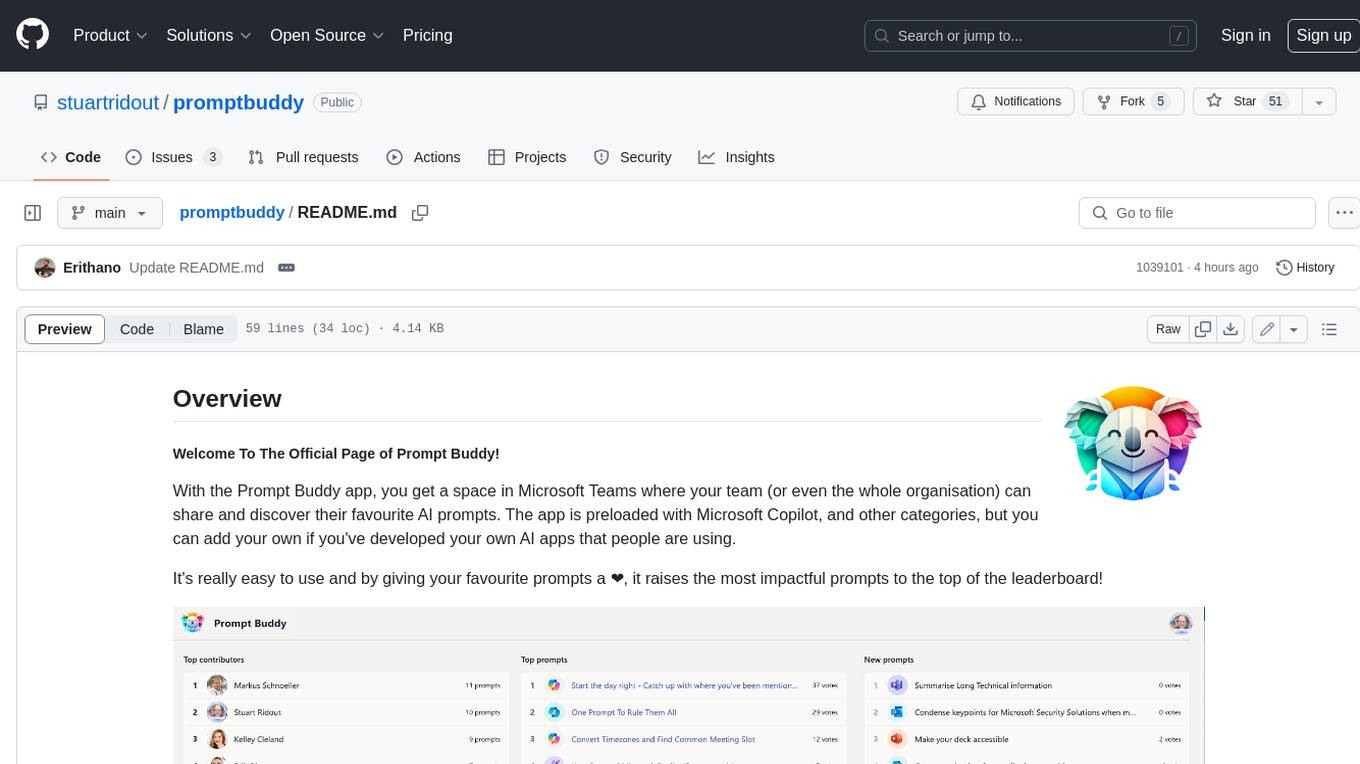

promptbuddy

Prompt Buddy is a Microsoft Teams app that provides a central location for teams to share and discover their favorite AI prompts. It comes preloaded with Microsoft Copilot and other categories, but users can also add their own custom prompts. The app is easy to use and allows users to upvote their favorite prompts, which raises them to the top of the leaderboard. Prompt Buddy also supports dark mode and offers a mobile layout for use on phones. It is built on the Power Platform and can be customized and extended by the installer.

lumentis

Lumentis is a tool that allows users to generate beautiful and comprehensive documentation from meeting transcripts and large documents with a single command. It reads transcripts, asks questions to understand themes and audience, generates an outline, and creates detailed pages with visual variety and styles. Users can switch models for different tasks, control the process, and deploy the generated docs to Vercel. The tool is designed to be open, clean, fast, and easy to use, with upcoming features including folders, PDFs, auto-transcription, website scraping, scientific papers handling, summarization, and continuous updates.

J.A.R.V.I.S

J.A.R.V.I.S. is an offline large language model fine-tuned on custom and open datasets to mimic Jarvis's dialog with Stark. It prioritizes privacy by running locally and excels in responding like Jarvis with a similar tone. Current features include time/date queries, web searches, playing YouTube videos, and webcam image descriptions. Users can interact with Jarvis via command line after installing the model locally using Ollama. Future plans involve voice cloning, voice-to-text input, and deploying the voice model as an API.

macOS-use

macOS-use is a project that enables AI agents to interact with a MacBook across any app. It aims to build an AI agent for the MLX by Apple framework to perform actions on Apple devices. The project is under active development and allows users to prompt the agent to perform various tasks on their MacBook. Users need to be cautious as the tool can interact with apps, UI components, and use private credentials. The project is open source and welcomes contributions from the community.

aitools_client

Seth's AI Tools is a Unity-based front-end that interfaces with various AI APIs to perform tasks such as generating Twine games, quizzes, posters, and more. The tool is a native Windows application that supports features like live update integration with image editors, text-to-image conversion, image processing, mask painting, and more. It allows users to connect to multiple servers for fast generation using GPUs and offers a neat workflow for evolving images in real-time. The tool respects user privacy by operating locally and includes built-in games and apps to test AI/SD capabilities. Additionally, it features an AI Guide for creating motivational posters and illustrated stories, as well as an Adventure mode with presets for generating web quizzes and Twine game projects.

For similar tasks

AugmentOS

Convoscope is a suite of smart glasses and web tools designed to augment conversations by providing live proactive agents that answer questions, offer definitions, insights, and alternative viewpoints. It includes features like 'Mira' AI Assistant, Convoscope Proactive AI Agents, Language Learning app, Screen Mirror functionality, and upcoming features such as Live Captions, ADHD Glasses, and Live Language Translation. The tool supports various smart glasses models and Android 12+ phones, offering a unique experience for real-life conversations, meetings, and video calls.

RTranslator

RTranslator is an almost open-source, free, and offline real-time translation app for Android. It offers Conversation mode for multi-user translations, WalkieTalkie mode for quick conversations, and Text translation mode. It uses Meta's NLLB for translation and OpenAi's Whisper for speech recognition, ensuring privacy. The app is optimized for performance and supports multiple languages. It is ad-free and donation-supported.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.