Synthalingua

Synthalingua - Real Time Translation


Synthalingua is an advanced, self-hosted tool that leverages artificial intelligence to translate audio from various languages into English in near real time. It offers multilingual outputs and utilizes GPU and CPU resources for optimized performance. Although currently in beta, it is actively developed with regular updates to enhance capabilities. The tool is not intended for professional use but for fun, language learning, and enjoying content at a reasonable pace. Users must ensure speakers speak clearly for accurate translations. It is not a replacement for human translators and users assume their own risk and liability when using the tool.

README:

Read the wiki here

Synthalingua is an advanced, self-hosted tool that leverages the power of artificial intelligence to translate audio from various languages into English in near real time, offering the possibility of multilingual outputs. This innovative solution utilizes both GPU and CPU resources to handle the input transcription and translation, ensuring optimized performance. Although it is currently in beta and not perfect, Synthalingua is actively being developed and will receive regular updates to further enhance its capabilities.

JetBrains kindly approved me for an OSS license for their software for use with this project. This will greatly improve my production rate.

Learn about it here: https://jb.gg/OpenSourceSupport

| Table of Contents | Description |
|---|---|
| Disclaimer | Things to know/Disclaimers/Warnings/etc |
| To Do List | Things to do |
| Contributors | People who helped with the project or contributed to the project. |
| Installing/Setup | How to install and setup the tool. |
| Misc | Usage and File Arguments - Examples - Web Server |
| Troubleshooting | Common issues and how to fix them. |
| Additional Info | Additional information about the tool. |
| Video Demos | Video demonstrations of the tool. |
| Extra Notes | Extra notes about the tool. |

- This tool is not perfect. It's still in beta and is a work in progress. It will be updated in a reasonable amount of time. Example: The tool might occasionally provide inaccurate translations or encounter bugs that are being actively worked on by the developers.

- Translations are more accurate when the speaker speaks clearly and slowly. If the speaker is fast or unclear, the translation will be less accurate, though it will still provide some level of translation. Example: If the speaker speaks slowly and enunciates clearly, the tool is likely to provide more accurate translations compared to when the speaker speaks quickly or mumbles.

- The tool is not intended for professional use. It's meant for fun, language learning, and enjoying content at a reasonable pace. You may need to try to understand the content on your own before using this tool. Example: This tool can be used for casual conversations, language practice with friends, or enjoying audio content in different languages.

- You agree not to use the tool to produce or spread misinformation or hate speech. If there is a discrepancy between the tool's output and the speaker's words, you must conduct your own research to determine the truth. Example: If the tool translates a statement into something false or misleading, it is your responsibility to verify the accuracy of the information before sharing it. Avoid using the tool to spread false information or engage in hate speech.

- You assume your own risk and liability. The repository owner will not be held responsible for any damages caused by the tool. You are responsible for your own actions and cannot hold the repository owner accountable if you encounter issues or face consequences due to your usage of the tool. Example: If the tool encounters technical issues, fails to provide accurate translations, or if you face any negative consequences resulting from its usage, the repository owner cannot be held liable.

- The tool is not meant to replace human translators. It is designed for fun, language learning, and enjoying content at a reasonable pace. You may need to make an effort to understand the content on your own before using this tool. Example: When dealing with complex or highly specialized content, it is advisable to consult professional human translators for accurate translations.

- Your hardware can affect the tool's performance. A weak CPU or GPU may hinder its functionality. However, a weak internet connection or microphone will not significantly impact the tool. Example: If you have a powerful computer with a fast processor, the tool is likely to perform better and provide translations more efficiently compared to using it on a slower or older system.

- This is a tool, not a service. You are responsible for your own actions and cannot hold the repository owner accountable if the tool violates terms of service or end-user license agreements, or if you encounter any issues while using the tool. Example: If you use the tool in a way that violates the terms of service or policies of the platform you're using it with, the repository owner cannot be held responsible for any resulting consequences.

| Todo | Sub-Task | Status |
|---|---|---|
| Add support for AMD GPUs. | ROCm support - Linux Only | ✅ |
| | OpenCL support - Linux Only | ✅ |
| Add API access support. | | ✅ |
| Custom localhost web server. | | ✅ |
| Add reverse translation. | | ✅ |
| Localize script to other languages. (Will take place after reverse translations.) | | ❌ |
| Custom dictionary support. | | ❌ |
| GUI. | | ✅ |
| Subtitle creation. | | ✅ |
| Linux support. | | ✅ |
| Improve performance. | | ❌ |
| Compressed model format for lower-RAM users. | | ✅ |
| Better large model loading speed. | | ✅ |
| Split model up into multiple chunks based on usage. | | ❌ |
| Stream audio from URL. | | ✅ |
| Increase model swapping accuracy. | | ❌ |
| No microphone required. | Streaming module | ✅ |

| Supported GPUs | Description |
|---|---|
| Nvidia Dedicated Graphics | Supported |
| Nvidia Integrated Graphics | Tested - Not Supported |
| AMD/ATI | * Linux Verified |
| Intel Arc | Not Supported |
| Intel HD | Not Supported |
| Intel iGPU | Not Supported |

You can find the full list of supported Nvidia GPUs here:

| Requirement | Minimum | Moderate | Recommended | Best Performance |
|---|---|---|---|---|
| CPU Cores | 2 | 6 | 8 | 16 |
| CPU Clock Speed (GHz) | 2.5 or higher | 3.0 or higher | 3.5 or higher | 4.0 or higher |
| RAM (GB) | 4 or higher | 8 or higher | 16 or higher | 16 or higher |
| GPU VRAM (GB) | 2 or higher | 6 or higher | 8 or higher | 12 or higher |
| Free Disk Space (GB) | 10 or higher | 10 or higher | 10 or higher | 10 or higher |
| GPU (suggested) | Nvidia GTX 1050 or higher | Nvidia GTX 1660 or higher | Nvidia RTX 3070 or higher | Nvidia RTX 3090 or higher |

As long as your GPU is within the VRAM spec, it should work fine.

Note:

- Nvidia GPUs are supported on Linux and Windows.

- An Nvidia GPU is suggested but not required.

- AMD GPUs are supported on Linux but not yet on Windows; Windows support will hopefully come soon.

The tool will work on any system that meets the minimum requirements, better on systems that meet the recommended requirements, and best on systems that meet the best performance requirements. You can mix and match tiers: for example, a CPU that meets the best performance requirements paired with a GPU that meets the moderate requirements.

A microphone is optional. You can use the --stream flag to stream audio from an HLS stream. See Examples for more information.

You'll need some kind of audio input source, either software or hardware. See issue #63 for additional information.

- Download and install Python 3.10.9.

- Make sure to check the box that says "Add Python to PATH" when installing. If you don't, you will have to add Python to your PATH manually. You can check this guide: How to add Python to PATH.

- You can use any Python 3.10 release from 3.10.9 onward. The tool will not work on Python 3.11 or higher: it must be 3.10.9+, not 3.11.x.

- Make sure to grab the 64-bit (x64) version! This program is not compatible with 32-bit (x86) Python.

- Download and install Git.

- Using default settings is fine.

- Download and install FFmpeg.

- Download and install CUDA (optional, but it needs to be installed if you are using a GPU).

- Run the setup script:

  - On Windows: setup.bat

  - On Linux: setup.bash

    - Please ensure you have gcc and portaudio19-dev installed (or portaudio-devel on some machines).

  - If you get an error saying "Setup.bat is not recognized as an internal or external command, operable program or batch file.", Houston, we have a problem: something is wrong with your operating system that you will need to fix.

- Run the newly created batch file (Windows) or bash script (Linux). You can edit that file to change the settings.

  - If you get an error saying it is "not recognized as an internal or external command, operable program or batch file.", make sure you have Python installed and added to your PATH, and make sure you have Git installed. If you have Python and Git installed and added to your PATH, create a new issue on the repo and I will try to help you fix it.
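Before running the setup script, you can confirm your interpreter matches the requirements above (3.10.9 or a later 3.10.x release, 64-bit). The script below is a hypothetical helper, not part of the repository; run it with the same python command the setup script will use.

```python
import platform
import struct
import sys

# Supported range stated in this README: 3.10.9 or newer, but not 3.11+.
MIN_VERSION = (3, 10, 9)
MAX_EXCLUSIVE = (3, 11)


def python_version_ok(version=None):
    """Return True if a (major, minor, micro) tuple lies in [3.10.9, 3.11)."""
    v = tuple(version if version is not None else sys.version_info[:3])
    return MIN_VERSION <= v < MAX_EXCLUSIVE


def is_64bit_python():
    # Size of a C pointer in bytes, times 8, is the interpreter's bitness.
    return struct.calcsize("P") * 8 == 64


if __name__ == "__main__":
    print(f"Python {platform.python_version()} ({struct.calcsize('P') * 8}-bit)")
    if not python_version_ok():
        print("Unsupported version: install Python 3.10.9 or a later 3.10.x release.")
    if not is_64bit_python():
        print("32-bit Python detected: install the x64 build instead.")
```

Tuple comparison does the range check: (3, 10, 12) is below (3, 11), while (3, 11, 0) is not.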

This script uses argparse to accept command line arguments. The following options are available:

| Flag | Description |
|---|---|
| --ram | Change the amount of RAM to use. Default is 4GB. Choices are "1GB", "2GB", "4GB", "6GB", "12GB". |
| --ramforce | Use this flag to force the script to use the desired VRAM. May cause the script to crash if there is not enough VRAM available. |
| --energy_threshold | Set the energy level for the microphone to detect. Default is 100. Choose from 1 to 1000; the higher the value, the harder it is to trigger audio detection. |
| --mic_calibration_time | How long to calibrate the mic for, in seconds. To skip user input, type 0 and the time will be set to 5 seconds. |
| --record_timeout | Set the time in seconds for real-time recording. Default is 2 seconds. |
| --phrase_timeout | Set the time in seconds of empty space between recordings before it is considered a new line in the transcription. Default is 1 second. |
| --translate | Translate the transcriptions to English. Enables translation. |
| --transcribe | Transcribe the audio to a set target language. The --target_language flag is required. |
| --target_language | Select the language to translate to. Available choices are a list of languages in ISO 639-1 format, as well as their English names. |
| --language | Select the language to translate from. Available choices are a list of languages in ISO 639-1 format, as well as their English names. |
| --auto_model_swap | Automatically swap the model based on the detected language. Enables automatic model swapping. |
| --device | Select the device to use for the model. Default is "cuda" if available. Available options are "cpu" and "cuda". When set to CPU, you can choose any RAM size as long as you have enough RAM. The CPU option is optimized for multi-threading, so if you have, for example, 16 cores and 32 threads, you can see good results. |
| --cuda_device | Select the CUDA device to use for the model. Default is 0. |
| --discord_webhook | Set the Discord webhook to send the transcription to. |
| --list_microphones | List available microphones and exit. |
| --set_microphone | Set the default microphone to use. You can set the name or its ID number from the list. |
| --microphone_enabled | Enables microphone usage. Add true after the flag. |
| --auto_language_lock | Automatically lock the language based on the detected language after 5 detections. Enables automatic language locking and helps reduce latency. Use this flag if you are using a non-English language and do not know the current spoken language. |
| --use_finetune | Use the fine-tuned model. This will increase accuracy, but will also increase latency. Requires additional VRAM/RAM. |
| --no_log | Only shows the last thing translated/transcribed rather than a log-style list. |
| --updatebranch | Select which branch of the repo to check for updates. Default is master; choices are master, dev-testing, and bleeding-under-work. To turn off update checks, use disable. bleeding-under-work contains the latest changes and can break at any time. |
| --keep_temp | Keeps audio files in the out folder. This will take up space over time. |
| --portnumber | Set the port number for the web server. If no number is set, the web server will not start. |
| --retry | Retries translations and transcriptions if they fail. |
| --about | Shows information about the app. |
| --save_transcript | Saves the transcript to a text file. |
| --save_folder | Set the folder to save the transcript to. |
| --stream | Stream audio from an HLS stream. |
| --stream_language | Language of the stream. Default is English. |
| --stream_target_language | Language to translate the stream to. Default is English. Needed for --stream_transcribe. |
| --stream_translate | Translate the stream. |
| --stream_transcribe | Transcribe the stream to a different language. Use --stream_target_language to change the output. |
| --stream_original_text | Show the detected original text. |
| --stream_chunks | How many chunks to split the stream into. Default is 5; values between 3 and 5 are recommended. YouTube streams should use 1 or 2, Twitch should use 5 to 10. The higher the number, the more accurate the result, but the slower and more delayed the stream translation and transcription will be. |
| --cookies | Cookies file name without the .txt extension, e.g. twitch, youtube, twitchacc1, twitchacczed. |
| --makecaptions | Set the program to captions mode. Requires --file_input, --file_output, and --file_output_name. |
| --file_input | Location of the input file to make captions for. Almost all video/audio formats are supported (uses ffmpeg). |
| --file_output | Location of the folder to export the captions to. |
| --file_output_name | File name to export as, without any extension. |
| --ignorelist | Load a list of words or phrases to ignore. Usage: --ignorelist "C:\quoted\path\to\wordlist.txt" |
| --condition_on_previous_text | Helps prevent the model from repeating itself, but may slow down the process. |
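To show how argparse turns a command line into settings, here is a hypothetical sketch declaring a subset of the flags above. Names and defaults follow the table; accepting "12GB" and "12gb" alike is an assumption of this sketch, and the real transcribe_audio.py may declare things differently.

```python
import argparse

# Hypothetical subset of the documented flags; not the tool's actual parser.
RAM_CHOICES = ["1gb", "2gb", "4gb", "6gb", "12gb"]


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Synthalingua flag sketch")
    # type=str.lower runs before the choices check, so "12GB" also matches "12gb".
    parser.add_argument("--ram", default="4gb", type=str.lower, choices=RAM_CHOICES)
    parser.add_argument("--energy_threshold", type=int, default=100)
    parser.add_argument("--record_timeout", type=float, default=2.0)
    parser.add_argument("--phrase_timeout", type=float, default=1.0)
    parser.add_argument("--translate", action="store_true")
    parser.add_argument("--transcribe", action="store_true")
    parser.add_argument("--language")
    parser.add_argument("--target_language")
    parser.add_argument("--portnumber", type=int)  # None means: no web server
    return parser


def parse(argv=None) -> argparse.Namespace:
    parser = build_parser()
    args = parser.parse_args(argv)
    # The table says --transcribe needs a target language; error() exits with status 2.
    if args.transcribe and args.target_language is None:
        parser.error("--transcribe requires --target_language")
    return args
```

For example, parse(["--ram", "12GB", "--translate", "--language", "ja"]) yields ram="12gb" with every other flag at its default, while "--ram 8gb" is rejected because there are no in-between sizes.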

- When crafting your command line arguments, make sure you adjust the energy threshold to your liking. The default is 100: the higher the number, the harder it is to trigger audio detection, and the lower the number, the easier it is. I recommend starting with 100 and adjusting from there; I have seen the best results with 250-500.

- When using the Discord webhook, make sure the URL is in quotes. Example:

--discord_webhook "https://discord.com/api/webhooks/1234567890/1234567890"

- An active internet connection is required for initial usage. Over time you'll no longer need an internet connection. Changing the RAM size will download certain models; once downloaded, you'll no longer need internet.

- The fine-tuned model will automatically be downloaded from OneDrive via a direct public link. In the event of failure

- When using more than one streaming option you may experience issues, because this adds more jobs to the audio queue.
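The README does not say exactly how "energy" is measured. The hypothetical sketch below assumes the common approach of comparing the RMS amplitude of a chunk of 16-bit PCM audio against the threshold, which shows why a higher --energy_threshold is harder to trigger. It is an illustration, not Synthalingua's code.

```python
import array
import math
import sys


def rms_16bit(pcm: bytes) -> float:
    """Root-mean-square amplitude of 16-bit signed little-endian PCM audio."""
    samples = array.array("h")
    samples.frombytes(pcm)
    if sys.byteorder == "big":
        samples.byteswap()  # input is assumed to be little-endian
    if len(samples) == 0:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def passes_energy_threshold(pcm: bytes, energy_threshold: int = 100) -> bool:
    """True if the chunk is loud enough to count as audio (higher threshold = harder)."""
    return rms_16bit(pcm) > energy_threshold
```

With this model, a chunk whose RMS is 400 passes at a threshold of 300 but not at 500, which is why raising the value into the 250-500 range filters out more background noise.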

With the --ignorelist flag you can now load a list of phrases or words to ignore in the API output and subtitle window. This list is already filled with common phrases the AI will think it heard. You can adjust this list as you please or add more words or phrases to it.
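The file format and matching rule are not spelled out here, so the following is a hypothetical sketch assuming one phrase per line and a case-insensitive match against the whole output line. The real tool may match differently, for example on substrings.

```python
from pathlib import Path


def load_ignore_list(path):
    """Read one phrase per line (blank lines skipped), normalized for case-insensitive matching."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    return {line.strip().casefold() for line in lines if line.strip()}


def filter_output(text, ignored):
    # Assumed rule: suppress a line only if the whole trimmed line is on the list.
    return None if text.strip().casefold() in ignored else text
```

A whole-line match keeps real sentences that merely contain an ignored word, at the cost of missing hallucinated phrases that arrive with extra text around them.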

Some streams may require cookies. You'll need to save the cookies in Netscape format as a .txt file in the cookies folder. If the folder doesn't exist, create it.

You can save cookies using https://cookie-editor.com/ or any other cookie editor, but they must be in Netscape format.

Example usage: --cookies twitchacc1. DO NOT include the .txt file extension.

Whatever you named the text file in the cookies folder, use that name as the argument.
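Python's standard library can read the Netscape cookies.txt format through http.cookiejar.MozillaCookieJar, so you can check an exported file before passing it to --cookies. The helper names below are hypothetical and not part of the tool; the cookies/ folder layout follows the description above.

```python
from http.cookiejar import MozillaCookieJar
from pathlib import Path


def cookie_file_for(name, folder="cookies"):
    """Map a --cookies value (given without extension) to cookies/<name>.txt."""
    if name.lower().endswith(".txt"):
        raise ValueError("pass the name without the .txt extension")
    return Path(folder) / f"{name}.txt"


def load_netscape_cookies(path):
    # MozillaCookieJar parses the Netscape format and raises
    # http.cookiejar.LoadError if the header line is not a Netscape cookie header.
    jar = MozillaCookieJar(str(path))
    jar.load(ignore_discard=True, ignore_expires=True)
    return jar
```

For example, load_netscape_cookies(cookie_file_for("twitchacc1")) either returns the parsed cookies or fails loudly if the export was saved in another format, such as JSON.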

With the flag --portnumber 4000, you can use the query parameters ?showoriginal, ?showtranslation, and ?showtranscription to show specific elements, and you can mix them to show several elements at once. If no query parameters are specified, or any other query parameter is used, all elements are shown by default. You can use a port number other than 4000 if you want.

For example:

- http://localhost:4000?showoriginal will show the original detected text.

- http://localhost:4000?showtranslation will show the translated text.

- http://localhost:4000?showtranscription will show the transcribed text.

- http://localhost:4000/?showoriginal&showtranscription will show the original and transcribed text.

- http://localhost:4000 or http://localhost:4000?otherparam=value will show all elements by default.
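The selection rule in these examples can be expressed with urllib.parse from the standard library. This is a hypothetical illustration of the documented behavior, not the web server's code; how the real page treats a mix of known and unknown parameters is an assumption here (known ones win).

```python
from urllib.parse import parse_qs, urlsplit

# Query parameter -> page element it reveals.
ELEMENTS = {
    "showoriginal": "original",
    "showtranslation": "translation",
    "showtranscription": "transcription",
}


def visible_elements(url):
    """Return the set of elements the page would show for this URL."""
    # keep_blank_values=True keeps bare flags like "?showoriginal" (no "=value").
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    selected = {ELEMENTS[name] for name in params if name in ELEMENTS}
    # No recognized parameter (or none at all): show everything.
    return selected or set(ELEMENTS.values())
```

For instance, visible_elements("http://localhost:4000/?showoriginal&showtranscription") gives {"original", "transcription"}, and a bare http://localhost:4000 gives all three.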

Please note: make sure you edit the livetranslation.bat/livetranslation.bash file to change the settings. Otherwise, it will use the default settings.

This will create captions with the 12gb option and save them to Downloads.

PLEASE NOTE: CAPTIONS WILL ONLY BE IN ENGLISH (MODEL LIMITATION), THOUGH YOU CAN ALWAYS USE OTHER PROGRAMS TO TRANSLATE THEM INTO OTHER LANGUAGES.

python transcribe_audio.py --ram 12gb --makecaptions --file_input="C:\Users\username\Downloads\430796208_935901281333537_8407224487814569343_n.mp4" --file_output="C:\Users\username\Downloads" --file_output_name="430796208_935901281333537_8407224487814569343_n" --language Japanese --device cuda
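The caption file format isn't stated here. Assuming SubRip (.srt), which Whisper-style (start, end, text) segments map onto directly, the conversion looks roughly like this hypothetical sketch (not the tool's implementation):

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """segments: iterable of (start_seconds, end_seconds, text) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each cue: sequence number, time range, text, then a blank line between cues.
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n")
    return "\n".join(blocks)
```

For example, srt_timestamp(3661.5) returns "01:01:01,500".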

You have a 12GB GPU, and you want to stream the audio from the live stream https://www.twitch.tv/somestreamerhere and translate it to English. You can run the following command:

python transcribe_audio.py --ram 12gb --stream_translate --stream_language Japanese --stream https://www.twitch.tv/somestreamerhere

Stream sources from YouTube and Twitch are supported. You can also use any other stream source that supports HLS/m3u8.
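Any HLS/m3u8 source can work because an HLS playlist is a plain-text file listing media URIs (RFC 8216): lines starting with "#" are tags or comments, and the other non-blank lines are URIs, possibly relative to the playlist. The hypothetical sketch below only shows how such a list is read; it is not how Synthalingua resolves Twitch or YouTube pages.

```python
from urllib.parse import urljoin


def media_segments(playlist_text: str, playlist_url: str):
    """Return absolute URIs listed in an HLS (m3u8) playlist."""
    lines = [line.strip() for line in playlist_text.splitlines()]
    if not lines or lines[0] != "#EXTM3U":
        raise ValueError("not an HLS playlist (missing #EXTM3U header)")
    # Tags/comments start with '#'; remaining non-blank lines are URIs,
    # resolved against the playlist's own URL.
    return [urljoin(playlist_url, line) for line in lines if line and not line.startswith("#")]
```

In a master playlist, the URIs point to variant playlists rather than audio segments, so a client would fetch one of those and read it the same way.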

You have a GPU with 6GB of memory and want to use the Japanese model, translate the transcription to English, send the transcription to a Discord channel, and set the energy threshold to 300. You can run the following command:

python transcribe_audio.py --ram 6gb --translate --language ja --discord_webhook "https://discord.com/api/webhooks/1234567890/1234567890" --energy_threshold 300
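A Discord webhook accepts an HTTP POST with a JSON body whose "content" field holds the message (up to 2000 characters). The hypothetical sketch below shows that request with the standard library; it is not Synthalingua's code, and the User-Agent value is made up (Discord expects clients to send one).

```python
import json
import urllib.request


def build_webhook_request(webhook_url: str, text: str) -> urllib.request.Request:
    """Build a POST that sends `text` as a plain message to a Discord webhook."""
    # Discord caps message content at 2000 characters.
    payload = json.dumps({"content": text[:2000]}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={
            "Content-Type": "application/json",
            "User-Agent": "synthalingua-webhook-sketch/0.1",  # hypothetical value
        },
        method="POST",
    )


def send(webhook_url: str, text: str) -> int:
    """Send the message; Discord normally answers 204 No Content on success."""
    with urllib.request.urlopen(build_webhook_request(webhook_url, text), timeout=10) as resp:
        return resp.status
```

The quotes around the URL in the command line matter for the shell, not for Discord: they keep the URL intact as a single argument.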

When choosing the RAM size, you can only choose 1gb, 2gb, 4gb, 6gb, or 12gb. There are no in-between values.
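Because there are no in-between sizes, the examples here round down to the largest listed option that fits your GPU memory (a 6GB GPU uses --ram 6gb, a 12GB GPU uses --ram 12gb). A tiny hypothetical helper makes that rule explicit; flags such as --use_finetune need extra VRAM/RAM, so leave some headroom.

```python
RAM_OPTIONS_GB = [1, 2, 4, 6, 12]  # the only --ram values this README lists


def pick_ram_option(available_gb: float) -> str:
    """Return the largest --ram value that does not exceed the available memory."""
    fitting = [size for size in RAM_OPTIONS_GB if size <= available_gb]
    if not fitting:
        raise ValueError("less memory than the smallest option (1gb)")
    return f"{max(fitting)}gb"
```

For example, an 8GB card maps to "6gb", since 12gb would not fit.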

You have a 12GB GPU and want to translate from English to Spanish. You can run the following command:

python transcribe_audio.py --ram 12gb --transcribe --target_language Spanish --language en

Let's say you have multiple audio devices and want to use one that is not the default. You can run the following command:

python transcribe_audio.py --list_microphones

This command will list all audio devices and their indexes. You can then use the index to set the default audio device. For example, if you want to use the second audio device, you can run the following command:

python transcribe_audio.py --set_microphone "Realtek Audio (2- High Definiti" to set the device to listen to. Please note the quotes around the device name; they are required to prevent errors. Some names may be cut off, so copy exactly what is inside the quotes of the listed devices.

For example, let's say I have these devices:

Microphone with name "Microsoft Sound Mapper - Input" found, the device index is 1

Microphone with name "VoiceMeeter VAIO3 Output (VB-Au" found, the device index is 2

Microphone with name "Headset (B01)" found, the device index is 3

Microphone with name "Microphone (Realtek USB2.0 Audi" found, the device index is 4

Microphone with name "Microphone (NVIDIA Broadcast)" found, the device index is 5

I would run python transcribe_audio.py --set_microphone "Microphone (Realtek USB2.0 Audi" to set the device to listen to.

-or-

I would run python transcribe_audio.py --set_microphone 4 to set the device to listen to.
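The two forms above are equivalent. The hypothetical sketch below shows one way a --set_microphone value could be resolved against the listed devices, and why a truncated name has to be copied exactly: only an exact match counts. It is not the tool's implementation.

```python
def resolve_microphone(choice: str, devices: dict[int, str]) -> int:
    """Return the device index for a --set_microphone value (an index or an exact name)."""
    if choice.isdigit():
        index = int(choice)
        if index not in devices:
            raise ValueError(f"no device with index {index}")
        return index
    for index, name in devices.items():
        if name == choice:  # listed names may be truncated; they must match exactly
            return index
    raise ValueError(f"no device named {choice!r}")
```

With the devices listed above, both "4" and "Microphone (Realtek USB2.0 Audi" resolve to index 4, while the untruncated "Microphone (Realtek USB2.0 Audio)" would not match.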

If you encounter any issues with the tool, here are some common problems and their solutions:

- Python is not recognized as an internal or external command, operable program or batch file.

- Make sure you have Python installed and added to your PATH.

- If you recently installed Python, try restarting your computer to refresh the PATH environment variable.

- Check that you installed the correct version of Python required by the application. Some applications may require a specific version of Python.

- If you are still having issues, try running the command prompt as an administrator and running the installation again. However, only do this as a last resort and with caution, as running scripts as an administrator can potentially cause issues with the system.

- I get an error saying "No module named 'transformers'".

- Re-run the setup.bat file.

- If issues persist, make sure you have Python installed and added to your PATH.

- Make sure you have the transformers module installed by running pip install transformers.

- If you have multiple versions of Python installed, make sure you are installing the module for the correct version by specifying the Python version when running the command, e.g. python -m pip install transformers.

- If you are still having issues, create a new issue on the repository and the developer may be able to help you fix the issue.

- Git is not recognized as an internal or external command, operable program or batch file.

- Make sure you have Git installed and added to your PATH.

- If you recently installed Git, try restarting your computer to refresh the PATH environment variable.

- If you are still having issues, try running the command prompt as an administrator and running the installation again. However, only do this as a last resort and with caution, as running scripts as an administrator can potentially cause issues with the system.

- CUDA is not recognized or available.

- Make sure you have CUDA installed. You can get it from here.

- CUDA is only for NVIDIA GPUs. If you have an AMD GPU on Windows, you have to use the CPU device (--device cpu); ROCm support is Linux-only.

- [WinError 2] The system cannot find the file specified Try this fix: https://github.com/cyberofficial/Real-Time-Translation/issues/2#issuecomment-1491098222

- Translator can't pick up stream sound

- Check out this discussion thread for a possible fix: #12 Discussion

- Error: Audio source must be entered before adjusting.

- You need to make sure you have a microphone set up. See issue #63 for additional information.

- Error: "could not find a version that satisfies the requirement torch" (see Issue #82)

- Please make sure you have 64-bit Python installed. If you have 32-bit installed, you will need to uninstall it and install the 64-bit version. You can grab it here for Windows. Windows direct: https://www.python.org/ftp/python/3.10.9/python-3.10.9-amd64.exe Main: https://www.python.org/downloads/release/python-3109/

- Error generating captions: please make sure the file name uses English letters. If you still get an error, please make a bug report.
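Several of the fixes above come down to "is this program on PATH, or importable from this Python?". The hypothetical script below (not shipped with the tool) checks those in one go using only the standard library, plus torch.cuda.is_available() if PyTorch is installed. Run it with the same python you use for transcribe_audio.py, for the reason given in the multiple-Python-versions tip.

```python
import importlib.util
import shutil


def check_environment():
    """Collect quick answers to the troubleshooting questions above."""
    report = {
        # shutil.which searches PATH the way the shell does.
        "git on PATH": shutil.which("git") is not None,
        "ffmpeg on PATH": shutil.which("ffmpeg") is not None,
        # find_spec checks importability without actually importing the package.
        "transformers installed": importlib.util.find_spec("transformers") is not None,
        "torch installed": importlib.util.find_spec("torch") is not None,
        "CUDA available": False,
    }
    if report["torch installed"]:
        import torch  # imported lazily so the script still runs without PyTorch

        report["CUDA available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for check, ok in check_environment().items():
        print(f"{'OK ' if ok else 'NO '} {check}")
```

A "NO" next to "CUDA available" with torch installed usually points at the CUDA or GPU-driver items above rather than at Synthalingua itself.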

- Models used are from OpenAI Whisper - Whisper

- Models were fine-tuned using this documentation

Command line arguments used. --ram 6gb --record_timeout 2 --language ja --energy_threshold 500

Command line arguments used. --ram 12gb --record_timeout 5 --language id --energy_threshold 500

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Synthalingua

Similar Open Source Tools

Synthalingua

Synthalingua is an advanced, self-hosted tool that leverages artificial intelligence to translate audio from various languages into English in near real time. It offers multilingual outputs and utilizes GPU and CPU resources for optimized performance. Although currently in beta, it is actively developed with regular updates to enhance capabilities. The tool is not intended for professional use but for fun, language learning, and enjoying content at a reasonable pace. Users must ensure speakers speak clearly for accurate translations. It is not a replacement for human translators and users assume their own risk and liability when using the tool.

slide-deck-ai

SlideDeck AI is a tool that leverages Generative Artificial Intelligence to co-create slide decks on any topic. Users can describe their topic and let SlideDeck AI generate a PowerPoint slide deck, streamlining the presentation creation process. The tool offers an iterative workflow with a conversational interface for creating and improving presentations. It uses Mistral Nemo Instruct to generate initial slide content, searches and downloads images based on keywords, and allows users to refine content through additional instructions. SlideDeck AI provides pre-defined presentation templates and a history of instructions for users to enhance their presentations.

llamafile

llamafile is a tool that enables users to distribute and run Large Language Models (LLMs) with a single file. It combines llama.cpp with Cosmopolitan Libc to create a framework that simplifies the complexity of LLMs into a single-file executable called a 'llamafile'. Users can run these executable files locally on most computers without the need for installation, making open LLMs more accessible to developers and end users. llamafile also provides example llamafiles for various LLM models, allowing users to try out different LLMs locally. The tool supports multiple CPU microarchitectures, CPU architectures, and operating systems, making it versatile and easy to use.

katrain

KaTrain is a tool designed for analyzing games and playing go with AI feedback from KataGo. Users can review their games to find costly moves, play against AI with immediate feedback, play against weakened AI versions, and generate focused SGF reviews. The tool provides various features such as previews, tutorials, installation instructions, and configuration options for KataGo. Users can play against AI, receive instant feedback on moves, explore variations, and request in-depth analysis. KaTrain also supports distributed training for contributing to KataGo's strength and training bigger models. The tool offers themes customization, FAQ section, and opportunities for support and contribution through GitHub issues and Discord community.

generative_ai_with_langchain

Generative AI with LangChain is a code repository for building large language model (LLM) apps with Python, ChatGPT, and other LLMs. The repository provides code examples, instructions, and configurations for creating generative AI applications using the LangChain framework. It covers topics such as setting up the development environment, installing dependencies with Conda or Pip, using Docker for environment setup, and setting API keys securely. The repository also emphasizes stability, code updates, and user engagement through issue reporting and feedback. It aims to empower users to leverage generative AI technologies for tasks like building chatbots, question-answering systems, software development aids, and data analysis applications.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

yuna-ai

Yuna AI is a unique AI companion designed to form a genuine connection with users. It runs exclusively on the local machine, ensuring privacy and security. The project offers features like text generation, language translation, creative content writing, roleplaying, and informal question answering. The repository provides comprehensive setup and usage guides for Yuna AI, along with additional resources and tools to enhance the user experience.

Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

LLaMa2lang

LLaMa2lang is a repository containing convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language that isn't English. The repository aims to improve the performance of LLaMa3 for non-English languages by combining fine-tuning with RAG. Users can translate datasets, extract threads, turn threads into prompts, and finetune models using QLoRA and PEFT. Additionally, the repository supports translation models like OPUS, M2M, MADLAD, and base datasets like OASST1 and OASST2. The process involves loading datasets, translating them, combining checkpoints, and running inference using the newly trained model. The repository also provides benchmarking scripts to choose the right translation model for a target language.

Pandrator

Pandrator is a GUI tool for generating audiobooks and dubbing using voice cloning and AI. It transforms text, PDF, EPUB, and SRT files into spoken audio in multiple languages. It leverages XTTS, Silero, and VoiceCraft models for text-to-speech conversion and voice cloning, with additional features like LLM-based text preprocessing and NISQA for audio quality evaluation. The tool aims to be user-friendly with a one-click installer and a graphical interface.

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

obsidian-chat-cbt-plugin

ChatCBT is an AI-powered journaling assistant for Obsidian, inspired by cognitive behavioral therapy (CBT). It helps users reframe negative thoughts and rewire reactions to distressful situations. The tool provides kind and objective responses to uncover negative thinking patterns, store conversations privately, and summarize reframed thoughts. Users can choose between a cloud-based AI service (OpenAI) or a local and private service (Ollama) for handling data. ChatCBT is not a replacement for therapy but serves as a journaling assistant to help users gain perspective on their problems.

HackBot

HackBot is an AI-powered cybersecurity chatbot designed to provide accurate answers to cybersecurity-related queries, conduct code analysis, and scan analysis. It utilizes the Meta-LLama2 AI model through the 'LlamaCpp' library to respond coherently. The chatbot offers features like local AI/Runpod deployment support, cybersecurity chat assistance, interactive interface, clear output presentation, static code analysis, and vulnerability analysis. Users can interact with HackBot through a command-line interface and utilize it for various cybersecurity tasks.

vector_companion

Vector Companion is an AI tool designed to act as a virtual companion on your computer. It consists of two personalities, Axiom and Axis, who can engage in conversations based on what is happening on the screen. The tool can transcribe audio output and user microphone input, take screenshots, and read text via OCR to create lifelike interactions. It requires specific prerequisites to run on Windows and uses VB Cable to capture audio. Users can interact with Axiom and Axis by running the main script after installation and configuration.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

For similar tasks

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

WeeaBlind

Weeablind is a program that uses modern AI speech synthesis, diarization, language identification, and voice cloning to dub multilingual media and anime. It aims to offer a pleasant alternative for people facing accessibility hurdles such as blindness, dyslexia, or learning disabilities, or for anyone who simply doesn't enjoy reading subtitles. The program relies on technologies such as ffmpeg, pydub, Coqui TTS, speechbrain, and pyannote.audio to analyze and synthesize speech that stays in sync with the source video file. Users can dub every subtitle in the video, set start and end times, dub only foreign-language content, or do full multi-speaker dubbing with speaking-rate and volume matching.

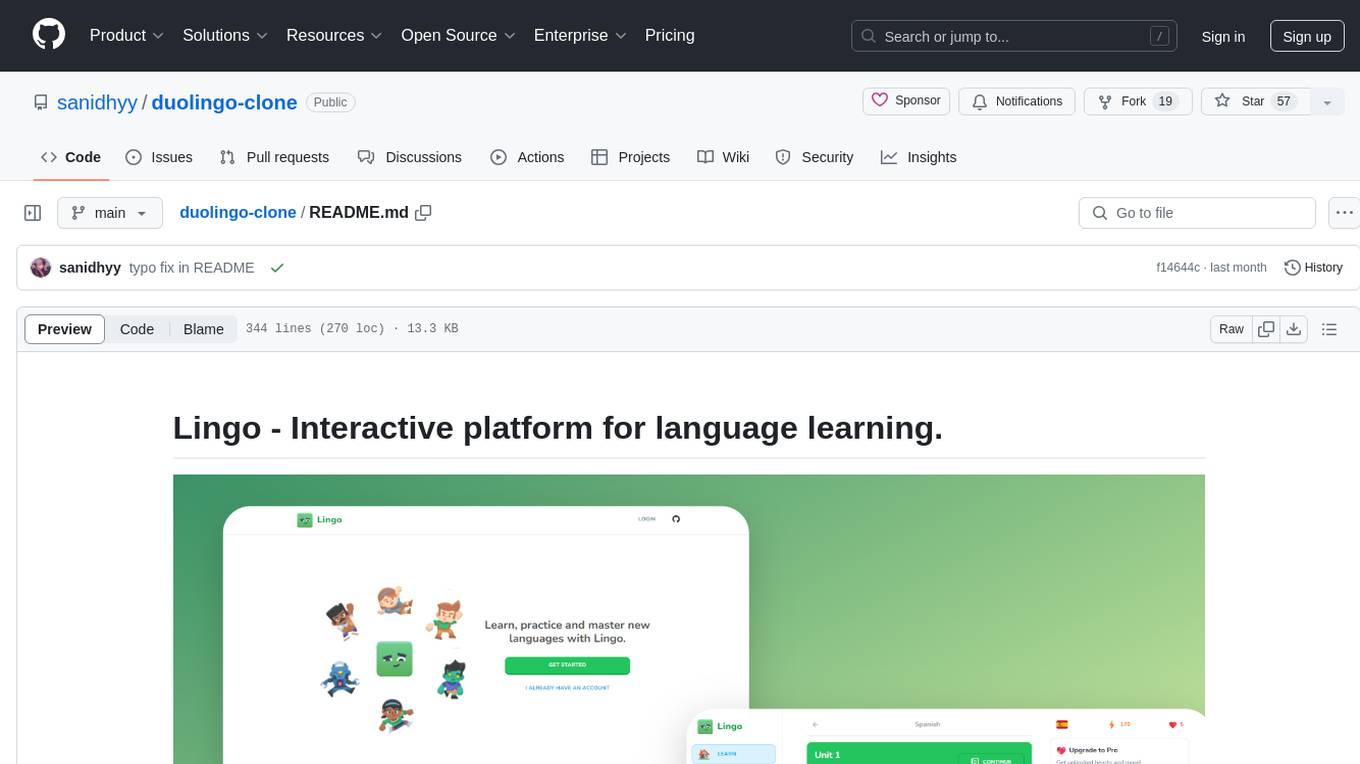

duolingo-clone

Lingo is an interactive language-learning platform with a modern UI/UX. It offers courses, quests, and a shop for users to engage with. The tech stack includes React, Next.js, TypeScript, Tailwind CSS, Vercel, and PostgreSQL. Users can contribute to the project by submitting changes via pull requests. The platform uses resources from CodeWithAntonio, Kenney Assets, Freesound, Elevenlabs AI, and Flagpack. Key dependencies include @clerk/nextjs, @neondatabase/serverless, @radix-ui/react-avatar, and more. Users can follow the project creator on GitHub and Twitter and subscribe to their YouTube channel for updates. To learn more about Next.js, users can refer to the Next.js documentation and interactive tutorial.

dinopal

DinoPal is an AI voice assistant residing in the Mac menu bar, offering real-time voice and video chat, screen sharing, online search, and multilingual support. It provides various AI assistants with unique strengths and characteristics to meet different conversational needs. Users can easily install DinoPal and access different communication modes, with a call time limit of 30 minutes. User feedback can be shared in the Discord community. DinoPal is powered by Google Gemini & Pipecat.

AugmentOS

AugmentOS is an open-source operating system for smart glasses that gives users access to various apps and AI agents. It makes it easy for developers to build and run apps on smart glasses, and it lets users run multiple apps simultaneously and interact with AI assistants, translation services, live captions, and more. The platform also supports language learning, ADHD tools, and live language translation. AugmentOS is designed to enhance the smart-glasses experience by providing seamless, proactive interaction with AI-first wearable apps.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, and evaluation through production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates metrics such as G-Eval, hallucination, answer relevancy, and RAGAS, and runs the evaluation locally on your machine. It provides a wide range of ready-to-use evaluation metrics, allows you to create custom metrics, integrates with any CI/CD environment, and lets you benchmark LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drift, and move from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, so tools built for OpenAI/ChatGPT work seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI uses Chainguard's apko to harden the base Python images, ensuring that the other components of the stack use the latest supported Python versions. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs. It covers principles for different dimensions of trustworthiness, an established benchmark, an evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs on TrustLLM, which comprises over 30 datasets. The document explains how to use the trustllm Python package to assess your LLM's trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA graphics cards. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. Other features include replying to bilibili live-stream bullet comments and greeting viewers as they enter the stream; speech synthesis with Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through VTube Studio; drawing with stable-diffusion-webui and outputting the images to the OBS live-stream scene; NSFW screening of generated images with public-NSFW-y-distinguish; search and image search through DuckDuckGo (requires a proxy or VPN) and image search through Baidu (no proxy needed); an AI reply chat box [html plug-in] and a playlist [html plug-in]; AI singing with Auto-Convert-Music; dancing, including dancing that starts automatically when singing begins; expression video playback; head-pat and gift-reaction actions; automatically looping sway animations during chat and singing; multi-scene switching, background music switching, and automatic day/night scene switching; and an option to enable singing and drawing with the AI automatically judging the content.