prime

Official CLI and Python SDK for Prime Intellect - access GPU compute, remote sandboxes, RL environments, and distributed training infrastructure for AI development at scale.

Stars: 151

Prime is a framework for efficient, globally distributed training of AI models over the internet. It includes features such as fault-tolerant training with ElasticDeviceMesh, asynchronous distributed checkpointing, live checkpoint recovery, custom Int8 All-Reduce Kernel, maximizing bandwidth utilization, PyTorch FSDP2/DTensor ZeRO-3 implementation, and CPU off-loading. The framework aims to optimize communication, checkpointing, and bandwidth utilization for large-scale AI model training.

README:

Command line interface and SDKs for managing Prime Intellect GPU resources, sandboxes, and environments.

# Install uv (if not already installed)

curl -LsSf https://astral.sh/uv/install.sh | sh

# Install prime

uv tool install prime

# Authenticate

prime login

# Browse verified environments

prime env list

# List available GPU resources

prime availability list- Environments - Access hundreds of verified environments on our community hub

- Evaluations - Push and manage evaluation results

- GPU Resource Management - Query and filter available GPU resources

- Pod Management - Create, monitor, and terminate compute pods

- Sandboxes - Easily run AI-generated code in the cloud

- SSH Access - Direct SSH access to running pods

- Team Support - Manage resources across team environments

First, install uv if you haven't already:

curl -LsSf https://astral.sh/uv/install.sh | shThen install prime:

uv tool install primepip install primeIf you only need the sandboxes SDK (lightweight, ~50KB):

uv pip install prime-sandboxesSee prime-sandboxes documentation for SDK usage.

# Interactive mode (recommended - hides input)

prime config set-api-key

# Non-interactive mode (for automation)

prime config set-api-key YOUR_API_KEY

# Environment variable (most secure for scripts)

export PRIME_API_KEY="your-api-key-here"# Configure SSH key for pod access

prime config set-ssh-key-path

# View current configuration

prime config viewSecurity Note: When using non-interactive mode, the API key may be visible in your shell history. For enhanced security, use interactive mode or environment variables.

Access hundreds of verified environments on our community hub with deep integrations with sandboxes, training, and evaluation stack.

# Browse available environments

prime env list

# View environment details

prime env info <environment-name>

# Install an environment locally

prime env install <environment-name>

# Create and push your own environment

prime env init my-environment

prime env push my-environment# List all available GPUs

prime availability list

# Filter by GPU type

prime availability list --gpu-type H100_80GB

# Show available GPU types

prime availability gpu-types# List your pods

prime pods list

# Create a pod

prime pods create

prime pods create --id <ID> # With specific GPU config

prime pods create --name my-pod # With custom name

# Monitor and manage pods

prime pods status <pod-id>

prime pods terminate <pod-id>

prime pods ssh <pod-id>Push and manage evaluation results to the Environments Hub.

# Auto-discover and push evaluations from current directory

prime eval push

# Push specific eval directory (verifiers format)

prime eval push outputs/evals/gsm8k--gpt-4/abc123

# List all evaluations

prime eval list

# Get evaluation details

prime eval get <eval-id>

# View evaluation samples

prime eval samples <eval-id># List teams

prime teams list

# Set team context

prime config set-team-id# Clone the repository

git clone https://github.com/PrimeIntellect-ai/prime-cli

cd prime-cli

# Set up workspace (installs all packages in editable mode)

uv sync

# Install CLI globally in editable mode

uv tool install -e packages/prime

# Now you can use the CLI directly

prime --help

# Run tests

uv run pytest packages/prime/tests

uv run pytest packages/prime-sandboxes/testsAll packages (prime-core, prime-sandboxes, prime) are installed in editable mode. Changes to code are immediately reflected.

This monorepo contains two independently versioned packages: prime (CLI + full SDK) and prime-sandboxes (lightweight SDK).

Versions are single-sourced from each package's __init__.py file:

-

prime:

packages/prime/src/prime_cli/__init__.py -

prime-sandboxes:

packages/prime-sandboxes/src/prime_sandboxes/__init__.py

- Update the

__version__string in the appropriate__init__.pyfile - Commit and push the change

Tagging and publishing to PyPI is handled automatically by CI.

When releasing prime, consider whether prime-sandboxes should also be bumped, as prime depends on prime-sandboxes. The packages can be released independently or together depending on what changed.

This project is licensed under the MIT License - see the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for prime

Similar Open Source Tools

prime

Prime is a framework for efficient, globally distributed training of AI models over the internet. It includes features such as fault-tolerant training with ElasticDeviceMesh, asynchronous distributed checkpointing, live checkpoint recovery, custom Int8 All-Reduce Kernel, maximizing bandwidth utilization, PyTorch FSDP2/DTensor ZeRO-3 implementation, and CPU off-loading. The framework aims to optimize communication, checkpointing, and bandwidth utilization for large-scale AI model training.

Shellsage

Shell Sage is an intelligent terminal companion and AI-powered terminal assistant that enhances the terminal experience with features like local and cloud AI support, context-aware error diagnosis, natural language to command translation, and safe command execution workflows. It offers interactive workflows, supports various API providers, and allows for custom model selection. Users can configure the tool for local or API mode, select specific models, and switch between modes easily. Currently in alpha development, Shell Sage has known limitations like limited Windows support and occasional false positives in error detection. The roadmap includes improvements like better context awareness, Windows PowerShell integration, Tmux integration, and CI/CD error pattern database.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

mcpm.sh

MCPM is an open source CLI tool for managing MCP servers, providing a simplified global configuration approach to install servers once, organize them with profiles, and integrate them into any MCP client. Features include server discovery, direct execution, sharing capabilities, and client integration tools. It eliminates the complexity of v1's target-based system in favor of a clean global workspace model. The tool is designed to be AI agent friendly with comprehensive automation support and a rich CLI interface.

qwen-code

Qwen Code is an open-source AI agent optimized for Qwen3-Coder, designed to help users understand large codebases, automate tedious work, and expedite the shipping process. It offers an agentic workflow with rich built-in tools, a terminal-first approach with optional IDE integration, and supports both OpenAI-compatible API and Qwen OAuth authentication methods. Users can interact with Qwen Code in interactive mode, headless mode, IDE integration, and through a TypeScript SDK. The tool can be configured via settings.json, environment variables, and CLI flags, and offers benchmark results for performance evaluation. Qwen Code is part of an ecosystem that includes AionUi and Gemini CLI Desktop for graphical interfaces, and troubleshooting guides are available for issue resolution.

comp

Comp AI is an open-source compliance automation platform designed to assist companies in achieving compliance with standards like SOC 2, ISO 27001, and GDPR. It transforms compliance into an engineering problem solved through code, automating evidence collection, policy management, and control implementation while maintaining data and infrastructure control.

backend.ai

Backend.AI is a streamlined, container-based computing cluster platform that hosts popular computing/ML frameworks and diverse programming languages, with pluggable heterogeneous accelerator support including CUDA GPU, ROCm GPU, TPU, IPU and other NPUs. It allocates and isolates the underlying computing resources for multi-tenant computation sessions on-demand or in batches with customizable job schedulers with its own orchestrator. All its functions are exposed as REST/GraphQL/WebSocket APIs.

mlir-aie

This repository contains an MLIR-based toolchain for AI Engine-enabled devices, such as AMD Ryzen™ AI and Versal™. This repository can be used to generate low-level configurations for the AI Engine portion of these devices. AI Engines are organized as a spatial array of tiles, where each tile contains AI Engine cores and/or memories. The spatial array is connected by stream switches that can be configured to route data between AI Engine tiles scheduled by their programmable Data Movement Accelerators (DMAs). This repository contains MLIR representations, with multiple levels of abstraction, to target AI Engine devices. This enables compilers and developers to program AI Engine cores, as well as describe data movements and array connectivity. A Python API is made available as a convenient interface for generating MLIR design descriptions. Backend code generation is also included, targeting the aie-rt library. This toolchain uses the AI Engine compiler tool which is part of the AMD Vitis™ software installation: these tools require a free license for use from the Product Licensing Site.

mcpd

mcpd is a tool developed by Mozilla AI to declaratively manage Model Context Protocol (MCP) servers, enabling consistent interface for defining and running tools across different environments. It bridges the gap between local development and enterprise deployment by providing secure secrets management, declarative configuration, and seamless environment promotion. mcpd simplifies the developer experience by offering zero-config tool setup, language-agnostic tooling, version-controlled configuration files, enterprise-ready secrets management, and smooth transition from local to production environments.

AirCasting

AirCasting is a platform for gathering, visualizing, and sharing environmental data. It aims to provide a central hub for environmental data, making it easier for people to access and use this information to make informed decisions about their environment.

codemie-code

Unified AI Coding Assistant CLI for managing multiple AI agents like Claude Code, Google Gemini, OpenCode, and custom AI agents. Supports OpenAI, Azure OpenAI, AWS Bedrock, LiteLLM, Ollama, and Enterprise SSO. Features built-in LangGraph agent with file operations, command execution, and planning tools. Cross-platform support for Windows, Linux, and macOS. Ideal for developers seeking a powerful alternative to GitHub Copilot or Cursor.

KsanaLLM

KsanaLLM is a high-performance engine for LLM inference and serving. It utilizes optimized CUDA kernels for high performance, efficient memory management, and detailed optimization for dynamic batching. The tool offers flexibility with seamless integration with popular Hugging Face models, support for multiple weight formats, and high-throughput serving with various decoding algorithms. It enables multi-GPU tensor parallelism, streaming outputs, and an OpenAI-compatible API server. KsanaLLM supports NVIDIA GPUs and Huawei Ascend NPU, and seamlessly integrates with verified Hugging Face models like LLaMA, Baichuan, and Qwen. Users can create a docker container, clone the source code, compile for Nvidia or Huawei Ascend NPU, run the tool, and distribute it as a wheel package. Optional features include a model weight map JSON file for models with different weight names.

swarmauri-sdk

Swarmauri SDK is a repository containing core interfaces, standard ABCs, and standard concrete references of the SwarmaURI Framework. It provides a set of tools and functionalities for developers to work with the SwarmaURI ecosystem. The SDK aims to streamline the development process and enhance the interoperability of applications within the framework. Developers can easily integrate SwarmaURI features into their projects by leveraging the resources available in this repository.

UCAgent

UCAgent is an AI-powered automated UT verification agent for chip design. It automates chip verification workflow, supports functional and code coverage analysis, ensures consistency among documentation, code, and reports, and collaborates with mainstream Code Agents via MCP protocol. It offers three intelligent interaction modes and requires Python 3.11+, Linux/macOS OS, 4GB+ memory, and access to an AI model API. Users can clone the repository, install dependencies, configure qwen, and start verification. UCAgent supports various verification quality improvement options and basic operations through TUI shortcuts and stage color indicators. It also provides documentation build and preview using MkDocs, PDF manual build using Pandoc + XeLaTeX, and resources for further help and contribution.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

CodeRAG

CodeRAG is an AI-powered code retrieval and assistance tool that combines Retrieval-Augmented Generation (RAG) with AI to provide intelligent coding assistance. It indexes your entire codebase for contextual suggestions based on your complete project, offering real-time indexing, semantic code search, and contextual AI responses. The tool monitors your code directory, generates embeddings for Python files, stores them in a FAISS vector database, matches user queries against the code database, and sends retrieved code context to GPT models for intelligent responses. CodeRAG also features a Streamlit web interface with a chat-like experience for easy usage.

For similar tasks

prime

Prime is a framework for efficient, globally distributed training of AI models over the internet. It includes features such as fault-tolerant training with ElasticDeviceMesh, asynchronous distributed checkpointing, live checkpoint recovery, custom Int8 All-Reduce Kernel, maximizing bandwidth utilization, PyTorch FSDP2/DTensor ZeRO-3 implementation, and CPU off-loading. The framework aims to optimize communication, checkpointing, and bandwidth utilization for large-scale AI model training.

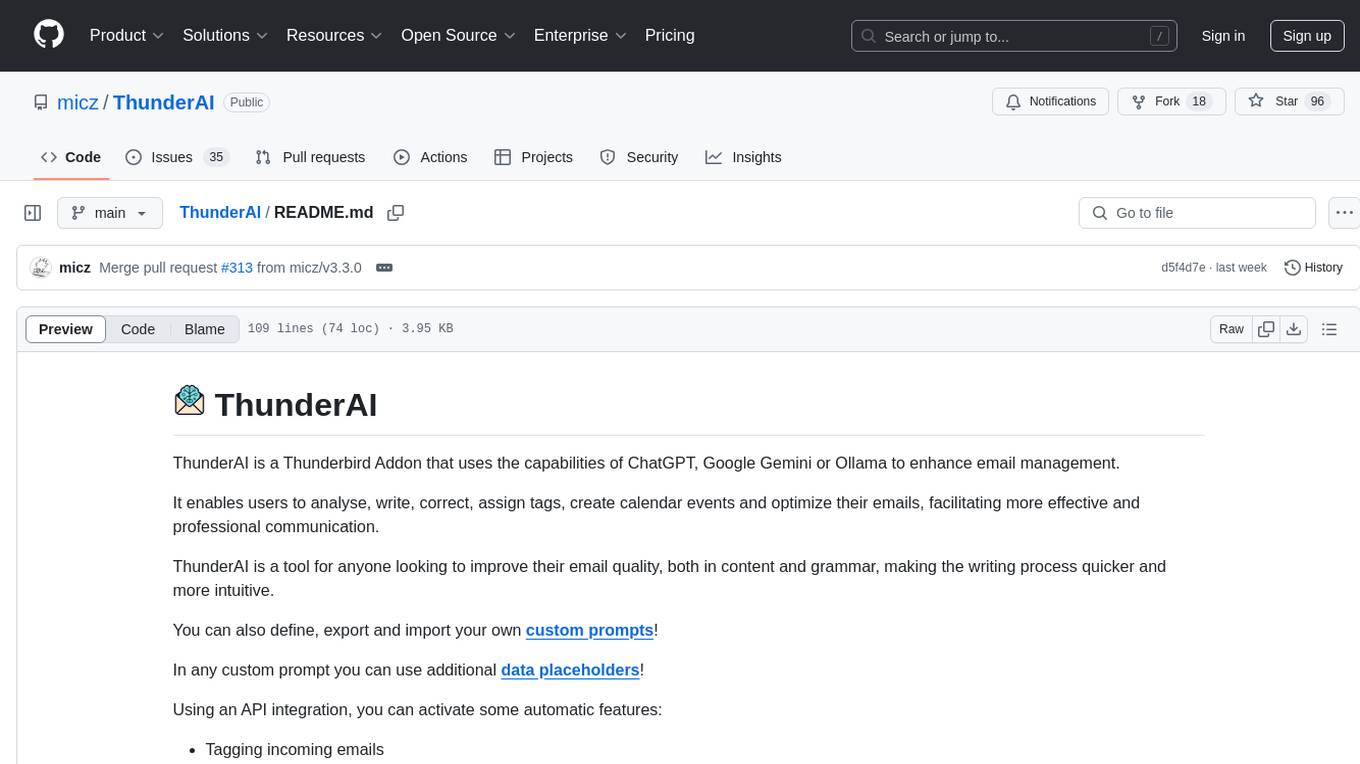

ThunderAI

ThunderAI is a Thunderbird Addon that utilizes ChatGPT, Google Gemini, or Ollama to enhance email management. It enables users to analyze, write, correct, assign tags, create calendar events, and optimize emails for more effective and professional communication. Users can define, export, and import custom prompts with data placeholders. The tool offers API integration for automatic features like tagging incoming emails and moving spam emails to the junk folder.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.