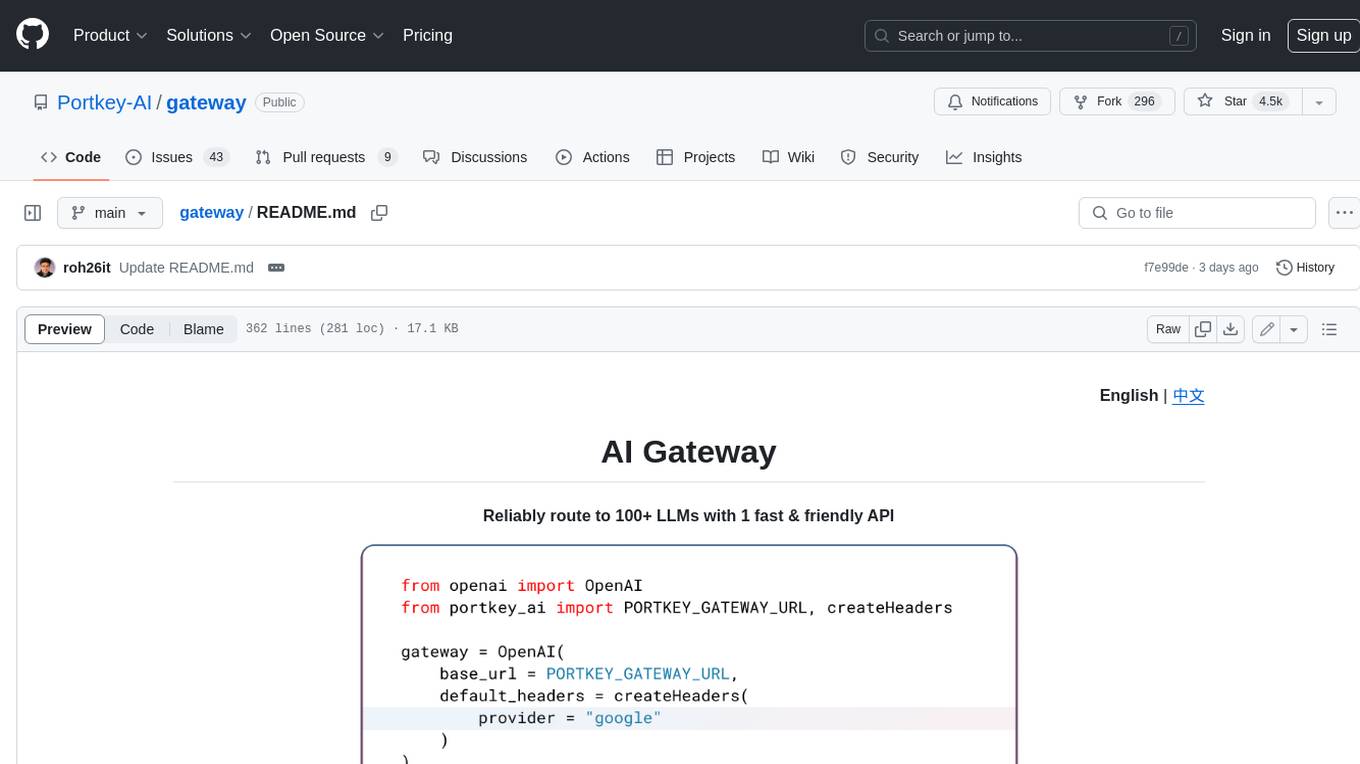

gateway

A blazing fast AI Gateway with integrated guardrails. Route to 200+ LLMs, 50+ AI Guardrails with 1 fast & friendly API.

Stars: 9553

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

README:

The AI Gateway is designed for fast, reliable & secure routing to 1600+ language, vision, audio, and image models. It is a lightweight, open-source, and enterprise-ready solution that allows you to integrate with any language model in under 2 minutes.

- [x] Blazing fast (<1ms latency) with a tiny footprint (122kb)

- [x] Battle tested, with over 10B tokens processed everyday

- [x] Enterprise-ready with enhanced security, scale, and custom deployments

- Integrate with any LLM in under 2 minutes - Quickstart

- Prevent downtimes through automatic retries and fallbacks

- Scale AI apps with load balancing and conditional routing

- Protect your AI deployments with guardrails

- Go beyond text with multi-modal capabilities

- Finally, explore agentic workflow integrations

[!TIP] Starring this repo helps more developers discover the AI Gateway 🙏🏻

# Run the gateway locally (needs Node.js and npm)

npx @portkey-ai/gatewayDeployment guides:The Gateway is running on

http://localhost:8787/v1The Gateway Console is running on

http://localhost:8787/public/

# pip install -qU portkey-ai

from portkey_ai import Portkey

# OpenAI compatible client

client = Portkey(

provider="openai", # or 'anthropic', 'bedrock', 'groq', etc

Authorization="sk-***" # the provider API key

)

# Make a request through your AI Gateway

client.chat.completions.create(

messages=[{"role": "user", "content": "What's the weather like?"}],

model="gpt-4o-mini"

)Supported Libraries:

JS

Python

REST

OpenAI SDKs

Langchain

LlamaIndex

Autogen

CrewAI

More..

On the Gateway Console (http://localhost:8787/public/) you can see all of your local logs in one place.

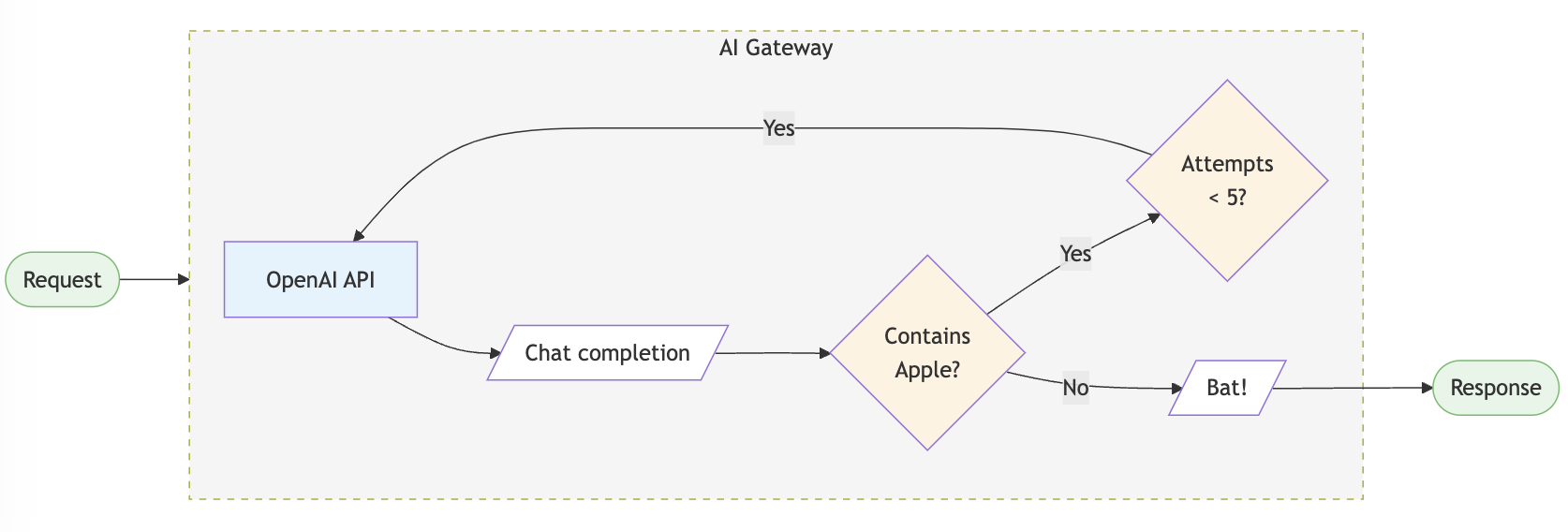

Configs in the LLM gateway allow you to create routing rules, add reliability and setup guardrails.

config = {

"retry": {"attempts": 5},

"output_guardrails": [{

"default.contains": {"operator": "none", "words": ["Apple"]},

"deny": True

}]

}

# Attach the config to the client

client = client.with_options(config=config)

client.chat.completions.create(

model="gpt-4o-mini",

messages=[{"role": "user", "content": "Reply randomly with Apple or Bat"}]

)

# This would always response with "Bat" as the guardrail denies all replies containing "Apple". The retry config would retry 5 times before giving up.You can do a lot more stuff with configs in your AI gateway. Jump to examples →

AWS

Azure

GCP

OpenShift

Kubernetes

The LLM Gateway's enterprise version offers advanced capabilities for org management, governance, security and more out of the box. View Feature Comparison →

The enterprise deployment architecture for supported platforms is available here - Enterprise Private Cloud Deployments

Join weekly community calls every Friday (8 AM PT) to kickstart your AI Gateway implementation! Happening every Friday

Minutes of Meetings published here.

Insights from analyzing 2 trillion+ tokens, across 90+ regions and 650+ teams in production. What to expect from this report:

- Trends shaping AI adoption and LLM provider growth.

- Benchmarks to optimize speed, cost and reliability.

- Strategies to scale production-grade AI systems.

- Fallbacks: Fallback to another provider or model on failed requests using the LLM gateway. You can specify the errors on which to trigger the fallback. Improves reliability of your application.

- Automatic Retries: Automatically retry failed requests up to 5 times. An exponential backoff strategy spaces out retry attempts to prevent network overload.

- Load Balancing: Distribute LLM requests across multiple API keys or AI providers with weights to ensure high availability and optimal performance.

- Request Timeouts: Manage unruly LLMs & latencies by setting up granular request timeouts, allowing automatic termination of requests that exceed a specified duration.

- Multi-modal LLM Gateway: Call vision, audio (text-to-speech & speech-to-text), and image generation models from multiple providers — all using the familiar OpenAI signature

- Realtime APIs: Call realtime APIs launched by OpenAI through the integrate websockets server.

- Guardrails: Verify your LLM inputs and outputs to adhere to your specified checks. Choose from the 40+ pre-built guardrails to ensure compliance with security and accuracy standards. You can bring your own guardrails or choose from our many partners.

- Secure Key Management: Use your own keys or generate virtual keys on the fly.

- Role-based access control: Granular access control for your users, workspaces and API keys.

- Compliance & Data Privacy: The AI gateway is SOC2, HIPAA, GDPR, and CCPA compliant.

- Smart caching: Cache responses from LLMs to reduce costs and improve latency. Supports simple and semantic* caching.

- Usage analytics: Monitor and analyze your AI and LLM usage, including request volume, latency, costs and error rates.

- Provider optimization*: Automatically switch to the most cost-effective provider based on usage patterns and pricing models.

- Agents Support: Seamlessly integrate with popular agent frameworks to build complex AI applications. The gateway seamlessly integrates with Autogen, CrewAI, LangChain, LlamaIndex, Phidata, Control Flow, and even Custom Agents.

-

Prompt Template Management*: Create, manage and version your prompt templates collaboratively through a universal prompt playground.

- Use models from Nvidia NIM with AI Gateway

- Monitor CrewAI Agents with Portkey!

- Comparing Top 10 LMSYS Models with AI Gateway.

- Create Synthetic Datasets using Nemotron

- Use the LLM Gateway with Vercel's AI SDK

- Monitor Llama Agents with Portkey's LLM Gateway

Explore Gateway integrations with 45+ providers and 8+ agent frameworks.

| Provider | Support | Stream | |

|---|---|---|---|

| OpenAI | ✅ | ✅ | |

| Azure OpenAI | ✅ | ✅ | |

| Anyscale | ✅ | ✅ | |

| Google Gemini | ✅ | ✅ | |

| Anthropic | ✅ | ✅ | |

| Cohere | ✅ | ✅ | |

| Together AI | ✅ | ✅ | |

| Perplexity | ✅ | ✅ | |

| Mistral | ✅ | ✅ | |

| Nomic | ✅ | ✅ | |

| AI21 | ✅ | ✅ | |

| Stability AI | ✅ | ✅ | |

| DeepInfra | ✅ | ✅ | |

| Ollama | ✅ | ✅ | |

| Novita AI | ✅ | ✅ |

Gateway seamlessly integrates with popular agent frameworks. Read the documentation here.

| Framework | Call 200+ LLMs | Advanced Routing | Caching | Logging & Tracing* | Observability* | Prompt Management* |

|---|---|---|---|---|---|---|

| Autogen | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| CrewAI | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| LangChain | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Phidata | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Llama Index | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Control Flow | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

| Build Your Own Agents | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |

*Available on the hosted app. For detailed documentation click here.

Make your AI app more reliable and forward compatible, while ensuring complete data security and privacy.

✅ Secure Key Management - for role-based access control and tracking

✅ Simple & Semantic Caching - to serve repeat queries faster & save costs

✅ Access Control & Inbound Rules - to control which IPs and Geos can connect to your deployments

✅ PII Redaction - to automatically remove sensitive data from your requests to prevent indavertent exposure

✅ SOC2, ISO, HIPAA, GDPR Compliances - for best security practices

✅ Professional Support - along with feature prioritization

Schedule a call to discuss enterprise deployments

The easiest way to contribute is to pick an issue with the good first issue tag 💪. Read the contribution guidelines here.

Bug Report? File here | Feature Request? File here

Join our weekly AI Engineering Hours every Friday (8 AM PT) to:

- Meet other contributors and community members

- Learn advanced Gateway features and implementation patterns

- Share your experiences and get help

- Stay updated with the latest development priorities

Join the next session → | Meeting notes

Join our growing community around the world, for help, ideas, and discussions on AI.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gateway

Similar Open Source Tools

gateway

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

langtrace

Langtrace is an open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, Vector Databases, and LLM-based Frameworks. It supports Open Telemetry Standards (OTEL), and the traces generated adhere to these standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. The SDKs for both Typescript/Javascript and Python are available, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

pr-agent

PR-Agent is a tool that helps to efficiently review and handle pull requests by providing AI feedbacks and suggestions. It supports various commands such as generating PR descriptions, providing code suggestions, answering questions about the PR, and updating the CHANGELOG.md file. PR-Agent can be used via CLI, GitHub Action, GitHub App, Docker, and supports multiple git providers and models. It emphasizes real-life practical usage, with each tool having a single GPT-4 call for quick and affordable responses. The PR Compression strategy enables effective handling of both short and long PRs, while the JSON prompting strategy allows for modular and customizable tools. PR-Agent Pro, the hosted version by CodiumAI, provides additional benefits such as full management, improved privacy, priority support, and extra features.

OpenGateLLM

OpenGateLLM is an open-source API gateway developed by the French Government, designed to serve AI models in production. It follows OpenAI standards and offers robust features like RAG integration, audio transcription, OCR, and more. With support for multiple AI backends and built-in security, OpenGateLLM provides a production-ready solution for various AI tasks.

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

eko

Eko is a lightweight and flexible command-line tool for managing environment variables in your projects. It allows you to easily set, get, and delete environment variables for different environments, making it simple to manage configurations across development, staging, and production environments. With Eko, you can streamline your workflow and ensure consistency in your application settings without the need for complex setup or configuration files.

palico-ai

Palico AI is a tech stack designed for rapid iteration of LLM applications. It allows users to preview changes instantly, improve performance through experiments, debug issues with logs and tracing, deploy applications behind a REST API, and manage applications with a UI control panel. Users have complete flexibility in building their applications with Palico, integrating with various tools and libraries. The tool enables users to swap models, prompts, and logic easily using AppConfig. It also facilitates performance improvement through experiments and provides options for deploying applications to cloud providers or using managed hosting. Contributions to the project are welcomed, with easy ways to get involved by picking issues labeled as 'good first issue'.

terminator

Terminator is an AI-powered desktop automation tool that is open source, MIT-licensed, and cross-platform. It works across all apps and browsers, inspired by GitHub Actions & Playwright. It is 100x faster than generic AI agents, with over 95% success rate and no vendor lock-in. Users can create automations that work across any desktop app or browser, achieve high success rates without costly consultant armies, and pre-train workflows as deterministic code.

axonhub

AxonHub is an all-in-one AI development platform that serves as an AI gateway allowing users to switch between model providers without changing any code. It provides features like vendor lock-in prevention, integration simplification, observability enhancement, and cost control. Users can access any model using any SDK with zero code changes. The platform offers full request tracing, enterprise RBAC, smart load balancing, and real-time cost tracking. AxonHub supports multiple databases, provides a unified API gateway, and offers flexible model management and API key creation for authentication. It also integrates with various AI coding tools and SDKs for seamless usage.

pr-agent

PR-Agent is a tool designed to assist in efficiently reviewing and handling pull requests by providing AI feedback and suggestions. It offers various tools such as Review, Describe, Improve, Ask, Update CHANGELOG, and more, with the ability to run them via different interfaces like CLI, PR Comments, or automatically triggering them when a new PR is opened. The tool supports multiple git platforms and models, emphasizing real-life practical usage and modular, customizable tools.

auto-dev

AutoDev is an AI-powered coding wizard that supports multiple languages, including Java, Kotlin, JavaScript/TypeScript, Rust, Python, Golang, C/C++/OC, and more. It offers a range of features, including auto development mode, copilot mode, chat with AI, customization options, SDLC support, custom AI agent integration, and language features such as language support, extensions, and a DevIns language for AI agent development. AutoDev is designed to assist developers with tasks such as auto code generation, bug detection, code explanation, exception tracing, commit message generation, code review content generation, smart refactoring, Dockerfile generation, CI/CD config file generation, and custom shell/command generation. It also provides a built-in LLM fine-tune model and supports UnitEval for LLM result evaluation and UnitGen for code-LLM fine-tune data generation.

EverMemOS

EverMemOS is an AI memory system that enables AI to not only remember past events but also understand the meaning behind memories and use them to guide decisions. It achieves 93% reasoning accuracy on the LoCoMo benchmark by providing long-term memory capabilities for conversational AI agents through structured extraction, intelligent retrieval, and progressive profile building. The tool is production-ready with support for Milvus vector DB, Elasticsearch, MongoDB, and Redis, and offers easy integration via a simple REST API. Users can store and retrieve memories using Python code and benefit from features like multi-modal memory storage, smart retrieval mechanisms, and advanced techniques for memory management.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

portkey-python-sdk

The Portkey Python SDK is a control panel for AI apps that allows seamless integration of Portkey's advanced features with OpenAI methods. It provides features such as AI gateway for unified API signature, interoperability, automated fallbacks & retries, load balancing, semantic caching, virtual keys, request timeouts, observability with logging, requests tracing, custom metadata, feedback collection, and analytics. Users can make requests to OpenAI using Portkey SDK and also use async functionality. The SDK is compatible with OpenAI SDK methods and offers Portkey-specific methods like feedback and prompts. It supports various providers and encourages contributions through Github issues or direct contact via email or Discord.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

For similar tasks

gateway

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

AgC

AgC is an open-core platform designed for deploying, running, and orchestrating AI agents at scale. It treats agents as first-class compute units, providing a modular, observable, cloud-neutral, and production-ready environment. Open Agentic Compute empowers developers and organizations to run agents like cloud-native workloads without lock-in.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.