self-llm

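*A Guide to "Eating" Open-Source LLMs* (《开源大模型食用指南》): tutorials tailored for Chinese beginners ("中国宝宝") on quickly fine-tuning (full-parameter/LoRA) and deploying open-source large language models (LLMs) and multimodal large language models (MLLMs) from China and abroad in a Linux environment.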

Stars: 13965

This project is a Chinese tutorial for domestic beginners based on the AutoDL platform, providing full-process guidance for various open-source large models, including environment configuration, local deployment, and efficient fine-tuning. It simplifies the deployment, use, and application process of open-source large models, enabling more ordinary students and researchers to better use open-source large models and helping open and free large models integrate into the lives of ordinary learners faster.

README:


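This project is a large-model tutorial built for Chinese beginners ("中国宝宝"), centered on open-source large models and based on the Linux platform. It provides full-process guidance for all kinds of open-source large models, covering skills such as environment configuration, local deployment, and efficient fine-tuning. It simplifies the deployment, use, and application of open-source large models so that more ordinary students and researchers can make better use of them, helping open and free large models integrate into ordinary learners' lives faster.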

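The main contents of this project include:

- Linux-based environment configuration guides for open-source LLMs, with detailed setup steps tailored to each model's requirements;

- Deployment and usage tutorials for mainstream open-source LLMs from China and abroad, including LLaMA, ChatGLM, InternLM, and others;

- Deployment and application guidance for open-source LLMs, including command-line invocation, online demo deployment, and LangChain framework integration;

- Full-parameter and efficient fine-tuning methods for open-source LLMs, including distributed full-parameter fine-tuning, LoRA, and P-tuning.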
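LoRA keeps a pretrained weight matrix W frozen and learns only a low-rank update: with trainable matrices B (d_out × r) and A (r × d_in), the adapted layer computes h = Wx + (α/r)·BAx. The sketch below shows that forward pass and the resulting parameter saving using plain Python lists, with no deep-learning framework. The helper names, matrix sizes, `alpha`, and `rank` values are illustrative assumptions; this is not the code used in the project's tutorials, which rely on full training libraries.

```python
# Minimal LoRA sketch in pure Python (no frameworks); names and sizes are illustrative.

def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n); both are lists of rows."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Return the transpose of a list-of-rows matrix."""
    return [list(col) for col in zip(*m)]


def lora_forward(x, W, A, B, alpha, rank):
    """Row-vector form of h = W x + (alpha / rank) * B A x.

    x: input batch, shape (batch x d_in)
    W: frozen pretrained weight, shape (d_out x d_in)
    A: trainable down-projection, shape (rank x d_in)
    B: trainable up-projection, shape (d_out x rank); zero-initialised in LoRA
    """
    base = matmul(x, transpose(W))                            # frozen path
    low_rank = matmul(matmul(x, transpose(A)), transpose(B))  # trainable low-rank path
    scale = alpha / rank
    return [[b + scale * l for b, l in zip(row_b, row_l)]
            for row_b, row_l in zip(base, low_rank)]


def lora_param_count(d_in, d_out, rank):
    """Number of trainable parameters in A and B for one adapted layer."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 identity weight
    A = [[1.0, 1.0]]              # rank-1 down-projection
    B = [[0.0], [0.0]]            # zero init: the adapter starts as a no-op
    x = [[2.0, 3.0]]
    print(lora_forward(x, W, A, B, alpha=16, rank=1))
    print(lora_param_count(4096, 4096, 8), "trainable vs", 4096 * 4096, "frozen")
```

Because B starts at zero, the adapter initially leaves the base model's output unchanged, and for a 4096 × 4096 projection with r = 8 only 65,536 parameters are trained instead of about 16.8 million.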

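The core of the project is its tutorials, which help more students and future practitioners learn how to "consume" open-source large models! Anyone can open an issue or submit a PR to help build and maintain the project.

Students who want to get more deeply involved can contact us, and we will add you to the project's maintainers.

Learning advice: start with environment configuration, then move on to model deployment and usage, and finish with fine-tuning. Environment configuration and model deployment are the foundations, while fine-tuning is the advanced step. Beginners are advised to start with models such as Qwen1.5, InternLM2, and MiniCPM.

Note: if you want to understand how large models are built, and to hand-write RAG, Agent, and Eval tasks from scratch, you can study Datawhale's other project, Tiny-Universe. Large models are a hot topic in deep learning, but most existing tutorials only teach how to call APIs to build LLM applications, and few explain model architecture, RAG, Agent, and Eval from first principles. That repository therefore implements the RAG, Agent, and Eval tasks entirely by hand, without calling APIs.

Note: if you would like to study the theory of large models before starting this project, and build a deeper theoretical foundation for understanding and applying LLMs, see Datawhale's so-large-llm course.

Note: if, after this course, you want to develop LLM applications yourself, see Datawhale's *Hands-on LLM Application Development* (动手学大模型应用开发) course. It is an LLM application development tutorial for beginner developers that walks through the complete development workflow using an Alibaba Cloud server and a personal knowledge-base assistant project.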

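What is a large model?

In the narrow sense, a large model (LLM) is a natural language processing (NLP) model trained with deep learning algorithms, mainly applied to natural language understanding and generation. In the broad sense, the term also covers computer vision (CV) large models, multimodal large models, and large models for scientific computing.

The "battle of a hundred models" is in full swing, and open-source LLMs keep appearing. Many excellent open-source LLMs have emerged abroad, such as LLaMA and Alpaca, and in China, such as ChatGLM, BaiChuan, and InternLM (书生·浦语). Open-source LLMs support local deployment and private-domain fine-tuning, so anyone can build their own unique large model on top of an open-source LLM.

However, ordinary students and users currently need a certain level of technical skill to deploy and use these models. With so many new open-source LLMs, each with its own characteristics, quickly mastering how to apply any one of them is quite challenging.

This project first aims to build deployment, usage, and fine-tuning tutorials for mainstream open-source LLMs from China and abroad, based on the experience of its core contributors. Once the mainstream LLMs are covered, we hope to bring together co-creators to enrich this open-source LLM world and produce more, and more comprehensive, tutorials for distinctive LLMs. Sparks gather into a sea.

We hope to be a ladder between LLMs and the general public, embracing a broader and grander LLM world in the free and equal spirit of open source.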

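This project is suitable for the following learners:

- Those who want to use or try LLMs but have no access to, or cannot use, the relevant APIs;

- Those who want to apply LLMs at scale over the long term at low cost;

- Those interested in open-source LLMs who want hands-on experience with them;

- NLP students who want to learn more about LLMs;

- Those who want to build a domain-specific private LLM on top of open-source LLMs;

- And the broadest group of all: ordinary students.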

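The project is organized around the full workflow of applying open-source LLMs, including environment configuration and usage, deployment and application, and fine-tuning; each part covers mainstream and distinctive open-source LLMs:

- Chat-嬛嬛: Chat-甄嬛 is a chat model that imitates Zhen Huan's manner of speaking, obtained by LoRA fine-tuning an LLM on all of Zhen Huan's lines and dialogue in the script of *Empresses in the Palace* (《甄嬛传》).

- Tianji-天机: Tianji is a tutorial for building an LLM application system around social etiquette and interpersonal situations (人情世故), covering the full pipeline of prompt engineering, agent building, data collection and model fine-tuning, and RAG data cleaning and usage.

- AMChat: AM (Advanced Mathematics) chat is an LLM that integrates mathematical knowledge with advanced-mathematics exercises and their solutions. It is built on the InternLM2-Math-7B model and fine-tuned with XTuner on a dataset that combines Math data with advanced-mathematics exercises and their worked solutions, and it is designed specifically to answer advanced-mathematics questions.

- 数字生命 (Digital Life): This project takes me as its prototype and fine-tunes an LLM on a purpose-built dataset to create an AI digital human that truly reflects my personality, including but not limited to my tone, way of expressing myself, and thinking patterns. Whether for everyday chatting or sharing feelings, it communicates in a way that is both familiar and comfortable, as if I were right there with you. The whole workflow is transferable and reproducible, and its highlight is how the dataset is built.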
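Character-imitation projects such as Chat-嬛嬛 begin by turning script dialogue into supervised fine-tuning records. The sketch below assumes an Alpaca-style `instruction`/`input`/`output` record format and a script represented as a list of (speaker, utterance) pairs; the format, the helper name `build_sft_records`, and the placeholder dialogue are all hypothetical illustrations, not the project's actual data pipeline or data.

```python
import json


def build_sft_records(dialogue, target_speaker, system_hint=""):
    """Pair each line addressed to `target_speaker` with that character's reply.

    dialogue: list of (speaker, utterance) tuples in script order.
    Returns Alpaca-style dicts (an assumed format): the preceding line becomes
    the instruction and the target character's reply becomes the output.
    """
    records = []
    for (prev_speaker, prev_line), (speaker, line) in zip(dialogue, dialogue[1:]):
        # Keep only turns where the target character answers someone else.
        if speaker == target_speaker and prev_speaker != target_speaker:
            records.append({"instruction": prev_line, "input": system_hint, "output": line})
    return records


if __name__ == "__main__":
    # Hypothetical placeholder lines, not quotes from the real script.
    script = [
        ("Emperor", "What is your name?"),
        ("Zhen Huan", "Zhen Huan, Your Majesty."),
        ("Zhen Huan", "A second line by the same speaker is skipped."),
        ("Emperor", "Very well."),
    ]
    for rec in build_sft_records(script, "Zhen Huan"):
        # One JSON object per line (JSONL), keeping non-ASCII text readable.
        print(json.dumps(rec, ensure_ascii=False))
```

Consecutive lines by the same character are skipped here so that every record is a genuine question-and-answer exchange; a real pipeline would likely also merge multi-line replies or keep longer context.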

- [x] SpatialLM 3D point cloud understanding and object detection model deployment @王泽宇

- [x] Hunyuan3D-2 series model deployment @林恒宇

- [x] Hunyuan3D-2 series model code invocation @林恒宇

- [x] Hunyuan3D-2 series model Gradio deployment @林恒宇

- [x] Hunyuan3D-2 series model API Server @林恒宇

- [x] Hunyuan3D-2 Docker image @林恒宇

- [x] gemma-3-4b-it FastAPI deployment and invocation @杜森

- [x] gemma-3-4b-it Ollama + Open WebUI deployment @孙超

- [x] gemma-3-4b-it EvalScope IQ and EQ evaluation @张龙斐

- [x] gemma-3-4b-it LoRA fine-tuning @荞麦

- [x] gemma-3-4b-it Docker image @姜舒凡

- [x] DeepSeek-R1-Distill-Qwen-7B FastAPI deployment and invocation @骆秀韬

- [x] DeepSeek-R1-Distill-Qwen-7B LangChain integration @骆秀韬

- [x] DeepSeek-R1-Distill-Qwen-7B WebDemo deployment @骆秀韬

- [x] DeepSeek-R1-Distill-Qwen-7B vLLM deployment and invocation @骆秀韬

- [x] minicpm-o-2.6 FastAPI deployment and invocation @林恒宇

- [x] minicpm-o-2.6 WebDemo deployment @程宏

- [x] minicpm-o-2.6 multimodal speech capabilities @邓恺俊

- [x] minicpm-o-2.6 LaTeX_OCR LoRA fine-tuning with visualization @林泽毅

- [x] internlm3-8b-instruct FastAPI deployment and invocation @苏向标

- [x] internlm3-8b-instruct LangChain integration @赵文恺

- [x] internlm3-8b-instruct WebDemo deployment @王泽宇

- [x] internlm3-8b-instruct LoRA fine-tuning @程宏

- [x] internlm3-8b-instruct o1-like reasoning chain implementation @陈睿

- [x] phi4 FastAPI deployment and invocation @杜森

- [x] phi4 LangChain integration @小罗

- [x] phi4 WebDemo deployment @杜森

- [x] phi4 LoRA fine-tuning @郑远婧

- [x] phi4 LoRA fine-tuning for an NER task, with SwanLab visualization logging @林泽毅

- [x] Qwen2.5-Coder-7B-Instruct FastAPI deployment and invocation @赵文恺

- [x] Qwen2.5-Coder-7B-Instruct LangChain integration @杨晨旭

- [x] Qwen2.5-Coder-7B-Instruct WebDemo deployment @王泽宇

- [x] Qwen2.5-Coder-7B-Instruct vLLM deployment @王泽宇

- [x] Qwen2.5-Coder-7B-Instruct LoRA fine-tuning @荞麦

- [x] Qwen2.5-Coder-7B-Instruct LoRA fine-tuning with SwanLab visualization logging @杨卓

- [x] Qwen2-vl-2B FastAPI deployment and invocation @姜舒凡

- [x] Qwen2-vl-2B WebDemo deployment @赵伟

- [x] Qwen2-vl-2B vLLM deployment @荞麦

- [x] Qwen2-vl-2B LoRA fine-tuning @李柯辰

- [x] Qwen2-vl-2B LoRA fine-tuning with SwanLab visualization logging @林泽毅

- [x] Qwen2-vl-2B LoRA fine-tuning case study: LaTeX OCR @林泽毅

- [x] Qwen2.5-7B-Instruct FastAPI deployment and invocation @娄天奥

- [x] Qwen2.5-7B-Instruct LangChain integration @娄天奥

- [x] Qwen2.5-7B-Instruct vLLM deployment and invocation @姜舒凡

- [x] Qwen2.5-7B-Instruct WebDemo deployment @高立业

- [x] Qwen2.5-7B-Instruct LoRA fine-tuning @左春生

- [x] Qwen2.5-7B-Instruct o1-like reasoning chain implementation @姜舒凡

- [x] Qwen2.5-7B-Instruct LoRA fine-tuning with SwanLab visualization logging @林泽毅

- [x] OpenELM-3B-Instruct FastAPI deployment and invocation @王泽宇

- [x] OpenELM-3B-Instruct LoRA fine-tuning @王泽宇

- [x] Llama3_1-8B-Instruct FastAPI deployment and invocation @不要葱姜蒜

- [x] Llama3_1-8B-Instruct LangChain integration @张晋

- [x] Llama3_1-8B-Instruct WebDemo deployment @张晋

- [x] Llama3_1-8B-Instruct LoRA fine-tuning @不要葱姜蒜

- [x] Hands-on: converting a model to GGUF and deploying it locally with Ollama @Gaoboy

- [x] Gemma-2-9b-it FastAPI deployment and invocation @不要葱姜蒜

- [x] Gemma-2-9b-it LangChain integration @不要葱姜蒜

- [x] Gemma-2-9b-it WebDemo deployment @不要葱姜蒜

- [x] Gemma-2-9b-it PEFT LoRA fine-tuning @不要葱姜蒜

- [x] Yuan2.0-2B FastAPI deployment and invocation @张帆

- [x] Yuan2.0-2B LangChain integration @张帆

- [x] Yuan2.0-2B WebDemo deployment @张帆

- [x] Yuan2.0-2B vLLM deployment and invocation @张帆

- [x] Yuan2.0-2B LoRA fine-tuning @张帆

- [x] Yuan2.0-M32 FastAPI deployment and invocation @张帆

- [x] Yuan2.0-M32 LangChain integration @张帆

- [x] Yuan2.0-M32 WebDemo deployment @张帆

- [x] DeepSeek-Coder-V2-Lite-Instruct FastAPI deployment and invocation @姜舒凡

- [x] DeepSeek-Coder-V2-Lite-Instruct LangChain integration @姜舒凡

- [x] DeepSeek-Coder-V2-Lite-Instruct WebDemo deployment @Kailigithub

- [x] DeepSeek-Coder-V2-Lite-Instruct LoRA fine-tuning @余洋

- [x] Index-1.9B-Chat FastAPI deployment and invocation @邓恺俊

- [x] Index-1.9B-Chat LangChain integration @张友东

- [x] Index-1.9B-Chat WebDemo deployment @程宏

- [x] Index-1.9B-Chat LoRA fine-tuning @姜舒凡

- [x] Qwen2-7B-Instruct FastAPI deployment and invocation @康婧淇

- [x] Qwen2-7B-Instruct LangChain integration @不要葱姜蒜

- [x] Qwen2-7B-Instruct WebDemo deployment @三水

- [x] Qwen2-7B-Instruct vLLM deployment and invocation @姜舒凡

- [x] Qwen2-7B-Instruct LoRA fine-tuning @散步

- [x] GLM-4-9B-chat FastAPI deployment and invocation @张友东

- [x] GLM-4-9B-chat LangChain integration @谭逸珂

- [x] GLM-4-9B-chat WebDemo deployment @何至轩

- [x] GLM-4-9B-chat vLLM deployment @王熠明

- [x] GLM-4-9B-chat LoRA fine-tuning @肖鸿儒

- [x] GLM-4-9B-chat-hf LoRA fine-tuning @付志远

- [x] Qwen1.5-7B-chat FastAPI deployment and invocation @颜鑫

- [x] Qwen1.5-7B-chat LangChain integration @颜鑫

- [x] Qwen1.5-7B-chat WebDemo deployment @颜鑫

- [x] Qwen1.5-7B-chat LoRA fine-tuning @不要葱姜蒜

- [x] Qwen1.5-72B-chat-GPTQ-Int4 deployment environment @byx020119

- [x] Qwen1.5-MoE-chat Transformers deployment and invocation @丁悦

- [x] Qwen1.5-7B-chat vLLM inference deployment @高立业

- [x] Qwen1.5-7B-chat LoRA fine-tuning with the SwanLab experiment management platform @黄柏特

- [x] gemma-2b-it FastAPI deployment and invocation @东东

- [x] gemma-2b-it LangChain integration @东东

- [x] gemma-2b-it WebDemo deployment @东东

- [x] gemma-2b-it PEFT LoRA fine-tuning @东东

- [x] Phi-3-mini-4k-instruct FastAPI deployment and invocation @郑皓桦

- [x] Phi-3-mini-4k-instruct LangChain integration @郑皓桦

- [x] Phi-3-mini-4k-instruct WebDemo deployment @丁悦

- [x] Phi-3-mini-4k-instruct LoRA fine-tuning @丁悦

- [x] CharacterGLM-6B Transformers deployment and invocation @孙健壮

- [x] CharacterGLM-6B FastAPI deployment and invocation @孙健壮

- [x] CharacterGLM-6B WebDemo deployment @孙健壮

- [x] CharacterGLM-6B LoRA fine-tuning @孙健壮

- [x] LLaMA3-8B-Instruct FastAPI deployment and invocation @高立业

- [x] LLaMA3-8B-Instruct LangChain integration @不要葱姜蒜

- [x] LLaMA3-8B-Instruct WebDemo deployment @不要葱姜蒜

- [x] LLaMA3-8B-Instruct LoRA fine-tuning @高立业

- [x] XVERSE-7B-Chat Transformers deployment and invocation @郭志航

- [x] XVERSE-7B-Chat FastAPI deployment and invocation @郭志航

- [x] XVERSE-7B-Chat LangChain integration @郭志航

- [x] XVERSE-7B-Chat WebDemo deployment @郭志航

- [x] XVERSE-7B-Chat LoRA fine-tuning @郭志航

- [x] TransNormerLLM-7B-Chat FastAPI deployment and invocation @王茂霖

- [x] TransNormerLLM-7B-Chat LangChain integration @王茂霖

- [x] TransNormerLLM-7B-Chat WebDemo deployment @王茂霖

- [x] TransNormerLLM-7B-Chat LoRA fine-tuning @王茂霖

- [x] BlueLM-7B-Chat FastAPI deployment and invocation @郭志航

- [x] BlueLM-7B-Chat LangChain integration @郭志航

- [x] BlueLM-7B-Chat WebDemo deployment @郭志航

- [x] BlueLM-7B-Chat LoRA fine-tuning @郭志航

- [x] InternLM2-7B-chat FastAPI deployment and invocation @不要葱姜蒜

- [x] InternLM2-7B-chat LangChain integration @不要葱姜蒜

- [x] InternLM2-7B-chat WebDemo deployment @郑皓桦

- [x] InternLM2-7B-chat XTuner QLoRA fine-tuning @郑皓桦

- [x] DeepSeek-7B-chat FastAPI deployment and invocation @不要葱姜蒜

- [x] DeepSeek-7B-chat LangChain integration @不要葱姜蒜

- [x] DeepSeek-7B-chat WebDemo @不要葱姜蒜

- [x] DeepSeek-7B-chat LoRA fine-tuning @不要葱姜蒜

- [x] DeepSeek-7B-chat 4-bit quantized QLoRA fine-tuning @不要葱姜蒜

- [x] DeepSeek-MoE-16b-chat Transformers deployment and invocation @Kailigithub

- [x] DeepSeek-MoE-16b-chat FastAPI deployment and invocation @Kailigithub

- [x] DeepSeek-coder-6.7b finetune colab @Swiftie

- [x] Deepseek-coder-6.7b webdemo colab @Swiftie

- [x] MiniCPM-2B-chat Transformers deployment and invocation @Kailigithub

- [x] MiniCPM-2B-chat FastAPI deployment and invocation @Kailigithub

- [x] MiniCPM-2B-chat LangChain integration @不要葱姜蒜

- [x] MiniCPM-2B-chat WebDemo deployment @Kailigithub

- [x] MiniCPM-2B-chat LoRA and full-parameter fine-tuning @不要葱姜蒜

- [x] Official partner link: MiniCPM tutorial (面壁小钢炮) @OpenBMB

- [x] Official partner link: MiniCPM-Cookbook @OpenBMB

- [x] Qwen-Audio FastAPI deployment and invocation @陈思州

- [x] Qwen-Audio WebDemo @陈思州

- [x] Qwen-7B-chat Transformers deployment and invocation @李娇娇

- [x] Qwen-7B-chat FastAPI deployment and invocation @李娇娇

- [x] Qwen-7B-chat WebDemo @李娇娇

- [x] Qwen-7B-chat LoRA fine-tuning @不要葱姜蒜

- [x] Qwen-7B-chat P-tuning fine-tuning @肖鸿儒

- [x] Qwen-7B-chat full-parameter fine-tuning @不要葱姜蒜

- [x] Qwen-7B-Chat: building a knowledge-base assistant with LangChain @李娇娇

- [x] Qwen-7B-chat low-precision training @肖鸿儒

- [x] Qwen-1_8B-chat CPU deployment @散步

- [x] Yi-6B-chat FastAPI deployment and invocation @李柯辰

- [x] Yi-6B-chat LangChain integration @李柯辰

- [x] Yi-6B-chat WebDemo @肖鸿儒

- [x] Yi-6B-chat LoRA fine-tuning @李娇娇

- [x] Baichuan2-7B-chat FastAPI deployment and invocation @惠佳豪

- [x] Baichuan2-7B-chat WebDemo @惠佳豪

- [x] Baichuan2-7B-chat LangChain framework integration @惠佳豪

- [x] Baichuan2-7B-chat LoRA fine-tuning @惠佳豪

- [x] InternLM-Chat-7B Transformers deployment and invocation @小罗

- [x] InternLM-Chat-7B FastAPI deployment and invocation @不要葱姜蒜

- [x] InternLM-Chat-7B WebDemo @不要葱姜蒜

- [x] Lagent+InternLM-Chat-7B-V1.1 WebDemo @不要葱姜蒜

- [x] InternLM-XComposer (浦语灵笔) image-text understanding and creation WebDemo @不要葱姜蒜

- [x] InternLM-Chat-7B LangChain framework integration @Logan Zou

- [x] Atom-7B-chat WebDemo @Kailigithub

- [x] Atom-7B-chat LoRA fine-tuning @Logan Zou

- [x] Atom-7B-Chat: building a knowledge-base assistant with LangChain @陈思州

- [x] Atom-7B-chat full-parameter fine-tuning @Logan Zou

- [x] ChatGLM3-6B Transformers deployment and invocation @丁悦

- [x] ChatGLM3-6B FastAPI deployment and invocation @丁悦

- [x] ChatGLM3-6B chat WebDemo @不要葱姜蒜

- [x] ChatGLM3-6B Code Interpreter WebDemo @不要葱姜蒜

- [x] ChatGLM3-6B LangChain framework integration @Logan Zou

- [x] ChatGLM3-6B LoRA fine-tuning @肖鸿儒
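Many of the deployment entries in the list above follow the same pattern: load a model once, expose an HTTP endpoint that accepts a JSON prompt, and return the generated text as JSON. The sketch below reproduces that request/response round trip using only the Python standard library (`http.server` and `urllib`) as a stand-in for FastAPI, and replaces the model with a hypothetical `fake_generate` function. The JSON field names `prompt` and `response` are assumptions for illustration, not necessarily the ones the tutorials use.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


def fake_generate(prompt):
    """Hypothetical stand-in for a real model's generate call; echoes the prompt."""
    return f"echo: {prompt}"


class ChatHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Parse a JSON body such as {"prompt": "..."}; an empty body counts as {}.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        reply = {"response": fake_generate(payload.get("prompt", ""))}
        body = json.dumps(reply).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def start_server(port=0):
    """Serve on a background thread; port 0 lets the OS pick a free port."""
    server = HTTPServer(("127.0.0.1", port), ChatHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def ask(url, prompt):
    """Client side: POST a prompt as JSON and return the 'response' field."""
    req = urllib.request.Request(
        url,
        data=json.dumps({"prompt": prompt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    server = start_server()
    port = server.server_address[1]
    print(ask(f"http://127.0.0.1:{port}/", "你好"))
    server.shutdown()
    server.server_close()
```

In a real deployment, FastAPI would replace `ChatHandler` and a loaded model would replace `fake_generate`; binding to `127.0.0.1` keeps this sketch local to the machine.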

- [x] Switching pip and conda to domestic mirror sources @不要葱姜蒜
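For pip, switching to a domestic mirror comes down to setting `global.index-url`, either with `pip config set global.index-url <mirror-url>` or by editing pip's config file (on Linux, the per-user file is typically `~/.config/pip/pip.conf`). The sketch below only renders the INI text such a file would contain, using `configparser`; it does not cover conda. The Tsinghua TUNA mirror URL and the helper name `render_pip_conf` are example choices, not necessarily what the tutorial uses.

```python
import configparser
import io

# Example mirror; swap in whichever PyPI mirror you prefer.
TUNA_PYPI = "https://pypi.tuna.tsinghua.edu.cn/simple"


def render_pip_conf(index_url, trusted_host=None):
    """Return the text of a pip config file that points pip at `index_url`."""
    config = configparser.ConfigParser()
    config["global"] = {"index-url": index_url}
    if trusted_host:
        # Usually only needed for mirrors served over plain HTTP.
        config["global"]["trusted-host"] = trusted_host
    buf = io.StringIO()
    config.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render_pip_conf(TUNA_PYPI))
```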

- [x] Opening ports on AutoDL @不要葱姜蒜

Model download

- [x] Hugging Face @不要葱姜蒜

- [x] Hugging Face mirror download @不要葱姜蒜

- [x] ModelScope @不要葱姜蒜

- [x] git-lfs @不要葱姜蒜

- [x] OpenXLab
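Mirror downloads work because Hugging Face Hub file URLs follow a fixed pattern, `{endpoint}/{repo_id}/resolve/{revision}/{filename}`, and the `huggingface_hub` library reads the `HF_ENDPOINT` environment variable to decide which endpoint to use. The sketch below rebuilds such a URL with the standard library only. The mirror address `https://hf-mirror.com` is a commonly used example and an assumption here, and the helper `hub_file_url` is hypothetical; actual downloads should still go through `huggingface_hub` or `modelscope` rather than this function.

```python
import os
from urllib.parse import quote

DEFAULT_ENDPOINT = "https://huggingface.co"


def hub_file_url(repo_id, filename, revision="main", endpoint=None):
    """Build a Hub-style file URL, falling back to HF_ENDPOINT, then the official Hub."""
    base = (endpoint or os.environ.get("HF_ENDPOINT") or DEFAULT_ENDPOINT).rstrip("/")
    # A revision such as "refs/pr/1" must be encoded as a single path segment.
    return f"{base}/{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


if __name__ == "__main__":
    print(hub_file_url("Qwen/Qwen2.5-7B-Instruct", "config.json",
                       endpoint="https://hf-mirror.com"))
```

In practice you would run `export HF_ENDPOINT=https://hf-mirror.com` in the shell before starting the download script, because `huggingface_hub` reads the variable when it is imported.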


Issue && PR

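- 宋志学 (不要葱姜蒜), project lead (Datawhale member, China University of Mining and Technology (Beijing))

- 邹雨衡, project lead (Datawhale member, University of International Business and Economics)

- 肖鸿儒 (Datawhale member, Tongji University)

- 郭志航 (content creator)

- 林泽毅 (content creator, SwanLab product lead)

- 张帆 (content creator, Datawhale member)

- 姜舒凡 (content creator, Datawhale member)

- 王泽宇 (content creator, Taiyuan University of Technology, 鲸英 teaching assistant)

- 李娇娇 (Datawhale member)

- 丁悦 (Datawhale, 鲸英 teaching assistant)

- 林恒宇 (content creator, Neusoft Institute Guangdong, 鲸英 teaching assistant)

- 惠佳豪 (Datawhale publicity ambassador)

- 王茂霖 (content creator, Datawhale member)

- 孙健壮 (content creator, University of International Business and Economics)

- 东东 (content creator, Google Developer Expert in Machine Learning)

- 高立业 (content creator, Datawhale member)

- 荞麦 (content creator, Datawhale member)

- Kailigithub (Datawhale member)

- 郑皓桦 (content creator)

- 李柯辰 (Datawhale member)

- 程宏 (content creator, prospective Datawhale member)

- 骆秀韬 (content creator, Datawhale member, 似然实验室)

- 陈思州 (Datawhale member)

- 散步 (Datawhale member)

- 颜鑫 (Datawhale member)

- 杜森 (content creator, Datawhale member, Nanyang Institute of Technology)

- Swiftie (Xiaomi NLP algorithm engineer)

- 黄柏特 (content creator, Xidian University)

- 张友东 (content creator, Datawhale member)

- 余洋 (content creator, Datawhale member)

- 张晋 (content creator, Datawhale member)

- 娄天奥 (content creator, University of Chinese Academy of Sciences, 鲸英 teaching assistant)

- 左春生 (content creator, Datawhale member)

- 杨卓 (content creator, Xidian University, 鲸英 teaching assistant)

- 小罗 (content creator, Datawhale member)

- 邓恺俊 (content creator, Datawhale member)

- 赵文恺 (content creator, Taiyuan University of Technology, 鲸英 teaching assistant)

- 付志远 (content creator, Hainan University)

- 郑远婧 (content creator, 鲸英 teaching assistant, Fuzhou University)

- 谭逸珂 (content creator, University of International Business and Economics)

- 王熠明 (content creator, Datawhale member)

- 何至轩 (content creator, 鲸英 teaching assistant)

- 康婧淇 (content creator, Datawhale member)

- 三水 (content creator, 鲸英 teaching assistant)

- 杨晨旭 (content creator, Taiyuan University of Technology, 鲸英 teaching assistant)

- 赵伟 (content creator, 鲸英 teaching assistant)

- 苏向标 (content creator, Guangzhou University, 鲸英 teaching assistant)

- 陈睿 (content creator, Xi'an Jiaotong-Liverpool University, 鲸英 teaching assistant)

- 张龙斐 (content creator, 鲸英 teaching assistant)

- 孙超 (content creator, Datawhale member)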

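Note: contributors are listed in order of their level of contribution.

- Special thanks to @Sm1les for help and support with this project.

- Some of the LoRA code and explanations draw on the repository: https://github.com/zyds/transformers-code.git

- If you have any ideas, feel free to contact us at DataWhale; you are also very welcome to open issues.

- Special thanks to the following students who contributed to the tutorials!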


Alternative AI tools for self-llm

Similar Open Source Tools


Semi-Auto-NovelAI-to-Pixiv

Semi-Auto-NovelAI-to-Pixiv is a powerful tool that enables batch image generation with NovelAI, along with various other useful features in a super user-friendly interface. It allows users to create images, generate random images, upload images to Pixiv, apply filters, enhance images, add watermarks, and more. The tool also supports video-to-image conversion and various image manipulation tasks. It offers a seamless experience for users looking to automate image processing tasks.

Awesome-Chinese-LLM

Awesome Chinese LLM is an awesome collection of Chinese LLM resources. Since large language models represented by ChatGPT appeared, their striking, near-AGI capabilities have set off a new wave of research and applications in natural language processing. In particular, after smaller open-source LLMs such as ChatGLM and LLaMA that ordinary users can run were released, many fine-tuned derivatives and LLM-based applications emerged. The project collects and organizes open-source Chinese LLM models, applications, datasets, and tutorials, and currently lists more than 100 resources. Contributions of models, applications, and datasets not yet included are welcome via PR, following the project's format (repository link, star count, short description, and so on).

all-in-rag

All-in-RAG is a comprehensive repository for all things related to Randomized Algorithms and Graphs. It provides a wide range of resources, including implementations of various randomized algorithms, graph data structures, and visualization tools. The repository aims to serve as a one-stop solution for researchers, students, and enthusiasts interested in exploring the intersection of randomized algorithms and graph theory. Whether you are looking to study theoretical concepts, implement algorithms in practice, or visualize graph structures, All-in-RAG has got you covered.

99AI

99AI is a commercially usable AI web application based on NineAI 2.4.2 (no authorization required, no backdoors, no piracy; it ships integrated front-end and back-end packages and supports rapid Docker deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

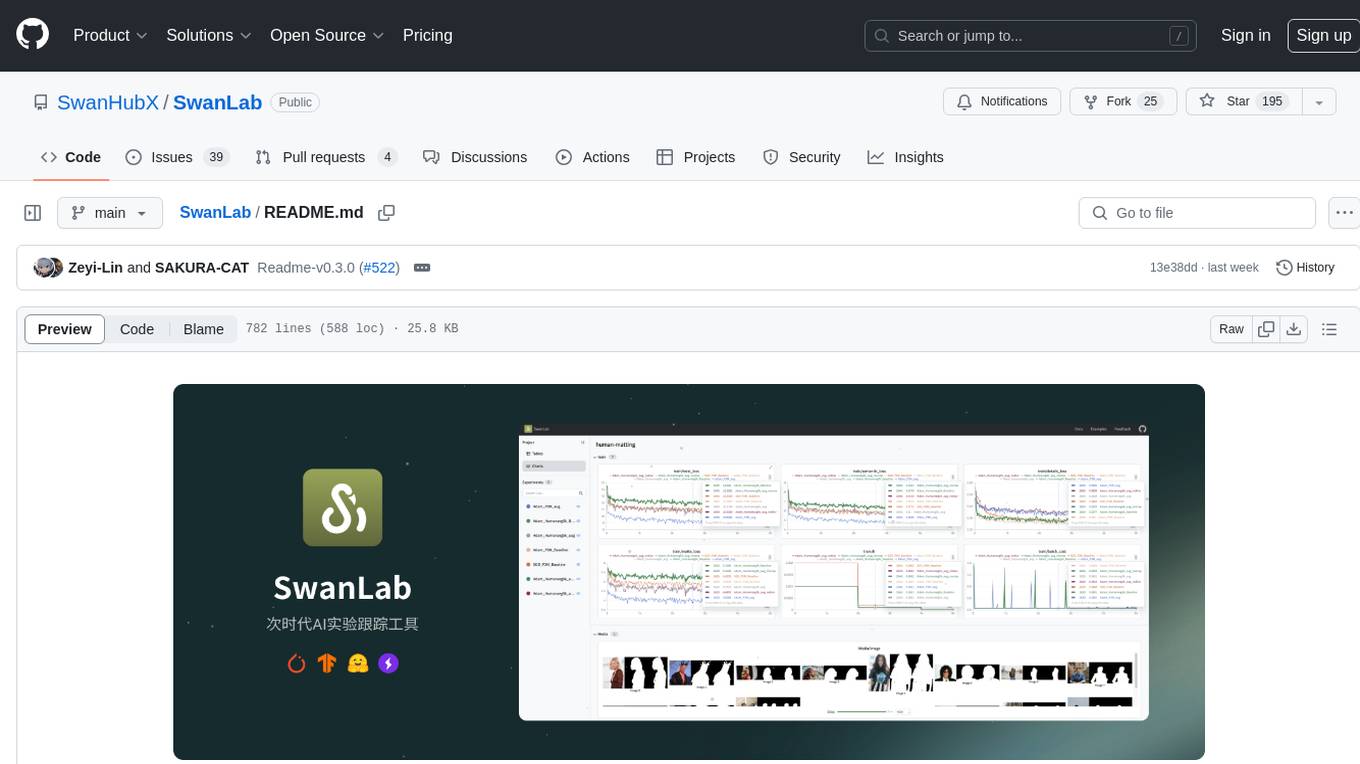

SwanLab

SwanLab is an open-source, lightweight AI experiment tracking tool that provides a platform for tracking, comparing, and collaborating on experiments, aiming to accelerate the research and development efficiency of AI teams by 100 times. It offers a friendly API and a beautiful interface, combining hyperparameter tracking, metric recording, online collaboration, experiment link sharing, real-time message notifications, and more. With SwanLab, researchers can document their training experiences, seamlessly communicate and collaborate with collaborators, and machine learning engineers can develop models for production faster.

base-llm

Base LLM is a comprehensive learning tutorial from traditional Natural Language Processing (NLP) to Large Language Models (LLM), covering core technologies such as word embeddings, RNN, Transformer architecture, BERT, GPT, and Llama series models. The project aims to help developers build a solid technical foundation by providing a clear path from theory to practical engineering. It covers NLP theory, Transformer architecture, pre-trained language models, advanced model implementation, and deployment processes.

AI-Vtuber

AI-VTuber is a highly customizable AI VTuber project that integrates with Bilibili live streaming, uses the Zhipu API as its base language model, and includes intent recognition, short-term and long-term memory, cognitive library building, song library creation, and integration with various voice conversion, voice synthesis, image generation, and digital human projects. It provides a user-friendly client for operations. The project supports virtual VTuber template construction, multi-person device template management, real-time switching of virtual VTuber templates, and offers various practical tools such as video/audio crawlers, voice recognition, voice separation, voice synthesis, voice conversion, AI drawing, and image background removal.

do-research-in-AI

This repository is a collection of research lectures and experience sharing posts from frontline researchers in the field of AI. It aims to help individuals upgrade their research skills and knowledge through insightful talks and experiences shared by experts. The content covers various topics such as evaluating research papers, choosing research directions, research methodologies, and tips for writing high-quality scientific papers. The repository also includes discussions on academic career paths, research ethics, and the emotional aspects of research work. Overall, it serves as a valuable resource for individuals interested in advancing their research capabilities in the field of AI.

LLM-Dojo

LLM-Dojo is an open-source platform for learning and practicing large models, providing a framework for building custom large model training processes, implementing various tricks and principles in the llm_tricks module, and mainstream model chat templates. The project includes an open-source large model training framework, detailed explanations and usage of the latest LLM tricks, and a collection of mainstream model chat templates. The term 'Dojo' symbolizes a place dedicated to learning and practice, borrowing its meaning from martial arts training.

Awesome-Embodied-AI-Job

Awesome Embodied AI Job is a curated list of resources related to jobs in the field of Embodied Artificial Intelligence. It includes job boards, companies hiring, and resources for job seekers interested in roles such as robotics engineer, computer vision specialist, AI researcher, machine learning engineer, and data scientist.

LogChat

LogChat is an open-source and free AI chat client that supports various chat models and technologies such as ChatGPT, iFlytek Spark (讯飞星火), DeepSeek, LLM, TTS, STT, and Live2D. The tool provides a user-friendly interface designed with Qt Creator and runs on Windows without any additional environment requirements. Users can interact with different AI models, perform voice synthesis and recognition, and customize Live2D character models. LogChat also offers features like language translation, AI platform integration, and menu items like screenshot editing, clock, and application launcher.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.

omnia

Omnia is a deployment tool designed to turn servers with RPM-based Linux images into functioning Slurm/Kubernetes clusters. It provides an Ansible playbook-based deployment for Slurm and Kubernetes on servers running an RPM-based Linux OS. The tool simplifies the process of setting up and managing clusters, making it easier for users to deploy and maintain their infrastructure.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports HTTP server for Web API, popular large models like OpenAI and DeepSeek, plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, web management interface, and built-in Frpc intranet penetration.

For similar tasks

LLM-FineTuning-Large-Language-Models

This repository contains projects and notes on common practical techniques for fine-tuning Large Language Models (LLMs). It includes fine-tuning LLM notebooks, Colab links, LLM techniques and utils, and other smaller language models. The repository also provides links to YouTube videos explaining the concepts and techniques discussed in the notebooks.

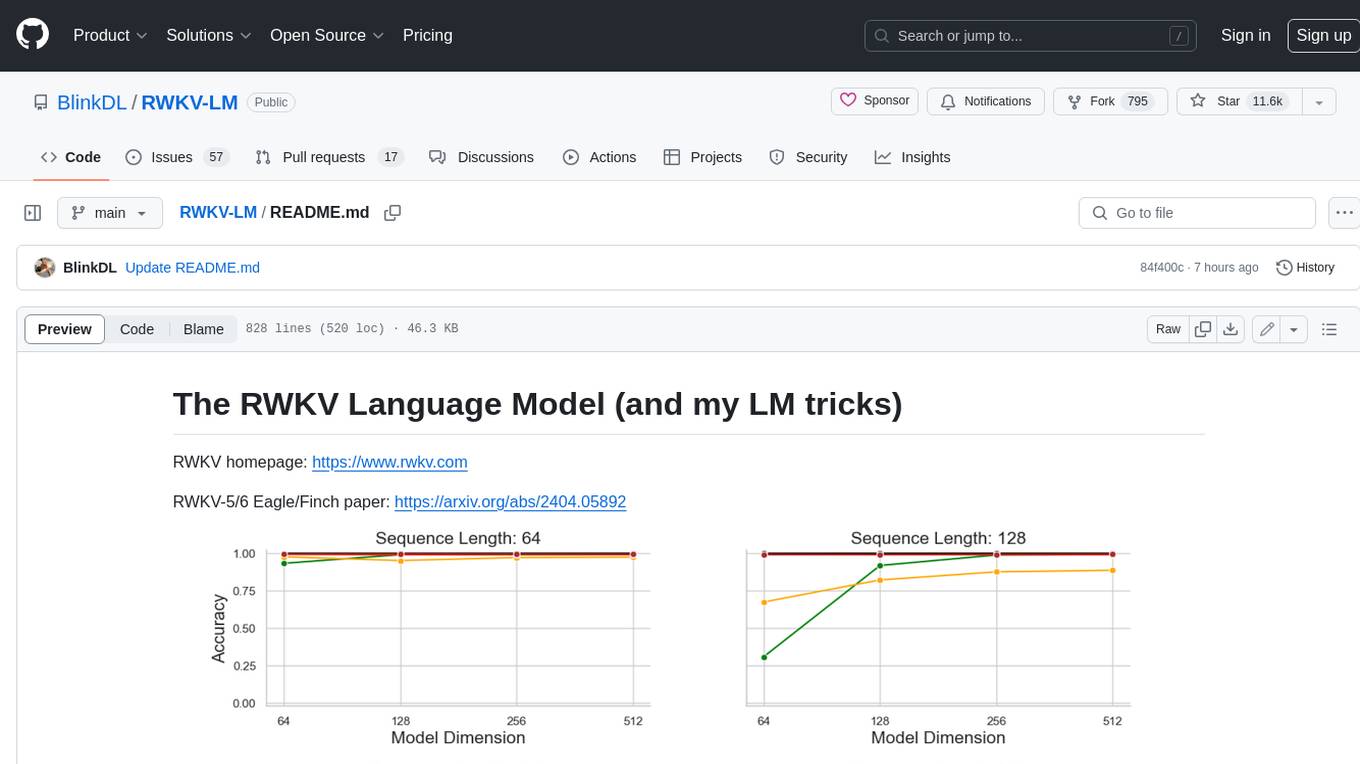

RWKV-LM

RWKV is an RNN with Transformer-level LLM performance, which can also be directly trained like a GPT transformer (parallelizable). And it's 100% attention-free. You only need the hidden state at position t to compute the state at position t+1. You can use the "GPT" mode to quickly compute the hidden state for the "RNN" mode. So it's combining the best of RNN and transformer - **great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding** (using the final hidden state).

awesome-transformer-nlp

This repository contains a hand-curated list of great machine (deep) learning resources for Natural Language Processing (NLP) with a focus on Generative Pre-trained Transformer (GPT), Bidirectional Encoder Representations from Transformers (BERT), attention mechanism, Transformer architectures/networks, Chatbot, and transfer learning in NLP.


LLMs-from-scratch

This repository contains the code for coding, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book Build a Large Language Model (From Scratch). In _Build a Large Language Model (From Scratch)_, you'll discover how LLMs work from the inside out. In this book, I'll guide you step by step through creating your own LLM, explaining each stage with clear text, diagrams, and examples. The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT.

PaddleNLP

PaddleNLP is an easy-to-use and high-performance NLP library. It aggregates high-quality pre-trained models in the industry and provides out-of-the-box development experience, covering a model library for multiple NLP scenarios with industry practice examples to meet developers' flexible customization needs.

Tutorial

The InternLM (书生·浦语) large model training camp aims to promote the adoption of large models in more industries and to give developers a more efficient platform for learning large-model development and application. Within two weeks, you will learn the entire process of fine-tuning, deploying, and evaluating large models.

LLM-Finetune-Guide

This project provides a comprehensive guide to fine-tuning large language models (LLMs) with efficient methods like LoRA and P-tuning V2. It includes detailed instructions, code examples, and performance benchmarks for various LLMs and fine-tuning techniques. The guide also covers data preparation, evaluation, prediction, and running inference on CPU environments. By leveraging this guide, users can effectively fine-tune LLMs for specific tasks and applications.

For similar jobs

LLM-FineTuning-Large-Language-Models

This repository contains projects and notes on common practical techniques for fine-tuning Large Language Models (LLMs). It includes fine-tuning LLM notebooks, Colab links, LLM techniques and utils, and other smaller language models. The repository also provides links to YouTube videos explaining the concepts and techniques discussed in the notebooks.

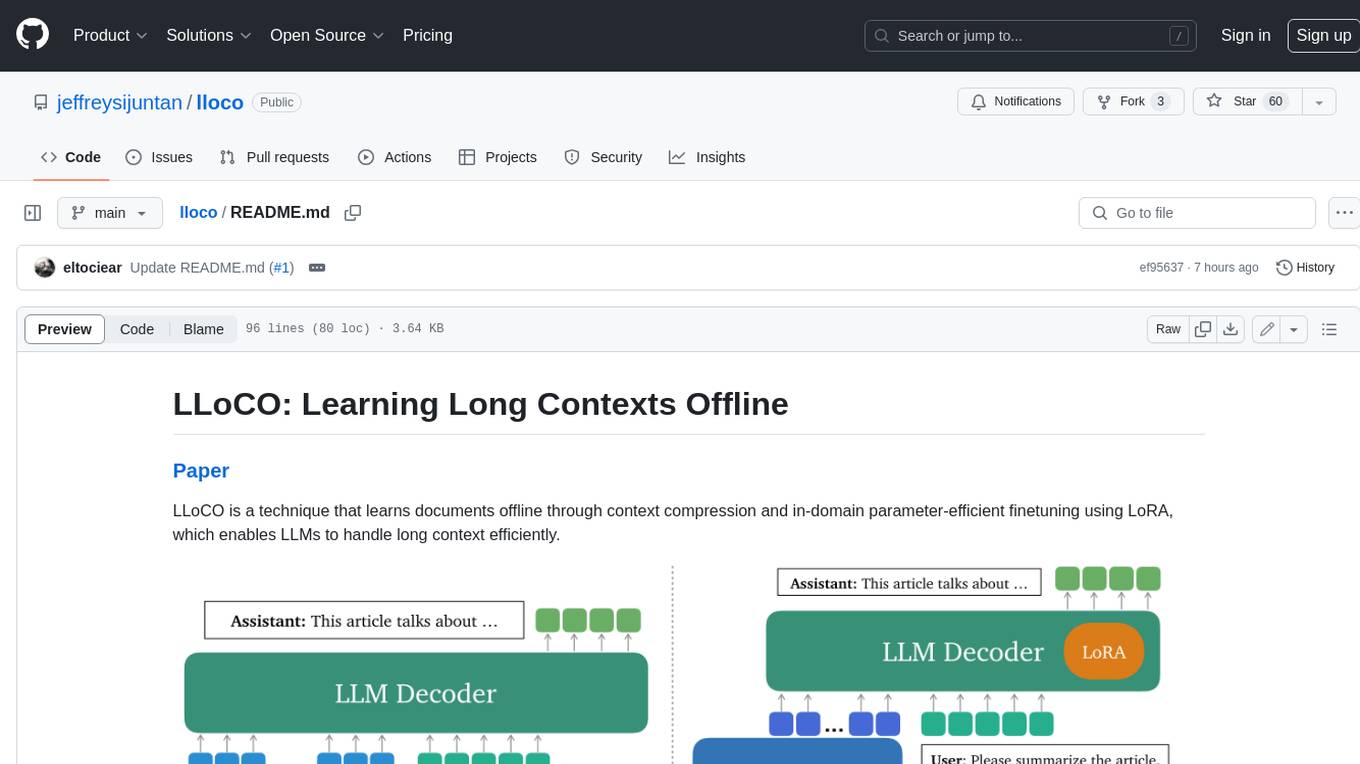

lloco

LLoCO is a technique that learns documents offline through context compression and in-domain parameter-efficient finetuning using LoRA, which enables LLMs to handle long context efficiently.

camel

CAMEL is an open-source library designed for the study of autonomous and communicative agents. We believe that studying these agents on a large scale offers valuable insights into their behaviors, capabilities, and potential risks. To facilitate research in this field, we implement and support various types of agents, tasks, prompts, models, and simulated environments.

llm-baselines

LLM-baselines is a modular codebase to experiment with transformers, inspired from NanoGPT. It provides a quick and easy way to train and evaluate transformer models on a variety of datasets. The codebase is well-documented and easy to use, making it a great resource for researchers and practitioners alike.

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

EvalAI

EvalAI is an open-source platform for evaluating and comparing machine learning (ML) and artificial intelligence (AI) algorithms at scale. It provides a central leaderboard and submission interface, making it easier for researchers to reproduce results mentioned in papers and perform reliable & accurate quantitative analysis. EvalAI also offers features such as custom evaluation protocols and phases, remote evaluation, evaluation inside environments, CLI support, portability, and faster evaluation.

Weekly-Top-LLM-Papers

This repository provides a curated list of weekly published Large Language Model (LLM) papers. It includes top important LLM papers for each week, organized by month and year. The papers are categorized into different time periods, making it easy to find the most recent and relevant research in the field of LLM.
