qserve

QServe: W4A8KV4 Quantization and System Co-design for Efficient LLM Serving

Stars: 383

QServe is a serving system for efficient and accurate Large Language Model (LLM) inference on GPUs with W4A8KV4 quantization. It achieves higher throughput than leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weights, 8-bit activations, and 4-bit KV cache, addressing runtime dequantization overhead. QServe improves serving throughput for various LLMs through compute-aware weight reordering, register-level parallelism, and memory-bound fused attention.

README:

[Paper] [LMQuant Quantization Algorithm Library] [Website]

QServe: Efficient and accurate LLM serving system on GPUs with W4A8KV4 quantization (4-bit weights, 8-bit activations, and 4-bit KV cache). Compared with the leading industry solution, TensorRT-LLM, QServe achieves 1.2x-1.4x higher throughput when serving Llama-3-8B, and 2.4x-3.5x higher throughput when serving Qwen1.5-72B, on L40S and A100 GPUs. QServe also allows users to achieve A100-level throughput on 3x cheaper L40S GPUs.

Quantization can accelerate large language model (LLM) inference. Going beyond INT8 quantization, the research community is actively exploring even lower precision, such as INT4. Nonetheless, state-of-the-art INT4 quantization techniques only accelerate low-batch, edge LLM inference, failing to deliver performance gains in large-batch, cloud-based LLM serving. We uncover a critical issue: existing INT4 quantization methods suffer from significant runtime overhead (20-90%) when dequantizing either weights or partial sums on GPUs. To address this challenge, we introduce QoQ, a W4A8KV4 quantization algorithm with 4-bit weights, 8-bit activations, and 4-bit KV cache. QoQ stands for quattuor-octo-quattuor, which represents 4-8-4 in Latin. QoQ is implemented by the QServe inference library, which achieves measured speedups. The key insight driving QServe is that the efficiency of LLM serving on GPUs is critically influenced by operations on low-throughput CUDA cores. Building on this insight, the QoQ algorithm introduces progressive quantization, which keeps dequantization overhead low in W4A8 GEMM. Additionally, we develop SmoothAttention to effectively mitigate the accuracy degradation incurred by 4-bit KV quantization. In the QServe system, we perform compute-aware weight reordering and take advantage of register-level parallelism to reduce dequantization latency. We also make fused attention memory-bound, harnessing the performance gain brought by KV4 quantization. As a result, compared to TensorRT-LLM, QServe improves the maximum achievable serving throughput of Llama-3-8B by 1.2× on A100 and 1.4× on L40S, and of Qwen1.5-72B by 2.4× on A100 and 3.5× on L40S. Remarkably, QServe on L40S GPUs can achieve even higher throughput than TensorRT-LLM on A100. Thus, QServe effectively reduces the dollar cost of LLM serving by 3×.
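The two-level idea behind progressive quantization can be illustrated with a small NumPy sketch. This is our own simplification, only roughly modeled on the description of QoQ and not the implementation in LMQuant: weights are first quantized to INT8 with a per-output-channel floating-point scale, and those INT8 values are then quantized to unsigned 4-bit codes with a per-group integer scale and zero point, so recovering INT8 values from the 4-bit codes needs only integer subtract and multiply. The function names and the group size of 128 are illustrative, and unlike the real algorithm this sketch does not guarantee that the reconstructed values stay inside the INT8 range.

```python
import numpy as np


def progressive_quantize(w: np.ndarray, group_size: int = 128):
    """Simplified two-level quantization: FP -> INT8 (per channel) -> UINT4 (per group).

    Illustrative sketch only; not the LMQuant/QoQ implementation.
    """
    out_ch, in_ch = w.shape
    if in_ch % group_size != 0:
        raise ValueError("in_ch must be divisible by group_size")

    # Level 1: symmetric per-output-channel INT8 with a floating-point scale.
    s1 = np.abs(w).max(axis=1, keepdims=True) / 127.0
    s1 = np.where(s1 == 0, 1.0, s1)  # avoid division by zero for all-zero rows
    q8 = np.clip(np.round(w / s1), -127, 127).astype(np.int32)

    # Level 2: asymmetric per-group UINT4 on the INT8 values,
    # with an integer scale s2 and an integer zero point z per group.
    g = q8.reshape(out_ch, in_ch // group_size, group_size)
    gmin = g.min(axis=2, keepdims=True)
    gmax = g.max(axis=2, keepdims=True)
    s2 = np.maximum(1, np.ceil((gmax - gmin) / 15.0)).astype(np.int32)
    z = np.round(-gmin / s2).astype(np.int32)
    q4 = np.clip(np.round(g / s2) + z, 0, 15).astype(np.uint8)
    return q4, s2, z, s1


def progressive_dequantize(q4, s2, z, s1, shape):
    # UINT4 -> INT8 step: integer subtract and multiply only.
    q8_hat = (q4.astype(np.int32) - z) * s2
    # The floating-point per-channel scale is applied once at the end.
    return q8_hat.reshape(shape) * s1


rng = np.random.default_rng(0)
w = rng.standard_normal((4, 256)).astype(np.float32)
q4, s2, z, s1 = progressive_quantize(w)
w_hat = progressive_dequantize(q4, s2, z, s1, w.shape)
print("max abs error:", float(np.abs(w - w_hat).max()))
```

The point of keeping the second-level scale and zero point integral is that the per-group work stays in integer arithmetic, while the single floating-point scale per output channel can be applied after the INT8 GEMM.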

The current release supports:

- Blazingly fast system support for QoQ W4A8KV4 quantization (algorithm release: LMQuant);

- Pre-quantized QServe model zoo with W4A8KV4 QoQ for mainstream LLMs;

- Fully PyTorch-based runtime and user interface for LLM serving, with TensorRT-LLM-level efficiency and PyTorch-level flexibility;

- Full support for in-flight batching and paged attention;

- Efficient fused CUDA kernels for W4A8/W8A8 GEMM and KV4/KV8 attention;

- Easy-to-use examples on speed benchmarking and large-scale end-to-end content generation (with W4A8KV4, in-flight batching and paged attention).

- [2024/07] QServe W4A8 GEMM kernels source code now available!

- [2024/05] 🔥 QServe is publicly released! Check our paper here.

- Clone this repository and navigate to the corresponding folder:

```bash
git clone https://github.com/mit-han-lab/qserve
cd qserve
```

- Install QServe:

```bash
conda create -n QServe python=3.10 -y
conda activate QServe
pip install --upgrade pip  # enable PEP 660 support
pip install -e .
pip install flash-attn --no-build-isolation
```

We recommend starting an interactive Python CLI and running `import flash_attn` to check whether FlashAttention-2 is installed successfully. If it is not, we recommend downloading pre-built wheels from here. Please note:

- The PyTorch version must exactly match the version specified in the `.whl` name;
- Try both the `cxx11abiTRUE` and `cxx11abiFALSE` wheels if one of them does not work;
- It is recommended to match the CUDA version specified in the `.whl` filename, but minor mismatches (e.g., 12.1 vs 12.2, or even 11.8 vs 12.2) usually do not matter.
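The checks above can be scripted. This is a hypothetical helper, not part of QServe or FlashAttention: it assumes the pre-built wheel names follow the pattern `flash_attn-<version>+cu<cuda>torch<major.minor>cxx11abi<TRUE|FALSE>-<python tag>-...whl` (verify against the actual release assets before relying on it) and reports only the two mismatches the notes treat as fatal, since CUDA mismatches usually do not matter.

```python
import re
from typing import List, Optional

# Assumed wheel naming pattern, e.g.
# flash_attn-2.5.8+cu122torch2.3cxx11abiFALSE-cp310-cp310-linux_x86_64.whl
WHEEL_RE = re.compile(
    r"^flash_attn-(?P<version>[\d.]+)\+cu(?P<cuda>\d+)"
    r"torch(?P<torch>\d+\.\d+)cxx11abi(?P<abi>TRUE|FALSE)"
    r"-(?P<py>cp\d+)-"
)


def parse_wheel_name(name: str) -> Optional[dict]:
    """Return the fields encoded in a FlashAttention wheel filename, or None."""
    m = WHEEL_RE.match(name)
    if m is None:
        return None
    info = m.groupdict()
    info["abi"] = info["abi"] == "TRUE"
    return info


def wheel_problems(info: dict, torch_version: str, cxx11_abi: bool) -> List[str]:
    """List mismatches between a parsed wheel and the local PyTorch build."""
    problems = []
    # Compare PyTorch major.minor; strip local suffixes such as "+cu121".
    local_torch = ".".join(torch_version.split("+")[0].split(".")[:2])
    if local_torch != info["torch"]:
        problems.append(f"torch {local_torch} != wheel torch {info['torch']}")
    if cxx11_abi != info["abi"]:
        problems.append("cxx11abi mismatch: try the other wheel variant")
    # CUDA version differences are deliberately not reported.
    return problems
```

With PyTorch installed, the local values would typically come from `torch.__version__` and `torch._C._GLIBCXX_USE_CXX11_ABI`; the latter is a private attribute, so treat its availability as an assumption.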

- Compile the CUDA kernels:

```bash
cd kernels
python setup.py install
cd ..  # return to the QServe project folder
```

- If you want to clone our model zoo, please make sure that `git-lfs` is installed.

We provide pre-quantized checkpoints for multiple model families. For example, for the Llama-3-8B model, please run the following commands to download:

```bash
# git lfs install  # install git lfs if not already installed
mkdir -p qserve_checkpoints && cd qserve_checkpoints
git clone https://huggingface.co/mit-han-lab/Llama-3-8B-QServe
```

For other models, please refer to the detailed support list for the download links:

| Models | W4A8-per-channel | W4A8-g128 |
|---|---|---|
| Llama3 | ✅ 8B/70B | ✅ 8B/70B |
| Llama3-Instruct | ✅ 8B/70B | ✅ 8B/70B |
| Llama2 | ✅ 7B/13B/70B | ✅ 7B/13B/70B |
| Vicuna | ✅ 7B/13B/30B | ✅ 7B/13B/30B |
| Mistral | ✅ 7B | ✅ 7B |
| Yi | ✅ 34B | ✅ 34B |
| Qwen | ✅ 72B | ✅ 72B |

For flagship datacenter GPUs such as the A100, we recommend QServe-per-channel; for inference datacenter GPUs such as the L40S, we recommend QServe-per-group (g128).
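The recommendation above, combined with the rule that `--group-size` must be -1 or 128 and must match the checkpoint, fits in a tiny helper. This is a hypothetical sketch; matching on substrings of the GPU name is an assumption, and it only covers the two GPUs discussed here.

```python
def recommended_group_size(gpu_name: str) -> int:
    """Return the --group-size suggested in this README for a GPU name.

    -1 selects per-channel weights (W4A8-per-channel checkpoints),
    128 selects per-group weights (W4A8-g128 checkpoints).
    """
    name = gpu_name.upper()
    if "A100" in name:
        return -1   # flagship datacenter GPU: per-channel
    if "L40S" in name:
        return 128  # inference datacenter GPU: per-group (g128)
    raise ValueError(f"no recommendation in the README for {gpu_name!r}")


def check_group_size(group_size: int, checkpoint_is_g128: bool) -> None:
    """Raise if the group size is unsupported or does not match the checkpoint."""
    if group_size not in (-1, 128):
        raise ValueError("QServe only supports --group-size -1 or 128")
    if (group_size == 128) != checkpoint_is_g128:
        raise ValueError("--group-size does not match the checkpoint")
```

On a machine with PyTorch, the GPU name could come from `torch.cuda.get_device_name(0)`, which returns strings such as `NVIDIA A100-SXM4-80GB`.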

If you are interested in generating the quantized checkpoints yourself, please follow the instructions in LMQuant to run QoQ quantization and dump the fake-quantized models. We then provide a checkpoint converter to real-quantize and pack the model into the QServe format:

```bash
python checkpoint_converter.py --model-path <hf-model-path> --quant-path <fake-quant-model-path> --group-size -1 --device cpu
# <fake-quant-model-path> is a directory generated by LMQuant, including model.pt and scale.pt
```

We also provide a script to run the checkpoint converter. The final model will be automatically stored under `qserve_checkpoints`.
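If you prefer to drive the converter from Python, a thin wrapper can first check that the LMQuant output directory contains `model.pt` and `scale.pt`, then assemble the same command line. This is a hypothetical sketch that uses only the flags shown above; it assumes the current directory contains `checkpoint_converter.py` and only launches the converter when `run=True`.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_converter_cmd(model_path: str, quant_path: str,
                        group_size: int = -1, device: str = "cpu") -> List[str]:
    """Validate the LMQuant output folder and return the converter command."""
    missing = [f for f in ("model.pt", "scale.pt") if not (Path(quant_path) / f).is_file()]
    if missing:
        raise FileNotFoundError(f"{quant_path} is missing {', '.join(missing)}")
    if group_size not in (-1, 128):
        raise ValueError("QServe only supports group size -1 or 128")
    return [sys.executable, "checkpoint_converter.py",
            "--model-path", model_path,
            "--quant-path", quant_path,
            "--group-size", str(group_size),
            "--device", device]


def convert(model_path: str, quant_path: str, group_size: int = -1,
            device: str = "cpu", run: bool = False) -> List[str]:
    cmd = build_converter_cmd(model_path, quant_path, group_size, device)
    if run:
        # Assumes checkpoint_converter.py is in the current working directory.
        subprocess.run(cmd, check=True)
    return cmd
```

Failing early on a missing `scale.pt` is cheaper than discovering it partway through a conversion on CPU.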

We support both offline benchmarking and online generation (in-flight-batching) in QServe.

- Offline speed benchmarking (batched input sequences, fixed context length = 1024 and generation length = 512). We take Llama-3-8B (per-channel quantization) as an example here. Please make sure that you have already downloaded the QoQ-quantized QServe model.

```bash
export MODEL_PATH=./qserve_checkpoints/Llama-3-8B-QServe # Please set the path accordingly
GLOBAL_BATCH_SIZE=128 \
python qserve_benchmark.py \
  --model $MODEL_PATH \
  --benchmarking \
  --precision w4a8kv4 \
  --group-size -1
```

If you want to use larger batch sizes such as 256, you may need to set NUM_GPU_PAGE_BLOCKS to a value larger than the automatically determined one on A100. For example:

```bash
export MODEL_PATH=./qserve_checkpoints/Llama-3-8B-QServe # Please set the path accordingly
GLOBAL_BATCH_SIZE=256 \
NUM_GPU_PAGE_BLOCKS=6400 \
python qserve_benchmark.py \
  --model $MODEL_PATH \
  --benchmarking \
  --precision w4a8kv4 \
  --group-size -1
```

- This is an online demonstration of batched generation, showcasing in-flight batching and paged attention with W4A8KV4 QoQ LLMs. We randomly sample a set of safety-moderated conversations from the WildChat dataset and process them efficiently through in-flight batching.

```bash
export MODEL_PATH=./qserve_checkpoints/Llama-3-8B-Instruct-QServe # Please set the path accordingly
python qserve_e2e_generation.py \
  --model $MODEL_PATH \
  --ifb-mode \
  --precision w4a8kv4 \
  --quant-path $MODEL_PATH \
  --group-size -1
```

Argument list in QServe

Below are some frequently used arguments in the QServe interface:

- `--model`: Path to the folder containing the HF model configs. Can be the same as `--quant-path` if you download the models directly from the QServe model zoo.
- `--quant-path`: Path to the folder containing the quantized LLM checkpoints.
- `--precision`: The precision for GEMM in QServe. Choose from: `w4a8kv4`, `w4a8kv8`, `w4a8` (means `w4a8kv8`), `w8a8kv4`, `w8a8kv8`, `w8a8` (means `w8a8kv8`). Default: `w4a8kv4`.
- `--group-size`: Group size for weight quantization; -1 means per-channel quantization. QServe only supports -1 or 128. Please make sure your group size matches the checkpoint.
- `--max-num-batched-tokens`: Maximum number of batched tokens per iteration. Default: 262144.
- `--max-num-seqs`: Maximum number of sequences per iteration. Default: 256. Remember to increase it if you want larger batch sizes.
- `--ifb-mode`: Enable in-flight batching mode. Recommended for end-to-end generation.
- `--benchmarking`: Enable speed profiling mode. Benchmark settings are aligned with TensorRT-LLM.

Environment variables in QServe:

- `GLOBAL_BATCH_SIZE`: Batch size used in offline speed benchmarking.
- `NUM_GPU_PAGE_BLOCKS`: Number of pages to allocate on the GPU. If not specified, it is determined automatically based on available GPU memory. Note that the current automatic page allocation algorithm is very conservative; it is recommended to set this value manually to a larger number if you observe relatively low GPU memory utilization.
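The environment variables and arguments above can also be assembled from Python, for example to sweep several batch sizes. The sketch below is a hypothetical launcher, not part of QServe: it reproduces the offline benchmarking command shown earlier, validates `--precision` and `--group-size` against the documented values, and raises `--max-num-seqs` for batches above its default of 256, following the note on that argument.

```python
import os
import subprocess
from typing import Dict, List, Optional, Tuple

# Values accepted by --precision, as listed above.
VALID_PRECISIONS = {"w4a8kv4", "w4a8kv8", "w4a8", "w8a8kv4", "w8a8kv8", "w8a8"}


def benchmark_invocation(model_path: str, batch_size: int, group_size: int = -1,
                         precision: str = "w4a8kv4",
                         page_blocks: Optional[int] = None) -> Tuple[Dict[str, str], List[str]]:
    """Build (env, argv) for one offline speed-benchmark run of qserve_benchmark.py."""
    if precision not in VALID_PRECISIONS:
        raise ValueError(f"unsupported precision {precision!r}")
    if group_size not in (-1, 128):
        raise ValueError("QServe only supports --group-size -1 or 128")
    env = dict(os.environ)
    env["GLOBAL_BATCH_SIZE"] = str(batch_size)
    if page_blocks is not None:
        # Otherwise QServe determines a (conservative) value automatically.
        env["NUM_GPU_PAGE_BLOCKS"] = str(page_blocks)
    argv = ["python", "qserve_benchmark.py",
            "--model", model_path,
            "--benchmarking",
            "--precision", precision,
            "--group-size", str(group_size)]
    if batch_size > 256:
        # --max-num-seqs defaults to 256; raise it for larger batches.
        argv += ["--max-num-seqs", str(batch_size)]
    return env, argv


def run_benchmark(env: Dict[str, str], argv: List[str]) -> None:
    # Expected to be called from the QServe project folder.
    subprocess.run(argv, env=env, check=True)


env, argv = benchmark_invocation("./qserve_checkpoints/Llama-3-8B-QServe", 256, page_blocks=6400)
print(env["GLOBAL_BATCH_SIZE"], env["NUM_GPU_PAGE_BLOCKS"], " ".join(argv))
```

Calling `run_benchmark(env, argv)` would launch the run; the batch size 256 with 6400 pages mirrors the A100 example above.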

- One-line scripts:

We also provide sample scripts in QServe.

- End-to-end generation: `./scripts/run_e2e.sh`;
- Speed benchmarking: `./scripts/benchmark/benchmark_a100.sh` or `./scripts/benchmark/benchmark_l40s.sh`.

These scripts are expected to be executed in the QServe project folder (not in the scripts folder). Please note that git-lfs is needed to download the QServe benchmark config files from Hugging Face before running the benchmark scripts.

We evaluate QServe W4A8KV4 quantization on a wide range of mainstream LLMs. QServe consistently outperforms existing W4A4 and W4A8 solutions in accuracy, while providing state-of-the-art LLM serving efficiency.

When serving Llama-3-8B and Qwen1.5-72B on L40S and A100 GPUs, QServe achieves 1.2x-1.4x higher throughput than the leading industry solution, TensorRT-LLM, for Llama-3-8B, and 2.4x-3.5x higher throughput for Qwen1.5-72B. For six of the eight benchmarked models, QServe on L40S also delivers higher throughput than TensorRT-LLM on A100 while accommodating the same batch size, reducing the dollar cost of LLM serving by around 3x.

Benchmarking setting: the criterion is the maximum achievable throughput on NVIDIA GPUs; the input context length is 1024 tokens and the output generation length is 512 tokens. For all systems that support paged attention, we enable this feature. In-flight batching is turned off in the efficiency benchmarks.
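A back-of-the-envelope estimate of KV cache size per sequence shows why 4-bit KV matters in this setting. The sketch assumes a Llama-3-8B-style configuration (32 layers, 8 key/value heads, head dimension 128) and ignores quantization scales, zero points, and paging overhead, so real usage is somewhat higher.

```python
def kv_cache_bytes(tokens: int, layers: int, kv_heads: int, head_dim: int, bits: int) -> int:
    """Bytes needed to store keys and values for `tokens` tokens of one sequence."""
    elements = 2 * layers * kv_heads * head_dim * tokens  # 2 = keys + values
    return elements * bits // 8


# Benchmark setting: 1024 input tokens + 512 generated tokens.
TOKENS = 1024 + 512
LLAMA3_8B = dict(layers=32, kv_heads=8, head_dim=128)  # assumed model config

for name, bits in [("FP16", 16), ("KV8", 8), ("KV4", 4)]:
    mib = kv_cache_bytes(TOKENS, bits=bits, **LLAMA3_8B) / 2**20
    print(f"{name}: {mib:.0f} MiB per sequence")
# FP16: 192 MiB, KV8: 96 MiB, KV4: 48 MiB per sequence
```

At batch size 128, that is roughly 24 GiB of KV cache in FP16 versus roughly 6 GiB with KV4, before counting weights and activations.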

| L40S (48GB) | Llama-3-8B | Llama-2-7B | Mistral-7B | Llama-2-13B | Llama-30B | Yi-34B | Llama-2-70B | Qwen-1.5-72B |
|---|---|---|---|---|---|---|---|---|
| TRT-LLM-FP16 | 1326 | 444 | 1566 | 92 | OOM | OOM | OOM | OOM |
| TRT-LLM-W4A16 | 1431 | 681 | 1457 | 368 | 148 | 313 | 119 | 17 |
| TRT-LLM-W8A8 | 2634 | 1271 | 2569 | 440 | 123 | 364 | OOM | OOM |
| Atom-W4A4 | -- | 2120 | -- | -- | -- | -- | -- | -- |
| QuaRot-W4A4 | -- | 805 | -- | 413 | 133 | -- | -- | 15 |
| QServe-W4A8KV4 | 3656 | 2394 | 3774 | 1327 | 504 | 869 | 286 | 59 |
| Throughput Increase* | 1.39x | 1.13x | 1.47x | 3.02x | 3.41x | 2.39x | 2.40x | 3.47x |

| A100 (80GB) | Llama-3-8B | Llama-2-7B | Mistral-7B | Llama-2-13B | Llama-30B | Yi-34B | Llama-2-70B | Qwen-1.5-72B |
|---|---|---|---|---|---|---|---|---|
| TRT-LLM-FP16 | 2503 | 1549 | 2371 | 488 | 80 | 145 | OOM | OOM |
| TRT-LLM-W4A16 | 2370 | 1549 | 2403 | 871 | 352 | 569 | 358 | 143 |
| TRT-LLM-W8A8 | 2396 | 2334 | 2427 | 1277 | 361 | 649 | 235 | 53 |
| Atom-W4A4 | -- | 1160 | -- | -- | -- | -- | -- | -- |
| QuaRot-W4A4 | -- | 1370 | -- | 289 | 267 | -- | -- | 68 |
| QServe-W4A8KV4 | 3005 | 2908 | 2970 | 1741 | 749 | 803 | 419 | 340 |
| Throughput Increase* | 1.20x | 1.25x | 1.22x | 1.36x | 2.07x | 1.23x | 1.17x | 2.38x |

The absolute token generation throughputs of QServe and baseline systems (unit: tokens/second; -- means unsupported). All experiments were conducted under the same device memory budget. *The throughput increase of QServe is calculated with respect to the best baseline in each column. We recommend QServe-per-channel on high-end datacenter GPUs like the A100 and QServe-per-group on inference GPUs like the L40S.
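The "Throughput Increase" row can be reproduced from the tables: take the best supported baseline in each column (ignoring `--` and OOM entries) and divide QServe's throughput by it. A minimal sketch using the L40S numbers for three of the columns:

```python
from typing import Dict, Optional

# L40S throughputs (tokens/s) copied from the table; None = unsupported ("--") or OOM.
L40S = {
    "Llama-3-8B": {"TRT-LLM-FP16": 1326, "TRT-LLM-W4A16": 1431, "TRT-LLM-W8A8": 2634,
                   "Atom-W4A4": None, "QuaRot-W4A4": None, "QServe-W4A8KV4": 3656},
    "Llama-2-7B": {"TRT-LLM-FP16": 444, "TRT-LLM-W4A16": 681, "TRT-LLM-W8A8": 1271,
                   "Atom-W4A4": 2120, "QuaRot-W4A4": 805, "QServe-W4A8KV4": 2394},
    "Qwen-1.5-72B": {"TRT-LLM-FP16": None, "TRT-LLM-W4A16": 17, "TRT-LLM-W8A8": None,
                     "Atom-W4A4": None, "QuaRot-W4A4": 15, "QServe-W4A8KV4": 59},
}


def throughput_increase(column: Dict[str, Optional[int]], ours: str = "QServe-W4A8KV4") -> float:
    """Speedup of `ours` over the best supported baseline in one table column."""
    baselines = [v for k, v in column.items() if k != ours and v is not None]
    return column[ours] / max(baselines)


for model, column in L40S.items():
    print(f"{model}: {throughput_increase(column):.2f}x")
# Llama-3-8B: 1.39x, Llama-2-7B: 1.13x, Qwen-1.5-72B: 3.47x
```

Note that the best baseline differs by column (TRT-LLM-W8A8, Atom-W4A4, and TRT-LLM-W4A16 here), which is why the speedups vary so much across models.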

Max throughput batch sizes used by QServe:

| Device | Llama-3-8B | Llama-2-7B | Mistral-7B | Llama-2-13B | Llama-30B | Yi-34B | Llama-2-70B | Qwen-1.5-72B |
|---|---|---|---|---|---|---|---|---|
| L40S | 128 | 128 | 128 | 75 | 32 | 64 | 24 | 4 |
| A100 | 256 | 190 | 256 | 128 | 64 | 196 | 96 | 32 |

We recommend directly setting the NUM_GPU_PAGE_BLOCKS environment variable to 25 * batch size. In our benchmarking setting, the context length is 1024 and the generation length is 512, which corresponds to 24 pages (each page contains 64 tokens); we leave some buffer by allocating one extra page per sequence.
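The rule of thumb follows from ceil((1024 + 512) / 64) = 24 pages per sequence plus one spare page. A small helper, hypothetical and not part of QServe, generalizes it to other context and generation lengths under the same 64-token page size:

```python
import math


def num_gpu_page_blocks(batch_size: int, context_len: int = 1024,
                        gen_len: int = 512, page_size: int = 64,
                        spare_pages: int = 1) -> int:
    """Pages to request via NUM_GPU_PAGE_BLOCKS for a fixed-length benchmark."""
    pages_per_seq = math.ceil((context_len + gen_len) / page_size) + spare_pages
    return batch_size * pages_per_seq


print(num_gpu_page_blocks(256))  # 6400, the value used in the A100 example above
```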

QServe also maintains high accuracy thanks to the QoQ algorithm provided in our LMQuant quantization library.

Below is the WikiText2 perplexity evaluated with a sequence length of 2048. Lower is better.

| Method | Precision | Llama-3 8B | Llama-2 7B | Llama-2 13B | Llama-2 70B | Llama 7B | Llama 13B | Llama 30B | Mistral 7B | Yi 34B |
|---|---|---|---|---|---|---|---|---|---|---|
| FP16 | | 6.14 | 5.47 | 4.88 | 3.32 | 5.68 | 5.09 | 4.10 | 5.25 | 4.60 |
| SmoothQuant | W8A8 | 6.28 | 5.54 | 4.95 | 3.36 | 5.73 | 5.13 | 4.23 | 5.29 | 4.69 |
| GPTQ-R | W4A16 g128 | 6.56 | 5.63 | 4.99 | 3.43 | 5.83 | 5.20 | 4.22 | 5.39 | 4.68 |
| AWQ | W4A16 g128 | 6.54 | 5.60 | 4.97 | 3.41 | 5.78 | 5.19 | 4.21 | 5.37 | 4.67 |
| QuaRot | W4A4 | 8.33 | 6.19 | 5.45 | 3.83 | 6.34 | 5.58 | 4.64 | 5.77 | NaN |
| Atom | W4A4 g128 | 7.76 | 6.12 | 5.31 | 3.73 | 6.25 | 5.52 | 4.61 | 5.76 | 4.97 |
| QoQ | W4A8KV4 | 6.89 | 5.75 | 5.12 | 3.52 | 5.93 | 5.28 | 4.34 | 5.45 | 4.74 |
| QoQ | W4A8KV4 g128 | 6.76 | 5.70 | 5.08 | 3.47 | 5.89 | 5.25 | 4.28 | 5.42 | 4.76 |

* SmoothQuant is evaluated with per-tensor static KV cache quantization.
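For reference, perplexity is the exponential of the average per-token negative log-likelihood. The sketch below computes it from token log-probabilities split into non-overlapping 2048-token windows; how the LMQuant evaluation tokenizes WikiText2 and handles a trailing partial window is not described here, so dropping that window is an assumption.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float], seq_len: int = 2048) -> float:
    """exp(mean NLL) over non-overlapping windows of `seq_len` tokens.

    A trailing partial window is dropped (assumption).
    """
    n_windows = len(token_logprobs) // seq_len
    if n_windows == 0:
        raise ValueError("need at least one full window")
    used = token_logprobs[: n_windows * seq_len]
    nll = -sum(used) / len(used)
    return math.exp(nll)


# A model that is uniform over a 32000-token vocabulary has perplexity 32000.
uniform = [-math.log(32000)] * 4096
print(round(perplexity(uniform)))  # 32000
```

Because every window has the same length, averaging per-window NLLs and averaging over all kept tokens give the same result.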

If you find QServe useful or relevant to your research and work, please kindly cite our paper:

```bibtex
@article{lin2024qserve,
  title={QServe: W4A8KV4 Quantization and System Co-design for Efficient LLM Serving},
  author={Lin*, Yujun and Tang*, Haotian and Yang*, Shang and Zhang, Zhekai and Xiao, Guangxuan and Gan, Chuang and Han, Song},
  journal={arXiv preprint arXiv:2405.04532},
  year={2024}
}
```

The QServe serving library is maintained by the following research team:

- Haotian Tang, system lead, MIT EECS;

- Shang Yang, system lead, MIT EECS;

- Yujun Lin, algorithm lead, MIT EECS;

- Zhekai Zhang, system evaluation, MIT EECS;

- Guangxuan Xiao, algorithm evaluation, MIT EECS;

- Chuang Gan, advisor, UMass Amherst and MIT-IBM Watson AI Lab;

- Song Han, advisor, MIT EECS and NVIDIA.

The following projects are highly related to QServe. Our group has developed full-stack application-algorithm-system-hardware support for efficient large models, receiving 9k+ GitHub stars and over 1M Hugging Face community downloads.

You are also welcome to check out MIT HAN LAB for other exciting projects on Efficient Generative AI!

- [Algorithm] LMQuant: Large Foundation Models Quantization
- [Algorithm] AWQ: Activation-aware Weight Quantization for LLM Compression and Acceleration
- [System] TinyChat: Efficient and Lightweight Chatbot with AWQ
- [Application] VILA: On Pretraining of Visual-Language Models
- [Algorithm] SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models
- [Algorithm] StreamingLLM: Efficient Streaming Language Models with Attention Sinks
- [Hardware] SpAtten: Efficient Sparse Attention Architecture with Cascade Token and Head Pruning

We thank Julien Demouth, Jun Yang, and Dongxu Yang from NVIDIA for the helpful discussions. QServe is also inspired by many open-source libraries, including (but not limited to) TensorRT-LLM, vLLM, vLLM-SmoothQuant, FlashAttention-2, LMDeploy, TorchSparse++, GPTQ, QuaRot and Atom.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for qserve

Similar Open Source Tools

qserve

QServe is a serving system designed for efficient and accurate Large Language Models (LLM) on GPUs with W4A8KV4 quantization. It achieves higher throughput compared to leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weight, 8-bit activation, and 4-bit KV cache, addressing runtime overhead challenges. QServe improves serving throughput for various LLM models by implementing compute-aware weight reordering, register-level parallelism, and fused attention memory-bound techniques.

UniCoT

Uni-CoT is a unified reasoning framework that extends Chain-of-Thought (CoT) principles to the multimodal domain, enabling Multimodal Large Language Models (MLLMs) to perform interpretable, step-by-step reasoning across both text and vision. It decomposes complex multimodal tasks into structured, manageable steps that can be executed sequentially or in parallel, allowing for more scalable and systematic reasoning.

IDvs.MoRec

This repository contains the source code for the SIGIR 2023 paper 'Where to Go Next for Recommender Systems? ID- vs. Modality-based Recommender Models Revisited'. It provides resources for evaluating foundation, transferable, multi-modal, and LLM recommendation models, along with datasets, pre-trained models, and training strategies for IDRec and MoRec using in-batch debiased cross-entropy loss. The repository also offers large-scale datasets, code for SASRec with in-batch debias cross-entropy loss, and information on joining the lab for research opportunities.

BitBLAS

BitBLAS is a library for mixed-precision BLAS operations on GPUs, for example, the $W_{wdtype}A_{adtype}$ mixed-precision matrix multiplication where $C_{cdtype}[M, N] = A_{adtype}[M, K] \times W_{wdtype}[N, K]$. BitBLAS aims to support efficient mixed-precision DNN model deployment, especially the $W_{wdtype}A_{adtype}$ quantization in large language models (LLMs), for example, the $W_{UINT4}A_{FP16}$ in GPTQ, the $W_{INT2}A_{FP16}$ in BitDistiller, the $W_{INT2}A_{INT8}$ in BitNet-b1.58. BitBLAS is based on techniques from our accepted submission at OSDI'24.

EasyEdit

EasyEdit is a Python package for edit Large Language Models (LLM) like `GPT-J`, `Llama`, `GPT-NEO`, `GPT2`, `T5`(support models from **1B** to **65B**), the objective of which is to alter the behavior of LLMs efficiently within a specific domain without negatively impacting performance across other inputs. It is designed to be easy to use and easy to extend.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features like Virtual API System, Solvable Queries, and Stable Evaluation System. The benchmark ensures consistency through a caching system and API simulators, filters queries based on solvability using LLMs, and evaluates model performance using GPT-4 with metrics like Solvable Pass Rate and Solvable Win Rate.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

MooER

MooER (摩耳) is an LLM-based speech recognition and translation model developed by Moore Threads. It allows users to transcribe speech into text (ASR) and translate speech into other languages (AST) in an end-to-end manner. The model was trained using 5K hours of data and is now also available with an 80K hours version. MooER is the first LLM-based speech model trained and inferred using domestic GPUs. The repository includes pretrained models, inference code, and a Gradio demo for a better user experience.

EVE

EVE is an official PyTorch implementation of Unveiling Encoder-Free Vision-Language Models. The project aims to explore the removal of vision encoders from Vision-Language Models (VLMs) and transfer LLMs to encoder-free VLMs efficiently. It also focuses on bridging the performance gap between encoder-free and encoder-based VLMs. EVE offers a superior capability with arbitrary image aspect ratio, data efficiency by utilizing publicly available data for pre-training, and training efficiency with a transparent and practical strategy for developing a pure decoder-only architecture across modalities.

pai-opencode

PAI-OpenCode is a complete port of Daniel Miessler's Personal AI Infrastructure (PAI) to OpenCode, an open-source, provider-agnostic AI coding assistant. It brings modular capabilities, dynamic multi-agent orchestration, session history, and lifecycle automation to personalize AI assistants for users. With support for 75+ AI providers, PAI-OpenCode offers dynamic per-task model routing, full PAI infrastructure, real-time session sharing, and multiple client options. The tool optimizes cost and quality with a 3-tier model strategy and a 3-tier research system, allowing users to switch presets for different routing strategies. PAI-OpenCode's architecture preserves PAI's design while adapting to OpenCode, documented through Architecture Decision Records (ADRs).

MiniCPM-V

MiniCPM-V is a series of end-side multimodal LLMs designed for vision-language understanding. The models take image and text inputs to provide high-quality text outputs. The series includes models like MiniCPM-Llama3-V 2.5 with 8B parameters surpassing proprietary models, and MiniCPM-V 2.0, a lighter model with 2B parameters. The models support over 30 languages, efficient deployment on end-side devices, and have strong OCR capabilities. They achieve state-of-the-art performance on various benchmarks and prevent hallucinations in text generation. The models can process high-resolution images efficiently and support multilingual capabilities.

deepfabric

DeepFabric is a CLI tool and SDK designed for researchers and developers to generate high-quality synthetic datasets at scale using large language models. It leverages a graph and tree-based architecture to create diverse and domain-specific datasets while minimizing redundancy. The tool supports generating Chain of Thought datasets for step-by-step reasoning tasks and offers multi-provider support for using different language models. DeepFabric also allows for automatic dataset upload to Hugging Face Hub and uses YAML configuration files for flexibility in dataset generation.

DeepRetrieval

DeepRetrieval is a tool designed to enhance search engines and retrievers using Large Language Models (LLMs) and Reinforcement Learning (RL). It allows LLMs to learn how to search effectively by integrating with search engine APIs and customizing reward functions. The tool provides functionalities for data preparation, training, evaluation, and monitoring search performance. DeepRetrieval aims to improve information retrieval tasks by leveraging advanced AI techniques.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

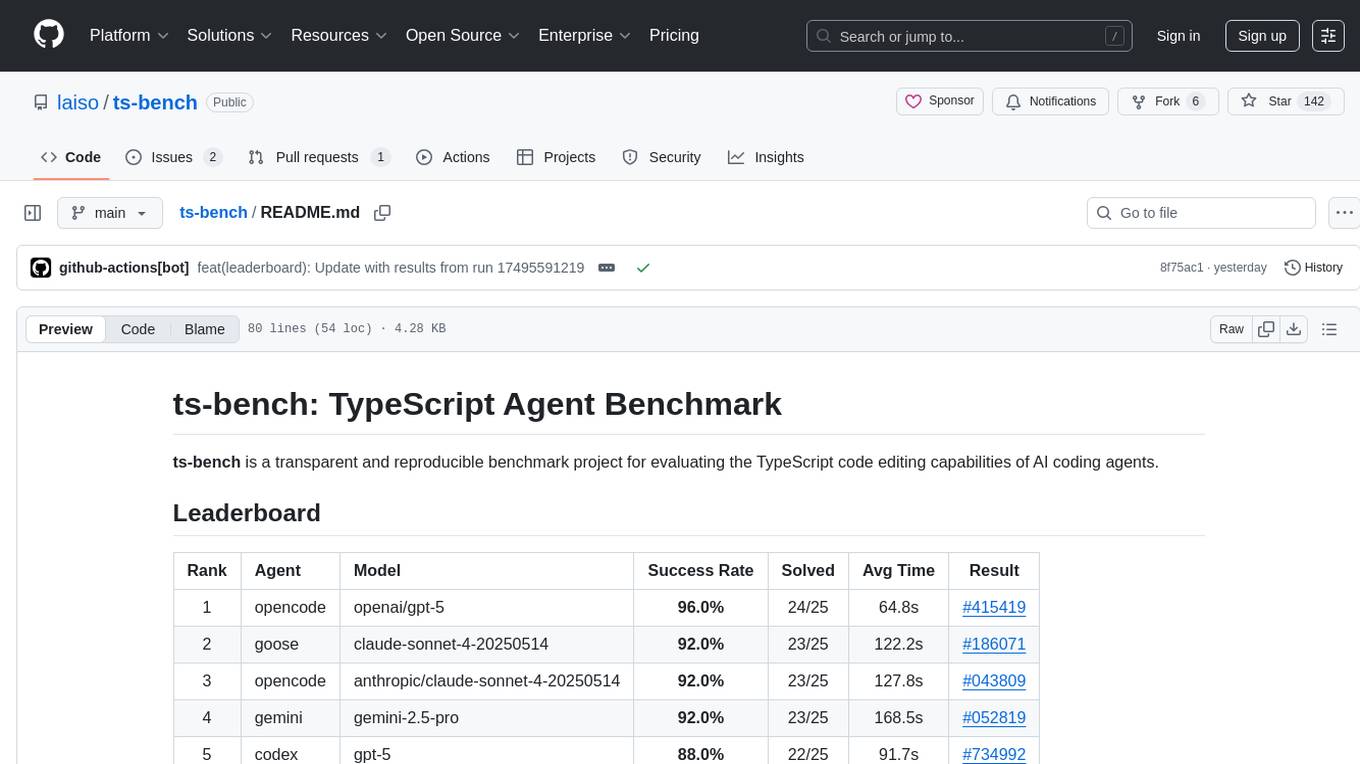

ts-bench

TS-Bench is a performance benchmarking tool for TypeScript projects. It provides detailed insights into the performance of TypeScript code, helping developers optimize their projects. With TS-Bench, users can measure and compare the execution time of different code snippets, functions, or modules. The tool offers a user-friendly interface for running benchmarks and analyzing the results. TS-Bench is a valuable asset for developers looking to enhance the performance of their TypeScript applications.

KwaiAgents

KwaiAgents is a series of Agent-related works open-sourced by the [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). The open-sourced content includes: 1. **KAgentSys-Lite**: a lite version of the KAgentSys in the paper. While retaining some of the original system's functionality, KAgentSys-Lite has certain differences and limitations when compared to its full-featured counterpart, such as: (1) a more limited set of tools; (2) a lack of memory mechanisms; (3) slightly reduced performance capabilities; and (4) a different codebase, as it evolves from open-source projects like BabyAGI and Auto-GPT. Despite these modifications, KAgentSys-Lite still delivers comparable performance among numerous open-source Agent systems available. 2. **KAgentLMs**: a series of large language models with agent capabilities such as planning, reflection, and tool-use, acquired through the Meta-agent tuning proposed in the paper. 3. **KAgentInstruct**: over 200k Agent-related instructions finetuning data (partially human-edited) proposed in the paper. 4. **KAgentBench**: over 3,000 human-edited, automated evaluation data for testing Agent capabilities, with evaluation dimensions including planning, tool-use, reflection, concluding, and profiling.

For similar tasks

qserve

QServe is a serving system designed for efficient and accurate Large Language Models (LLM) on GPUs with W4A8KV4 quantization. It achieves higher throughput compared to leading industry solutions, allowing users to achieve A100-level throughput on cheaper L40S GPUs. The system introduces the QoQ quantization algorithm with 4-bit weight, 8-bit activation, and 4-bit KV cache, addressing runtime overhead challenges. QServe improves serving throughput for various LLM models by implementing compute-aware weight reordering, register-level parallelism, and fused attention memory-bound techniques.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.