auto-news

A personal news aggregator to pull information from multi-sources + LLM (ChatGPT/Gemini/Ollama via LangChain) to help us reading efficiently with less noises, the sources including: Tweets, RSS, YouTube, Web Articles, Reddit, and personal Journal notes.

Stars: 465

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

README:

The ultimate personal productivity content aggregator: Designed to effortlessly navigate and maximize your efficiency in the AI era.

- [x] Super busy but still wants to catch the trends in a few minutes?

Yes - [x] Want to be a super individual, to handle vast amounts of information in the GenAI world?

Yes - [x] Become a super executor, tell less, and achieve more?

Yes

With auto-news you'll get:

-

Faster learning:Navigate trends and catch up in minutes. -

Recap reinforcement:Smooth and periodic memory recall. -

Intelligent actions:Route actions with a single message.

In the AI era, speed and productivity are extremely important. We need AI tools to help us talk less and achieve more!

For more background, see this Blog post and these videos Introduction, Data flows.

- Aggregate feed sources (including RSS, Reddit, Tweets, etc), and proactive generate with insights

- Generate insights of YouTube videos (Do transcoding if no transcript provided)

- Generate insights of Web Articles

- Filter content based on personal interests and remove 80%+ noises

- Weekly Top-k Recap

- Unified and central reading experience (RSS reader-like style, Notion-based)

- Generate

TODOlist from takeaways and journal notes - Organize Journal notes with insights daily

- [Multi-Agents] Experimental Deepdive topic via web search agent and autogen

- Multi-LLM backend: OpenAI ChatGPT, Google Gemini, Ollama

https://github.com/finaldie/auto-news/wiki

Great News! Now we have the in-house managed solution, it is powered by the auto-news as the backend. For the client App, download it from App Store or Google Play, install and enjoy. It is the quickest and easiest solution for anyone who doesn't want to/or does not have time to set up by themselves. (Notes: App is available in US and Canada at this point)

For more details, please check out the App official website. Click below to install the App directly:

The client is using Notion, and the backend is fully self-hosted by ourselves.

| Component | Minimum | Recommended |

|---|---|---|

| OS | Linux, MacOS | Linux, MacOS |

| CPU | 2 cores | 8 cores |

| Memory | 6GB | 16GB |

| Disk | 20GB | 100GB |

Feel free to open an issue and start the conversation.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for auto-news

Similar Open Source Tools

auto-news

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

MarkFlowy

MarkFlowy is a lightweight and feature-rich Markdown editor with built-in AI capabilities. It supports one-click export of conversations, translation of articles, and obtaining article abstracts. Users can leverage large AI models like DeepSeek and Chatgpt as intelligent assistants. The editor provides high availability with multiple editing modes and custom themes. Available for Linux, macOS, and Windows, MarkFlowy aims to offer an efficient, beautiful, and data-safe Markdown editing experience for users.

biochatter

Generative AI models have shown tremendous usefulness in increasing accessibility and automation of a wide range of tasks. This repository contains the `biochatter` Python package, a generic backend library for the connection of biomedical applications to conversational AI. It aims to provide a common framework for deploying, testing, and evaluating diverse models and auxiliary technologies in the biomedical domain. BioChatter is part of the BioCypher ecosystem, connecting natively to BioCypher knowledge graphs.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

coreply

Coreply is an open-source Android app that provides texting suggestions while typing, enhancing the typing experience with intelligent, context-aware suggestions. It supports various texting apps and offers real-time AI suggestions, customizable LLM settings, and ensures no data collection. Users can install the app, configure it with an API key, and start receiving suggestions while typing in messaging apps. The tool supports different AI models from providers like OpenAI, Google AI Studio, Openrouter, Groq, and Codestral for chat completion and fill-in-the-middle tasks.

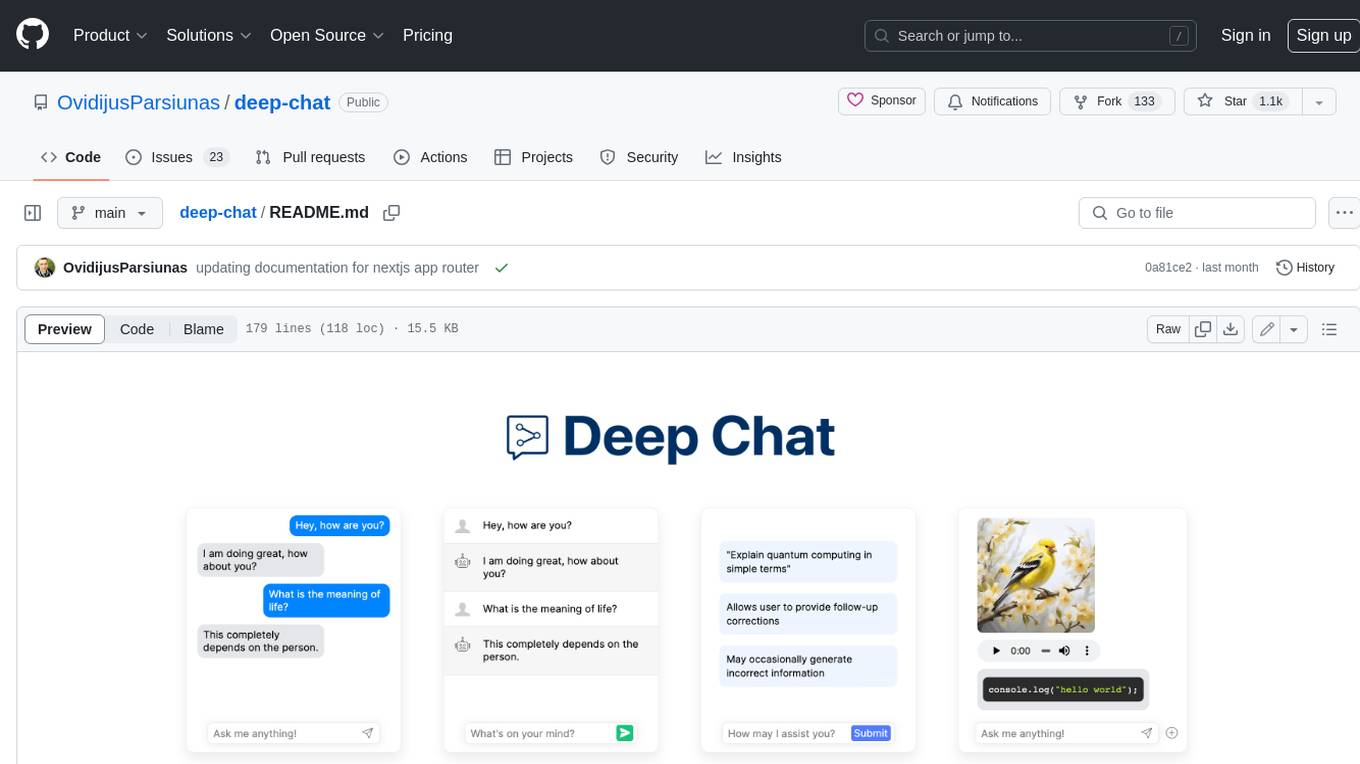

deep-chat

Deep Chat is a fully customizable AI chat component that can be injected into your website with minimal to no effort. Whether you want to create a chatbot that leverages popular APIs such as ChatGPT or connect to your own custom service, this component can do it all! Explore deepchat.dev to view all of the available features, how to use them, examples and more!

FAV0

FAV0 Weekly is a repository that records weekly updates on front-end, AI, and computer-related content. It provides light and dark mode switching, bilingual interface, RSS subscription function, Giscus comment system, high-definition image preview, font settings customization, and SEO optimization. Users can stay updated with the latest weekly releases by starring/watching the repository. The repository is dual-licensed under the MIT License and CC-BY-4.0 License.

EvoAgentX

EvoAgentX is an open-source framework for building, evaluating, and evolving LLM-based agents or agentic workflows in an automated, modular, and goal-driven manner. It enables developers and researchers to move beyond static prompt chaining or manual workflow orchestration by introducing a self-evolving agent ecosystem. The framework includes features such as agent workflow autoconstruction, built-in evaluation, self-evolution engine, plug-and-play compatibility, comprehensive built-in tools, memory module support, and human-in-the-loop interactions.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

PromptClip

PromptClip is a tool that allows developers to create video clips using LLM prompts. Users can upload videos from various sources, prompt the video in natural language, use different LLM models, instantly watch the generated clips, finetune the clips, and add music or image overlays. The tool provides a seamless way to extract specific moments from videos based on user queries, making video editing and content creation more efficient and intuitive.

superduperdb

SuperDuperDB is a Python framework for integrating AI models, APIs, and vector search engines directly with your existing databases, including hosting of your own models, streaming inference and scalable model training/fine-tuning. Build, deploy and manage any AI application without the need for complex pipelines, infrastructure as well as specialized vector databases, and moving our data there, by integrating AI at your data's source: - Generative AI, LLMs, RAG, vector search - Standard machine learning use-cases (classification, segmentation, regression, forecasting recommendation etc.) - Custom AI use-cases involving specialized models - Even the most complex applications/workflows in which different models work together SuperDuperDB is **not** a database. Think `db = superduper(db)`: SuperDuperDB transforms your databases into an intelligent platform that allows you to leverage the full AI and Python ecosystem. A single development and deployment environment for all your AI applications in one place, fully scalable and easy to manage.

openrl

OpenRL is an open-source general reinforcement learning research framework that supports training for various tasks such as single-agent, multi-agent, offline RL, self-play, and natural language. Developed based on PyTorch, the goal of OpenRL is to provide a simple-to-use, flexible, efficient and sustainable platform for the reinforcement learning research community. It supports a universal interface for all tasks/environments, single-agent and multi-agent tasks, offline RL training with expert dataset, self-play training, reinforcement learning training for natural language tasks, DeepSpeed, Arena for evaluation, importing models and datasets from Hugging Face, user-defined environments, models, and datasets, gymnasium environments, callbacks, visualization tools, unit testing, and code coverage testing. It also supports various algorithms like PPO, DQN, SAC, and environments like Gymnasium, MuJoCo, Atari, and more.

AI-Writer

AI-Writer is an AI content generation toolkit called Alwrity that automates and enhances the process of blog creation, optimization, and management. It integrates advanced AI models for text generation, image creation, and data analysis, offering features such as online research integration, long-form content generation, AI content planning, multilingual support, prevention of AI hallucinations, multimodal content generation, SEO optimization, and integration with platforms like Wordpress and Jekyll. The toolkit is designed for automated blog management and requires appropriate API keys and access credentials for full functionality.

project-blog

Welcome to the Blog Script Project, a collaborative platform for developers and writers to create, manage, and share content. With features like Markdown support, submodule integration, customizable templates, project contribution workflow, global visibility, community discussions, SEO optimization, and role-based dashboard, Blog Script enhances collaboration and visibility for your work. You can contribute by adding new projects, improving existing projects, updating documentation, fixing bugs, optimizing, and ensuring code readability. Follow the contribution guidelines to star the repository, find tasks, fork the repository, make changes, add screenshots, submit a pull request, and contribute to the open-source community. Additionally, you can add your project as a submodule by following the provided guidelines. Join us, contribute, and grow together!

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

pipecat

Pipecat is an open-source framework designed for building generative AI voice bots and multimodal assistants. It provides code building blocks for interacting with AI services, creating low-latency data pipelines, and transporting audio, video, and events over the Internet. Pipecat supports various AI services like speech-to-text, text-to-speech, image generation, and vision models. Users can implement new services and contribute to the framework. Pipecat aims to simplify the development of applications like personal coaches, meeting assistants, customer support bots, and more by providing a complete framework for integrating AI services.

For similar tasks

auto-news

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

fast-llm-security-guardrails

ZenGuard AI enables AI developers to integrate production-level, low-code LLM (Large Language Model) guardrails into their generative AI applications effortlessly. With ZenGuard AI, ensure your application operates within trusted boundaries, is protected from prompt injections, and maintains user privacy without compromising on performance.

RSSbrew

RSSBrew is a self-hosted RSS tool designed for aggregating multiple RSS feeds, applying custom filters, and generating AI summaries. It allows users to control content through custom filters based on Link, Title, and Description, with various match types and relationship operators. Users can easily combine multiple feeds into a single processed feed and use AI for article summarization and digest creation. The tool supports Docker deployment and regular installation, with ongoing documentation and development. Licensed under AGPL-3.0, RSSBrew is a versatile tool for managing and summarizing RSS content.

openshield

OpenShield is a firewall designed for AI models to protect against various attacks such as prompt injection, insecure output handling, training data poisoning, model denial of service, supply chain vulnerabilities, sensitive information disclosure, insecure plugin design, excessive agency granting, overreliance, and model theft. It provides rate limiting, content filtering, and keyword filtering for AI models. The tool acts as a transparent proxy between AI models and clients, allowing users to set custom rate limits for OpenAI endpoints and perform tokenizer calculations for OpenAI models. OpenShield also supports Python and LLM based rules, with upcoming features including rate limiting per user and model, prompts manager, content filtering, keyword filtering based on LLM/Vector models, OpenMeter integration, and VectorDB integration. The tool requires an OpenAI API key, Postgres, and Redis for operation.

AIO-Firebog-Blocklists

AIO-Firebog-Blocklists is a comprehensive tool that combines various sources into a single, cohesive blocklist. It offers customizable options to suit individual preferences and needs, ensuring regular updates to stay up-to-date with the latest threats. The tool focuses on performance optimization to minimize impact while maintaining effective filtering. It is designed to help users with ad blocking, malware protection, tracker prevention, and content filtering.

zhihu-plus-plus

Zhihu++ is a third-party client for Zhihu that focuses on privacy, individual internet rights, and ad-free experience. It features a unique local recommendation algorithm that provides and filters high-quality content locally. The algorithm is independent of Zhihu's algorithm, runs on web crawlers, and allows customization of recommendation weights. The project aims to help users reclaim their right to choose their own content and not be enslaved by algorithms. It supports various recommendation solutions for mobile/web platforms and offers features like setting block words, AI answer blocking, user blocking, and topic blocking. Zhihu++ is not a traditional free software, see the licensing agreement for details.

llms-txt

The llms-txt repository proposes a standardization on using an `/llms.txt` file to provide information to help large language models (LLMs) use a website at inference time. The `llms.txt` file is a markdown file that offers brief background information, guidance, and links to more detailed information in markdown files. It aims to provide concise and structured information for LLMs to access easily, helping users interact with websites via AI helpers. The repository also includes tools like a CLI and Python module for parsing `llms.txt` files and generating LLM context from them, along with a sample JavaScript implementation. The proposal suggests adding clean markdown versions of web pages alongside the original HTML pages to facilitate LLM readability and access to essential information.

note-gen

Note-gen is a simple tool for generating notes automatically based on user input. It uses natural language processing techniques to analyze text and extract key information to create structured notes. The tool is designed to save time and effort for users who need to summarize large amounts of text or generate notes quickly. With note-gen, users can easily create organized and concise notes for study, research, or any other purpose.

For similar jobs

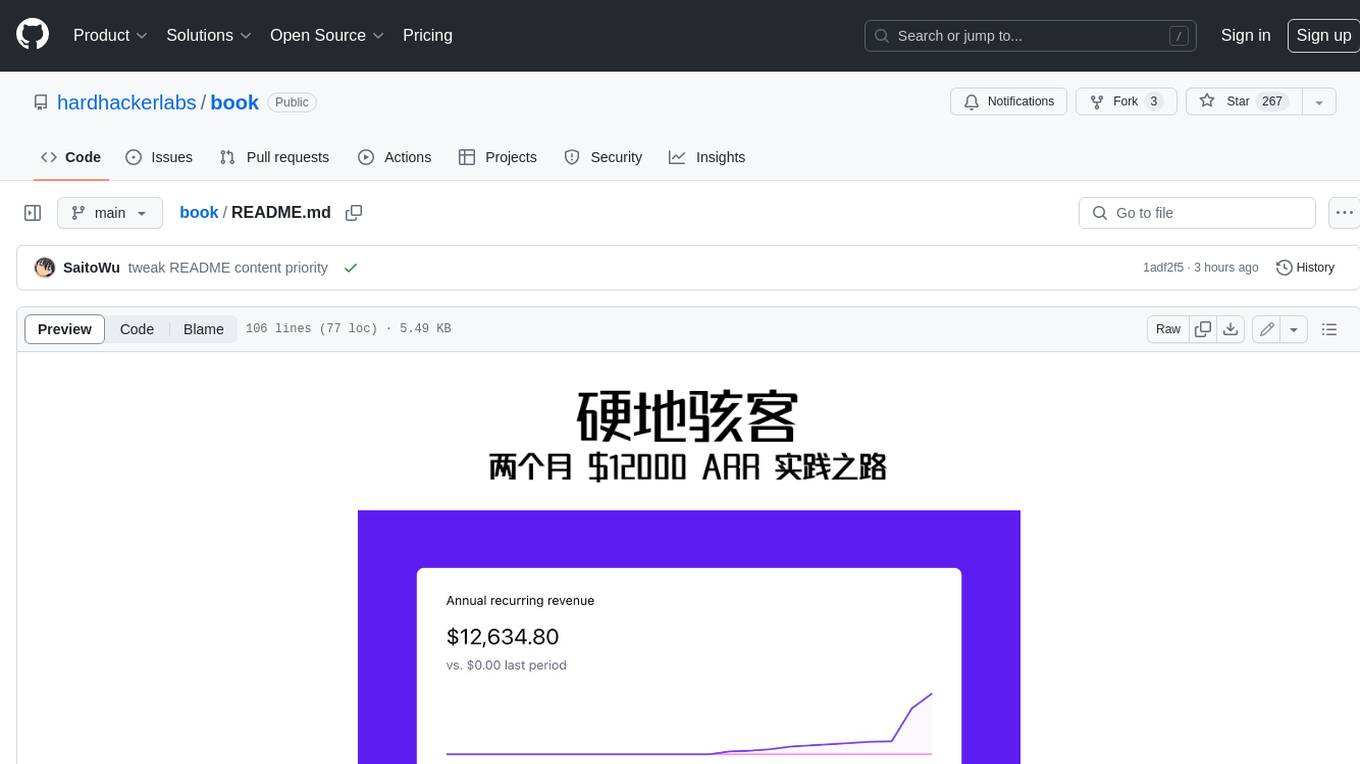

book

Podwise is an AI knowledge management app designed specifically for podcast listeners. With the Podwise platform, you only need to follow your favorite podcasts, such as "Hardcore Hackers". When a program is released, Podwise will use AI to transcribe, extract, summarize, and analyze the podcast content, helping you to break down the hard-core podcast knowledge. At the same time, it is connected to platforms such as Notion, Obsidian, Logseq, and Readwise, embedded in your knowledge management workflow, and integrated with content from other channels including news, newsletters, and blogs, helping you to improve your second brain 🧠.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), and upcoming support for web sites, Notion, and Airtable. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), Openai's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

auto-news

Auto-News is an automatic news aggregator tool that utilizes Large Language Models (LLM) to pull information from various sources such as Tweets, RSS feeds, YouTube videos, web articles, Reddit, and journal notes. The tool aims to help users efficiently read and filter content based on personal interests, providing a unified reading experience and organizing information effectively. It features feed aggregation with summarization, transcript generation for videos and articles, noise reduction, task organization, and deep dive topic exploration. The tool supports multiple LLM backends, offers weekly top-k aggregations, and can be deployed on Linux/MacOS using docker-compose or Kubernetes.

SemanticFinder

SemanticFinder is a frontend-only live semantic search tool that calculates embeddings and cosine similarity client-side using transformers.js and SOTA embedding models from Huggingface. It allows users to search through large texts like books with pre-indexed examples, customize search parameters, and offers data privacy by keeping input text in the browser. The tool can be used for basic search tasks, analyzing texts for recurring themes, and has potential integrations with various applications like wikis, chat apps, and personal history search. It also provides options for building browser extensions and future ideas for further enhancements and integrations.

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

Agently-Daily-News-Collector

Agently Daily News Collector is an open-source project showcasing a workflow powered by the Agent ly AI application development framework. It allows users to generate news collections on various topics by inputting the field topic. The AI agents automatically perform the necessary tasks to generate a high-quality news collection saved in a markdown file. Users can edit settings in the YAML file, install Python and required packages, input their topic idea, and wait for the news collection to be generated. The process involves tasks like outlining, searching, summarizing, and preparing column data. The project dependencies include Agently AI Development Framework, duckduckgo-search, BeautifulSoup4, and PyYAM.