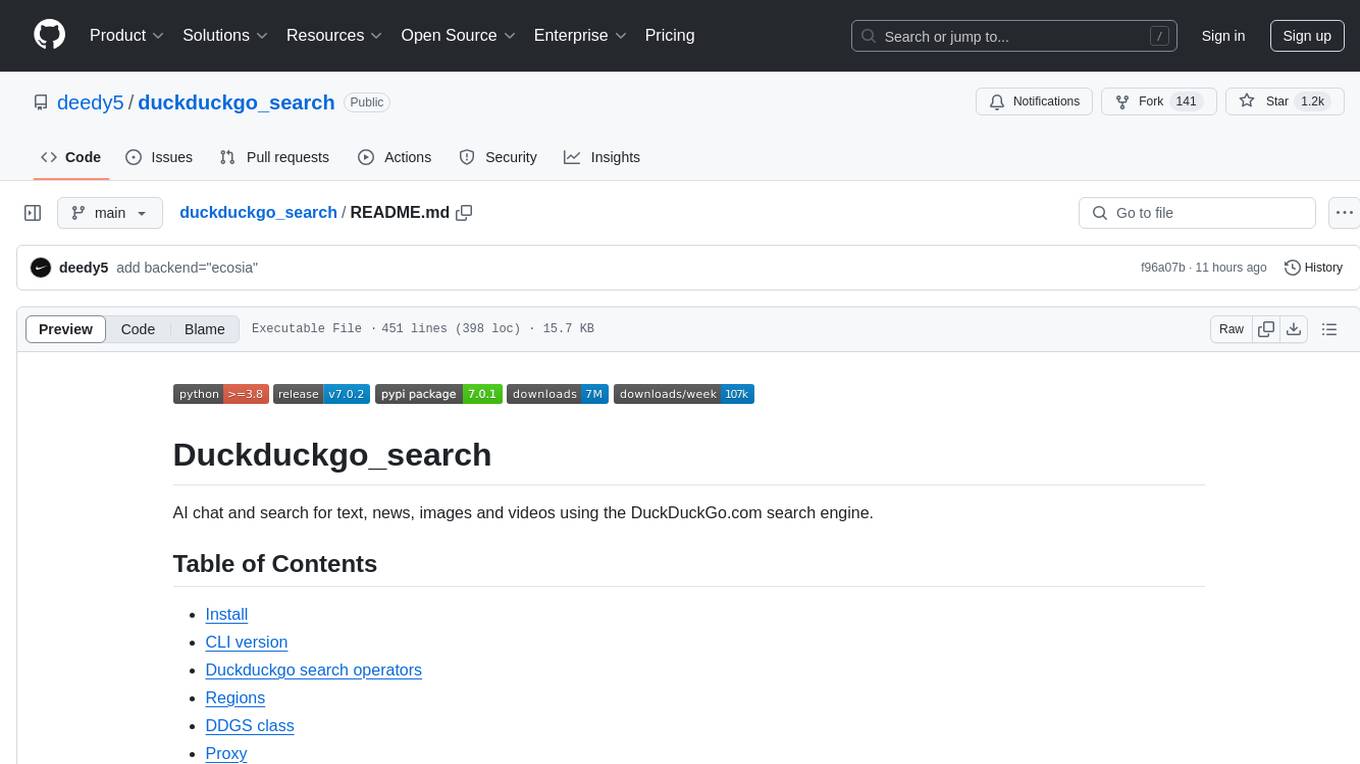

duckduckgo_search

AI chat and search for text, news, images and videos using the DuckDuckGo.com search engine.

Stars: 1260

Duckduckgo_search is a Python library that enables AI chat and search functionalities for text, news, images, and videos using the DuckDuckGo.com search engine. It provides various methods for different search types such as text, images, videos, and news. The library also supports search operators, regions, proxy settings, and exception handling. Users can interact with the DuckDuckGo API to retrieve search results based on specific queries and parameters.

README:


- Install

- CLI version

- Duckduckgo search operators

- Regions

- DDGS class

- Proxy

- Exceptions

- 1. chat() - AI chat

- 2. text() - text search

- 3. images() - image search

- 4. videos() - video search

- 5. news() - news search

- Disclaimer

pip install -U duckduckgo_search

ddgs --help

CLI examples:

# AI chat

ddgs chat

# text search

ddgs text -k "Assyrian siege of Jerusalem"

# find and download pdf files via proxy

ddgs text -k "Economics in one lesson filetype:pdf" -r wt-wt -m 50 -p https://1.2.3.4:1234 -d -dd economics_reading

# using Tor Browser as a proxy (`tb` is an alias for `socks5://127.0.0.1:9150`)

ddgs text -k "'The history of the Standard Oil Company' filetype:doc" -m 50 -d -p tb

# find and save to csv

ddgs text -k "'neuroscience exploring the brain' filetype:pdf" -m 70 -o neuroscience_list.csv

# don't verify SSL when making the request

ddgs text -k "Mississippi Burning" -v false

# find and download images

ddgs images -k "beware of false prophets" -r wt-wt -type photo -m 500 -d

# get news for the last day and save to json

ddgs news -k "sanctions" -m 100 -t d -o json

| Keywords example | Result |
|---|---|
| cats dogs | Results about cats or dogs |
| "cats and dogs" | Results for exact term "cats and dogs". If no results are found, related results are shown. |
| cats -dogs | Fewer dogs in results |
| cats +dogs | More dogs in results |
| cats filetype:pdf | PDFs about cats. Supported file types: pdf, doc(x), xls(x), ppt(x), html |
| dogs site:example.com | Pages about dogs from example.com |
| cats -site:example.com | Pages about cats, excluding example.com |
| intitle:dogs | Page title includes the word "dogs" |
| inurl:cats | Page url includes the word "cats" |
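The operators in the table are plain text inside the keywords string, so a query can be assembled programmatically. The sketch below is a hypothetical helper (`build_query` and its parameter names are not part of the library) that joins the operators into one string suitable for passing as `keywords`.

```python
from typing import Iterable, Optional


def build_query(
    terms: str = "",
    exact: Optional[str] = None,
    exclude: Iterable[str] = (),
    boost: Iterable[str] = (),
    filetype: Optional[str] = None,
    site: Optional[str] = None,
    exclude_site: Optional[str] = None,
    intitle: Optional[str] = None,
    inurl: Optional[str] = None,
) -> str:
    """Compose a keywords string from the search operators in the table (hypothetical helper)."""
    parts = [terms]
    if exact:
        parts.append(f'"{exact}"')                 # exact phrase
    parts.extend(f"-{word}" for word in exclude)   # fewer results with these words
    parts.extend(f"+{word}" for word in boost)     # more results with these words
    if filetype:
        parts.append(f"filetype:{filetype}")
    if site:
        parts.append(f"site:{site}")
    if exclude_site:
        parts.append(f"-site:{exclude_site}")
    if intitle:
        parts.append(f"intitle:{intitle}")
    if inurl:
        parts.append(f"inurl:{inurl}")
    # Skip empty pieces so a query made only of operators has no stray spaces.
    return " ".join(part for part in parts if part)


print(build_query("cats", exclude=["dogs"], filetype="pdf"))  # cats -dogs filetype:pdf
```

The returned string can then be used wherever the examples pass a keywords argument, for instance with `ddgs text -k` on the command line.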


xa-ar for Arabia

xa-en for Arabia (en)

ar-es for Argentina

au-en for Australia

at-de for Austria

be-fr for Belgium (fr)

be-nl for Belgium (nl)

br-pt for Brazil

bg-bg for Bulgaria

ca-en for Canada

ca-fr for Canada (fr)

ct-ca for Catalan

cl-es for Chile

cn-zh for China

co-es for Colombia

hr-hr for Croatia

cz-cs for Czech Republic

dk-da for Denmark

ee-et for Estonia

fi-fi for Finland

fr-fr for France

de-de for Germany

gr-el for Greece

hk-tzh for Hong Kong

hu-hu for Hungary

in-en for India

id-id for Indonesia

id-en for Indonesia (en)

ie-en for Ireland

il-he for Israel

it-it for Italy

jp-jp for Japan

kr-kr for Korea

lv-lv for Latvia

lt-lt for Lithuania

xl-es for Latin America

my-ms for Malaysia

my-en for Malaysia (en)

mx-es for Mexico

nl-nl for Netherlands

nz-en for New Zealand

no-no for Norway

pe-es for Peru

ph-en for Philippines

ph-tl for Philippines (tl)

pl-pl for Poland

pt-pt for Portugal

ro-ro for Romania

ru-ru for Russia

sg-en for Singapore

sk-sk for Slovak Republic

sl-sl for Slovenia

za-en for South Africa

es-es for Spain

se-sv for Sweden

ch-de for Switzerland (de)

ch-fr for Switzerland (fr)

ch-it for Switzerland (it)

tw-tzh for Taiwan

th-th for Thailand

tr-tr for Turkey

ua-uk for Ukraine

uk-en for United Kingdom

us-en for United States

ue-es for United States (es)

ve-es for Venezuela

vn-vi for Vietnam

wt-wt for No region
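When region codes come from user input or configuration, it can help to fall back to `wt-wt` for anything unrecognized. This is a minimal sketch: `pick_region` is a hypothetical helper, and `KNOWN_REGIONS` holds only a small subset of the codes listed above.

```python
from typing import Optional

# A small subset of the region codes listed above; extend as needed.
KNOWN_REGIONS = {"us-en", "uk-en", "de-de", "fr-fr", "jp-jp", "wt-wt"}


def pick_region(code: Optional[str], default: str = "wt-wt") -> str:
    """Return a normalized region code, or the default when the code is missing or unknown."""
    if not code:
        return default
    normalized = code.strip().lower()
    return normalized if normalized in KNOWN_REGIONS else default


print(pick_region("US-EN"))  # us-en
print(pick_region("xx-yy"))  # wt-wt
```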

The DDGS class is used to retrieve search results from DuckDuckGo.com.

class DDGS:

"""DuckDuckgo_search class to get search results from duckduckgo.com

Args:

headers (dict, optional): Dictionary of headers for the HTTP client. Defaults to None.

proxy (str, optional): proxy for the HTTP client, supports http/https/socks5 protocols.

example: "http://user:[email protected]:3128". Defaults to None.

timeout (int, optional): Timeout value for the HTTP client. Defaults to 10.

verify (bool): SSL verification when making the request. Defaults to True.

"""

Here is an example of initializing the DDGS class.

from duckduckgo_search import DDGS

results = DDGS().text("python programming", max_results=5)

print(results)

Package supports http/https/socks proxies. Example: http://user:[email protected]:3128.

Use a rotating proxy. Otherwise, use a new proxy with each DDGS class initialization.
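If no rotating proxy is available, one way to follow the advice above is to cycle through a list of proxies and build a fresh client for each request. The sketch below is an assumption-laden illustration: `rotating_client_factory` is a hypothetical helper, and the proxy URLs in the usage comment are placeholders.

```python
from itertools import cycle
from typing import Any, Callable, Iterable


def rotating_client_factory(
    client_cls: Callable[..., Any],
    proxies: Iterable[str],
    **client_kwargs: Any,
) -> Callable[[], Any]:
    """Return a function that creates a new client with the next proxy on each call."""
    proxy_list = list(proxies)
    if not proxy_list:
        raise ValueError("at least one proxy is required")
    pool = cycle(proxy_list)  # endlessly repeats the proxies in order

    def new_client() -> Any:
        return client_cls(proxy=next(pool), **client_kwargs)

    return new_client


# Usage with the real client (needs duckduckgo_search installed and network access):
# from duckduckgo_search import DDGS
# new_ddgs = rotating_client_factory(
#     DDGS, ["socks5://127.0.0.1:9150", "http://127.0.0.1:8080"], timeout=20
# )
# results = new_ddgs().text("python programming", max_results=5)
```

Passing the client class as an argument keeps the helper independent of the library, so it can be tried with a stand-in class before making real requests.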

1. The easiest way. Launch the Tor Browser

ddgs = DDGS(proxy="tb", timeout=20) # "tb" is an alias for "socks5://127.0.0.1:9150"

results = ddgs.text("something you need", max_results=50)

2. Use any proxy server (example with iproyal rotating residential proxies)

ddgs = DDGS(proxy="socks5h://user:[email protected]:32325", timeout=20)

results = ddgs.text("something you need", max_results=50)

3. The proxy can also be set using the DDGS_PROXY environment variable.

export DDGS_PROXY="socks5h://user:[email protected]:32325"

Exceptions:

- DuckDuckGoSearchException: Base exception for duckduckgo_search errors.
- RatelimitException: Inherits from DuckDuckGoSearchException, raised for exceeding API request rate limits.
- TimeoutException: Inherits from DuckDuckGoSearchException, raised for API request timeouts.
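Rate-limit and timeout errors are often transient, so a caller may want to retry with a growing delay. The following is a minimal sketch of exponential backoff: `with_retries` is a hypothetical helper, and the import path in the usage comment is an assumption to check against the installed version.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying on the given exceptions with exponential backoff."""
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the last error propagate
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ... by default
    raise ValueError("attempts must be at least 1")


# Usage with the library (needs duckduckgo_search installed and network access;
# the exceptions module path is an assumption):
# from duckduckgo_search import DDGS
# from duckduckgo_search.exceptions import RatelimitException, TimeoutException
# results = with_retries(
#     lambda: DDGS().text("python programming", max_results=5),
#     retry_on=(RatelimitException, TimeoutException),
# )
```

Because both specific exceptions inherit from DuckDuckGoSearchException, passing only the base class to `retry_on` would retry on every library error, which is usually broader than intended.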

def chat(self, keywords: str, model: str = "gpt-4o-mini", timeout: int = 30) -> str:

"""Initiates a chat session with DuckDuckGo AI.

Args:

keywords (str): The initial message or question to send to the AI.

model (str): The model to use: "gpt-4o-mini", "claude-3-haiku", "llama-3.1-70b", "mixtral-8x7b".

Defaults to "gpt-4o-mini".

timeout (int): Timeout value for the HTTP client. Defaults to 30.

Returns:

str: The response from the AI.

"""

Example

results = DDGS().chat("summarize Daniel Defoe's The Consolidator", model='claude-3-haiku')

def text(

keywords: str,

region: str = "wt-wt",

safesearch: str = "moderate",

timelimit: str | None = None,

backend: str = "auto",

max_results: int | None = None,

) -> list[dict[str, str]]:

"""DuckDuckGo text search generator. Query params: https://duckduckgo.com/params.

Args:

keywords: keywords for query.

region: wt-wt, us-en, uk-en, ru-ru, etc. Defaults to "wt-wt".

safesearch: on, moderate, off. Defaults to "moderate".

timelimit: d, w, m, y. Defaults to None.

backend: auto, html, lite. Defaults to auto.

auto - try all backends in random order,

html - collect data from https://html.duckduckgo.com,

lite - collect data from https://lite.duckduckgo.com.

max_results: max number of results. If None, returns results only from the first response. Defaults to None.

Returns:

List of dictionaries with search results.

"""

Example

results = DDGS().text('live free or die', region='wt-wt', safesearch='off', timelimit='y', max_results=10)

# Searching for pdf files

results = DDGS().text('russia filetype:pdf', region='wt-wt', safesearch='off', timelimit='y', max_results=10)

print(results)

[

{

"title": "News, sport, celebrities and gossip | The Sun",

"href": "https://www.thesun.co.uk/",

"body": "Get the latest news, exclusives, sport, celebrities, showbiz, politics, business and lifestyle from The Sun",

}, ...

]

def images(

keywords: str,

region: str = "wt-wt",

safesearch: str = "moderate",

timelimit: str | None = None,

size: str | None = None,

color: str | None = None,

type_image: str | None = None,

layout: str | None = None,

license_image: str | None = None,

max_results: int | None = None,

) -> list[dict[str, str]]:

"""DuckDuckGo images search. Query params: https://duckduckgo.com/params.

Args:

keywords: keywords for query.

region: wt-wt, us-en, uk-en, ru-ru, etc. Defaults to "wt-wt".

safesearch: on, moderate, off. Defaults to "moderate".

timelimit: Day, Week, Month, Year. Defaults to None.

size: Small, Medium, Large, Wallpaper. Defaults to None.

color: color, Monochrome, Red, Orange, Yellow, Green, Blue,

Purple, Pink, Brown, Black, Gray, Teal, White. Defaults to None.

type_image: photo, clipart, gif, transparent, line.

Defaults to None.

layout: Square, Tall, Wide. Defaults to None.

license_image: any (All Creative Commons), Public (PublicDomain),

Share (Free to Share and Use), ShareCommercially (Free to Share and Use Commercially),

Modify (Free to Modify, Share, and Use), ModifyCommercially (Free to Modify, Share, and

Use Commercially). Defaults to None.

max_results: max number of results. If None, returns results only from the first response. Defaults to None.

Returns:

List of dictionaries with images search results.

"""

Example

results = DDGS().images(

keywords="butterfly",

region="wt-wt",

safesearch="off",

size=None,

color="Monochrome",

type_image=None,

layout=None,

license_image=None,

max_results=100,

)

print(results)

[

{

"title": "File:The Sun by the Atmospheric Imaging Assembly of NASA's Solar ...",

"image": "https://upload.wikimedia.org/wikipedia/commons/b/b4/The_Sun_by_the_Atmospheric_Imaging_Assembly_of_NASA's_Solar_Dynamics_Observatory_-_20100819.jpg",

"thumbnail": "https://tse4.mm.bing.net/th?id=OIP.lNgpqGl16U0ft3rS8TdFcgEsEe&pid=Api",

"url": "https://en.wikipedia.org/wiki/File:The_Sun_by_the_Atmospheric_Imaging_Assembly_of_NASA's_Solar_Dynamics_Observatory_-_20100819.jpg",

"height": 3860,

"width": 4044,

"source": "Bing",

}, ...

]

def videos(

keywords: str,

region: str = "wt-wt",

safesearch: str = "moderate",

timelimit: str | None = None,

resolution: str | None = None,

duration: str | None = None,

license_videos: str | None = None,

max_results: int | None = None,

) -> list[dict[str, str]]:

"""DuckDuckGo videos search. Query params: https://duckduckgo.com/params.

Args:

keywords: keywords for query.

region: wt-wt, us-en, uk-en, ru-ru, etc. Defaults to "wt-wt".

safesearch: on, moderate, off. Defaults to "moderate".

timelimit: d, w, m. Defaults to None.

resolution: high, standart. Defaults to None.

duration: short, medium, long. Defaults to None.

license_videos: creativeCommon, youtube. Defaults to None.

max_results: max number of results. If None, returns results only from the first response. Defaults to None.

Returns:

List of dictionaries with videos search results.

"""

Example

results = DDGS().videos(

keywords="cars",

region="wt-wt",

safesearch="off",

timelimit="w",

resolution="high",

duration="medium",

max_results=100,

)

print(results)

[

{

"content": "https://www.youtube.com/watch?v=6901-C73P3g",

"description": "Watch the Best Scenes of popular Tamil Serial #Meena that airs on Sun TV. Watch all Sun TV serials immediately after the TV telecast on Sun NXT app. *Free for Indian Users only Download here: Android - http://bit.ly/SunNxtAdroid iOS: India - http://bit.ly/sunNXT Watch on the web - https://www.sunnxt.com/ Two close friends, Chidambaram ...",

"duration": "8:22",

"embed_html": '<iframe width="1280" height="720" src="https://www.youtube.com/embed/6901-C73P3g?autoplay=1" frameborder="0" allowfullscreen></iframe>',

"embed_url": "https://www.youtube.com/embed/6901-C73P3g?autoplay=1",

"image_token": "6c070b5f0e24e5972e360d02ddeb69856202f97718ea6c5d5710e4e472310fa3",

"images": {

"large": "https://tse4.mm.bing.net/th?id=OVF.JWBFKm1u%2fHd%2bz2e1GitsQw&pid=Api",

"medium": "https://tse4.mm.bing.net/th?id=OVF.JWBFKm1u%2fHd%2bz2e1GitsQw&pid=Api",

"motion": "",

"small": "https://tse4.mm.bing.net/th?id=OVF.JWBFKm1u%2fHd%2bz2e1GitsQw&pid=Api",

},

"provider": "Bing",

"published": "2024-07-03T05:30:03.0000000",

"publisher": "YouTube",

"statistics": {"viewCount": 29059},

"title": "Meena - Best Scenes | 02 July 2024 | Tamil Serial | Sun TV",

"uploader": "Sun TV",

}, ...

]

def news(

keywords: str,

region: str = "wt-wt",

safesearch: str = "moderate",

timelimit: str | None = None,

max_results: int | None = None,

) -> list[dict[str, str]]:

"""DuckDuckGo news search. Query params: https://duckduckgo.com/params.

Args:

keywords: keywords for query.

region: wt-wt, us-en, uk-en, ru-ru, etc. Defaults to "wt-wt".

safesearch: on, moderate, off. Defaults to "moderate".

timelimit: d, w, m. Defaults to None.

max_results: max number of results. If None, returns results only from the first response. Defaults to None.

Returns:

List of dictionaries with news search results.

"""

Example

results = DDGS().news(keywords="sun", region="wt-wt", safesearch="off", timelimit="m", max_results=20)

print(results)

[

{

"date": "2024-07-03T16:25:22+00:00",

"title": "Murdoch's Sun Endorses Starmer's Labour Day Before UK Vote",

"body": "Rupert Murdoch's Sun newspaper endorsed Keir Starmer and his opposition Labour Party to win the UK general election, a dramatic move in the British media landscape that illustrates the country's shifting political sands.",

"url": "https://www.msn.com/en-us/money/other/murdoch-s-sun-endorses-starmer-s-labour-day-before-uk-vote/ar-BB1plQwl",

"image": "https://img-s-msn-com.akamaized.net/tenant/amp/entityid/BB1plZil.img?w=2000&h=1333&m=4&q=79",

"source": "Bloomberg on MSN.com",

}, ...

]

This library is not affiliated with DuckDuckGo and is for educational purposes only. It is not intended for commercial use or any purpose that violates DuckDuckGo's Terms of Service. By using this library, you acknowledge that you will not use it in a way that infringes on DuckDuckGo's terms. The official DuckDuckGo website can be found at https://duckduckgo.com.


Alternative AI tools for duckduckgo_search

Similar Open Source Tools


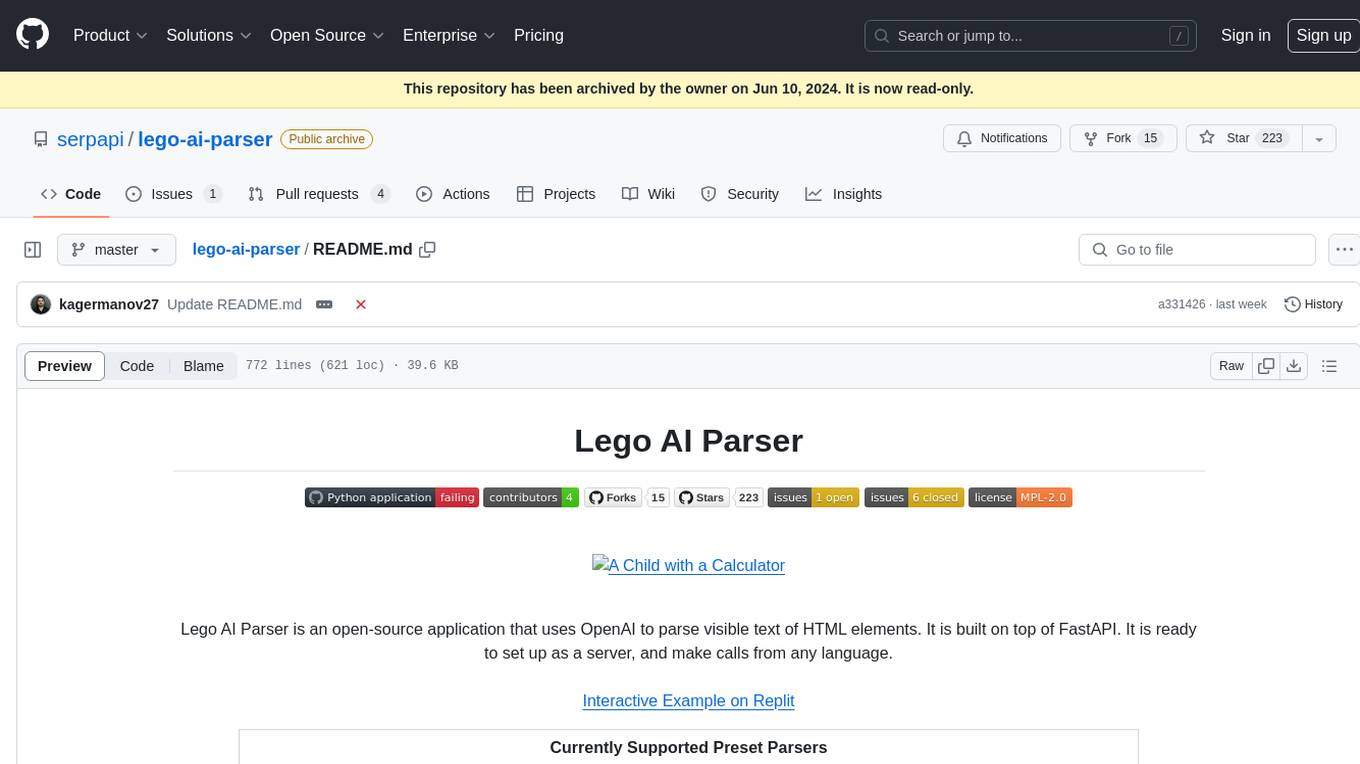

lego-ai-parser

Lego AI Parser is an open-source application that uses OpenAI to parse visible text of HTML elements. It is built on top of FastAPI, ready to set up as a server, and make calls from any language. It supports preset parsers for Google Local Results, Amazon Listings, Etsy Listings, Wayfair Listings, BestBuy Listings, Costco Listings, Macy's Listings, and Nordstrom Listings. Users can also design custom parsers by providing prompts, examples, and details about the OpenAI model under the classifier key.

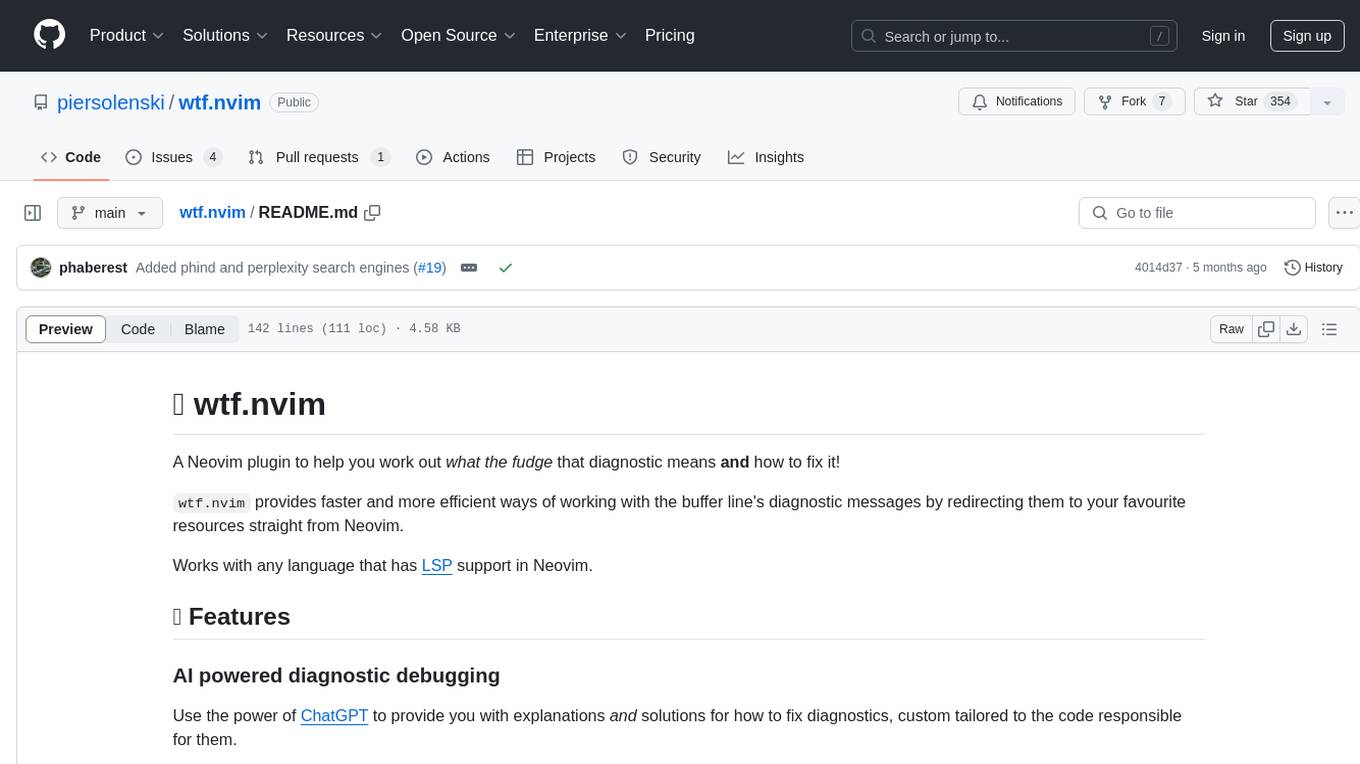

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

firecrawl

Firecrawl is an API service that empowers AI applications with clean data from any website. It features advanced scraping, crawling, and data extraction capabilities. The repository is still in development, integrating custom modules into the mono repo. Users can run it locally but it's not fully ready for self-hosted deployment yet. Firecrawl offers powerful capabilities like scraping, crawling, mapping, searching, and extracting structured data from single pages, multiple pages, or entire websites with AI. It supports various formats, actions, and batch scraping. The tool is designed to handle proxies, anti-bot mechanisms, dynamic content, media parsing, change tracking, and more. Firecrawl is available as an open-source project under the AGPL-3.0 license, with additional features offered in the cloud version.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.

functionary

Functionary is a language model that interprets and executes functions/plugins. It determines when to execute functions, whether in parallel or serially, and understands their outputs. Function definitions are given as JSON Schema Objects, similar to OpenAI GPT function calls. It offers documentation and examples on functionary.meetkai.com. The newest model, meetkai/functionary-medium-v3.1, is ranked 2nd in the Berkeley Function-Calling Leaderboard. Functionary supports models with different context lengths and capabilities for function calling and code interpretation. It also provides grammar sampling for accurate function and parameter names. Users can deploy Functionary models serverlessly using Modal.com.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E...

avante.nvim

avante.nvim is a Neovim plugin that emulates the behavior of the Cursor AI IDE, providing AI-driven code suggestions and enabling users to apply recommendations to their source files effortlessly. It offers AI-powered code assistance and one-click application of suggested changes, streamlining the editing process and saving time. The plugin is still in early development, with functionalities like setting API keys, querying AI about code, reviewing suggestions, and applying changes. Key bindings are available for various actions, and the roadmap includes enhancing AI interactions, stability improvements, and introducing new features for coding tasks.

parakeet

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

langchain-swift

LangChain for Swift, optimized for iOS, macOS, watchOS (partial), and visionOS (beta). This is a pure client library; no server is required.

Scrapegraph-ai

ScrapeGraphAI is a Python library that uses Large Language Models (LLMs) and direct graph logic to create web scraping pipelines for websites, documents, and XML files. It allows users to extract specific information from web pages by providing a prompt describing the desired data. ScrapeGraphAI supports various LLMs, including Ollama, OpenAI, Gemini, and Docker, enabling users to choose the most suitable model for their needs. The library provides a user-friendly interface through its `SmartScraper` class, which simplifies the process of building and executing scraping pipelines. ScrapeGraphAI is open-source and available on GitHub, with extensive documentation and examples to guide users. It is particularly useful for researchers and data scientists who need to extract structured data from web pages for analysis and exploration.

ollama-ex

Ollama is a powerful tool for running large language models locally or on your own infrastructure. It provides a full implementation of the Ollama API, support for streaming requests, and tool use capability. Users can interact with Ollama in Elixir to generate completions, chat messages, and perform streaming requests. The tool also supports function calling on compatible models, allowing users to define tools with clear descriptions and arguments. Ollama is designed to facilitate natural language processing tasks and enhance user interactions with language models.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

bellman

Bellman is a unified interface to interact with language and embedding models, supporting various vendors like VertexAI/Gemini, OpenAI, Anthropic, VoyageAI, and Ollama. It consists of a library for direct interaction with models and a service 'bellmand' for proxying requests with one API key. Bellman simplifies switching between models, vendors, and common tasks like chat, structured data, tools, and binary input. It addresses the lack of official SDKs for major players and differences in APIs, providing a single proxy for handling different models. The library offers clients for different vendors implementing common interfaces for generating and embedding text, enabling easy interchangeability between models.

langcorn

LangCorn is an API server that enables you to serve LangChain models and pipelines with ease, leveraging the power of FastAPI for a robust and efficient experience. It offers features such as easy deployment of LangChain models and pipelines, ready-to-use authentication functionality, high-performance FastAPI framework for serving requests, scalability and robustness for language processing applications, support for custom pipelines and processing, well-documented RESTful API endpoints, and asynchronous processing for faster response times.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy 
Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run the Vercel AI package on React Native, Expo, Web, and Universal apps. Currently, the React Native fetch API does not support streaming, which Vercel AI uses by default. This package lets you use the AI library on React Native, but it works best in Expo universal native apps. On mobile, you get responses without streaming through the same `useChat` and `useCompletion` API, and on the web it falls back to `ai/react`.

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), in a version for NVIDIA GPUs. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. It connects to bilibili live streams to reply to bullet comments (danmaku) and to greet viewers entering the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It supports expression control through VTube Studio, image generation with stable-diffusion-webui output to the OBS live stream, and NSFW detection for generated images through public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a VPN or proxy), and image search can also use Baidu image search (no VPN or proxy required). It provides an AI reply chat box [html plug-in], AI singing through Auto-Convert-Music, and a playlist [html plug-in]. Avatar features include dancing, expression video playback, head-patting and gift-throwing actions, automatic dancing when singing starts, and a looping sway animation during chat and singing. It also supports multi-scene switching, background music switching, and automatic day and night scene switching. Singing and drawing requests can be open-ended, with the AI automatically judging the content.