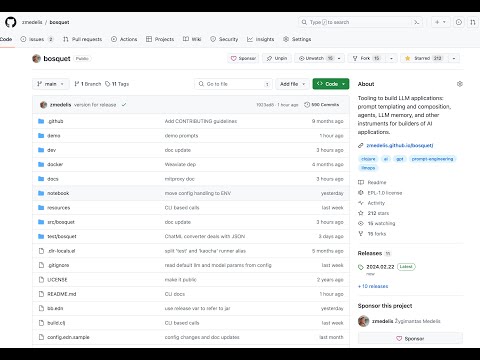

bosquet

Tooling to build LLM applications: prompt templating and composition, agents, LLM memory, and other instruments for builders of AI applications.

Stars: 329

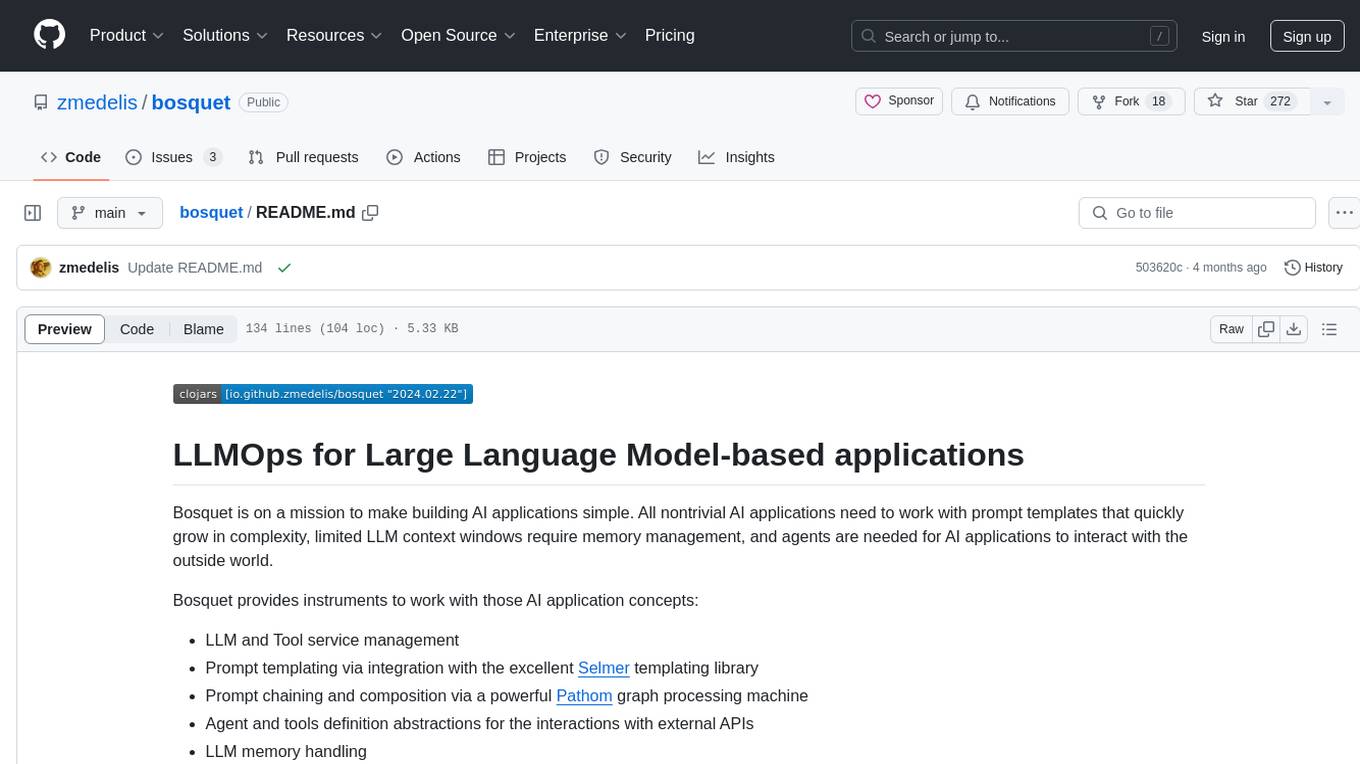

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with the Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline development of AI applications that require complex prompt templates, memory management, and interaction with external systems.

README:

Bosquet is on a mission to make building AI applications simple. All nontrivial AI applications need to work with prompt templates that quickly grow in complexity. Limited LLM context windows require memory management, and agents are needed for AI applications to interact with the outside world.

Bosquet provides instruments to work with those AI application concepts:

- LLM and Tool service management

- Prompt templating via integration with the excellent Selmer templating library

- Prompt chaining and composition via a powerful Pathom graph processing machine

- Agent and tools definition abstractions for the interactions with external APIs

- LLM memory handling

- Other instruments like call response caching (see documentation)

Full project documentation (WIP)

Secrets such as API keys are stored in the secrets.edn file, and local parameters are kept in config.edn. Make copies of the config.edn.sample and secrets.edn.sample files and change them as needed.

Command line interface demo

Run the following command to get the CLI options:

```bash
clojure -M -m bosquet.cli
```

Set the default model with:

```bash
clojure -M -m bosquet.cli llms set --service openai --temperature 0 --model gpt-4o
```

Do not forget to set the API key for your service (change 'openai' to a different name if needed):

```bash
clojure -M -m bosquet.cli keys set openai
```

With that set, you can run generations:

```bash
clojure -M -m bosquet.cli "2+{{x}}="
```

Or use files:

```bash
clojure -M -m bosquet.cli -p demo/play-writer-prompt.edn -d demo/play-writer-data.edn
```
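
The `{{x}}` in the prompt "2+{{x}}=" is a template slot that gets filled from data. As a rough illustration of slot filling, here is a minimal sketch in plain Clojure. It is not Selmer's or Bosquet's implementation; `fill-slots` is a hypothetical helper written only for this example, and it supports nothing beyond simple `{{name}}` slots.

```clojure
(require '[clojure.string :as str])

(defn fill-slots
  "Replace every {{name}} in `template` with the value under :name in `data`.
  Slots without a value are left untouched. Hypothetical helper, not Bosquet API."
  [template data]
  (str/replace template
               #"\{\{\s*([\w-]+)\s*\}\}"
               (fn [[whole slot-name]]
                 (if-some [v (get data (keyword slot-name))]
                   (str v)
                   whole))))

(fill-slots "2+{{x}}=" {:x 2})
;; => "2+2="
```

Leaving unknown slots in place keeps the example simple; a real templating library offers filters, tags, and configurable handling of missing values.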

Simple prompt completion can be done like this:

```clojure
(require '[bosquet.llm.generator :refer [generate llm]])

(generate "When I was 6 my sister was half my age. Now I’m 70 how old is my sister?")
=>
"When you were 6, your sister was half your age, which means she was 6 / 2 = 3 years old.\nSince then, there is a constant age difference of 3 years between you and your sister.\nNow that you are 70, your sister would be 70 - 6 = 64 years old."
```

A prompt can also be given as a map, where templates and LLM calls refer to each other by key:

```clojure
(require '[bosquet.llm :as llm])
(require '[bosquet.llm.generator :refer [generate llm]])

(generate
 llm/default-services
 {:question-answer "Question: {{question}} Answer: {{answer}}"
  :answer          (llm :openai)
  :self-eval       ["Question: {{question}}"
                    "Answer: {{answer}}"
                    ""
                    "Is this a correct answer?"
                    "{{test}}"]
  :test            (llm :openai)}
 {:question "What is the distance from Moon to Io?"})
=>
{:question-answer
 "Question: What is the distance from Moon to Io? Answer:",
 :answer
 "The distance from the Moon to Io varies, as both are orbiting different bodies. On average, the distance between the Moon and Io is approximately 760,000 kilometers (470,000 miles). However, this distance can change due to the elliptical nature of their orbits.",
 :self-eval
 "Question: What is the distance from Moon to Io?\nAnswer: The distance from the Moon to Io varies, as both are orbiting different bodies. On average, the distance between the Moon and Io is approximately 760,000 kilometers (470,000 miles). However, this distance can change due to the elliptical nature of their orbits.\n\nIs this a correct answer?",
 :test
 "No, the answer provided is incorrect. The Moon is Earth's natural satellite, while Io is one of Jupiter's moons. Therefore, the distance between the Moon and Io can vary significantly depending on their relative positions in their respective orbits around Earth and Jupiter."}
```

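One way to see how the entries of a prompt map relate to each other is to list the slots each template references. The sketch below does that in plain Clojure. `slot-refs` is a hypothetical helper, `:llm-call` is a stand-in for `(llm :openai)`, and the code says nothing about how Bosquet or Pathom actually resolve the map.

```clojure
(defn slot-refs
  "Return the sorted set of slot names (as keywords) referenced by a template.
  A template is a string or a vector of strings; anything else has no slots.
  Hypothetical helper, not Bosquet API."
  [template]
  (let [text (cond
               (string? template) template
               (vector? template) (apply str template)
               :else "")]
    (into (sorted-set)
          (map (comp keyword second))
          (re-seq #"\{\{\s*([\w-]+)\s*\}\}" text))))

(def prompts
  {:question-answer "Question: {{question}} Answer: {{answer}}"
   :answer          :llm-call   ; stand-in for (llm :openai)
   :self-eval       ["Question: {{question}}"
                     "Answer: {{answer}}"
                     ""
                     "Is this a correct answer?"
                     "{{test}}"]
   :test            :llm-call}) ; stand-in for (llm :openai)

(slot-refs (:question-answer prompts))
;; => #{:answer :question}

(slot-refs (:self-eval prompts))
;; => #{:answer :question :test}

(slot-refs (:answer prompts))
;; => #{}
```
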
Chat prompts are written as a sequence of role and content pairs:

```clojure
(require '[bosquet.llm.generator :refer [generate llm]])
(require '[bosquet.llm.wkk :as wkk])

(generate
 [[:system "You are an amazing writer."]
  [:user ["Write a synopsis for the play:"
          "Title: {{title}}"
          "Genre: {{genre}}"
          "Synopsis:"]]
  [:assistant (llm wkk/openai
                   wkk/model-params {:temperature 0.8 :max-tokens 120}
                   wkk/var-name :synopsis)]
  [:user "Now write a critique of the above synopsis:"]
  [:assistant (llm wkk/openai
                   wkk/model-params {:temperature 0.2 :max-tokens 120}
                   wkk/var-name :critique)]]
 {:title "Mr. X"
  :genre "Sci-Fi"})
=>
#:bosquet{:conversation
          [[:system "You are an amazing writer."]
           [:user
            "Write a synopsis for the play:\nTitle: Mr. X\nGenre: Sci-Fi\nSynopsis:"]
           [:assistant "In a futuristic world where technology ..."]
           [:user "Now write a critique of the above synopsis:"]
           [:assistant
            "The synopsis for the play \"Mr. X\" presents an intriguing premise ..."]],
          :completions
          {:synopsis
           "In a futuristic world where technology has ...",
           :critique
           "The synopsis for the play \"Mr. X\" presents an intriguing premise set ..."}}
```

Generation returns :bosquet/conversation, listing the full chat with the generated parts filled in, and :bosquet/completions, containing only the generated data.

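The `#:bosquet{...}` form in the printed result is Clojure's namespaced map shorthand, so its top-level keys are `:bosquet/conversation` and `:bosquet/completions`. The following sketch works on a literal copy of the abridged result shown in this README rather than on a live call, and only illustrates how such a map can be read with core functions.

```clojure
;; Literal copy of the abridged result; no LLM call is made here.
(def result
  #:bosquet{:conversation
            [[:system "You are an amazing writer."]
             [:user "Write a synopsis for the play:\nTitle: Mr. X\nGenre: Sci-Fi\nSynopsis:"]
             [:assistant "In a futuristic world where technology ..."]
             [:user "Now write a critique of the above synopsis:"]
             [:assistant "The synopsis for the play \"Mr. X\" presents an intriguing premise ..."]]
            :completions
            {:synopsis "In a futuristic world where technology has ..."
             :critique "The synopsis for the play \"Mr. X\" presents an intriguing premise set ..."}})

;; Top-level keys carry the bosquet namespace.
(keys result)
;; => (:bosquet/conversation :bosquet/completions)

;; A single generated value, looked up by its var-name.
(get-in result [:bosquet/completions :synopsis])
;; => "In a futuristic world where technology has ..."

;; Only the assistant turns of the conversation.
(->> (:bosquet/conversation result)
     (filter (fn [[role _]] (= role :assistant)))
     (map second))
```
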
Chat generation supports tools for OpenAI- and Ollama-based models, similar to how ragtacts does it.

```clojure
(require '[clojure.string :as str])
(require '[bosquet.llm.generator :refer [generate llm]])
(require '[bosquet.llm.wkk :as wkk])

;; Define a tool for the weather station
(defn ^{:desc "Get the current weather in a given location"} get-current-weather
  [^{:type "string" :desc "The city, e.g. San Francisco"} location]
  (prn (format "Applying get-current-weather for location %s" location))
  (case (str/lower-case location)
    "tokyo" {:location "Tokyo" :temperature "10" :unit "fahrenheit"}
    "san francisco" {:location "San Francisco" :temperature "72" :unit "fahrenheit"}
    "paris" {:location "Paris" :temperature "22" :unit "fahrenheit"}
    {:location location :temperature "unknown"}))

(generate [[:system "You are a weather reporter"]
           [:user "What is the temperature in San Francisco"]
           [:assistant (llm wkk/ollama wkk/model-params
                            {:model "llama3.2:b" wkk/tools [#'get-current-weather]}
                            wkk/var-name :weather-report)]])
=>
#:bosquet{:conversation
          [[:system "You are a weather reporter"]
           [:user "What is the temperature in San Francisco"]
           [:assistant
            "According to the current weather conditions, the temperature in San Francisco is 72°F (Fahrenheit)."]],
          :completions
          {:weather-report
           "According to the current weather conditions, the temperature in San Francisco is 72°F (Fahrenheit)."},
          :usage
          {:weather-report {:prompt 90, :completion 21, :total 111},
           :bosquet/total {:prompt 90, :completion 21, :total 111}},
          :time 3034}
```
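
The tool above carries its description and parameter types as Clojure metadata on the function name and on the argument symbol. The sketch below shows how that metadata can be read back from the var with core functions. `tool-summary` is a hypothetical helper for illustration, the tool is a trimmed copy of the one in the README, and how Bosquet itself turns this metadata into a tool specification for the model is not shown here.

```clojure
;; Trimmed copy of the weather tool, kept only for its metadata.
(defn ^{:desc "Get the current weather in a given location"} get-current-weather
  [^{:type "string" :desc "The city, e.g. San Francisco"} location]
  {:location location :temperature "unknown"})

(defn tool-summary
  "Collect the description and parameter metadata attached to a tool var.
  Hypothetical helper, not Bosquet API."
  [tool-var]
  (let [m (meta tool-var)]
    {:name   (name (:name m))
     :desc   (:desc m)
     ;; :arglists keeps the argument symbols together with their metadata
     :params (mapv (fn [param]
                     (assoc (select-keys (meta param) [:type :desc])
                            :name (name param)))
                   (first (:arglists m)))}))

(tool-summary #'get-current-weather)
```

The call returns a map with the tool name, its `:desc`, and one entry per parameter holding the `:type`, `:desc`, and parameter name.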

```clojure
;; Write some calculator functions
(defn ^{:desc "add 'x' and 'y'"} add
  [^{:type "number" :desc "First number to add"} x
   ^{:type "number" :desc "Second number to add"} y]
  (prn (format "Applying add for %s %s" x y))
  (+ (if (number? x) x (Float/parseFloat x))
     (if (number? y) y (Float/parseFloat y))))

(defn ^{:desc "subtract 'y' from 'x'"} sub
  [^{:type "number" :desc "Number to subtract from"} x
   ^{:type "number" :desc "Number to subtract"} y]
  (prn (format "Applying sub %s %s" x y))
  (- (if (number? x) x (Float/parseFloat x))
     (if (number? y) y (Float/parseFloat y))))

(generate [[:system "You are a math wizard"]
           [:user "What is 2 + 2 - 3"]
           [:assistant (llm wkk/openai
                            wkk/model-params {wkk/tools [#'add #'sub]}
                            wkk/var-name :answer)]])
=>
#:bosquet{:conversation
          [[:system "You are a math wizard"]
           [:user "What is 2 + 2 - 3"]
           [:assistant "2 + 2 - 3 equals 1"]],
          :completions {:answer "2 + 2 - 3 equals 1"},
          :usage
          {:answer {:prompt 181, :completion 12, :total 193},
           :bosquet/total {:prompt 181, :completion 12, :total 193}},
          :time 1749}
```
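
Both tool examples report a `:usage` map with a `:bosquet/total` entry. When several generations run in one session, those totals can be added up with core functions. The sketch below uses literal copies of the two usage maps printed in this README; `total-usage` is a hypothetical helper, and reading the numbers as token counts is an assumption.

```clojure
;; Usage maps copied from the weather and calculator results.
(def weather-usage
  {:weather-report {:prompt 90, :completion 21, :total 111}
   :bosquet/total  {:prompt 90, :completion 21, :total 111}})

(def math-usage
  {:answer        {:prompt 181, :completion 12, :total 193}
   :bosquet/total {:prompt 181, :completion 12, :total 193}})

(defn total-usage
  "Sum the :bosquet/total counts of several usage maps.
  Hypothetical helper, not Bosquet API."
  [& usages]
  (apply merge-with + (map :bosquet/total usages)))

(total-usage weather-usage math-usage)
;; => {:prompt 271, :completion 33, :total 304}
```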


Alternative AI tools for bosquet

Similar Open Source Tools

ling

Ling is a workflow framework supporting streaming of structured content from large language models. It enables quick responses to content streams, reducing waiting times. Ling parses JSON data streams character by character in real-time, outputting content in jsonuri format. It facilitates immediate front-end processing by converting content during streaming input. The framework supports data stream output via JSONL protocol, correction of token errors in JSON output, complex asynchronous workflows, status messages during streaming output, and Server-Sent Events.

llm-sandbox

LLM Sandbox is a lightweight and portable sandbox environment designed to securely execute large language model (LLM) generated code in a safe and isolated manner using Docker containers. It provides an easy-to-use interface for setting up, managing, and executing code in a controlled Docker environment, simplifying the process of running code generated by LLMs. The tool supports multiple programming languages, offers flexibility with predefined Docker images or custom Dockerfiles, and allows scalability with support for Kubernetes and remote Docker hosts.

aiohttp-pydantic

Aiohttp pydantic is an aiohttp view to easily parse and validate requests. You define using function annotations what your methods for handling HTTP verbs expect, and Aiohttp pydantic parses the HTTP request for you, validates the data, and injects the parameters you want. It provides features like query string, request body, URL path, and HTTP headers validation, as well as Open API Specification generation.

agent-kit

AgentKit is a framework for creating and orchestrating AI Agents, enabling developers to build, test, and deploy reliable AI applications at scale. It allows for creating networked agents with separate tasks and instructions to solve specific tasks, as well as simple agents for tasks like writing content. The framework requires the Inngest TypeScript SDK as a dependency and provides documentation on agents, tools, network, state, and routing. Example projects showcase AgentKit in action, such as the Test Writing Network demo using Workflow Kit, Supabase, and OpenAI.

letta

Letta is an open source framework for building stateful LLM applications. It allows users to build stateful agents with advanced reasoning capabilities and transparent long-term memory. The framework is white box and model-agnostic, enabling users to connect to various LLM API backends. Letta provides a graphical interface, the Letta ADE, for creating, deploying, interacting with, and observing agents. Users can access Letta via the REST API, the Python and TypeScript SDKs, and the ADE. Letta supports persistence by storing agent data in a database, with PostgreSQL recommended for data migrations. Users can install Letta using Docker or pip, with Docker defaulting to PostgreSQL and pip defaulting to SQLite. Letta also offers a CLI tool for interacting with agents. The project is open source and welcomes contributions from the community.

structured-logprobs

This Python library enhances OpenAI chat completion responses by providing detailed information about token log probabilities. It works with OpenAI Structured Outputs to ensure model-generated responses adhere to a JSON Schema. Developers can analyze and incorporate token-level log probabilities to understand the reliability of structured data extracted from OpenAI models.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

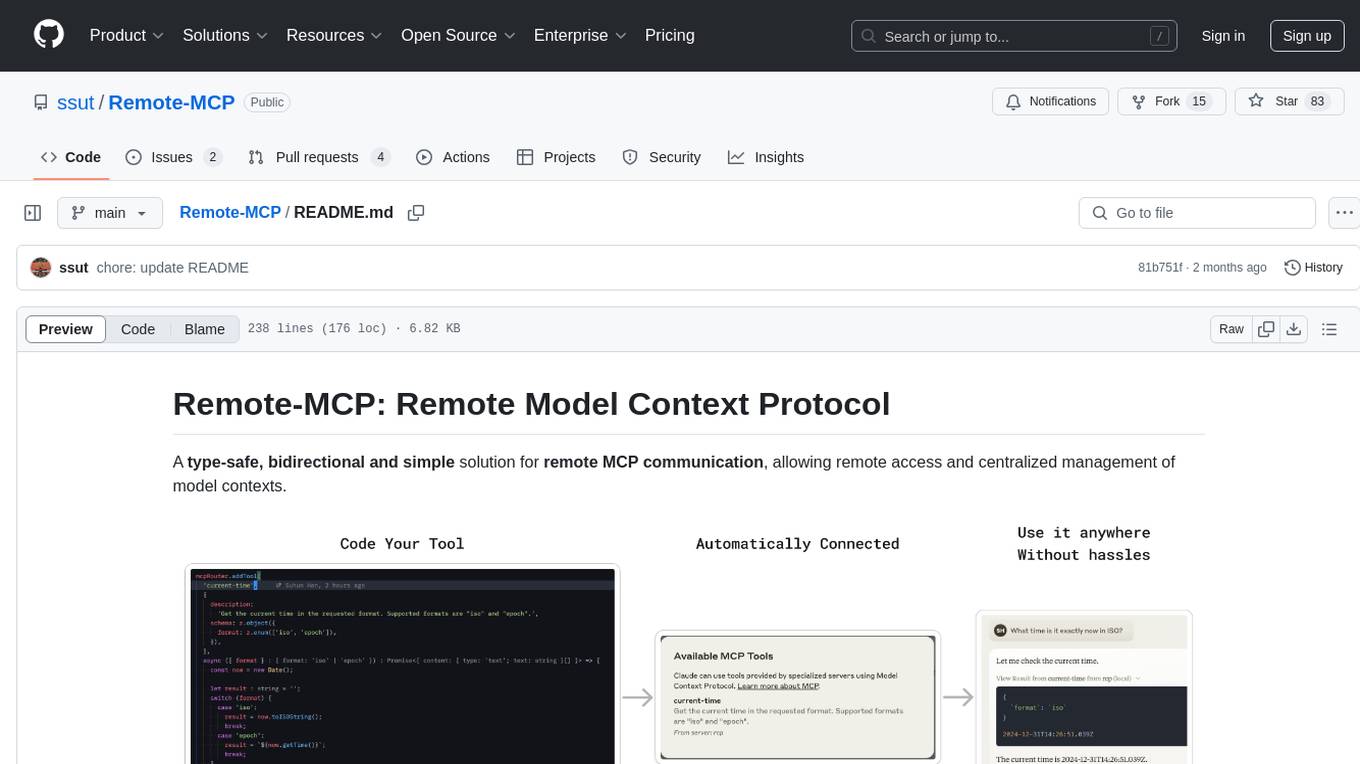

Remote-MCP

Remote-MCP is a type-safe, bidirectional, and simple solution for remote MCP communication, enabling remote access and centralized management of model contexts. It provides a bridge for immediate remote access to a remote MCP server from a local MCP client, without waiting for future official implementations. The repository contains client and server libraries for creating and connecting to remotely accessible MCP services. The core features include basic type-safe client/server communication, MCP command/tool/prompt support, custom headers, and ongoing work on crash-safe handling and event subscription system.

redis-vl-python

The Python Redis Vector Library (RedisVL) is a tailor-made client for AI applications leveraging Redis. It enhances applications with Redis' speed, flexibility, and reliability, incorporating capabilities like vector-based semantic search, full-text search, and geo-spatial search. The library bridges the gap between the emerging AI-native developer ecosystem and the capabilities of Redis by providing a lightweight, elegant, and intuitive interface. It abstracts the features of Redis into a grammar that is more aligned to the needs of today's AI/ML Engineers or Data Scientists.

dynamiq

Dynamiq is an orchestration framework designed to streamline the development of AI-powered applications, specializing in orchestrating retrieval-augmented generation (RAG) and large language model (LLM) agents. It provides an all-in-one Gen AI framework for agentic AI and LLM applications, offering tools for multi-agent orchestration, document indexing, and retrieval flows. With Dynamiq, users can easily build and deploy AI solutions for various tasks.

AIGODLIKE-ComfyUI-Translation

A plugin for multilingual translation of ComfyUI. It translates the resident menu bar, search bar, right-click context menu, nodes, and more.

npi

NPi is an open-source platform providing Tool-use APIs to empower AI agents with the ability to take action in the virtual world. It is currently under active development, and the APIs are subject to change in future releases. NPi offers a command line tool for installation and setup, along with a GitHub app for easy access to repositories. The platform also includes a Python SDK and examples like Calendar Negotiator and Twitter Crawler. Join the NPi community on Discord to contribute to the development and explore the roadmap for future enhancements.

comet-llm

CometLLM is a tool to log and visualize your LLM prompts and chains. Use CometLLM to identify effective prompt strategies, streamline your troubleshooting, and ensure reproducible workflows!

parakeet

Parakeet is a Go library for creating GenAI apps with Ollama. It enables the creation of generative AI applications that can generate text-based content. The library provides tools for simple completion, completion with context, chat completion, and more. It also supports function calling with tools and Wasm plugins. Parakeet allows users to interact with language models and create AI-powered applications easily.

For similar tasks

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, and 智谱AI. It can interact with viewers in real time during live streams on platforms like Bilibili, Douyin, Kuaishou, and Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, and VALL-E-X to generate responses to viewer questions, and can change voice using so-vits-svc or DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free; any identical copycat programs offered for sale are pirated, so please stop them promptly.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.