Best AI tools for "Generate Responses"

13 - AI tool Sites

Vidura

Vidura is a prompt management system integrated with multiple AI systems, designed to enhance the Generative AI experience. Users can compose, organize, share, and export AI prompts easily. It offers features like categorizing prompts, built-in templates, prompt history, dynamic prompting, and community sharing. Vidura aims to make Generative AI accessible and user-friendly, providing a platform for learning and collaboration in the AI community.

Fastbreak

Fastbreak is an AI assistant designed to help users win Requests for Proposals (RFPs) and Requests for Information (RFIs) by providing cognitive proposal management for high-stakes enterprise deals. The tool accelerates completion time by 10x, optimizes access to relevant information, and streamlines the use of domain expertise, so users save time and win more business. Fastbreak enables users to answer business questionnaires in minutes and offers one-step onboarding, flexible answers, powerful export capabilities, and contextual smarts that synthesize answers from previous responses and documents.

ChatTab

ChatTab is a desktop application for macOS that serves as a ChatGPT API client, offering a seamless experience for users to interact with various GPT models. It provides a native Mac app with features like Markdown support, multiple tabs for conversations, shortcut keys, iCloud sync, and GPT4-Vision for image-related queries. ChatTab prioritizes security and privacy by not storing user data or logs, and encrypting the API Key. It supports multiple languages and offers different pricing plans to cater to various user needs.

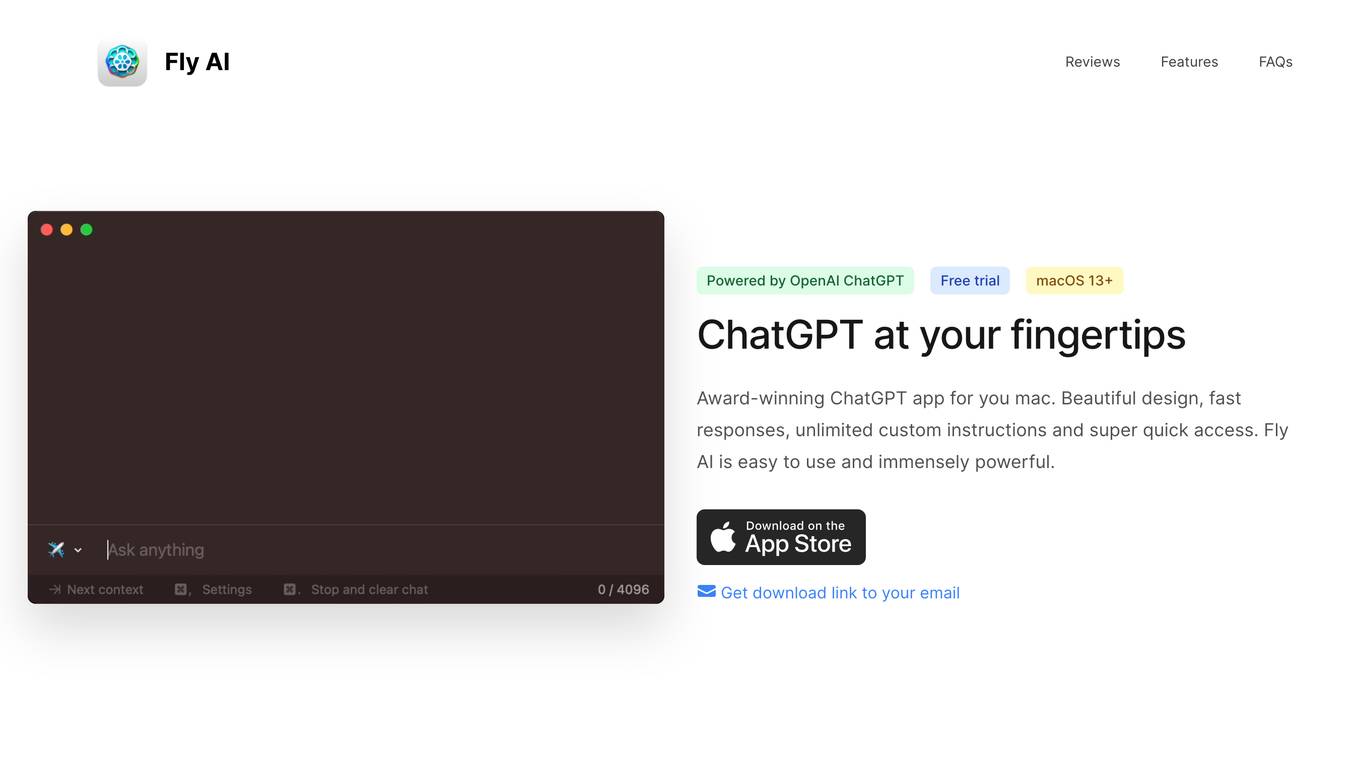

Fly AI

Fly AI is a powerful and user-friendly AI application designed for Mac users. It leverages the capabilities of OpenAI's ChatGPT to provide fast responses, unlimited custom instructions, and quick access. With a beautiful design and context-aware features, Fly AI allows users to work faster and smarter by creating custom AI mini-apps. The application prioritizes user privacy by not recording data and offering a standalone subscription model. Fly AI is a native Mac app, ensuring a seamless user experience without the need to open a browser.

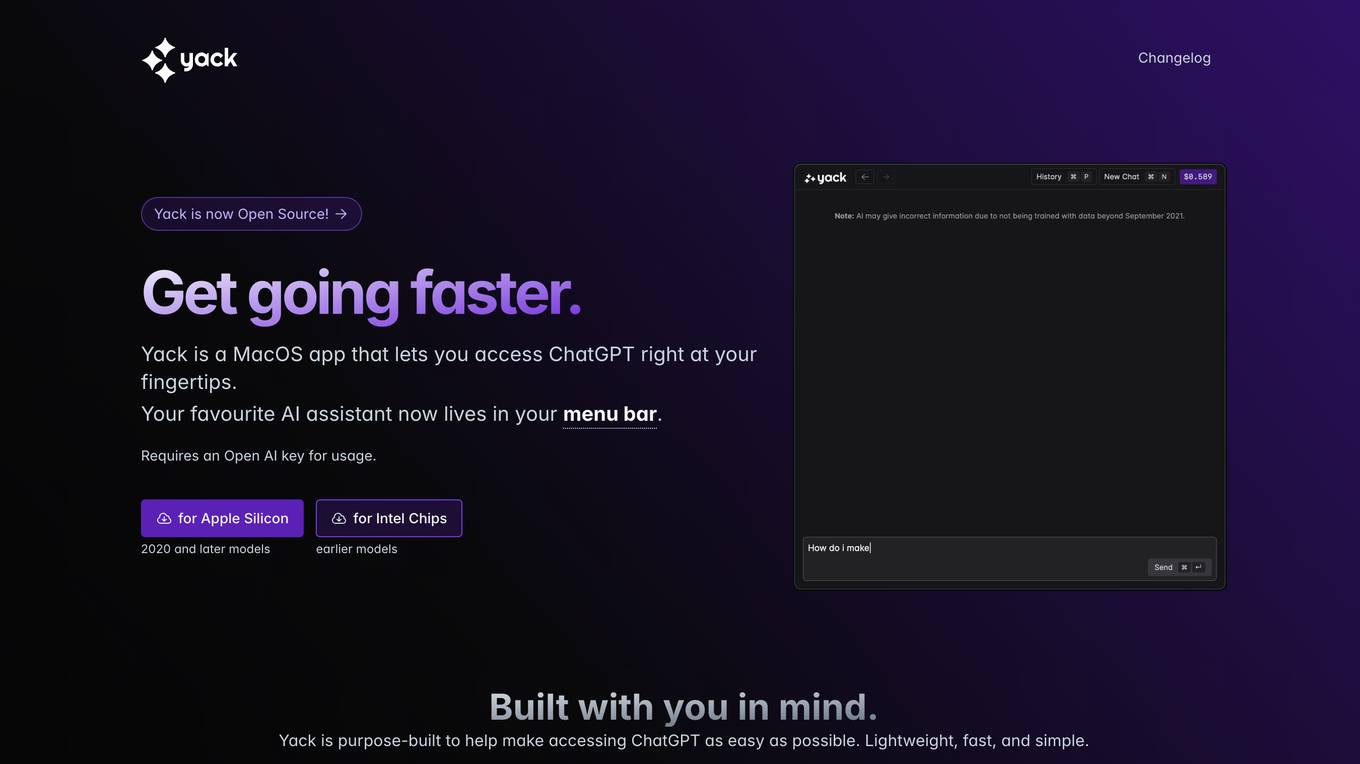

Yack

Yack is an AI tool that provides easy access to ChatGPT on macOS. It is a lightweight, fast application designed for keyboard-driven use, offering features like multiple themes and Markdown support, with cross-app integration and prompt templates planned. Yack prioritizes user privacy by not storing any data on external servers, ensuring that all information remains on the user's device. Built with Rust, Yack is efficient and compact, making it a convenient tool for generating AI-powered responses and completing prompts.

ConversAI

ConversAI is an AI-powered chat assistant designed to enhance online communication. It uses natural language processing and machine learning to understand and respond to messages in a conversational manner. With ConversAI, users can quickly generate personalized responses, summarize long messages, detect the tone of conversations, communicate in multiple languages, and even add GIFs to their replies. It integrates seamlessly with various messaging platforms and tools, making it easy to use and efficient. ConversAI helps users save time, improve their communication skills, and have more engaging conversations online.

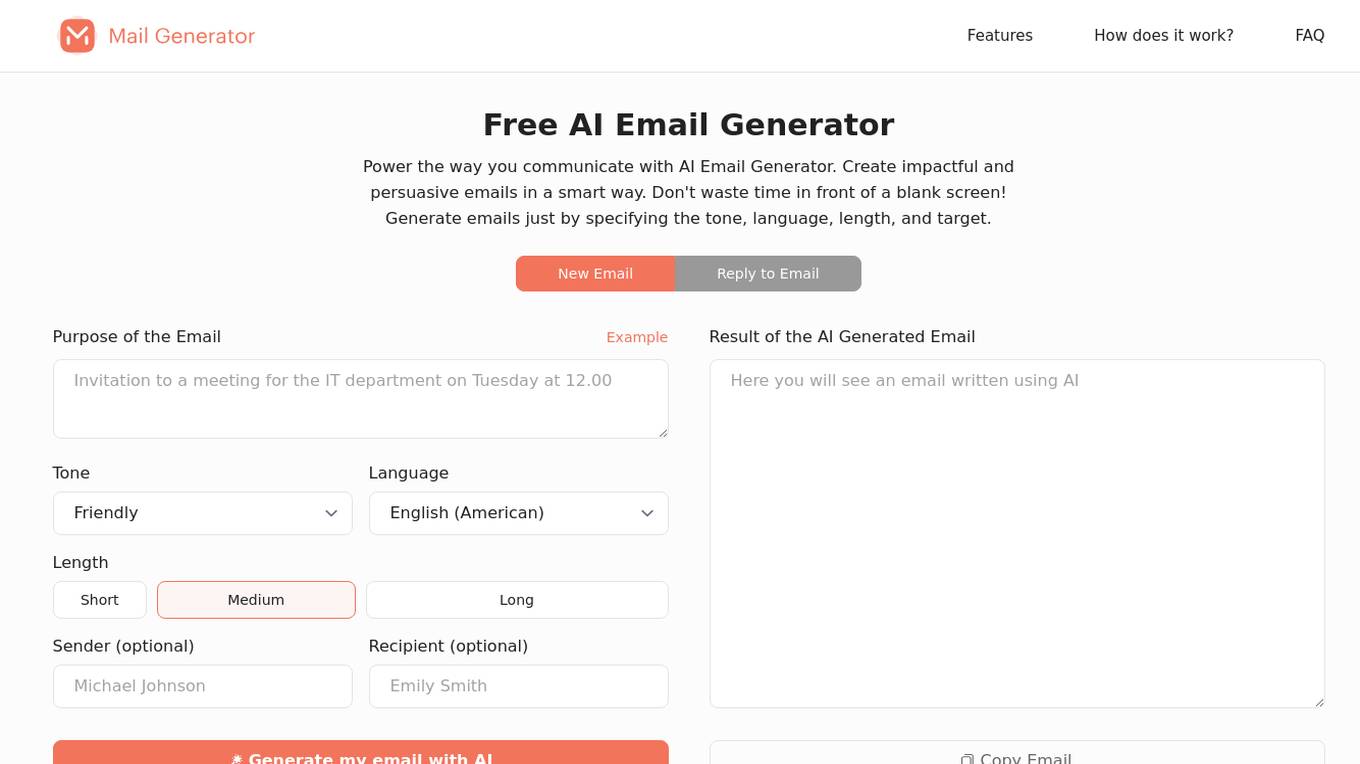

MailGenerator.ai

MailGenerator.ai is an AI-powered email generator tool that helps users create impactful and persuasive emails in a smart way. It allows users to specify the tone, language, length, and target to generate personalized email content quickly and efficiently. The tool adapts to user needs, increases email response rates, and improves email content quality. With an intuitive interface, users can easily create professional emails for various business purposes, such as marketing, sales, and customer service.

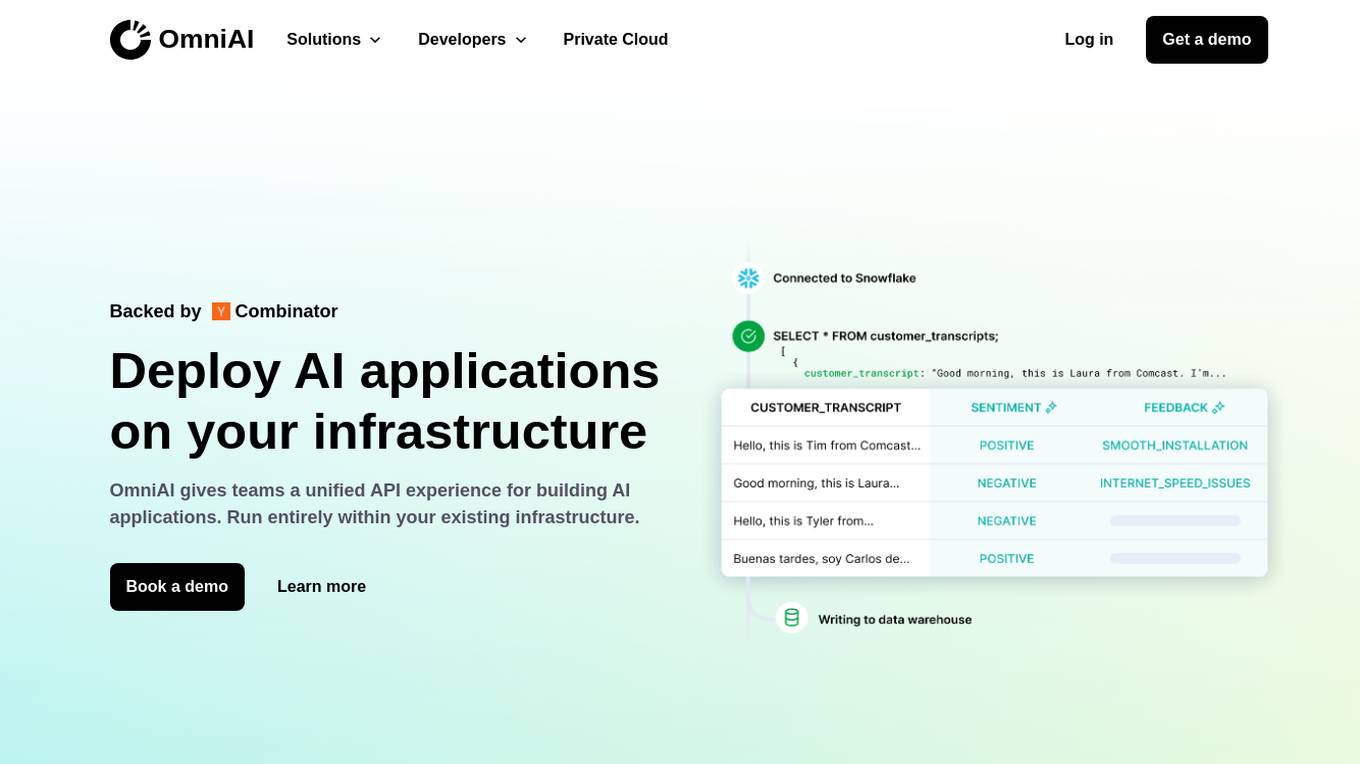

OmniAI

OmniAI is an AI tool that allows teams to deploy AI applications on their existing infrastructure. It provides a unified API experience for building AI applications and offers a wide selection of industry-leading models. With models like Llama 3, Claude 3, Mistral Large, and AWS Titan, OmniAI covers tasks such as natural language understanding, generation, safety, ethical behavior, and context retention. It also enables users to deploy and query the latest AI models quickly and easily within their virtual private cloud environment.

ChatGPT

ChatGPT is an AI-powered chatbot tool that allows users to create and train chatbots for various purposes. It uses advanced natural language processing to generate human-like responses and engage in conversations with users. With ChatGPT, you can customize the chatbot's responses, train it on specific topics, and integrate it into your website or application to provide interactive and personalized experiences for your users.

QuickMail AI

QuickMail AI is an AI-powered email assistant that helps users craft professional emails in seconds. It utilizes AI technology to generate full, well-structured emails from brief prompts, saving users time and effort. The tool offers customizable outputs, allowing users to fine-tune emails to match their personal style. With features like AI-powered generation and time-saving efficiency, QuickMail AI is designed to streamline the email writing process and enhance productivity.

Messenger Genie

Messenger Genie is an AI-powered tool designed to help users effortlessly generate tailored comments and messages based on the content of any website, social media post, or email. It uses advanced AI to provide context-aware replies in just one click, enhancing online communication across various platforms. With features like AI-powered response generation, real-time content analysis, adjustable response tone and length, auto-save, and content personalization, Messenger Genie aims to elevate the quality and efficiency of online interactions.

Chat GPT AI

Chat GPT AI is an online chatbot application based on a large language model, a type of neural network. It provides 24/7 customer support, quick replies to personal queries, timely suggestions, and help with common issues. The application assists users with a variety of tasks, can handle multiple queries, and has attracted a significant worldwide audience through its various AI assistants. Chat GPT AI is designed to help with everything from children's questions to professional tasks, providing accurate responses in a friendly tone.

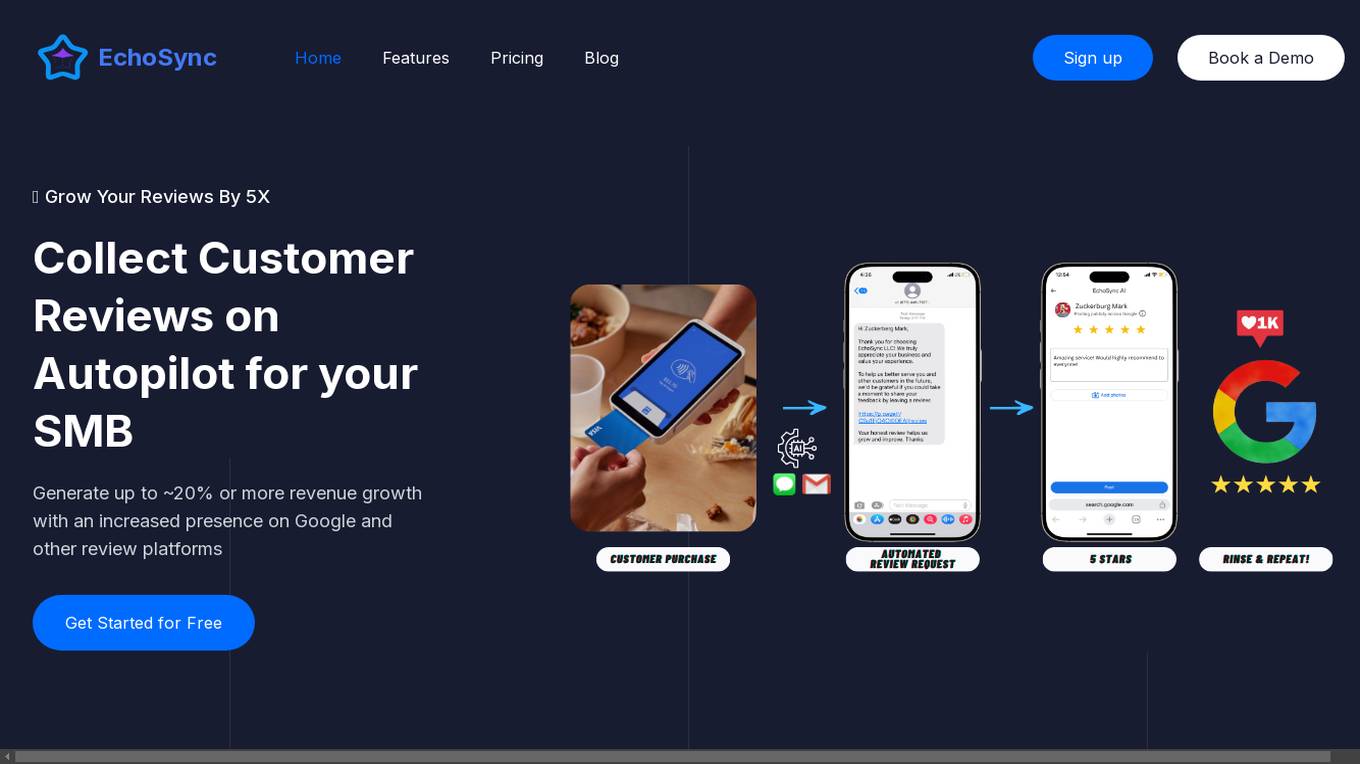

EchoSync

EchoSync is an AI-powered review management software that revolutionizes the way businesses handle their online reputation. It leverages advanced algorithms and natural language processing to automate and streamline the review management process. EchoSync offers a comprehensive suite of AI-powered features designed to simplify review management for businesses, including automated review monitoring, sentiment analysis, intelligent response suggestions, and detailed analytics. By harnessing the power of AI, businesses can efficiently monitor, analyze, and respond to online reviews, saving time and resources while enhancing their online reputation.

55 - Open Source AI Tools

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.
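
As a rough illustration of routing by semantic similarity, the sketch below compares a query vector against a few example vectors per route and picks the closest route above a threshold. It is not the semantic-router API: the `ROUTES` table, the `route` function, the threshold value, and the three-dimensional toy vectors are all assumptions made for this example, and a real setup would obtain its vectors from an embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical toy embeddings: each route has a few example vectors.
# A real system would embed example utterances with an embedding model.
ROUTES = {
    "weather": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]],
    "smalltalk": [[0.1, 0.9, 0.1], [0.0, 0.8, 0.3]],
}


def route(query_vec, routes=ROUTES, threshold=0.75):
    """Return the best-matching route name, or None if nothing is close enough."""
    best_name, best_score = None, threshold
    for name, examples in routes.items():
        # Score a route by its most similar example.
        score = max(cosine(query_vec, ex) for ex in examples)
        if score > best_score:
            best_name, best_score = name, score
    return best_name


if __name__ == "__main__":
    print(route([0.85, 0.15, 0.05]))  # close to the "weather" examples
    print(route([0.0, 0.0, 1.0]))     # far from every route
```

Because the decision is a handful of vector comparisons rather than a model generation, it can run before any LLM call is made.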

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, iFlytek Spark (讯飞星火), and Zhipu AI (智谱AI). It can interact with viewers in real time during live streams on platforms like Bilibili, Douyin, Kuaishou, and Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, and VALL-E-X to generate responses to viewer questions, and can change its voice using so-vits-svc or DDSP-SVC. It can also work with Stable Diffusion to display drawings and can loop custom texts. This project is completely free; any identical copycat programs being sold are pirated, so please stop them promptly.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned to follow instructions. The model was trained on 8×A100 GPUs and generates responses in Korean. KULLM can exhibit hallucination and repetition because of its decoding strategy, so users should be cautious: the model may produce inaccurate or harmful results. Benchmark performance may vary when no fixed system prompt is used.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

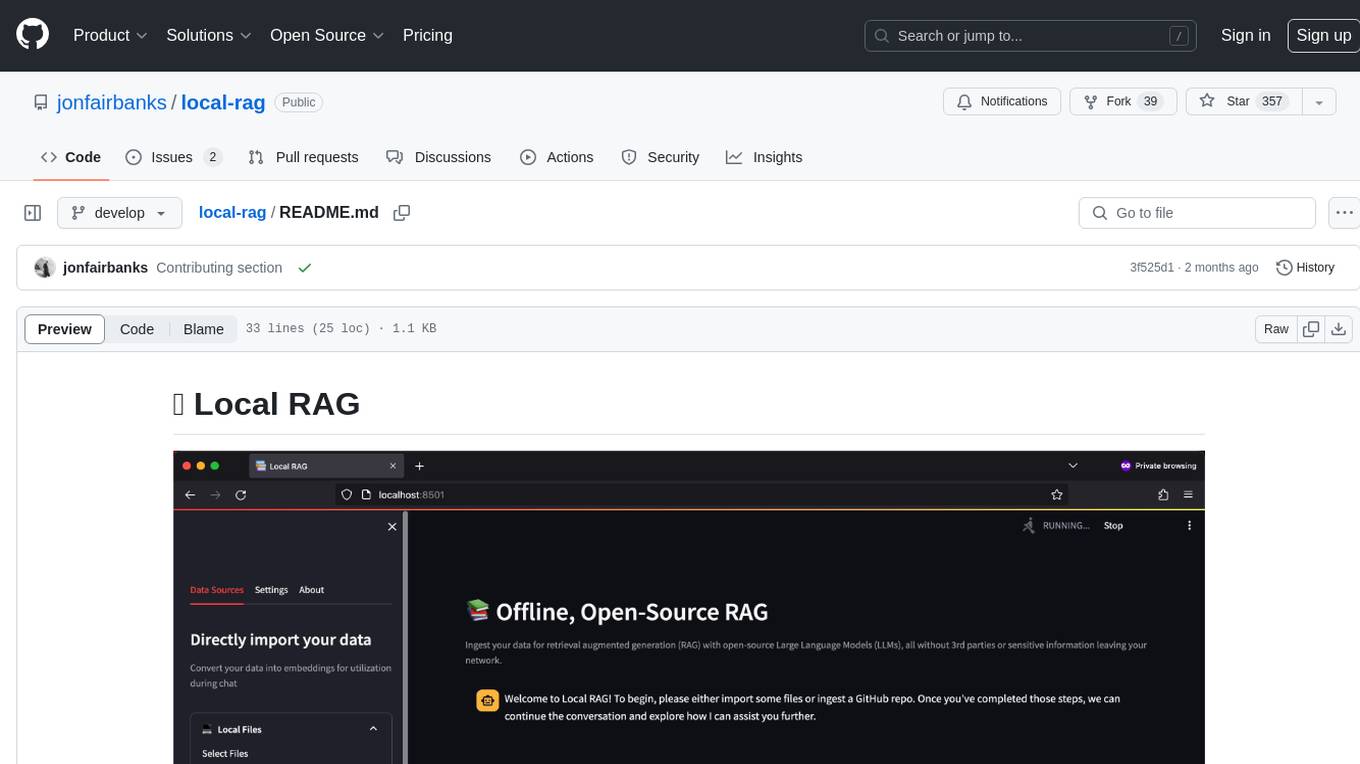

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

whatsapp-ai-bot

The WhatsApp AI Bot is a chatbot that uses the APIs of various AI models to generate responses to user input. Users can interact with the bot using commands to access different AI models such as Gemini, Gemini-Vision, CHAT-GPT, DALL-E, and Stability AI. Additionally, users can create their own custom models to personalize the bot's behavior. The bot operates on WhatsApp Web through Puppeteer and requires API keys for Gemini, OpenAI, and StabilityAI. It provides a range of functionalities and customization options for users interested in AI-powered chatbots.

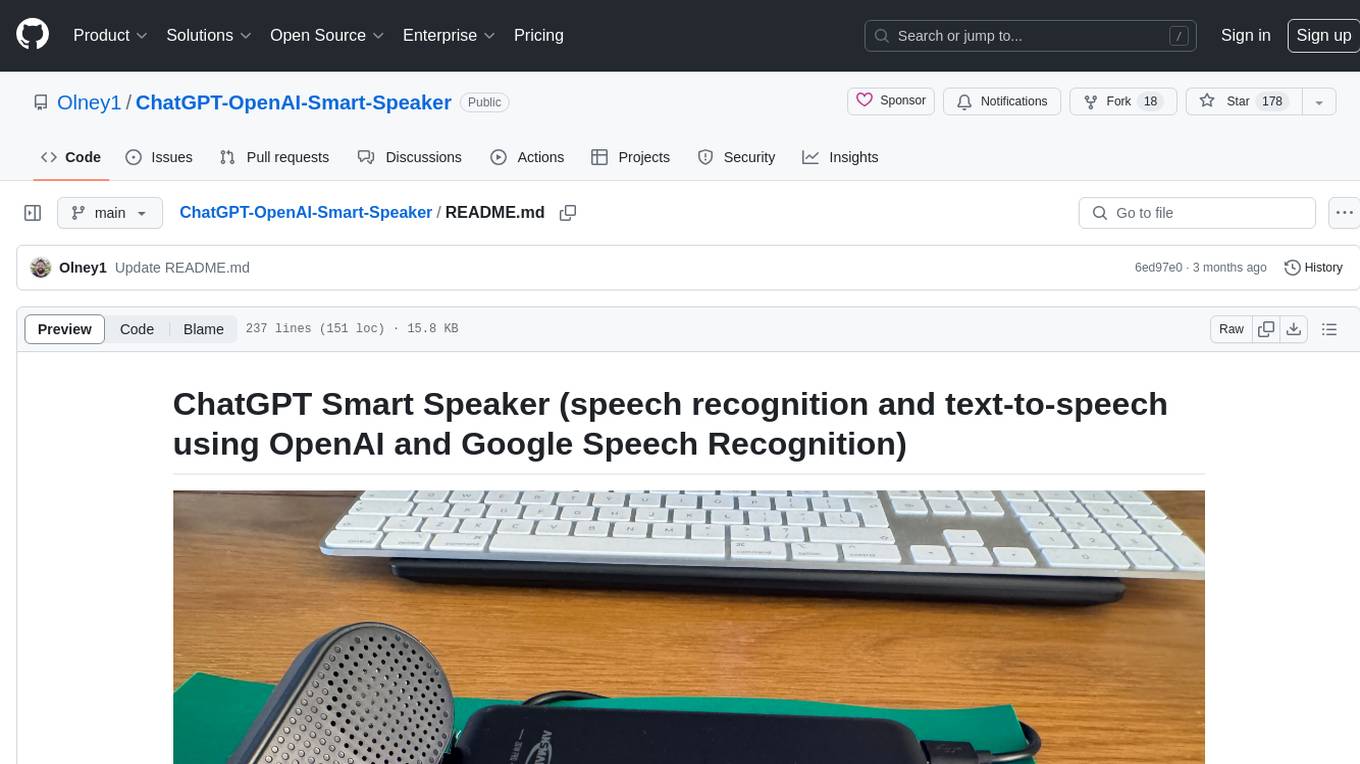

ChatGPT-OpenAI-Smart-Speaker

ChatGPT Smart Speaker is a project that enables speech recognition and text-to-speech functionalities using OpenAI and Google Speech Recognition. It provides scripts for running on PC/Mac and Raspberry Pi, allowing users to interact with a smart speaker setup. The project includes detailed instructions for setting up the required hardware and software dependencies, along with customization options for the OpenAI model engine, language settings, and response randomness control. The Raspberry Pi setup involves utilizing the ReSpeaker hardware for voice feedback and light shows. The project aims to offer an advanced smart speaker experience with features like wake word detection and response generation using AI models.

Online-RLHF

This repository, Online RLHF, focuses on aligning large language models (LLMs) through online iterative Reinforcement Learning from Human Feedback (RLHF). It aims to bridge the gap in existing open-source RLHF projects by providing a detailed recipe for online iterative RLHF. The workflow presented here has been shown to outperform offline counterparts in recent LLM literature, achieving comparable or better results than LLaMA3-8B-instruct using only open-source data. The repository includes model releases for the SFT, reward, and RLHF models, along with installation instructions for both inference and training environments. Users can follow step-by-step guidance for supervised fine-tuning, reward modeling, data generation, data annotation, and training, ultimately enabling iterative training to run automatically.

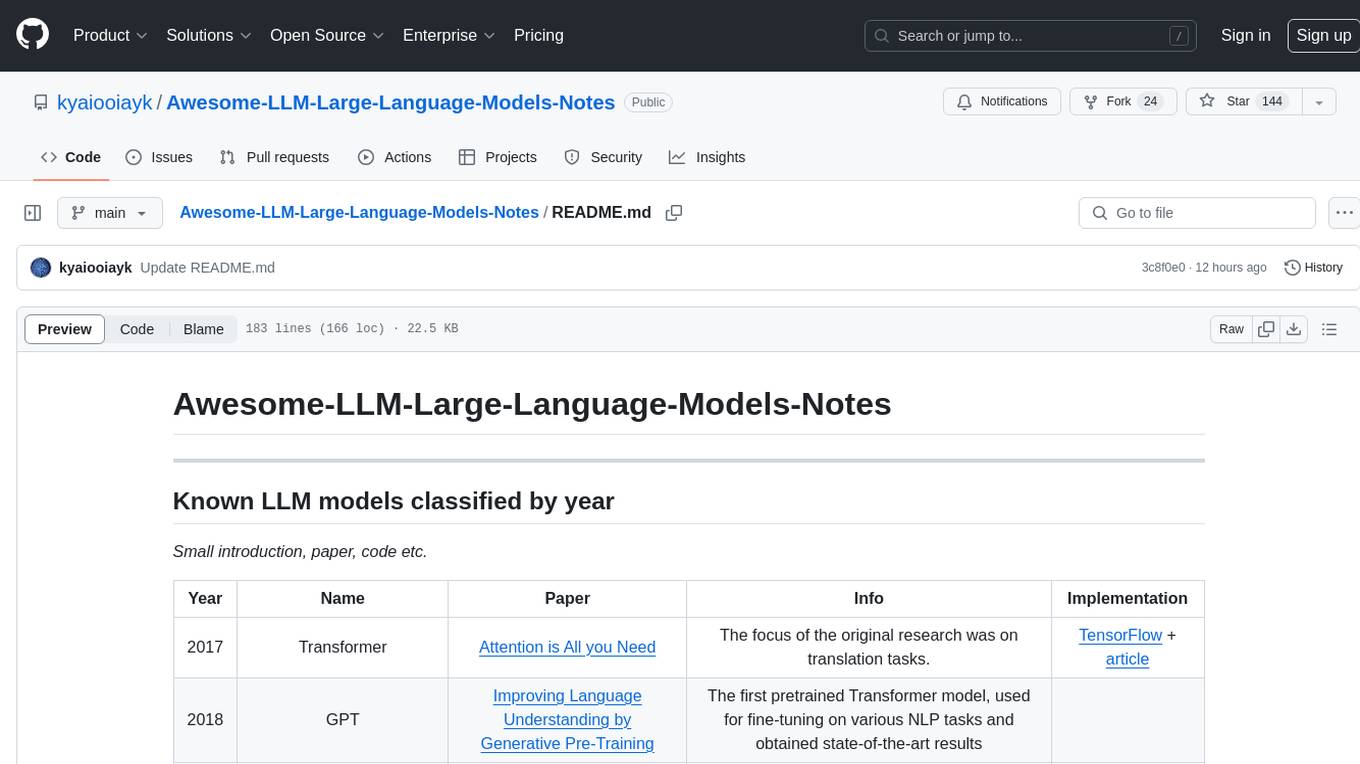

Awesome-LLM-Large-Language-Models-Notes

Awesome-LLM-Large-Language-Models-Notes is a repository that provides a comprehensive collection of information on various Large Language Models (LLMs) classified by year, size, and name. It includes details on known LLM models, their papers, implementations, and specific characteristics. The repository also covers LLM models classified by architecture, must-read papers, blog articles, tutorials, and implementations from scratch. It serves as a valuable resource for individuals interested in understanding and working with LLMs in the field of Natural Language Processing (NLP).

allms

allms is a versatile and powerful library designed to streamline the process of querying Large Language Models (LLMs). Developed by Allegro engineers, it simplifies working with LLM applications by providing a user-friendly interface, asynchronous querying, automatic retrying mechanism, error handling, and output parsing. It supports various LLM families hosted on different platforms like OpenAI, Google, Azure, and GCP. The library offers features for configuring endpoint credentials, batch querying with symbolic variables, and forcing structured output format. It also provides documentation, quickstart guides, and instructions for local development, testing, updating documentation, and making new releases.

biochatter

Generative AI models have shown tremendous usefulness in increasing accessibility and automation of a wide range of tasks. This repository contains the `biochatter` Python package, a generic backend library for the connection of biomedical applications to conversational AI. It aims to provide a common framework for deploying, testing, and evaluating diverse models and auxiliary technologies in the biomedical domain. BioChatter is part of the BioCypher ecosystem, connecting natively to BioCypher knowledge graphs.

merlin

Merlin is a groundbreaking model capable of generating natural language responses intricately linked with object trajectories of multiple images. It excels in predicting and reasoning about future events based on initial observations, showcasing unprecedented capability in future prediction and reasoning. Merlin achieves state-of-the-art performance on the Future Reasoning Benchmark and multiple existing multimodal language models benchmarks, demonstrating powerful multi-modal general ability and foresight minds.

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

tiny-ai-client

Tiny AI Client is a lightweight tool designed for easily using and switching between Large Language Models (LLMs), with support for vision and tool usage. It aims to provide a simple and intuitive interface for interacting with various LLMs, allowing users to set and change models, send messages, use tools, and handle vision tasks. The core logic of the tool is kept minimal and easy to understand, with separate modules for vision and tool usage utilities. Users can interact with the tool through simple Python scripts, passing model names, messages, tools, and images as required.

candle-vllm

Candle-vllm is an efficient and easy-to-use platform designed for inference and serving local LLMs, featuring an OpenAI compatible API server. It offers a highly extensible trait-based system for rapid implementation of new module pipelines, streaming support in generation, efficient management of key-value cache with PagedAttention, and continuous batching. The tool supports chat serving for various models and provides a seamless experience for users to interact with LLMs through different interfaces.

ask-astro

Ask Astro is an open-source reference implementation of Andreessen Horowitz's LLM Application Architecture built by Astronomer. It provides an end-to-end example of a Q&A LLM application used to answer questions about Apache Airflow® and Astronomer. Ask Astro includes Airflow DAGs for data ingestion, an API for business logic, a Slack bot, a public UI, and DAGs for processing user feedback. The tool is divided into data retrieval & embedding, prompt orchestration, and feedback loops.

UnionLLM

UnionLLM is a lightweight open-source Python toolkit that provides a unified way to access various domestic and foreign large language models and Agent orchestration tools compatible with OpenAI. It aims to connect various large language models in a unified and easily extensible way, making it more convenient to use multiple large language models. UnionLLM currently supports various domestic large language models and Agent orchestration tools, as well as over 100 models through LiteLLM, including models from major overseas language model developers and cloud service providers. It simplifies the process of calling different models by providing a consistent interface and expanding the returned information to include context for knowledge base retrieval.

unify

The Unify Python Package provides access to the Unify REST API, allowing users to query Large Language Models (LLMs) from any Python 3.7.1+ application. It includes Synchronous and Asynchronous clients with Streaming responses support. Users can easily use any endpoint with a single key, route to the best endpoint for optimal throughput, cost, or latency, and customize prompts to interact with the models. The package also supports dynamic routing to automatically direct requests to the top-performing provider. Additionally, users can enable streaming responses and interact with the models asynchronously for handling multiple user requests simultaneously.
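
To make the idea of routing by throughput, cost, or latency concrete, here is a minimal sketch of picking an endpoint by a chosen metric. It does not use the Unify package or its API: the `ENDPOINTS` table, its field names, its numbers, and the `pick_endpoint` helper are all hypothetical and exist only for illustration.

```python
# Hypothetical endpoint statistics; the names and numbers are made up.
ENDPOINTS = [
    {"name": "provider-a", "latency_ms": 420, "cost_per_1k": 0.60, "tokens_per_s": 55},
    {"name": "provider-b", "latency_ms": 180, "cost_per_1k": 0.90, "tokens_per_s": 70},
    {"name": "provider-c", "latency_ms": 650, "cost_per_1k": 0.25, "tokens_per_s": 120},
]

# For each metric: (field to compare, whether a lower value is better).
METRICS = {
    "latency": ("latency_ms", True),
    "cost": ("cost_per_1k", True),
    "throughput": ("tokens_per_s", False),
}


def pick_endpoint(endpoints, metric):
    """Return the name of the endpoint that is best for the given metric."""
    field, lower_is_better = METRICS[metric]
    chooser = min if lower_is_better else max
    return chooser(endpoints, key=lambda e: e[field])["name"]


if __name__ == "__main__":
    for metric in METRICS:
        print(metric, "->", pick_endpoint(ENDPOINTS, metric))
```

A dynamic router would refresh these statistics continuously; the sketch only shows the selection step over a fixed snapshot.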

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

gigachat

GigaChat is a Python library that allows GigaChain to interact with GigaChat, a neural network model capable of engaging in dialogue, writing code, and creating texts and images on demand. Data exchange with the service is handled through the GigaChat API. The library supports token streaming and works in both synchronous and asynchronous modes. It also enables precise token counting in text using the GigaChat API.

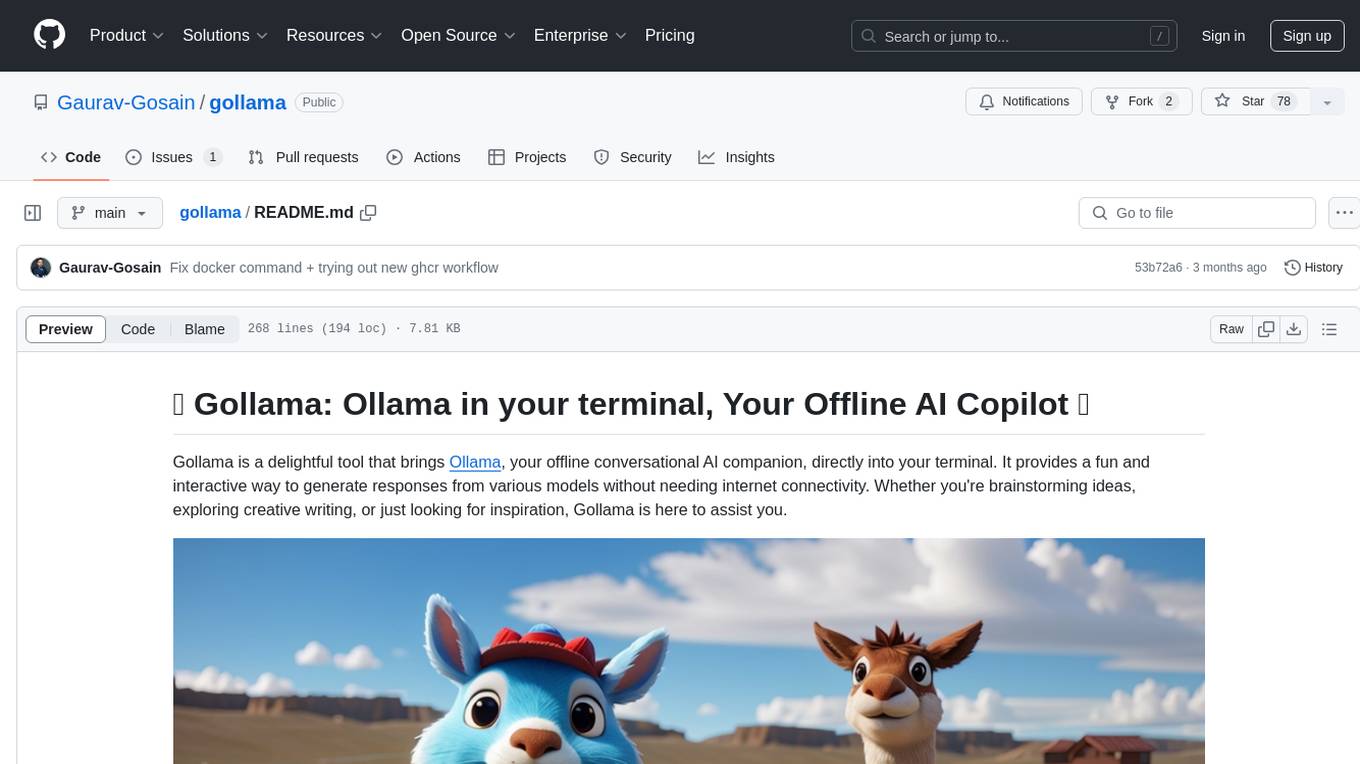

gollama

Gollama is a delightful tool that brings Ollama, your offline conversational AI companion, directly into your terminal. It provides a fun and interactive way to generate responses from various models without needing internet connectivity. Whether you're brainstorming ideas, exploring creative writing, or just looking for inspiration, Gollama is here to assist you. The tool offers an interactive interface, customizable prompts, a choice of multiple models, and visual feedback to enhance the user experience. It can be installed by downloading the latest release, using Go, running with Docker, or building from source. Users can configure Gollama with options such as a custom base URL, prompt, and model, and can enable raw output mode. The tool supports several modes: interactive, piped, CLI with image, and TUI with image. Gollama relies on third-party packages like bubbletea, glamour, huh, and lipgloss. The roadmap includes implementing piped mode, support for extracting code blocks, copying responses and code blocks to the clipboard, GitHub Actions for automated releases, and downloading models directly from Ollama using the REST API. Contributions are welcome, and the project is licensed under the MIT License.

conversational-agent-langchain

This repository contains a REST backend for a conversational agent that supports embedding documents, semantic search, question answering over documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

token.js

Token.js is a TypeScript SDK that integrates with over 200 LLMs from 10 providers using OpenAI's format. It allows users to call LLMs, supports tools, JSON outputs, image inputs, and streaming, all running on the client side without the need for a proxy server. The tool is free and open source under the MIT license.

ComposeAI

ComposeAI is an Android & iOS application similar to ChatGPT, built using Compose Multiplatform. It utilizes various technologies such as Compose Multiplatform, Material 3, OpenAI Kotlin, Voyager, Koin, SQLDelight, Multiplatform Settings, Coil3, Napier, BuildKonfig, Firebase Analytics & Crashlytics, and AdMob. The app architecture follows Google's latest guidelines. Users need to set up their own OpenAI API key before using the app.

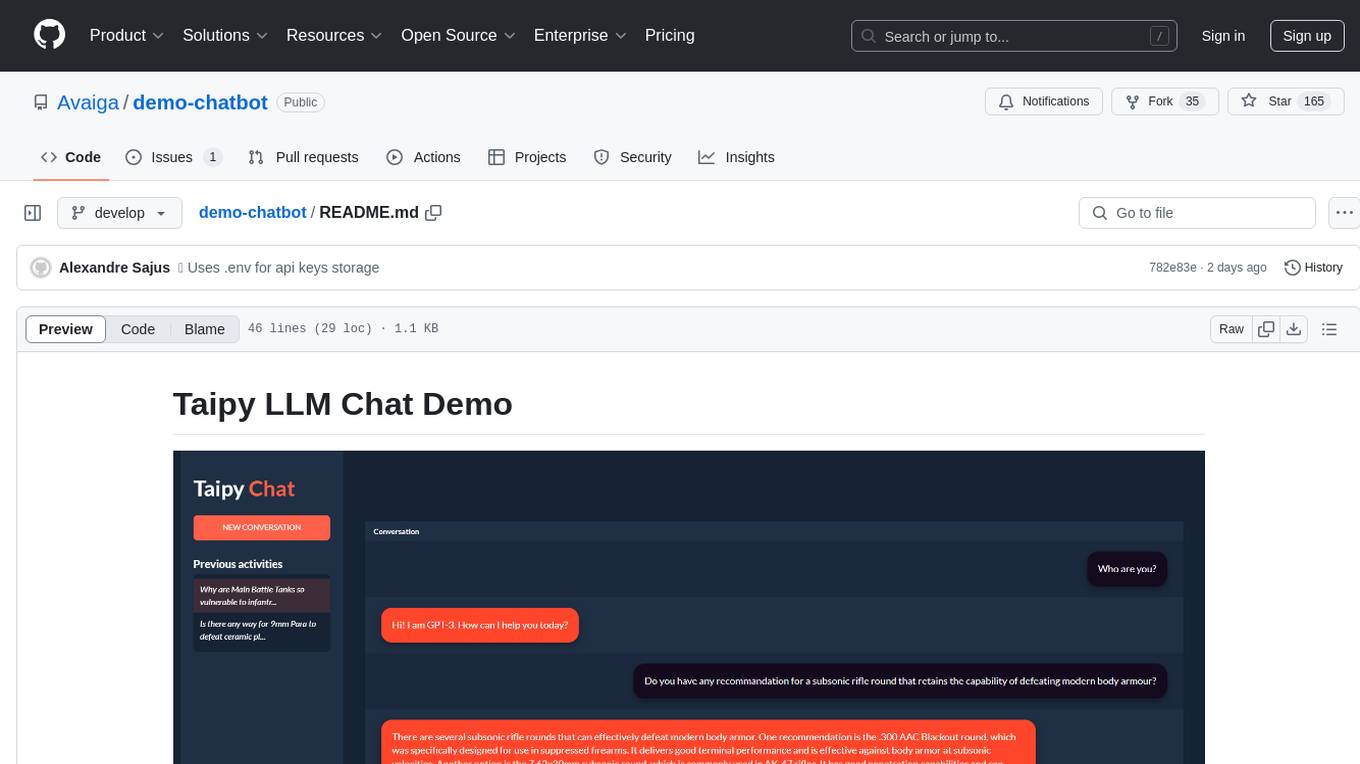

demo-chatbot

The demo-chatbot repository contains a simple app for chatting with an LLM, showing how users can create LLM inference web apps using Python. The app uses OpenAI's GPT-4 API to generate responses to user messages, with the flexibility to switch to other APIs or models. The Taipy documentation includes a tutorial for creating the app. To run the app, users need an OpenAI account with an active API key; they then clone the repository, install the dependencies, set the API key in a .env file, and run the main.py file.

raft

RAFT (Retrieval-Augmented Fine-Tuning) is a method for creating conversational agents that realistically emulate specific human targets. It involves a dual-phase process of fine-tuning and retrieval-based augmentation to generate nuanced and personalized dialogue. The tool is designed to combine interview transcripts with memories from past writings to enhance language model responses. RAFT has the potential to advance the field of personalized, context-sensitive conversational agents.

llm-interface

LLM Interface is an npm module that streamlines interactions with various Large Language Model (LLM) providers in Node.js applications. It offers a unified interface for switching between providers and models, supporting 36 providers and hundreds of models. Features include chat completion, streaming, error handling, extensibility, response caching, retries, JSON output, and repair. The package relies on npm packages like axios, @google/generative-ai, dotenv, jsonrepair, and loglevel. Installation is done via npm, and usage involves sending prompts to LLM providers. Tests can be run using npm test. Contributions are welcome under the MIT License.

hf-llm.rs

HF-LLM.rs is a CLI tool for accessing Large Language Models (LLMs) like Llama 3.1, Mistral, Gemma 2, Cohere and more hosted on Hugging Face. It allows interaction with various models, providing input and receiving responses in a terminal environment. Users can select models, input prompts, receive streaming output, and engage in chat mode. The tool supports a variety of models available on Hugging Face infrastructure, with the list continuously updated. Some models may require a Pro subscription for access.

toolmate

ToolMate AI is an advanced AI companion that integrates agents, tools, and plugins to excel in conversations, generative work, and task execution. It supports multi-step actions, allowing users to customize workflows for tackling complex projects with ease. The tool offers a wide range of AI backends and models, including Ollama, Llama.cpp, Groq Cloud API, OpenAI API, and Google Gemini via Vertex AI. Users can easily switch between backends and leverage AI models like wizardlm2 and mixtral. ToolMate AI stands out for its distinctive features such as tool calling for any LLMs, running multiple tools in one go, highly customizable plugins, and integration with popular AI tools. It also supports quick tool calling using '@' notation and enables the execution of computing tasks on demand. With features like multiple tools in one go, customizable plugins, system command and fabric integration, GPU offloading support, real-time data access, and device information retrieval, ToolMate AI offers a comprehensive solution for various tasks and content creation.

awesome-rag

Awesome RAG is a curated list of retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to retrieval-augmented generation. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field of RAG.

pacha

Pacha is an AI tool designed for retrieving context for natural language queries using a SQL interface and Python programming environment. It is optimized for working with Hasura DDN for multi-source querying. Pacha is used in conjunction with language models to produce informed responses in AI applications, agents, and chatbots.

lite_llama

lite_llama is a lightweight inference framework for Llama models built with Triton. It offers accelerated inference for llama3, Qwen2.5, and Llava1.5 models, with up to 4x speedup compared to transformers. The framework supports top-p sampling, streaming output, GQA, and CUDA graph optimizations. It also provides efficient dynamic management of the KV cache, operator fusion, and custom operators such as rmsnorm, rope, softmax, and element-wise multiplication implemented as Triton kernels.
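
For readers unfamiliar with top-p (nucleus) sampling, the following is a minimal pure-Python sketch of the general technique, not lite_llama's implementation. The function names `top_p_filter` and `sample_top_p` are hypothetical, and the input is assumed to be a list of probabilities that already sums to 1.

```python
import random


def top_p_filter(probs, top_p=0.9):
    """Keep the smallest set of highest-probability tokens whose cumulative
    probability reaches top_p, and renormalize them to sum to 1."""
    ranked = sorted(enumerate(probs), key=lambda kv: kv[1], reverse=True)
    kept, cumulative = [], 0.0
    for idx, p in ranked:
        kept.append((idx, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {idx: p / total for idx, p in kept}


def sample_top_p(probs, top_p=0.9, rng=random):
    """Draw one token index from the renormalized nucleus."""
    nucleus = top_p_filter(probs, top_p)
    indices = list(nucleus)
    weights = [nucleus[i] for i in indices]
    return rng.choices(indices, weights=weights, k=1)[0]


if __name__ == "__main__":
    probs = [0.5, 0.3, 0.15, 0.05]
    print(top_p_filter(probs, top_p=0.75))  # only tokens 0 and 1 survive
    print(sample_top_p(probs, top_p=0.75))  # prints 0 or 1
```

Lowering `top_p` shrinks the candidate set and makes output more predictable; raising it toward 1 admits more low-probability tokens.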

Macaw-LLM

Macaw-LLM is a pioneering multi-modal language modeling tool that seamlessly integrates image, audio, video, and text data. It builds upon CLIP, Whisper, and LLaMA models to process and analyze multi-modal information effectively. The tool boasts features like simple and fast alignment, one-stage instruction fine-tuning, and a new multi-modal instruction dataset. It enables users to align multi-modal features efficiently, encode instructions, and generate responses across different data types.

llm-web-api

LLM Web API is a tool that exposes the ChatGPT web page as an API, allowing users to bypass Cloudflare challenges, switch models, and dynamically list supported models. It uses Playwright to control a fingerprint browser, simulating user operations to send requests to the OpenAI website and converting the responses into API responses. It currently supports the OpenAI-compatible /v1/chat/completions API, which is accessible using OpenAI or other compatible clients.
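
The sketch below shows what a request to an OpenAI-compatible /v1/chat/completions endpoint generally looks like, using only the Python standard library. The base URL, model name, and API key are placeholders, and whether this particular tool expects an Authorization header is an assumption; the request is built but deliberately not sent.

```python
import json
import urllib.request


def build_chat_request(base_url, model, user_message, api_key="placeholder-key"):
    """Build (but do not send) a POST request in the chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumption: a bearer token is accepted, as with the OpenAI API.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical local address and model name, for illustration only.
    req = build_chat_request("http://localhost:8000", "example-model", "Hello")
    print(req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it: urllib.request.urlopen(req)
```

Because the path and payload follow the OpenAI format, an existing OpenAI client pointed at a different base URL should be able to produce an equivalent request.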

MemoryLLM

MemoryLLM is a large language model designed for self-updating capabilities. It offers pretrained models with different memory capacities and features, such as chat models. The repository provides training code, evaluation scripts, and datasets for custom experiments. MemoryLLM aims to enhance knowledge retention and performance on various natural language processing tasks.

aisuite

Aisuite is a simple, unified interface to multiple Generative AI providers. It allows developers to easily interact with various Large Language Model (LLM) providers like OpenAI, Anthropic, Azure, Google, AWS, and more through a standardized interface. The library focuses on chat completions and provides a thin wrapper around Python client libraries, enabling creators to test responses from different LLM providers without changing their code. Aisuite maximizes stability by using HTTP endpoints or SDKs for making calls to the providers. Users can install the base package or specific provider packages, set up API keys, and use the library to generate chat completion responses from different models.

langfair

LangFair is a Python library for bias and fairness assessments of large language models (LLMs). It offers a comprehensive framework for choosing bias and fairness metrics, demo notebooks, and a technical playbook. Users can tailor evaluations to their use cases with a Bring Your Own Prompts approach. The focus is on output-based metrics practical for governance audits and real-world testing.

langchainjs-quickstart-demo

Discover the journey of building a generative AI application using LangChain.js and Azure. This demo explores the development process from idea to production, using a RAG-based approach for a Q&A system based on YouTube video transcripts. The application lets users ask text-based questions about a YouTube video and uses the video's transcript to generate responses. The code comes in two versions: a local prototype using FAISS and Ollama, with the Llama 3 model for completion and all-minilm-l6-v2 for embeddings, and an Azure cloud version using Azure AI Search, with the GPT-4 Turbo model for completion and text-embedding-3-large for embeddings. Either version can be run as an API using the Azure Functions runtime.

CAG

Cache-Augmented Generation (CAG) is an alternative paradigm to Retrieval-Augmented Generation (RAG) that eliminates real-time retrieval delays and errors by preloading all relevant resources into the model's context. CAG leverages extended context windows of large language models (LLMs) to generate responses directly, providing reduced latency, improved reliability, and simplified design. While CAG has limitations in knowledge size and context length, advancements in LLMs are addressing these issues, making CAG a practical and scalable alternative for complex applications.
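
A minimal sketch of the preloading idea follows: all reference material is placed into the prompt up front, so no retrieval step runs at question time. This covers only prompt assembly and is not the CAG repository's code; the `build_cag_prompt` function, the character budget standing in for a context-window limit, and the sample documents are assumptions for illustration.

```python
def build_cag_prompt(documents, question, max_chars=8000):
    """Assemble one prompt that preloads every document that fits the budget.

    documents: dict mapping a title to its text.
    max_chars: crude stand-in for the model's context-window limit.
    """
    parts, used = [], 0
    for title, text in documents.items():
        block = f"## {title}\n{text}\n"
        if used + len(block) > max_chars:
            break  # knowledge size is bounded by the context length
        parts.append(block)
        used += len(block)
    context = "\n".join(parts)
    return (
        "Answer using only the reference material below.\n\n"
        f"{context}\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    docs = {
        "Returns policy": "Items can be returned within 30 days.",
        "Shipping": "Orders ship within 2 business days.",
    }
    print(build_cag_prompt(docs, "How long do I have to return an item?"))
```

The early `break` mirrors the limitation noted above: once the preloaded material exceeds the context budget, something has to be left out.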

ellmer

ellmer is a tool that facilitates the use of large language models (LLMs) from R. It supports multiple LLM providers and offers features such as streaming outputs, tool/function calling, and structured data extraction. Users can interact with ellmer in different ways, including an interactive chat console, interactive method calls, and programmatic chat. The tool also offers recommendations on which provider to use for different use cases, such as exploration or organizational use.

rag-web-ui

RAG Web UI is an intelligent dialogue system based on RAG (Retrieval-Augmented Generation) technology. It helps enterprises and individuals build intelligent Q&A systems based on their own knowledge bases. By combining document retrieval and large language models, it delivers accurate and reliable knowledge-based question-answering services. The system is designed with features like intelligent document management, advanced dialogue engine, and a robust architecture. It supports multiple document formats, async document processing, multi-turn contextual dialogue, and reference citations in conversations. The architecture includes a backend stack with Python FastAPI, MySQL + ChromaDB, MinIO, Langchain, JWT + OAuth2 for authentication, and a frontend stack with Next.js, TypeScript, Tailwind CSS, Shadcn/UI, and Vercel AI SDK for AI integration. Performance optimization includes incremental document processing, streaming responses, vector database performance tuning, and distributed task processing. The project is licensed under the Apache-2.0 License and is intended for learning and sharing RAG knowledge only, not for commercial purposes.

every-chatgpt-gui

Every front-end GUI client for ChatGPT API is a curated list of graphical user interface alternatives to access the API for ChatGPT, Claude, and other LLMs. The repository serves as a collection of open-source, self-hosted, and desktop applications that provide different interfaces for interacting with ChatGPT and similar language models. Users can contribute by adding their own apps through pull requests, ensuring alphabetical order and easy access to various GUI options for ChatGPT API.

jaison-core

J.A.I.son is a Python project designed for generating responses using various components and applications. It requires specific plugins like STT, T2T, TTSG, and TTSC to function properly. Users can customize responses, voice, and configurations. The project provides a Discord bot, Twitch events and chat integration, and VTube Studio Animation Hotkeyer. It also offers features for managing conversation history, training AI models, and monitoring conversations.

ragpi

Ragpi is an open-source AI assistant that answers questions using your documentation, GitHub issues, and READMEs. It combines LLMs with intelligent search to provide relevant, documentation-backed answers through a simple API. It supports multiple providers like OpenAI, Ollama, and Deepseek, and has built-in integrations with Discord and Slack. Ragpi builds knowledge bases from docs, GitHub issues, and READMEs, with an agentic RAG system for dynamic document retrieval. It has an API-first design with Docker deployment.

deepchat

DeepChat is a versatile chat tool that supports multiple model cloud services and local model deployment. It offers multi-channel chat concurrency support, platform compatibility, complete Markdown rendering, and easy usability with a comprehensive guide. The tool aims to enhance chat experiences by leveraging various AI models and ensuring efficient conversation management.

tool-ahead-of-time

Tool-Ahead-of-Time (TAoT) is a Python package that enables tool calling for any model available through LangChain's ChatOpenAI library, even before official support is provided. It reformats model output so that it can be parsed as JSON for tool calling. The package supports OpenAI and non-OpenAI models and follows LangChain's syntax for tool calling, so users can start using tools with a model without waiting for official support.
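As a rough illustration of the general technique of turning free-form model output into a tool call, the sketch below pulls a JSON object out of a text reply and dispatches it to a registered function. It uses only the standard library and is not TAoT's code or API: the `tool`/`args` schema, `extract_tool_call`, `run_tool_call`, and the `add` tool are assumptions made for the example.

```python
import json
from typing import Any, Callable, Dict, Optional


def extract_tool_call(model_output: str) -> Optional[Dict[str, Any]]:
    # Take the span from the first "{" to the last "}" and try to parse it.
    start, end = model_output.find("{"), model_output.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        call = json.loads(model_output[start:end + 1])
    except json.JSONDecodeError:
        return None
    # Accept only objects that name a tool (hypothetical schema).
    if not isinstance(call, dict) or "tool" not in call:
        return None
    return call


def add(a: float, b: float) -> float:
    return a + b


# Registry of callable tools, keyed by the name the model is asked to emit.
TOOLS: Dict[str, Callable[..., Any]] = {"add": add}


def run_tool_call(model_output: str) -> Any:
    call = extract_tool_call(model_output)
    if call is None or call["tool"] not in TOOLS:
        return None
    return TOOLS[call["tool"]](**call.get("args", {}))


reply = 'Sure, calling the tool: {"tool": "add", "args": {"a": 2, "b": 3}}'
print(run_tool_call(reply))  # prints 5
```

A reply with no parseable JSON object returns `None` here, so the caller can fall back to treating the output as plain text.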

flutter_gemma

Flutter Gemma is a Flutter plugin that brings Google's Gemma family of lightweight, state-of-the-art open models directly to Flutter applications. It allows for local execution on user devices, supports both iOS and Android platforms, and offers LoRA support for tailored AI behavior. The tool provides a simple interface for integrating Gemma models into Flutter projects, enabling advanced AI capabilities without relying on external servers. Users can easily download pre-trained Gemma models, fine-tune them for specific use cases, and customize behavior using LoRA weights. The tool supports model and LoRA weight management, model initialization, response generation, and chat scenarios, with considerations for model size, LoRA weights, and production app deployment.

trapster-community

Trapster Community is a low-interaction honeypot designed for internal networks or credential capture. It monitors and detects suspicious activities, providing a deceptive security layer. Features include mimicking network services, an asynchronous framework, easy configuration, expandable services, and an HTTP honeypot engine with AI capabilities that can emulate websites using YAML configuration. Supported protocols include DNS, HTTP/HTTPS, FTP, LDAP, MSSQL, POSTGRES, RDP, SNMP, SSH, TELNET, VNC, and RSYNC. The tool generates various types of logs. Contributions are welcome under the AGPLv3+ license.

chatlas

Chatlas is a Python tool that provides a simple and unified interface across various large language model providers. It helps users prototype faster by abstracting complexity from tasks like streaming chat interfaces, tool calling, and structured output. Users can easily switch providers by changing one line of code and access provider-specific features when needed. Chatlas focuses on developer experience with typing support, rich console output, and extension points.

Ling

Ling is a MoE LLM provided and open-sourced by InclusionAI. It includes two different sizes, Ling-Lite with 16.8 billion parameters and Ling-Plus with 290 billion parameters. These models show impressive performance and scalability for various tasks, from natural language processing to complex problem-solving. The open-source nature of Ling encourages collaboration and innovation within the AI community, leading to rapid advancements and improvements. Users can download the models from Hugging Face and ModelScope for different use cases. Ling also supports offline batched inference and online API services for deployment. Additionally, users can fine-tune Ling models using Llama-Factory for tasks like SFT and DPO.

nonebot-plugin-marshoai

nonebot-plugin-marshoai is a chatbot plugin that utilizes the OpenAI standard format API, such as the GitHub Models API, to enable chat functionalities. The plugin features the character Marsho, a cute cat girl, for engaging conversations. It supports OneBot adapters and GitHub Models API, with limited validation for other adapters. Developed by Melobot.

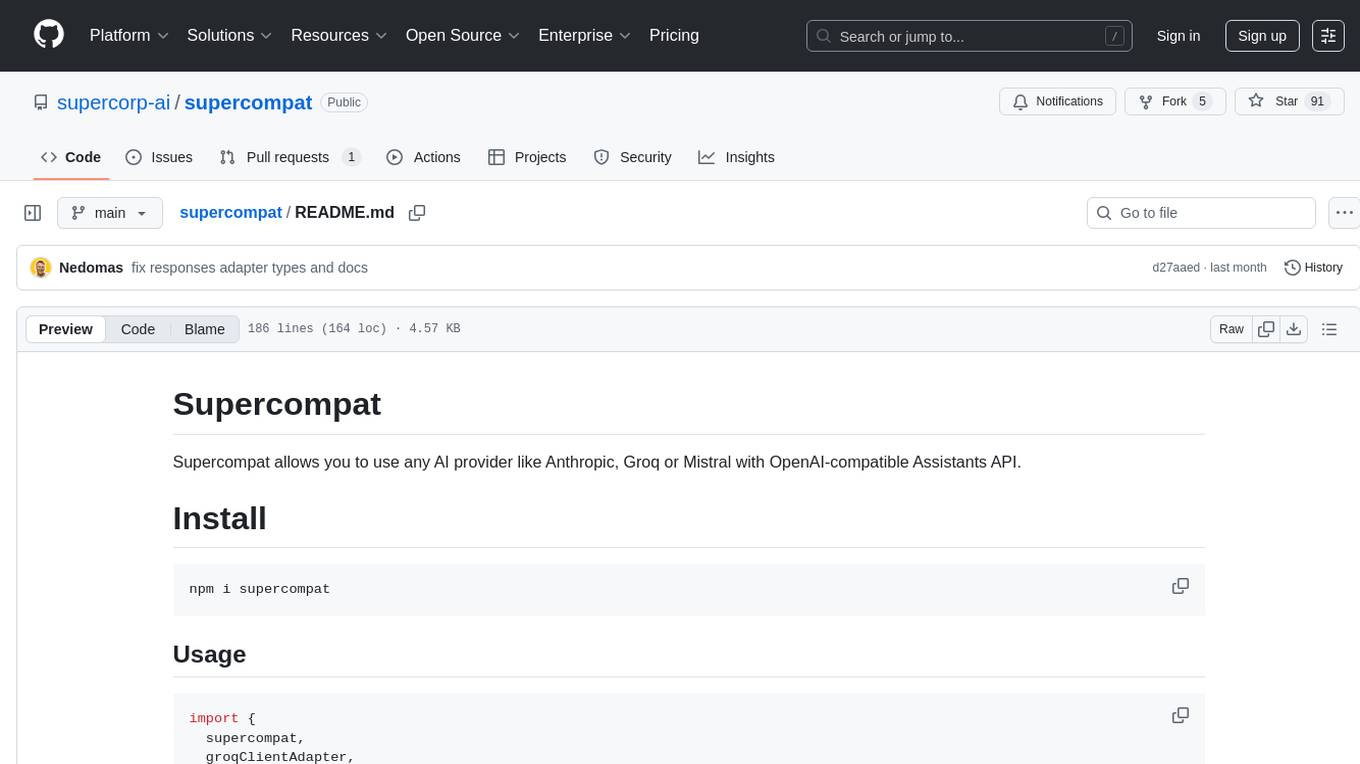

supercompat

Supercompat is a tool that enables users to integrate various AI providers like Anthropic, Groq, or Mistral with the OpenAI-compatible Assistants API. It provides adapters for different AI services and storage options, allowing seamless communication between the user's application and the AI providers. With Supercompat, developers can easily leverage the capabilities of multiple AI services within their projects, enhancing the functionality and intelligence of their applications.

20 - OpenAI Gpts

RISEN Prompt Engineer

RISEN Prompt Engineer GPT streamlines AI interactions by applying the RISEN framework, focusing on specific, structured prompts for targeted, creative responses. This method improves the precision of AI-generated answers, ensuring they meet user expectations effectively.

Prompt Hero

Write prompts like a professional! I refine user prompts for optimal ChatGPT responses. Type "Start" to begin.

Custom GPT Builder

Create personalized GPTs with my simple builder. Click the conversation starter (starting with ###) to begin.

恋のゆくえ Koi No Yukue

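An Osaka auntie reads the messages you received on LINE from someone of the opposite sex and tells you whether they are interested in you or not. Analyzes messages with a friendly Osaka-style tone, focusing on response speed and emoticons.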

Awkward Situation Solver

Welcome to Awkward Situation Solver GPT! I am here to help you handle those cringe-worthy social moments with a touch of humor and creativity.

TRUCK meaning?

What is the meaning of the TRUCK lyrics? Singer: Michael Hardy, Hunter Phelps, Benjamin Johnson. Album: A ROCK. Album year: 2020.

Angular Architect AI: Generate Angular Components

Generates Angular components based on requirements, with a focus on code-first responses.

The Epic Bard

I am a writer in the style of Homer, crafting responses in dactylic hexameters.