lite_llama

A light llama-like llm inference framework based on the triton kernel.

Stars: 105

lite_llama is a lightweight inference framework for llama-style models, built on triton kernels. It accelerates inference for llama3, Qwen2.5, and Llava1.5 models, with up to a 4x speedup over transformers. The framework supports top-p sampling, streaming output, GQA, and cuda graph optimization. It also provides efficient dynamic kv cache management, operator fusion, and custom operators such as rmsnorm, rope, softmax, and element-wise multiplication implemented as triton kernels.

README:


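- Up to 4x speedup over transformers for llama3 1B and 3B models.
- Supports inference for the latest llama3, Qwen2.5, and Llava1.5 models, with top-p sampling and streaming output.
- Supports GQA and cuda graph optimization (with limitations).
- Supports flashattention1, flashattention2, and flashdecoding (with NopadAttention support).
- Supports efficient dynamic management of the kv cache (auto tokenattention).
- Supports operator fusion, for example fusing element-wise multiplication `*` with silu, fusing the k and v linear layers, and fusing skip with rmsnorm.
- Some custom operators, such as rmsnorm, rope, softmax, and element-wise multiplication, are implemented as efficient triton kernels.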
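The feature list mentions top-p sampling. As a rough illustration of the idea only (this is a plain-Python sketch, not lite_llama's implementation, and the function name `top_p_sample` is hypothetical), nucleus sampling keeps the smallest set of highest-probability tokens whose cumulative probability reaches `top_p` and then samples within that set:

```python
import math
import random
from typing import List, Optional


def top_p_sample(logits: List[float], top_p: float = 0.9,
                 rng: Optional[random.Random] = None) -> int:
    """Sample a token id from the smallest token set whose mass reaches top_p."""
    rng = rng or random.Random()

    # Numerically stable softmax over the logits.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]

    # Walk token ids in descending probability until the nucleus is full.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    nucleus: List[int] = []
    mass = 0.0
    for i in order:
        nucleus.append(i)
        mass += probs[i]
        if mass >= top_p:
            break

    # Draw proportionally to probability inside the nucleus
    # (equivalent to renormalising by `mass`).
    r = rng.random() * mass
    acc = 0.0
    for i in nucleus:
        acc += probs[i]
        if r < acc:
            return i
    return nucleus[-1]  # guard against floating-point round-off


token_id = top_p_sample([2.0, 1.0, 0.5, -1.0], top_p=0.9, rng=random.Random(0))
```

A real implementation would do the same steps on batched GPU tensors; the pure-Python version is only meant to make the selection rule explicit.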

趋动云 GPU development environment: cuda version and torch, triton versions:

# nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2023 NVIDIA Corporation

Built on Mon_Apr__3_17:16:06_PDT_2023

Cuda compilation tools, release 12.1, V12.1.105

Build cuda_12.1.r12.1/compiler.32688072_0

# Python 3.11.8 package versions:

# pip list | grep torch

torch 2.1.2

triton 2.1.0

triton-nightly 3.0.0.post20240716052845

rocm version and torch, triton versions:

# rocminfo | grep -i version

ROCk module version 6.10.5 is loaded

Runtime Version: 1.14

Runtime Ext Version: 1.6

# Python 3.11.8 package versions:

# pip list | grep torch

pytorch-triton-rocm 3.2.0

torch 2.6.0+rocm6.2.4

torchaudio 2.6.0+rocm6.2.4

torchvision 0.21.0+rocm6.2.4

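Everyday Q&A test results:

Comparison with the answers from the transformers library, and accuracy verification:

llama3.2-1.5B-Instruct model streaming output test:

Qwen2.5-3B model streaming output test:

Llava1.5-7b-hf model streaming output test: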


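Hardware test environment: 趋动云 B1.small, equivalent to one quarter of a 3090 card. Running the performance comparison with python benchmark.py, lite_llama reaches up to 4x the speed of transformers. With prompts at batch_size = 16 and max_gen_len = 1900, the benchmark results are: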

lite_llama inference time: 67.8760 s

Transformers inference time: 131.8708 s

lite_llama throughput: 411.04 tokens/s

Transformers throughput: 104.70 tokens/s

lite_llama per token latency: 2.432831 ms/token

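Transformers per token latency: 9.551007 ms/token

Hardware test environment: 趋动云 B1.big, equivalent to a full 3090 card. Running the performance comparison with python benchmark.py, lite_llama reaches up to 4x the speed of transformers. With max_gen_len = 1900, the benchmark results are: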

lite_llama inference time: 31.3463 s

Transformers inference time: 69.1433 s

lite_llama throughput: 730.45 tokens/s

Transformers throughput: 183.95 tokens/s

lite_llama per token latency: 1.369015 ms/token

Transformers per token latency: 5.436221 ms/token

For more performance results, see the benchmark_models document (results for more models are still to be added).
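The "up to 4x" figure follows from the throughput numbers rather than the wall-clock times, and the per-token latency is the reciprocal of throughput. A small worked check, using only the figures reported above (the helper names are illustrative, not part of benchmark.py):

```python
# Throughput figures (tokens/s) copied from the two benchmark runs above.
runs = {
    "B1.small": {"lite_llama": 411.04, "transformers": 104.70},
    "B1.big": {"lite_llama": 730.45, "transformers": 183.95},
}


def per_token_latency_ms(throughput_tokens_per_s: float) -> float:
    """Per-token latency in milliseconds is 1000 / throughput."""
    return 1000.0 / throughput_tokens_per_s


def speedup(fast: float, slow: float) -> float:
    """Ratio of two throughputs."""
    return fast / slow


for name, r in runs.items():
    print(
        name,
        f"latency={per_token_latency_ms(r['lite_llama']):.4f} ms/token",
        f"speedup={speedup(r['lite_llama'], r['transformers']):.2f}x",
    )
```

Both throughput ratios come out just under 4x, while the ratio of total inference times is closer to 2x, so the headline speedup is a throughput comparison.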

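cuda 12.0 or later is recommended. Download the llama3.2-1B-Instruct model and place it in the checkpoints_dir directory specified in cli.py. Before running cli.py, first run python apply_weight_convert.py to convert the hf model weights to the lite_llama weight format.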

apt update

apt install imagemagick

conda create --name lite_llama "python>=3.12"

conda activate lite_llama

git clone https://github.com/harleyszhang/lite_llama.git

cd lite_llama/

pip install -r requirement.txt

python test_weight_convert.py # convert the model weights.

python cli.py # run once the model has been downloaded and placed in the specified directory

ROCm 5.7 or later is recommended.

pip install matplotlib

pip install pandas

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/rocm6.2.4

apt update

apt install imagemagick

conda create --name lite_llama "python>=3.10"

conda activate lite_llama

git clone https://github.com/harleyszhang/lite_llama.git

cd lite_llama/

pip install -r requirement.txt

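python test_weight_convert.py # convert the model weights.

python cli.py # run once the model has been downloaded and placed in the specified directory

After cli.py starts successfully, the terminal shows the interface below; type your question in the terminal.

After cli_llava.py starts successfully, the terminal shows the interface below; enter your image and prompt in the terminal, then press Enter.

For performance testing, set your own model weight path and run lite_llama/examples/benchmark.py directly. It prints a latency and throughput comparison between lite_llama and the transformers library. The first run is not very accurate, so use the results of the second run. For example, for the Llama-3.2-3B model with prompt_len = 25, batch_size = 12, and max_gen_len = 1900, the benchmark results are: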

lite_llama inference time: 31.3463 s

Transformers inference time: 69.1433 s

lite_llama throughput: 730.45 tokens/s

Transformers throughput: 183.95 tokens/s

lite_llama per token latency: 1.369015 ms/token

Transformers per token latency: 5.436221 ms/token

Input prompts:

from typing import List

prompts: List[str] = [

# For these prompts, the expected answer is the natural continuation of the prompt

"I believe the meaning of life is",

"Simply put, the theory of relativity states that ",

"""A brief message congratulating the team on the launch:

Hi everyone,

I just """,

# Few shot prompt (providing a few examples before asking model to complete more);

"Roosevelt was the first president of the United States, he has",

]

1. After applying cuda graph optimization to the decode stage, a single decode step takes 8.2402 ms, down from 17.2241 ms, roughly a 2x speedup. This is almost the same improvement that vllm gets from applying cuda graph.

INFO: After apply cuda graph, Decode inference time: 8.2402 ms

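INFO: Before apply cuda graph, Decode inference time: 17.2241 ms

2. Building on the previous step, continue optimizing by replacing the original standard attention with flashattention.

flashattention1 helps more with training; when the prompt is very short, its speedup is limited. The decode stage of inference should use flash-decoding.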

INFO: input tokens shape is torch.Size([8, 115])

# Before using flashattention

INFO:lite_llama.generate:Batch inference time: 3152.0476 ms

INFO:lite_llama.generate:Tokens per second: 97.71 tokens/s

# After using flashattention1

INFO:lite_llama.generate:Batch inference time: 2681.3823 ms

INFO:lite_llama.generate:Tokens per second: 114.87 tokens/s

3. Continue optimizing: upgrade flashattention to flashattention2, which reduces some of the computation.

INFO:lite_llama.generate:Batch inference time: 2103.0737 ms

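INFO:lite_llama.generate:Tokens per second: 146.45 tokens/s

4. Optimize again: use flashdecoding for decode-stage inference, which increases the parallelism of the decode-stage attention computation and makes full use of the GPU's compute.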

INFO:lite_llama.generate:Decode stage Batch inference time: 1641.4178 ms

INFO:lite_llama.generate:Decode stage tokens per second : 187.64 tokens/s

5. Optimize further: support efficient dynamic management of the kv cache (similar to tokenattention), which addresses wasted kv cache memory and inefficient allocation.

INFO:lite_llama.generate:Decode stage Batch inference time: 1413.9111 ms

INFO:lite_llama.generate:Decode stage tokens per second : 217.84 tokens/s

6. A simple optimization: use GQA_KV_heads_index in place of the repeat_kv function.

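7. A common and simple optimization: fuse the k and v linear layers.

8. A commonly used optimization, operator fusion: fuse the skip operation of the residual connection with the rmsnorm operator to form a new skip_rmsnorm operator.

9. Refactor and optimize the MHA module, and optimize the context_attention and token_attention kernels to support Nopad attention and dynamic allocation and management of the kv cache: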

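- token_attention accepts the kv_cache indices and the actual sequence length seq_len directly, which removes the concat and view operations on the kv cache in the MHA module and implements Nopad token_attention.
- Each prefill/decode step dynamically allocates as many kv cache indices as the actual prompt lengths require, instead of allocating one contiguous kv cache space for (max(promptes_len) + max_gen_len) * batch_size tokens before inference starts.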
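The difference between the two allocation schemes in step 9 can be sketched in plain Python. This is a minimal illustration under assumptions, not lite_llama's code: the class `KVCacheIndexPool`, the helper `static_slots`, and the example sizes are all hypothetical.

```python
from typing import List


class KVCacheIndexPool:
    """Hypothetical token-level pool of kv cache slot indices."""

    def __init__(self, num_slots: int) -> None:
        self.free_slots: List[int] = list(range(num_slots))

    def alloc(self, num_tokens: int) -> List[int]:
        """Hand out one slot index per token; slots need not be contiguous."""
        if num_tokens > len(self.free_slots):
            raise MemoryError("kv cache pool exhausted")
        taken = self.free_slots[:num_tokens]
        self.free_slots = self.free_slots[num_tokens:]
        return taken

    def free(self, slots: List[int]) -> None:
        """Return the slots of a finished sequence to the pool."""
        self.free_slots.extend(slots)


def static_slots(prompt_lens: List[int], max_gen_len: int) -> int:
    """Slots reserved up front by the contiguous scheme:
    (max(prompt_len) + max_gen_len) * batch_size."""
    return (max(prompt_lens) + max_gen_len) * len(prompt_lens)


prompt_lens = [5, 12, 30]  # illustrative prompt lengths
pool = KVCacheIndexPool(num_slots=256)

# Prefill: each sequence gets exactly as many slots as it has prompt tokens.
seq_slots = [pool.alloc(n) for n in prompt_lens]

# One decode step: one extra slot per sequence for the newly generated token.
for slots in seq_slots:
    slots.extend(pool.alloc(1))

used = sum(len(s) for s in seq_slots)
print("dynamic slots in use:", used)
print("static reservation:", static_slots(prompt_lens, max_gen_len=64))
```

With dynamic allocation the attention kernel has to receive the slot indices and the real sequence length for each sequence, which matches the first bullet above about passing kv_cache indices and seq_len directly.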

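- Support continuous batching optimization.

- Support AWQ and SmoothQuant quantization.

- Refactor the code and fix the issue where cuda graph fails to run correctly once the AutoTokenAttention optimization is enabled.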
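Step 6 in the optimization list replaces repeat_kv with GQA_KV_heads_index. The source does not show how that index is computed, so the following is only an assumed sketch of the general idea: in grouped-query attention, each query head can look up the kv head it shares by integer division instead of materialising repeated copies. The helper `kv_head_index` and the head counts are illustrative.

```python
from typing import List


def repeat_kv(kv_heads: List[str], n_rep: int) -> List[str]:
    """Materialise every kv head n_rep times in a row (copies data)."""
    return [h for h in kv_heads for _ in range(n_rep)]


def kv_head_index(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Map a query head to the kv head it shares, without any copy."""
    n_rep = num_q_heads // num_kv_heads
    return q_head // n_rep


num_q_heads, num_kv_heads = 8, 2
kv_heads = ["kv0", "kv1"]  # stand-ins for per-head key/value tensors

expanded = repeat_kv(kv_heads, num_q_heads // num_kv_heads)
indexed = [
    kv_heads[kv_head_index(q, num_q_heads, num_kv_heads)]
    for q in range(num_q_heads)
]

# Both approaches pair each query head with the same kv head.
print(expanded == indexed)
```

The indexed form avoids allocating and writing the expanded key/value tensors, which is presumably why the step is described as a simple optimization.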


Alternative AI tools for lite_llama

Similar Open Source Tools


ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

ruby-nano-bots

Ruby Nano Bots is an implementation of the Nano Bots specification supporting various AI providers like Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others. It allows calling tools (functions) and provides a helpful assistant for interacting with AI language models. The tool can be used both from the command line and as a library in Ruby projects, offering features like REPL, debugging, and encryption for data privacy.

code-graph-rag

Graph-Code is an accurate Retrieval-Augmented Generation (RAG) system that analyzes multi-language codebases using Tree-sitter. It builds comprehensive knowledge graphs, enabling natural language querying of codebase structure and relationships, along with editing capabilities. The system supports various languages, uses Tree-sitter for parsing, Memgraph for storage, and AI models for natural language to Cypher translation. It offers features like code snippet retrieval, advanced file editing, shell command execution, interactive code optimization, reference-guided optimization, dependency analysis, and more. The architecture consists of a multi-language parser and an interactive CLI for querying the knowledge graph.

app-platform

AppPlatform is an advanced large-scale model application engineering aimed at simplifying the development process of AI applications through integrated declarative programming and low-code configuration tools. This project provides a powerful and scalable environment for software engineers and product managers to support the full-cycle development of AI applications from concept to deployment. The backend module is based on the FIT framework, utilizing a plugin-based development approach, including application management and feature extension modules. The frontend module is developed using React framework, focusing on core modules such as application development, application marketplace, intelligent forms, and plugin management. Key features include low-code graphical interface, powerful operators and scheduling platform, and sharing and collaboration capabilities. The project also provides detailed instructions for setting up and running both backend and frontend environments for development and testing.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

rag-chatbot

rag-chatbot is a tool that allows users to chat with multiple PDFs using Ollama and LlamaIndex. It provides an easy setup for running on local machines or Kaggle notebooks. Users can leverage models from Huggingface and Ollama, process multiple PDF inputs, and chat in multiple languages. The tool offers a simple UI with Gradio, supporting chat with history and QA modes. Setup instructions are provided for both Kaggle and local environments, including installation steps for Docker, Ollama, Ngrok, and the rag_chatbot package. Users can run the tool locally and access it via a web interface. Future enhancements include adding evaluation, better embedding models, knowledge graph support, improved document processing, MLX model integration, and Corrective RAG.

react-native-rag

React Native RAG is a library that enables private, local RAGs to supercharge LLMs with a custom knowledge base. It offers modular and extensible components like `LLM`, `Embeddings`, `VectorStore`, and `TextSplitter`, with multiple integration options. The library supports on-device inference, vector store persistence, and semantic search implementation. Users can easily generate text responses, manage documents, and utilize custom components for advanced use cases.

airdrop-tools

Airdrop-tools is a repository containing tools for all Telegram bots. Users can join the Telegram group for support and access various bot apps like Moonbix, Blum, Major, Memefi, and more. The setup requires Node.js and Python, with instructions on creating data directories and installing extensions. Users can run different tools like Blum, Major, Moonbix, Yescoin, Matchain, Fintopio, Agent301, IAMDOG, Banana, Cats, Wonton, and Xkucoin by following specific commands. The repository also provides contact information and options for supporting the creator.

wechat-bot

WeChat Bot is a simple and easy-to-use WeChat robot based on chatgpt and wechaty. It can help you automatically reply to WeChat messages or manage WeChat groups/friends. The tool requires configuration of AI services such as Xunfei, Kimi, or ChatGPT. Users can customize the tool to automatically reply to group or private chat messages based on predefined conditions. The tool supports running in Docker for easy deployment and provides a convenient way to interact with various AI services for WeChat automation.

nextlint

Nextlint is a rich text editor (WYSIWYG) written in Svelte, using MeltUI headless UI and tailwindcss CSS framework. It is built on top of tiptap editor (headless editor) and prosemirror. Nextlint is easy to use, develop, and maintain. It has a prompt engine that helps to integrate with any AI API and enhance the writing experience. Dark/Light theme is supported and customizable.

awesome-rag

Awesome RAG is a curated list of retrieval-augmented generation (RAG) in large language models. It includes papers, surveys, general resources, lectures, talks, tutorials, workshops, tools, and other collections related to retrieval-augmented generation. The repository aims to provide a comprehensive overview of the latest advancements, techniques, and applications in the field of RAG.

AI-Agent-Starter-Kit

AI Agent Starter Kit is a modern full-stack AI-enabled template using Next.js for frontend and Express.js for backend, with Telegram and OpenAI integrations. It offers AI-assisted development, smart environment variable setup assistance, intelligent error resolution, context-aware code completion, and built-in debugging helpers. The kit provides a structured environment for developers to interact with AI tools seamlessly, enhancing the development process and productivity.

morgana-form

MorGana Form is a full-stack form builder project developed using Next.js, React, TypeScript, Ant Design, PostgreSQL, and other technologies. It allows users to quickly create and collect data through survey forms. The project structure includes components, hooks, utilities, pages, constants, Redux store, themes, types, server-side code, and component packages. Environment variables are required for database settings, NextAuth login configuration, and file upload services. Additionally, the project integrates an AI model for form generation using the Ali Qianwen model API.

docutranslate

Docutranslate is a versatile tool for translating documents efficiently. It supports multiple file formats and languages, making it ideal for businesses and individuals needing quick and accurate translations. The tool uses advanced algorithms to ensure high-quality translations while maintaining the original document's formatting. With its user-friendly interface, Docutranslate simplifies the translation process and saves time for users. Whether you need to translate legal documents, technical manuals, or personal letters, Docutranslate is the go-to solution for all your document translation needs.

vicinity

Vicinity is a lightweight, low-dependency vector store that provides a unified interface for nearest neighbor search with support for different backends and evaluation. It simplifies the process of comparing and evaluating different nearest neighbors packages by offering a simple and intuitive API. Users can easily experiment with various indexing methods and distance metrics to choose the best one for their use case. Vicinity also allows for measuring performance metrics like queries per second and recall.

For similar tasks

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, 智谱AI. It can interact with viewers in real-time during live streams on platforms like Bilibili, Douyin, Kuaishou, Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, VALL-E-X to generate responses to viewer questions and can change voice using so-vits-svc, DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free, and any identical copycat selling programs are pirated, please stop them promptly.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

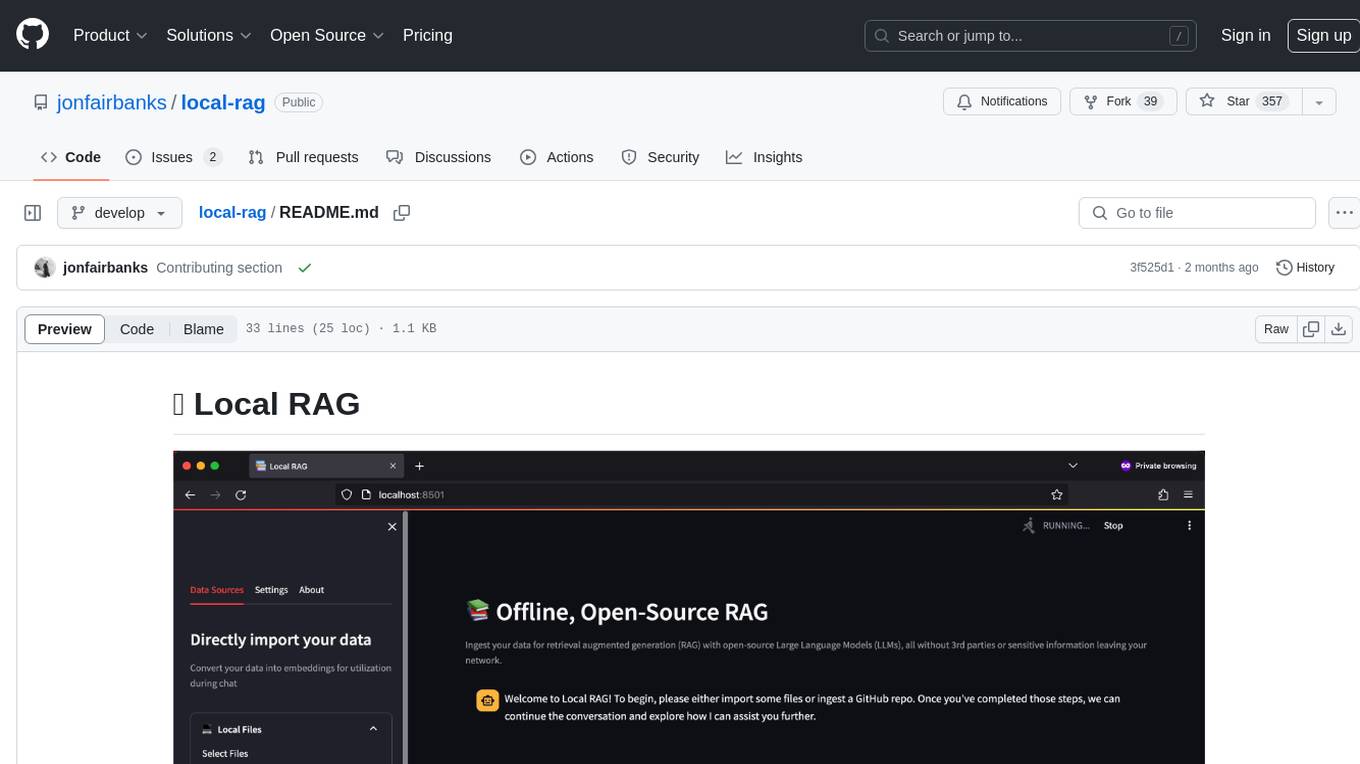

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.