ruby-nano-bots

Ruby Implementation of Nano Bots: small, AI-powered bots that can be easily shared as a single file, designed to support multiple providers such as Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others, with support for calling tools (functions).


Ruby Nano Bots is an implementation of the Nano Bots specification supporting various AI providers like Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others. It allows calling tools (functions) and provides a helpful assistant for interacting with AI language models. The tool can be used both from the command line and as a library in Ruby projects, offering features like REPL, debugging, and encryption for data privacy.


An implementation of the Nano Bots specification with support for Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others, with support for calling tools (functions).

gem install nano-bots -v 3.1.0

nb - - eval "hello"
# => Hello! How may I assist you today?

nb - - repl

🤖> Hi, how are you doing?

As an AI language model, I do not experience emotions but I am functioning
well. How can I assist you?

🤖> |

Create a cartridge file named gpt.yml:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: openai
  credentials:
    access-token: ENV/OPENAI_API_KEY
  settings:
    user: ENV/NANO_BOTS_END_USER
    model: gpt-4o

nb gpt.yml - eval "hi"
# => Hello! How can I assist you today?

To use it as a library, add it to your Gemfile:

gem 'nano-bots', '~> 3.1.0'

require 'nano-bots'

bot = NanoBot.new(cartridge: 'gpt.yml')

bot.eval('Hi!') do |content, fragment, finished, meta|
  print fragment unless fragment.nil?
end

# => Hello! How can I assist you today?

- TL;DR and Quick Start

- Usage

- Setup

- Cartridges

- Security and Privacy

- Supported Providers

- Docker

- Development

After installing the gem, the nb binary command will be available for your project or system.

Examples of usage:

nb - - eval "hello"

# => Hello! How may I assist you today?

nb to-en-us-translator.yml - eval "Salut, comment ça va?"

# => Hello, how are you doing?

nb midjourney.yml - eval "happy cyberpunk robot"

# => A cheerful and fun-loving robot is dancing wildly amidst a

# futuristic and lively cityscape. Holographic advertisements

# and vibrant neon colors can be seen in the background.

nb lisp.yml - eval "(+ 1 2)"

# => 3

cat article.txt |

nb to-en-us-translator.yml - eval |

nb summarizer.yml - eval

# -> LLM stands for Large Language Model, which refers to an

# artificial intelligence algorithm capable of processing

# and understanding vast amounts of natural language data,

# allowing it to generate human-like responses and perform

# a range of language-related tasks.

nb - - repl

nb assistant.yml - repl

🤖> Hi, how are you doing?

As an AI language model, I do not experience emotions but I am functioning

well. How can I assist you?

🤖> |

You can exit the REPL by typing exit.
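The piped example above (cat article.txt | ... | nb summarizer.yml - eval) can also be driven from a Ruby script by spawning nb directly. This is a minimal sketch using the standard open3 library, assuming the nb command and the cartridges are available. Passing arguments as arrays avoids shell quoting issues. The helper names nb_eval_command and pipe_through are hypothetical.

```ruby
require 'open3'

# Builds a stateless `nb` invocation that reads its input from stdin,
# as in `cat article.txt | nb to-en-us-translator.yml - eval`.
def nb_eval_command(cartridge)
  ['nb', cartridge, '-', 'eval']
end

# Feeds text through each command in order, like a shell pipeline.
# Each command is an argument array, so no shell is involved.
def pipe_through(text, commands)
  commands.reduce(text) do |input, command|
    output, status = Open3.capture2(*command, stdin_data: input)
    raise "#{command.first} failed (#{status.exitstatus})" unless status.success?

    output
  end
end

# Usage (requires the nb CLI and both cartridges):
#   summary = pipe_through(File.read('article.txt'),
#                          [nb_eval_command('to-en-us-translator.yml'),
#                           nb_eval_command('summarizer.yml')])
#   puts summary
```

Each stage waits for the previous one to finish, unlike a shell pipe that streams, which keeps the error handling simple.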

All of the commands above are stateless. If you want to preserve the history of your interactions, replace the - with a state key:

nb assistant.yml your-user eval "Salut, comment ça va?"

nb assistant.yml your-user repl

nb assistant.yml 6ea6c43c42a1c076b1e3c36fa349ac2c eval "Salut, comment ça va?"

nb assistant.yml 6ea6c43c42a1c076b1e3c36fa349ac2c repl

You can use a simple key, such as your username, or a randomly generated one:

require 'securerandom'

SecureRandom.hex # => 6ea6c43c42a1c076b1e3c36fa349ac2c

To debug, you can inspect a cartridge or a stored state:

nb - - cartridge
nb cartridge.yml - cartridge

nb - STATE-KEY state
nb cartridge.yml STATE-KEY state

To use it as a library:

require 'nano-bots/cli' # Equivalent to the `nb` command.

require 'nano-bots'
require 'yaml' # Needed for YAML.safe_load below.

NanoBot.cli # Equivalent to the `nb` command.

NanoBot.repl(cartridge: 'cartridge.yml') # Starts a new REPL.

bot = NanoBot.new(cartridge: 'cartridge.yml')

bot = NanoBot.new(
  cartridge: YAML.safe_load(File.read('cartridge.yml'), permitted_classes: [Symbol])
)

bot = NanoBot.new(
  cartridge: { ... } # Parsed Cartridge Hash
)

bot.eval('Hello')

bot.eval('Hello', as: 'eval')

bot.eval('Hello', as: 'repl')

# When stream is enabled and available:
bot.eval('Hi!') do |content, fragment, finished, meta|
  print fragment unless fragment.nil?
end

bot.repl # Starts a new REPL.

NanoBot.repl(cartridge: 'cartridge.yml', state: '6ea6c43c42a1c076b1e3c36fa349ac2c')

bot = NanoBot.new(cartridge: 'cartridge.yml', state: '6ea6c43c42a1c076b1e3c36fa349ac2c')

bot.prompt # => "🤖\u001b[34m> \u001b[0m"

bot.boot

bot.boot(as: 'eval')

bot.boot(as: 'repl')

bot.boot do |content, fragment, finished, meta|
  print fragment unless fragment.nil?
end

To install the CLI on your system:

gem install nano-bots -v 3.1.0

To use it in a Ruby project as a library, add to your Gemfile:

gem 'nano-bots', '~> 3.1.0'

bundle install

For credentials and configurations, relevant environment variables can be set in your .bashrc, .zshrc, or equivalent files, as well as in your Docker container or system environment. Example:

export NANO_BOTS_ENCRYPTION_PASSWORD=UNSAFE

export NANO_BOTS_END_USER=your-user

# export NANO_BOTS_STATE_PATH=/home/user/.local/state/nano-bots

# export NANO_BOTS_CARTRIDGES_PATH=/home/user/.local/share/nano-bots/cartridges

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

NANO_BOTS_ENCRYPTION_PASSWORD=UNSAFE
NANO_BOTS_END_USER=your-user
# NANO_BOTS_STATE_PATH=/home/user/.local/state/nano-bots
# NANO_BOTS_CARTRIDGES_PATH=/home/user/.local/share/nano-bots/cartridges

For Cohere Command, you can obtain your credentials on the Cohere Platform.

export COHERE_API_KEY=your-api-key

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

COHERE_API_KEY=your-api-key

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: cohere
  credentials:
    api-key: ENV/COHERE_API_KEY
  settings:
    model: command

Read the full specification for Cohere Command.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Maritaca AI MariTalk, you can obtain your API key at MariTalk.

Enclose credentials in single quotes when using environment variables to prevent issues with the $ character in the API key:

export MARITACA_API_KEY='123...$a12...'

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

MARITACA_API_KEY='123...$a12...'

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: maritaca
  credentials:
    api-key: ENV/MARITACA_API_KEY
  settings:
    model: sabia-2-medium

Read the full specification for Maritaca AI MariTalk.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Mistral AI, you can obtain your credentials on the Mistral Platform.

export MISTRAL_API_KEY=your-api-key

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

MISTRAL_API_KEY=your-api-key

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: mistral
  credentials:
    api-key: ENV/MISTRAL_API_KEY
  settings:
    model: mistral-medium-latest

Read the full specification for Mistral AI.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Ollama, follow the installation and setup instructions on the Ollama website.

export OLLAMA_API_ADDRESS=http://localhost:11434

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

OLLAMA_API_ADDRESS=http://localhost:11434

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: ollama
  credentials:
    address: ENV/OLLAMA_API_ADDRESS
  settings:
    model: llama3

Read the full specification for Ollama.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For OpenAI ChatGPT, you can obtain your credentials on the OpenAI Platform.

export OPENAI_API_KEY=your-access-token

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

OPENAI_API_KEY=your-access-token

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: openai
  credentials:
    access-token: ENV/OPENAI_API_KEY
  settings:
    user: ENV/NANO_BOTS_END_USER
    model: gpt-4o

Read the full specification for OpenAI ChatGPT.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Google Gemini with the Generative Language API, see Google's instructions on how to obtain your credentials.

export GOOGLE_API_KEY=your-api-key

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

GOOGLE_API_KEY=your-api-key

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: google
  credentials:
    service: generative-language-api
    api-key: ENV/GOOGLE_API_KEY
  options:
    model: gemini-pro

Read the full specification for Google Gemini.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Google Gemini with the Vertex AI API using a credentials file:

export GOOGLE_CREDENTIALS_FILE_PATH=google-credentials.json

export GOOGLE_REGION=us-east4

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

GOOGLE_CREDENTIALS_FILE_PATH=google-credentials.json
GOOGLE_REGION=us-east4

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: google
  credentials:
    service: vertex-ai-api
    file-path: ENV/GOOGLE_CREDENTIALS_FILE_PATH
    region: ENV/GOOGLE_REGION
  options:
    model: gemini-pro

Read the full specification for Google Gemini.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

For Google Gemini with the Vertex AI API without a credentials file (credentials are then taken from your environment, for example Application Default Credentials):

export GOOGLE_REGION=us-east4

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

GOOGLE_REGION=us-east4

Create a cartridge.yml file:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: google
  credentials:
    service: vertex-ai-api
    region: ENV/GOOGLE_REGION
  options:
    model: gemini-pro

Read the full specification for Google Gemini.

nb cartridge.yml - eval "Hello"

nb cartridge.yml - repl

bot = NanoBot.new(cartridge: 'cartridge.yml')

puts bot.eval('Hello')

If you need to manually set a Google Project ID:

export GOOGLE_PROJECT_ID=your-project-id

Alternatively, if your current directory has a .env file with the environment variables, they will be automatically loaded:

GOOGLE_PROJECT_ID=your-project-id

Add to your cartridge.yml file:

---
provider:
  id: google
  credentials:
    project-id: ENV/GOOGLE_PROJECT_ID

Check the Nano Bots specification to learn more about how to build cartridges.

Try the Nano Bots Clinic (Live Editor) to learn about creating Cartridges.
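Because a cartridge is plain YAML, and NanoBot.new also accepts a parsed cartridge Hash, you can load and inspect cartridges with Ruby's standard yaml library. The sketch below parses an abbreviated version of the OpenAI cartridge from this README and shows how ENV/NAME references map to environment variables. The resolve_env_refs helper is hypothetical and only illustrates the convention; the gem resolves these references itself, so you would pass the unresolved Hash to NanoBot.new.

```ruby
require 'yaml'

# Abbreviated copy of the OpenAI cartridge used in this README.
CARTRIDGE_YAML = <<~YAML
  ---
  meta:
    symbol: 🤖
    name: Nano Bot Name
  behaviors:
    interaction:
      directive: You are a helpful assistant.
  provider:
    id: openai
    credentials:
      access-token: ENV/OPENAI_API_KEY
    settings:
      user: ENV/NANO_BOTS_END_USER
      model: gpt-4o
YAML

# Hypothetical helper (not the gem's implementation): returns a copy of the
# cartridge in which every "ENV/NAME" string is replaced by env["NAME"].
# Raises KeyError if a referenced variable is missing.
def resolve_env_refs(node, env = ENV)
  case node
  when Hash then node.transform_values { |value| resolve_env_refs(value, env) }
  when Array then node.map { |value| resolve_env_refs(value, env) }
  when %r{\AENV/(.+)\z} then env.fetch(Regexp.last_match(1))
  else node
  end
end

cartridge = YAML.safe_load(CARTRIDGE_YAML, permitted_classes: [Symbol])
resolved = resolve_env_refs(cartridge, { 'OPENAI_API_KEY' => 'your-access-token',
                                         'NANO_BOTS_END_USER' => 'your-user' })
puts resolved.dig('provider', 'credentials', 'access-token') # prints your-access-token
```

With the gem installed, `NanoBot.new(cartridge: cartridge)` would receive the parsed, unresolved Hash.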

Here's what a Nano Bot Cartridge looks like:

---
meta:
  symbol: 🤖
  name: Nano Bot Name
  author: Your Name
  version: 1.0.0
  license: CC0-1.0
  description: A helpful assistant.

behaviors:
  interaction:
    directive: You are a helpful assistant.

provider:
  id: openai
  credentials:
    access-token: ENV/OPENAI_API_KEY
  settings:
    user: ENV/NANO_BOTS_END_USER
    model: gpt-4o

Nano Bots can also be powered by Tools (Functions):

---
tools:
  - name: random-number
    description: Generates a random number between 1 and 100.
    fennel: |
      (math.random 1 100)

🤖> please generate a random number

random-number {} [yN] y

random-number {}

59

The randomly generated number is 59.

🤖> |

To successfully use Tools (Functions), you need to specify a provider and a model that support them. As of the writing of this README, the providers that support them are OpenAI, with the models gpt-3.5-turbo-1106 and gpt-4o, and Google, with the vertex-ai-api service and the gemini-pro model. Other providers do not support them yet.
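The REPL exchange above follows a request, confirm, execute, and reply cycle: the model names a tool and sends JSON arguments ({} here), you answer the [yN] prompt, the tool runs, and its output (59) is handed back to the model. This is a minimal Ruby sketch of that cycle, with a hypothetical TOOLS registry and a Ruby lambda standing in for the Fennel code. It is not how the gem executes tools internally.

```ruby
require 'json'

# Hypothetical registry: tool name => callable receiving the parsed arguments.
TOOLS = {
  'random-number' => ->(_args) { rand(1..100) }
}.freeze

# Runs a tool requested by the model, asking for confirmation first, like the
# "[yN]" prompt in the REPL. Declining is the default and returns nil.
def call_tool(name, arguments_json, confirm: ->(_prompt) { false })
  tool = TOOLS.fetch(name) { raise ArgumentError, "unknown tool: #{name}" }
  arguments = JSON.parse(arguments_json)
  return nil unless confirm.call("#{name} #{arguments_json} [yN]")

  tool.call(arguments)
end

result = call_tool('random-number', '{}', confirm: ->(_prompt) { true })
puts result # an Integer between 1 and 100
```

In an interactive program, `confirm` could read the answer from the terminal, for example `->(prompt) { print prompt, ' '; $stdin.gets.to_s.strip.casecmp?('y') }`.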

Check the Nano Bots specification to learn more about Tools (Functions).

We are exploring the use of Clojure through Babashka, powered by GraalVM.

The experimental support for Clojure would be similar to Lua and Fennel, using the clojure: key:

---
clojure: |
  (-> (java.time.ZonedDateTime/now)
      (.format (java.time.format.DateTimeFormatter/ofPattern "yyyy-MM-dd HH:mm"))
      (clojure.string/trimr))

Unlike Lua and Fennel, Clojure support is not embedded in this implementation. It relies on having the Babashka binary (bb) available in the environment where the Nano Bot is running.
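Before enabling a Clojure tool, you may want your own code to verify that bb is reachable. This is a minimal Ruby sketch, roughly equivalent to `command -v bb`. The executable_on_path? helper is hypothetical, and it ignores Windows executable extensions (PATHEXT).

```ruby
# Returns true if an executable file called `name` exists in any PATH directory.
def executable_on_path?(name, path = ENV.fetch('PATH', ''))
  path.split(File::PATH_SEPARATOR).any? do |dir|
    candidate = File.join(dir, name)
    File.file?(candidate) && File.executable?(candidate)
  end
end

if executable_on_path?('bb')
  puts 'Babashka (bb) found; Clojure tools can run.'
else
  warn 'Babashka (bb) not found on PATH; Clojure tools will not run.'
end
```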

Here's how to install Babashka:

curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | sudo bash

This is a quick check to ensure that it is available and working:

bb -e '{:hello "world"}'
# => {:hello "world"}

We don't have sandbox support for Clojure; this means that you need to disable the sandbox to be able to run Clojure code, which you do at your own risk:

---
safety:
  functions:
    sandboxed: false

You can explore the Nano Bots Marketplace to discover new Cartridges that can help you.

Each provider will have its own security and privacy policies (e.g. OpenAI Policy), so you must consult them to understand their implications.

By default, all states stored in your local disk are encrypted.

To ensure that the encryption is secure, you need to define a password through the NANO_BOTS_ENCRYPTION_PASSWORD environment variable. Otherwise, although the content will be encrypted, anyone would be able to decrypt it without a password.
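To illustrate why the password matters, here is a sketch of password-based encryption with Ruby's standard openssl library: a key is derived from the password with PBKDF2 and used with AES-256-GCM. This is a swapped-in, general-purpose scheme for illustration only, not the one Nano Bots uses, and encrypt/decrypt here are hypothetical helpers unrelated to NanoBot.security.

```ruby
require 'openssl'
require 'securerandom'

# Derives a 256-bit key from the password; the random salt is stored with the data.
def derive_key(password, salt)
  OpenSSL::KDF.pbkdf2_hmac(password, salt: salt, iterations: 100_000,
                                     length: 32, hash: 'sha256')
end

# Returns "salt.iv.tag.ciphertext", each part Base64-encoded. Plaintext must not be empty.
def encrypt(plaintext, password)
  salt = SecureRandom.random_bytes(16)
  cipher = OpenSSL::Cipher.new('aes-256-gcm').encrypt
  cipher.key = derive_key(password, salt)
  iv = cipher.random_iv
  ciphertext = cipher.update(plaintext) + cipher.final
  [salt, iv, cipher.auth_tag, ciphertext].map { |part| [part].pack('m0') }.join('.')
end

# Raises OpenSSL::Cipher::CipherError if the password (or the data) is wrong.
def decrypt(token, password)
  salt, iv, tag, ciphertext = token.split('.').map { |part| part.unpack1('m0') }
  decipher = OpenSSL::Cipher.new('aes-256-gcm').decrypt
  decipher.key = derive_key(password, salt)
  decipher.iv = iv
  decipher.auth_tag = tag
  (decipher.update(ciphertext) + decipher.final).force_encoding(Encoding::UTF_8)
end

password = ENV.fetch('NANO_BOTS_ENCRYPTION_PASSWORD', 'UNSAFE')
token = encrypt('your-name', password)
puts decrypt(token, password) # prints your-name
```

With a missing or well-known password (such as the UNSAFE placeholder in this README's examples), anyone can derive the same key, which is exactly the weakness described above.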

It's important to note that the content shared with providers, despite being transmitted over secure connections (e.g., HTTPS), will be readable by the provider. This is because providers need to operate on the data, which would not be possible if the content were encrypted beyond HTTPS. In short, the data stored locally on your system is encrypted, but what you share with providers remains readable by them.

To ensure that your encryption and password are configured properly, you can run the following command:

nb security

It should return:

✅ Encryption is enabled and properly working.

This means that your data is stored in an encrypted format on your disk.

✅ A password is being used for the encrypted content.

This means that only those who possess the password can decrypt your data.

Alternatively, you can check it at runtime with:

require 'nano-bots'

NanoBot.security.check

# => { encryption: true, password: true }

A common strategy for deploying Nano Bots to multiple users through APIs or automations is to assign a unique end-user ID to each user. This is useful if any of your users violates the provider's policy through abusive behavior: by providing the end-user ID, you can show that, even though the activity originated from your API key, the actions were not your own.

You can define custom end-user identifiers in the following way:

NanoBot.new(environment: { NANO_BOTS_END_USER: 'custom-user-a' })

NanoBot.new(environment: { NANO_BOTS_END_USER: 'custom-user-b' })

Consider that you have the following end-user identifier in your environment:

NANO_BOTS_END_USER=your-name

Or a configuration in your Cartridge:

---
provider:
  id: openai
  settings:
    user: your-name

The requests will be performed as follows:

NanoBot.new(cartridge: '-')

# { user: 'your-name' }

NanoBot.new(cartridge: '-', environment: { NANO_BOTS_END_USER: 'custom-user-a' })

# { user: 'custom-user-a' }

NanoBot.new(cartridge: '-', environment: { NANO_BOTS_END_USER: 'custom-user-b' })

# { user: 'custom-user-b' }

Actually, to enhance privacy, neither your user nor your users' identifiers will be shared in this way. Instead, they will be encrypted before being shared with the provider:

'your-name'

# _O7OjYUESagb46YSeUeSfSMzoO1Yg0BZqpsAkPg4j62SeNYlgwq3kn51Ob2wmIehoA==

'custom-user-a'

# _O7OjYUESagb46YSeUeSfSMzoO1Yg0BZJgIXHCBHyADW-rn4IQr-s2RvP7vym8u5tnzYMIs=

'custom-user-b'

# _O7OjYUESagb46YSeUeSfSMzoO1Yg0BZkjUwCcsh9sVppKvYMhd2qGRvP7vym8u5tnzYMIg=

In this manner, you still have identifiers when you need them, but their actual content can only be decrypted by you, using your secure password (NANO_BOTS_ENCRYPTION_PASSWORD).

To decrypt your encrypted data, once you have properly configured your password, you can simply run:

require 'nano-bots'

NanoBot.security.decrypt('_O7OjYUESagb46YSeUeSfSMzoO1Yg0BZqpsAkPg4j62SeNYlgwq3kn51Ob2wmIehoA==')

# your-name

NanoBot.security.decrypt('_O7OjYUESagb46YSeUeSfSMzoO1Yg0BZJgIXHCBHyADW-rn4IQr-s2RvP7vym8u5tnzYMIs=')

# custom-user-a

NanoBot.security.decrypt('_O7OjYUESagb46YSeUeSfSMzoO1Yg0BZkjUwCcsh9sVppKvYMhd2qGRvP7vym8u5tnzYMIg=')

# custom-user-b

If you lose your password, you lose your data. It is not possible to recover it at all. For real.
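For services with many users, the end-user identifiers described above can be combined with state keys by keeping one bot per user. The sketch below is a hypothetical pattern, not part of the gem: it assumes NanoBot.new accepts state: and environment: together (each is shown separately in this README), derives a stable state key from the user ID, and takes the constructor as an injected factory so it runs without the gem.

```ruby
require 'digest'

# Hypothetical per-user registry: builds each user's bot once and reuses it.
class PerUserBots
  def initialize(&factory)
    @factory = factory
    @bots = {}
  end

  def bot_for(user_id)
    @bots[user_id] ||= @factory.call(
      # Stable 32-character hex key per user, the same shape as SecureRandom.hex.
      state: Digest::SHA256.hexdigest(user_id)[0, 32],
      environment: { NANO_BOTS_END_USER: user_id }
    )
  end
end

# With the gem installed, the factory could be:
#   bots = PerUserBots.new do |state:, environment:|
#     NanoBot.new(cartridge: 'cartridge.yml', state: state, environment: environment)
#   end
#   bots.bot_for('custom-user-a').eval('Hello')
```

The registry is not thread-safe; guard `bot_for` with a Mutex if several threads share it.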

- [ ] Anthropic Claude

- [x] Cohere Command

- [x] Google Gemini

- [x] Maritaca AI MariTalk

- [x] Mistral AI

- [x] Ollama
  - [x] 01.AI Yi
  - [x] LMSYS Vicuna
  - [x] Meta Llama
  - [x] WizardLM
- [x] OpenAI ChatGPT

01.AI Yi, LMSYS Vicuna, Meta Llama, and WizardLM are open-source models that are supported through Ollama.

Clone the repository and copy the Docker Compose template:

git clone https://github.com/icebaker/ruby-nano-bots.git

cd ruby-nano-bots

cp docker-compose.example.yml docker-compose.yml

Set your provider credentials and choose your desired paths for the cartridge and state files. For Cohere Command:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      COHERE_API_KEY: your-api-key
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Maritaca AI MariTalk:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      MARITACA_API_KEY: your-api-key
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Mistral AI:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      MISTRAL_API_KEY: your-api-key
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Ollama, remember that localhost inside a container refers to the container itself, so by default a server on your host's localhost is not reachable from inside Docker. You need to either establish inter-container networking, use the host's address, or use the host network, depending on where the Ollama server is running and your preferences.

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      OLLAMA_API_ADDRESS: http://localhost:11434
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots
    # If you are running the Ollama server on your localhost:
    network_mode: host # WARNING: Be careful, this may be a security risk.

For OpenAI ChatGPT:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      OPENAI_API_KEY: your-access-token
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Google Gemini with the Generative Language API:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      GOOGLE_API_KEY: your-api-key
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Google Gemini with the Vertex AI API using a credentials file:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      GOOGLE_CREDENTIALS_FILE_PATH: /root/.config/google-credentials.json
      GOOGLE_REGION: us-east4
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./google-credentials.json:/root/.config/google-credentials.json
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

For Google Gemini with the Vertex AI API without a credentials file:

---
services:
  nano-bots:
    image: ruby:3.2.2-slim-bookworm
    command: sh -c "apt-get update && apt-get install -y --no-install-recommends build-essential libffi-dev libsodium-dev lua5.4-dev curl && curl -s https://raw.githubusercontent.com/babashka/babashka/master/install | bash && gem install nano-bots -v 3.1.0 && bash"
    environment:
      GOOGLE_REGION: us-east4
      NANO_BOTS_ENCRYPTION_PASSWORD: UNSAFE
      NANO_BOTS_END_USER: your-user
    volumes:
      - ./your-cartridges:/root/.local/share/nano-bots/cartridges
      - ./your-state-path:/root/.local/state/nano-bots

If you need to manually set a Google Project ID:

environment:
  GOOGLE_PROJECT_ID: your-project-id

Enter the container:

docker compose run nano-bots

Start playing:

nb - - eval "hello"

nb - - repl

nb assistant.yml - eval "hello"

nb assistant.yml - repl

You can exit the REPL by typing exit.

bundle

rubocop -A

rspec

bundle exec ruby spec/tasks/run-all-models.rb

bundle exec ruby spec/tasks/run-model.rb spec/data/cartridges/models/openai/gpt-4-turbo.yml

bundle exec ruby spec/tasks/run-model.rb spec/data/cartridges/models/openai/gpt-4-turbo.yml stream

If you face issues upgrading gem versions:

bundle install --full-index

To build and publish the gem:

gem build nano-bots.gemspec

gem signin

gem push nano-bots-3.1.0.gem

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ruby-nano-bots

Similar Open Source Tools

ruby-nano-bots

Ruby Nano Bots is an implementation of the Nano Bots specification supporting various AI providers like Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others. It allows calling tools (functions) and provides a helpful assistant for interacting with AI language models. The tool can be used both from the command line and as a library in Ruby projects, offering features like REPL, debugging, and encryption for data privacy.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

ahnlich

Ahnlich is a tool that provides multiple components for storing and searching similar vectors using linear or non-linear similarity algorithms. It includes 'ahnlich-db' for in-memory vector key value store, 'ahnlich-ai' for AI proxy communication, 'ahnlich-client-rs' for Rust client, and 'ahnlich-client-py' for Python client. The tool is not production-ready yet and is still in testing phase, allowing AI/ML engineers to issue queries using raw input such as images/text and features off-the-shelf models for indexing and querying.

QA-Pilot

QA-Pilot is an interactive chat project that leverages online/local LLM for rapid understanding and navigation of GitHub code repository. It allows users to chat with GitHub public repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM models such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

zsh_codex

Zsh Codex is a ZSH plugin that enables AI-powered code completion in the command line. It supports both OpenAI's Codex and Google's Generative AI (Gemini), providing advanced language model capabilities for coding tasks directly in the terminal. Users can easily install the plugin and configure it to enhance their coding experience with AI assistance.

comp

Comp AI is an open-source compliance automation platform designed to assist companies in achieving compliance with standards like SOC 2, ISO 27001, and GDPR. It transforms compliance into an engineering problem solved through code, automating evidence collection, policy management, and control implementation while maintaining data and infrastructure control.

open-edison

OpenEdison is a secure MCP control panel that connects AI to data/software with additional security controls to reduce data exfiltration risks. It helps address the lethal trifecta problem by providing visibility, monitoring potential threats, and alerting on data interactions. The tool offers features like data leak monitoring, controlled execution, easy configuration, visibility into agent interactions, a simple API, and Docker support. It integrates with LangGraph, LangChain, and plain Python agents for observability and policy enforcement. OpenEdison helps gain observability, control, and policy enforcement for AI interactions with systems of records, existing company software, and data to reduce risks of AI-caused data leakage.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

TalkWithGemini

Talk With Gemini is a web application that allows users to deploy their private Gemini application for free with one click. It supports Gemini Pro and Gemini Pro Vision models. The application features talk mode for direct communication with Gemini, visual recognition for understanding picture content, full Markdown support, automatic compression of chat records, privacy and security with local data storage, well-designed UI with responsive design, fast loading speed, and multi-language support. The tool is designed to be user-friendly and versatile for various deployment options and language preferences.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

mediasoup-client-aiortc

mediasoup-client-aiortc is a handler for the aiortc Python library, allowing Node.js applications to connect to a mediasoup server using WebRTC for real-time audio, video, and DataChannel communication. It facilitates the creation of Worker instances to manage Python subprocesses, obtain audio/video tracks, and create mediasoup-client handlers. The tool supports features like getUserMedia, handlerFactory creation, and event handling for subprocess closure and unexpected termination. It provides custom classes for media stream and track constraints, enabling diverse audio/video sources like devices, files, or URLs. The tool enhances WebRTC capabilities in Node.js applications through seamless Python subprocess communication.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It formats your codebase so that AI tools such as Large Language Models (LLMs), including Claude, ChatGPT, and Gemini, can easily understand it. Repomix offers AI optimization, token counting, simple usage, customization options, Git awareness, and security-focused checks using Secretlint. Users can pack an entire repository or specific directories/files selected with glob patterns, and it even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown, with options for including/excluding files and removing comments. Repomix also provides a global configuration option, custom instructions for AI context, and a security check that detects sensitive information in files.

LEANN

LEANN is an innovative vector database that democratizes personal AI, transforming your laptop into a powerful RAG system that can index and search through millions of documents using 97% less storage than traditional solutions without accuracy loss. It achieves this through graph-based selective recomputation and high-degree preserving pruning, computing embeddings on-demand instead of storing them all. LEANN allows semantic search of file system, emails, browser history, chat history, codebase, or external knowledge bases on your laptop with zero cloud costs and complete privacy. It is a drop-in semantic search MCP service fully compatible with Claude Code, enabling intelligent retrieval without changing your workflow.

For similar tasks

ruby-nano-bots

Ruby Nano Bots is an implementation of the Nano Bots specification supporting various AI providers like Cohere Command, Google Gemini, Maritaca AI MariTalk, Mistral AI, Ollama, OpenAI ChatGPT, and others. It allows calling tools (functions) and provides a helpful assistant for interacting with AI language models. The tool can be used both from the command line and as a library in Ruby projects, offering features like REPL, debugging, and encryption for data privacy.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

E2B

E2B Sandbox is a secure, sandboxed cloud environment made for AI agents and AI apps. Sandboxes give AI agents and apps secure, long-running cloud environments in which large language models can use the same tools humans do, for example:

* Cloud browsers
* GitHub repositories and CLIs
* Coding tools like linters, autocomplete, and "go-to definition"
* Running LLM-generated code
* Audio & video editing

The E2B sandbox can be connected to any LLM and any AI agent or app.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT, and others) in TypeScript and JavaScript.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It runs large language models (LLMs) locally, without any external dependencies or even a GPU, which makes it easy to use LLMs with your own data without worrying about privacy. Floneum also has a plugin system that lets you improve the performance of LLMs and adapt them to your specific use case. Plugins can be written in any language that compiles to WebAssembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic, so start wherever you like! Lessons are labeled either "Learn" lessons, which explain a Generative AI concept, or "Build" lessons, which explain a concept and provide code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or TypeScript is helpful - _For absolute beginners, check out these Python and TypeScript courses._
* A GitHub account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you set up your development environment.

Don't forget to star (🌟) this repo to find it more easily later.

## 🧠 Ready to Deploy?

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

## 🗣️ Meet Other Learners, Get Support

Join our official AI Discord server to meet and network with other learners taking this course and get support.

## 🚀 Building a Startup?

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

## 🙏 Want to help?

Do you have suggestions, or did you find spelling or code errors? Raise an issue or create a pull request.

## 📂 Each lesson includes:

* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

## 🗃️ Lessons

| # | Lesson | Description | Additional Learning |
| :-: | :-- | :-- | :-- |
| 00 | Course Setup | **Learn:** How to Set Up Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work | Learn More |
| 02 | Exploring and Comparing Different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications | Learn More |
| 08 | Building Search Apps with Vector Databases | **Build:** A search application that uses Embeddings to search for data | Learn More |
| 09 | Building Image Generation Applications | **Build:** An image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What function calling is and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Database | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why, and how of fine-tuning LLMs | Learn More |

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

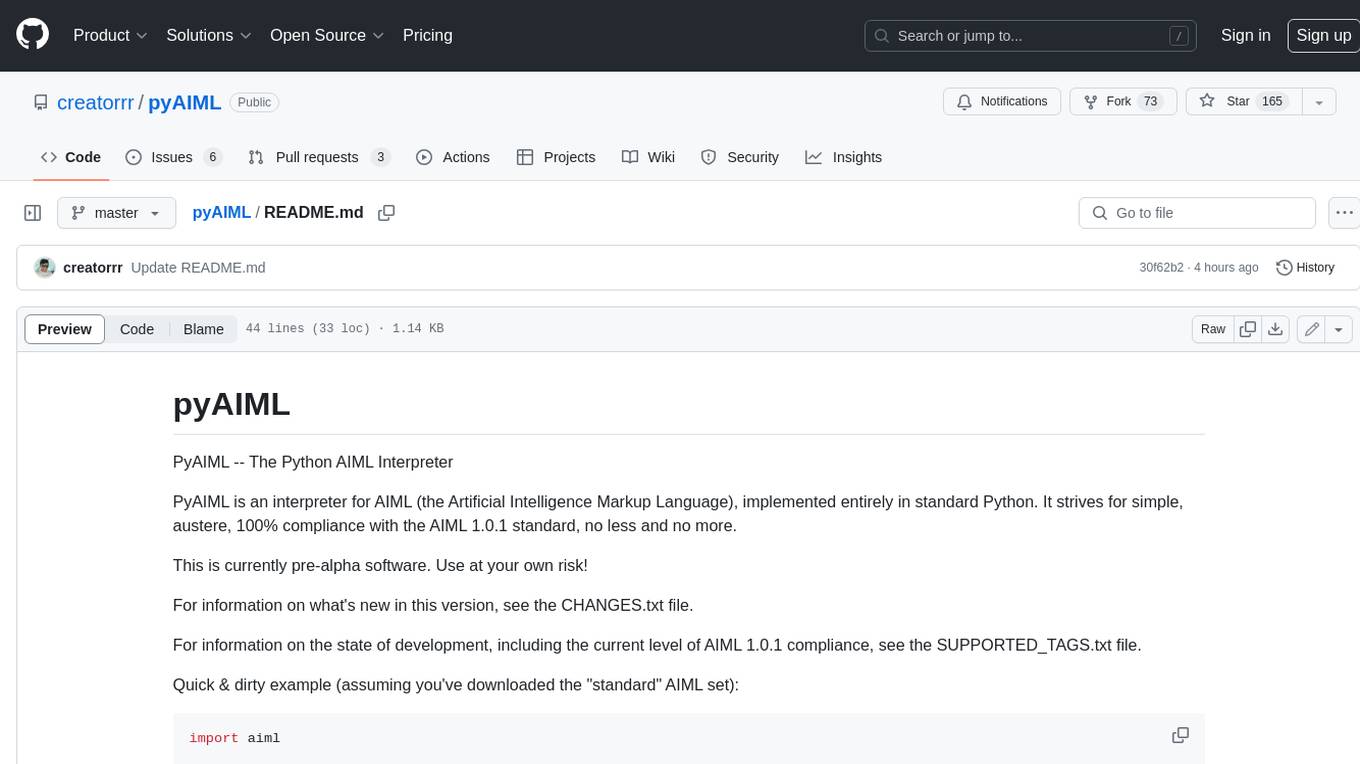

pyAIML

PyAIML is a Python implementation of the AIML (Artificial Intelligence Markup Language) interpreter. It aims to be a simple, standards-compliant interpreter for AIML 1.0.1. PyAIML is currently in pre-alpha development, so use it at your own risk. For more information on PyAIML, see the CHANGES.txt and SUPPORTED_TAGS.txt files.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, and evaluation through production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring that the other components of the stack use the latest supported Python versions. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and a discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions: truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The documentation explains how to use the trustllm Python package to assess your LLM's trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (VTubers), in an NVIDIA GPU ("N card") version. It supports knowledge-base chat through FastGPT, with a complete LLM solution built from [fastgpt] + [one-api] + [Xinference]. Its features include replying to bilibili live-stream danmaku (bullet comments) and greeting viewers as they enter the stream; speech synthesis via Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS; expression control through VTube Studio; painting with stable-diffusion-webui and output to an OBS live-stream scene; NSFW filtering of generated pictures with public-NSFW-y-distinguish; search and image search via DuckDuckGo (requires a proxy/VPN) and Baidu image search (no proxy needed); an AI reply chat box [html plug-in]; AI singing with Auto-Convert-Music; a playlist [html plug-in]; dancing; expression video playback; head-pat and gift-reaction actions; automatic dancing when singing starts; automatic swaying during chat and singing; multi-scene switching, background-music switching, and automatic day/night scene switching; and open-ended singing and painting, with the AI judging the content automatically.