amazon-q-developer-cli

✨ Agentic chat experience in your terminal. Build applications using natural language.

Stars: 1628

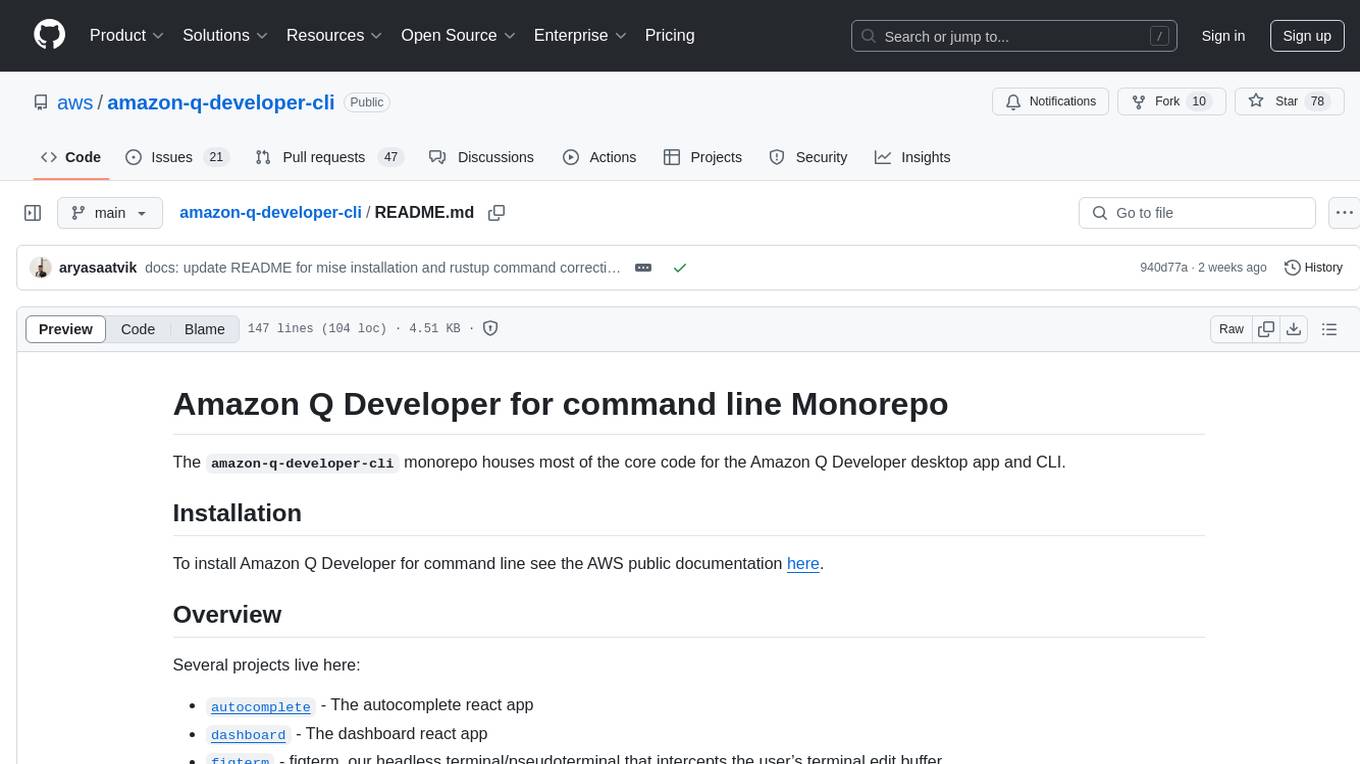

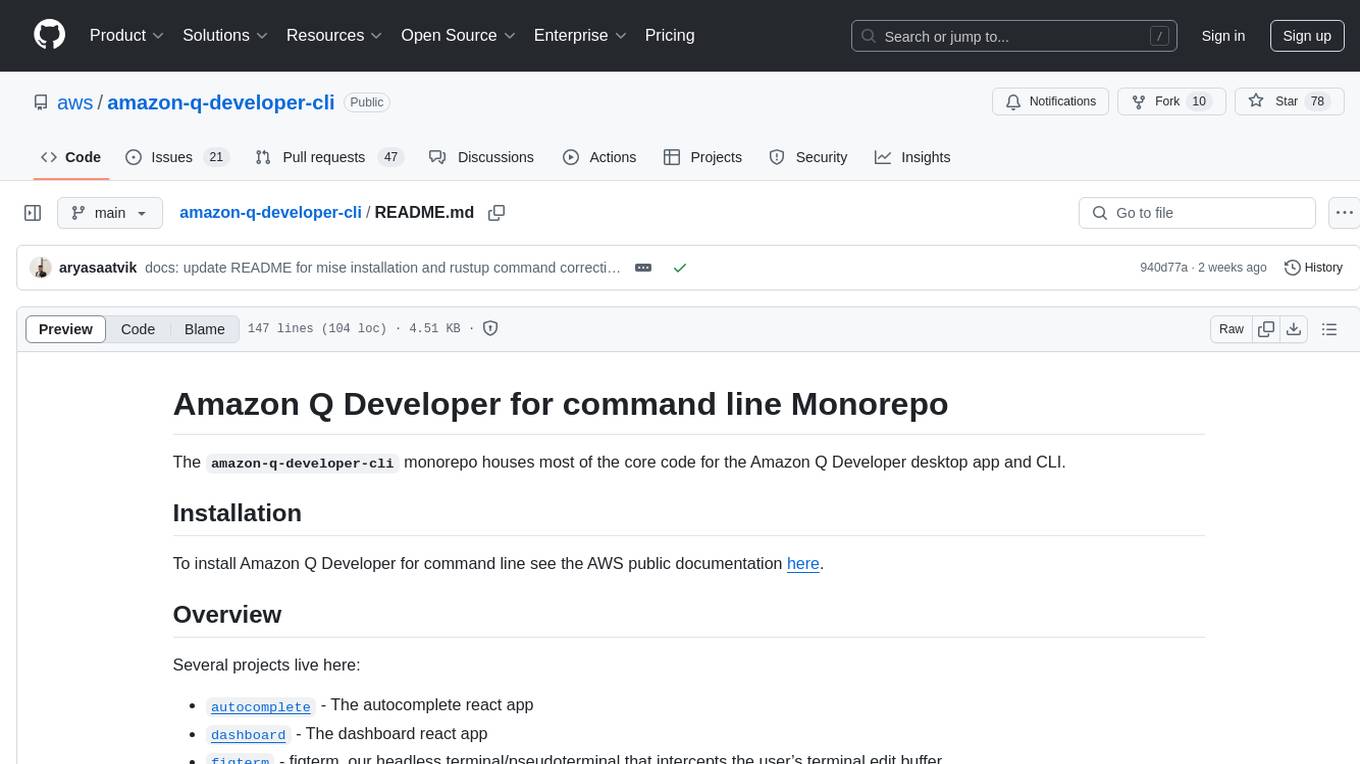

The `amazon-q-developer-cli` monorepo houses core code for the Amazon Q Developer desktop app and CLI. It includes projects like autocomplete, dashboard, figterm, q CLI, fig_desktop, fig_input_method, VSCode plugin, and JetBrains plugin. The repo also contains build scripts, internal rust crates, internal npm packages, protocol buffer message specification, and integration tests. The architecture involves different components communicating via IPC.

README:

-

macOS:

- DMG: Download now

- Linux:

Thank you so much for considering to contribute to Amazon Q.

Before getting started, see our contributing docs.

- MacOS

- Xcode 13 or later

- Brew

git clone https://github.com/aws/amazon-q-developer-cli.git2. Install the Rust toolchain using Rustup:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

rustup default stable

rustup toolchain install nightly

cargo install typos-cli- To compile and run:

cargo run --bin chat_cli. - To run tests:

cargo test. - To run lints:

cargo clippy. - To format rust files:

cargo +nightly fmt. - To run subcommands:

cargo run --bin chat_cli -- {subcommand}.- Login would then be:

cargo run --bin chat_cli -- login

- Login would then be:

-

chat_cli- theqCLI, allows users to interface with Amazon Q Developer from the command line -

scripts/- Contains ops and build related scripts -

crates/- Contains all rust crates -

docs/- Contains technical documentation

For security related concerns, see here.

This repo is dual licensed under MIT and Apache 2.0 licenses.

Those licenses can be found here and here.

“Amazon Web Services” and all related marks, including logos, graphic designs, and service names, are trademarks or trade dress of AWS in the U.S. and other countries. AWS’s trademarks and trade dress may not be used in connection with any product or service that is not AWS’s, in any manner that is likely to cause confusion among customers, or in any manner that disparages or discredits AWS.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for amazon-q-developer-cli

Similar Open Source Tools

amazon-q-developer-cli

The `amazon-q-developer-cli` monorepo houses core code for the Amazon Q Developer desktop app and CLI. It includes projects like autocomplete, dashboard, figterm, q CLI, fig_desktop, fig_input_method, VSCode plugin, and JetBrains plugin. The repo also contains build scripts, internal rust crates, internal npm packages, protocol buffer message specification, and integration tests. The architecture involves different components communicating via IPC.

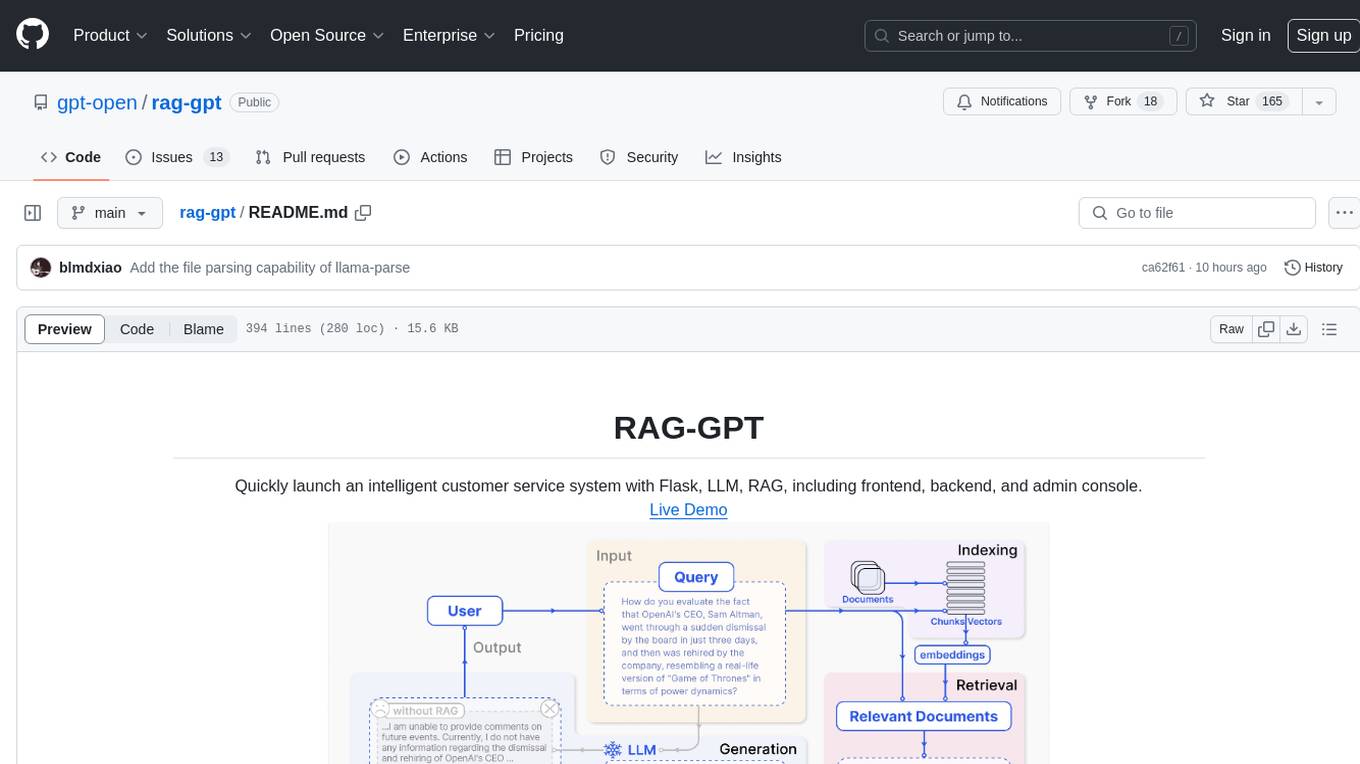

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, enables deployment of conversational service robots in minutes, integrates diverse knowledge bases, offers flexible configuration options, and features an attractive user interface.

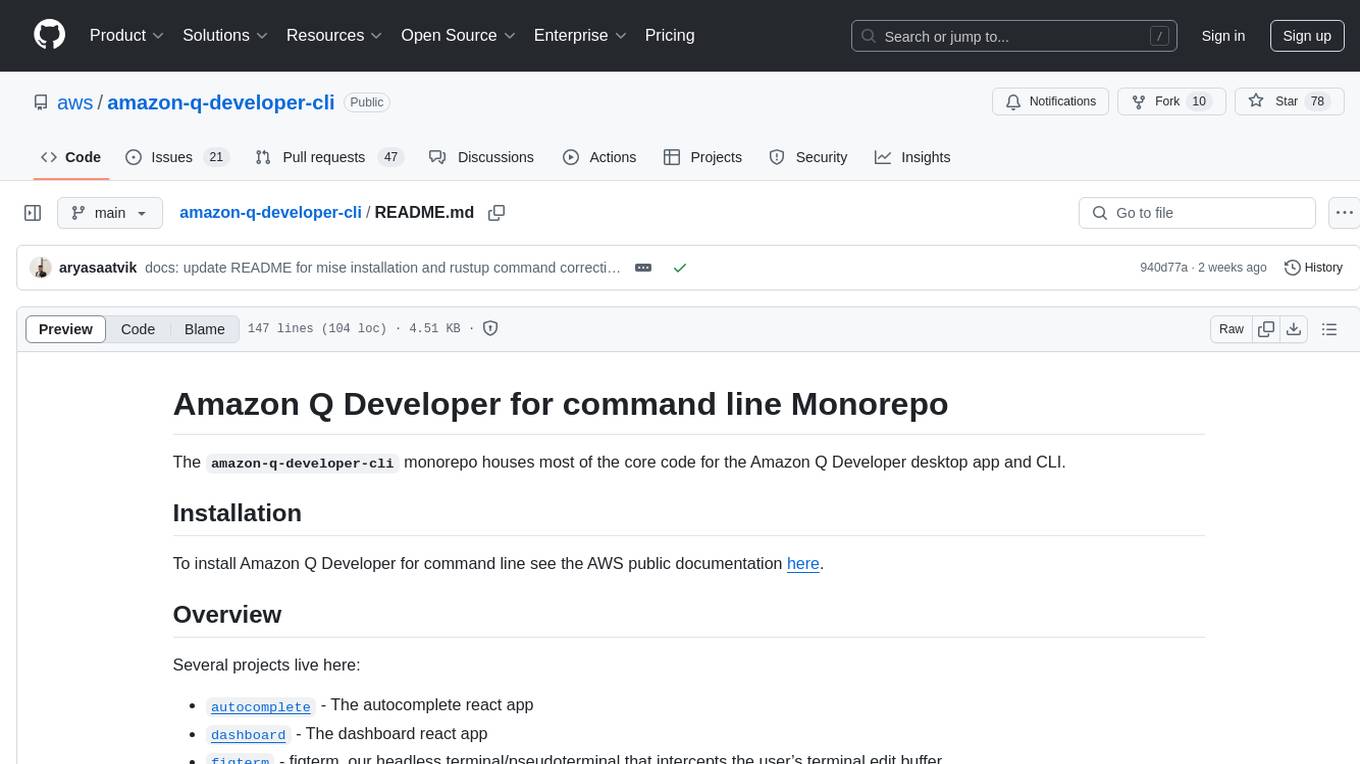

BuildCLI

BuildCLI is a command-line interface (CLI) tool designed for managing and automating common tasks in Java project development. It simplifies the development process by allowing users to create, compile, manage dependencies, run projects, generate documentation, manage configuration profiles, dockerize projects, integrate CI/CD tools, and generate structured changelogs. The tool aims to enhance productivity and streamline Java project management by providing a range of functionalities accessible directly from the terminal.

galxe-aio

Galxe AIO is a versatile tool designed to automate various tasks on social media platforms like Twitter, email, and Discord. It supports tasks such as following, retweeting, liking, and quoting on Twitter, as well as solving quizzes, submitting surveys, and more. Users can link their Twitter accounts, email accounts (IMAP or mail3.me), and Discord accounts to the tool to streamline their activities. Additionally, the tool offers features like claiming rewards, quiz solving, submitting surveys, and managing referral links and account statistics. It also supports different types of rewards like points, mystery boxes, gas-less OATs, gas OATs and NFTs, and participation in raffles. The tool provides settings for managing EVM wallets, proxies, twitters, emails, and discords, along with custom configurations in the `config.toml` file. Users can run the tool using Python 3.11 and install dependencies using `pip` and `playwright`. The tool generates results and logs in specific folders and allows users to donate using TRC-20 or ERC-20 tokens.

Toolify

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, offers quick setup for conversational service robots, integrates diverse knowledge bases, provides flexible configuration options, and features an attractive user interface.

elasticsearch-labs

This repository contains executable Python notebooks, sample apps, and resources for testing out the Elastic platform. Users can learn how to use Elasticsearch as a vector database for storing embeddings, build use cases like retrieval augmented generation (RAG), summarization, and question answering (QA), and test Elastic's leading-edge capabilities like the Elastic Learned Sparse Encoder and reciprocal rank fusion (RRF). It also allows integration with projects like OpenAI, Hugging Face, and LangChain to power LLM-powered applications. The repository enables modern search experiences powered by AI/ML.

rclip

rclip is a command-line photo search tool powered by the OpenAI's CLIP neural network. It allows users to search for images using text queries, similar image search, and combining multiple queries. The tool extracts features from photos to enable searching and indexing, with options for previewing results in supported terminals or custom viewers. Users can install rclip on Linux, macOS, and Windows using different installation methods. The repository follows the Conventional Commits standard and welcomes contributions from the community.

sandbox

Sandbox is an open-source cloud-based code editing environment with custom AI code autocompletion and real-time collaboration. It consists of a frontend built with Next.js, TailwindCSS, Shadcn UI, Clerk, Monaco, and Liveblocks, and a backend with Express, Socket.io, Cloudflare Workers, D1 database, R2 storage, Workers AI, and Drizzle ORM. The backend includes microservices for database, storage, and AI functionalities. Users can run the project locally by setting up environment variables and deploying the containers. Contributions are welcome following the commit convention and structure provided in the repository.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

AI-Video-Boilerplate-Simple

AI-video-boilerplate-simple is a free Live AI Video boilerplate for testing out live video AI experiments. It includes a simple Flask server that serves files, supports live video from various sources, and integrates with Roboflow for AI vision. Users can use this template for projects, research, business ideas, and homework. It is lightweight and can be deployed on popular cloud platforms like Replit, Vercel, Digital Ocean, or Heroku.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

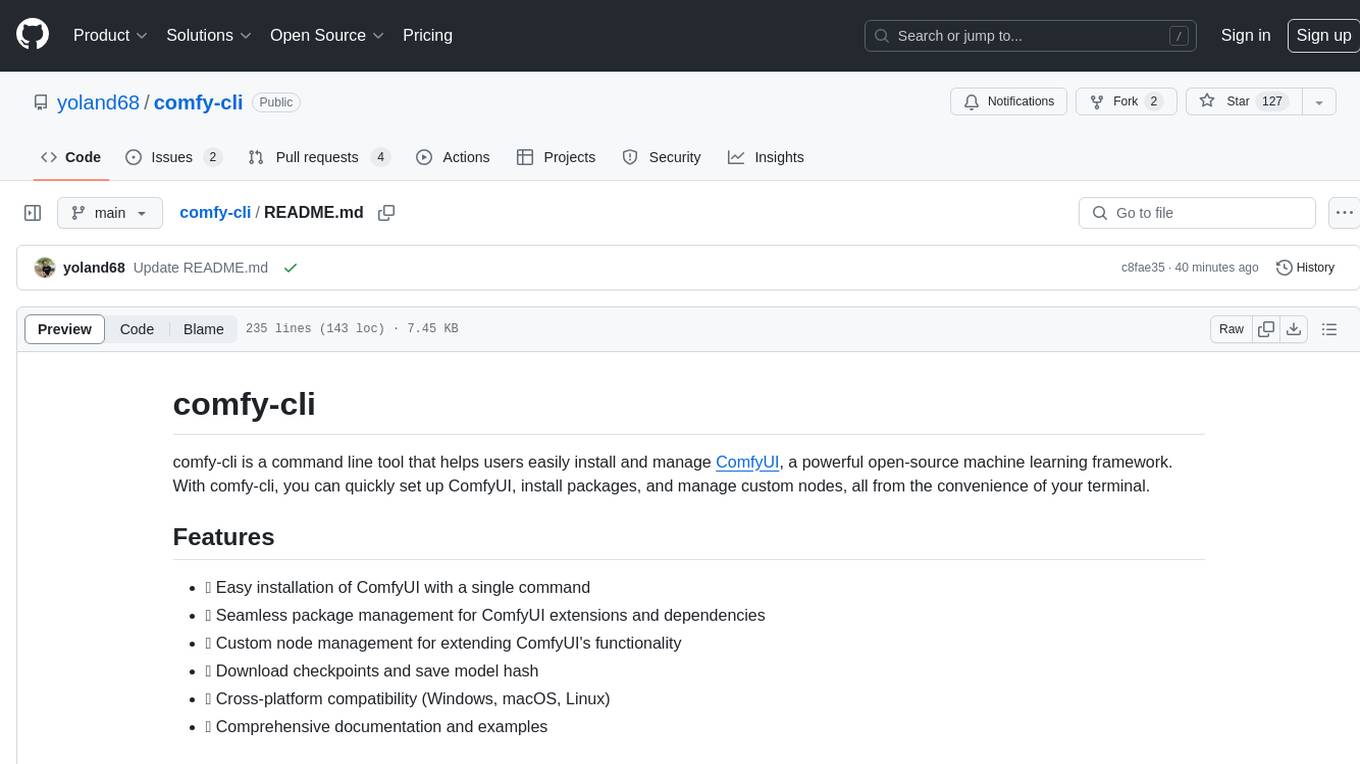

comfy-cli

comfy-cli is a command line tool designed to simplify the installation and management of ComfyUI, an open-source machine learning framework. It allows users to easily set up ComfyUI, install packages, manage custom nodes, download checkpoints, and ensure cross-platform compatibility. The tool provides comprehensive documentation and examples to aid users in utilizing ComfyUI efficiently.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

For similar tasks

amazon-q-developer-cli

The `amazon-q-developer-cli` monorepo houses core code for the Amazon Q Developer desktop app and CLI. It includes projects like autocomplete, dashboard, figterm, q CLI, fig_desktop, fig_input_method, VSCode plugin, and JetBrains plugin. The repo also contains build scripts, internal rust crates, internal npm packages, protocol buffer message specification, and integration tests. The architecture involves different components communicating via IPC.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.