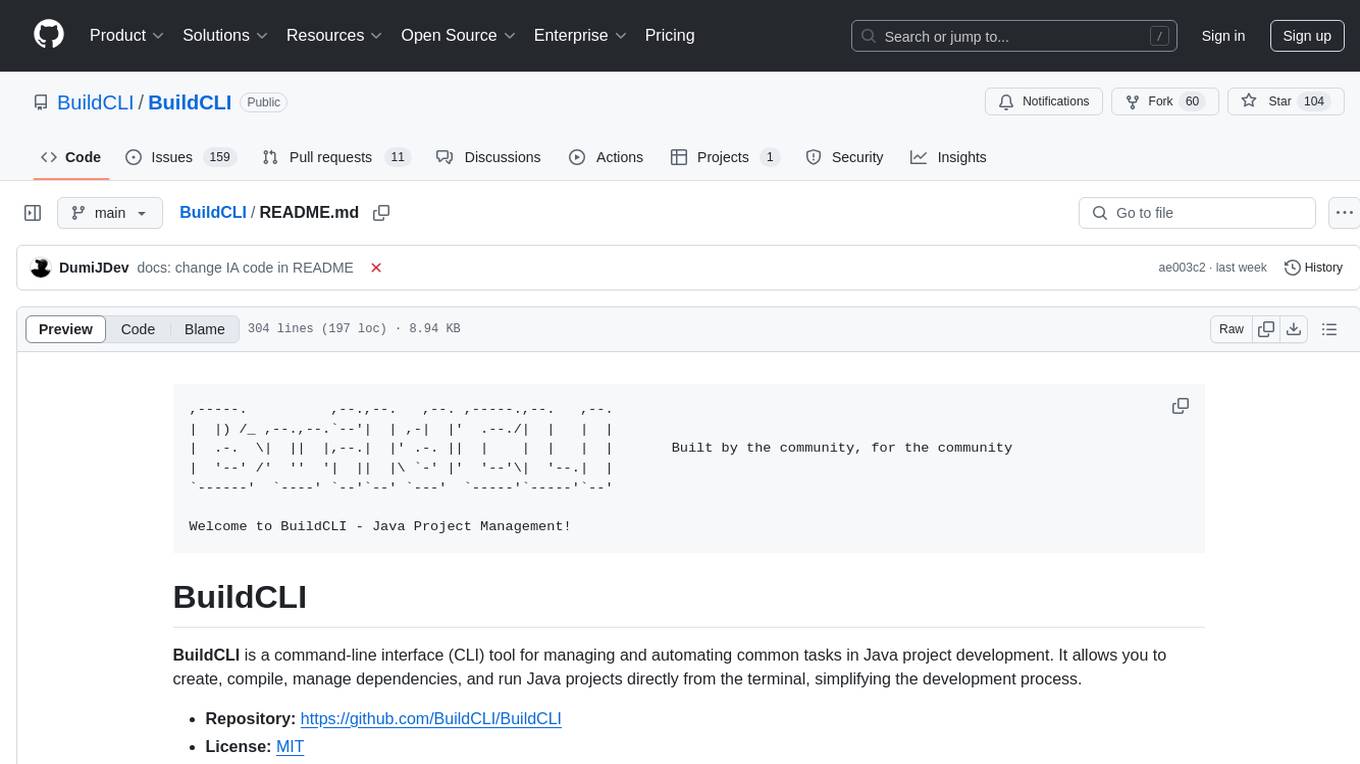

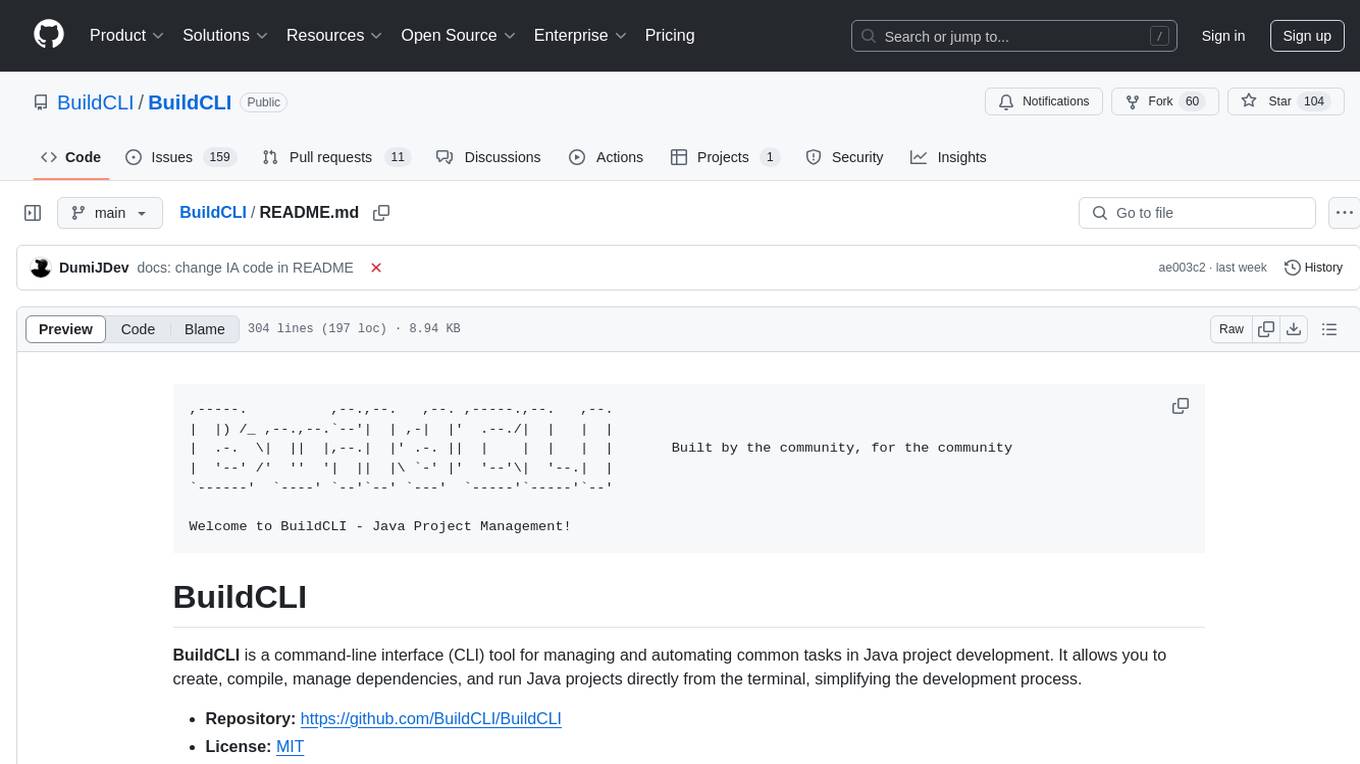

BuildCLI

BuildCLI is a command-line interface (CLI) tool for managing and automating common tasks in Java project development.

Stars: 104

BuildCLI is a command-line interface (CLI) tool designed for managing and automating common tasks in Java project development. It simplifies the development process by allowing users to create, compile, manage dependencies, run projects, generate documentation, manage configuration profiles, dockerize projects, integrate CI/CD tools, and generate structured changelogs. The tool aims to enhance productivity and streamline Java project management by providing a range of functionalities accessible directly from the terminal.

README:

Built by the community, for the community

Welcome to BuildCLI - Java Project Management!

BuildCLI is a command-line interface (CLI) tool for managing and automating common tasks in Java project development. It allows you to create, compile, manage dependencies, and run Java projects directly from the terminal, simplifying the development process.

- Repository: https://github.com/BuildCLI/BuildCLI

- License: MIT

- Initialize Project: Creates the basic structure of directories and files for a Java project.

- Compile Project: Compiles the project source code using Maven.

- Add Dependency: Adds new dependencies to the pom.xml.

- Remove Dependency: Removes dependencies from the pom.xml.

- Document Code: [Beta] Generates documentation for a Java file using AI.

- Manage Configuration Profiles: Creates specific configuration files for profiles (application-dev.properties, application-test.properties, etc.).

- Run Project: Starts the project directly from the CLI using Spring Boot.

- Dockerize Project: Generates a Dockerfile for the project, allowing easy containerization.

- Build and Run Docker Container: Builds and runs the Docker container using the generated Dockerfile.

- CI/CD Integration: Automatically generates configuration files for CI/CD tools (e.g., Jenkins, GitHub Actions) and triggers pipelines based on project changes.

- Changelog Generation: Automatically generates a structured changelog by analyzing the Git commit history, facilitating the understanding of changes between releases.

- Script Installation: Download the .sh or .bat file and execute it.

  - On a Unix-like system (Linux, macOS), give install.sh execute permission and run it:

    sudo chmod +x install.sh

    ./install.sh

  - On Windows, run install.bat by double-clicking it or by executing the following command in the Command Prompt (cmd):

    install.bat

Now BuildCLI is ready to use. Test the buildcli command in the terminal.
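One quick way to confirm the installation is to check that the command resolves on your PATH. The snippet below is a minimal sketch; the helper name `require_cmd` is hypothetical and not part of BuildCLI.

```shell
# Hypothetical helper (not part of BuildCLI): succeeds if a command is on PATH.
require_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if require_cmd buildcli; then
  # "buildcli help" is the command this README points to for listing options.
  echo "buildcli found: run 'buildcli help' to list the available commands"
else
  echo "buildcli not found: re-run the install script or check your PATH"
fi
```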

We made a major refactor of the BuildCLI architecture. Please use the buildcli help command to see all available options. Also, refer to issue #89 and pull request #79 for more details.

Creates the basic Java project structure, including src/main/java, pom.xml, and README.md.

You can specify a project name to dynamically set the package structure and project artifact.

- To initialize a project with a specific name:

buildcli project init MyProject

This will create the project structure with MyProject as the base package name, resulting in a directory like src/main/java/org/myproject.

- To initialize a project without specifying a name:

buildcli project init

This will create the project structure with buildcli as the base package name, resulting in a directory like src/main/java/org/buildcli.
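The naming rule described above (lowercased project name under org, with buildcli as the fallback) can be sketched in a few lines of shell. This only mirrors the behaviour stated in this README; the function name `package_dir` is hypothetical and this is not BuildCLI's actual implementation.

```shell
# Hypothetical helper: mirrors the naming rule described in this README.
package_dir() {
  name=${1:-buildcli}   # with no project name, the base package is "buildcli"
  lower=$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')
  printf 'src/main/java/org/%s\n' "$lower"
}

package_dir MyProject   # prints src/main/java/org/myproject
package_dir             # prints src/main/java/org/buildcli
```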

Compiles the Java project using Maven:

buildcli project build --compile

Adds a dependency to the project in the groupId:artifactId format. You can also specify a version using the format groupId:artifactId:version. If no version is specified, the dependency will default to the latest version available.

- To add a dependency with the latest version:

buildcli project add dependency org.springframework:spring-core

- To add a dependency with a specified version:

buildcli p a d org.springframework:spring-core:5.3.21

After executing these commands, the dependency will be appended to your pom.xml file under the <dependencies> section.
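To make the coordinate format concrete, the sketch below splits a groupId:artifactId[:version] string and prints the corresponding Maven dependency element. The helper `dependency_xml` is hypothetical and only illustrates the mapping. It does not look up the latest version, so the version element is left out when none is given.

```shell
# Hypothetical helper (not BuildCLI's code): turn "groupId:artifactId[:version]"
# into a Maven <dependency> element.
dependency_xml() {
  coords=$1
  case $coords in
    *:*) ;;   # at least groupId:artifactId
    *) echo "expected groupId:artifactId[:version]" >&2; return 1 ;;
  esac

  group=${coords%%:*}   # text before the first colon
  rest=${coords#*:}     # text after the first colon
  case $rest in
    *:*) artifact=${rest%%:*}; version=${rest#*:} ;;
    *)   artifact=$rest; version= ;;
  esac

  printf '<dependency>\n'
  printf '  <groupId>%s</groupId>\n' "$group"
  printf '  <artifactId>%s</artifactId>\n' "$artifact"
  # Assumption: resolving the latest version is not modelled in this sketch,
  # so the element is simply omitted when no version is given.
  if [ -n "$version" ]; then
    printf '  <version>%s</version>\n' "$version"
  fi
  printf '</dependency>\n'
}

dependency_xml org.springframework:spring-core:5.3.21
```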

Creates a configuration file with the specified profile, for example, application-dev.properties:

buildcli project add profile dev

Runs the Java project using Spring Boot:

buildcli project run

Automatically generates inline documentation for a Java file using AI:

buildcli ai code document File.java  # file or directory

This command sends the specified Java file to the local Ollama server, which generates documentation and comments directly within the code. The modified file with documentation will be saved back to the same location.

Sets the active environment profile, saving it to the environment.config file. The profile is referenced during project execution, ensuring that the correct configuration is loaded.

buildcli p set env dev

After running this command, the active profile is set to dev, and the environment.config file is updated accordingly.

With the --set-environment functionality, you can set the active environment profile. When running the project with buildcli --run, the active profile will be displayed in the terminal.

This command generates a Dockerfile for your Java project, making it easier to containerize your application.

buildcli p add dockerfile

This command automatically builds and runs the Docker container for you. After running the command, the Docker image will be created, and your project will run inside the container.

buildcli project run docker

Generates configuration files for CI/CD tools and prepares the project for automated pipelines. Supports Jenkins, GitLab, and GitHub Actions.

buildcli project add pipeline github

buildcli project add pipeline gitlab

buildcli project add pipeline jenkins

Ensure you have the Ollama server running locally, as the docs functionality relies on an AI model accessible via a local API.

You can start the Ollama server by running:

ollama run llama3.2

- Jenkins: Ensure Jenkins is installed and accessible in your environment.

- GitHub Actions: Ensure your repository is hosted on GitHub with Actions enabled.
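Before using the AI documentation command, it can help to confirm that the Ollama server is actually answering. The sketch below assumes Ollama's default local address (http://localhost:11434) and its /api/tags endpoint; the helper name `ollama_up` is hypothetical, and the address BuildCLI itself uses may differ.

```shell
# Hypothetical helper: succeeds if an Ollama server answers at the given base URL.
# Assumption: Ollama's default local address, http://localhost:11434.
ollama_up() {
  base=${1:-http://localhost:11434}
  # /api/tags lists the locally available models; -f turns HTTP errors into failures.
  curl -fsS --max-time 2 "$base/api/tags" >/dev/null 2>&1
}

if ollama_up; then
  echo "Ollama is reachable"
else
  echo "Ollama is not reachable: start it before running 'buildcli ai code document'"
fi
```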

BuildCLI now includes an automatic changelog generation feature that analyzes your Git commit history and produces a structured changelog. This helps developers and end-users easily track changes between releases.

To generate a changelog, run:

buildcli changelog [OPTIONS]

Or use the alias:

buildcli cl [OPTIONS]

- --version, -v <version>: Specify the release version for the changelog. If omitted, BuildCLI attempts to detect the latest Git tag. If no tag is found, it defaults to "Unreleased".

- --format, -f <format>: Specify the output format. Supported formats:

  - markdown (default)

  - html

  - json

- --output, -o <file>: Specify the output file name. If not provided, defaults to CHANGELOG..

- --include, -i <commit types>: Provide a comma-separated list of commit types to include (e.g., feat,fix,docs,refactor).
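The version fallback and the commit-type filter described by these options can be approximated with plain Git and grep. This is a rough sketch under stated assumptions, not BuildCLI's implementation: the helper names `detect_version` and `filter_commits` are hypothetical, and commit subjects are assumed to follow the "type(scope): summary" convention.

```shell
# Hypothetical helpers, not BuildCLI's implementation.

# Print the most recent Git tag, or "Unreleased" when there is none.
detect_version() {
  git describe --tags --abbrev=0 2>/dev/null || echo "Unreleased"
}

# Keep only commit subjects whose type is in a comma-separated list.
# Assumption: subjects follow the "type(scope): summary" convention.
filter_commits() {
  types=$(printf '%s' "$1" | tr ',' '|')   # "feat,fix" -> "feat|fix"
  grep -E "^(${types})(\([^)]*\))?!?: "
}

# Sample subjects stand in for the output of: git log --pretty=format:%s
printf '%s\n' \
  'feat: add changelog command' \
  'docs: update README' \
  'fix(cli): handle missing tag' |
  filter_commits feat,fix
```

With the sample input, only the feat and fix subjects are printed; the docs entry is filtered out.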

buildcli changelog --version v1.0.0 --format markdown --include feat,fix --output CHANGELOG.md

buildcli changelog -v v1.0.0 -f markdown -i feat,fix -o CHANGELOG.md

Contributions are welcome! Feel free to open Issues and submit Pull Requests. See the CONTRIBUTING.md file for more details.

Quick steps to contribute:

- Fork the project.

- Create a branch for your changes:

  git checkout -b feature/my-feature

- Commit your changes:

  git commit -m "My new feature"

- Push to your branch:

  git push origin feature/my-feature

- Open a Pull Request in the main repository.

This project is licensed under the MIT License - see the LICENSE file for details.

To get a deeper understanding of the BuildCLI project structure, key classes, commands, and how to contribute, check out our comprehensive guide in PROJECT_FAMILIARIZATION.md.

Similar Open Source Tools

pipecat-flows

Pipecat Flows is a framework designed for building structured conversations in AI applications. It allows users to create both predefined conversation paths and dynamically generated flows, handling state management and LLM interactions. The framework includes a Python module for building conversation flows and a visual editor for designing and exporting flow configurations. Pipecat Flows is suitable for scenarios such as customer service scripts, intake forms, personalized experiences, and complex decision trees.

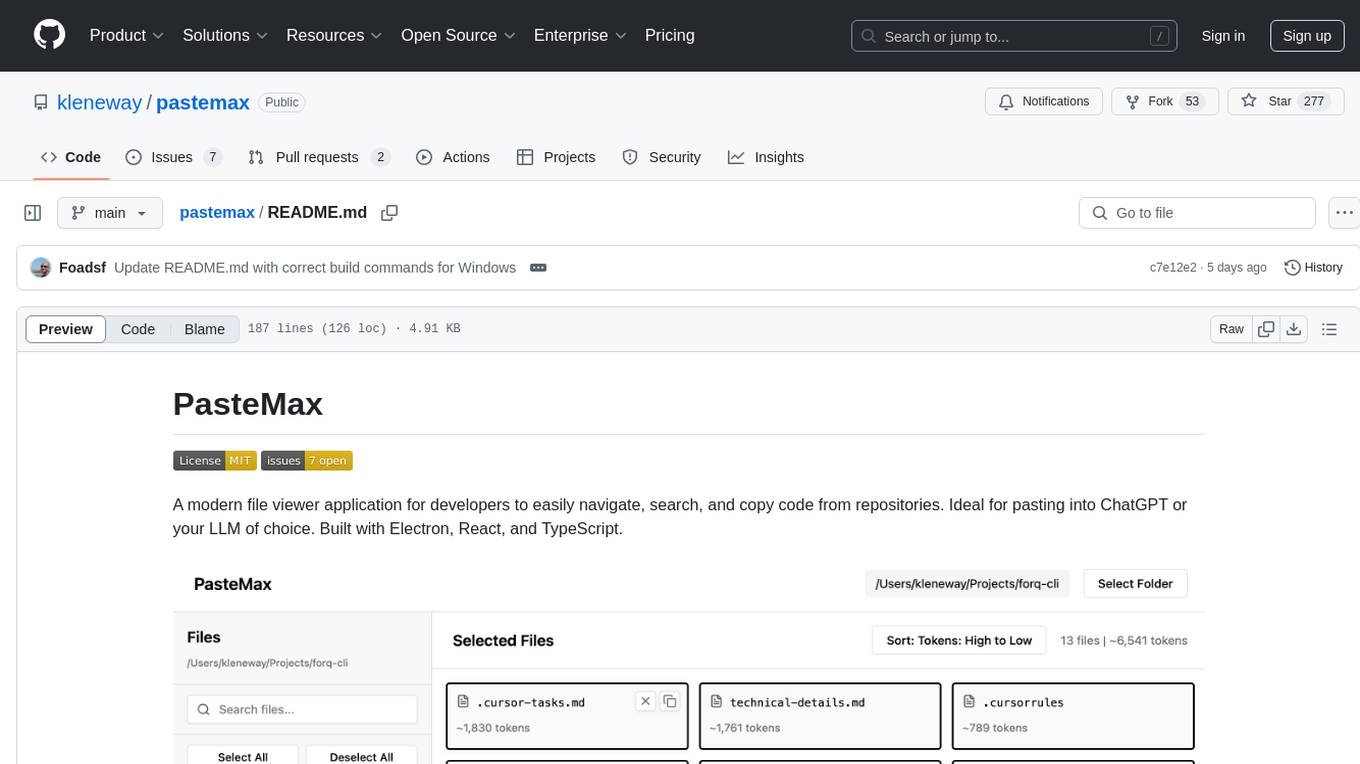

pastemax

PasteMax is a modern file viewer application designed for developers to easily navigate, search, and copy code from repositories. It provides features such as file tree navigation, token counting, search capabilities, selection management, sorting options, dark mode, binary file detection, and smart file exclusion. Built with Electron, React, and TypeScript, PasteMax is ideal for pasting code into ChatGPT or other language models. Users can download the application or build it from source, and customize file exclusions. Troubleshooting steps are provided for common issues, and contributions to the project are welcome under the MIT License.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

AirCasting

AirCasting is a platform for gathering, visualizing, and sharing environmental data. It aims to provide a central hub for environmental data, making it easier for people to access and use this information to make informed decisions about their environment.

codepair

CodePair is an open-source real-time collaborative markdown editor with AI intelligence, allowing users to collaboratively edit documents, share documents with external parties, and utilize AI intelligence within the editor. It is built using React, NestJS, and LangChain. The repository contains frontend and backend code, with detailed instructions for setting up and running each part. Users can choose between Frontend Development Only Mode or Full Stack Development Mode based on their needs. CodePair also integrates GitHub OAuth for Social Login feature. Contributors are welcome to submit patches and follow the contribution workflow.

UCAgent

UCAgent is an AI-powered automated UT verification agent for chip design. It automates chip verification workflow, supports functional and code coverage analysis, ensures consistency among documentation, code, and reports, and collaborates with mainstream Code Agents via MCP protocol. It offers three intelligent interaction modes and requires Python 3.11+, Linux/macOS OS, 4GB+ memory, and access to an AI model API. Users can clone the repository, install dependencies, configure qwen, and start verification. UCAgent supports various verification quality improvement options and basic operations through TUI shortcuts and stage color indicators. It also provides documentation build and preview using MkDocs, PDF manual build using Pandoc + XeLaTeX, and resources for further help and contribution.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

alog

ALog is an open-source project designed to facilitate the deployment of server-side code to Cloudflare. It provides a step-by-step guide on creating a Cloudflare worker, configuring environment variables, and updating API base URL. The project aims to simplify the process of deploying server-side code and interacting with OpenAI API. ALog is distributed under the GNU General Public License v2.0, allowing users to modify and distribute the app while adhering to App Store Review Guidelines.

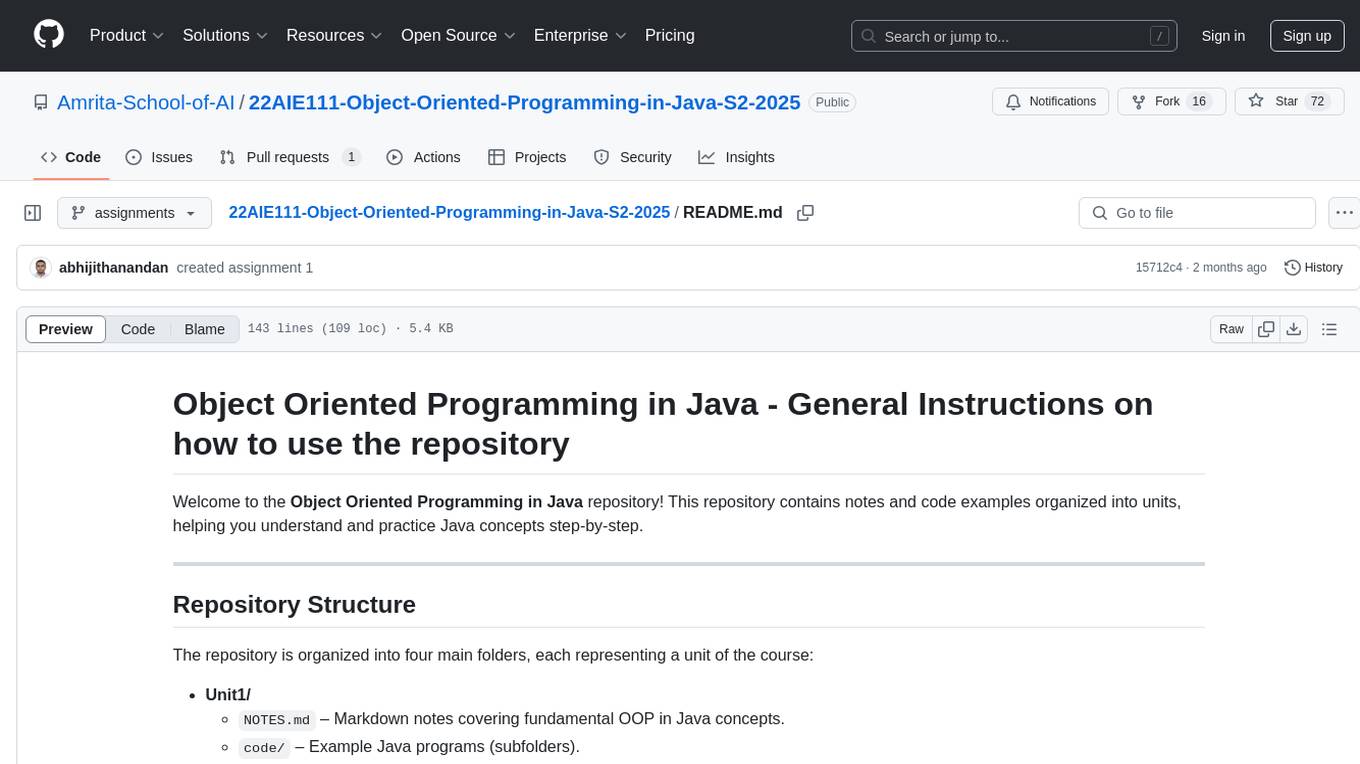

22AIE111-Object-Oriented-Programming-in-Java-S2-2025

The 'Object Oriented Programming in Java' repository provides notes and code examples organized into units to help users understand and practice Java concepts step-by-step. It includes theoretical notes, practical Java examples, setup files for Visual Studio Code and IntelliJ IDEA, instructions on setting up Java, running Java programs from the command line, and loading projects in VS Code or IntelliJ IDEA. Users can contribute by opening issues or submitting pull requests. The repository is intended for educational purposes, allowing forking and modification for personal study or classroom use.

openai-kotlin

OpenAI Kotlin API client is a Kotlin client for OpenAI's API with multiplatform and coroutines capabilities. It allows users to interact with OpenAI's API using Kotlin programming language. The client supports various features such as models, chat, images, embeddings, files, fine-tuning, moderations, audio, assistants, threads, messages, and runs. It also provides guides on getting started, chat & function call, file source guide, and assistants. Sample apps are available for reference, and troubleshooting guides are provided for common issues. The project is open-source and licensed under the MIT license, allowing contributions from the community.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

For similar tasks

bedrock-engineer

Bedrock Engineer is an AI assistant for software development tasks powered by Amazon Bedrock. It combines large language models with file system operations and web search functionality to support development processes. The autonomous AI agent provides interactive chat, file system operations, web search, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select agents, customize them, select tools, and customize tools. The tool also includes a website generator for React.js, Vue.js, Svelte.js, and Vanilla.js, with support for inline styling, Tailwind.css, and Material UI. Users can connect to design system data sources and generate AWS Step Functions ASL definitions.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers:

- Infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale

- Flexible integration with distributed computing and data processing frameworks

- Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

NVIDIA GPU exporter for Prometheus, using the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students