agenticSeek

Fully Local Manus AI. No APIs, no $200 monthly bills. Enjoy an autonomous agent that thinks, browses the web, and codes for the sole cost of electricity. 🔔 Official updates only via Twitter @Martin993886460 (beware of fake accounts)

Stars: 21840

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

README:

English | 中文 | 繁體中文 | Français | 日本語 | Português (Brasil) | Español

A 100% local alternative to Manus AI, this voice-enabled AI assistant autonomously browses the web, writes code, and plans tasks while keeping all data on your device. Tailored for local reasoning models, it runs entirely on your hardware, ensuring complete privacy and zero cloud dependency.

- 🔒 Fully Local & Private - Everything runs on your machine: no cloud, no data sharing. Your files, conversations, and searches stay private.

- 🌐 Smart Web Browsing - AgenticSeek can browse the internet by itself: search, read, extract info, and fill in web forms, all hands-free.

- 💻 Autonomous Coding Assistant - Need code? It can write, debug, and run programs in Python, C, Go, Java, and more, all without supervision.

- 🧠 Smart Agent Selection - You ask, and it automatically figures out the best agent for the job. Like having a team of experts ready to help.

- 📋 Plans & Executes Complex Tasks - From trip planning to complex projects, it can split big tasks into steps and get things done using multiple AI agents.

- 🎙️ Voice-Enabled - Clean, fast, futuristic voice and speech to text, allowing you to talk to it like it's your personal AI from a sci-fi movie. (In progress)

Can you search for the agenticSeek project, learn what skills are required, then open the CV_candidates.zip and then tell me which match best the project

https://github.com/user-attachments/assets/b8ca60e9-7b3b-4533-840e-08f9ac426316

Disclaimer: This demo, including all the files that appear (e.g: CV_candidates.zip), are entirely fictional. We are not a corporation, we seek open-source contributors not candidates.

🛠️ ⚠️ Active Work in Progress

🙏 This project started as a side project and has zero roadmap and zero funding. It has grown far beyond what I expected and ended up on GitHub Trending. Contributions, feedback, and patience are deeply appreciated.

Before you begin, ensure you have the following software installed:

- Git: For cloning the repository. Download Git

- Python 3.10.x: We strongly recommend using Python version 3.10.x. Using other versions might lead to dependency errors. Download Python 3.10 (pick a 3.10.x version).

- Docker Engine & Docker Compose: For running bundled services like SearxNG.

  - Install Docker Desktop (which includes Docker Compose V2): Windows | Mac | Linux

  - Alternatively, install Docker Engine and Docker Compose separately on Linux: Docker Engine | Docker Compose (ensure you install Compose V2, e.g., sudo apt-get install docker-compose-plugin).

git clone https://github.com/Fosowl/agenticSeek.git

cd agenticSeek

mv .env.example .env

SEARXNG_BASE_URL="http://127.0.0.1:8080"

REDIS_BASE_URL="redis://redis:6379/0"

WORK_DIR="/Users/mlg/Documents/workspace_for_ai"

OLLAMA_PORT="11434"

LM_STUDIO_PORT="1234"

CUSTOM_ADDITIONAL_LLM_PORT="11435"

OPENAI_API_KEY='optional'

DEEPSEEK_API_KEY='optional'

OPENROUTER_API_KEY='optional'

TOGETHER_API_KEY='optional'

GOOGLE_API_KEY='optional'

ANTHROPIC_API_KEY='optional'

Update the .env file with your own values as needed:

- SEARXNG_BASE_URL: Leave unchanged

- REDIS_BASE_URL: Leave unchanged

- WORK_DIR: Path to your working directory on your local machine. AgenticSeek will be able to read and interact with these files.

- OLLAMA_PORT: Port number for the Ollama service.

- LM_STUDIO_PORT: Port number for the LM Studio service.

- CUSTOM_ADDITIONAL_LLM_PORT: Port for any additional custom LLM service.

API keys are entirely optional for users who run LLMs locally, which is the primary purpose of this project. Leave them empty if you have sufficient hardware.
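As a quick sanity check on the .env values described above, the following Python sketch parses simple KEY="value" lines and confirms that WORK_DIR points at an existing directory. The helper names `parse_env` and `work_dir_exists` are hypothetical and not part of AgenticSeek; the parser only handles the plain assignment style shown in the example file.

```python
import os


def parse_env(text: str) -> dict:
    """Parse simple KEY=value lines, ignoring blanks and # comment lines."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding single or double quotes around the value.
        values[key.strip()] = value.strip().strip("\"'")
    return values


def work_dir_exists(values: dict) -> bool:
    """Return True if WORK_DIR is set and is an existing directory."""
    work_dir = values.get("WORK_DIR", "")
    return bool(work_dir) and os.path.isdir(work_dir)
```

A typical use would be `work_dir_exists(parse_env(open(".env").read()))` run from the project root before starting the services.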

Make sure Docker is installed and running on your system. You can start Docker using the following commands:

- On Linux/macOS:

  Open a terminal and run (on Linux distributions that use systemd):

  sudo systemctl start docker

  Or launch Docker Desktop from your applications menu if installed (the usual route on macOS, which has no systemctl).

- On Windows:

  Start Docker Desktop from the Start menu.

You can verify Docker is running by executing:

docker info

If you see information about your Docker installation, it is running correctly.
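The same check can be scripted. The sketch below runs `docker info` and treats a zero exit code as "Docker is running"; it assumes the `docker` CLI is on your PATH and that it exits with a non-zero code when the daemon is unreachable. The function names are illustrative only.

```python
import subprocess


def command_succeeds(command: list, timeout_s: float = 30.0) -> bool:
    """Run a command quietly and report whether it exited with code 0."""
    try:
        result = subprocess.run(
            command,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        # Command not found, not executable, or it hung past the timeout.
        return False
    return result.returncode == 0


def docker_is_running() -> bool:
    """Assumption: `docker info` fails when the daemon is not reachable."""
    return command_succeeds(["docker", "info"])
```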

See the table of Local Providers below for a summary.

Next step: Run AgenticSeek locally

See the Troubleshooting section if you are having issues.

If your hardware can't run LLMs locally, see Setup to run with an API.

For detailed config.ini explanations, see Config Section.

Hardware Requirements:

To run LLMs locally, you'll need sufficient hardware. At a minimum, a GPU capable of running Magistral, Qwen or Deepseek 14B is required. See the FAQ for detailed model/performance recommendations.

Set up your local provider

Start your local provider, for example with ollama:

ollama serve

See below for a list of supported local providers.

Update the config.ini

Change the config.ini file to set provider_name to a supported provider and provider_model to an LLM supported by your provider. We recommend a reasoning model such as Magistral or Deepseek.

See the FAQ at the end of the README for required hardware.

[MAIN]

is_local = True # Whether you are running locally or with a remote provider.

provider_name = ollama # or lm-studio, openai, etc.

provider_model = deepseek-r1:14b # choose a model that fits your hardware

provider_server_address = 127.0.0.1:11434

agent_name = Jarvis # name of your AI

recover_last_session = True # whether to recover the previous session

save_session = True # whether to remember the current session

speak = False # text to speech

listen = False # speech to text, only for CLI, experimental

jarvis_personality = False # whether to use a more "Jarvis"-like personality (experimental)

languages = en zh # the list of languages; text to speech defaults to the first language in the list

[BROWSER]

headless_browser = True # leave unchanged unless using CLI on host.

stealth_mode = True # use undetected selenium to reduce browser detection

Warning:

- The config.ini file format does not support comments. Do not copy and paste the example configuration directly, as comments will cause errors. Instead, manually modify the config.ini file with your desired settings, excluding any comments.

- Do NOT set provider_name to openai if using LM-studio for running LLMs. Set it to lm-studio.

- Some providers (e.g., lm-studio) require http:// in front of the IP. For example: http://127.0.0.1:1234
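Because the annotated example cannot be pasted as-is, one option is to strip the inline comments mechanically. The sketch below uses Python's standard `configparser` with `inline_comment_prefixes` to read the commented example and emit a comment-free version. This is only a convenience helper under the assumption that the file is ordinary INI; it is not how AgenticSeek itself loads its configuration, and the function name is hypothetical.

```python
import configparser
import io


def strip_inline_comments(ini_text: str) -> str:
    """Return the same INI content with trailing '# ...' comments removed."""
    parser = configparser.ConfigParser(inline_comment_prefixes=("#",))
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(ini_text)
    cleaned = io.StringIO()
    parser.write(cleaned)
    return cleaned.getvalue()
```

Feeding it the example above yields lines such as `is_local = True` with nothing after the value, which can then be saved as config.ini.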

List of local providers

| Provider | Local? | Description |

|---|---|---|

| ollama | Yes | Run LLMs locally with ease using ollama as a LLM provider |

| lm-studio | Yes | Run LLM locally with LM studio (set provider_name to lm-studio) |

| openai | Yes | Use openai compatible API (eg: llama.cpp server) |

Next step: Start services and run AgenticSeek

See the Troubleshooting section if you are having issues.

If your hardware can't run LLMs locally, see Setup to run with an API.

For detailed config.ini explanations, see Config Section.

This setup uses external, cloud-based LLM providers. You'll need an API key from your chosen service.

1. Choose an API Provider and Get an API Key:

Refer to the List of API Providers below. Visit their websites to sign up and obtain an API key.

2. Set Your API Key as an Environment Variable:

- Linux/macOS: Open your terminal and use the export command. It's best to add this to your shell's profile file (e.g., ~/.bashrc, ~/.zshrc) for persistence.

  export PROVIDER_API_KEY="your_api_key_here" # Replace PROVIDER_API_KEY with the specific variable name, e.g., OPENAI_API_KEY, GOOGLE_API_KEY

  Example for TogetherAI:

  export TOGETHER_API_KEY="xxxxxxxxxxxxxxxxxxxxxx"

- Windows:

  - Command Prompt (temporary, for the current session):

    set PROVIDER_API_KEY=your_api_key_here

  - PowerShell (temporary, for the current session):

    $env:PROVIDER_API_KEY="your_api_key_here"

  - Permanently: Search for "environment variables" in the Windows search bar, click "Edit the system environment variables," then click the "Environment Variables..." button. Add a new User variable with the appropriate name (e.g., OPENAI_API_KEY) and your key as the value.

(See FAQ: How do I set API keys? for more details).
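To confirm the variable is actually visible to a process before launching anything, a small check like the following can help. The mapping from provider_name to variable name is an assumption built from the key names in the example .env file (no variable is listed there for huggingface, so it is left out), and the function name is hypothetical.

```python
import os

# Assumed mapping: provider_name values paired with key names from the example .env.
PROVIDER_ENV_VARS = {
    "openai": "OPENAI_API_KEY",
    "google": "GOOGLE_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
    "togetherAI": "TOGETHER_API_KEY",
}


def api_key_is_set(provider_name: str, environ=None) -> bool:
    """Return True if the provider's API key variable holds a real value."""
    environ = os.environ if environ is None else environ
    if provider_name not in PROVIDER_ENV_VARS:
        raise ValueError(f"no known API key variable for {provider_name!r}")
    value = environ.get(PROVIDER_ENV_VARS[provider_name], "").strip()
    # The example .env uses the literal placeholder 'optional' for unset keys.
    return bool(value) and value != "optional"
```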

3. Update config.ini:

[MAIN]

is_local = False

provider_name = openai # Or google, deepseek, togetherAI, huggingface

provider_model = gpt-3.5-turbo # Or gemini-1.5-flash, deepseek-chat, mistralai/Mixtral-8x7B-Instruct-v0.1 etc.

provider_server_address = # Typically ignored or can be left blank when is_local = False for most APIs

# ... other settings ...

Warning: Make sure there are no trailing spaces in the config.ini values.

List of API Providers

| Provider | provider_name | Local? | Description | API Key Link (Examples) |
|---|---|---|---|---|
| OpenAI | openai | No | Use ChatGPT models via OpenAI's API. | platform.openai.com/signup |
| Google Gemini | google | No | Use Google Gemini models via Google AI Studio. | aistudio.google.com/keys |
| Deepseek | deepseek | No | Use Deepseek models via their API. | platform.deepseek.com |
| Hugging Face | huggingface | No | Use models from Hugging Face Inference API. | huggingface.co/settings/tokens |
| TogetherAI | togetherAI | No | Use various open-source models via TogetherAI API. | api.together.ai/settings/api-keys |

Note:

- We advise against using gpt-4o or other OpenAI models for complex web browsing and task planning, as current prompt optimizations are geared towards models like Deepseek.

- Coding/bash tasks might encounter issues with Gemini, as it may not strictly follow formatting prompts optimized for Deepseek.

- The provider_server_address in config.ini is generally not used when is_local = False, as the API endpoint is usually hardcoded in the respective provider's library.

Next step: Start services and run AgenticSeek

See the Known issues section if you are having issues

See the Config section for detailed config file explanation.

By default, AgenticSeek runs fully in Docker.

Option 1: Run in Docker, use web interface:

Start required services. This will start all services from the docker-compose.yml, including:

- searxng

- redis (required by searxng)

- frontend

- backend (only when started with full, as needed for the web interface)

./start_services.sh full # macOS

start start_services.cmd full # Windows

Warning: This step will download and load all Docker images, which may take up to 30 minutes. After starting the services, please wait until the backend service is fully running (you should see backend: "GET /health HTTP/1.1" 200 OK in the log) before sending any messages. The backend services might take 5 minutes to start on the first run.

Go to http://localhost:3000/ and you should see the web interface.

Troubleshooting service start: If these scripts fail, ensure Docker Engine is running and Docker Compose (V2, docker compose) is correctly installed. Check the output in the terminal for error messages. See FAQ: Help! I get an error when running AgenticSeek or its scripts.
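Rather than watching the log for the health line, you could poll the backend until it answers. The sketch below repeatedly requests `/health` and returns once it gets a 200. The `/health` path comes from the log line quoted above; the base URL (host and port of the backend) is not stated in this README, so it is left as a parameter you must supply, and the function name is hypothetical.

```python
import time
import urllib.error
import urllib.request


def wait_for_health(base_url: str, timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll <base_url>/health until it returns HTTP 200 or the timeout expires."""
    url = base_url.rstrip("/") + "/health"
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            pass  # backend not up yet, keep waiting
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

The default timeout of 300 seconds mirrors the five-minute first-run startup mentioned above.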

Option 2: CLI mode:

To run with the CLI interface, you have to install the packages on the host:

./install.sh

./install.bat # Windows

Start required services. This will start some services from the docker-compose.yml, including:

- searxng

- redis (required by searxng)

- frontend

./start_services.sh # macOS

start start_services.cmd # Windows

Use the CLI: uv run cli.py

Make sure the services are up and running with ./start_services.sh full and go to localhost:3000 for web interface.

You can also use speech to text by setting listen = True in the config. Only for CLI mode.

To exit, simply say/type goodbye.

Here are some usage examples:

Make a snake game in python!

Search the web for top cafes in Rennes, France, and save a list of three with their addresses in rennes_cafes.txt.

Write a Go program to calculate the factorial of a number, save it as factorial.go in your workspace

Search my summer_pictures folder for all JPG files, rename them with today’s date, and save a list of renamed files in photos_list.txt

Search online for popular sci-fi movies from 2024 and pick three to watch tonight. Save the list in movie_night.txt.

Search the web for the latest AI news articles from 2025, select three, and write a Python script to scrape their titles and summaries. Save the script as news_scraper.py and the summaries in ai_news.txt in /home/projects

Friday, search the web for a free stock price API, register with [email protected] then write a Python script to fetch using the API daily prices for Tesla, and save the results in stock_prices.csv

Note that form filling capabilities are still experimental and might fail.

After you type your query, AgenticSeek will allocate the best agent for the task.

Because this is an early prototype, the agent routing system might not always allocate the right agent based on your query.

Therefore, be very explicit about what you want and how the AI should proceed. For example, if you want it to conduct a web search, do not say:

Do you know some good countries for solo-travel?

Instead, ask:

Do a web search and find out which are the best countries for solo travel

If you have a powerful computer or a server but want to use AgenticSeek from your laptop, you can run the LLM on the remote machine using our custom LLM server.

On the "server" that will run the AI model, get the IP address:

ip a | grep "inet " | grep -v 127.0.0.1 | awk '{print $2}' | cut -d/ -f1 # local ip

curl https://ipinfo.io/ip # public ip

Note: For Windows or macOS, use ipconfig or ifconfig respectively to find the IP address.

Clone the repository and enter the llm_server/ folder.

git clone --depth 1 https://github.com/Fosowl/agenticSeek.git

cd agenticSeek/llm_server/

Install server-specific requirements:

pip3 install -r requirements.txt

Run the server script.

python3 app.py --provider ollama --port 3333

You can choose between ollama and llamacpp as the LLM service.

Now on your personal computer:

Change the config.ini file to set the provider_name to server and provider_model to deepseek-r1:xxb.

Set the provider_server_address to the ip address of the machine that will run the model.

[MAIN]

is_local = False

provider_name = server

provider_model = deepseek-r1:70b

provider_server_address = http://x.x.x.x:3333

Next step: Start services and run AgenticSeek
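Before starting AgenticSeek on your personal computer, it can be useful to confirm that the server's port is reachable from it. The sketch below opens a plain TCP connection to the host and port taken from a provider_server_address value. It only proves that something is listening there, not that it is the AgenticSeek LLM server; the function name is hypothetical.

```python
import socket
from urllib.parse import urlsplit


def server_is_reachable(address: str, timeout_s: float = 3.0) -> bool:
    """Try a TCP connection to an address of the form http://host:port."""
    parts = urlsplit(address)
    if parts.hostname is None or parts.port is None:
        raise ValueError("expected an address like http://host:port")
    try:
        with socket.create_connection((parts.hostname, parts.port), timeout=timeout_s):
            return True
    except OSError:
        # Refused, timed out, or host could not be resolved.
        return False
```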

Warning: speech to text only works in CLI mode at the moment.

Please note that speech to text currently only works in English.

The speech-to-text functionality is disabled by default. To enable it, set the listen option to True in the config.ini file:

listen = True

When enabled, the speech-to-text feature listens for a trigger keyword, which is the agent's name, before it begins processing your input. You can customize the agent's name by updating the agent_name value in the config.ini file:

agent_name = Friday

For optimal recognition, we recommend using a common English name like "John" or "Emma" as the agent name

Once you see the transcript start to appear, say the agent's name aloud to wake it up (e.g., "Friday").

Speak your query clearly.

End your request with a confirmation phrase to signal the system to proceed. Examples of confirmation phrases include:

"do it", "go ahead", "execute", "run", "start", "thanks", "would ya", "please", "okay?", "proceed", "continue", "go on", "do that", "go it", "do you understand?"

Example config:

[MAIN]

is_local = True

provider_name = ollama

provider_model = deepseek-r1:32b

provider_server_address = http://127.0.0.1:11434 # Example for Ollama; use http://127.0.0.1:1234 for LM-Studio

agent_name = Friday

recover_last_session = False

save_session = False

speak = False

listen = False

jarvis_personality = False

languages = en zh # List of languages for TTS and potentially routing.

[BROWSER]

headless_browser = False

stealth_mode = False

Explanation of config.ini Settings:

- [MAIN] Section:

  - is_local: True if using a local LLM provider (Ollama, LM-Studio, local OpenAI-compatible server) or the self-hosted server option. False if using a cloud-based API (OpenAI, Google, etc.).

  - provider_name: Specifies the LLM provider.

    - Local options: ollama, lm-studio, openai (for local OpenAI-compatible servers), server (for the self-hosted server setup).

    - API options: openai, google, deepseek, huggingface, togetherAI.

  - provider_model: The specific model name or ID for the chosen provider (e.g., deepseekcoder:6.7b for Ollama, gpt-3.5-turbo for OpenAI API, mistralai/Mixtral-8x7B-Instruct-v0.1 for TogetherAI).

  - provider_server_address: The address of your LLM provider.

    - For local providers: e.g., http://127.0.0.1:11434 for Ollama, http://127.0.0.1:1234 for LM-Studio.

    - For the server provider type: The address of your self-hosted LLM server (e.g., http://your_server_ip:3333).

    - For cloud APIs (is_local = False): This is often ignored or can be left blank, as the API endpoint is usually handled by the client library.

  - agent_name: Name of the AI assistant (e.g., Friday). Used as a trigger word for speech-to-text if enabled.

  - recover_last_session: True to attempt to restore the previous session's state, False to start fresh.

  - save_session: True to save the current session's state for potential recovery, False otherwise.

  - speak: True to enable text-to-speech voice output, False to disable.

  - listen: True to enable speech-to-text voice input (CLI mode only), False to disable.

  - work_dir: Crucial: The directory where AgenticSeek will read/write files. Ensure this path is valid and accessible on your system.

  - jarvis_personality: True to use a more "Jarvis-like" system prompt (experimental), False for the standard prompt.

  - languages: A comma-separated list of languages (e.g., en, zh, fr). Used for TTS voice selection (defaults to the first) and can assist the LLM router. Avoid too many or very similar languages for router efficiency.

- [BROWSER] Section:

  - headless_browser: True to run the automated browser without a visible window (recommended for web interface or non-interactive use). False to show the browser window (useful for CLI mode or debugging).

  - stealth_mode: True to enable measures to make browser automation harder to detect. May require manual installation of browser extensions like anticaptcha.
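The settings described above lend themselves to a quick pre-flight check. The following sketch reads a config.ini string with Python's `configparser` and reports a few obvious problems: an unknown provider_name and boolean options that are not written as True or False. The provider names come from this README; the assumption that booleans must be spelled exactly True/False is mine, based only on the examples shown, and the function is not part of AgenticSeek.

```python
import configparser

# Provider names listed in this README (local and API options combined).
KNOWN_PROVIDERS = {
    "ollama", "lm-studio", "openai", "server",
    "google", "deepseek", "huggingface", "togetherAI",
}
BOOLEAN_OPTIONS = ("is_local", "recover_last_session", "save_session", "speak", "listen")


def check_main_section(ini_text: str) -> list:
    """Return a list of human-readable problems found in the [MAIN] section."""
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    if "MAIN" not in parser:
        return ["missing [MAIN] section"]
    main = parser["MAIN"]
    problems = []
    provider = main.get("provider_name", "")
    if provider not in KNOWN_PROVIDERS:
        problems.append(f"unknown provider_name: {provider!r}")
    for option in BOOLEAN_OPTIONS:
        value = main.get(option)
        # Assumption: the examples only ever show the literals True and False.
        if value is not None and value not in ("True", "False"):
            problems.append(f"{option} should be True or False, got {value!r}")
    return problems
```

An empty list means none of these particular checks failed; it does not guarantee that the configuration is otherwise valid.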

This section summarizes the supported LLM provider types. Configure them in config.ini.

Local Providers (Run on Your Own Hardware):

| Provider Name in config.ini | is_local | Description | Setup Section |
|---|---|---|---|
| ollama | True | Use Ollama to serve local LLMs. | Setup for running LLM locally |
| lm-studio | True | Use LM-Studio to serve local LLMs. | Setup for running LLM locally |
| openai (for local server) | True | Connect to a local server that exposes an OpenAI-compatible API (e.g., llama.cpp). | Setup for running LLM locally |
| server | False | Connect to the AgenticSeek self-hosted LLM server running on another machine. | Setup to run the LLM on your own server |

API Providers (Cloud-Based):

| Provider Name in config.ini | is_local | Description | Setup Section |
|---|---|---|---|
| openai | False | Use OpenAI's official API (e.g., GPT-3.5, GPT-4). | Setup to run with an API |
| google | False | Use Google's Gemini models via API. | Setup to run with an API |
| deepseek | False | Use Deepseek's official API. | Setup to run with an API |
| huggingface | False | Use Hugging Face Inference API. | Setup to run with an API |
| togetherAI | False | Use TogetherAI's API for various open models. | Setup to run with an API |

If you encounter issues, this section provides guidance.

Error Example: SessionNotCreatedException: Message: session not created: This version of ChromeDriver only supports Chrome version XXX

ChromeDriver version incompatibility occurs when:

- Your installed ChromeDriver version doesn't match your Chrome browser version

- In Docker environments, undetected_chromedriver may download its own ChromeDriver version, bypassing the mounted binary

Open Google Chrome → Settings > About Chrome to find your version (e.g., "Version 134.0.6998.88")

For Chrome 115 and newer: Use the Chrome for Testing API

- Visit the Chrome for Testing availability dashboard

- Find your Chrome version or the closest available match

- Download the ChromeDriver for your OS (Linux64 for Docker environments)

For older Chrome versions: Use the legacy ChromeDriver downloads

Method A: Project Root Directory (Recommended for Docker)

# Place the downloaded chromedriver binary in your project root

cp path/to/downloaded/chromedriver ./chromedriver

chmod +x ./chromedriver # Make executable on Linux/macOS

Method B: System PATH

# Linux/macOS

sudo mv chromedriver /usr/local/bin/

sudo chmod +x /usr/local/bin/chromedriver

# Windows: Place chromedriver.exe in a folder that's in your PATH

# Test the ChromeDriver version

./chromedriver --version

# OR if in PATH:

chromedriver --version

- The Docker volume mount approach may not work with stealth mode (undetected_chromedriver)

- Solution: Place ChromeDriver in the project root directory as ./chromedriver

- The application will automatically detect and use this binary

- You should see: "Using ChromeDriver from project root: ./chromedriver" in the logs

- Still getting version mismatch?

  - Verify the ChromeDriver is executable: ls -la ./chromedriver

  - Check the ChromeDriver version: ./chromedriver --version

  - Ensure it matches your Chrome browser version

- Docker container issues?

  - Check backend logs: docker logs backend

  - Look for the message: "Using ChromeDriver from project root"

  - If not found, verify the file exists and is executable

- Chrome for Testing versions

  - Use the exact version match when possible

  - For version 134.0.6998.88, use ChromeDriver 134.0.6998.165 (closest available)

  - Major version numbers must match (134 = 134)

| Chrome Version | ChromeDriver Version | Status |

|---|---|---|

| 134.0.6998.x | 134.0.6998.165 | ✅ Works |

| 133.0.6943.x | 133.0.6943.141 | ✅ Works |

| 132.0.6834.x | 132.0.6834.159 | ✅ Works |

For the latest compatibility, check the Chrome for Testing dashboard
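The "major version numbers must match" rule above is easy to check in code. This sketch extracts the first four-part version number from any string, such as the text shown in About Chrome or the output of `chromedriver --version`, and compares the major components. The function names are illustrative only.

```python
import re

VERSION_PATTERN = re.compile(r"(\d+)\.\d+\.\d+\.\d+")


def major_version(text: str) -> int:
    """Return the major number of the first a.b.c.d version found in text."""
    match = VERSION_PATTERN.search(text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return int(match.group(1))


def majors_match(chrome_text: str, chromedriver_text: str) -> bool:
    """True when Chrome and ChromeDriver share the same major version."""
    return major_version(chrome_text) == major_version(chromedriver_text)
```

For example, `majors_match("Version 134.0.6998.88", "ChromeDriver 134.0.6998.165")` is true, while a ChromeDriver 113 build paired with Chrome 134 is not.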

Exception: Failed to initialize browser: Message: session not created: This version of ChromeDriver only supports Chrome version 113 Current browser version is 134.0.6998.89 with binary path

This happens if there is a mismatch between your browser and chromedriver versions.

You need to download a matching version from:

https://developer.chrome.com/docs/chromedriver/downloads

If you're using Chrome version 115 or newer go to:

https://googlechromelabs.github.io/chrome-for-testing/

And download the chromedriver version matching your OS.

If this section is incomplete please raise an issue.

Exception: Provider lm-studio failed: HTTP request failed: No connection adapters were found for '127.0.0.1:1234/v1/chat/completions' (Note: port may vary)

- Cause: The provider_server_address in config.ini for lm-studio (or other similar local OpenAI-compatible servers) is missing the http:// prefix or is pointing to the wrong port.

- Solution:

  - Ensure the address includes http://. LM-Studio typically defaults to http://127.0.0.1:1234.

  - Correct config.ini: provider_server_address = http://127.0.0.1:1234 (or your actual LM-Studio server port).
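The fix amounts to making sure the address carries a scheme. A minimal sketch of that normalization, using a hypothetical helper name, could look like this:

```python
def with_http_scheme(address: str) -> str:
    """Prefix http:// when the address has no http or https scheme."""
    address = address.strip()
    if address.startswith(("http://", "https://")):
        return address
    return "http://" + address
```

It leaves addresses that already start with http:// or https:// untouched, so it is safe to apply to a value you are unsure about before writing it to config.ini.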

raise ValueError("SearxNG base URL must be provided either as an argument or via the SEARXNG_BASE_URL environment variable.")

ValueError: SearxNG base URL must be provided either as an argument or via the SEARXNG_BASE_URL environment variable.

Q: What hardware do I need?

| Model Size | GPU | Comment |
|---|---|---|
| 7B | 8 GB VRAM | |
| 14B | 12 GB VRAM (e.g. RTX 3060) | ✅ Usable for simple tasks. May struggle with web browsing and planning tasks. |
| 32B | 24+ GB VRAM (e.g. RTX 4090) | 🚀 Success with most tasks, might still struggle with task planning |
| 70B+ | 48+ GB VRAM | 💪 Excellent. Recommended for advanced use cases. |
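Read as thresholds, the table maps available VRAM to the largest model size worth trying. The sketch below encodes exactly those four rows; treating each figure as a strict minimum is an assumption, since real memory needs also depend on quantization and context length, and the function name is hypothetical.

```python
# (minimum VRAM in GB, model size) pairs taken from the table, largest first.
VRAM_TIERS = [(48, "70B+"), (24, "32B"), (12, "14B"), (8, "7B")]


def largest_model_for(vram_gb: float):
    """Return the largest listed model size whose VRAM figure fits, or None."""
    for min_vram_gb, model_size in VRAM_TIERS:
        if vram_gb >= min_vram_gb:
            return model_size
    return None
```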

Q: I get an error. What do I do?

Ensure your local provider is running (ollama serve), your config.ini matches your provider, and dependencies are installed. If none of these help, feel free to raise an issue.

Q: Can it really run 100% locally?

Yes. With the Ollama, lm-studio, or server providers, all speech-to-text, LLM, and text-to-speech models run locally. Non-local options (OpenAI or other APIs) are optional.

Q: Why should I use AgenticSeek when I have Manus?

Unlike Manus, AgenticSeek prioritizes independence from external systems, giving you more control and privacy while avoiding API costs.

Q: Who is behind the project?

The project was created by me, along with two friends who serve as maintainers and contributors from the open-source community on GitHub. We’re just a group of passionate individuals, not a startup or affiliated with any organization.

Any AgenticSeek account on X other than my personal account (https://x.com/Martin993886460) is an impersonation.

We’re looking for developers to improve AgenticSeek! Check out open issues or discussion.

Want to level up AgenticSeek capabilities with features like flight search, trip planning, or snagging the best shopping deals? Consider crafting a custom tool with SerpApi to unlock more Jarvis-like capabilities. With SerpApi, you can turbocharge your agent for specialized tasks while staying in full control.

See Contributing.md to learn how to integrate custom tools!

Fosowl | Paris Time

antoineVIVIES | Taipei Time


Alternative AI tools for agenticSeek

Similar Open Source Tools


iloom-cli

iloom is a tool designed to streamline AI-assisted development by focusing on maintaining alignment between human developers and AI agents. It treats context as a first-class concern, persisting AI reasoning in issue comments rather than temporary chats. The tool allows users to collaborate with AI agents in an isolated environment, switch between complex features without losing context, document AI decisions publicly, and capture key insights and lessons learned from AI sessions. iloom is not just a tool for managing git worktrees, but a control plane for maintaining alignment between users and their AI assistants.

joinly

joinly.ai is a connector middleware designed to enable AI agents to actively participate in video calls, providing essential meeting tools for AI agents to perform tasks and interact in real time. It supports live interaction, conversational flow, cross-platform compatibility, bring-your-own-LLM, and choose-your-preferred-TTS/STT services. The tool is 100% open-source, self-hosted, and privacy-first, aiming to make meetings accessible to AI agents by joining and participating in video calls.

kwaak

Kwaak is a tool that allows users to run a team of autonomous AI agents locally from their own machine. It enables users to write code, improve test coverage, update documentation, and enhance code quality while focusing on building innovative projects. Kwaak is designed to run multiple agents in parallel, interact with codebases, answer questions about code, find examples, write and execute code, create pull requests, and more. It is free and open-source, allowing users to bring their own API keys or models via Ollama. Kwaak is part of the bosun.ai project, aiming to be a platform for autonomous code improvement.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, MacOS, and Termux for a responsive experience.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

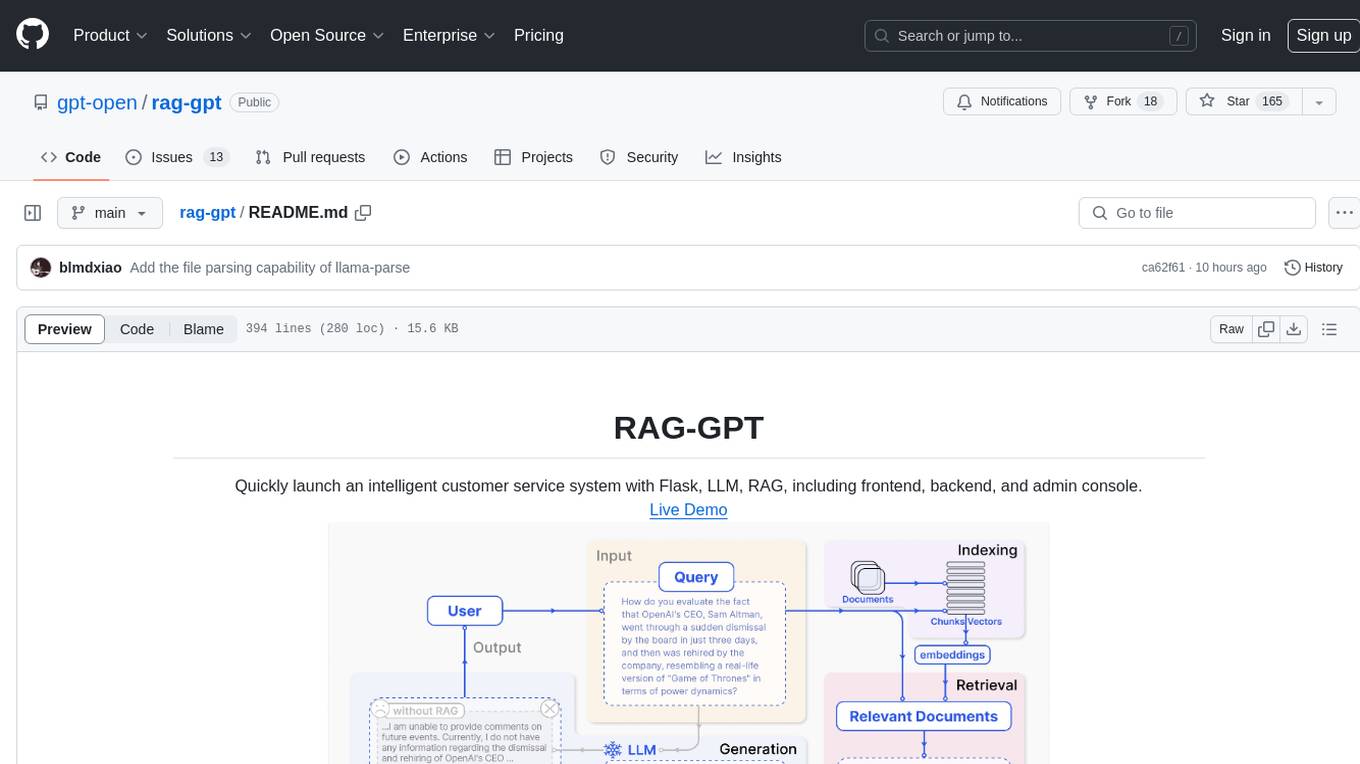

rag-gpt

RAG-GPT is a tool that allows users to quickly launch an intelligent customer service system with Flask, LLM, and RAG. It includes frontend, backend, and admin console components. The tool supports cloud-based and local LLMs, offers quick setup for conversational service robots, integrates diverse knowledge bases, provides flexible configuration options, and features an attractive user interface.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

AutoAgent

AutoAgent is a fully-automated and zero-code framework that enables users to create and deploy LLM agents through natural language alone. It is a top performer on the GAIA Benchmark, equipped with a native self-managing vector database, and allows for easy creation of tools, agents, and workflows without any coding. AutoAgent seamlessly integrates with a wide range of LLMs and supports both function-calling and ReAct interaction modes. It is designed to be dynamic, extensible, customized, and lightweight, serving as a personal AI assistant.

llm2sh

llm2sh is a command-line utility that leverages Large Language Models (LLMs) to translate plain-language requests into shell commands. It provides a convenient way to interact with your system using natural language. The tool supports multiple LLMs for command generation, offers a customizable configuration file, YOLO mode for running commands without confirmation, and is easily extensible with new LLMs and system prompts. Users can set up API keys for OpenAI, Claude, Groq, and Cerebras to use the tool effectively. llm2sh does not store user data or command history, and it does not record or send telemetry by itself, but the LLM APIs may collect and store requests and responses for their purposes.
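The confirmation step and YOLO mode described above can be pictured with a minimal Python sketch. This is not llm2sh's actual code: the function name `run_generated_commands` and its `yolo`, `confirm`, and `runner` parameters are hypothetical, chosen only to illustrate the idea of asking before running each generated command unless confirmation is switched off.

```python
import shlex
import subprocess


def run_generated_commands(commands, yolo=False, confirm=input, runner=subprocess.run):
    """Run each generated shell command, asking first unless yolo is True.

    Hypothetical illustration only; llm2sh's real behavior may differ.
    Returns the list of commands that were actually executed.
    """
    executed = []
    for command in commands:
        if not yolo:
            # Ask the user before running anything the model produced.
            answer = confirm(f"Run `{command}`? [y/N] ")
            if answer.strip().lower() != "y":
                continue  # Skip commands the user did not approve.
        # Split into argv so the command runs without an intermediate shell.
        runner(shlex.split(command), check=False)
        executed.append(command)
    return executed
```

Passing `confirm` and `runner` as parameters keeps the sketch testable without touching the real terminal or running real commands.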

For similar tasks

quantalogic

QuantaLogic is a ReAct framework for building advanced AI agents that seamlessly integrates large language models with a robust tool system. It aims to bridge the gap between advanced AI models and practical implementation in business processes by enabling agents to understand, reason about, and execute complex tasks through natural language interaction. The framework includes features such as ReAct Framework, Universal LLM Support, Secure Tool System, Real-time Monitoring, Memory Management, and Enterprise Ready components.

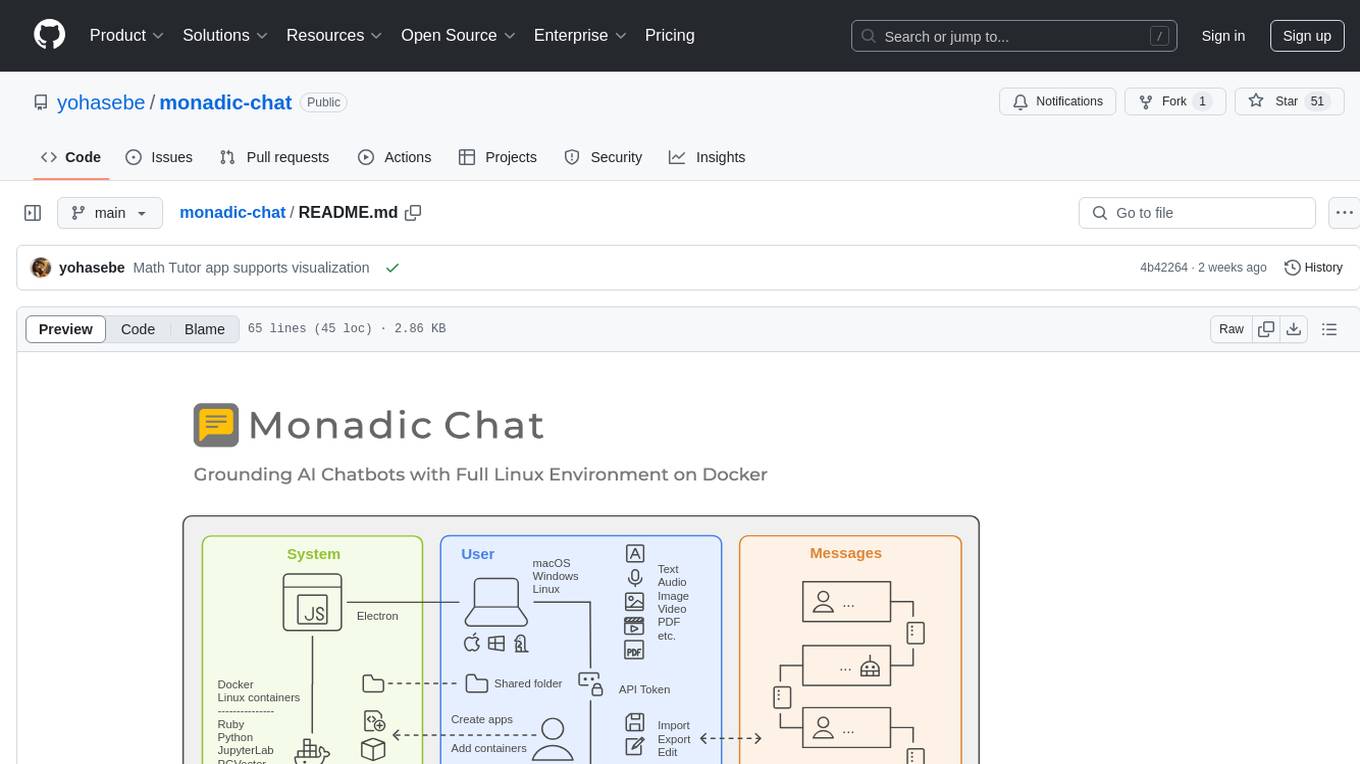

monadic-chat

Monadic Chat is a locally hosted web application designed to create and utilize intelligent chatbots. It provides a Linux environment on Docker to GPT and other LLMs, enabling the execution of advanced tasks that require external tools. The tool supports voice interaction, image and video recognition and generation, and AI-to-AI chat, making it useful for using AI and developing various applications. It is available for Mac, Windows, and Linux (Debian/Ubuntu) with easy-to-use installers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students
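As a rough illustration of the "usage limits per pricing tier" use case, here is a minimal Python sketch of a per-key cost gate. It does not reflect BricksLLM's actual API or implementation (BricksLLM itself is written in Go). The names `UsageGate`, `register`, and `allow`, and the tier limits, are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class KeyUsage:
    tier: str             # pricing tier the key belongs to
    spent_cents: int = 0  # accumulated cost so far, in cents


class UsageGate:
    """Hypothetical per-key cost limiter keyed by pricing tier."""

    def __init__(self, tier_limits_cents):
        # Example shape: {"free": 500, "pro": 5000} (made-up limits).
        self.tier_limits_cents = tier_limits_cents
        self.keys = {}

    def register(self, api_key, tier):
        self.keys[api_key] = KeyUsage(tier=tier)

    def allow(self, api_key, cost_cents):
        """Return True and record the cost if the request fits the tier limit."""
        usage = self.keys.get(api_key)
        if usage is None:
            return False  # Unknown keys are rejected.
        limit = self.tier_limits_cents[usage.tier]
        if usage.spent_cents + cost_cents > limit:
            return False  # Request would exceed the tier's cost limit.
        usage.spent_cents += cost_cents
        return True
```

Costs are tracked as integer cents to avoid floating-point rounding at the limit boundary. A real gateway would also need persistence, periodic resets, and concurrency control.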

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.