vue-markdown-render

A Vue 3 renderer built specifically for streaming, AI-generated Markdown: incremental Monaco updates, progressive Mermaid rendering, and fast KaTeX formulas, with real-time updates and no jitter, ready to use out of the box.


vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

README:

Fast, streaming-friendly Markdown rendering for Vue 3 — progressive Mermaid, streaming diff code blocks, and real-time previews optimized for large documents.

- Progressive Mermaid: diagrams render incrementally so users see results earlier.

- Streaming diff code blocks: show diffs as they arrive for instant feedback.

- Built for scale: optimized DOM updates and memory usage for very large documents.

Traditional Markdown renderers typically convert a finished Markdown string into a static HTML tree. This library is designed for streaming and interactive workflows and therefore provides capabilities you won't find in a classic renderer:

- Streaming-first rendering: render partial or incrementally-updated Markdown content without re-parsing the whole document each time. This enables live previews for AI outputs or editors that emit tokens progressively.

- Streaming-aware code blocks and "code-jump" UX: large code blocks are updated incrementally and the renderer can maintain cursor/selection context and fine-grained edits. This enables smooth code-editing experiences and programmatic "jump to" behaviors that traditional renderers do not support.

- Built-in diff/code-stream components: show diffs as they arrive (line-by-line or token-by-token) with minimal reflow. This is ideal for streaming AI edits or progressive code reviews — functionality that is not available in plain Markdown renderers.

- Progressive diagrams and editors: Mermaid diagrams and Monaco-based previews update progressively and render as soon as they become valid.

- Smooth, interactive UI: the renderer is optimized for minimal DOM churn and smooth interactions (e.g. streaming diffs, incremental diagram updates, and editor integrations), so the UI stays responsive even with very large documents.

These features make the library especially suited for real-time, AI-driven, and large-document scenarios where a conventional, static Markdown-to-HTML conversion would lag or break the user experience.
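In an AI chat UI, the Markdown usually arrives as an HTTP response body rather than as a finished string. The sketch below shows one way to wire that up. It is an assumption, not part of this library's API: the hypothetical helper `readTextStream` decodes a fetch-style `ReadableStream<Uint8Array>` into text chunks and hands each chunk to a callback.

```typescript
// Hypothetical helper (not exported by vue-renderer-markdown): decode a byte
// stream, e.g. `response.body` from fetch(), into text chunks as they arrive.
async function readTextStream(
  stream: ReadableStream<Uint8Array>,
  onChunk: (text: string) => void,
): Promise<string> {
  const reader = stream.getReader()
  // `stream: true` keeps multi-byte UTF-8 characters intact when they are
  // split across two network chunks.
  const decoder = new TextDecoder('utf-8')
  let full = ''
  try {
    while (true) {
      const { done, value } = await reader.read()
      if (done)
        break
      const text = decoder.decode(value, { stream: true })
      if (text) {
        full += text
        onChunk(text)
      }
    }
    // Flush any bytes still buffered inside the decoder.
    const tail = decoder.decode()
    if (tail) {
      full += tail
      onChunk(tail)
    }
  }
  finally {
    reader.releaseLock()
  }
  return full
}
```

In a component this could look like `readTextStream(res.body!, chunk => { content.value += chunk })`, where `content` is the `ref('')` bound to `<MarkdownRender :content="content" />`.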

Demo site — try large Markdown files and progressive diagrams to feel the difference.

- ⚡ Ultra-High Performance: Optimized for real-time streaming with minimal re-renders and efficient DOM updates

- 🌊 Streaming-First Design: Built specifically to handle incomplete, rapidly updating, and tokenized Markdown content

- 🧠 Monaco Streaming Updates: High-performance Monaco integration with smooth, incremental updates for large code blocks

- 🪄 Progressive Mermaid Rendering: Diagrams render as they become valid and update incrementally without jank

- 🧩 Custom Components: Seamlessly integrate your Vue components within Markdown content

- 📝 Complete Markdown Support: Tables, math formulas, emoji, checkboxes, code blocks, and more

- 🔄 Real-Time Updates: Handles partial content and incremental updates without breaking formatting

- 📦 TypeScript First: Full type definitions with intelligent auto-completion

- 🔌 Zero Configuration: Drop-in component that works with any Vue 3 project out of the box

pnpm add vue-renderer-markdown

# or

npm install vue-renderer-markdown

# or

yarn add vue-renderer-markdown

This package declares several peer dependencies. Some are required for core rendering and others are optional and enable extra features. Since the library now lazy-loads heavyweight optional peers at runtime, you can choose a minimal install for basic rendering or a full install to enable advanced features.

Minimal (core) peers — required for basic rendering:

pnpm (recommended):

pnpm add vue

Full install, which enables diagrams, the Monaco editor preview, and icon UI (recommended if you want all features):

pnpm add vue @iconify/vue katex mermaid vue-use-monaco

npm equivalent:

npm install vue @iconify/vue katex mermaid vue-use-monaco

yarn equivalent:

yarn add vue @iconify/vue katex mermaid vue-use-monaco

Notes:

- The exact peer version ranges are declared in this package's `package.json`; consult it if you need specific versions.
- Optional peers and the features they enable:
  - `mermaid`: enables Mermaid diagram rendering (progressive rendering is supported). If absent, code blocks tagged `mermaid` fall back to showing the source text without runtime errors.
  - `vue-use-monaco`: enables Monaco Editor based previews/editing and advanced streaming updates for large code blocks. If absent, the component degrades to plain-text rendering and no editor is created.
  - `@iconify/vue`: enables iconography in the UI (toolbar buttons). If absent, simple fallback elements are shown in place of icons so the UI remains functional.
- `vue-i18n` is optional: the library provides a synchronous fallback translator. If your app uses `vue-i18n`, the library automatically wires into it at runtime when available.
- If you're installing this library inside a monorepo or using pnpm workspaces, install peers at the workspace root so they are available to consuming packages.
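The notes above describe optional peers that are loaded lazily and degrade gracefully when unavailable. If your own code wants the same pattern for a heavy module, a minimal sketch could look like the following. `lazyOptional` is a hypothetical helper, not the library's implementation: it loads the module on first use, shares one in-flight promise between callers, and resolves to `null` on failure so the caller can fall back to plain rendering.

```typescript
// Hypothetical helper (not exported by vue-renderer-markdown): load an optional,
// heavyweight module on first use and remember the result.
function lazyOptional<T>(load: () => Promise<T>): () => Promise<T | null> {
  let pending: Promise<T | null> | undefined
  return () => {
    if (!pending) {
      // Promise.resolve().then(load) also captures a synchronous throw from load().
      pending = Promise.resolve()
        .then(load)
        // Degrade instead of failing; note the failure is cached and not retried.
        .catch(() => null)
    }
    return pending
  }
}
```

For example, `const getMermaid = lazyOptional(() => import('mermaid'))` defers loading Mermaid until the first diagram appears (assuming the package is installed so your bundler can resolve it); if `await getMermaid()` returns `null`, show the diagram source as text.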

Streaming Markdown content from AI models, live editors, or real-time updates presents unique challenges:

- Incomplete syntax blocks can break traditional parsers

- Rapid content changes cause excessive re-renders and performance issues

- Cursor positioning becomes complex with dynamic content

- Partial tokens need graceful handling without visual glitches

vue-renderer-markdown addresses these challenges with a streaming-optimized architecture that preserves formatting and stays responsive, even in demanding real-time scenarios.
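To make the "incomplete syntax blocks" problem concrete: halfway through a stream, a fenced code block has an opening fence but no closing one yet, so a naive parser may swallow the rest of the document. One common mitigation, shown here as a hypothetical helper rather than this library's internal implementation, is to detect an unterminated fence and close it temporarily before parsing.

```typescript
// Hypothetical helper illustrating one mitigation for partial Markdown:
// if the text ends inside an open ``` or ~~~ fence, append a matching
// closing fence so a parser sees a complete block.
function closeOpenFence(markdown: string): string {
  let open: string | null = null // fence run that opened the current block
  for (const line of markdown.split('\n')) {
    // Fences may be indented by up to three spaces.
    const m = /^ {0,3}(`{3,}|~{3,})/.exec(line)
    if (!m)
      continue
    const fence = m[1]
    if (open === null) {
      open = fence
    }
    else if (fence[0] === open[0] && fence.length >= open.length && line.trim() === fence) {
      // A closing fence uses the same character, is at least as long,
      // and carries no info string.
      open = null
    }
  }
  if (open === null)
    return markdown
  const sep = markdown.endsWith('\n') ? '' : '\n'
  return `${markdown}${sep}${open}\n`
}
```

Closing the fence only on a copy passed to the parser keeps the streamed source untouched, so the next chunk still appends to the real text.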

Perfect for AI model responses, live content updates, or any scenario requiring real-time Markdown rendering:

<script setup lang="ts">
import { onBeforeUnmount, ref } from 'vue'
import MarkdownRender from 'vue-renderer-markdown'

const content = ref('')
const fullContent = `# Streaming Content\n\nThis text appears character by character...`

// Simulate streaming content: append one character every 50 ms
let index = 0
const interval = setInterval(() => {
  if (index < fullContent.length) {
    content.value += fullContent[index]
    index++
  }
  else {
    clearInterval(interval)
  }
}, 50)

// Stop the timer if the component unmounts before streaming finishes
onBeforeUnmount(() => clearInterval(interval))
</script>

<template>
  <MarkdownRender :content="content" />
</template>

For static or pre-generated Markdown content:

<script setup lang="ts">
import MarkdownRender from 'vue-renderer-markdown'

const markdownContent = `
# Hello Vue Markdown

This is **markdown** rendered as HTML!

- Supports lists
- [x] Checkboxes
- :smile: Emoji
`
</script>

<template>
  <MarkdownRender :content="markdownContent" />
</template>

The streaming-optimized engine delivers:

- Incremental Code Block Parsing: only changed content is processed, not the entire code block

- Efficient DOM Updates: minimal re-renders

- Monaco Streaming: fast, incremental updates for large code snippets without blocking the UI

- Progressive Mermaid: diagrams render as soon as the syntax is valid and refine as content streams in

- Memory Optimized: resource cleanup prevents memory leaks during long streaming sessions

- Animation Frame Based: updates are scheduled on animation frames for smooth rendering

- Graceful Degradation: Handles malformed or incomplete Markdown without breaking
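The "Animation Frame Based" and "Efficient DOM Updates" points come down to not reacting to every single token. The sketch below is a generic illustration of that idea, not this library's internal code: a hypothetical `createFrameBatcher` buffers incoming chunks and flushes them at most once per scheduled frame. The scheduler is passed in so the same code can use `requestAnimationFrame` in the browser and a manual queue in tests.

```typescript
// Hypothetical utility (not part of vue-renderer-markdown): coalesce many small
// streamed chunks into at most one update per frame.
type Scheduler = (cb: () => void) => void

function createFrameBatcher(flush: (text: string) => void, schedule: Scheduler) {
  let pending = ''
  let scheduled = false

  function run(): void {
    scheduled = false
    if (!pending)
      return
    const text = pending
    pending = ''
    flush(text)
  }

  return {
    push(chunk: string): void {
      pending += chunk
      if (!scheduled) {
        // Only one flush is queued, however many chunks arrive before it runs.
        scheduled = true
        schedule(run)
      }
    },
    // Flush synchronously, e.g. when the stream ends.
    flushNow: run,
  }
}
```

In a browser you might create it as `createFrameBatcher(text => { content.value += text }, cb => requestAnimationFrame(() => cb()))`, so a burst of tokens triggers one reactive update per frame instead of one per token.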

| Name | Type | Required | Description |
|---|---|---|---|
| `content` | `string` | ✓ (unless `nodes` is provided) | Markdown string to render |
| `nodes` | `BaseNode[]` | | Parsed Markdown AST nodes (alternative to `content`) |
| `customComponents` | `Record<string, any>` | | Custom Vue components for rendering |
| `renderCodeBlocksAsPre` | `boolean` | | When `true`, render all `code_block` nodes as simple `<pre><code>` blocks (uses `PreCodeNode`) instead of the full `CodeBlockNode`. Useful for lightweight, dependency-free rendering of multi-line text such as AI "thinking" outputs. Defaults to `false`. |

Either `content` or `nodes` must be provided.

Note: in Vue templates, camelCase prop names are conventionally written in kebab-case (and must be in in-DOM templates). For example, `customComponents` becomes `custom-components` in templates.
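If you wrap `MarkdownRender` in your own component, the "either `content` or `nodes`" rule can be encoded in TypeScript so mistakes surface at compile time. This is a sketch under assumptions: `BaseNode` is declared locally as a stand-in (the library's real type likely has more fields), this version additionally rejects passing both props, and `resolveSource` is a hypothetical helper.

```typescript
// Stand-in for the library's BaseNode type; the real type likely has more fields.
interface BaseNode {
  type: string
  raw: string
}

// Exactly one source: `content` or `nodes`. Passing neither or both is a type error.
type MarkdownSource =
  | { content: string, nodes?: undefined }
  | { nodes: BaseNode[], content?: undefined }

// Hypothetical helper: tells a wrapper component which input it received.
function resolveSource(src: MarkdownSource): 'content' | 'nodes' {
  // An empty string still counts as `content`, so compare against undefined.
  return src.content !== undefined ? 'content' : 'nodes'
}
```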

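`renderCodeBlocksAsPre`

- Type: `boolean`
- Default: `false`

Description:

- When set to `true`, every parsed `code_block` node is rendered as a simple `<pre><code>` (`PreCodeNode` in the library) instead of the full `CodeBlockNode` component with its optional dependencies (such as Monaco and mermaid).
- Use case: when you need to display code, or multi-line text and reasoning steps returned by an AI model (for example "thinking"/reasoning output), as raw, lightweight preformatted text, enable this option to preserve formatting without depending on optional peer libraries.

Notes:

- When `renderCodeBlocksAsPre: true`, properties passed to `CodeBlockNode` such as `codeBlockDarkTheme`, `codeBlockMonacoOptions`, `themes`, `minWidth`, and `maxWidth` have no effect (because `CodeBlockNode` is no longer used).
- If you need full code block features (syntax highlighting, folding, copy button, etc.), keep the default `false` and install the optional dependencies (`mermaid`, `vue-use-monaco`, `@iconify/vue`).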
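Conceptually, the lightweight `renderCodeBlocksAsPre` path only has to escape the code and wrap it in `<pre><code>`. The function below is a hypothetical illustration of that idea, not the library's `PreCodeNode` implementation; the `language-*` class follows a common highlighter convention and is an assumption here.

```typescript
// Minimal stand-in for a parsed code_block node (field names follow the
// node shape used in this README's CodeBlockNode examples).
interface CodeBlockLike {
  type: 'code_block'
  language: string
  code: string
}

// Escape characters that are significant in HTML text and attribute values.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
}

// Hypothetical renderer: plain <pre><code>, no highlighting, no optional peers.
function renderAsPre(node: CodeBlockLike): string {
  const lang = node.language.trim()
  const cls = lang ? ` class="language-${escapeHtml(lang)}"` : ''
  return `<pre><code${cls}>${escapeHtml(node.code)}</code></pre>`
}
```

Escaping `&` first matters: otherwise the `&` introduced by `&lt;` would be escaped a second time.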

Example (Vue usage):

<script setup lang="ts">
import MarkdownRender from 'vue-renderer-markdown'

const markdown = `Here is an AI thinking output:\n\n\`\`\`text\nStep 1...\nStep 2...\n\`\`\`\n`
</script>

<template>
  <MarkdownRender :content="markdown" :render-code-blocks-as-pre="true" />
</template>

- Custom Components: Pass your own components via the `customComponents` prop to render custom tags inside Markdown.
- TypeScript: Full type support. Import types as needed:

import type { MyMarkdownProps } from 'vue-renderer-markdown/dist/types'

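`ImageNode` now supports two named slots so you can customize how the loading and error states are rendered:

- Slot name: `placeholder`
- Slot name: `error`

Both slots receive the same set of slot props (all reactive):

- `node`: the original ImageNode object (`{ type: 'image', src, alt, title, raw }`)
- `displaySrc`: the `src` currently used for rendering (if the fallback was triggered, this is `fallbackSrc`)
- `imageLoaded`: `boolean`, whether the image has finished loading
- `hasError`: `boolean`, whether the component is in the error state
- `fallbackSrc`: `string`, the `fallbackSrc` passed to the component (if any)
- `lazy`: `boolean`, whether lazy loading is used
- `isSvg`: `boolean`, whether the current `displaySrc` is an SVG

Default behavior: if no slot is provided, the component shows a built-in CSS spinner (placeholder) or a simple error placeholder (error).

Example: custom loading and error slots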

<ImageNode :node="node" :fallback-src="fallback" :lazy="true">
  <template #placeholder="{ node, displaySrc, imageLoaded }">
    <div class="p-4 bg-gray-50 rounded shadow-sm flex items-center justify-center">
      <div class="animate-pulse w-full h-24 bg-gray-200"></div>
      <span class="sr-only">Loading image</span>
    </div>
  </template>
  <template #error="{ node, displaySrc }">
    <div class="p-4 text-sm text-red-600 flex items-center gap-2">
      <strong>Unable to load image</strong>
      <span class="truncate">{{ displaySrc }}</span>
    </div>
  </template>
</ImageNode>

Tip: to avoid layout shift when switching from the placeholder to the image, give your custom placeholder roughly the same width and height as the image (or use `aspect-ratio` / `min-height`). That way, once the image loads, only a composited opacity/transform animation runs and no layout/reflow is triggered.
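The slot props above describe a small state machine: start with the original `src`, switch to `fallbackSrc` when loading fails, and report whether the current source is an SVG. The following is a hypothetical model of that bookkeeping (handy, for example, when unit-testing custom slots). The exact rules the library uses, such as how it detects SVGs and whether it retries, are assumptions.

```typescript
// Hypothetical model of the ImageNode slot state; not the library's code.
interface ImageSlotState {
  displaySrc: string
  imageLoaded: boolean
  hasError: boolean
  isSvg: boolean
}

// Assumption: SVG is detected from a data URL MIME type or a .svg extension.
function looksLikeSvg(src: string): boolean {
  if (src.startsWith('data:image/svg+xml'))
    return true
  const path = src.split(/[?#]/)[0] // ignore query string and fragment
  return path.toLowerCase().endsWith('.svg')
}

function initialState(src: string): ImageSlotState {
  return { displaySrc: src, imageLoaded: false, hasError: false, isSvg: looksLikeSvg(src) }
}

// On the <img> `error` event: try the fallback once, then enter the error state.
function onImageError(state: ImageSlotState, fallbackSrc?: string): ImageSlotState {
  if (fallbackSrc && state.displaySrc !== fallbackSrc)
    return { ...state, displaySrc: fallbackSrc, imageLoaded: false, isSvg: looksLikeSvg(fallbackSrc) }
  return { ...state, hasError: true }
}

// On the <img> `load` event.
function onImageLoad(state: ImageSlotState): ImageSlotState {
  return { ...state, imageLoaded: true, hasError: false }
}
```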

Override how code language icons are resolved via the plugin option getLanguageIcon.

This keeps your usage unchanged and centralizes customization.

Plugin usage:

import { createApp } from 'vue'
import { VueRendererMarkdown } from 'vue-renderer-markdown'
import App from './App.vue'

const app = createApp(App)

// Example 1: replace the shell/Shellscript icon with a remote SVG URL
const SHELL_ICON_URL = 'https://raw.githubusercontent.com/catppuccin/vscode-icons/refs/heads/main/icons/mocha/bash.svg'

app.use(VueRendererMarkdown, {
  getLanguageIcon(lang) {
    const l = (lang || '').toLowerCase()
    if (
      l === 'shell'
      || l === 'shellscript'
      || l === 'sh'
      || l === 'bash'
      || l === 'zsh'
      || l === 'powershell'
      || l === 'ps1'
      || l === 'bat'
      || l === 'batch'
    ) {
      return `<img src="${SHELL_ICON_URL}" alt="${l}" />`
    }
    // return empty/undefined to use the library default icon
    return undefined
  },
})

Local file example (import inline SVG):

import { createApp } from 'vue'
import { VueRendererMarkdown } from 'vue-renderer-markdown'
import App from './App.vue'
import JsIcon from './assets/javascript.svg?raw'

const app = createApp(App)

app.use(VueRendererMarkdown, {
  getLanguageIcon(lang) {
    const l = (lang || '').toLowerCase()
    if (l === 'javascript' || l === 'js')
      return JsIcon // inline SVG string
    return undefined
  },
})

Notes:

- The resolver returns a raw HTML/SVG string. Returning `undefined` or an empty value defers to the built-in mapping.
- Works across all code blocks without changing component usage.
- Alignment: icons render inside a fixed-size slot; both `<svg>` and `<img>` align consistently, no inline styles needed.
- For local files, import with `?raw` and ensure the file is a pure SVG (not an HTML page). Download the raw SVG instead of GitHub's HTML preview.
- The resolver receives the raw language string (e.g., `tsx:src/components/file.tsx`). The built-in fallback mapping uses only the base segment before `:`.
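Because the resolver receives the raw language string, matching on the base segment makes a custom resolver behave predictably with inputs like `tsx:src/components/file.tsx`. The sketch below reworks the shell example from earlier with that normalization. The alias set is illustrative, and the function is meant to be passed as `getLanguageIcon` in `app.use(VueRendererMarkdown, { getLanguageIcon })`.

```typescript
// Illustrative alias set; extend it to match the languages you care about.
const SHELL_ALIASES = new Set([
  'shell', 'shellscript', 'sh', 'bash', 'zsh', 'powershell', 'ps1', 'bat', 'batch',
])

const SHELL_ICON_URL = 'https://raw.githubusercontent.com/catppuccin/vscode-icons/refs/heads/main/icons/mocha/bash.svg'

// Reduce "tsx:src/components/file.tsx" or " Bash " to a lowercase base id.
function baseLanguage(lang: string | undefined): string {
  return (lang ?? '').split(':')[0].trim().toLowerCase()
}

// Returns raw HTML for known shells, or undefined to keep the built-in icon.
function getLanguageIcon(lang: string): string | undefined {
  const base = baseLanguage(lang)
  if (SHELL_ALIASES.has(base))
    return `<img src="${SHELL_ICON_URL}" alt="${base}" />`
  return undefined
}
```

A `Set` lookup also replaces the long `||` chain, so adding an alias is a one-word change.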

If you are using Monaco Editor in your project, configure vite-plugin-monaco-editor-esm to handle global injection of workers. Our renderer is optimized for streaming updates to large code blocks—when content changes incrementally, only the necessary parts are updated for smooth, responsive rendering. On Windows, you may encounter issues during the build process. To resolve this, configure customDistPath to ensure successful packaging.

Note: If you only need to render a Monaco editor (for editing or previewing code) and don't require this library's full Markdown rendering pipeline, you can integrate Monaco directly using `vue-use-monaco` for a lighter, more direct integration.

pnpm add -D vite-plugin-monaco-editor-esm monaco-editor

npm equivalent:

npm install vite-plugin-monaco-editor-esm monaco-editor --save-dev

yarn equivalent:

yarn add -D vite-plugin-monaco-editor-esm monaco-editor

import path from 'node:path'
import monacoEditorPlugin from 'vite-plugin-monaco-editor-esm'

export default {
  plugins: [
    monacoEditorPlugin({
      languageWorkers: [
        'editorWorkerService',
        'typescript',
        'css',
        'html',
        'json',
      ],
      // Emit worker files into a fixed folder inside the build output (helps on Windows)
      customDistPath(root, buildOutDir, base) {
        return path.resolve(buildOutDir, 'monacoeditorwork')
      },
    }),
  ],
}

The code block component now exposes a flexible header API so consumers can:

- Toggle the entire header on/off.

- Show or hide built-in toolbar buttons (copy, expand, preview, font-size controls).

- Fully replace the left or right header content via named slots.

This makes it easy to adapt the header to your application's UX or to inject custom controls.

Props (new)

| Name | Type | Default | Description |
|---|---|---|---|
| `showHeader` | `boolean` | `true` | Toggle rendering of the header bar. |
| `showCopyButton` | `boolean` | `true` | Show the built-in copy button. |
| `showExpandButton` | `boolean` | `true` | Show the built-in expand/collapse button. |
| `showPreviewButton` | `boolean` | `true` | Show the built-in preview button (when preview is available). |
| `showFontSizeButtons` | `boolean` | `true` | Show the built-in font-size controls (also requires `enableFontSizeControl`). |

Slots

- `header-left`: Replace the left side of the header (language icon + label by default).
- `header-right`: Replace the right side of the header (built-in action buttons by default).

Example: hide the header

<CodeBlockNode
  :node="{ type: 'code_block', language: 'javascript', code: 'console.log(1)', raw: 'console.log(1)' }"
  :showHeader="false"
  :loading="false"
/>

Example: custom header via slots

<CodeBlockNode
  :node="{ type: 'code_block', language: 'html', code: '<div>Hello</div>', raw: '<div>Hello</div>' }"
  :loading="false"
  :showCopyButton="false"
>
  <template #header-left>
    <div class="flex items-center space-x-2">
      <!-- custom icon or label -->
      <span class="text-sm font-medium">My HTML</span>
    </div>
  </template>
  <template #header-right>
    <div class="flex items-center space-x-2">
      <button class="px-2 py-1 bg-blue-600 text-white rounded">Run</button>
      <button class="px-2 py-1 bg-gray-200 dark:bg-gray-700 rounded">Inspect</button>
    </div>
  </template>
</CodeBlockNode>

Notes

- The new `showFontSizeButtons` prop provides an additional toggle; the existing `enableFontSizeControl` prop still controls whether the font-size feature is enabled at all. Keep both in mind when hiding or showing font controls.
- Existing behavior is unchanged by default: all new props default to `true` to preserve the original UI.
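The way these header options combine can be summarized as a small predicate. This is an assumption drawn from the descriptions above, not code from the library: it supposes that hiding the header or filling `#header-right` removes all built-in buttons, and that font-size buttons need both `enableFontSizeControl` and `showFontSizeButtons`. `visibleBuiltInButtons` and `hasHeaderRightSlot` are hypothetical names.

```typescript
// Hypothetical summary of the documented header options (prop names match
// CodeBlockNode; the combination logic is an assumption).
interface HeaderOptions {
  showHeader?: boolean
  showCopyButton?: boolean
  showExpandButton?: boolean
  showPreviewButton?: boolean
  showFontSizeButtons?: boolean
  enableFontSizeControl: boolean
  hasHeaderRightSlot?: boolean // true when the consumer fills #header-right
}

type BuiltInButton = 'copy' | 'expand' | 'preview' | 'fontSize'

// Which built-in buttons would appear on the right side of the header.
function visibleBuiltInButtons(o: HeaderOptions, previewAvailable: boolean): BuiltInButton[] {
  // The show* props default to true, as documented in the table.
  if (!(o.showHeader ?? true) || o.hasHeaderRightSlot)
    return []
  const out: BuiltInButton[] = []
  if (o.showCopyButton ?? true)
    out.push('copy')
  if (o.showExpandButton ?? true)
    out.push('expand')
  if ((o.showPreviewButton ?? true) && previewAvailable)
    out.push('preview')
  if (o.enableFontSizeControl && (o.showFontSizeButtons ?? true))
    out.push('fontSize')
  return out
}
```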

The Vite configuration shown earlier (`vite-plugin-monaco-editor-esm` with `customDistPath`) ensures that Monaco Editor workers are correctly packaged and accessible in your project.

If your project uses Webpack instead of Vite, you can use the official `monaco-editor-webpack-plugin` to bundle and inject Monaco's worker files. Here is a simple example (Webpack 5):

Install:

pnpm add -D monaco-editor monaco-editor-webpack-plugin

# or

npm install --save-dev monaco-editor monaco-editor-webpack-plugin

Example webpack.config.js:

const path = require('node:path')
const MonacoEditorPlugin = require('monaco-editor-webpack-plugin')

module.exports = {
  // ...your other configuration...
  output: {
    // Make sure worker files are placed correctly; adjust publicPath/filename as needed
    publicPath: '/',
  },
  plugins: [
    new MonacoEditorPlugin({
      // List only the languages/features you need to reduce bundle size
      languages: ['javascript', 'typescript', 'css', 'html', 'json'],
      // Optional: adjust the output filename pattern for worker files
      filename: 'static/[name].worker.js',
    }),
  ],
}

Notes:

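- For projects that use `monaco-editor`, make sure the corresponding workers are handled by the plugin; otherwise the browser will try to load missing worker files at runtime (similar to Vite's dep optimizer errors).
- If, after building, you see errors like "file does not exist" (for example, some workers cannot be found in the dependency optimization directory), make sure the workers are bundled to an accessible location via the plugin or your build output.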

Mermaid diagrams can be streamed progressively. The diagram renders as soon as the syntax becomes valid and refines as more content arrives.
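One way to get this behavior, shown as a hypothetical helper rather than the library's internals, is to pull the diagram source out of the partially streamed text and keep only complete lines. That way a half-written edge such as `A[Sta` is never handed to the Mermaid parser, and a re-render is needed only when the trimmed source changes. Mermaid may still reject a draft that is incomplete at the graph level, so a real renderer would presumably keep the last successful diagram on screen when parsing fails. The component example that follows streams a diagram in the same way.

```typescript
interface MermaidDraft {
  source: string // complete lines of the diagram received so far
  closed: boolean // true once the closing fence has arrived
}

// Hypothetical helper: find the first ```mermaid block in streamed Markdown.
function extractMermaidDraft(markdown: string): MermaidDraft | null {
  const open = /^```mermaid[ \t]*\n/m.exec(markdown)
  if (!open)
    return null
  const body = markdown.slice(open.index + open[0].length)
  const close = /^```[ \t]*$/m.exec(body)
  if (close)
    return { source: body.slice(0, close.index), closed: true }
  // Still streaming: drop the trailing partial line, if any.
  const lastNewline = body.lastIndexOf('\n')
  return { source: lastNewline === -1 ? '' : body.slice(0, lastNewline + 1), closed: false }
}
```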

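<script setup lang="ts">
import { onBeforeUnmount, ref } from 'vue'
import MarkdownRender from 'vue-renderer-markdown'

const content = ref('')
const steps = [
  '```mermaid\n',
  'graph TD\n',
  'A[Start]-->B{Is valid?}\n',
  'B -- Yes --> C[Render]\n',
  'B -- No --> D[Wait]\n',
  '```\n',
]

// Append one chunk every 120 ms to simulate a streamed diagram
let i = 0
const id = setInterval(() => {
  content.value += steps[i] || ''
  i++
  if (i >= steps.length)
    clearInterval(id)
}, 120)

// Stop the timer if the component unmounts before streaming finishes
onBeforeUnmount(() => clearInterval(id))
</script>

<template>
  <MarkdownRender :content="content" />
  <!-- Diagram progressively appears as content streams in -->
  <!-- Mermaid must be installed as a peer dependency -->
</template>

If your project uses a Tailwind component library such as shadcn, you may run into style layering or override issues. We recommend importing the library's styles in a controlled order using Tailwind layers in your global stylesheet. For example, in your main stylesheet (such as src/styles/index.css or src/main.css):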

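/* main.css or index.css */
@tailwind base;
@tailwind components;
@tailwind utilities;

/* Recommended: put the library styles in the components layer so your project's components can override them */
@layer components {
  @import 'vue-renderer-markdown/index.css';
}

/* Alternative: to emit the library styles ahead of Tailwind's components layer, put them in the base layer:
@layer base {
  @import 'vue-renderer-markdown/index.css';
}
*/

Choose components (the common choice) or base (when you want the library styles to act as low-level defaults) depending on the override priority you need. After adjusting, run your build or dev command (for example, pnpm dev) to verify that the styles are applied in the expected order.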

This project is built with the help of these awesome libraries:

- vue-use-monaco — Monaco Editor integration for Vue

- shiki — Syntax highlighter powered by TextMate grammars and VS Code themes

Thanks to the authors and contributors of these projects!


Alternative AI tools for vue-markdown-render

Similar Open Source Tools


repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

mini.ai

mini.ai is a plugin for Neovim that extends and creates textobjects for enhanced text manipulation. It supports customization via Lua patterns or functions, dot-repeat, different search methods, consecutive application, and has builtins for brackets, quotes, function calls, arguments, tags, user prompts, and punctuation/whitespace characters.

text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

metis

Metis is an open-source, AI-driven tool for deep security code review, created by Arm's Product Security Team. It helps engineers detect subtle vulnerabilities, improve secure coding practices, and reduce review fatigue. Metis uses LLMs for semantic understanding and reasoning, RAG for context-aware reviews, and supports multiple languages and vector store backends. It provides a plugin-friendly and extensible architecture, named after the Greek goddess of wisdom, Metis. The tool is designed for large, complex, or legacy codebases where traditional tooling falls short.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

dexter

Dexter is a set of mature LLM tools used in production at Dexa, with a focus on real-world RAG (Retrieval Augmented Generation). It is a production-quality RAG that is extremely fast and minimal, and handles caching, throttling, and batching for ingesting large datasets. It also supports optional hybrid search with SPLADE embeddings, and is a minimal TS package with full typing that uses `fetch` everywhere and supports Node.js 18+, Deno, Cloudflare Workers, Vercel edge functions, etc. Dexter has full docs and includes examples for basic usage, caching, Redis caching, AI function, AI runner, and chatbot.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

iloom-cli

iloom is a tool designed to streamline AI-assisted development by focusing on maintaining alignment between human developers and AI agents. It treats context as a first-class concern, persisting AI reasoning in issue comments rather than temporary chats. The tool allows users to collaborate with AI agents in an isolated environment, switch between complex features without losing context, document AI decisions publicly, and capture key insights and lessons learned from AI sessions. iloom is not just a tool for managing git worktrees, but a control plane for maintaining alignment between users and their AI assistants.

mini.ai

This plugin extends and creates `a`/`i` textobjects in Neovim. It enhances some builtin textobjects (like `a(`, `a)`, `a'`, and more), creates new ones (like `a*`, `a

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

Unity-MCP

Unity-MCP is an AI helper designed for game developers using Unity. It facilitates a wide range of tasks in Unity Editor and running games on any platform by connecting to AI via TCP connection. The tool allows users to chat with AI like with a human, supports local and remote usage, and offers various default AI tools. Users can provide detailed information for classes, fields, properties, and methods using the 'Description' attribute in C# code. Unity-MCP enables instant C# code compilation and execution, provides access to assets and C# scripts, and offers tools for proper issue understanding and project data manipulation. It also allows users to find and call methods in the codebase, work with Unity API, and access human-readable descriptions of code elements.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

For similar tasks

vue-markdown-render

vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

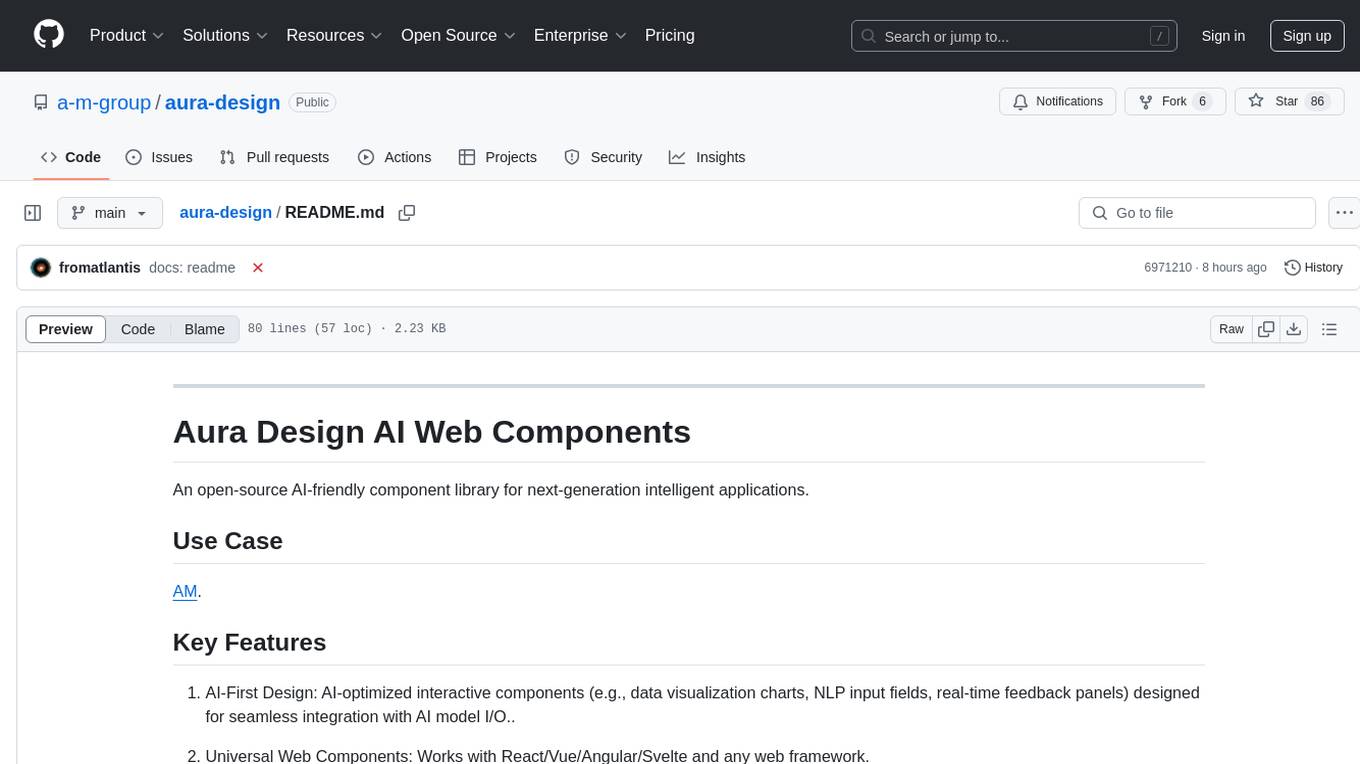

aura-design

Aura Design is an open-source AI-friendly component library for next-generation intelligent applications. It offers AI-optimized interactive components designed for seamless integration with AI model I/O. The library works with various web frameworks and allows easy customization to suit specific requirements. With a modern design and smart markdown renderer, Aura Design enhances the overall user experience.

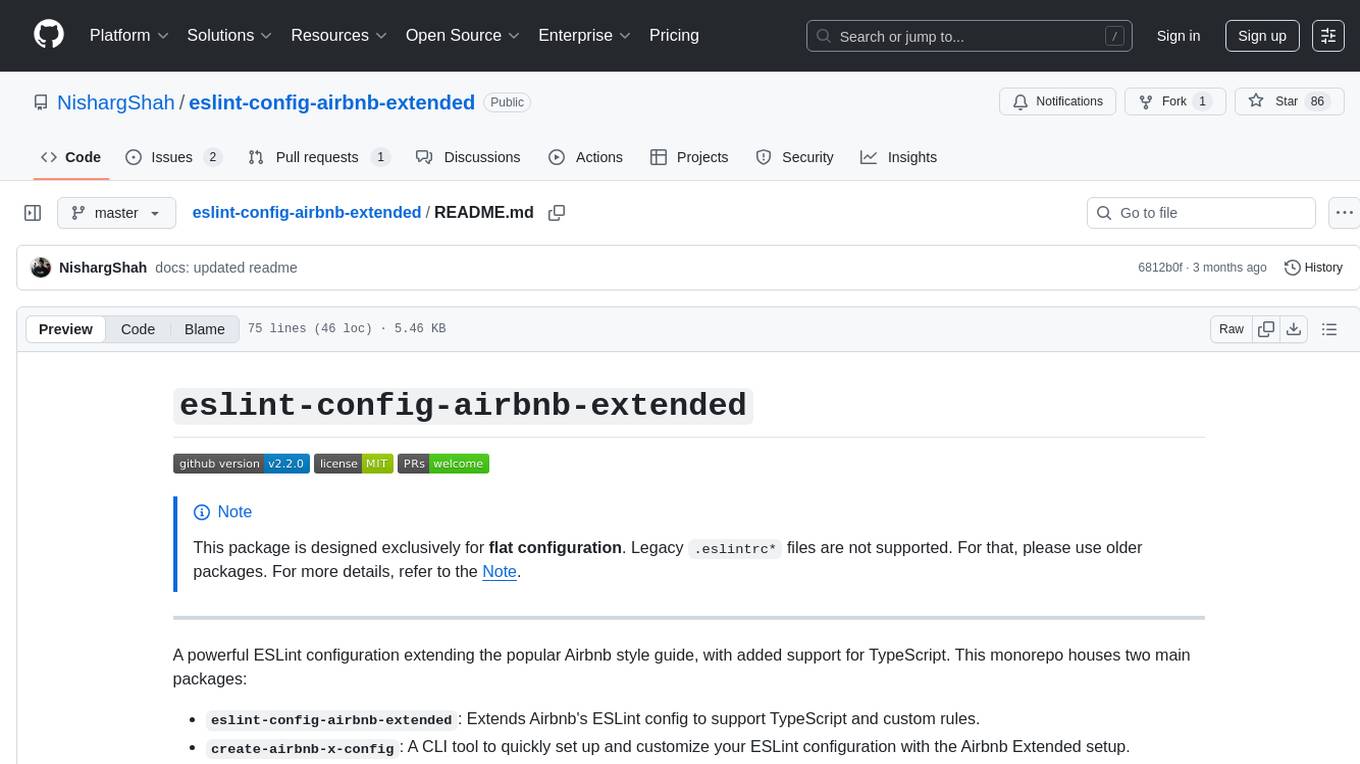

eslint-config-airbnb-extended

A powerful ESLint configuration extending the popular Airbnb style guide, with added support for TypeScript. It provides a one-to-one replacement for old Airbnb ESLint configs, TypeScript support, customizable settings, pre-configured rules, and a CLI utility for quick setup. The package 'eslint-config-airbnb-extended' fully supports TypeScript to enforce consistent coding standards across JavaScript and TypeScript files. The 'create-airbnb-x-config' tool automates the setup of the ESLint configuration package and ensures correct ESLint rules application across JavaScript and TypeScript code.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.