nano-graphrag

A simple, easy-to-hack GraphRAG implementation

Stars: 2643

nano-graphrag is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. Excluding tests and prompts, it is about 1100 lines of code, small yet portable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, it does not implement covariates, and its global search differs from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

README:

😭 GraphRAG is good and powerful, but the official implementation is difficult/painful to read or hack.

😊 This project provides a smaller, faster, cleaner GraphRAG, while retaining the core functionality (see benchmark and issues).

🎁 Excluding tests and prompts, nano-graphrag is about 1100 lines of code.

👌 Small yet portable (faiss, neo4j, ollama...), asynchronous and fully typed.

If you're looking for a multi-user RAG solution for long-term user memory, have a look at this project: memobase :)

Install from source (recommended)

# clone this repo first
cd nano-graphrag
pip install -e .

Install from PyPI

pip install nano-graphrag

[!TIP] Please set your OpenAI API key in the environment: export OPENAI_API_KEY="sk-...".

[!TIP] If you're using the Azure OpenAI API, refer to .env.example to set up your Azure OpenAI credentials. Then pass GraphRAG(...,using_azure_openai=True,...) to enable it.

[!TIP] If you're using the Amazon Bedrock API, please ensure your credentials are properly set through commands like aws configure. Then enable it by configuring like this: GraphRAG(...,using_amazon_bedrock=True, best_model_id="us.anthropic.claude-3-sonnet-20240229-v1:0", cheap_model_id="us.anthropic.claude-3-haiku-20240307-v1:0",...). Refer to an example script.

[!TIP] If you don't have any key, check out this example that uses transformers and ollama. If you would like to use another LLM or embedding model, check Advances.

Download a copy of A Christmas Carol by Charles Dickens:

curl https://raw.githubusercontent.com/gusye1234/nano-graphrag/main/tests/mock_data.txt > ./book.txt

Use the Python snippet below:

from nano_graphrag import GraphRAG, QueryParam

graph_func = GraphRAG(working_dir="./dickens")

with open("./book.txt") as f:
    graph_func.insert(f.read())

# Perform global graphrag search

print(graph_func.query("What are the top themes in this story?"))

# Perform local graphrag search (I think it is the better and more scalable one)
print(graph_func.query("What are the top themes in this story?", param=QueryParam(mode="local")))

Next time you initialize a GraphRAG from the same working_dir, it will reload all the contexts automatically.

You can also insert a batch of texts at once:

graph_func.insert(["TEXT1", "TEXT2",...])

Incremental Insert

nano-graphrag supports incremental insert; no duplicated computation or data will be added:

with open("./book.txt") as f:
    book = f.read()
    half_len = len(book) // 2
    graph_func.insert(book[:half_len])
    graph_func.insert(book[half_len:])

nano-graphrag uses the md5 hash of the content as the key, so there is no duplicated chunk. However, each time you insert, the communities of the graph will be re-computed and the community reports will be re-generated.
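To illustrate the idea of content-hash keys, here is a minimal sketch using only the standard library. It is not nano-graphrag's actual code: the `content_key` and `insert_new` helpers, the `doc-` prefix, and the plain dict used as a store are all assumptions made for this example.

```python
import hashlib


def content_key(text: str, prefix: str = "doc-") -> str:
    """Derive a stable key from the content itself (the prefix is a made-up convention)."""
    return prefix + hashlib.md5(text.encode("utf-8")).hexdigest()


def insert_new(store: dict[str, str], texts: list[str]) -> list[str]:
    """Add only unseen texts to `store`; return the keys that were newly added."""
    added = []
    for text in texts:
        key = content_key(text.strip())
        if key not in store:  # same content -> same key -> skipped
            store[key] = text
            added.append(key)
    return added


store: dict[str, str] = {}
first = insert_new(store, ["chunk A", "chunk B"])
second = insert_new(store, ["chunk B", "chunk C"])  # "chunk B" is already stored
print(len(first), len(second), len(store))
```

Because the key depends only on the text, inserting the same chunk twice is a no-op for storage, which is why only the graph-level steps need to be redone.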

Naive RAG

nano-graphrag supports naive RAG insert and query as well:

graph_func = GraphRAG(working_dir="./dickens", enable_naive_rag=True)

...

# Query

print(graph_func.query(
    "What are the top themes in this story?",
    param=QueryParam(mode="naive")
))

For each method NAME(...), there is a corresponding async method aNAME(...):

await graph_func.ainsert(...)
await graph_func.aquery(...)
...

GraphRAG and QueryParam are dataclasses in Python. Use help(GraphRAG) and help(QueryParam) to see all available parameters! Or check out the Advances section to see some options.

Below are the components you can use:

| Type | What | Where |
|---|---|---|
| LLM | OpenAI | Built-in |
| | Amazon Bedrock | Built-in |
| | DeepSeek | examples |
| | ollama | examples |
| Embedding | OpenAI | Built-in |
| | Amazon Bedrock | Built-in |
| | Sentence-transformers | examples |
| Vector DataBase | nano-vectordb | Built-in |
| | hnswlib | Built-in, examples |
| | milvus-lite | examples |
| | faiss | examples |
| Graph Storage | networkx | Built-in |
| | neo4j | Built-in (doc) |
| Visualization | graphml | examples |
| Chunking | by token size | Built-in |
| | by text splitter | Built-in |

- Built-in means we have that implementation inside nano-graphrag. examples means we have that implementation inside a tutorial under the examples folder.
- Check examples/benchmarks to see a few comparisons between components.
- Contributions of more components are always welcome.

Some setup options

- GraphRAG(...,always_create_working_dir=False,...) will skip the dir-creating step. Use it if you switch all your components to non-file storages.

Only query the related context

graph_func.query returns the final answer without streaming.

If you would like to integrate nano-graphrag into your project, you can use param=QueryParam(..., only_need_context=True,...), which will only return the context retrieved from the graph, something like:

# Local mode

-----Reports-----

```csv

id, content

0, # FOX News and Key Figures in Media and Politics...

1, ...

```

...

# Global mode

----Analyst 3----

Importance Score: 100

Donald J. Trump: Frequently discussed in relation to his political activities...

...

You can integrate that context into your customized prompt.
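As a rough sketch of that integration, the snippet below wraps a retrieved context string in a custom template. The `build_prompt` helper and the template wording are hypothetical, and the context here is a hard-coded stand-in rather than real output from `graph_func.query`.

```python
def build_prompt(context: str, question: str) -> str:
    """Wrap retrieved context and the user question in a custom template."""
    return (
        "Answer the question using only the context below.\n\n"
        f"---Context---\n{context}\n\n"
        f"---Question---\n{question}\n"
    )


# In real use, `context` would come from something like:
#   graph_func.query(question, param=QueryParam(mode="local", only_need_context=True))
context = "-----Reports-----\nid, content\n0, ..."
prompt = build_prompt(context, "What are the top themes in this story?")
print(prompt)
```

The resulting string can then be sent to whichever LLM client your project already uses.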

Prompt

nano-graphrag uses prompts from the nano_graphrag.prompt.PROMPTS dict object. You can play with it and replace any prompt inside.

Some important prompts:

- PROMPTS["entity_extraction"] is used to extract the entities and relations from a text chunk.
- PROMPTS["community_report"] is used to organize and summarize the graph cluster's description.
- PROMPTS["local_rag_response"] is the system prompt template of the local search generation.
- PROMPTS["global_reduce_rag_response"] is the system prompt template of the global search generation.
- PROMPTS["fail_response"] is the fallback response when nothing is related to the user query.

Customize Chunking

nano-graphrag allows you to customize your own chunking method; check out the example.
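For intuition about what a chunking method does, here is a minimal sliding-window sketch. It is only an illustration: the `chunk_by_size` name and its parameters are made up, whitespace-separated words stand in for real tokens, and the signature that nano-graphrag expects for `chunk_func` is different, so check the linked example before plugging anything in.

```python
def chunk_by_size(tokens: list[str], max_size: int = 4, overlap: int = 1) -> list[list[str]]:
    """Split `tokens` into windows of at most `max_size`, sharing `overlap` tokens."""
    if overlap >= max_size:
        raise ValueError("overlap must be smaller than max_size")
    step = max_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_size])
        if start + max_size >= len(tokens):
            break  # the last window already reaches the end
    return chunks


# Words stand in for tokens here; a real tokenizer would produce token ids.
tokens = "marley was dead to begin with there is no doubt".split()
chunks = chunk_by_size(tokens, max_size=4, overlap=1)
for chunk in chunks:
    print(chunk)
```

The overlap keeps a little shared text between neighbouring chunks, so an entity mentioned at a boundary is less likely to be cut off from its surrounding sentence.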

Switch to the built-in text splitter chunking method:

from nano_graphrag._op import chunking_by_seperators
GraphRAG(...,chunk_func=chunking_by_seperators,...)

LLM Function

In nano-graphrag, we require two types of LLM: a great one and a cheap one. The former is used to plan and respond; the latter is used to summarize. By default, the great one is gpt-4o and the cheap one is gpt-4o-mini.

You can implement your own LLM function (refer to _llm.gpt_4o_complete):

from nano_graphrag.base import BaseKVStorage

async def my_llm_complete(
    prompt, system_prompt=None, history_messages=[], **kwargs
) -> str:
    # pop cache KV database if any
    hashing_kv: BaseKVStorage = kwargs.pop("hashing_kv", None)
    # the rest kwargs are for calling LLM, for example, `max_tokens=xxx`
    ...
    # YOUR LLM calling
    response = await call_your_LLM(messages, **kwargs)
    return response

Replace the default one with:

# Adjust the max token size or the max async requests if needed

GraphRAG(best_model_func=my_llm_complete, best_model_max_token_size=..., best_model_max_async=...)

GraphRAG(cheap_model_func=my_llm_complete, cheap_model_max_token_size=..., cheap_model_max_async=...)

You can refer to this example that uses deepseek-chat as the LLM model.

You can refer to this example that uses ollama as the LLM model.

nano-graphrag will use best_model_func to output JSON with params "response_format": {"type": "json_object"}. However, some open-source models may produce unstable JSON.

nano-graphrag introduces a post-process interface for you to convert the response to JSON. This func's signature is below:

def YOUR_STRING_TO_JSON_FUNC(response: str) -> dict:
    "Convert the string response to JSON"
    ...

And pass your own func by GraphRAG(...convert_response_to_json_func=YOUR_STRING_TO_JSON_FUNC,...).

For example, you can refer to json_repair to repair the JSON string returned by the LLM.
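If you prefer not to add a dependency, a converter matching the signature above could look like the sketch below. It uses only the standard library and is far less forgiving than json_repair; the `extract_json` name and the fallback strategy (take the outermost `{...}` span) are choices made for this example, not part of nano-graphrag.

```python
import json
import re


def extract_json(response: str) -> dict:
    """Best-effort conversion of an LLM reply to a dict (stdlib only)."""
    try:
        parsed = json.loads(response)
    except json.JSONDecodeError:
        # Fall back to the outermost {...} span, e.g. when the model
        # wraps the JSON object in extra prose.
        match = re.search(r"\{.*\}", response, re.DOTALL)
        if match is None:
            raise ValueError("no JSON object found in response")
        parsed = json.loads(match.group(0))
    if not isinstance(parsed, dict):
        raise ValueError("expected a JSON object")
    return parsed


reply = 'Sure! Here is the result: {"points": [{"score": 80}]} Hope that helps.'
print(extract_json(reply))
```

Such a function would be passed as `convert_response_to_json_func=extract_json`. It still raises if the extracted span is not valid JSON, so a repair library remains the more robust option for weaker models.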

Embedding Function

You can replace the default embedding functions with any _utils.EmbeddingFunc instance.

For example, the default one is using OpenAI embedding API:

import numpy as np
from openai import AsyncOpenAI
from nano_graphrag._utils import wrap_embedding_func_with_attrs

@wrap_embedding_func_with_attrs(embedding_dim=1536, max_token_size=8192)
async def openai_embedding(texts: list[str]) -> np.ndarray:
    openai_async_client = AsyncOpenAI()
    response = await openai_async_client.embeddings.create(
        model="text-embedding-3-small", input=texts, encoding_format="float"
    )
    return np.array([dp.embedding for dp in response.data])

Replace the default embedding function with:

GraphRAG(embedding_func=your_embed_func, embedding_batch_num=..., embedding_func_max_async=...)

You can refer to an example that uses sentence-transformers to locally compute embeddings.

Storage Component

You can replace all storage-related components with your own implementations. nano-graphrag mainly uses three kinds of storage:

base.BaseKVStorage for storing key-json pairs of data

- By default we use disk file storage as the backend.
- GraphRAG(.., key_string_value_json_storage_cls=YOURS,...)

base.BaseVectorStorage for indexing embeddings

- By default we use nano-vectordb as the backend.
- We also have a built-in hnswlib storage; check out this example.
- Check out this example that implements milvus-lite as the backend (not available on Windows).
- GraphRAG(.., vector_db_storage_cls=YOURS,...)

base.BaseGraphStorage for storing the knowledge graph

- By default we use networkx as the backend.
- We have a built-in Neo4jStorage for graphs; check out this tutorial.
- GraphRAG(.., graph_storage_cls=YOURS,...)

You can refer to nano_graphrag.base to see detailed interfaces for each component.
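To give a feel for what a non-file key-json backend might involve, here is a standalone, dict-backed sketch. It deliberately does not subclass base.BaseKVStorage, and the method names (`all_keys`, `get_by_id`, `filter_keys`, `upsert`) are assumptions for illustration; the authoritative interface is whatever nano_graphrag.base defines.

```python
import asyncio
from typing import Optional


class InMemoryKV:
    """Hypothetical dict-backed key-JSON store (illustration only)."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    async def all_keys(self) -> list[str]:
        return list(self._data)

    async def get_by_id(self, id: str) -> Optional[dict]:
        return self._data.get(id)

    async def filter_keys(self, keys: list[str]) -> set[str]:
        # Return the keys that are NOT stored yet, i.e. the ones worth inserting.
        return {k for k in keys if k not in self._data}

    async def upsert(self, data: dict[str, dict]) -> None:
        self._data.update(data)


async def demo() -> tuple[set[str], Optional[dict]]:
    kv = InMemoryKV()
    await kv.upsert({"doc-1": {"content": "hello"}})
    missing = await kv.filter_keys(["doc-1", "doc-2"])
    return missing, await kv.get_by_id("doc-1")


missing, doc = asyncio.run(demo())
print(missing, doc)
```

The methods are async so that a real backend (a database or remote service) can do I/O without blocking the rest of the pipeline.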

Check FAQ.

See ROADMAP.md

nano-graphrag is open to any kind of contribution. Read this before you contribute.

- Medical Graph RAG: Graph RAG for the Medical Data

- LightRAG: Simple and Fast Retrieval-Augmented Generation

- fast-graphrag: RAG that intelligently adapts to your use case, data, and queries

- HiRAG: Retrieval-Augmented Generation with Hierarchical Knowledge

Pull requests are welcome if your project uses nano-graphrag; it will help others to trust this repo ❤️

- nano-graphrag doesn't implement the covariates feature of GraphRAG.
- nano-graphrag implements global search differently from the original. The original uses a map-reduce-like style to fill all the communities into context, while nano-graphrag only uses the top-K important and central communities (use QueryParam.global_max_consider_community to control, defaults to 512 communities).
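The top-K selection described in the last item can be pictured with the sketch below. The field names (`occurrence`, `rating`) and the ranking key are assumptions chosen for illustration and may not match how nano-graphrag actually scores communities.

```python
def top_k_communities(communities: list[dict], k: int) -> list[dict]:
    """Keep the k communities ranked highest by (occurrence, rating)."""
    ranked = sorted(
        communities,
        key=lambda c: (c["occurrence"], c["rating"]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical community records; real reports carry much more data.
communities = [
    {"id": "c0", "occurrence": 0.9, "rating": 7.5},
    {"id": "c1", "occurrence": 0.2, "rating": 9.0},
    {"id": "c2", "occurrence": 0.9, "rating": 8.0},
    {"id": "c3", "occurrence": 0.5, "rating": 3.0},
]
selected = top_k_communities(communities, k=2)
print([c["id"] for c in selected])
```

Capping the number of communities bounds the context size and cost of a global query, at the price of ignoring lower-ranked communities that the original map-reduce approach would still see.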

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nano-graphrag

Similar Open Source Tools

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

magentic

Easily integrate Large Language Models into your Python code. Simply use the `@prompt` and `@chatprompt` decorators to create functions that return structured output from the LLM. Mix LLM queries and function calling with regular Python code to create complex logic.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

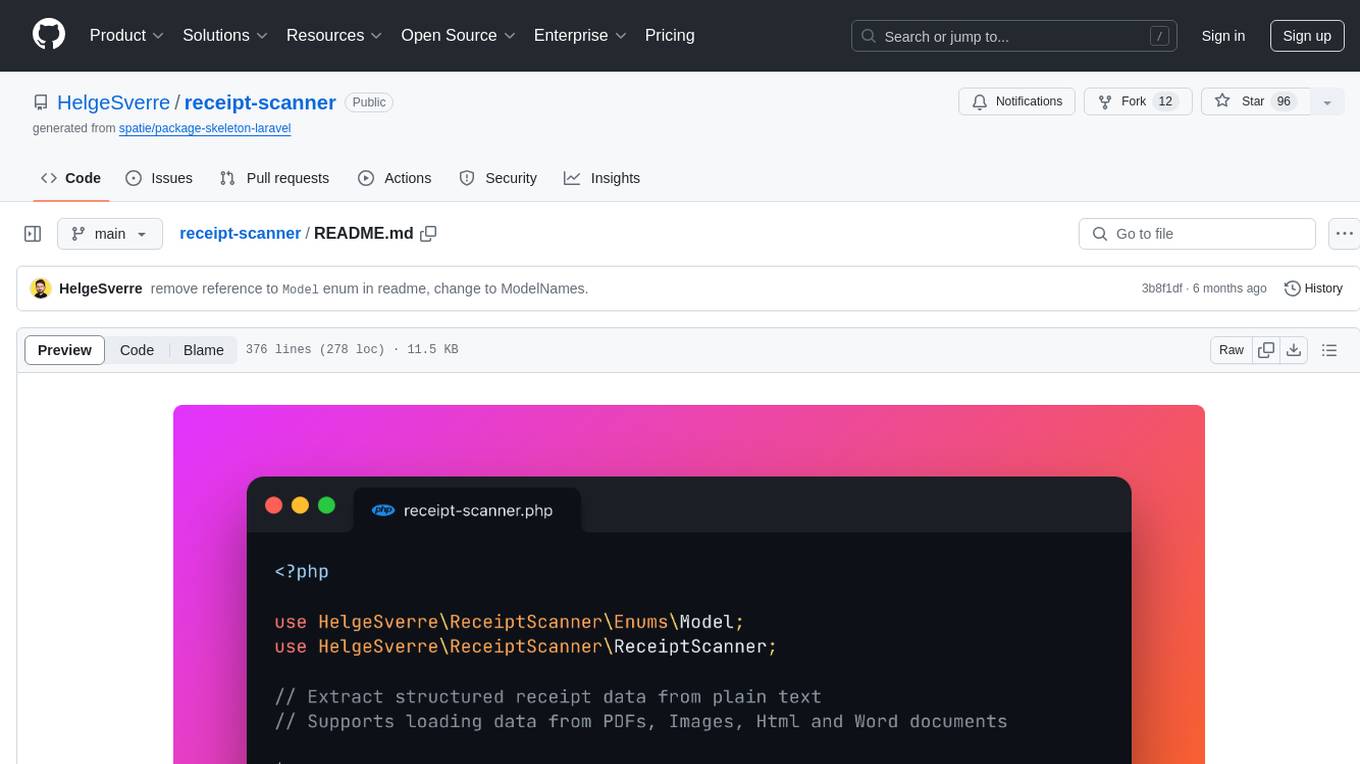

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!) Want to see examples of Skyvern in action? Jump to #real-world-examples-of- skyvern

mistral-inference

Mistral Inference repository contains minimal code to run 7B, 8x7B, and 8x22B models. It provides model download links, installation instructions, and usage guidelines for running models via CLI or Python. The repository also includes information on guardrailing, model platforms, deployment, and references. Users can interact with models through commands like mistral-demo, mistral-chat, and mistral-common. Mistral AI models support function calling and chat interactions for tasks like testing models, chatting with models, and using Codestral as a coding assistant. The repository offers detailed documentation and links to blogs for further information.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

litserve

LitServe is a high-throughput serving engine for deploying AI models at scale. It generates an API endpoint for a model, handles batching, streaming, autoscaling across CPU/GPUs, and more. Built for enterprise scale, it supports every framework like PyTorch, JAX, Tensorflow, and more. LitServe is designed to let users focus on model performance, not the serving boilerplate. It is like PyTorch Lightning for model serving but with broader framework support and scalability.

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output with respect to defined Context-Free Grammar (CFG) rules. It supports general-purpose programming languages like Python, Go, SQL, JSON, and more, allowing users to define custom grammars using EBNF syntax. The tool compares favorably to other constrained decoders and offers features like fast grammar-guided generation, compatibility with HuggingFace Language Models, and the ability to work with various decoding strategies.

stark

STaRK is a large-scale semi-structure retrieval benchmark on Textual and Relational Knowledge Bases. It provides natural-sounding and practical queries crafted to incorporate rich relational information and complex textual properties, closely mirroring real-life scenarios. The benchmark aims to assess how effectively large language models can handle the interplay between textual and relational requirements in queries, using three diverse knowledge bases constructed from public sources.

syncode

SynCode is a novel framework for the grammar-guided generation of Large Language Models (LLMs) that ensures syntactically valid output based on a Context-Free Grammar (CFG). It supports various programming languages like Python, Go, SQL, Math, JSON, and more. Users can define custom grammars using EBNF syntax. SynCode offers fast generation, seamless integration with HuggingFace Language Models, and the ability to sample with different decoding strategies.

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and framework agnostic features. Users can also customize the model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions in daily spoken language.

extractor

Extractor is an AI-powered data extraction library for Laravel that leverages OpenAI's capabilities to effortlessly extract structured data from various sources, including images, PDFs, and emails. It features a convenient wrapper around OpenAI Chat and Completion endpoints, supports multiple input formats, includes a flexible Field Extractor for arbitrary data extraction, and integrates with Textract for OCR functionality. Extractor utilizes JSON Mode from the latest GPT-3.5 and GPT-4 models, providing accurate and efficient data extraction.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

For similar tasks

phospho

Phospho is a text analytics platform for LLM apps. It helps you detect issues and extract insights from text messages of your users or your app. You can gather user feedback, measure success, and iterate on your app to create the best conversational experience for your users.

OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

spaCy

spaCy is an industrial-strength Natural Language Processing (NLP) library in Python and Cython. It incorporates the latest research and is designed for real-world applications. The library offers pretrained pipelines supporting 70+ languages, with advanced neural network models for tasks such as tagging, parsing, named entity recognition, and text classification. It also facilitates multi-task learning with pretrained transformers like BERT, along with a production-ready training system and streamlined model packaging, deployment, and workflow management. spaCy is commercial open-source software released under the MIT license.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

lima

LIMA is a multilingual linguistic analyzer developed by the CEA LIST, LASTI laboratory. It is Free Software available under the MIT license. LIMA has state-of-the-art performance for more than 60 languages using deep learning modules. It also includes a powerful rules-based mechanism called ModEx for extracting information in new domains without annotated data.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.