olah

Self-hosted huggingface mirror service.

Stars: 132

Olah is a self-hosted lightweight Huggingface mirror service that implements mirroring feature for Huggingface resources at file block level, enhancing download speeds and saving bandwidth. It offers cache control policies and allows administrators to configure accessible repositories. Users can install Olah with pip or from source, set up the mirror site, and download models and datasets using huggingface-cli. Olah provides additional configurations through a configuration file for basic setup and accessibility restrictions. Future work includes implementing an administrator and user system, OOS backend support, and mirror update schedule task. Olah is released under the MIT License.

README:

Self-hosted Lightweight Huggingface Mirror Service

Olah is a self-hosted lightweight huggingface mirror service. Olah means hello in Hilichurlian.

Olah implemented the mirroring feature for huggingface resources, rather than just a simple reverse proxy.

Olah does not immediately mirror the entire huggingface website but mirrors the resources at the file block level when users download them (or we can say cache them).

Other languages: 中文

Olah has the capability to cache files in chunks while users download them. Upon subsequent downloads, the files can be directly retrieved from the cache, greatly enhancing download speeds and saving bandwidth. Additionally, Olah offers a range of cache control policies. Administrators can configure which repositories are accessible and which ones can be cached through a configuration file.

- Huggingface Data Cache

- Models mirror

- Datasets mirror

- Spaces mirror

pip install olahor:

pip install git+https://github.com/vtuber-plan/olah.git - Clone this repository

git clone https://github.com/vtuber-plan/olah.git

cd olah- Install the Package

pip install --upgrade pip

pip install -e .Run the command in the console:

olah-cliThen set the Environment Variable HF_ENDPOINT to the mirror site (Here is http://localhost:8090).

Linux:

export HF_ENDPOINT=http://localhost:8090Windows Powershell:

$env:HF_ENDPOINT = "http://localhost:8090"Starting from now on, all download operations in the HuggingFace library will be proxied through this mirror site.

pip install -U huggingface_hubfrom huggingface_hub import snapshot_download

snapshot_download(repo_id='Qwen/Qwen-7B', repo_type='model',

local_dir='./model_dir', resume_download=True,

max_workers=8)Or you can download models and datasets by using huggingface cli.

Download GPT2:

huggingface-cli download --resume-download openai-community/gpt2 --local-dir gpt2Download WikiText:

huggingface-cli download --repo-type dataset --resume-download Salesforce/wikitext --local-dir wikitextYou can check the path ./repos, in which olah stores all cached datasets and models.

Run the command in the console:

olah-cliOr you can specify the host address and listening port:

olah-cli --host localhost --port 8090Note: Please change --mirror-netloc and --mirror-lfs-netloc to the actual URLs of the mirror sites when modifying the host and port.

olah-cli --host 192.168.1.100 --port 8090 --mirror-netloc 192.168.1.100:8090The default mirror cache path is ./repos, you can change it by --repos-path parameter:

olah-cli --host localhost --port 8090 --repos-path ./hf_mirrorsNote that the cached data between different versions cannot be migrated. Please delete the cache folder before upgrading to the latest version of Olah.

In deployment scenarios, there may be high concurrent downloads, leading to Timeout errors for new connections.

You can set the WEB_CONCURRENCY variable for uvicorn to increase the number of workers, thereby enhancing concurrency in production environments.

For example, on Linux:

export WEB_CONCURRENCY=4Additional configurations can be controlled through a configuration file by passing the configs.toml file as a command parameter:

olah-cli -c configs.tomlThe complete content of the configuration file can be found at assets/full_configs.toml.

The first section, basic, is used to set up basic configurations for the mirror site:

[basic]

host = "localhost"

port = 8090

ssl-key = ""

ssl-cert = ""

repos-path = "./repos"

cache-size-limit = ""

cache-clean-strategy = "LRU"

hf-scheme = "https"

hf-netloc = "huggingface.co"

hf-lfs-netloc = "cdn-lfs.huggingface.co"

mirror-scheme = "http"

mirror-netloc = "localhost:8090"

mirror-lfs-netloc = "localhost:8090"

mirrors-path = ["./mirrors_dir"]-

host: Sets the host address that Olah listens to. -

port: Sets the port that Olah listens to. -

ssl-keyandssl-cert: When enabling HTTPS, specify the file paths for the key and certificate. -

repos-path: Specifies the directory for storing cached data. -

cache-size-limit: Specifies cache size limit (For example, 100G, 500GB, 2TB). Olah will scan the size of the cache folder every hour. If it exceeds the limit, olah will delete some cache files. -

cache-clean-strategy: Specifies cache cleaning strategy (Available strategies: LRU, FIFO, LARGE_FIRST). -

hf-scheme: Network protocol for the Hugging Face official site (usually no need to modify). -

hf-netloc: Network location of the Hugging Face official site (usually no need to modify). -

hf-lfs-netloc: Network location for Hugging Face official site's LFS files (usually no need to modify). -

mirror-scheme: Network protocol for the Olah mirror site (should match the above settings; change to HTTPS if providingssl-keyandssl-cert). -

mirror-netloc: Network location of the Olah mirror site (should matchhostandportsettings). -

mirror-lfs-netloc: Network location for Olah mirror site's LFS (should matchhostandportsettings). -

mirrors-path: Additional mirror file directories. If you have already cloned some Git repositories, you can place them in this directory for downloading. In this example, the directory is./mirrors_dir. To add a dataset likeSalesforce/wikitext, you can place the Git repository in the directory./mirrors_dir/datasets/Salesforce/wikitext. Similarly, models can be placed under./mirrors_dir/models/organization/repository.

The second section allows for accessibility restrictions:

[accessibility]

offline = false

[[accessibility.proxy]]

repo = "cais/mmlu"

allow = true

[[accessibility.proxy]]

repo = "adept/fuyu-8b"

allow = false

[[accessibility.proxy]]

repo = "mistralai/*"

allow = true

[[accessibility.proxy]]

repo = "mistralai/Mistral.*"

allow = false

use_re = true

[[accessibility.cache]]

repo = "cais/mmlu"

allow = true

[[accessibility.cache]]

repo = "adept/fuyu-8b"

allow = false-

offline: Sets whether the Olah mirror site enters offline mode, no longer making requests to the Hugging Face official site for data updates. However, cached repositories can still be downloaded. -

proxy: Determines if the repository can be accessed through a proxy. By default, all repositories are allowed. Therepofield is used to match the repository name. Regular expressions and wildcards can be used by settinguse_reto control whether to use regular expressions (default is to use wildcards). Theallowfield controls whether the repository is allowed to be proxied. -

cache: Determines if the repository will be cached. By default, all repositories are allowed. Therepofield is used to match the repository name. Regular expressions and wildcards can be used by settinguse_reto control whether to use regular expressions (default is to use wildcards). Theallowfield controls whether the repository is allowed to be cached.

- Administrator and user system

- OOS backend support

- Mirror Update Schedule Task

olah is released under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for olah

Similar Open Source Tools

olah

Olah is a self-hosted lightweight Huggingface mirror service that implements mirroring feature for Huggingface resources at file block level, enhancing download speeds and saving bandwidth. It offers cache control policies and allows administrators to configure accessible repositories. Users can install Olah with pip or from source, set up the mirror site, and download models and datasets using huggingface-cli. Olah provides additional configurations through a configuration file for basic setup and accessibility restrictions. Future work includes implementing an administrator and user system, OOS backend support, and mirror update schedule task. Olah is released under the MIT License.

godot-llm

Godot LLM is a plugin that enables the utilization of large language models (LLM) for generating content in games. It provides functionality for text generation, text embedding, multimodal text generation, and vector database management within the Godot game engine. The plugin supports features like Retrieval Augmented Generation (RAG) and integrates llama.cpp-based functionalities for text generation, embedding, and multimodal capabilities. It offers support for various platforms and allows users to experiment with LLM models in their game development projects.

cheating-based-prompt-engine

This is a vulnerability mining engine purely based on GPT, requiring no prior knowledge base, no fine-tuning, yet its effectiveness can overwhelmingly surpass most of the current related research. The core idea revolves around being task-driven, not question-driven, driven by prompts, not by code, and focused on prompt design, not model design. The essence is encapsulated in one word: deception. It is a type of code understanding logic vulnerability mining that fully stimulates the capabilities of GPT, suitable for real actual projects.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

tenere

Tenere is a TUI interface for Language Model Libraries (LLMs) written in Rust. It provides syntax highlighting, chat history, saving chats to files, Vim keybindings, copying text from/to clipboard, and supports multiple backends. Users can configure Tenere using a TOML configuration file, set key bindings, and use different LLMs such as ChatGPT, llama.cpp, and ollama. Tenere offers default key bindings for global and prompt modes, with features like starting a new chat, saving chats, scrolling, showing chat history, and quitting the app. Users can interact with the prompt in different modes like Normal, Visual, and Insert, with various key bindings for navigation, editing, and text manipulation.

cli-agent

Pieces CLI for Developers is a comprehensive command-line interface (CLI) tool designed to interact seamlessly with Pieces OS. It provides functionalities such as asset management, application interaction, and integration with various Pieces OS features. The tool is compatible with Windows 10 or greater, Mac, and Windows operating systems. Users can install the tool by running 'pip install pieces-cli' or 'brew install pieces-cli'. After installation, users can access the tool's functionalities through the terminal by using the 'pieces' command followed by subcommands and options. The tool supports various commands, which can be found in the documentation. Developers can contribute to the project by forking and cloning the repository, setting up a virtual environment, installing dependencies with poetry, and running test cases with pytest and coverage.

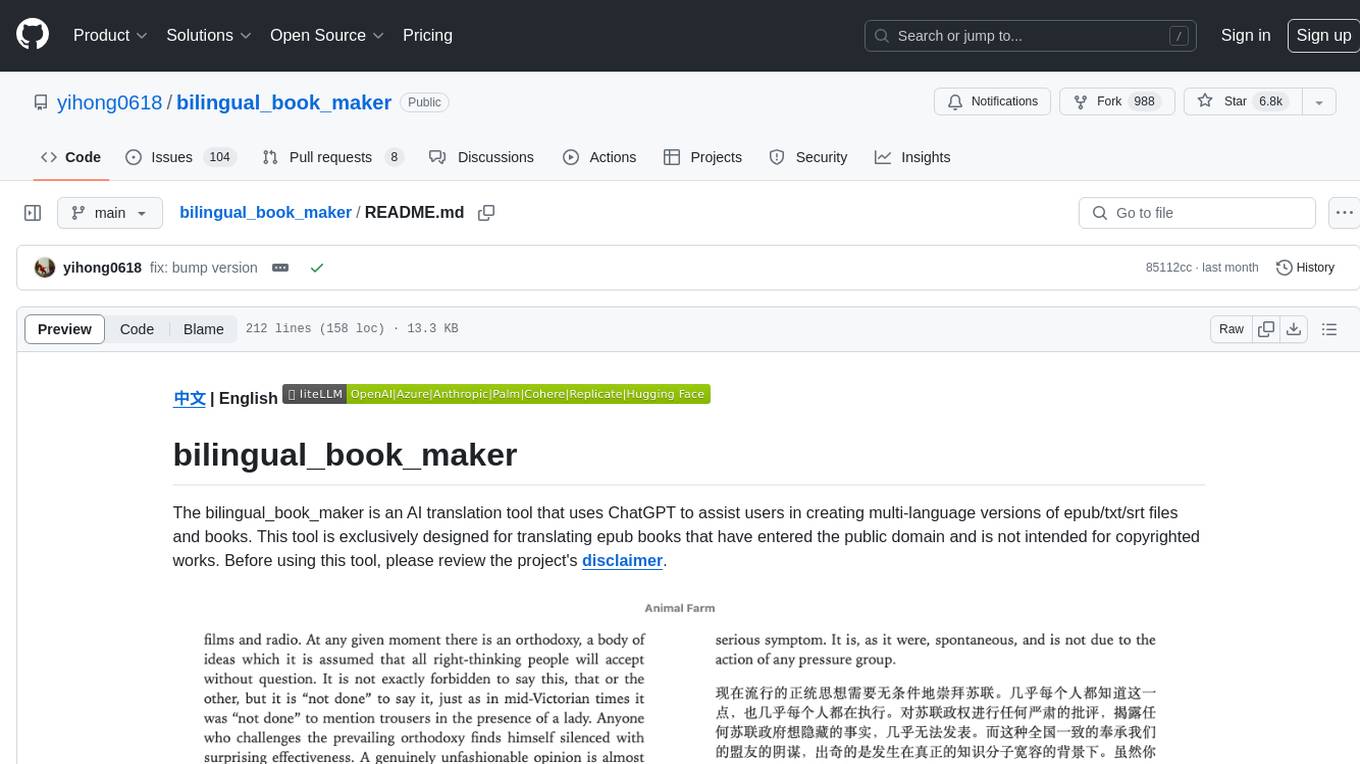

bilingual_book_maker

The bilingual_book_maker is an AI translation tool that uses ChatGPT to assist users in creating multi-language versions of epub/txt/srt files and books. It supports various models like gpt-4, gpt-3.5-turbo, claude-2, palm, llama-2, azure-openai, command-nightly, and gemini. Users need ChatGPT or OpenAI token, epub/txt books, internet access, and Python 3.8+. The tool provides options to specify OpenAI API key, model selection, target language, proxy server, context addition, translation style, and more. It generates bilingual books in epub format after translation. Users can test translations, set batch size, tweak prompts, and use different models like DeepL, Google Gemini, Tencent TranSmart, and more. The tool also supports retranslation, translating specific tags, and e-reader type specification. Docker usage is available for easy setup.

mods

AI for the command line, built for pipelines. LLM based AI is really good at interpreting the output of commands and returning the results in CLI friendly text formats like Markdown. Mods is a simple tool that makes it super easy to use AI on the command line and in your pipelines. Mods works with OpenAI, Groq, Azure OpenAI, and LocalAI To get started, install Mods and check out some of the examples below. Since Mods has built-in Markdown formatting, you may also want to grab Glow to give the output some _pizzazz_.

ChatDBG

ChatDBG is an AI-based debugging assistant for C/C++/Python/Rust code that integrates large language models into a standard debugger (`pdb`, `lldb`, `gdb`, and `windbg`) to help debug your code. With ChatDBG, you can engage in a dialog with your debugger, asking open-ended questions about your program, like `why is x null?`. ChatDBG will _take the wheel_ and steer the debugger to answer your queries. ChatDBG can provide error diagnoses and suggest fixes. As far as we are aware, ChatDBG is the _first_ debugger to automatically perform root cause analysis and to provide suggested fixes.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

ML-Bench

ML-Bench is a tool designed to evaluate large language models and agents for machine learning tasks on repository-level code. It provides functionalities for data preparation, environment setup, usage, API calling, open source model fine-tuning, and inference. Users can clone the repository, load datasets, run ML-LLM-Bench, prepare data, fine-tune models, and perform inference tasks. The tool aims to facilitate the evaluation of language models and agents in the context of machine learning tasks on code repositories.

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

partial-json-parser-js

Partial JSON Parser is a lightweight and customizable library for parsing partial JSON strings. It allows users to parse incomplete JSON data and stream it to the user. The library provides options to specify what types of partialness are allowed during parsing, such as strings, objects, arrays, special values, and more. It helps handle malformed JSON and returns the parsed JavaScript value. Partial JSON Parser is implemented purely in JavaScript and offers both commonjs and esm builds.

For similar tasks

vulcan-sql

VulcanSQL is an Analytical Data API Framework for AI agents and data apps. It aims to help data professionals deliver RESTful APIs from databases, data warehouses or data lakes much easier and secure. It turns your SQL into APIs in no time!

olah

Olah is a self-hosted lightweight Huggingface mirror service that implements mirroring feature for Huggingface resources at file block level, enhancing download speeds and saving bandwidth. It offers cache control policies and allows administrators to configure accessible repositories. Users can install Olah with pip or from source, set up the mirror site, and download models and datasets using huggingface-cli. Olah provides additional configurations through a configuration file for basic setup and accessibility restrictions. Future work includes implementing an administrator and user system, OOS backend support, and mirror update schedule task. Olah is released under the MIT License.

comfy-cli

Comfy-cli is a command line tool designed to facilitate the installation and management of ComfyUI, an open-source machine learning framework. Users can easily set up ComfyUI, install packages, and manage custom nodes directly from the terminal. The tool offers features such as easy installation, seamless package management, custom node management, checkpoint downloads, cross-platform compatibility, and comprehensive documentation. Comfy-cli simplifies the process of working with ComfyUI, making it convenient for users to handle various tasks related to the framework.

sdkit

sdkit (stable diffusion kit) is an easy-to-use library for utilizing Stable Diffusion in AI Art projects. It includes features like ControlNets, LoRAs, Textual Inversion Embeddings, GFPGAN, CodeFormer for face restoration, RealESRGAN for upscaling, k-samplers, support for custom VAEs, NSFW filter, model-downloader, parallel GPU support, and more. It offers a model database, auto-scanning for malicious models, and various optimizations. The API consists of modules for loading models, generating images, filters, model merging, and utilities, all managed through the sdkit.Context object.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

gemma

Gemma is a family of open-weights Large Language Model (LLM) by Google DeepMind, based on Gemini research and technology. This repository contains an inference implementation and examples, based on the Flax and JAX frameworks. Gemma can run on CPU, GPU, and TPU, with model checkpoints available for download. It provides tutorials, reference implementations, and Colab notebooks for tasks like sampling and fine-tuning. Users can contribute to Gemma through bug reports and pull requests. The code is licensed under the Apache License, Version 2.0.

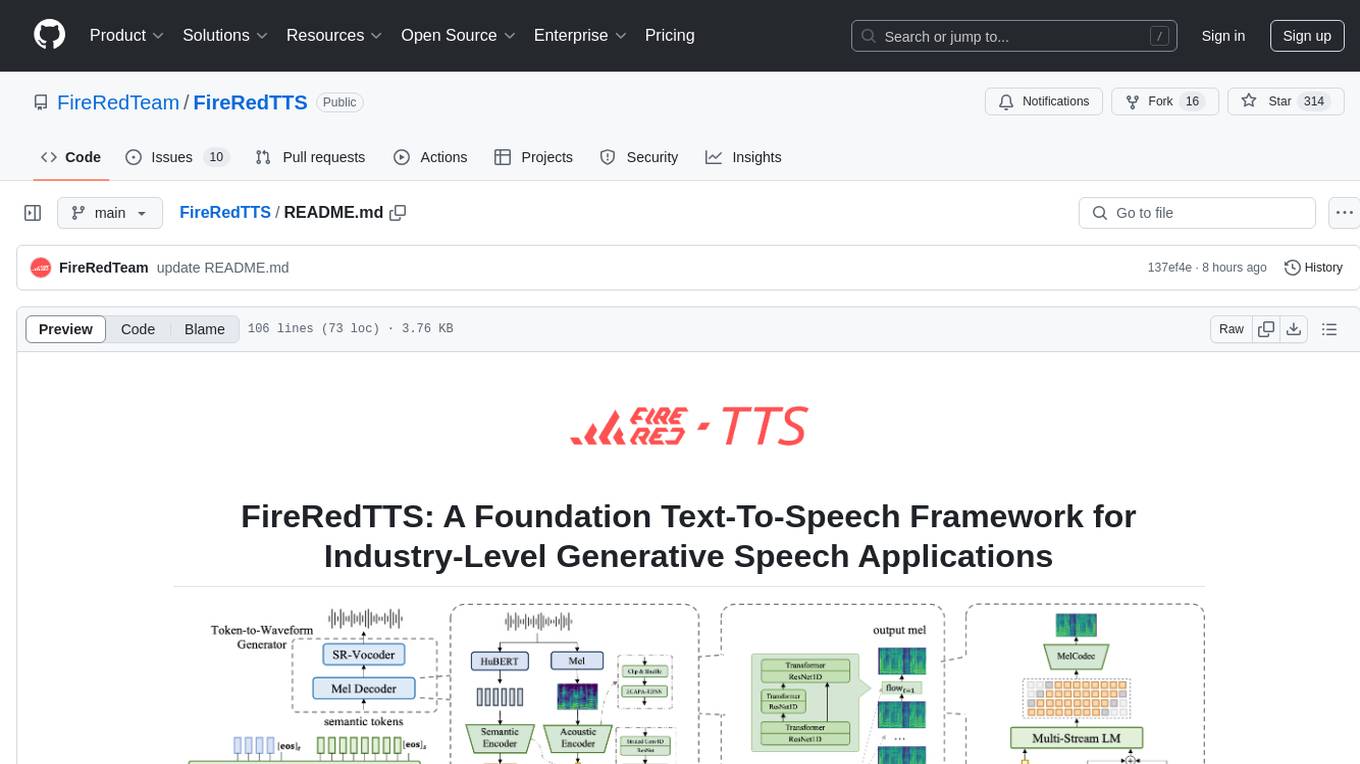

FireRedTTS

FireRedTTS is a foundation text-to-speech framework designed for industry-level generative speech applications. It offers a rich-punctuation model with expanded punctuation coverage and enhanced audio production consistency. The tool provides pre-trained checkpoints, inference code, and an interactive demo space. Users can clone the repository, create a conda environment, download required model files, and utilize the tool for synthesizing speech in various languages. FireRedTTS aims to enhance stability and provide controllable human-like speech generation capabilities.

ai-dev-gallery

The AI Dev Gallery is an app designed to help Windows developers integrate AI capabilities within their own apps and projects. It contains over 25 interactive samples powered by local AI models, allows users to explore, download, and run models from Hugging Face and GitHub, and provides the ability to view the C# source code and export a standalone Visual Studio project for each sample. The app is open-source and welcomes contributions and suggestions from the community.

For similar jobs

llm-resource

llm-resource is a comprehensive collection of high-quality resources for Large Language Models (LLM). It covers various aspects of LLM including algorithms, training, fine-tuning, alignment, inference, data engineering, compression, evaluation, prompt engineering, AI frameworks, AI basics, AI infrastructure, AI compilers, LLM application development, LLM operations, AI systems, and practical implementations. The repository aims to gather and share valuable resources related to LLM for the community to benefit from.

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

how-to-optim-algorithm-in-cuda

This repository documents how to optimize common algorithms based on CUDA. It includes subdirectories with code implementations for specific optimizations. The optimizations cover topics such as compiling PyTorch from source, NVIDIA's reduce optimization, OneFlow's elementwise template, fast atomic add for half data types, upsample nearest2d optimization in OneFlow, optimized indexing in PyTorch, OneFlow's softmax kernel, linear attention optimization, and more. The repository also includes learning resources related to deep learning frameworks, compilers, and optimization techniques.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

ENOVA

ENOVA is an open-source service for Large Language Model (LLM) deployment, monitoring, injection, and auto-scaling. It addresses challenges in deploying stable serverless LLM services on GPU clusters with auto-scaling by deconstructing the LLM service execution process and providing configuration recommendations and performance detection. Users can build and deploy LLM with few command lines, recommend optimal computing resources, experience LLM performance, observe operating status, achieve load balancing, and more. ENOVA ensures stable operation, cost-effectiveness, efficiency, and strong scalability of LLM services.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

vidur

Vidur is a high-fidelity and extensible LLM inference simulator designed for capacity planning, deployment configuration optimization, testing new research ideas, and studying system performance of models under different workloads and configurations. It supports various models and devices, offers chrome trace exports, and can be set up using mamba, venv, or conda. Users can run the simulator with various parameters and monitor metrics using wandb. Contributions are welcome, subject to a Contributor License Agreement and adherence to the Microsoft Open Source Code of Conduct.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.