llm-resource

A curated collection of high-quality full-stack LLM resources

Stars: 309

llm-resource is a comprehensive collection of high-quality resources for Large Language Models (LLM). It covers various aspects of LLM including algorithms, training, fine-tuning, alignment, inference, data engineering, compression, evaluation, prompt engineering, AI frameworks, AI basics, AI infrastructure, AI compilers, LLM application development, LLM operations, AI systems, and practical implementations. The repository aims to gather and share valuable resources related to LLM for the community to benefit from.

README:

Contributions are very welcome: help collect more high-quality resources on large models.

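- 🐼 LLM Algorithms

- 🐘 LLM Training

- 🔥 LLM Inference

- 🌴 LLM Data Engineering

- 📡 LLM Compression

- 🐰 LLM Evaluation

- 🐘 AI Fundamentals

- 📡 AI Infrastructure

- 🐘 AI Compilers

- 🐰 AI Frameworks

- 📡 LLM Application Development

- 🐘 LLMOps

- 📡 LLM Practice

- 📡 Collected WeChat Official Account Articles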

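Principles:

- Transformer Model Explained (Most Complete Illustrated Edition)

- OpenAI ChatGPT (1): Understand the Transformer in Ten Minutes

- What Does the Transformer Architecture Look Like, and What Does Each Submodule Do?

- An Analysis of Parameter Count, Compute, Intermediate Activations, and KV Cache for Transformer-Based Large Models

- Transformer: Learning the Principles by Coding Together

- Why Does Multi-Head Attention in the Transformer (BERT) Reduce the Dimension of Each Head?

Source code:

- OpenAI ChatGPT (1): Implementing the Transformer in TensorFlow

- OpenAI ChatGPT (1): Understand the Transformer in Ten Minutes

- GPT (1): Transformer Principles and Code Explained

- Transformer Source Code Explained (PyTorch Version)

- To Understand the Transformer Architecture, This PyTorch Implementation Is All You Need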

- GPT2 source code: https://github.com/huggingface/transformers/blob/main/src/transformers/models/gpt2/modeling_gpt2.py

- GPT2 source code walkthrough: https://zhuanlan.zhihu.com/p/630970209

- nanoGPT: https://github.com/karpathy/nanoGPT/blob/master/model.py

- 7.3 In-Depth Analysis of the GPT2 Model: http://121.199.45.168:13013/7_3.html

- GPT (3): GPT2 Principles and Code Explained: https://zhuanlan.zhihu.com/p/637782385

- GPT2 Parameter Count Analysis: https://zhuanlan.zhihu.com/p/640501114
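
As a rough illustration of what a GPT2 parameter-count analysis like the one listed above involves, here is a minimal sketch that tallies the parameters of the smallest GPT-2 configuration. The defaults (vocabulary 50257, context length 1024, width 768, 12 layers) are the publicly documented GPT-2 small settings. The function name `gpt2_param_count` is hypothetical, and the sketch assumes the output head shares its weights with the token embedding, so it is not counted twice.

```python
def gpt2_param_count(vocab=50257, n_ctx=1024, d_model=768, n_layer=12):
    """Count learnable parameters of a GPT-2 style decoder (tied output head)."""
    # Token embedding table plus learned position embedding table.
    embeddings = vocab * d_model + n_ctx * d_model
    # Each LayerNorm has a scale vector and a bias vector.
    layer_norm = 2 * d_model
    # Attention: fused Q/K/V projection, then the output projection (both with bias).
    attention = d_model * 3 * d_model + 3 * d_model
    attention += d_model * d_model + d_model
    # MLP: expand to 4x the width, then project back (both with bias).
    mlp = d_model * 4 * d_model + 4 * d_model
    mlp += 4 * d_model * d_model + d_model
    # A block holds two LayerNorms, one attention module, and one MLP.
    per_layer = 2 * layer_norm + attention + mlp
    # The last term is the final LayerNorm applied after all blocks.
    return embeddings + n_layer * per_layer + layer_norm


if __name__ == "__main__":
    print(gpt2_param_count())  # 124439808, i.e. the "124M" model
```

Roughly 31% of the total sits in the token embedding table at this scale, which is why the share of embedding parameters shrinks quickly as width and depth grow.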

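- Fine-Tuning the Mixtral-8x7B MoE Model in Practice, Surpassing Llama2-65B

- Parallelism Techniques for Distributed Training of Large Models (8): MoE Parallelism

- The Boom in MoE-Architecture Models May Give Domestic (Chinese) AI Chips a Lift

- An Overview of Model Merging Methods for Large Models

- Mixture of Experts (MoE) Explained

- Pandemonium: MoE Large Models Explained

- Mixture of Experts (MoE) for LLMs (Part 1: Fundamentals)

- Mixture of Experts (MoE) for LLMs (Part 2: Implementation)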

- https://github.com/NExT-GPT/NExT-GPT

- https://next-gpt.github.io/

- Introduction to NExT-GPT: Any-to-Any Multimodal Large Language Model

- A Survey on Multimodal Large Language Models: https://arxiv.org/pdf/2306.13549

- Efficient-Multimodal-LLMs-Survey: https://github.com/lijiannuist/Efficient-Multimodal-LLMs-Survey

- Transformer Math 101: How to Calculate GPU Memory Consumption?

- Megatron-LM Paper 3 Summary: Sequence Parallelism & Selective Checkpointing

- Learning rate (warmup, decay):

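- Loading and Running Very Large Models with Hugging Face's Accelerate Library: device_map, no_split_module_classes, offload_folder, offload_state_dict

- How Accelerate Runs Very Large Models with PyTorch

- Incredibly Fast BLOOM Inference with DeepSpeed and Accelerate

- A Summary of Seven LLM Inference Serving Frameworks

- Why Speculative Sampling Can Accelerate LLM Inference

- A Clever Trick for Large Model Inference: Speculative Decoding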

- https://github.com/flexflow/FlexFlow/tree/inference

- TensorRT-LLM (3): Architecture

- NLP (18): A Survey of LLM Inference Optimization Techniques: https://zhuanlan.zhihu.com/p/642412124

- Demystifying NVIDIA's Large Model Inference Framework, TensorRT-LLM: https://zhuanlan.zhihu.com/p/680808866

- How to generate text: using different decoding methods for language generation with Transformers

KV Cache:

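Decoding optimization:

- vLLM (6): Source Code Walkthrough, Part 2 @HelloWorld

- 猛猿: Illustrated Series on Accelerating Large Model Computation: vLLM Source Code Analysis 1, Overall Architecture

- LLM Inference 2: Studying the vLLM Source Code @ akaihaoshuai

- Source Code Analysis of the Large Model Inference Framework vLLM (1): Framework Overview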

- Awesome Model Quantization

- Efficient-LLMs-Survey

- Awesome LLM Compression

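- A Roundup of Model Conversion, Model Compression, and Model Acceleration Tools

- AI Framework Deployment Solutions: Model Conversion

- Converting PyTorch Models to TensorRT (torch2trt Tutorial)

- CLiB Chinese LLM Capability Evaluation Leaderboard

- Hugging Face Open LLM Leaderboard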

- HELM: https://github.com/stanford-crfm/helm

- HELM: https://crfm.stanford.edu/helm/latest/

- lm-evaluation-harness: https://github.com/EleutherAI/lm-evaluation-harness/

- CLEVA: http://www.lavicleva.com/#/homepage/overview

- CLEVA: https://github.com/LaVi-Lab/CLEVA/blob/main/README_zh-CN.md

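- A Key Step in Data Work: How to Write a Good Prompt?

- 10 Prompt Engineering Methods Distilled from 1,000+ Templates to Help You Become a Prompt Master!

- Understanding the Principles and History of Prompt Engineering in One Article

- Effective Prompt: 14 Effective Methods for Writing High-Quality Prompts

- Prompt Engineering and Prompt Construction Techniques

- An Introduction to Prompt Attacks!

- The Road to AGI: Technical Essentials of Large Language Models (LLMs)

- Emergent Abilities of Large Language Models: Phenomena and Explanations

- NLP (18): A Survey of LLM Inference Optimization Techniques

- Parallel Computing 3: Parallel Computing Models

- Large Model "Hallucination": This One Article Is All You Need | From Harbin Institute of Technology and Huawei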

safetensors:

- What Is the Difference Between bin and safetensors?

- Safetensors: A New Format for Saving Model Weights

- github: safetensors

- huggingface: safetensors

- Safetensors: a simple, safe and faster way to store and distribute tensors.

- https://huggingface.co/docs/safetensors/index

- https://github.com/huggingface/safetensors/tree/v0.3.3

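- Step by Step: Converting Original Llama2 Weights to an HF Model

- PyTorch Source Code Walkthrough Series @ OpenMMLab team

- [Source Code Analysis] PyTorch Distributed @ 罗西的思考

- PyTorch Distributed (18) --- Distributed Pipeline Parallelism Using RPC @ 罗西的思考

- [PyTorch] model.train() and model.eval(): Principles and Usage

- Recent Changes to Megatron-LM

- Understanding Megatron-LM in Depth (1): Fundamentals @ 简枫

- Understanding Megatron-LM in Depth (2): Introduction to the Principles

- [Source Code Analysis] Model-Parallel Distributed Training with Megatron (1) --- Paper & Fundamentals @ 罗西的思考

- [Source Code Analysis] Model-Parallel Distributed Training with Megatron (2) --- Overall Architecture

- [Close Reading of a Classic] Detailed Analysis of the Megatron Paper and Code (1) @迷途小书僮

- [Close Reading of a Classic] Detailed Analysis of the Megatron Paper and Code (2)

- A Brief Analysis of Industry AI Accelerator Chips (1): Baidu Kunlunxin

- NVIDIA CUDA-X AI: https://www.nvidia.cn/technologies/cuda-x/

- Intel, Nvidia, and AMD: Three Giants Battle over GPUs and CPUs

- Processors and AI Chips: Google TPU: https://zhuanlan.zhihu.com/p/646793355

- Understanding the Domestic (Chinese) AI Chip Players in One Article

- In Depth | Domestic (Chinese) AI Chips: How Many Players Are There?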

- Hands-On LLM Application Development

- langchain java

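- An Introduction to RAG, a Mainstream LLM Application: From Architecture to Technical Details

- Retrieval-Based Large Language Models and Applications (Danqi Chen)

- Thoughts on Approaches to Fixing Large Model Bad Cases

- "Survey: The New LLM-Driven Agents": A 45,000-Character Detailed Reading of the Latest Agent Survey from Fudan NLP and miHoYo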

- MLOps Landscape in 2023: Top Tools and Platforms

- What Constitutes A Large Language Model Application? :LLM Functionality Landscape

- AI System @吃果冻不吐果冻皮

- Large Language Models: Principles and Engineering @杨青

- Large Language Models: From Theory to Practice @张奇: https://intro-llm.github.io/

- Hands-On Large Models

- minGPT @karpathy

- llm.c @karpathy: LLM training in simple, raw C/CUDA

- LLM101n

- llama2.c: Inference Llama 2 in one file of pure C

- nanoGPT

- Baby-Llama2-Chinese

- Building a MiniLLM from 0 to 1

- gpt-fast, blog

- Awesome-Chinese-LLM

- Awesome-LLM-Survey

- Large Language Model Course

- Awesome-Quantization-Papers

- Awesome Model Quantization (GitHub)

- Awesome Transformer Attention (GitHub)

- A Survey of Data Selection for Language Models

- Awesome Knowledge Distillation of LLM Papers

- Awasome-Pruning @ghimiredhikura

- Awesome-Pruning @he-y

- awesome-pruning @hrcheng1066

- Awesome-LLM-Inference

Similar Open Source Tools

LLMLanding

LLMLanding is a repository focused on practical implementation of large models, covering topics from theory to practice. It provides a structured learning path for training large models, including specific tasks like training 1B-scale models, exploring SFT, and working on specialized tasks such as code generation, NLP tasks, and domain-specific fine-tuning. The repository emphasizes a dual learning approach: quickly applying existing tools for immediate output benefits and delving into foundational concepts for long-term understanding. It offers detailed resources and pathways for in-depth learning based on individual preferences and goals, combining theory with practical application to avoid overwhelm and ensure sustained learning progress.

LLMForEverybody

LLMForEverybody is a comprehensive repository covering various aspects of large language models (LLMs) including pre-training, architecture, optimizers, activation functions, attention mechanisms, tokenization, parallel strategies, training frameworks, deployment, fine-tuning, quantization, GPU parallelism, prompt engineering, agent design, RAG architecture, enterprise deployment challenges, evaluation metrics, and current hot topics in the field. It provides detailed explanations, tutorials, and insights into the workings and applications of LLMs, making it a valuable resource for researchers, developers, and enthusiasts interested in understanding and working with large language models.

MaiMBot

MaiMBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, utilizes LLM for conversation abilities, MongoDB for data persistence, and NapCat for QQ protocol support. The bot features keyword-triggered proactive responses, dynamic prompt construction, support for images and message forwarding, typo generation, multiple replies, emotion-based emoji responses, daily schedule generation, user relationship management, knowledge base, and group impressions. Work-in-progress features include personality, group atmosphere, image handling, humor, meme functions, and Minecraft interactions. The tool is in active development with plans for GIF compatibility, mini-program link parsing, bug fixes, documentation improvements, and logic enhancements for emoji sending.

claude-init

Claude Code Chinese development suite is a localized version based on the Claude Code Development Kit, offering a seamless Chinese AI programming experience. It features complete Chinese AI commands, documentation system, error messages, and installation experience. The suite includes intelligent context management with a three-tier document structure, automatic context injection, smart document routing, and cross-session state management. It integrates development tools like Hook system, MCP server support, security scans, and notification system. Additionally, it provides a comprehensive template library with project templates, document templates, and configuration examples.

bk-lite

Blueking Lite is an AI First lightweight operation product with low deployment resource requirements, low usage costs, and progressive experience, providing essential tools for operation administrators.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly. You can also contact me at [email protected].

AI-Drug-Discovery-Design

AI-Drug-Discovery-Design is a repository focused on Artificial Intelligence-assisted Drug Discovery and Design. It explores the use of AI technology to accelerate and optimize the drug development process. The advantages of AI in drug design include speeding up research cycles, improving accuracy through data-driven models, reducing costs by minimizing experimental redundancies, and enabling personalized drug design for specific patients or disease characteristics.

awesome-chatgpt-zh

The Awesome ChatGPT Chinese Guide project aims to help Chinese users understand and use ChatGPT. It collects various free and paid ChatGPT resources, as well as methods to communicate more effectively with ChatGPT in Chinese. The repository contains a rich collection of ChatGPT tools, applications, and examples.

Fay

Fay is an open-source digital human framework that offers different versions for various purposes. The '带货完整版' (full sales edition) is suitable for online and offline salespersons. The '助理完整版' (full assistant edition) serves as a human-machine interactive digital assistant that can also control devices upon command. The 'agent版' (agent edition) is designed to be an autonomous agent capable of making decisions and contacting its owner. The framework provides updates and improvements across its different versions, including features like emotion analysis integration, model optimizations, and compatibility enhancements. Users can access detailed documentation for each version through the provided links.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

Daily-DeepLearning

Daily-DeepLearning is a repository that covers various computer science topics such as data structures, operating systems, computer networks, Python programming, data science packages like numpy, pandas, matplotlib, machine learning theories, deep learning theories, NLP concepts, machine learning practical applications, deep learning practical applications, and big data technologies like Hadoop and Hive. It also includes coding exercises related to '剑指offer'. The repository provides detailed explanations and examples for each topic, making it a comprehensive resource for learning and practicing different aspects of computer science and data-related fields.

Code-Review-GPT-Gitlab

A project that utilizes large models to help with Code Review on Gitlab, aimed at improving development efficiency. The project is customized for Gitlab and is developing a Multi-Agent plugin for collaborative review. It integrates various large models for code security issues and stays updated with the latest Code Review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

kcores-llm-arena

KCORES LLM Arena is a large model evaluation tool that focuses on real-world scenarios, using human scoring and benchmark testing to assess performance. It aims to provide an unbiased evaluation of large models in real-world applications. The tool includes programming ability tests and specific benchmarks like Mandelbrot Set, Mars Mission, Solar System, and Ball Bouncing Inside Spinning Heptagon. It supports various programming languages and emphasizes performance optimization, rendering, animations, physics simulations, and creative implementations.

Snap-Solver

Snap-Solver is a revolutionary AI tool for online exam solving, designed for students, test-takers, and self-learners. With just a keystroke, it automatically captures any question on the screen, analyzes it using AI, and provides detailed answers. Whether it's complex math formulas, physics problems, coding issues, or challenges from other disciplines, Snap-Solver offers clear, accurate, and structured solutions to help you better understand and master the subject matter.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

For similar tasks

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

hqq

HQQ is a fast and accurate model quantizer that skips the need for calibration data. It's super simple to implement (just a few lines of code for the optimizer). It can crunch through quantizing the Llama2-70B model in only 4 minutes! 🚀

llm-resource

llm-resource is a comprehensive collection of high-quality resources for Large Language Models (LLM). It covers various aspects of LLM including algorithms, training, fine-tuning, alignment, inference, data engineering, compression, evaluation, prompt engineering, AI frameworks, AI basics, AI infrastructure, AI compilers, LLM application development, LLM operations, AI systems, and practical implementations. The repository aims to gather and share valuable resources related to LLM for the community to benefit from.

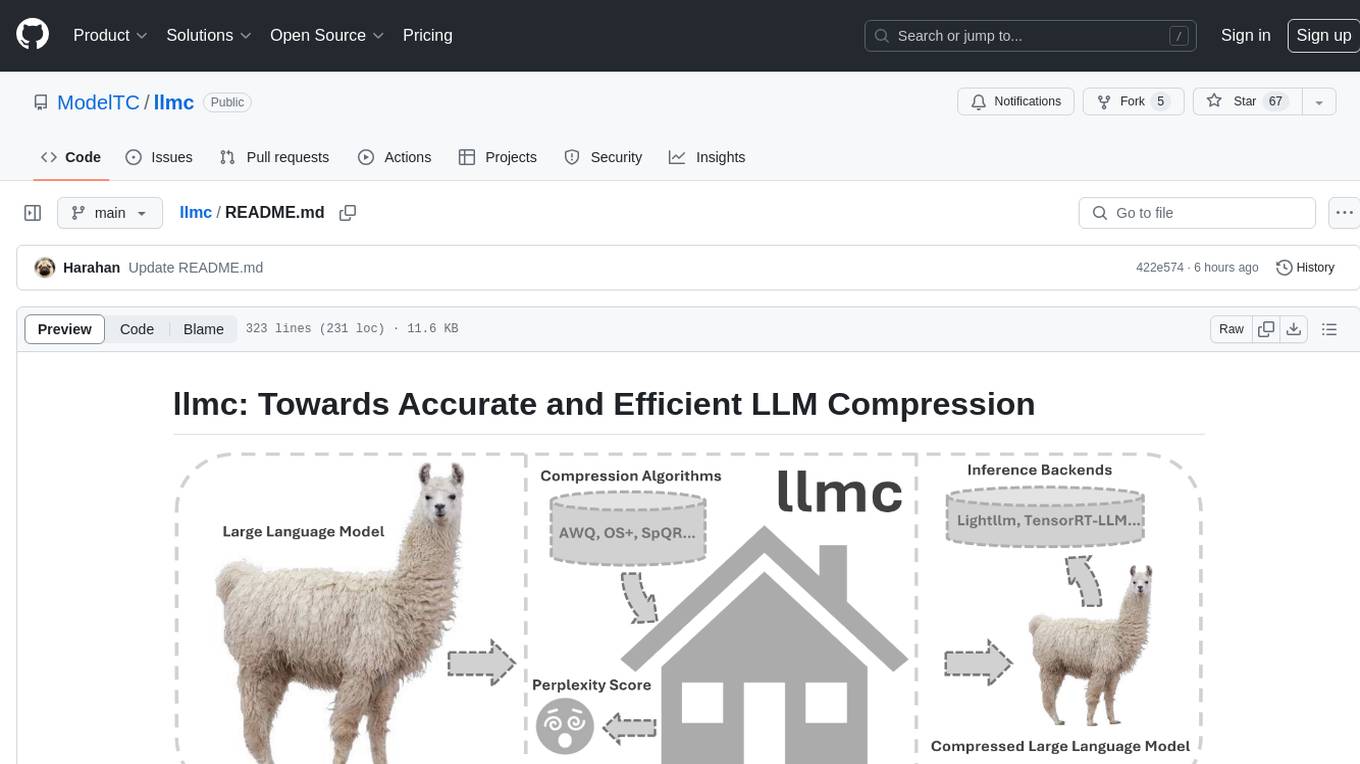

llmc

llmc is an off-the-shelf tool designed for compressing LLMs, leveraging state-of-the-art compression algorithms to enhance efficiency and reduce model size without compromising performance. It provides users with the ability to quantize LLMs, choose from various compression algorithms, export transformed models for further optimization, and directly infer compressed models with a shallow memory footprint. The tool supports a range of model types and quantization algorithms, with ongoing development to include pruning techniques. Users can design their configurations for quantization and evaluation, with documentation and examples planned for future updates. llmc is a valuable resource for researchers working on post-training quantization of large language models.

Awesome-Efficient-LLM

Awesome-Efficient-LLM is a curated list focusing on efficient large language models. It includes topics such as knowledge distillation, network pruning, quantization, inference acceleration, efficient MOE, efficient architecture of LLM, KV cache compression, text compression, low-rank decomposition, hardware/system, tuning, and survey. The repository provides a collection of papers and projects related to improving the efficiency of large language models through various techniques like sparsity, quantization, and compression.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

Awesome_LLM_System-PaperList

Since the emergence of ChatGPT in 2022, accelerating Large Language Models has become increasingly important. Here is a list of papers on LLM inference and serving.

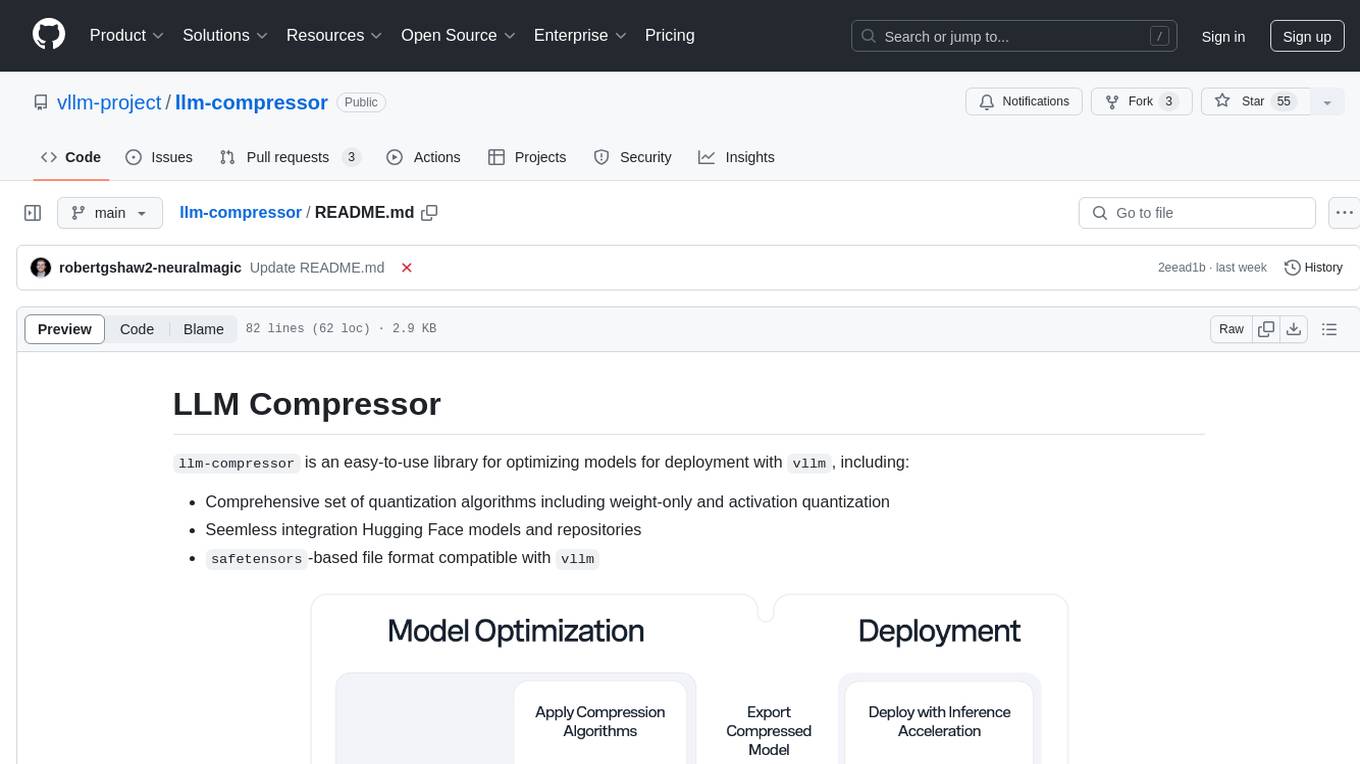

llm-compressor

llm-compressor is an easy-to-use library for optimizing models for deployment with vLLM. It provides a comprehensive set of quantization algorithms, seamless integration with Hugging Face models and repositories, and supports mixed precision, activation quantization, and sparsity. Supported algorithms include PTQ, GPTQ, SmoothQuant, and SparseGPT. Installation can be done via git clone and local pip install. Compression can be easily applied by selecting an algorithm and calling the oneshot API. The library also offers end-to-end examples for model compression. Contributions to the code, examples, integrations, and documentation are appreciated.

For similar jobs

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models and handles batching, streaming, and autoscaling across CPUs and GPUs. LitServe is built for enterprise scale with a focus on a minimal, hackable codebase without bloat. It supports various model types such as LLMs, vision, and time-series models, and works with frameworks like PyTorch, JAX, TensorFlow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

how-to-optim-algorithm-in-cuda

This repository documents how to optimize common algorithms based on CUDA. It includes subdirectories with code implementations for specific optimizations. The optimizations cover topics such as compiling PyTorch from source, NVIDIA's reduce optimization, OneFlow's elementwise template, fast atomic add for half data types, upsample nearest2d optimization in OneFlow, optimized indexing in PyTorch, OneFlow's softmax kernel, linear attention optimization, and more. The repository also includes learning resources related to deep learning frameworks, compilers, and optimization techniques.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

ENOVA

ENOVA is an open-source service for Large Language Model (LLM) deployment, monitoring, injection, and auto-scaling. It addresses challenges in deploying stable serverless LLM services on GPU clusters with auto-scaling by deconstructing the LLM service execution process and providing configuration recommendations and performance detection. Users can build and deploy LLM with few command lines, recommend optimal computing resources, experience LLM performance, observe operating status, achieve load balancing, and more. ENOVA ensures stable operation, cost-effectiveness, efficiency, and strong scalability of LLM services.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

vidur

Vidur is a high-fidelity and extensible LLM inference simulator designed for capacity planning, deployment configuration optimization, testing new research ideas, and studying system performance of models under different workloads and configurations. It supports various models and devices, offers chrome trace exports, and can be set up using mamba, venv, or conda. Users can run the simulator with various parameters and monitor metrics using wandb. Contributions are welcome, subject to a Contributor License Agreement and adherence to the Microsoft Open Source Code of Conduct.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.