ENOVA

A deployment, monitoring and autoscaling service towards serverless LLM serving.

Stars: 124

ENOVA is an open-source service for Large Language Model (LLM) deployment, monitoring, injection, and auto-scaling. It addresses challenges in deploying stable serverless LLM services on GPU clusters with auto-scaling by deconstructing the LLM service execution process and providing configuration recommendations and performance detection. Users can build and deploy LLM with few command lines, recommend optimal computing resources, experience LLM performance, observe operating status, achieve load balancing, and more. ENOVA ensures stable operation, cost-effectiveness, efficiency, and strong scalability of LLM services.

README:

ENOVA is an open-source service for LLM deployment, monitoring, injection, and auto-scaling. With the increasing popularity of large language model (LLM) backend systems, deploying stable serverless LLM services on GPU clusters with auto-scaling has become essential. However, challenges arise due to the diversity and co-location of applications in GPU clusters, leading to low service quality and GPU utilization.

To address these issues, ENOVA deconstructs the LLM service execution process and incorporates a configuration recommendation module for automatic deployment on any GPU cluster and a performance detection module for auto-scaling. Additionally, ENOVA features a deployment execution engine for efficient GPU cluster scheduling.

With ENOVA, users can:

- Build and deploy an LLM with only a few commands

- Get recommendations for optimal computing resources and operating parameter configurations for an LLM

- Quickly experience LLM performance through ENOVA's request injection

- Observe LLM operating status in depth and self-heal from anomalies

- Achieve load balancing through autoscaling

Here are ENOVA's core technical points and values:

- Configuration Recommendation: ENOVA can automatically identify various LLMs (open-source or fine-tuned) and recommend the most suitable parameter configurations for deploying the model, such as GPU type, maximum batch size, replicas, and weights.

- Performance Detection: ENOVA enables real-time monitoring of service quality and abnormal usage of computational resources.

- Deep Observability: By conducting in-depth observation of the entire chain of task execution of large models, we can provide the best guidance for maximizing model performance and optimizing the utilization of computing resources.

- Deployment & Execution: Deploy and serve models rapidly in support of the autoscaling objectives.

Based on the aforementioned capabilities of ENOVA, we can ensure that LLM services with ENOVA are:

- Stable: Achieve a high availability rate of over 99%, ensuring stable operation without downtime.

- Cost-effective: Increase resource utilization by over 50% and enhance comprehensive GPU memory utilization from 40% to 90%.

- Efficient: Boost deployment efficiency by over 10 times and run LLMs with lower latency and higher throughput.

- Strong Scalability: ENOVA can automatically cluster different task types, thus adapting to applications in many fields.

We can demonstrate the powerful capabilities of ENOVA in model deployment and performance monitoring by swiftly running an open-source AI model on your GPUs and conducting request injection tests.

- OS: Linux

- Docker

- Python: >=3.10

- GPU: Nvidia GPUs with compute capability 7.0 or higher

[!NOTE]

If the above conditions are not met, the installation and operation of ENOVA may fail. If you do not have available GPU resources, we recommend that you use the free GPU resources on Google Colab to install and experience ENOVA.
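Before installing, the requirements above can be checked with a short script. The following is a minimal sketch, not part of ENOVA: the function names `capability_ok` and `check_prerequisites` are hypothetical, and the GPU check only compares a compute capability string that you supply yourself (for example, one read from your GPU's specification).

```python
import platform
import shutil
import sys


def capability_ok(capability: str, minimum: tuple[int, int] = (7, 0)) -> bool:
    """Return True if a 'major.minor' compute capability meets the minimum."""
    major, minor = (int(part) for part in capability.strip().split("."))
    return (major, minor) >= minimum


def check_prerequisites() -> dict[str, bool]:
    """Check the OS, Docker, and Python requirements listed above."""
    return {
        "linux": platform.system() == "Linux",
        # Only checks that a `docker` executable is on PATH, not that the daemon runs.
        "docker": shutil.which("docker") is not None,
        "python>=3.10": sys.version_info >= (3, 10),
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

For example, `capability_ok("8.6")` is true while `capability_ok("6.1")` is false.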

# Create a new Python environment

conda create -n enova_env python=3.10

conda activate enova_env

# Install ENOVA

# Source: https://pypi.python.org/simple/

pip install enova_instrumentation_llmo

pip install enova

To verify the installation, run:

enova -h

The expected output is:

Usage: enova [OPTIONS] COMMAND [ARGS]...

███████╗███╗ ██╗ ██████╗ ██╗ ██╗ █████╗

██╔════╝████╗ ██║██╔═══██╗██║ ██║██╔══██╗

█████╗ ██╔██╗ ██║██║ ██║██║ ██║███████║

██╔══╝ ██║╚██╗██║██║ ██║╚██╗ ██╔╝██╔══██║

███████╗██║ ╚████║╚██████╔╝ ╚████╔╝ ██║ ██║

╚══════╝╚═╝ ╚═══╝ ╚═════╝ ╚═══╝ ╚═╝ ╚═╝

ENOVA is an open-source llm deployment, monitoring, injection and auto-scaling service.

It provides a set of commands to deploy stable serverless serving of LLM on GPU clusters with auto-scaling.

Options:

-v, --version Show the version and exit.

-h, --help Show this message and exit.

Commands:

algo Run the autoscaling service.

app Start ENOVA application server.

enode Deploy the target LLM and launch the LLM API service.

injector Run the autoscaling service.

mon Run the monitors of LLM server

pilot Start an all-in-one LLM server with deployment, monitoring,...

webui Build agent at this page based on the launched LLM API service.

- Start an all-in-one LLM server with deployment, monitoring, injection and auto-scaling service:

enova pilot run --model mistralai/Mistral-7B-Instruct-v0.1

# openai

enova pilot run --model mistralai/Mistral-7B-Instruct-v0.1 --vllm_mode openai

Use proxy to download LLMs:

enova pilot run --model mistralai/Mistral-7B-Instruct-v0.1 --hf_proxy xxx

[!TIP]

- The default port of LLM service is 9199.

- The default port of grafana server is 32827.

- The default port of LLM webUI server is 8501.

- The default port of ENOVA application server is 8182.

- Check Deployed LLM service via ENOVA Application Server:

http://localhost:8182/instance

- Test the Deployed LLM service with a prompt:

Use WebUI:

http://localhost:8501

Use Shell:

curl -X POST http://localhost:9199/generate \

-d '{

"prompt": "San Francisco is a",

"max_tokens": 1024,

"temperature": 0.9,

"top_p": 0.9

}'

# openai

curl http://localhost:9199/v1/completions \

-H "Content-Type: application/json" \

-d '{

"model": "mistralai/Mistral-7B-Instruct-v0.1",

"prompt": "San Francisco is a",

"max_tokens": 128,

"temperature": 0

}'

- Monitor the LLM Service Quality via ENOVA Application Server:

http://localhost:8182/instance

- Stop all services:

enova pilot stop --service all
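The two shell requests in the quickstart can also be issued from Python. The sketch below uses only the standard library and mirrors the endpoints, default port, and payloads shown in the curl commands. The helper names (`build_generate_request`, `build_completion_request`, `send`) are hypothetical, and sending `Content-Type: application/json` on the `/generate` request is an assumption, since the curl example for that endpoint sets no header.

```python
import json
import urllib.request

LLM_PORT = 9199  # default LLM service port listed in the quickstart tip


def _post_json(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},  # assumption for /generate
        method="POST",
    )


def build_generate_request(prompt: str, host: str = "localhost",
                           port: int = LLM_PORT) -> urllib.request.Request:
    """Mirror of the first curl example (Generate API)."""
    payload = {"prompt": prompt, "max_tokens": 1024, "temperature": 0.9, "top_p": 0.9}
    return _post_json(f"http://{host}:{port}/generate", payload)


def build_completion_request(model: str, prompt: str, host: str = "localhost",
                             port: int = LLM_PORT) -> urllib.request.Request:
    """Mirror of the second curl example (OpenAI-style completions)."""
    payload = {"model": model, "prompt": prompt, "max_tokens": 128, "temperature": 0}
    return _post_json(f"http://{host}:{port}/v1/completions", payload)


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a service running, `send(build_completion_request("mistralai/Mistral-7B-Instruct-v0.1", "San Francisco is a"))` would return the decoded response. Building the request separately from sending it makes the payload easy to inspect without a live server.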

In addition to offering an all-in-one solution for service deployment, monitoring, and autoscaling, ENOVA also provides support for single modules.

The LLM deployment service facilitates the deployment of LLMs and provides a stable API for accessing LLMs.

enova enode run --model mistralai/Mistral-7B-Instruct-v0.1

[!NOTE]

The LLM server is launched with the default vLLM backend. Both the OpenAI API and the Generate API are supported. vLLM configuration can be specified using command-line parameters, for example:

enova enode run --model mistralai/Mistral-7B-Instruct-v0.1 --host 127.0.0.1 --port 9199

The WebUI service provides a page for dialog interaction. The serving host and port of the LLM server, and the host and port of the WebUI service itself, are configurable parameters.

enova webui run --serving_host 127.0.0.1 --serving_port 9199 --host 127.0.0.1 --port 8501

The autoscaling service is automatically launched and managed by the escaler module.

We implemented a request injection module using JMeter to simulate real user requests for evaluating LLM performance. The module allows simulation of request arrival probabilities using two modes: Poisson distribution and normal distribution. Further details on the injection operation are available at:

http://localhost:8182/instance
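To illustrate what the two arrival modes mean, here is a small standalone sketch. It is not ENOVA's JMeter-based injector: the function names are hypothetical, and modeling the normal mode as normally distributed gaps between requests (clamped at zero) is an assumption. The Poisson mode uses the standard fact that a Poisson arrival process has exponentially distributed gaps with mean `1 / rate`.

```python
import random


def poisson_arrivals(rate_per_second: float, count: int, seed: int = 0) -> list[float]:
    """Arrival timestamps for a Poisson process: exponential gaps with mean 1/rate."""
    rng = random.Random(seed)
    now, arrivals = 0.0, []
    for _ in range(count):
        now += rng.expovariate(rate_per_second)
        arrivals.append(now)
    return arrivals


def normal_arrivals(mean_gap: float, stddev: float, count: int, seed: int = 0) -> list[float]:
    """Arrival timestamps whose gaps are normally distributed, clamped at zero."""
    rng = random.Random(seed)
    now, arrivals = 0.0, []
    for _ in range(count):
        # A normal sample can be negative; clamp so time never moves backwards.
        now += max(0.0, rng.gauss(mean_gap, stddev))
        arrivals.append(now)
    return arrivals


if __name__ == "__main__":
    print(poisson_arrivals(rate_per_second=5.0, count=5))
    print(normal_arrivals(mean_gap=0.2, stddev=0.05, count=5))
```

Both functions return increasing timestamps in seconds. A load generator would sleep until each timestamp before sending the next request. Fixing the seed makes a test run reproducible.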

The monitoring system is designed for monitoring and autoscaling, which contains real-time data collection, storage, and consumption. We can manage the LLM monitoring service via:

- Start the LLM monitoring service

enova mon start

- Check service status

enova mon status

- Stop the monitoring service

enova mon stop

Monitoring metrics are collected using the DCGM exporter, Prometheus exporters, and the OpenTelemetry collector. A brief description is provided in the following table. For more details, please refer to the Grafana dashboard.

| Metric Type | Metric Description |
|---|---|
| API Service | The number of requests sent to LLM services per second |
| API Service | The number of requests processed by LLM services per second |
| API Service | The number of requests successfully processed per second |
| API Service | The success rate of requests processed by LLM services per second |
| API Service | The number of requests being processed by LLM services |
| API Service | The average execution time per request processed by LLM services |
| API Service | The average request size of requests per second |
| API Service | The average response size of requests per second |
| LLM Performance | The average prompt throughput per second |
| LLM Performance | The average generation throughput per second |
| LLM Performance | The number of requests being processed by the deployed LLM |
| LLM Performance | The number of requests being pended by the deployed LLM |
| LLM Performance | The utilization ratio of memory allocated for KV cache |
| GPU Utilization | DCGM Metrics, like DCGM_FI_DEV_GPU_UTIL. |
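Several of the API Service rows in the table are rates derived from cumulative counters. As a rough illustration of how such values are typically computed from two counter samples, consider the sketch below. The `CounterSample` type and its field names are hypothetical and do not correspond to ENOVA's actual metric names. Treating an interval with no processed requests as a success rate of 1.0 is also an assumption.

```python
from dataclasses import dataclass


@dataclass
class CounterSample:
    timestamp: float  # sample time in seconds
    processed: int    # cumulative requests processed
    succeeded: int    # cumulative requests successfully processed


def request_rate(earlier: CounterSample, later: CounterSample) -> float:
    """Requests processed per second between two samples."""
    elapsed = later.timestamp - earlier.timestamp
    if elapsed <= 0:
        raise ValueError("samples must be in increasing time order")
    return (later.processed - earlier.processed) / elapsed


def success_rate(earlier: CounterSample, later: CounterSample) -> float:
    """Fraction of requests in the interval that succeeded."""
    processed = later.processed - earlier.processed
    if processed == 0:
        return 1.0  # assumption: no traffic counts as fully successful
    return (later.succeeded - earlier.succeeded) / processed


if __name__ == "__main__":
    a = CounterSample(timestamp=0.0, processed=100, succeeded=95)
    b = CounterSample(timestamp=10.0, processed=150, succeeded=140)
    print(request_rate(a, b), success_rate(a, b))
```

In the example, 50 requests are processed over 10 seconds, of which 45 succeed.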

More detailed metrics can be viewed on our application server. When deploying the all-in-one LLM service, ENOVA also creates the corresponding metrics dashboard in Grafana and supports viewing more detailed trace data.

- URL: http://localhost:32827/dashboards

- Default user account: admin

- Password: grafana

@inproceedings{tao2024ENOVA,

title={ENOVA: Autoscaling towards Cost-effective and Stable Serverless LLM Serving},

author={Tao Huang and Pengfei Chen and Kyoka Gong and Jocky Hawk and Zachary Bright and Wenxin Xie and Kecheng Huang and Zhi Ji},

booktitle={arXiv preprint arXiv:},

year={2024}

}

We use a Slack workspace to collaborate on building ENOVA.

- Slack workspace

- Browse our website for more information

Alternative AI tools for ENOVA

Similar Open Source Tools

proton

Proton is the fastest SQL pipeline engine in a single C++ binary, designed for stream processing, analytics, observability, and AI. It provides a simple, fast, and efficient alternative to ksqlDB and Apache Flink, powered by ClickHouse engine. Proton offers native source/sink support for various databases, streaming ingestion, multi-stream JOINs, incremental materialized views, alerting, tasks, and UDF in Python/JS. It is lightweight, with no JVM or dependencies, and offers high performance through SIMD optimization. Proton is ideal for real-time analytics ETL/pipeline, telemetry pipeline and alerting, real-time feature pipeline for AI/ML, and more.

OpenManus

OpenManus is an open-source project aiming to replicate the capabilities of the Manus AI agent, known for autonomously executing complex tasks like travel planning and stock analysis. The project provides a modular, containerized framework using Docker, Python, and JavaScript, allowing developers to build, deploy, and experiment with a multi-agent AI system. Features include collaborative AI agents, Dockerized environment, task execution support, tool integration, modular design, and community-driven development. Users can interact with OpenManus via CLI, API, or web UI, and the project welcomes contributions to enhance its capabilities.

ccprompts

ccprompts is a collection of ~70 Claude Code commands for software development workflows with agent generation capabilities. It includes safety validation and can be used directly with Claude Code or adapted for specific needs. The agent template system provides a wizard for creating specialized sub-agents (e.g., security auditors, systems architects) with standardized formatting and proper tool access. The repository is under active development, so caution is advised when using it in production environments.

Kuzco

Enhance your Terraform and OpenTofu configurations with intelligent analysis powered by local LLMs. Kuzco reviews your resources, compares them to the provider schema, detects unused parameters, and suggests improvements for a more secure, reliable, and optimized setup. It saves time by avoiding the need to dig through the Terraform registry and decipher unclear options.

cookiecutter-data-science

Cookiecutter Data Science (CCDS) is a tool for setting up a data science project template that incorporates best practices. It provides a logical, reasonably standardized but flexible project structure for doing and sharing data science work. The tool helps users to easily start new data science projects with a well-organized directory structure, including folders for data, models, notebooks, reports, and more. By following the project template created by CCDS, users can streamline their data science workflow and ensure consistency across projects.

agentkit

AgentKit is a framework developed by Coinbase Developer Platform for enabling AI agents to take actions onchain. It is designed to be framework-agnostic and wallet-agnostic, allowing users to integrate it with any AI framework and any wallet. The tool is actively being developed and encourages community contributions. AgentKit provides support for various protocols, frameworks, wallets, and networks, making it versatile for blockchain transactions and API integrations using natural language inputs.

openkf

OpenKF (Open Knowledge Flow) is an online intelligent customer service system. It is an open-source customer service system based on OpenIM, supporting LLM (Local Knowledgebase) customer service and multi-channel customer service. It is easy to integrate with third-party systems, deploy, and perform secondary development. The system provides features like login page, config page, dashboard page, platform page, and session page. Users can quickly get started with OpenKF by following the installation and run instructions. The architecture follows MVC design with a standardized directory structure. The community encourages involvement through community meetings, contributions, and development. OpenKF is licensed under the Apache 2.0 license.

nodejs-todo-api-boilerplate

An LLM-powered code generation tool that relies on the built-in Node.js API Typescript Template Project to easily generate clean, well-structured CRUD module code from text description. It orchestrates 3 LLM micro-agents (`Developer`, `Troubleshooter` and `TestsFixer`) to generate code, fix compilation errors, and ensure passing E2E tests. The process includes module code generation, DB migration creation, seeding data, and running tests to validate output. By cycling through these steps, it guarantees consistent and production-ready CRUD code aligned with vertical slicing architecture.

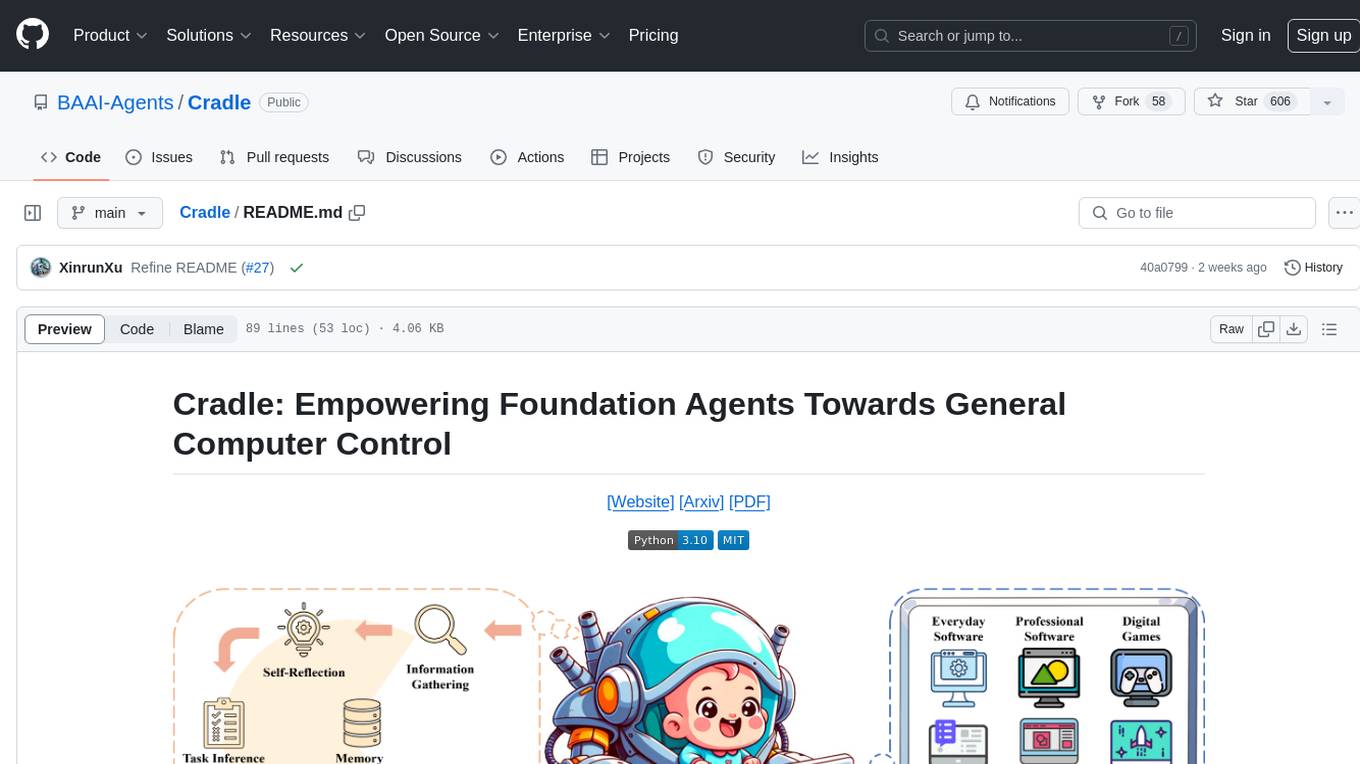

Cradle

The Cradle project is a framework designed for General Computer Control (GCC), empowering foundation agents to excel in various computer tasks through strong reasoning abilities, self-improvement, and skill curation. It provides a standardized environment with minimal requirements, constantly evolving to support more games and software. The repository includes released versions, publications, and relevant assets.

eliza

Eliza is a versatile AI agent operating system designed to support various models and connectors, enabling users to create chatbots, autonomous agents, handle business processes, create video game NPCs, and engage in trading. It offers multi-agent and room support, document ingestion and interaction, retrievable memory and document store, and extensibility to create custom actions and clients. Eliza is easy to use and provides a comprehensive solution for AI agent development.

clearml-server

ClearML Server is a backend service infrastructure for ClearML, facilitating collaboration and experiment management. It includes a web app, RESTful API, and file server for storing images and models. Users can deploy ClearML Server using Docker, AWS EC2 AMI, or Kubernetes. The system design supports single IP or sub-domain configurations with specific open ports. ClearML-Agent Services container allows launching long-lasting jobs and various use cases like auto-scaler service, controllers, optimizer, and applications. Advanced functionality includes web login authentication and non-responsive experiments watchdog. Upgrading ClearML Server involves stopping containers, backing up data, downloading the latest docker-compose.yml file, configuring ClearML-Agent Services, and spinning up docker containers. Community support is available through ClearML FAQ, Stack Overflow, GitHub issues, and email contact.

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

y-gui

y-gui is a web-based graphical interface for AI chat interactions with support for multiple AI models and powerful MCP integrations. It provides interactive chat capabilities with AI models, supports multiple bot configurations, and integrates with Gmail, Google Calendar, and image generation services. The tool offers a comprehensive MCP integration system, secure authentication with Auth0 and Google login, dark/light theme support, real-time updates, and responsive design for all devices. The architecture consists of a frontend React application and a backend Cloudflare Workers with D1 storage. It allows users to manage emails, create calendar events, and generate images directly within chat conversations.

trendFinder

Trend Finder is a tool designed to help users stay updated on trending topics on social media by collecting and analyzing posts from key influencers. It sends Slack notifications when new trends or product launches are detected, saving time, keeping users informed, and enabling quick responses to emerging opportunities. The tool features AI-powered trend analysis, social media and website monitoring, instant Slack notifications, and scheduled monitoring using cron jobs. Built with Node.js and Express.js, Trend Finder integrates with Together AI, Twitter/X API, Firecrawl, and Slack Webhooks for notifications.

kubewall

kubewall is an open-source, single-binary Kubernetes dashboard with multi-cluster management and AI integration. It provides a simple and rich real-time interface to manage and investigate your clusters. With features like multi-cluster management, AI-powered troubleshooting, real-time monitoring, single-binary deployment, in-depth resource views, browser-based access, search and filter capabilities, privacy by default, port forwarding, live refresh, aggregated pod logs, and clean resource management, kubewall offers a comprehensive solution for Kubernetes cluster management.

For similar tasks

ai-app

The 'ai-app' repository is a comprehensive collection of tools and resources related to artificial intelligence, focusing on topics such as server environment setup, PyCharm and Anaconda installation, large model deployment and training, Transformer principles, RAG technology, vector databases, AI image, voice, and music generation, and AI Agent frameworks. It also includes practical guides and tutorials on implementing various AI applications. The repository serves as a valuable resource for individuals interested in exploring different aspects of AI technology.

step_into_llm

The 'step_into_llm' repository is dedicated to the 昇思MindSpore technology open class, which focuses on exploring cutting-edge technologies, combining theory with practical applications, expert interpretations, open sharing, and empowering competitions. The repository contains course materials, including slides and code, for the ongoing second phase of the course. It covers various topics related to large language models (LLMs) such as Transformer, BERT, GPT, GPT2, and more. The course aims to guide developers interested in LLMs from theory to practical implementation, with a special emphasis on the development and application of large models.

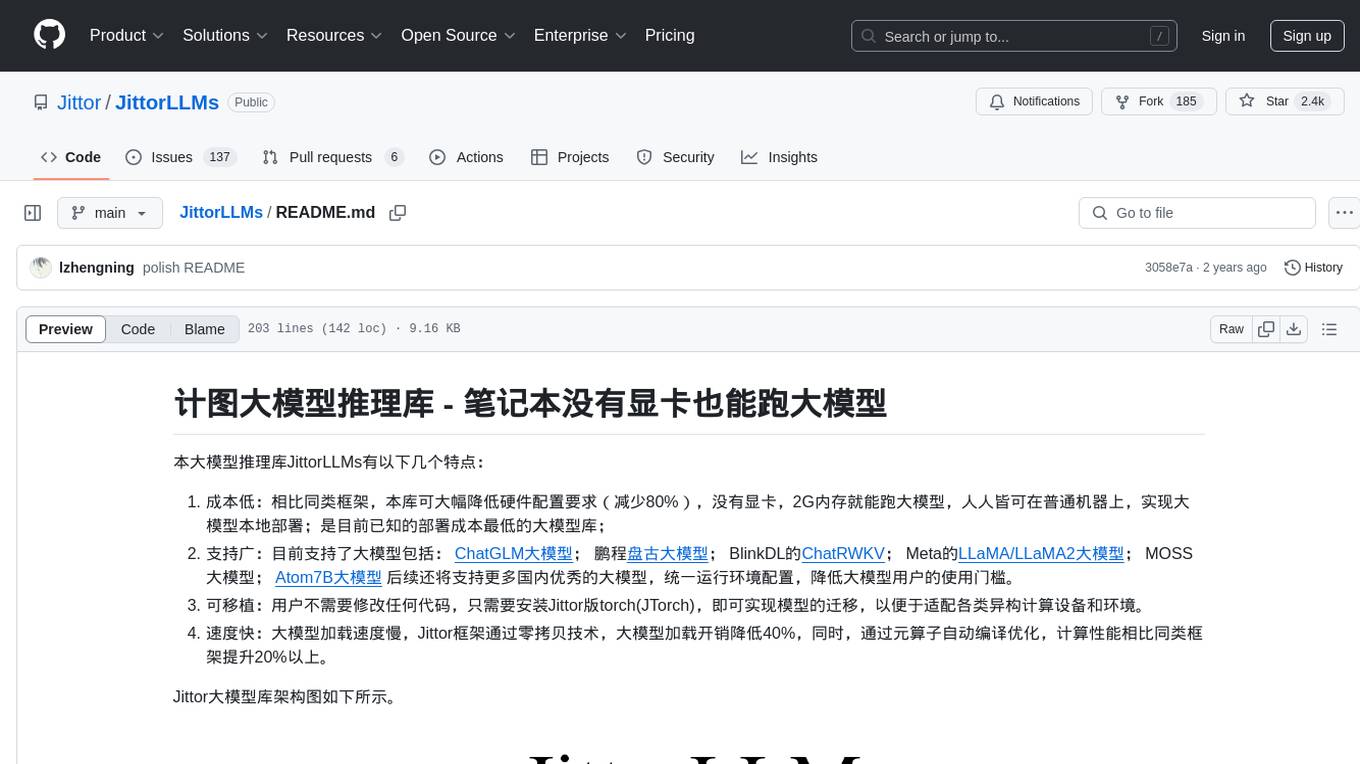

JittorLLMs

JittorLLMs is a large model inference library that allows running large models on machines with low hardware requirements. It significantly reduces hardware configuration demands, enabling deployment on ordinary machines with 2GB of memory. It supports various large models and provides a unified environment configuration for users. Users can easily migrate models without modifying any code by installing Jittor version of torch (JTorch). The framework offers fast model loading speed, optimized computation performance, and portability across different computing devices and environments.

xllm

xLLM is an efficient LLM inference framework optimized for Chinese AI accelerators, enabling enterprise-grade deployment with enhanced efficiency and reduced cost. It adopts a service-engine decoupled inference architecture, achieving breakthrough efficiency through technologies like elastic scheduling, dynamic PD disaggregation, multi-stream parallel computing, graph fusion optimization, and global KV cache management. xLLM supports deployment of mainstream large models on Chinese AI accelerators, empowering enterprises in scenarios like intelligent customer service, risk control, supply chain optimization, ad recommendation, and more.

For similar jobs

llm-resource

llm-resource is a comprehensive collection of high-quality resources for Large Language Models (LLM). It covers various aspects of LLM including algorithms, training, fine-tuning, alignment, inference, data engineering, compression, evaluation, prompt engineering, AI frameworks, AI basics, AI infrastructure, AI compilers, LLM application development, LLM operations, AI systems, and practical implementations. The repository aims to gather and share valuable resources related to LLM for the community to benefit from.

LitServe

LitServe is a high-throughput serving engine designed for deploying AI models at scale. It generates an API endpoint for models, handles batching, streaming, and autoscaling across CPU/GPUs. LitServe is built for enterprise scale with a focus on minimal, hackable code-base without bloat. It supports various model types like LLMs, vision, time-series, and works with frameworks like PyTorch, JAX, Tensorflow, and more. The tool allows users to focus on model performance rather than serving boilerplate, providing full control and flexibility.

how-to-optim-algorithm-in-cuda

This repository documents how to optimize common algorithms based on CUDA. It includes subdirectories with code implementations for specific optimizations. The optimizations cover topics such as compiling PyTorch from source, NVIDIA's reduce optimization, OneFlow's elementwise template, fast atomic add for half data types, upsample nearest2d optimization in OneFlow, optimized indexing in PyTorch, OneFlow's softmax kernel, linear attention optimization, and more. The repository also includes learning resources related to deep learning frameworks, compilers, and optimization techniques.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

jina

Jina is a tool that allows users to build multimodal AI services and pipelines using cloud-native technologies. It provides a Pythonic experience for serving ML models and transitioning from local deployment to advanced orchestration frameworks like Docker-Compose, Kubernetes, or Jina AI Cloud. Users can build and serve models for any data type and deep learning framework, design high-performance services with easy scaling, serve LLM models while streaming their output, integrate with Docker containers via Executor Hub, and host on CPU/GPU using Jina AI Cloud. Jina also offers advanced orchestration and scaling capabilities, a smooth transition to the cloud, and easy scalability and concurrency features for applications. Users can deploy to their own cloud or system with Kubernetes and Docker Compose integration, and even deploy to JCloud for autoscaling and monitoring.

vidur

Vidur is a high-fidelity and extensible LLM inference simulator designed for capacity planning, deployment configuration optimization, testing new research ideas, and studying system performance of models under different workloads and configurations. It supports various models and devices, offers chrome trace exports, and can be set up using mamba, venv, or conda. Users can run the simulator with various parameters and monitor metrics using wandb. Contributions are welcome, subject to a Contributor License Agreement and adherence to the Microsoft Open Source Code of Conduct.

AI-System-School

AI System School is a curated list of research in machine learning systems, focusing on ML/DL infra, LLM infra, domain-specific infra, ML/LLM conferences, and general resources. It provides resources such as data processing, training systems, video systems, autoML systems, and more. The repository aims to help users navigate the landscape of AI systems and machine learning infrastructure, offering insights into conferences, surveys, books, videos, courses, and blogs related to the field.