nous

TypeScript AI agent platform with Autonomous agents, Software developer agents, AI code review agents and more

Stars: 766

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

README:

The open-source TypeScript platform for autonomous AI agents and LLM based workflows

Nous (Greek: νοῦς) is a term from classical philosophy often associated with intellect or intelligence, represents the human mind's capacity to comprehend truth and reality.Home | Setup | Observability | Function calling | Autonomous AI Agent | AI Software Engineer | AI Code reviews | Tools/Integrations | Roadmap

The Nous Story | Features | UI Examples | Code examples | Contributing

Nous started from a simple goal: to harness AI's potential to enhance real-world productivity, born in DevOps and Platform Engineering space. We envisioned a tool that could:

- Automate various processes and support requests, and triage build failures.

- Review code for compliance with standards and best practices.

- Assist with large refactorings, and more.

At TrafficGuard we process billions of events a month for our global clients, increasing their ad spend ROI by protecting against bots and other forms of invalid traffic. Our SaaS on GCP comprises projects developed in TypeScript, Python, GoogleSQL, PHP and Terraform, deployed from GitLab.

With open source projects typically Python/GitHub focused, and the vendor AI tools being focused in their silos, we saw a need for TypeScript based tooling which can work across our entire tech stack, and understand the overall architecture.

Through its evolution we've designed nous as a flexible platform for the TypeScript community to expand and support the use cases and integrations of your choice.

Our design choice of Firestore for the initial database implementation, with Cloud Run, provides a scale-to-zero solution with zero-cost using the free tier. With the intention to support uses cases such as your own custom personal assistant, always available via mobile.

Key features include:

- Advanced autonomous agents

- Reasoning/planning inspired from Google's Self-Discover paper

- Memory and function call history for complex, multi-step workflows

- Adaptive iterative planning with hierarchical task decomposition

- Two control-loop function calling options (LLM-independent):

- Custom XML-based function calling

- Dynamic code generation with sandboxed execution for multistep function calling and logic

- Opportunistically can significantly reduce cost and latency over LLM-native/XML function calling

- LLM function schemas auto-generated from source code

- Function callable integrations:

- Filesystem, Jira, Slack, Perplexity, Gitlab, GitHub and more

- Supports multiple LLMs/Services:

- OpenAI, Anthropic (native & Vertex), Gemini, Groq, Fireworks, Together.ai, DeepSeek, Ollama

- CLI and Web interface

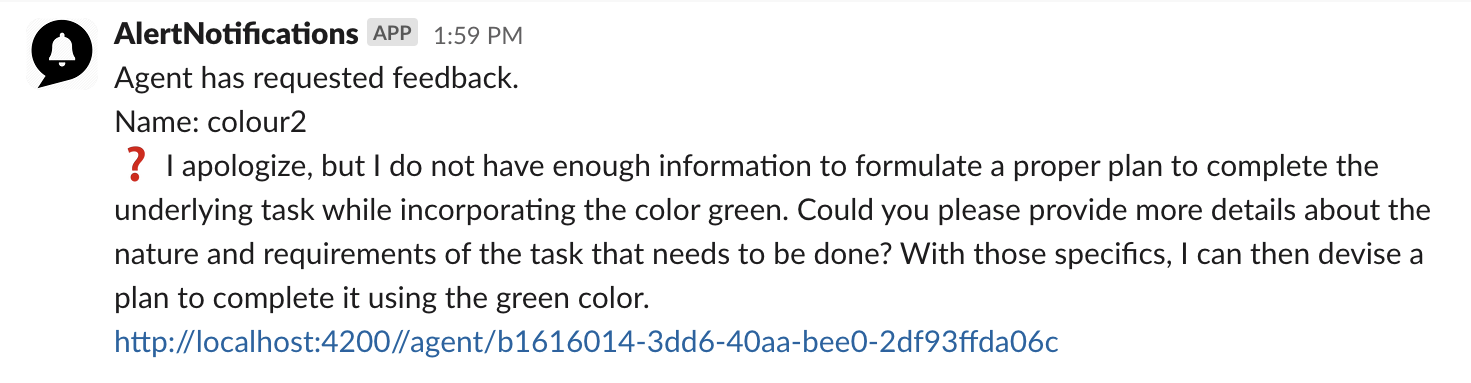

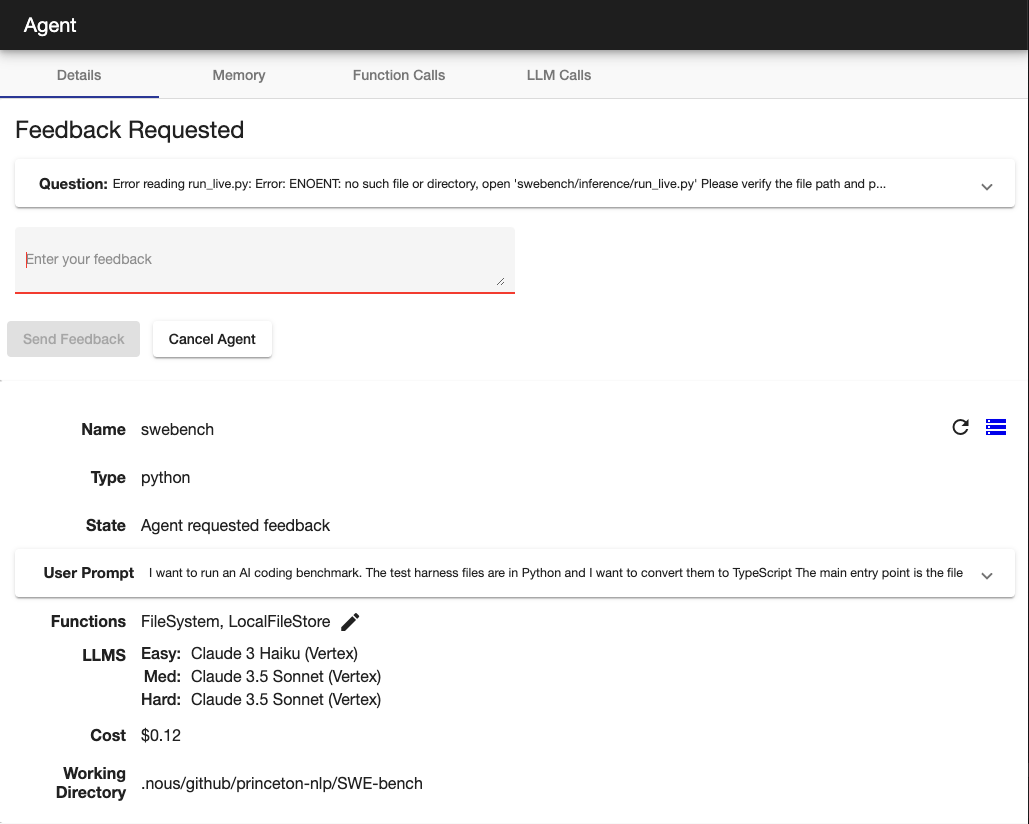

- Human-in-the-loop for:

- Budget control

- Agent initiated questions

- Error handling

- Flexible deployment options:

- Run locally from the command line or through the web UI

- Scale-to-zero deployment on Firestore & Cloud Run

- Multi-user SSO enterprise deployment (with Google Cloud IAP)

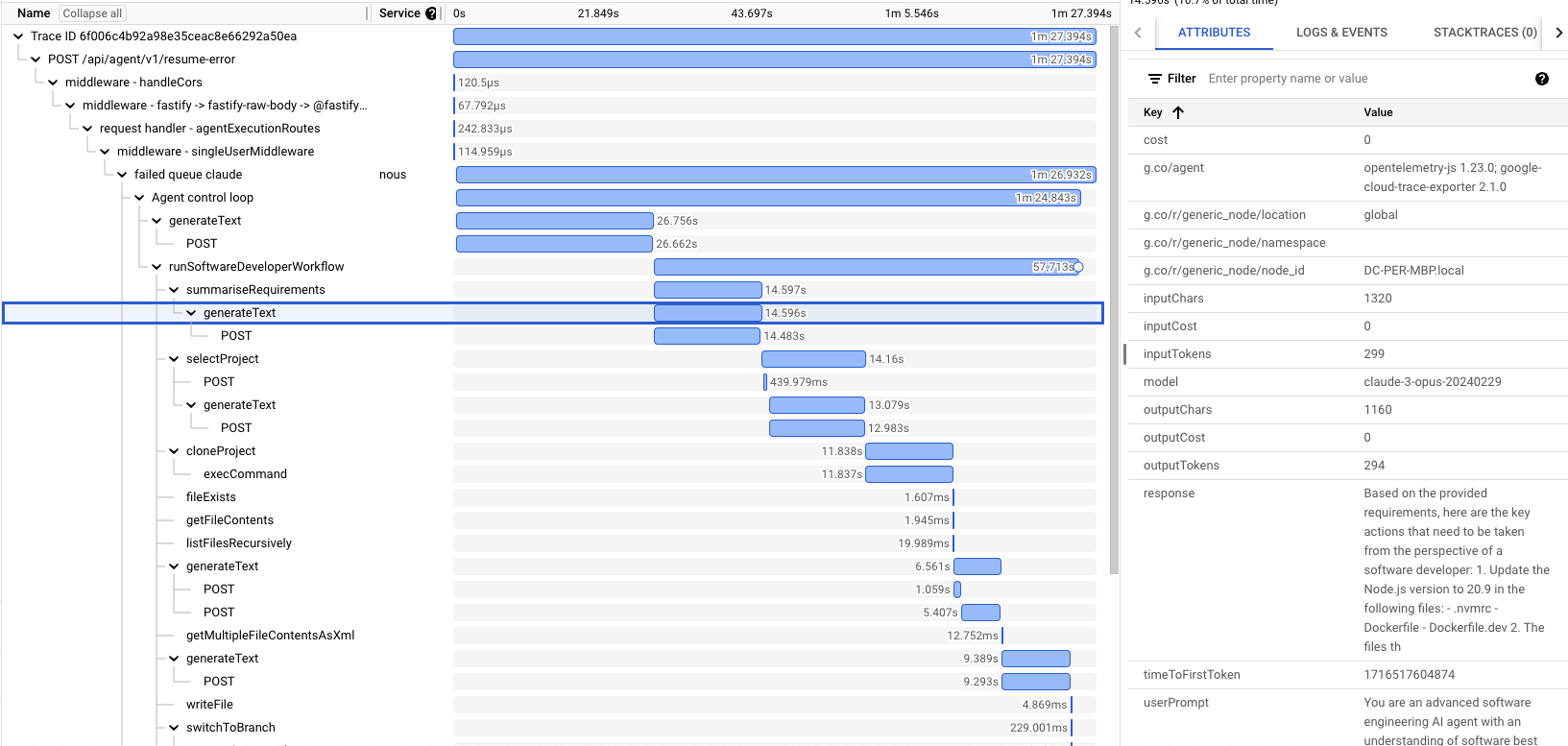

- Observability with OpenTelemetry tracing

- Code Editing Agent:

- Auto-detection of project initialization, compile, test and lint

- Find the relevant files to edit and perform initial analysis

- Code editing loop with compile, lint, test, fix (editing delegates to Aider)

- Compile error analyser can search online, add additional files and packages

- Software Engineer Agent:

- Find the appropriate repository from GitLab/GitHub

- Clone and create branch

- Call the Code Editing Agent

- Create merge request

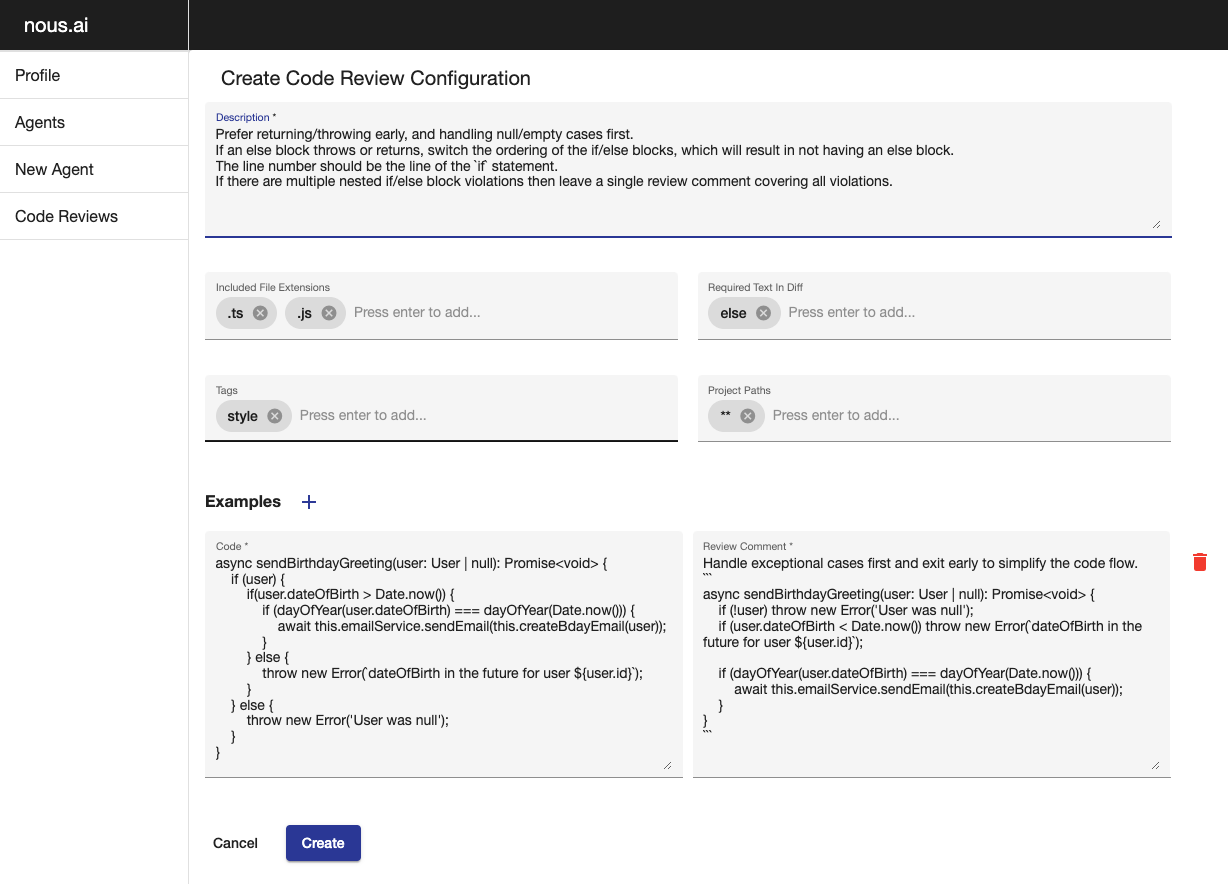

- Code Review agent:

- Configurable code review guidelines

- Posts comments on GitLab merge requests at the appropriate line with suggested changes

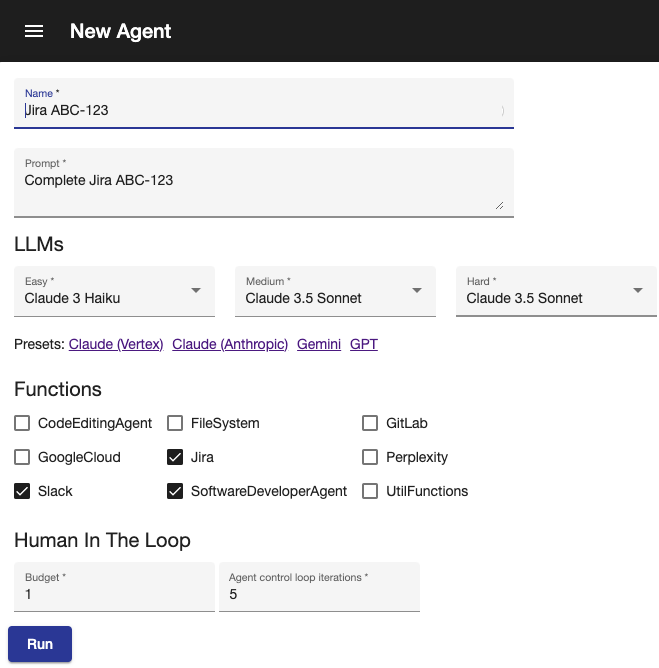

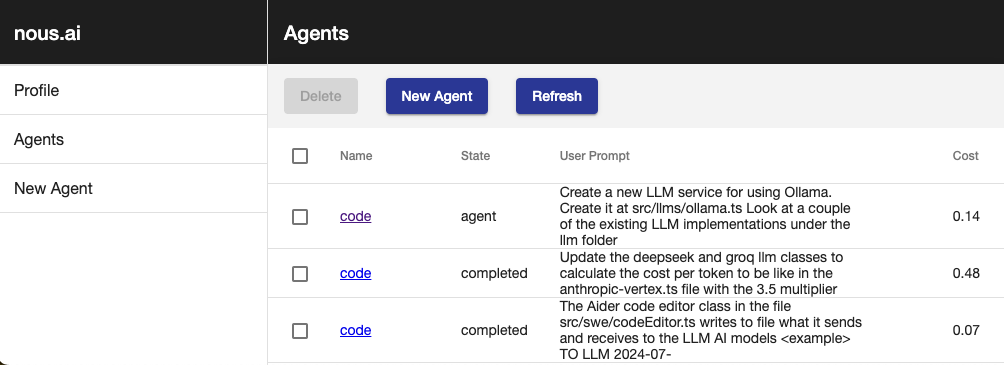

New Agent | Sample trace | Human in the loop notification | Agent requested feedback | List agents | Code review config

Nous doesn't use LangChain, for many reasons that you can read online

Let's compare the LangChain document example for Multiple Chains to the equivalent Nous implementation.

import { PromptTemplate } from "@langchain/core/prompts";

import { RunnableSequence } from "@langchain/core/runnables";

import { StringOutputParser } from "@langchain/core/output_parsers";

import { ChatAnthropic } from "@langchain/anthropic";

const prompt1 = PromptTemplate.fromTemplate(

`What is the city {person} is from? Only respond with the name of the city.`

);

const prompt2 = PromptTemplate.fromTemplate(

`What country is the city {city} in? Respond in {language}.`

);

const model = new ChatAnthropic({});

const chain = prompt1.pipe(model).pipe(new StringOutputParser());

const combinedChain = RunnableSequence.from([

{

city: chain,

language: (input) => input.language,

},

prompt2,

model,

new StringOutputParser(),

]);

const result = await combinedChain.invoke({

person: "Obama",

language: "German",

});

console.log(result);import { llms } from '#agent/context'

import { anthropicLLMs } from '#llms/anthropic'

const prompt1 = (person: string) => `What is the city ${person} is from? Only respond with the name of the city.`;

const prompt2 = (city: string, language: string) => `What country is the city ${city} in? Respond in ${language}.`;

runAgentWorkflow({ llms: anthropicLLMs() }, async () => {

const city = await llms().easy.generateText(prompt1('Obama'));

const result = await llms().easy.generateText(prompt2(city, 'German'));

console.log(result);

});The Nous code also has the advantage of static typing with the prompt arguments, enabling you to refactor with ease. Using simple control flow allows easy debugging with breakpoints/logging.

To run a fully autonomous agent:

startAgent({

agentName: 'Create ollama',

initialPrompt: 'Research how to use ollama using node.js and create a new implementation under the llm folder. Look at a couple of the other files in that folder for the style which must be followed',

functions: [FileSystem, Perplexity, CodeEditinAgent],

llms,

});The Nous code also has the advantage of static typing with the prompt arguments, enabling you to refactor with ease. Using simple control flow allows easy debugging with breakpoints/logging.

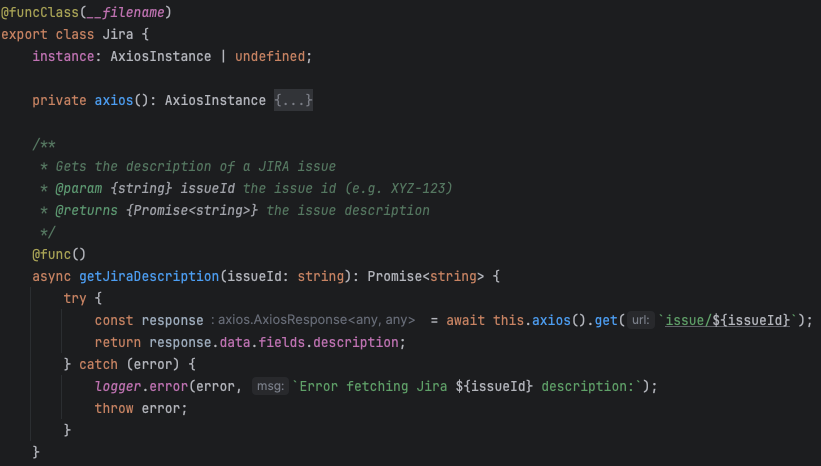

LLM function calling schemas are automatically generated by having the @func decorator on class methods.

Visit our documentation site for the getting started guide and more details.

We warmly welcome contributions to the project through issues, pull requests or discussions

Contributed by TrafficGuard - Increasing the ROI on your ad spend.

Reach out to us as [email protected] if you'd like support to ramp up as a contributor.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for nous

Similar Open Source Tools

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

neuron-ai

Neuron is a PHP framework for creating and orchestrating AI Agents, providing tools for the entire agentic application development lifecycle. It allows integration of AI entities in existing PHP applications with a powerful and flexible architecture. Neuron offers tutorials and educational content to help users get started using AI Agents in their projects. The framework supports various LLM providers, tools, and toolkits, enabling users to create fully functional agents for tasks like data analysis, chatbots, and structured output. Neuron also facilitates monitoring and debugging of AI applications, ensuring control over agent behavior and decision-making processes.

agents

The LiveKit Agent Framework is designed for building real-time, programmable participants that run on servers. Easily tap into LiveKit WebRTC sessions and process or generate audio, video, and data streams. The framework includes plugins for common workflows, such as voice activity detection and speech-to-text. Agents integrates seamlessly with LiveKit server, offloading job queuing and scheduling responsibilities to it. This eliminates the need for additional queuing infrastructure. Agent code developed on your local machine can scale to support thousands of concurrent sessions when deployed to a server in production.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

tinyllm

tinyllm is a lightweight framework designed for developing, debugging, and monitoring LLM and Agent powered applications at scale. It aims to simplify code while enabling users to create complex agents or LLM workflows in production. The core classes, Function and FunctionStream, standardize and control LLM, ToolStore, and relevant calls for scalable production use. It offers structured handling of function execution, including input/output validation, error handling, evaluation, and more, all while maintaining code readability. Users can create chains with prompts, LLM models, and evaluators in a single file without the need for extensive class definitions or spaghetti code. Additionally, tinyllm integrates with various libraries like Langfuse and provides tools for prompt engineering, observability, logging, and finite state machine design.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

multi-agent-orchestrator

Multi-Agent Orchestrator is a flexible and powerful framework for managing multiple AI agents and handling complex conversations. It intelligently routes queries to the most suitable agent based on context and content, supports dual language implementation in Python and TypeScript, offers flexible agent responses, context management across agents, extensible architecture for customization, universal deployment options, and pre-built agents and classifiers. It is suitable for various applications, from simple chatbots to sophisticated AI systems, accommodating diverse requirements and scaling efficiently.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

aiscript

AIScript is a unique programming language and web framework written in Rust, designed to help developers effortlessly build AI applications. It combines the strengths of Python, JavaScript, and Rust to create an intuitive, powerful, and easy-to-use tool. The language features first-class functions, built-in AI primitives, dynamic typing with static type checking, data validation, error handling inspired by Rust, a rich standard library, and automatic garbage collection. The web framework offers an elegant route DSL, automatic parameter validation, OpenAPI schema generation, database modules, authentication capabilities, and more. AIScript excels in AI-powered APIs, prototyping, microservices, data validation, and building internal tools.

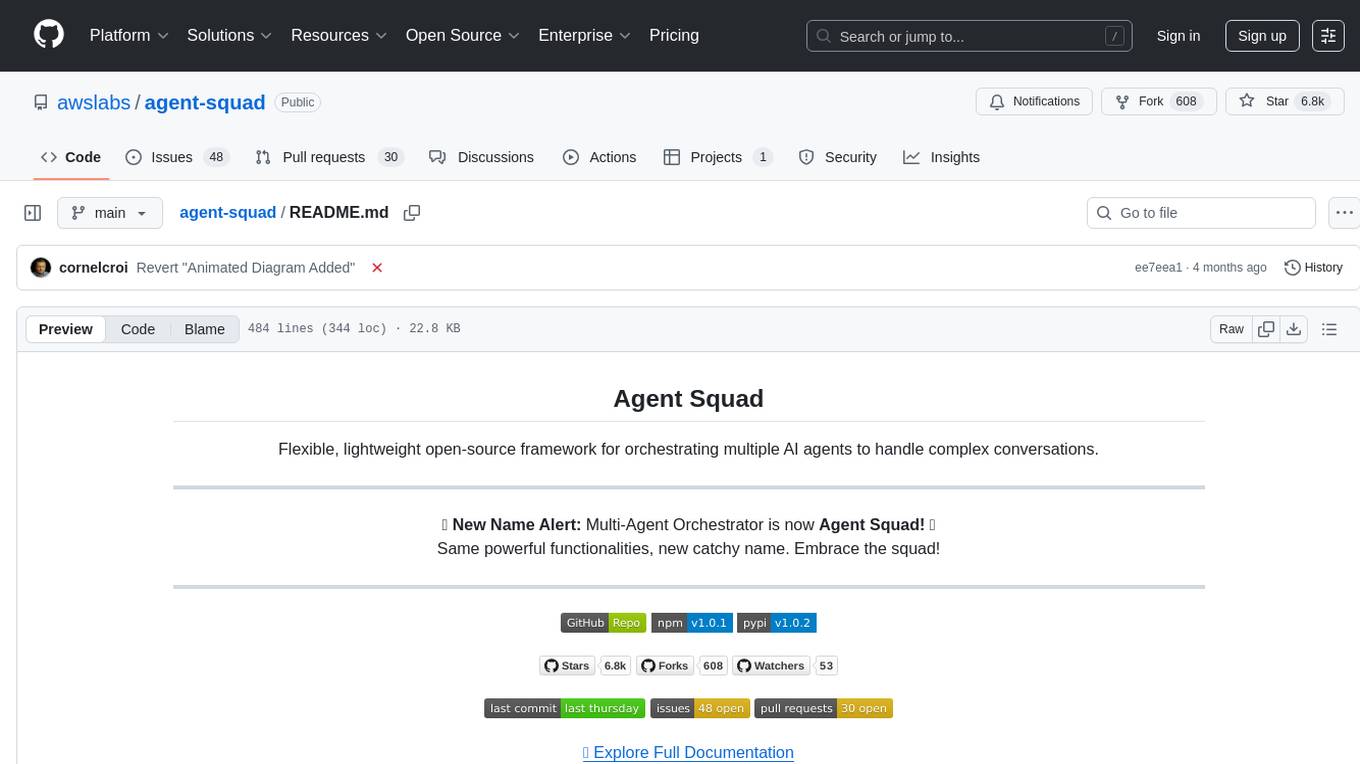

agent-squad

Agent Squad is a flexible, lightweight open-source framework for orchestrating multiple AI agents to handle complex conversations. It intelligently routes queries, maintains context across interactions, and offers pre-built components for quick deployment. The system allows easy integration of custom agents and conversation messages storage solutions, making it suitable for various applications from simple chatbots to sophisticated AI systems, scaling efficiently.

For similar tasks

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

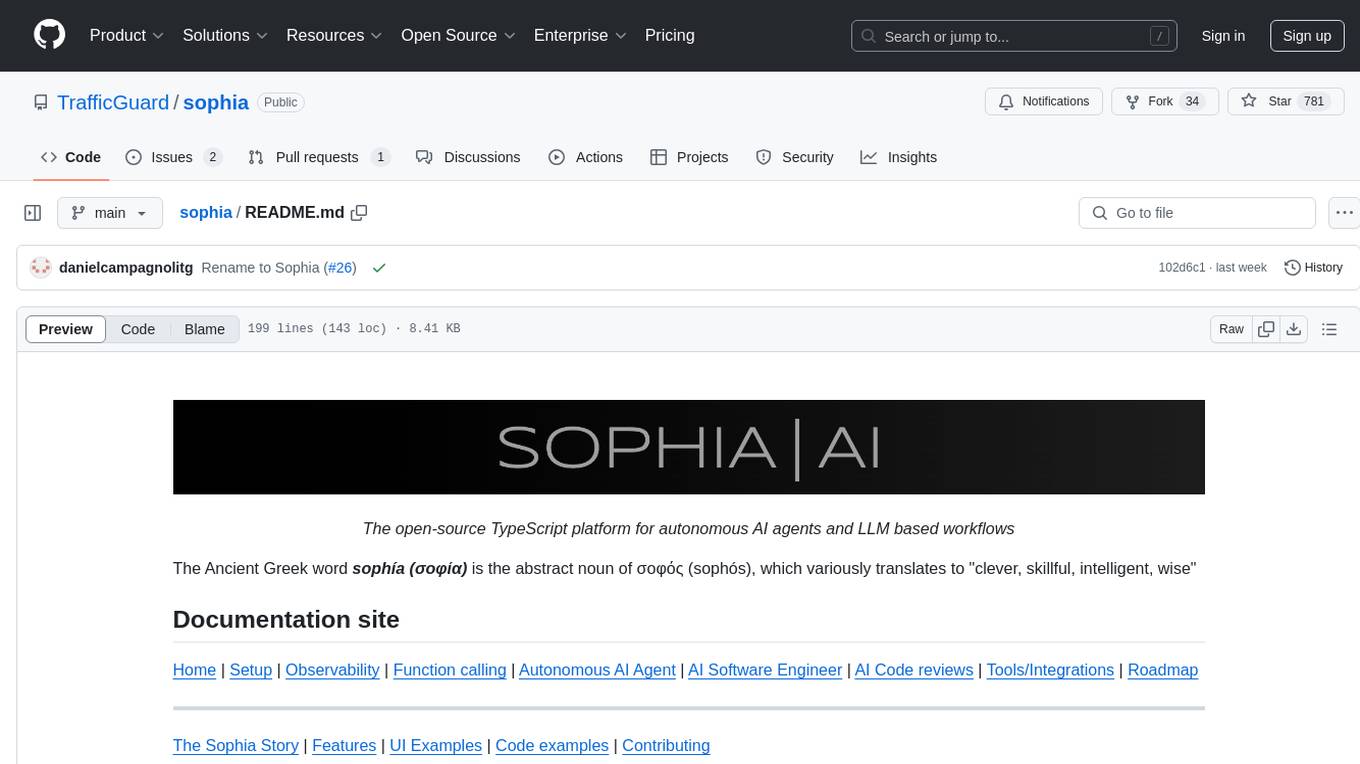

sophia

Sophia is an open-source TypeScript platform designed for autonomous AI agents and LLM based workflows. It aims to automate processes, review code, assist with refactorings, and support various integrations. The platform offers features like advanced autonomous agents, reasoning/planning inspired by Google's Self-Discover paper, memory and function call history, adaptive iterative planning, and more. Sophia supports multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It provides a flexible platform for the TypeScript community to expand and support various use cases and integrations.

unstract

Unstract is a no-code platform that enables users to launch APIs and ETL pipelines to structure unstructured documents. With Unstract, users can go beyond co-pilots by enabling machine-to-machine automation. Unstract's Prompt Studio provides a simple, no-code approach to creating prompts for LLMs, vector databases, embedding models, and text extractors. Users can then configure Prompt Studio projects as API deployments or ETL pipelines to automate critical business processes that involve complex documents. Unstract supports a wide range of LLM providers, vector databases, embeddings, text extractors, ETL sources, and ETL destinations, providing users with the flexibility to choose the best tools for their needs.

mslearn-knowledge-mining

The mslearn-knowledge-mining repository contains lab files for Azure AI Knowledge Mining modules. It provides resources for learning and implementing knowledge mining techniques using Azure AI services. The repository is designed to help users explore and understand how to leverage AI for knowledge mining purposes within the Azure ecosystem.

LLMs-in-Finance

This repository focuses on the application of Large Language Models (LLMs) in the field of finance. It provides insights and knowledge about how LLMs can be utilized in various scenarios within the finance industry, particularly in generating AI agents. The repository aims to explore the potential of LLMs to enhance financial processes and decision-making through the use of advanced natural language processing techniques.

docq

Docq is a private and secure GenAI tool designed to extract knowledge from business documents, enabling users to find answers independently. It allows data to stay within organizational boundaries, supports self-hosting with various cloud vendors, and offers multi-model and multi-modal capabilities. Docq is extensible, open-source (AGPLv3), and provides commercial licensing options. The tool aims to be a turnkey solution for organizations to adopt AI innovation safely, with plans for future features like more data ingestion options and model fine-tuning.

Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

clearml

ClearML is an auto-magical suite of tools designed to streamline AI workflows. It includes modules for experiment management, MLOps/LLMOps, data management, model serving, and more. ClearML offers features like experiment tracking, model serving, orchestration, and automation. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm for remote debugging. ClearML aims to simplify collaboration, automate processes, and enhance visibility in AI projects.

For similar jobs

alan-sdk-ios

Alan AI SDK for iOS is a powerful tool that allows developers to quickly create AI agents for their iOS apps. With Alan AI Platform, users can easily design, embed, and host conversational experiences in their applications. The platform offers a web-based IDE called Alan AI Studio for creating dialog scenarios, lightweight SDKs for embedding AI agents, and a backend powered by top-notch speech recognition and natural language understanding technologies. Alan AI enables human-like conversations and actions through voice commands, with features like on-the-fly updates, dialog flow testing, and analytics.

EvoMaster

EvoMaster is an open-source AI-driven tool that automatically generates system-level test cases for web/enterprise applications. It uses an Evolutionary Algorithm and Dynamic Program Analysis to evolve test cases, maximizing code coverage and fault detection. The tool supports REST, GraphQL, and RPC APIs, with whitebox testing for JVM-compiled languages. It generates JUnit tests, detects faults, handles SQL databases, and supports authentication. EvoMaster has been funded by the European Research Council and the Research Council of Norway.

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

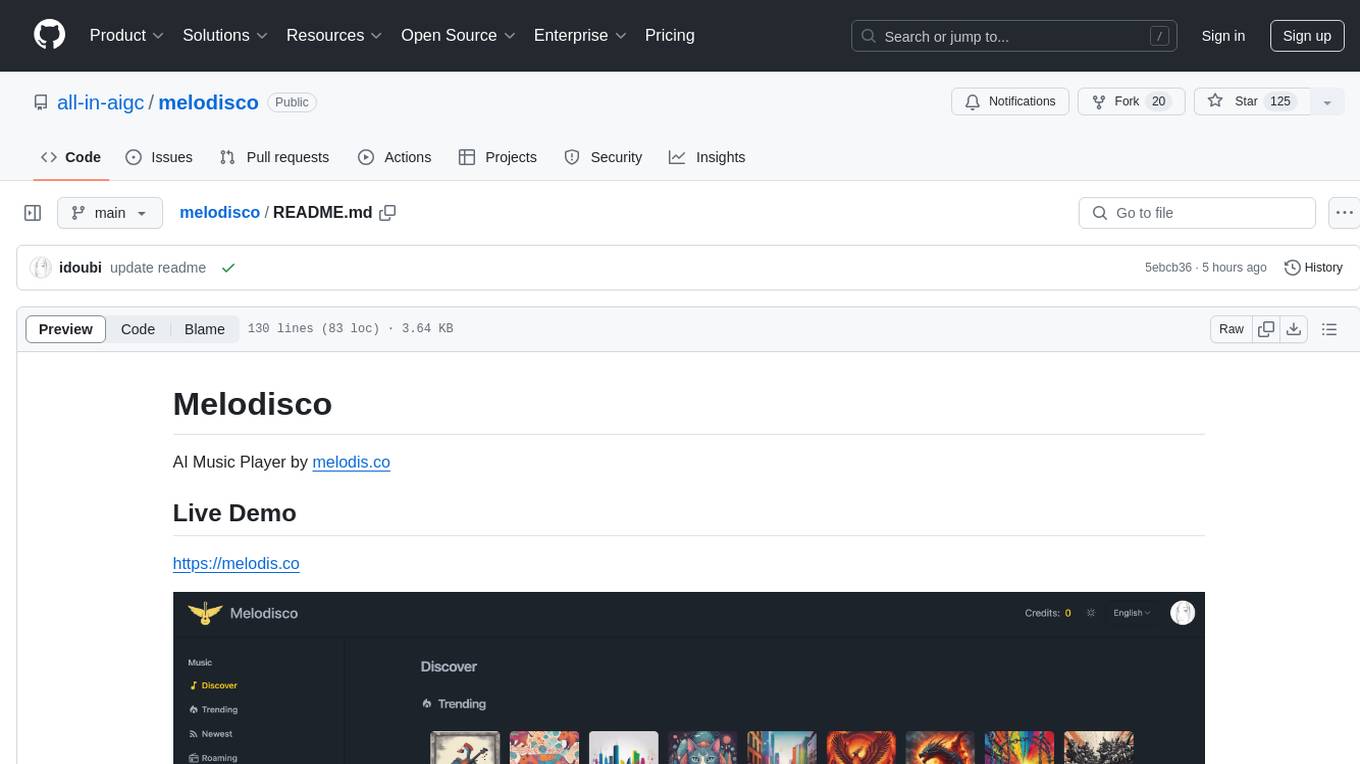

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

pgx

Pgx is a collection of GPU/TPU-accelerated parallel game simulators for reinforcement learning (RL). It provides JAX-native game simulators for various games like Backgammon, Chess, Shogi, and Go, offering super fast parallel execution on accelerators and beautiful visualization in SVG format. Pgx focuses on faster implementations while also being sufficiently general, allowing environments to be converted to the AEC API of PettingZoo for running Pgx environments through the PettingZoo API.

sophia

Sophia is an open-source TypeScript platform designed for autonomous AI agents and LLM based workflows. It aims to automate processes, review code, assist with refactorings, and support various integrations. The platform offers features like advanced autonomous agents, reasoning/planning inspired by Google's Self-Discover paper, memory and function call history, adaptive iterative planning, and more. Sophia supports multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It provides a flexible platform for the TypeScript community to expand and support various use cases and integrations.

skyeye

SkyEye is an AI-powered Ground Controlled Intercept (GCI) bot designed for the flight simulator Digital Combat Simulator (DCS). It serves as an advanced replacement for the in-game E-2, E-3, and A-50 AI aircraft, offering modern voice recognition, natural-sounding voices, real-world brevity and procedures, a wide range of commands, and intelligent battlespace monitoring. The tool uses Speech-To-Text and Text-To-Speech technology, can run locally or on a cloud server, and is production-ready software used by various DCS communities.