skyrim

🌎 🤝 AI weather models united

Stars: 150

Skyrim is a weather forecasting tool that enables users to run large weather models using consumer-grade GPUs. It provides access to state-of-the-art foundational weather models through a well-maintained infrastructure. Users can forecast weather conditions, such as wind speed and direction, by running simulations on their own GPUs or using modal volume or cloud services like s3 buckets. Skyrim supports various large weather models like Graphcast, Pangu, Fourcastnet, and DLWP, with plans for future enhancements like ensemble prediction and model quantization.

README:

🔥 Run state-of-the-art large weather models in less than 2 minutes.

🌪️ Ensemble and fine-tune (soon) to push the limits on forecasting.

🌎 Simulate extreme weather events!

Skyrim allows you to run any large weather model with a consumer-grade GPU.

Until very recently, weather forecasts were run on HPC clusters with 100K+ CPUs, solving massive numerical weather prediction (NWP) models. Within the last 2 years, open-source foundation models trained on weather simulation datasets have surpassed the skill level of these numerical models.

Our goal is to make these models accessible by providing a well-maintained infrastructure.

Clone the repo, set up an environment (either conda or venv), and then run:

git clone https://github.com/your-repo/skyrim.git

cd skyrim

pip install .

Depending on your use case (e.g. AWS storage needs or CDS initial conditions), you may need to fill in a .env file, which you can create with cp .env.example .env.

Skyrim currently supports running on modal, in a container (for instance on vast.ai), or on bare metal (you will need an NVIDIA GPU with at least 24GB of memory, and installation can take a long time).

Modal is the fastest option. It runs forecasts "serverless", so you don't have to worry about the infrastructure.

You will need a modal key. Run modal setup and follow the prompts (<1 min).

Modal comes with $30 free credits and a single forecast costs about 2 cents as of May 2024.
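To put those numbers in perspective, here is a back-of-the-envelope sketch of how many forecasts the free credits would cover. It uses only the two figures quoted above; actual Modal pricing depends on the model, lead time and GPU type, so treat the result as a rough estimate. The function name is made up for this illustration.

```python
# Figures quoted above (as of May 2024); treat them as rough estimates.
FREE_CREDITS_USD = 30.00
COST_PER_FORECAST_USD = 0.02


def forecasts_covered(credits_usd: float, cost_per_forecast_usd: float) -> int:
    """Return how many whole forecasts the given credits would pay for."""
    # Work in integer cents to avoid floating-point rounding surprises.
    credits_cents = round(credits_usd * 100)
    cost_cents = round(cost_per_forecast_usd * 100)
    return credits_cents // cost_cents


print(forecasts_covered(FREE_CREDITS_USD, COST_PER_FORECAST_USD))  # 1500
```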

Once you are all good to go, then run:

modal run skyrim/modal/forecast.py

By default, this uses the pangu model to forecast the next 6 hours, starting from yesterday. It gets initial conditions from NOAA GFS and writes the forecast to a modal volume. You can choose different dates and weather models, as shown in the examples section.

After you have your forecast, you can explore it by running a notebook (without GPU, so cheap) in modal:

modal run skyrim/modal/forecast.py::run_analysis

This will output a jupyter notebook link that you can follow to access the forecast. For instance, to read the forecast, you can run the following from the notebook:

import xarray as xr

forecast = xr.open_dataset('/skyrim/outputs/[forecast_id]/[filename]', engine='scipy')

Once you are done, it is best to delete the volume, as a daily forecast is about 2GB:

modal volume rm forecasts /[forecast_id] -r

If you don't want to use a modal volume and want to aggregate results in a bucket instead (currently only s3), you just have to run:

modal run skyrim/modal/forecast.py --output-dir s3://skyrim-dev

where skyrim-dev is the bucket in which you want to aggregate the forecasts. By default, zarr format is used to store in AWS/GCP so you can read and move only the parts of the forecasts that you need.

See examples section for more.✌️
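For planning storage, here is a rough sketch that scales the "about 2GB per daily forecast" figure with lead time. It assumes the size grows linearly with the number of forecast hours, which is an assumption made for this illustration and not something the README states; real sizes depend on the model and output format. The function name is hypothetical.

```python
GB_PER_FORECAST_DAY = 2.0  # "about 2GB" per daily forecast, as quoted above


def estimated_size_gb(lead_time_hours: int) -> float:
    """Estimate forecast size in GB, assuming linear growth with lead time."""
    return GB_PER_FORECAST_DAY * lead_time_hours / 24


# A one-week forecast (168 hours) under the linear assumption:
print(f"{estimated_size_gb(168):.1f} GB")  # 14.0 GB
```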

If you are running on your own GPUs, installed either via bare metal or via containers such as vast.ai then you can directly get forecasts as such:

from skyrim.core import Skyrim

model = Skyrim("pangu")

final_pred, pred_paths = model.predict(

date="20240507", # format: YYYYMMDD, start date of the forecast

time="0000", # format: HHMM, start time of the forecast

lead_time=24 * 7, # in hours, next week

save=True,

)

To visualise the forecast:

from skyrim.libs.plotting import visualize_rollout

visualize_rollout(output_paths=pred_paths, channels=["u10m", "v10m"], output_dir=".")

or you can still use the command line:

forecast -m graphcast --lead_time 24 --initial_conditions cds --date 20240330

See examples section for more.✌️
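As a quick sanity check on the date, time and lead_time arguments, the sketch below works out which period a forecast covers. It uses only the standard library and the formats noted in the comments above (YYYYMMDD, HHMM, hours); forecast_window is a hypothetical helper and not part of Skyrim.

```python
from datetime import datetime, timedelta


def forecast_window(date: str, time: str, lead_time: int) -> tuple[datetime, datetime]:
    """Return (start, end) of a forecast given YYYYMMDD, HHMM and hours."""
    start = datetime.strptime(date + time, "%Y%m%d%H%M")
    end = start + timedelta(hours=lead_time)
    return start, end


start, end = forecast_window("20240507", "0000", 24 * 7)
print(start.isoformat(), "->", end.isoformat())
# 2024-05-07T00:00:00 -> 2024-05-14T00:00:00
```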

- Find a machine you like: an RTX 3090 or above with at least 24GB of memory. Make sure you have good bandwidth (500MB/s+).

- Select the instance template from here.

- Then clone the repo and

pip install . && pip install -r requirements.txt

- You will need an NVIDIA GPU with at least 24GB of memory. We are working on quantization as well, so that in the future it will be possible to run simulations with much less compute. Have an environment set up with Python == 3.10, PyTorch >= 2.2.2 and CUDA 12.x+. Or, if it is easier, start with the docker image:

nvcr.io/nvidia/pytorch:24.01-py3

- Install conda (miniconda for instance). Then run in that environment:

conda create -y -n skyenv python=3.10

conda activate skyenv

conda install eccodes python-eccodes -c conda-forge

pip install . && pip install -r requirements.txt

For each run, you first pull the initial conditions you are interested in (the most recent ones by default), then the model runs for the desired lead time. Initial conditions are pulled from GFS, ECMWF IFS (Operational) or CDS (ERA5 Reanalysis Dataset).

If you are using CDS initial conditions, you will need a CDS API key in your .env: run cp .env.example .env and paste the key in.

All examples can be run using forecast or modal run skyrim/modal/forecast.py. With modal run, you just have to change snake_case options to kebab-case, i.e. model_name becomes model-name.
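The option renaming can be sketched as a small helper that rewrites only the option names and leaves values untouched. This is an illustration of the naming rule only; to_modal_args is a hypothetical function and not part of Skyrim or Modal.

```python
def to_modal_args(args: list[str]) -> list[str]:
    """Convert snake_case long options (--model_name) to kebab-case (--model-name)."""
    converted = []
    for arg in args:
        if arg.startswith("--"):
            # Also handle the --name=value form: only the name part is rewritten.
            name, sep, value = arg.partition("=")
            converted.append(name.replace("_", "-") + sep + value)
        else:
            # Option values (model names, dates, bucket URLs) are kept as-is.
            converted.append(arg)
    return converted


forecast_args = ["--model_name", "graphcast", "--initial_conditions", "ifs", "--lead_time", "168"]
print(" ".join(to_modal_args(forecast_args)))
# --model-name graphcast --initial-conditions ifs --lead-time 168
```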

Forecast using the graphcast model, with ECMWF IFS initial conditions, starting from 2024-04-03T00:00:00 and with a lead time of one week (i.e. 168 hours):

forecast --model_name graphcast --initial_conditions ifs --date 20240403 --output_dir s3://skyrim-dev --lead_time 168

or in modal:

modal run skyrim/modal/forecast.py --model-name graphcast --initial-conditions ifs --date 20240403 --output-dir s3://skyrim-dev --lead-time 168

Say you're interested in the wind at 37.0344° N, 27.4305° E to see if we can kite tomorrow. To get wind speed, we need to pull the wind vectors at about surface level; these are the u10m and v10m components of wind. Here is how you go about it:

modal run skyrim/modal/forecast.py --output-dir s3://[your_bucket]/[optional_path] --lead-time 24

Then you can read the forecast as below:

import xarray as xr

import pandas as pd

zarr_store_path = "s3://[your_bucket]/[forecast_id]"

forecast = xr.open_dataset(zarr_store_path, engine='zarr') # reads the metadata

df = forecast.sel(lat=37.0344, lon=27.4305, channel=['u10m', 'v10m']).to_pandas()

Normally each day is about 2GB, but using the zarr store you will only fetch what you need.✌️
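Once you have the u10m and v10m values, wind speed is the magnitude of the (u, v) vector. A minimal sketch using only the standard library follows; the sample values are made up for illustration, the helper names are hypothetical, and the direction follows the usual meteorological convention (the direction the wind blows from, in degrees clockwise from north).

```python
import math

MS_TO_KNOTS = 1.94384  # m/s to knots


def wind_speed_knots(u10m: float, v10m: float) -> float:
    """Wind speed in knots from eastward (u) and northward (v) components in m/s."""
    return math.hypot(u10m, v10m) * MS_TO_KNOTS


def wind_direction_deg(u10m: float, v10m: float) -> float:
    """Direction the wind is coming from, in degrees clockwise from north."""
    return math.degrees(math.atan2(-u10m, -v10m)) % 360


# Made-up sample values: 3 m/s eastward, 4 m/s northward.
u, v = 3.0, 4.0
print(f"{wind_speed_knots(u, v):.2f} knots from {wind_direction_deg(u, v):.0f} degrees")
```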

Assuming you have a local gpu set up ready to roll:

from skyrim.core import Skyrim

model = Skyrim("pangu")

final_pred, pred_paths = model.predict(

date="20240501", # format: YYYYMMDD, start date of the forecast

time="0000", # format: HHMM, start time of the forecast

lead_time=12, # in hours

save=True,

)

akyaka_coords = {"lat": 37.0557, "lon": 28.3242}

wind_speed = final_pred.wind_speed(**akyaka_coords) * 1.94384 # m/s to knots

print(f"Wind speed at Akyaka: {wind_speed:.2f} knots")

Supported initial conditions are:

- NOAA GFS

- ECMWF IFS

- ERA5 Re-analysis Dataset

Currently supported models are:

- [x] Graphcast

- [x] Pangu

- [x] Fourcastnet (v1 & v2)

- [x] DLWP

- [x] (NWP) ECMWF IFS (HRES) -- notebook

- [x] (NWP) NOAA GFS -- notebook

- [x] (NWP) ECMWF ENS

- [ ] (NWP) ICON

- [ ] Aurora

- [x] FuXi

- [x] FengWu

- [ ] Nano MetNet

For detailed information regarding licensing, please refer to the license details provided on each model's main homepage, which we link to from each of the corresponding components within our repository.

- Pangu Weather: Original, ECMWF, NVIDIA

- FourcastNet: Original, ECMWF, NVIDIA

- Graphcast: Original, ECMWF, NVIDIA

- FuXi: Original

- FengWu: Original

- [x] ensemble prediction

- [x] interface to fetch real-time NWP-based predictions, e.g. via ECMWF API.

- [ ] global model performance comparison across various regions and parameters.

- [ ] fine-tuning API that trains a downstream model on top of features coming from a global/foundation model, optimized with respect to a specific criterion and region

- [ ] model quantization and its effect on model efficiency and accuracy.

This README will be updated regularly to reflect the progress and integration of new models or features into the library. It serves as a guide for internal development efforts and aids in prioritizing tasks and milestones.

All in here ✌️

Skyrim is built on top of NVIDIA's earth2mip, earth2studio, and ECMWF's ai-models. Definitely check them out!


Similar Open Source Tools

skyrim

Skyrim is a weather forecasting tool that enables users to run large weather models using consumer-grade GPUs. It provides access to state-of-the-art foundational weather models through a well-maintained infrastructure. Users can forecast weather conditions, such as wind speed and direction, by running simulations on their own GPUs or using modal volume or cloud services like s3 buckets. Skyrim supports various large weather models like Graphcast, Pangu, Fourcastnet, and DLWP, with plans for future enhancements like ensemble prediction and model quantization.

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

LLM-Pruner

LLM-Pruner is a tool for structural pruning of large language models, allowing task-agnostic compression while retaining multi-task solving ability. It supports automatic structural pruning of various LLMs with minimal human effort. The tool is efficient, requiring only 3 minutes for pruning and 3 hours for post-training. Supported LLMs include Llama-3.1, Llama-3, Llama-2, LLaMA, BLOOM, Vicuna, and Baichuan. Updates include support for new LLMs like GQA and BLOOM, as well as fine-tuning results achieving high accuracy. The tool provides step-by-step instructions for pruning, post-training, and evaluation, along with a Gradio interface for text generation. Limitations include issues with generating repetitive or nonsensical tokens in compressed models and manual operations for certain models.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

MemoryLLM

MemoryLLM is a large language model designed for self-updating capabilities. It offers pretrained models with different memory capacities and features, such as chat models. The repository provides training code, evaluation scripts, and datasets for custom experiments. MemoryLLM aims to enhance knowledge retention and performance on various natural language processing tasks.

llmware

LLMWare is a framework for quickly developing LLM-based applications including Retrieval Augmented Generation (RAG) and Multi-Step Orchestration of Agent Workflows. This project provides a comprehensive set of tools that anyone can use - from a beginner to the most sophisticated AI developer - to rapidly build industrial-grade, knowledge-based enterprise LLM applications. Our specific focus is on making it easy to integrate open source small specialized models and connecting enterprise knowledge safely and securely.

WildBench

WildBench is a tool designed for benchmarking Large Language Models (LLMs) with challenging tasks sourced from real users in the wild. It provides a platform for evaluating the performance of various models on a range of tasks. Users can easily add new models to the benchmark by following the provided guidelines. The tool supports models from Hugging Face and other APIs, allowing for comprehensive evaluation and comparison. WildBench facilitates running inference and evaluation scripts, enabling users to contribute to the benchmark and collaborate on improving model performance.

TileRT

TileRT is a project designed to serve large language models (LLMs) in ultra-low-latency scenarios. It aims to push the latency limits of LLMs without compromising model size or quality, enabling models with hundreds of billions of parameters to achieve millisecond-level time per output token. TileRT prioritizes responsiveness for applications like high-frequency trading, interactive AI, real-time decision-making, long-running agents, and AI-assisted coding. It introduces a tile-level runtime engine that dynamically reschedules computation, I/O, and communication across multiple devices to minimize idle time and improve hardware utilization. The project is actively evolving, with compiler techniques gradually shared with the community through TileLang and TileScale.

supallm

Supallm is a Python library for super resolution of images using deep learning techniques. It provides pre-trained models for enhancing image quality by increasing resolution. The library is easy to use and allows users to upscale images with high fidelity and detail. Supallm is suitable for tasks such as enhancing image quality, improving visual appearance, and increasing the resolution of low-quality images. It is a valuable tool for researchers, photographers, graphic designers, and anyone looking to enhance image quality using AI technology.

baml

BAML is a config file format for declaring LLM functions that you can then use in TypeScript or Python. With BAML you can Classify or Extract any structured data using Anthropic, OpenAI or local models (using Ollama) ## Resources  [Discord Community](https://discord.gg/boundaryml)  [Follow us on Twitter](https://twitter.com/boundaryml) * Discord Office Hours - Come ask us anything! We hold office hours most days (9am - 12pm PST). * Documentation - Learn BAML * Documentation - BAML Syntax Reference * Documentation - Prompt engineering tips * Boundary Studio - Observability and more #### Starter projects * BAML + NextJS 14 * BAML + FastAPI + Streaming ## Motivation Calling LLMs in your code is frustrating: * your code uses types everywhere: classes, enums, and arrays * but LLMs speak English, not types BAML makes calling LLMs easy by taking a type-first approach that lives fully in your codebase: 1. Define what your LLM output type is in a .baml file, with rich syntax to describe any field (even enum values) 2. Declare your prompt in the .baml config using those types 3. Add additional LLM config like retries or redundancy 4. Transpile the .baml files to a callable Python or TS function with a type-safe interface. (VSCode extension does this for you automatically). We were inspired by similar patterns for type safety: protobuf and OpenAPI for RPCs, Prisma and SQLAlchemy for databases. BAML guarantees type safety for LLMs and comes with tools to give you a great developer experience:  Jump to BAML code or how Flexible Parsing works without additional LLM calls. 
| BAML Tooling | Capabilities | | ----------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | | BAML Compiler install | Transpiles BAML code to a native Python / Typescript library (you only need it for development, never for releases) Works on Mac, Windows, Linux  | | VSCode Extension install | Syntax highlighting for BAML files Real-time prompt preview Testing UI | | Boundary Studio open (not open source) | Type-safe observability Labeling |

PowerInfer

PowerInfer is a high-speed Large Language Model (LLM) inference engine designed for local deployment on consumer-grade hardware, leveraging activation locality to optimize efficiency. It features a locality-centric design, hybrid CPU/GPU utilization, easy integration with popular ReLU-sparse models, and support for various platforms. PowerInfer achieves high speed with lower resource demands and is flexible for easy deployment and compatibility with existing models like Falcon-40B, Llama2 family, ProSparse Llama2 family, and Bamboo-7B.

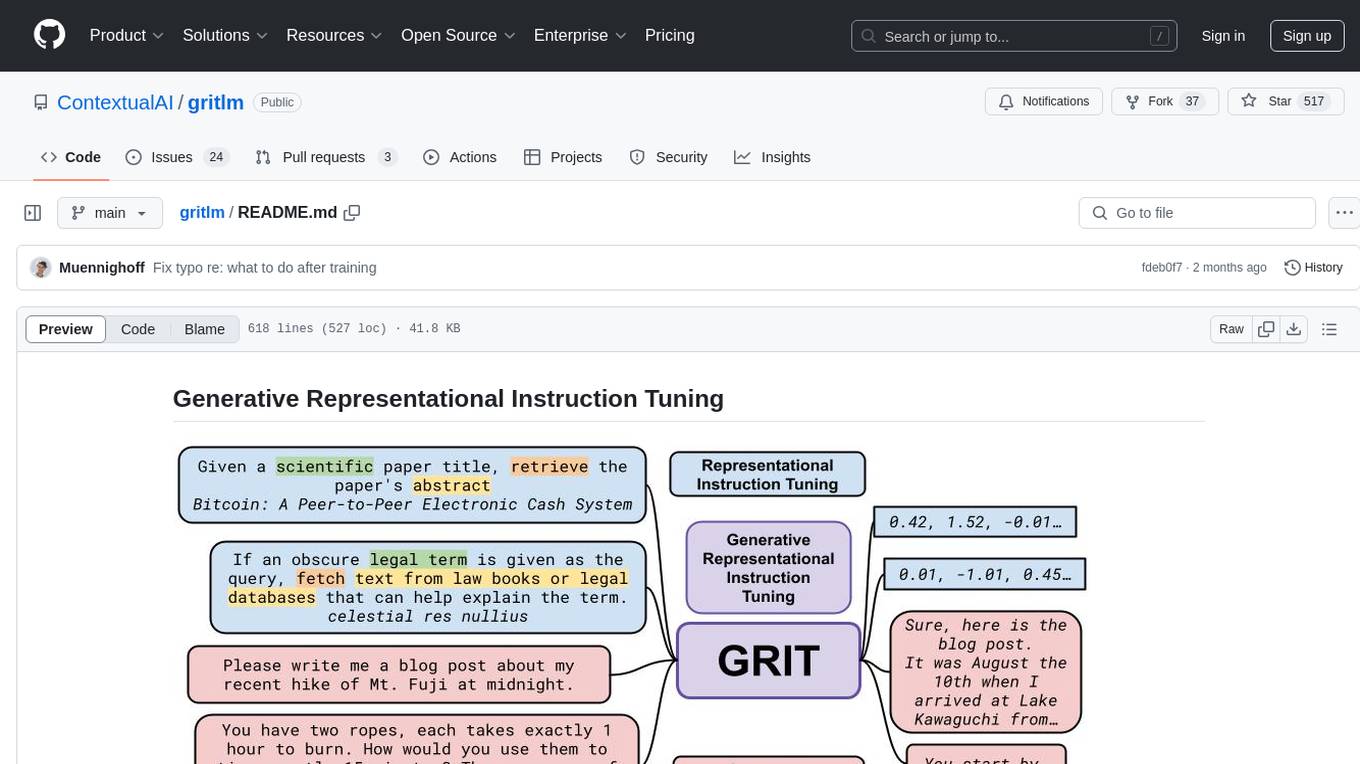

gritlm

The 'gritlm' repository provides all materials for the paper Generative Representational Instruction Tuning. It includes code for inference, training, evaluation, and known issues related to the GritLM model. The repository also offers models for embedding and generation tasks, along with instructions on how to train and evaluate the models. Additionally, it contains visualizations, acknowledgements, and a citation for referencing the work.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

generative-models

Generative Models by Stability AI is a repository that provides various generative models for research purposes. It includes models like Stable Video 4D (SV4D) for video synthesis, Stable Video 3D (SV3D) for multi-view synthesis, SDXL-Turbo for text-to-image generation, and more. The repository focuses on modularity and implements a config-driven approach for building and combining submodules. It supports training with PyTorch Lightning and offers inference demos for different models. Users can access pre-trained models like SDXL-base-1.0 and SDXL-refiner-1.0 under a CreativeML Open RAIL++-M license. The codebase also includes tools for invisible watermark detection in generated images.

habitat-sim

Habitat-Sim is a high-performance physics-enabled 3D simulator with support for 3D scans of indoor/outdoor spaces, CAD models of spaces and piecewise-rigid objects, configurable sensors, robots described via URDF, and rigid-body mechanics. It prioritizes simulation speed over the breadth of simulation capabilities, achieving several thousand frames per second (FPS) running single-threaded and over 10,000 FPS multi-process on a single GPU when rendering a scene from the Matterport3D dataset. Habitat-Sim simulates a Fetch robot interacting in ReplicaCAD scenes at over 8,000 steps per second (SPS), where each ‘step’ involves rendering 1 RGBD observation (128×128 pixels) and rigid-body dynamics for 1/30sec.

FlexFlow

FlexFlow Serve is an open-source compiler and distributed system for **low latency**, **high performance** LLM serving. FlexFlow Serve outperforms existing systems by 1.3-2.0x for single-node, multi-GPU inference and by 1.4-2.4x for multi-node, multi-GPU inference.

For similar tasks

skyrim

Skyrim is a weather forecasting tool that enables users to run large weather models using consumer-grade GPUs. It provides access to state-of-the-art foundational weather models through a well-maintained infrastructure. Users can forecast weather conditions, such as wind speed and direction, by running simulations on their own GPUs or using modal volume or cloud services like s3 buckets. Skyrim supports various large weather models like Graphcast, Pangu, Fourcastnet, and DLWP, with plans for future enhancements like ensemble prediction and model quantization.

chinese-llm-benchmark

The Chinese LLM Benchmark is a continuous evaluation list of large models in CLiB, covering a wide range of commercial and open-source models from various companies and research institutions. It supports multidimensional evaluation of capabilities including classification, information extraction, reading comprehension, data analysis, Chinese encoding efficiency, and Chinese instruction compliance. The benchmark not only provides capability score rankings but also offers the original output results of all models for interested individuals to score and rank themselves.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

LMeterX

LMeterX is a professional large language model performance testing platform that supports model inference services based on large model inference frameworks and cloud services. It provides an intuitive Web interface for creating and managing test tasks, monitoring testing processes, and obtaining detailed performance analysis reports to support model deployment and optimization.

Time-LLM

Time-LLM is a reprogramming framework that repurposes large language models (LLMs) for time series forecasting. It allows users to treat time series analysis as a 'language task' and effectively leverage pre-trained LLMs for forecasting. The framework involves reprogramming time series data into text representations and providing declarative prompts to guide the LLM reasoning process. Time-LLM supports various backbone models such as Llama-7B, GPT-2, and BERT, offering flexibility in model selection. The tool provides a general framework for repurposing language models for time series forecasting tasks.

Awesome-LWMs

Awesome Large Weather Models (LWMs) is a curated collection of articles and resources related to large weather models used in AI for Earth and AI for Science. It includes information on various cutting-edge weather forecasting models, benchmark datasets, and research papers. The repository serves as a hub for researchers and enthusiasts to explore the latest advancements in weather modeling and forecasting.

awesome-weather-models

A catalogue and categorization of AI-based weather forecasting models. This page provides a catalogue and categorization of AI-based weather forecasting models to enable discovery and comparison of different available model options. The weather models are categorized based on metadata found in the JSON schema specification. The table includes information such as the name of the weather model, the organization that developed it, operational data availability, open-source status, and links for further details.

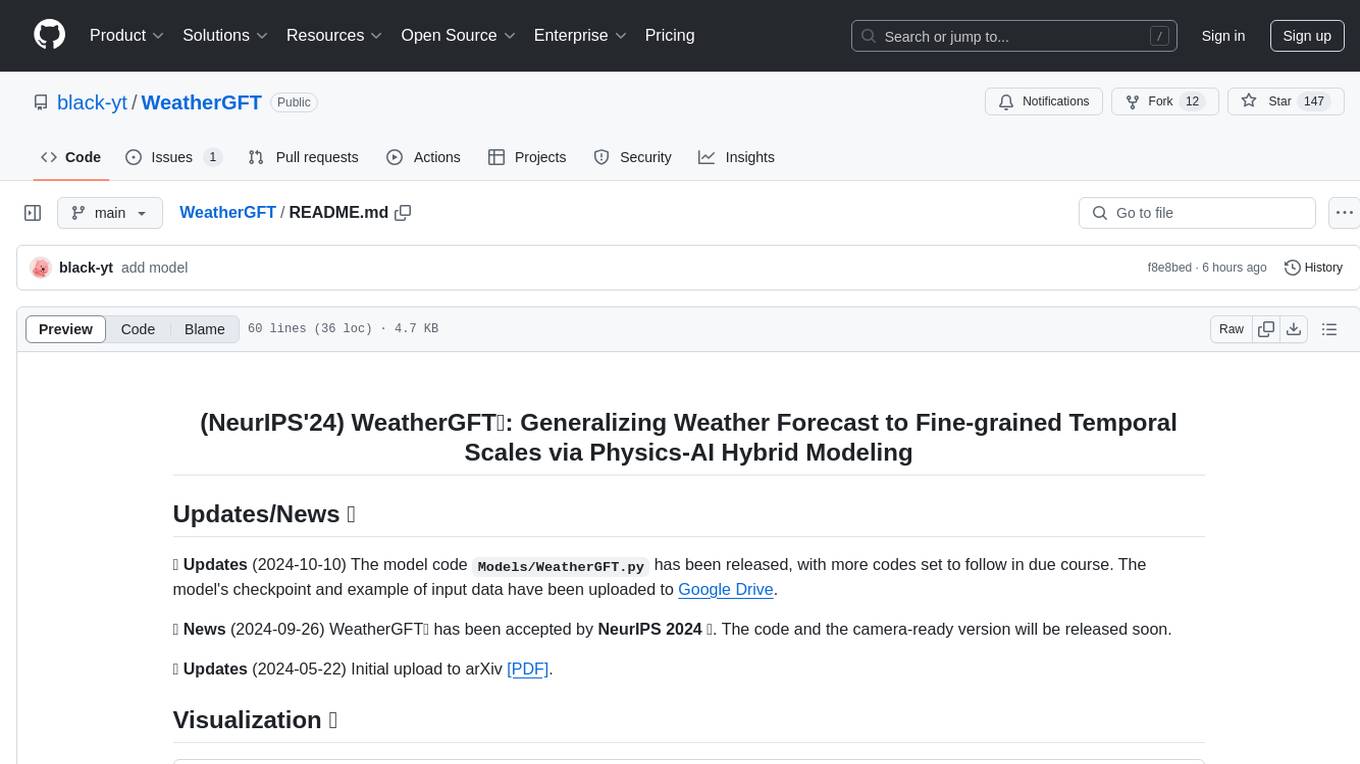

WeatherGFT

WeatherGFT is a physics-AI hybrid model designed to generalize weather forecasts to finer-grained temporal scales beyond the training dataset. It incorporates physical partial differential equations (PDEs) into neural networks to simulate fine-grained physical evolution and correct biases. The model achieves state-of-the-art performance in forecasting tasks at different time scales, from nowcasting to medium-range forecasts, by utilizing a lead time-aware training framework and a carefully designed PDE kernel. WeatherGFT bridges the gap between nowcast and medium-range forecast by extending forecasting abilities to predict accurately at a 30-minute time scale.
