NoLabs

Open source biolab

Stars: 75

NoLabs is an open-source biolab that provides easy access to state-of-the-art models for bio research. It supports various tasks, including drug discovery, protein analysis, and small molecule design. NoLabs aims to accelerate bio research by making inference models accessible to everyone.

README:

NoLabs is an open source biolab that lets you run experiments with the latest state-of-the-art models for bio research.

The goal of the project is to accelerate bio research by making inference models easy to use for everyone. We are currently supporting protein biolab (predicting useful protein properties such as solubility, localisation, gene ontology, folding, etc.), drug discovery biolab (construct ligands and test binding to target proteins) and small molecules design biolab (design small molecules given a protein target and check drug-likeness and binding affinity).

We are working on expanding these labs and adding a cell biolab and a genetic biolab, and we would appreciate your support and contributions.

Let's accelerate bio research!

BioBuddy - drug discovery copilot:

BioBuddy is a drug discovery copilot that supports:

- Downloading data from ChemBL

- Downloading data from RcsbPDB

- Questions about the drug discovery process, targets, chemical components, etc.

- Writing review reports based on published papers

For example, you can ask

- "Can you pull me some latest approved drugs?"

- "Can you download me 1000 rhodopsins?"

- "What does an aspirin molecule look like?"

BioBuddy will carry out these requests and answer other questions.

To enable BioBuddy, run this command when starting NoLabs:

$ ENABLE_BIOBUDDY=true docker compose up nolabs

Also start the BioBuddy microservice:

$ OPENAI_API_KEY=your_openai_api_key TAVILY_API_KEY=your_tavily_api_key docker compose up biobuddy

NoLabs runs on GPT-4 for the best performance. You can change the model in microservices/biobuddy/biobuddy/services.py

You can ignore OPENAI_API_KEY warnings when running other services using docker compose.

Drug discovery lab:

- Drug-target interaction prediction, high throughput virtual screening (HTVS) based on:

- Automatic pocket prediction via P2Rank

- Automatic MSA generation via HH-suite3

Protein lab:

- Prediction of subcellular localisation via fine-tuned ritakurban/ESM_protein_localization model (to be updated with a better model)

- Prediction of folded structure via facebook/esmfold_v1

- Gene ontology prediction for 200 most popular gene ontologies

- Protein solubility prediction

Protein design Lab:

- Protein generation via RFdiffusion

Conformations Lab:

Small molecules design lab:

- Small molecules design using a protein target with drug-likeness scoring component REINVENT4

Specify the search space (location) where the designed molecule should bind relative to the protein target. Then run reinforcement learning to generate new molecules in the specified binding region.

WARNING: The reinforcement learning process might take a long time (with 128 molecules per epoch and 50 epochs, it could take a day).
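To get a feel for the scale in the warning above, the arithmetic can be sketched in a few lines of Python. The numbers (128 molecules per epoch, 50 epochs, roughly a day) come from the warning; reading "a day" as exactly 24 hours is an assumption, the function name is illustrative, and the per-molecule figure is only an implied average, not a measured benchmark.

```python
def rl_budget(molecules_per_epoch: int, epochs: int, total_hours: float) -> tuple[int, float]:
    """Return (total molecules generated, implied average seconds per molecule)."""
    total_molecules = molecules_per_epoch * epochs
    seconds_per_molecule = total_hours * 3600 / total_molecules
    return total_molecules, seconds_per_molecule


# Figures from the warning above; 24 hours is an assumed reading of "a day".
total, per_molecule = rl_budget(molecules_per_epoch=128, epochs=50, total_hours=24)
print(f"{total} molecules, about {per_molecule:.1f} s per molecule on average")
```

Halving either the epoch count or the molecules per epoch halves the total, which is a quick way to size a trial run before committing to a full one.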

# Clone this project

$ git clone https://github.com/BasedLabs/nolabs

$ cd nolabs

Generate a new token for the Docker registry at https://github.com/settings/tokens/new and select 'read:packages'.

$ docker login ghcr.io -u username -p ghp_xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

If you want to run a single feature (recommended):

$ docker compose up nolabs

$ docker compose up diffdock

$ docker compose up p2rank

...OR if you want to run everything on one machine:

$ docker compose up

Server will be available on http://localhost:9000

nolabs:

- Create a Python Environment with Python 3.11

  - First, ensure you have Python 3.11 installed. If not, you can download it from python.org or use a version manager like pyenv.

  - Create a new virtual environment:

    python3.11 -m venv nolabs-env

- Activate the Virtual Environment and Install Poetry

  - Activate the virtual environment:

    source nolabs-env/bin/activate

  - Install Poetry, a tool for dependency management and packaging in Python. You can install it with pip:

    pip install poetry uvicorn

- Install Dependencies Using Poetry

    poetry install

- Start a Uvicorn Server

  - Set your environment variable and start the Uvicorn server with the following command:

    NOLABS_ENVIRONMENT=dev poetry run uvicorn nolabs.api:app --host=127.0.0.1 --port=8000

  - This command runs the nolabs API server on localhost at port 8000.

- Set Up the Frontend

  - In a separate terminal, ensure you have npm installed. If not, you can install Node.js and npm from nodejs.org.

  - Run npm install to install the necessary Node.js packages:

    npm install

  - After installing the packages, start the frontend development server:

    npm run dev

Server will be available on http://localhost:9000

We provide individual Docker containers backed by FastAPI for each feature, which are available in the /microservices folder. You can use them individually as APIs.

For example, to run the esmfold service, you can use Docker Compose:

$ docker compose up esmfold

Once the service is up, you can make a POST request to perform a task, such as predicting a protein's folded structure. Here's a simple Python example:

    import requests

    # Define the API endpoint
    url = 'http://127.0.0.1:5736/run-folding'

    # Specify the protein sequence in the request body
    data = {
        'protein_sequence': 'YOUR_PROTEIN_SEQUENCE_HERE'
    }

    # Make the POST request and get the response
    response = requests.post(url, json=data)

    # Extract the PDB content from the response
    pdb_content = response.json().get('pdb_content', '')

    print(pdb_content)

This Python script makes a POST request to the esmfold microservice with a protein sequence and prints the predicted PDB content.
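Before sending a request like the one above, it can help to normalise and check the sequence on the client side. The following is a minimal sketch, not part of the esmfold service: the helper name `build_folding_payload` is hypothetical, and restricting input to the 20 standard one-letter amino-acid codes is an assumption (the service itself may accept other symbols).

```python
# Assumption: only the 20 standard amino-acid one-letter codes are allowed;
# the esmfold service itself may be more permissive.
STANDARD_AMINO_ACIDS = set("ACDEFGHIKLMNPQRSTVWY")


def build_folding_payload(sequence: str) -> dict:
    """Strip whitespace, upper-case the sequence, and wrap it in the request body."""
    cleaned = "".join(sequence.split()).upper()
    if not cleaned:
        raise ValueError("protein sequence is empty")
    unknown = sorted(set(cleaned) - STANDARD_AMINO_ACIDS)
    if unknown:
        raise ValueError(f"unexpected residue codes: {', '.join(unknown)}")
    return {"protein_sequence": cleaned}


# A short made-up sequence with stray whitespace and lower-case letters.
payload = build_folding_payload("mktay iakqr\nqisfv")
print(payload)
```

The resulting dictionary can be passed as `json=payload` to `requests.post` in place of the hand-written `data` dictionary.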

Since we provide individual Docker containers backed by FastAPI for each feature, available in the /microservices folder, you can run them on separate machines. This setup is particularly useful if you're developing on a computer without GPU support but have access to a VM with a GPU for tasks like folding, docking, etc.

For instance, to run the diffdock service, use Docker Compose on the VM or computer equipped with a GPU.

On your server/VM/computer with a GPU, run:

$ docker compose up diffdock

Once the service is up, you can check that you can access it from your computer by navigating to http://<gpu_machine_ip>:5737/docs

If everything is correct, you should see the FastAPI page with diffdock's API surface.
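The browser check can also be scripted. This is a minimal sketch using only the Python standard library; the helper names `docs_url` and `is_reachable` are hypothetical, the host below is a documentation placeholder to replace with your GPU machine's IP, and only the port 5737 comes from the text.

```python
import urllib.request


def docs_url(host: str, port: int) -> str:
    """Build the URL of a microservice's FastAPI docs page."""
    return f"http://{host}:{port}/docs"


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with HTTP 200 within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers refused connections, timeouts, and HTTP error responses
        # (urllib's URLError and HTTPError are OSError subclasses).
        return False


# Placeholder host; 5737 is the diffdock port mentioned above.
url = docs_url("192.0.2.10", 5737)
print(url)
```

Calling `is_reachable(url)` from your development machine should return `True` once the diffdock container is up and the port is open between the two machines.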

Next, update the nolabs/infrastructure/settings.ini file on your primary machine to include the IP address of the service (replace 127.0.0.1 with your GPU machine's IP):

...

p2rank = http://127.0.0.1:5731

esmfold = http://127.0.0.1:5736

esmfold_light = http://127.0.0.1:5733

msa_light = http://127.0.0.1:5734

umol = http://127.0.0.1:5735

diffdock = http://127.0.0.1:5737 -> http://74.82.28.227:5737

...

And now you are ready to use this service hosted on a separate machine!
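If you prefer to script the settings change rather than edit the file by hand, the standard `configparser` module can do it. The sketch below works on an in-memory sample rather than the real file. The section name `[microservices]` is an assumption, since the excerpt above elides the surrounding file, so check the actual section name in nolabs/infrastructure/settings.ini. The function name is hypothetical.

```python
import configparser
import io

# Assumption: a section named [microservices]; the real settings.ini may differ.
SAMPLE_SETTINGS = """\
[microservices]
p2rank = http://127.0.0.1:5731
esmfold = http://127.0.0.1:5736
diffdock = http://127.0.0.1:5737
"""


def point_service_at(settings_text: str, section: str, service: str, host: str) -> str:
    """Return settings text with the service URL's host replaced, keeping its port."""
    parser = configparser.ConfigParser()
    parser.read_string(settings_text)
    old_url = parser[section][service]
    port = old_url.rsplit(":", 1)[1]  # text after the last colon is the port
    parser[section][service] = f"http://{host}:{port}"
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


# Same example IP as in the settings excerpt above.
updated = point_service_at(SAMPLE_SETTINGS, "microservices", "diffdock", "74.82.28.227")
print(updated)
```

Note that `configparser` does not preserve comments when writing, so for a commented settings file a manual edit may be the safer choice.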

Model: RFdiffusion

RFdiffusion is an open source method for structure generation, with or without conditional information (a motif, target etc).

docker compose up protein_design

Swagger UI will be available on http://localhost:5789/docs

or install as a python package

Model: ESMFold - Evolutionary Scale Modeling

docker compose up esmfold

Swagger UI will be available on http://localhost:5736/docs

or install as a python package

Model: ESMAtlas

docker compose up esmfold_light

Swagger UI will be available on http://localhost:5733/docs

or install as a python package

Model: Hugging Face

docker compose up gene_ontology

Swagger UI will be available on http://localhost:5788/docs

or install as a python package

Model: Hugging Face

docker compose up localisation

Swagger UI will be available on http://localhost:5787/docs

or install as a python package

Model: p2rank

docker compose up p2rank

Swagger UI will be available on http://localhost:5731/docs

or install as a python package

Model: Hugging Face

docker compose up solubility

Swagger UI will be available on http://localhost:5786/docs

Model: UMol

docker compose up umol

Swagger UI will be available on http://localhost:5735/docs

Model: RoseTTAFold

docker compose up rosettafold

Swagger UI will be available on http://localhost:5738/docs

WARNING: To use Rosettafold you must change the volumes '.' to point to the specified folders.

Model: REINVENT4

Misc: DockStream, QED, AutoDock Vina

docker compose up reinvent

Swagger UI will be available on http://localhost:5790/docs

WARNING: Do not change the number of guvicorn workers (1), this will lead to microservice issues.
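The service names and ports listed in the microservice sections above can be collected in one place. This is a small illustrative sketch, not something shipped with NoLabs: the dictionary and the `swagger_url` helper are hypothetical names, while the ports are copied from the Swagger UI addresses above.

```python
# Ports as listed in the microservice sections above.
SWAGGER_PORTS = {
    "protein_design": 5789,
    "esmfold": 5736,
    "esmfold_light": 5733,
    "gene_ontology": 5788,
    "localisation": 5787,
    "p2rank": 5731,
    "solubility": 5786,
    "umol": 5735,
    "rosettafold": 5738,
    "reinvent": 5790,
}


def swagger_url(service: str, host: str = "localhost") -> str:
    """Return the Swagger UI URL for a docker compose service name."""
    return f"http://{host}:{SWAGGER_PORTS[service]}/docs"


# Print one line per service, sorted by name.
for name in sorted(SWAGGER_PORTS):
    print(f"{name:15s} {swagger_url(name)}")
```

Passing `host=` lets the same lookup work for services running on a separate GPU machine.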

The following tools were used in this project:

[Recommended for laptops] If you are using a laptop, use the --test argument (no need to have a lot of compute):

- RAM > 16GB

- [Optional] GPU memory >= 16GB (REALLY speeds up the inference)

[Recommended for powerful workstations] Otherwise, if you want to host everything on your machine and have faster inference (also a requirement for folding sequences > 400 amino acids in length):

- RAM > 30GB

- [Optional] GPU memory >= 40GB (REALLY speeds up the inference)
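The two profiles above can be read as a simple decision rule. The sketch below is only an illustration under assumptions: it treats the RAM figures as hard cutoffs, ignores the optional GPU, and uses a hypothetical function name. The thresholds (16 GB, 30 GB, 400 amino acids) are taken from the lists above.

```python
def suggested_setup(ram_gb: float, longest_sequence: int) -> str:
    """Map available RAM and the longest sequence to fold onto the two setups above."""
    if ram_gb > 30:
        return "workstation"
    if longest_sequence > 400:
        return "insufficient: sequences over 400 amino acids need the workstation setup"
    if ram_gb > 16:
        return "laptop (--test)"
    return "insufficient: more than 16 GB of RAM is recommended"


print(suggested_setup(ram_gb=32, longest_sequence=650))
print(suggested_setup(ram_gb=24, longest_sequence=250))
```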

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for NoLabs

Similar Open Source Tools

NoLabs

NoLabs is an open-source biolab that provides easy access to state-of-the-art models for bio research. It supports various tasks, including drug discovery, protein analysis, and small molecule design. NoLabs aims to accelerate bio research by making inference models accessible to everyone.

agent-service-toolkit

The AI Agent Service Toolkit is a comprehensive toolkit designed for running an AI agent service using LangGraph, FastAPI, and Streamlit. It includes a LangGraph agent, a FastAPI service, a client for interacting with the service, and a Streamlit app for providing a chat interface. The project offers a template for building and running agents with the LangGraph framework, showcasing a complete setup from agent definition to user interface. Key features include LangGraph Agent with latest features, FastAPI Service, Advanced Streaming support, Streamlit Interface, Multiple Agent Support, Asynchronous Design, Content Moderation, RAG Agent implementation, Feedback Mechanism, Docker Support, and Testing. The repository structure includes directories for defining agents, protocol schema, core modules, service, client, Streamlit app, and tests.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

sail

Sail is a tool designed to unify stream processing, batch processing, and compute-intensive workloads, serving as a drop-in replacement for Spark SQL and the Spark DataFrame API in single-process settings. It aims to streamline data processing tasks and facilitate AI workloads.

gitingest

GitIngest is a tool that allows users to turn any Git repository into a prompt-friendly text ingest for LLMs. It provides easy code context by generating a text digest from a git repository URL or directory. The tool offers smart formatting for optimized output format for LLM prompts and provides statistics about file and directory structure, size of the extract, and token count. GitIngest can be used as a CLI tool on Linux and as a Python package for code integration. The tool is built using Tailwind CSS for frontend, FastAPI for backend framework, tiktoken for token estimation, and apianalytics.dev for simple analytics. Users can self-host GitIngest by building the Docker image and running the container. Contributions to the project are welcome, and the tool aims to be beginner-friendly for first-time contributors with a simple Python and HTML codebase.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

Fabric

Fabric is an open-source framework designed to augment humans using AI by organizing prompts by real-world tasks. It addresses the integration problem of AI by creating and organizing prompts for various tasks. Users can create, collect, and organize AI solutions in a single place for use in their favorite tools. Fabric also serves as a command-line interface for those focused on the terminal. It offers a wide range of features and capabilities, including support for multiple AI providers, internationalization, speech-to-text, AI reasoning, model management, web search, text-to-speech, desktop notifications, and more. The project aims to help humans flourish by leveraging AI technology to solve human problems and enhance creativity.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

browser

Lightpanda Browser is an open-source headless browser designed for fast web automation, AI agents, LLM training, scraping, and testing. It features ultra-low memory footprint, exceptionally fast execution, and compatibility with Playwright and Puppeteer through CDP. Built for performance, Lightpanda offers Javascript execution, support for Web APIs, and is optimized for minimal memory usage. It is a modern solution for web scraping and automation tasks, providing a lightweight alternative to traditional browsers like Chrome.

GraphRAG-Local-UI

GraphRAG Local with Interactive UI is an adaptation of Microsoft's GraphRAG, tailored to support local models and featuring a comprehensive interactive user interface. It allows users to leverage local models for LLM and embeddings, visualize knowledge graphs in 2D or 3D, manage files, settings, and queries, and explore indexing outputs. The tool aims to be cost-effective by eliminating dependency on costly cloud-based models and offers flexible querying options for global, local, and direct chat queries.

nodejs-todo-api-boilerplate

An LLM-powered code generation tool that relies on the built-in Node.js API Typescript Template Project to easily generate clean, well-structured CRUD module code from text description. It orchestrates 3 LLM micro-agents (`Developer`, `Troubleshooter` and `TestsFixer`) to generate code, fix compilation errors, and ensure passing E2E tests. The process includes module code generation, DB migration creation, seeding data, and running tests to validate output. By cycling through these steps, it guarantees consistent and production-ready CRUD code aligned with vertical slicing architecture.

opencode

Opencode is an AI coding agent designed for the terminal. It is a tool that allows users to interact with AI models for coding tasks in a terminal-based environment. Opencode is open source, provider-agnostic, and focuses on a terminal user interface (TUI) for coding. It offers features such as client/server architecture, support for various AI models, and a strong emphasis on community contributions and feedback.

air-light

Air-light is a minimalist WordPress starter theme designed to be an ultra minimal starting point for a WordPress project. It is built to be very straightforward, backwards compatible, front-end developer friendly and modular by its structure. Air-light is free of weird "app-like" folder structures or odd syntaxes that nobody else uses. It loves WordPress as it was and as it is.

steel-browser

Steel is an open-source browser API designed for AI agents and applications, simplifying the process of building live web agents and browser automation tools. It serves as a core building block for a production-ready, containerized browser sandbox with features like stealth capabilities, text-to-markdown session management, UI for session viewing/debugging, and full browser control through popular automation frameworks. Steel allows users to control, run, and manage a production-ready browser environment via a REST API, offering features such as full browser control, session management, proxy support, extension support, debugging tools, anti-detection mechanisms, resource management, and various browser tools. It aims to streamline complex browsing tasks programmatically, enabling users to focus on their AI applications while Steel handles the underlying complexity.

orama-core

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced AI capabilities in projects.

For similar tasks

NoLabs

NoLabs is an open-source biolab that provides easy access to state-of-the-art models for bio research. It supports various tasks, including drug discovery, protein analysis, and small molecule design. NoLabs aims to accelerate bio research by making inference models accessible to everyone.

For similar jobs

NoLabs

NoLabs is an open-source biolab that provides easy access to state-of-the-art models for bio research. It supports various tasks, including drug discovery, protein analysis, and small molecule design. NoLabs aims to accelerate bio research by making inference models accessible to everyone.

OpenCRISPR

OpenCRISPR is a set of free and open gene editing systems designed by Profluent Bio. The OpenCRISPR-1 protein maintains the prototypical architecture of a Type II Cas9 nuclease but is hundreds of mutations away from SpCas9 or any other known natural CRISPR-associated protein. You can view OpenCRISPR-1 as a drop-in replacement for many protocols that need a cas9-like protein with an NGG PAM and you can even use it with canonical SpCas9 gRNAs. OpenCRISPR-1 can be fused in a deactivated or nickase format for next generation gene editing techniques like base, prime, or epigenome editing.

ersilia

The Ersilia Model Hub is a unified platform of pre-trained AI/ML models dedicated to infectious and neglected disease research. It offers an open-source, low-code solution that provides seamless access to AI/ML models for drug discovery. Models housed in the hub come from two sources: published models from literature (with due third-party acknowledgment) and custom models developed by the Ersilia team or contributors.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

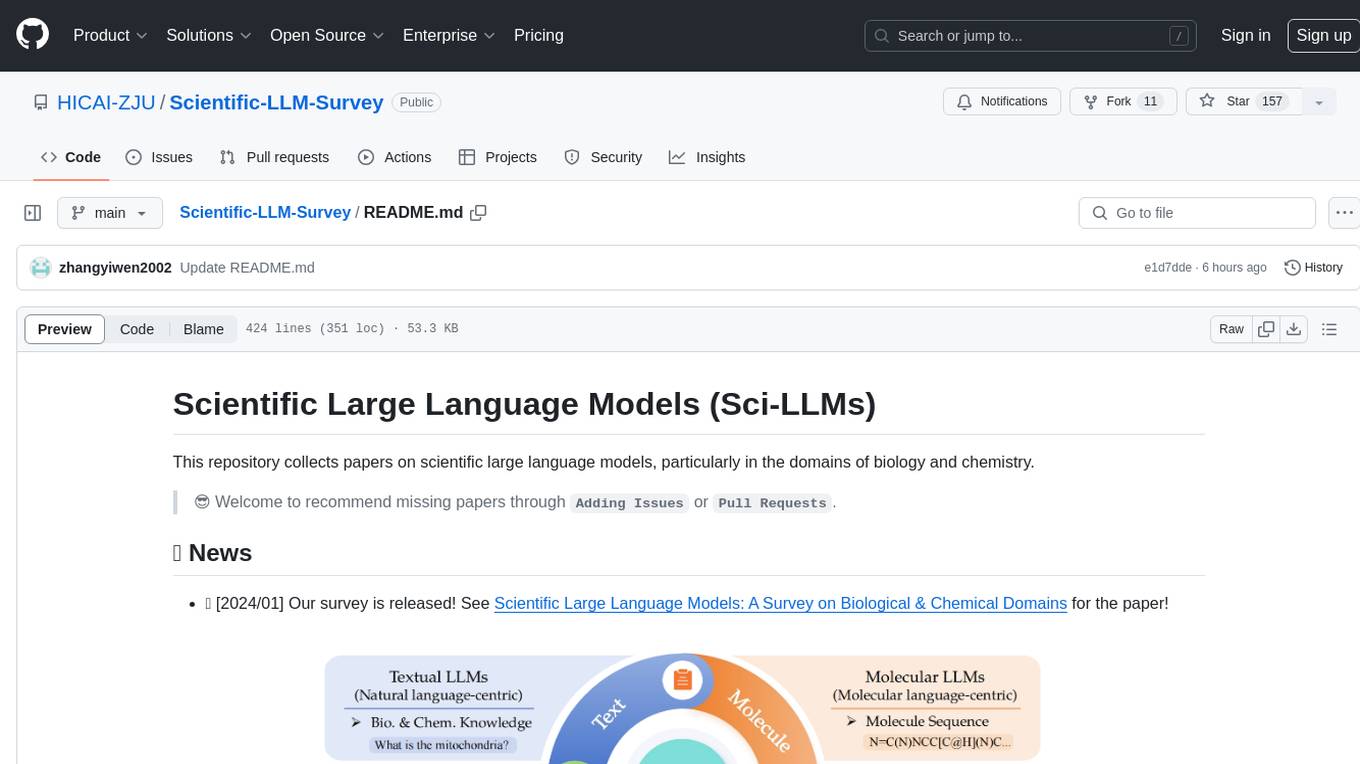

Scientific-LLM-Survey

Scientific Large Language Models (Sci-LLMs) is a repository that collects papers on scientific large language models, focusing on biology and chemistry domains. It includes textual, molecular, protein, and genomic languages, as well as multimodal language. The repository covers various large language models for tasks such as molecule property prediction, interaction prediction, protein sequence representation, protein sequence generation/design, DNA-protein interaction prediction, and RNA prediction. It also provides datasets and benchmarks for evaluating these models. The repository aims to facilitate research and development in the field of scientific language modeling.

polaris

Polaris establishes a novel, industry‑certified standard to foster the development of impactful methods in AI-based drug discovery. This library is a Python client to interact with the Polaris Hub. It allows you to download Polaris datasets and benchmarks, evaluate a custom method against a Polaris benchmark, and create and upload new datasets and benchmarks.

awesome-AI4MolConformation-MD

The 'awesome-AI4MolConformation-MD' repository focuses on protein conformations and molecular dynamics using generative artificial intelligence and deep learning. It provides resources, reviews, datasets, packages, and tools related to AI-driven molecular dynamics simulations. The repository covers a wide range of topics such as neural networks potentials, force fields, AI engines/frameworks, trajectory analysis, visualization tools, and various AI-based models for protein conformational sampling. It serves as a comprehensive guide for researchers and practitioners interested in leveraging AI for studying molecular structures and dynamics.