skynet

AI core services for Jitsi

Stars: 54

Skynet is an API server for AI services that wraps several apps and models. It consists of specialized modules that can be enabled or disabled as needed. Users can utilize Skynet for tasks such as summaries and action items with vllm or Ollama, live transcriptions with Faster Whisper via websockets, and RAG Assistant. The tool requires Poetry and Redis for operation. Skynet provides a quickstart guide for both Summaries/Assistant and Live Transcriptions, along with instructions for testing docker changes and running demos. Detailed documentation on configuration, running, building, and monitoring Skynet is available in the docs. Developers can contribute to Skynet by installing the pre-commit hook for linting. Skynet is distributed under the Apache 2.0 License.

README:

Skynet is an API server for AI services wrapping several apps and models.

It is comprised of specialized modules which can be enabled or disabled as needed.

- Summary and Action Items with vllm (or Ollama)

- Live Transcriptions with Faster Whisper via websockets

- RAG Assistant

- 🚧 More to follow

- Poetry

- Redis

# disable authorization

export BYPASS_AUTHORIZATION=1

# start Redis

docker run -d --rm -p 6379:6379 redis

# If using vLLM (running on NVIDIA GPU)

export LLAMA_PATH="$HOME/models/Llama-3.1-8B-Instruct"

poetry install --with vllm

# If using Ollama

export LLAMA_PATH="llama.3.1"

poetry install

./run.shVisit http://127.0.0.1:8000

Note: Make sure to have ffmpeg < 7 installed and to update the

DYLD_LIBRARY_PATHwith the path to the ffmpeg libraries, e.g.export DYLD_LIBRARY_PATH=/Users/MyUser/ffmpeg/6.1.2/lib:$DYLD_LIBRARY_PATH.

mkdir -p "$HOME/models/streaming-whisper"

export WHISPER_MODEL_NAME="tiny.en"

export BYPASS_AUTHORIZATION="true"

export ENABLED_MODULES="streaming_whisper"

export WHISPER_MODEL_PATH="$HOME/models/streaming-whisper"

poetry install

./run.shdocker compose -f compose-dev.yaml up --build

docker cp $HOME/models/Llama-3.1-8B-Instruct-Q8_0.gguf skynet-web-1:/models

docker restart skynet-web-1

# localhost:8000 for Skynet APIs

# localhost:8001/metrics for Prometheus metricsGo to Streaming Whisper Demo to test your deployment from a browser

OR

Go to demos/streaming-whisper/ and start a Python http server.

python3 -m http.server 8080Open http://127.0.0.1:8080.

Detailed documentation on how to configure, run, build and monitor Skynet and can be found in the docs.

If you want to contribute, make sure to install the pre-commit hook for linting.

poetry run githooks setupSkynet is distributed under the Apache 2.0 License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for skynet

Similar Open Source Tools

skynet

Skynet is an API server for AI services that wraps several apps and models. It consists of specialized modules that can be enabled or disabled as needed. Users can utilize Skynet for tasks such as summaries and action items with vllm or Ollama, live transcriptions with Faster Whisper via websockets, and RAG Assistant. The tool requires Poetry and Redis for operation. Skynet provides a quickstart guide for both Summaries/Assistant and Live Transcriptions, along with instructions for testing docker changes and running demos. Detailed documentation on configuration, running, building, and monitoring Skynet is available in the docs. Developers can contribute to Skynet by installing the pre-commit hook for linting. Skynet is distributed under the Apache 2.0 License.

batteries-included

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

LLMinator

LLMinator is a Gradio-based tool with an integrated chatbot designed to locally run and test Language Model Models (LLMs) directly from HuggingFace. It provides an easy-to-use interface made with Gradio, LangChain, and Torch, offering features such as context-aware streaming chatbot, inbuilt code syntax highlighting, loading any LLM repo from HuggingFace, support for both CPU and CUDA modes, enabling LLM inference with llama.cpp, and model conversion capabilities.

openmeter

OpenMeter is a real-time and scalable usage metering tool for AI, usage-based billing, infrastructure, and IoT use cases. It provides a REST API for integrations and offers client SDKs in Node.js, Python, Go, and Web. OpenMeter is licensed under the Apache 2.0 License.

ChatGPT

The ChatGPT API Free Reverse Proxy provides free self-hosted API access to ChatGPT (`gpt-3.5-turbo`) with OpenAI's familiar structure, eliminating the need for code changes. It offers streaming response, API endpoint compatibility, and complimentary access without an API key. Installation options include Docker, PC/Server, and Termux on Android devices. The API can be accessed through a self-hosted local server or a pre-hosted API with an API key obtained from the Discord server. Usage examples are provided for Python and Node.js, and the project is licensed under AGPL-3.0.

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

srcbook

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagraming with mermaid for rich annotations. Users can locally execute programs through a web interface, powered by Node.js under the Apache2 license.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

middleware

Middleware is an open-source engineering management tool that helps engineering leaders measure and analyze team effectiveness using DORA metrics. It integrates with CI/CD tools, automates DORA metric collection and analysis, visualizes key performance indicators, provides customizable reports and dashboards, and integrates with project management platforms. Users can set up Middleware using Docker or manually, generate encryption keys, set up backend and web servers, and access the application to view DORA metrics. The tool calculates DORA metrics using GitHub data, including Deployment Frequency, Lead Time for Changes, Mean Time to Restore, and Change Failure Rate. Middleware aims to provide DORA metrics to users based on their Git data, simplifying the process of tracking software delivery performance and operational efficiency.

mdserve

Markdown preview server for AI coding agents. mdserve is a tool that allows AI agents to write markdown and see it rendered live in the browser. It features zero configuration, single binary installation, instant live reload via WebSocket, ephemeral sessions, and agent-friendly content support. It is not a documentation site generator, static site server, or general-purpose markdown authoring tool. mdserve is designed for AI coding agents to produce content like tables, diagrams, and code blocks.

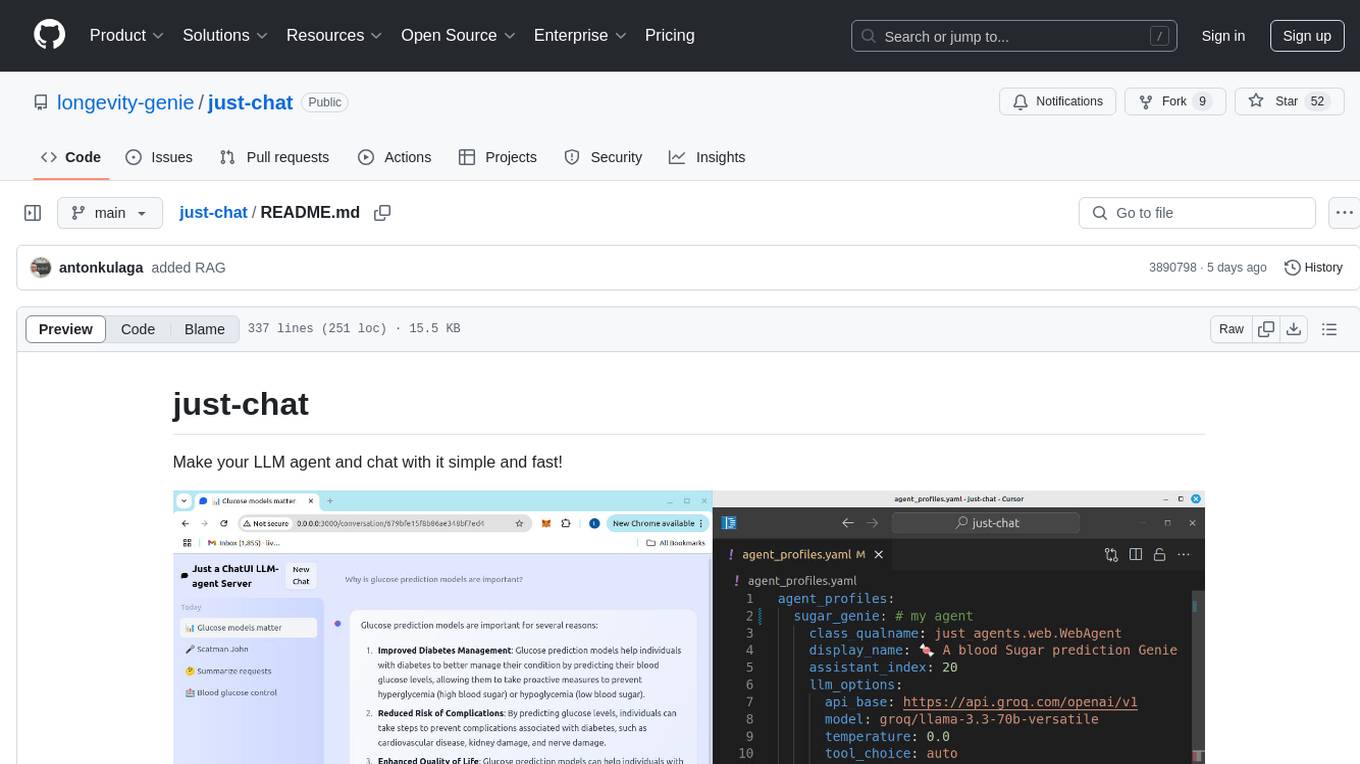

just-chat

Just-Chat is a containerized application that allows users to easily set up and chat with their AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models like DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc. Users can also use semantic search capabilities with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman for operation and provides detailed installation instructions for both Linux and Windows users.

sre-agent

SRE Agent is an open-source AI agent designed to help Site Reliability Engineers (SREs) debug, maintain healthy Kubernetes systems, and simplify DevOps tasks. With a command-line interface (CLI), users can interact directly with the agent to diagnose issues, report diagnostics, and streamline operations. The agent supports root cause debugging, Kubernetes log querying, GitHub codebase search, and CLI-powered interactions. It is powered by the Model Context Protocol (MCP) for seamless connectivity. Users can configure AWS credentials, GitHub integration, and Anthropic API key to start monitoring deployments and diagnosing issues. The tool is structured with Python services and TypeScript MCP servers for development and maintenance.

For similar tasks

sorrentum

Sorrentum is an open-source project that aims to combine open-source development, startups, and brilliant students to build machine learning, AI, and Web3 / DeFi protocols geared towards finance and economics. The project provides opportunities for internships, research assistantships, and development grants, as well as the chance to work on cutting-edge problems, learn about startups, write academic papers, and get internships and full-time positions at companies working on Sorrentum applications.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

craftgen

Craftgen.ai is an innovative AI platform designed for both technical and non-technical users. It's built on a foundation of graph architecture for scalability and the Actor Model for efficient concurrent operations, tailored to both technical and non-technical users. A key aspect of Craftgen.ai is its modular AI approach, allowing users to assemble and customize AI components like building blocks to fit their specific needs. The platform's robustness is enhanced by its event-driven architecture, ensuring reliable data processing and featuring browser web technologies for universal access. Craftgen.ai excels in dynamic tool and workflow generation, with strong offline capabilities for secure environments and plans for desktop application integration. A unique and valuable feature of Craftgen.ai is its marketplace, where users can access a variety of pre-built AI solutions. This marketplace accelerates the deployment of AI tools but also fosters a community of sharing and innovation. Users can contribute to and leverage this repository of solutions, enhancing the platform's versatility and practicality. Craftgen.ai uses JSON schema for industry-standard alignment, enabling seamless integration with any API following the OpenAPI spec. This allows for a broad range of applications, from automating data analysis to streamlining content management. The platform is designed to bridge the gap between advanced AI technology and practical usability. It's a flexible, secure, and intuitive platform that empowers users, from developers seeking to create custom AI solutions to businesses looking to automate routine tasks. Craftgen.ai's goal is to make AI technology an integral, seamless part of everyday problem-solving and innovation, providing a platform where modular AI and a thriving marketplace converge to meet the diverse needs of its users.

Data-Science-EBooks

This repository contains a collection of resources in the form of eBooks related to Data Science, Machine Learning, and similar topics.

BambooAI

BambooAI is a lightweight library utilizing Large Language Models (LLMs) to provide natural language interaction capabilities, much like a research and data analysis assistant enabling conversation with your data. You can either provide your own data sets, or allow the library to locate and fetch data for you. It supports Internet searches and external API interactions.

ai_wiki

This repository provides a comprehensive collection of resources, open-source tools, and knowledge related to quantitative analysis. It serves as a valuable knowledge base and navigation guide for individuals interested in various aspects of quantitative investing, including platforms, programming languages, mathematical foundations, machine learning, deep learning, and practical applications. The repository is well-structured and organized, with clear sections covering different topics. It includes resources on system platforms, programming codes, mathematical foundations, algorithm principles, machine learning, deep learning, reinforcement learning, graph networks, model deployment, and practical applications. Additionally, there are dedicated sections on quantitative trading and investment, as well as large models. The repository is actively maintained and updated, ensuring that users have access to the latest information and resources.

free-for-life

A massive list including a huge amount of products and services that are completely free! ⭐ Star on GitHub • 🤝 Contribute # Table of Contents * APIs, Data & ML * Artificial Intelligence * BaaS * Code Editors * Code Generation * DNS * Databases * Design & UI * Domains * Email * Font * For Students * Forms * Linux Distributions * Messaging & Streaming * PaaS * Payments & Billing * SSL

mslearn-ai-fundamentals

This repository contains materials for the Microsoft Learn AI Fundamentals module. It covers the basics of artificial intelligence, machine learning, and data science. The content includes hands-on labs, interactive learning modules, and assessments to help learners understand key concepts and techniques in AI. Whether you are new to AI or looking to expand your knowledge, this module provides a comprehensive introduction to the fundamentals of AI.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.