srcbook

TypeScript notebook for rapid prototyping

Stars: 2077

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagraming with mermaid for rich annotations. Users can locally execute programs through a web interface, powered by Node.js under the Apache2 license.

README:

- Create, run, and share reproducible programs and ideas

- Export to valid markdown format (.src.md)

- AI features for exploring and iterating on ideas

- Diagraming with mermaid for rich annotations

- Local execution with a web interface

- Powered by Node.js

- Open-source under the Apache2 license

See FAQ.

Srcbook runs locally on your machine as a CLI application with a web interface.

- Node 18+, we recommend using nvm to manage local node versions

- corepack to manage package manager versions

We recommend using npx to always run the latest version from npm

# Using npm

npx srcbook@latest start

# Using your pm equivalent

pnpm dlx srcbook@latest startYou can instead use a global install with

<pkg manager> i -g srcbookand then directly call srcbook withsrcbook start

$ srcbook -h

Usage: srcbook [options] [command]

Srcbook is a interactive programming environment for TypeScript

Options:

-V, --version output the version number

-h, --help display help for command

Commands:

start [options] Start the Srcbook server

import [options] <specifier> Import a Srcbook

help [command] display help for commandYou can remove srcbook by first removing the package, and then cleaning it's local directory on disk:

rm -rf ~/.srcbook

# if you configured a global install

npm uninstall -g srcbookif you used another pm you will need to use it's specific uninstall command

In order to improve Srcbook, we collect some behavioral analytics. We don't collect any Personal Identifiable Information (PII), our goals are simply to improve the application. The code is open source so you don't have to trust us, you can verify! You can find more information in our privacy policy.

If you want to disable tracking, you can run Srcbook with SRCBOOK_DISABLE_ANALYTICS=true set in the environment.

For development instructions, see CONTRIBUTING.md.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for srcbook

Similar Open Source Tools

srcbook

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagraming with mermaid for rich annotations. Users can locally execute programs through a web interface, powered by Node.js under the Apache2 license.

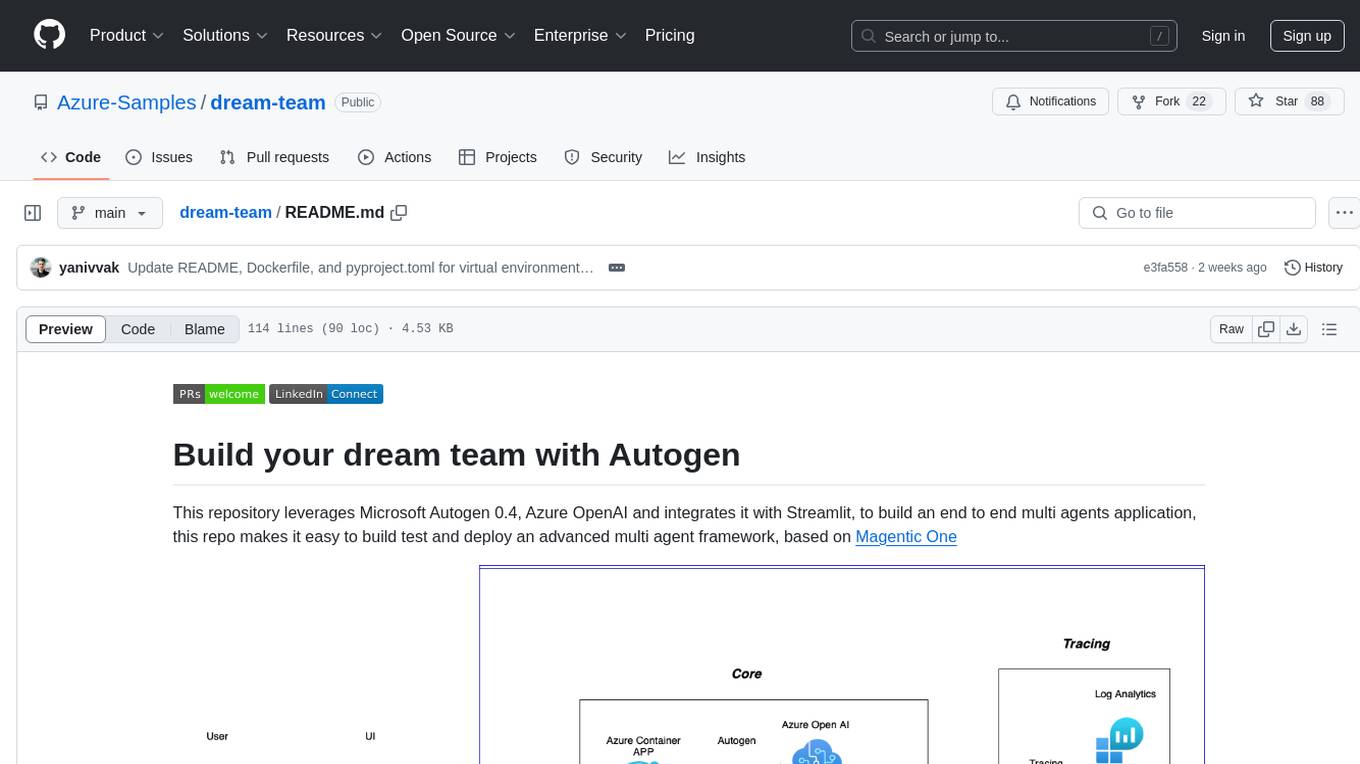

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

LLMinator

LLMinator is a Gradio-based tool with an integrated chatbot designed to locally run and test Language Model Models (LLMs) directly from HuggingFace. It provides an easy-to-use interface made with Gradio, LangChain, and Torch, offering features such as context-aware streaming chatbot, inbuilt code syntax highlighting, loading any LLM repo from HuggingFace, support for both CPU and CUDA modes, enabling LLM inference with llama.cpp, and model conversion capabilities.

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

starter-monorepo

Starter Monorepo is a template repository for setting up a monorepo structure in your project. It provides a basic setup with configurations for managing multiple packages within a single repository. This template includes tools for package management, versioning, testing, and deployment. By using this template, you can streamline your development process, improve code sharing, and simplify dependency management across your project. Whether you are working on a small project or a large-scale application, Starter Monorepo can help you organize your codebase efficiently and enhance collaboration among team members.

vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

PrAIvateSearch

PrAIvateSearch is a NextJS web application that aims to implement similar features to SearchGPT in an open-source, local, and private way. It allows users to search the web using their own AI model. The application provides a user-friendly interface for interacting with the AI model and accessing search results. PrAIvateSearch is designed to be easy to install and use, with detailed instructions provided in the readme file. The project is in beta stage and welcomes contributions from the community to improve and enhance its functionality. Users are encouraged to support the project through funding to help it grow and continue to be maintained as an open-source tool under the MIT license.

webwhiz

WebWhiz is an open-source tool that allows users to train ChatGPT on website data to build AI chatbots for customer queries. It offers easy integration, data-specific responses, regular data updates, no-code builder, chatbot customization, fine-tuning, and offline messaging. Users can create and train chatbots in a few simple steps by entering their website URL, automatically fetching and preparing training data, training ChatGPT, and embedding the chatbot on their website. WebWhiz can crawl websites monthly, collect text data and metadata, and process text data using tokens. Users can train custom data, but bringing custom open AI keys is not yet supported. The tool has no limitations on context size but may limit the number of pages based on the chosen plan. WebWhiz SDK is available on NPM, CDNs, and GitHub, and users can self-host it using Docker or manual setup involving MongoDB, Redis, Node, Python, and environment variables setup. For any issues, users can contact [email protected].

create-tsi

Create TSI is a generative AI RAG toolkit that simplifies the process of creating AI Applications using LlamaIndex with low code. The toolkit leverages LLMs hosted by T-Systems on Open Telekom Cloud to generate bots, write agents, and customize them for specific use cases. It provides a Next.js-powered front-end for a chat interface, a Python FastAPI backend powered by llama-index package, and the ability to ingest and index user-supplied data for answering questions.

buildware-ai

Buildware is a tool designed to help developers accelerate their code shipping process by leveraging AI technology. Users can build a code instruction system, submit an issue, and receive an AI-generated pull request. The tool is created by Mckay Wrigley and Tyler Bruno at Takeoff AI. Buildware offers a simple setup process involving cloning the repository, installing dependencies, setting up environment variables, configuring a database, and obtaining a GitHub Personal Access Token (PAT). The tool is currently being updated to include advanced features such as Linear integration, local codebase mode, and team support.

labs-ai-tools-for-devs

This repository provides AI tools for developers through Docker containers, enabling agentic workflows. It allows users to create complex workflows using Dockerized tools and Markdown, leveraging various LLM models. The core features include Dockerized tools, conversation loops, multi-model agents, project-first design, and trackable prompts stored in a git repo.

fasttrackml

FastTrackML is an experiment tracking server focused on speed and scalability, fully compatible with MLFlow. It provides a user-friendly interface to track and visualize your machine learning experiments, making it easy to compare different models and identify the best performing ones. FastTrackML is open source and can be easily installed and run with pip or Docker. It is also compatible with the MLFlow Python package, making it easy to integrate with your existing MLFlow workflows.

aider-composer

Aider Composer is a VSCode extension that integrates Aider into your development workflow. It allows users to easily add and remove files, toggle between read-only and editable modes, review code changes, use different chat modes, and reference files in the chat. The extension supports multiple models, code generation, code snippets, and settings customization. It has limitations such as lack of support for multiple workspaces, Git repository features, linting, testing, voice features, in-chat commands, and configuration options.

chatflow

Chatflow is a tool that provides a chat interface for users to interact with systems using natural language. The engine understands user intent and executes commands for tasks, allowing easy navigation of complex websites/products. This approach enhances user experience, reduces training costs, and boosts productivity.

For similar tasks

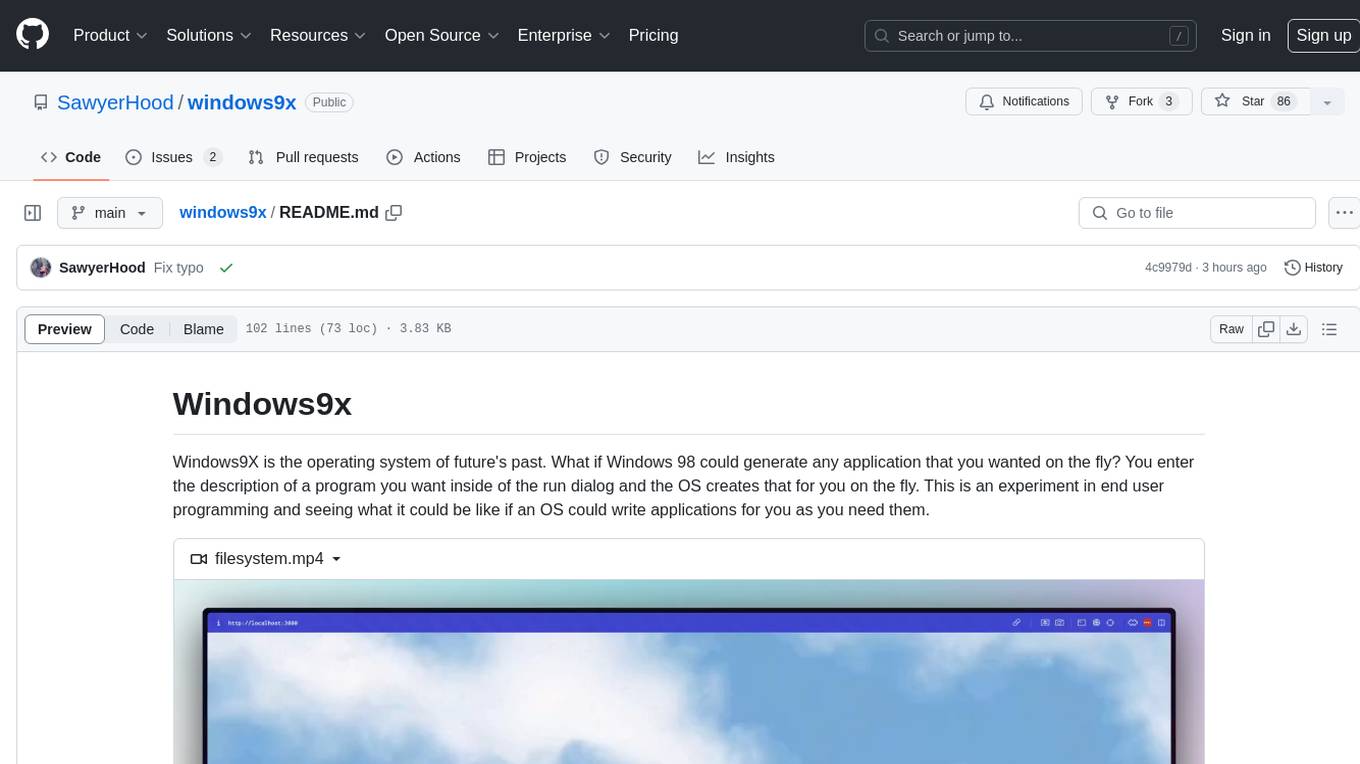

windows9x

Windows9X is an experimental operating system that allows users to generate applications on the fly by entering descriptions of programs. It leverages an LLM to create HTML files resembling Windows 98 applications, with access to a limited OS API for file operations, registry interactions, and LLM prompting.

srcbook

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagraming with mermaid for rich annotations. Users can locally execute programs through a web interface, powered by Node.js under the Apache2 license.

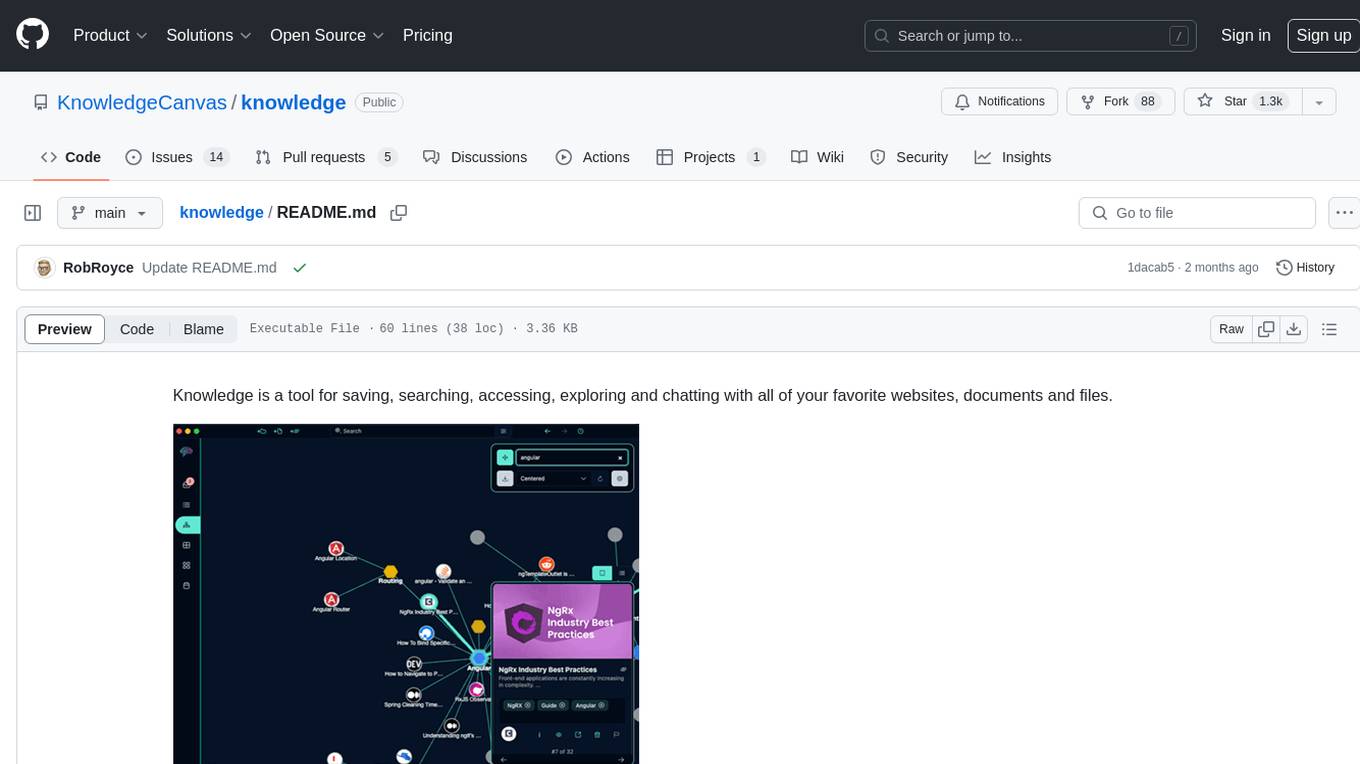

knowledge

Knowledge is a tool for saving, searching, accessing, exploring and chatting with all of your favorite websites, documents and files. Dive into a more interactive learning experience with Knowledge's new Chat feature! Engage in dynamic conversations with your Projects and Sources, leveraging the power of Large Language Models. The Chat feature is designed to transform the way you interact with your data, offering a more engaging and exploratory approach to learning. Unleash the power of context with the built-in Chromium browser. Transform your browsing into knowledge gathering effortlessly.

GenAiGuidebook

GenAiGuidebook is a comprehensive resource for individuals looking to begin their journey in GenAI. It serves as a detailed guide providing insights, tips, and information on various aspects of GenAI technology. The guidebook covers a wide range of topics, including introductory concepts, practical applications, and best practices in the field of GenAI. Whether you are a beginner or an experienced professional, this resource aims to enhance your understanding and proficiency in GenAI.

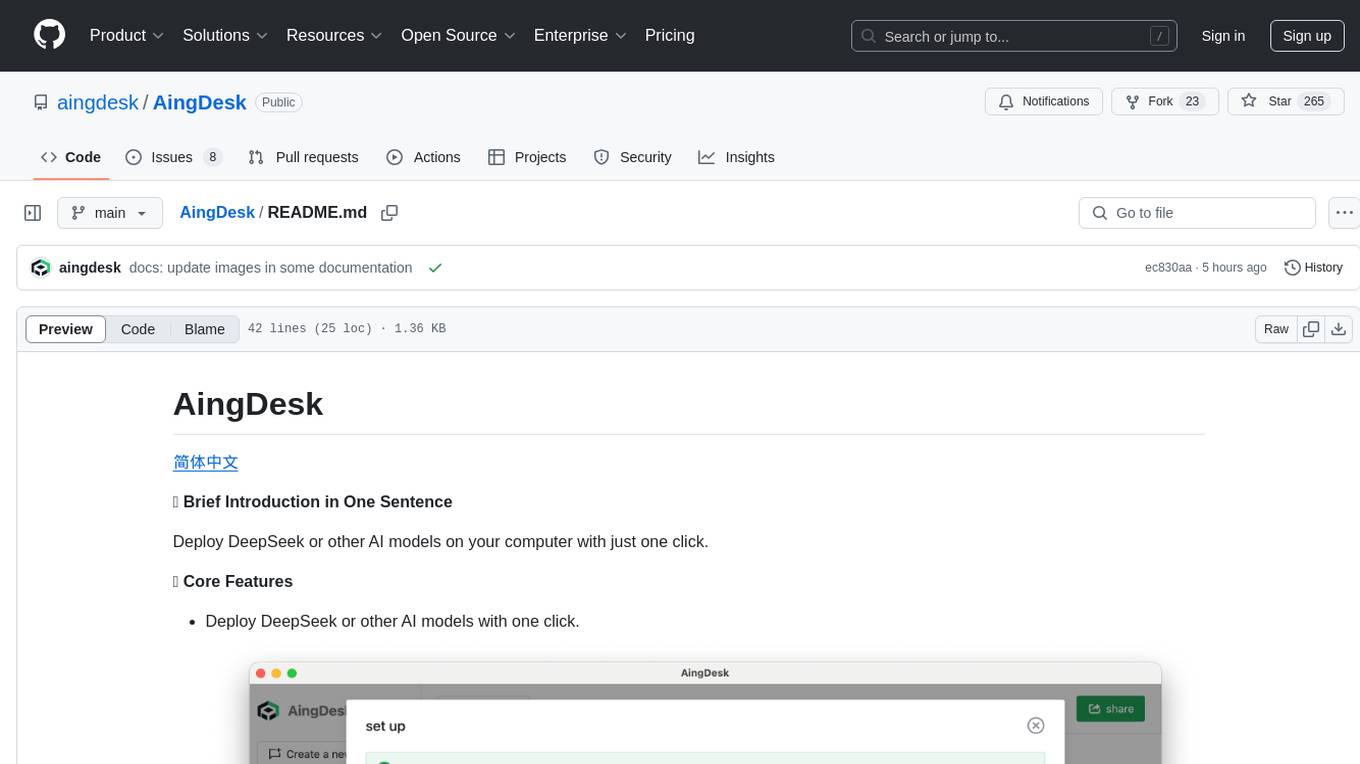

AingDesk

AingDesk is a tool that allows users to deploy DeepSeek or other AI models on their computer with just one click. It features a user-friendly interface, multi-source knowledge base support, built-in chat interface, and the ability to share projects online. The tool is optimized for performance on both local and cloud environments, with a focus on hassle-free setup and extensibility through a modular architecture. The development plan includes support for third-party API integrations and local deployment of text-to-image hybrid models for creative workflows.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.