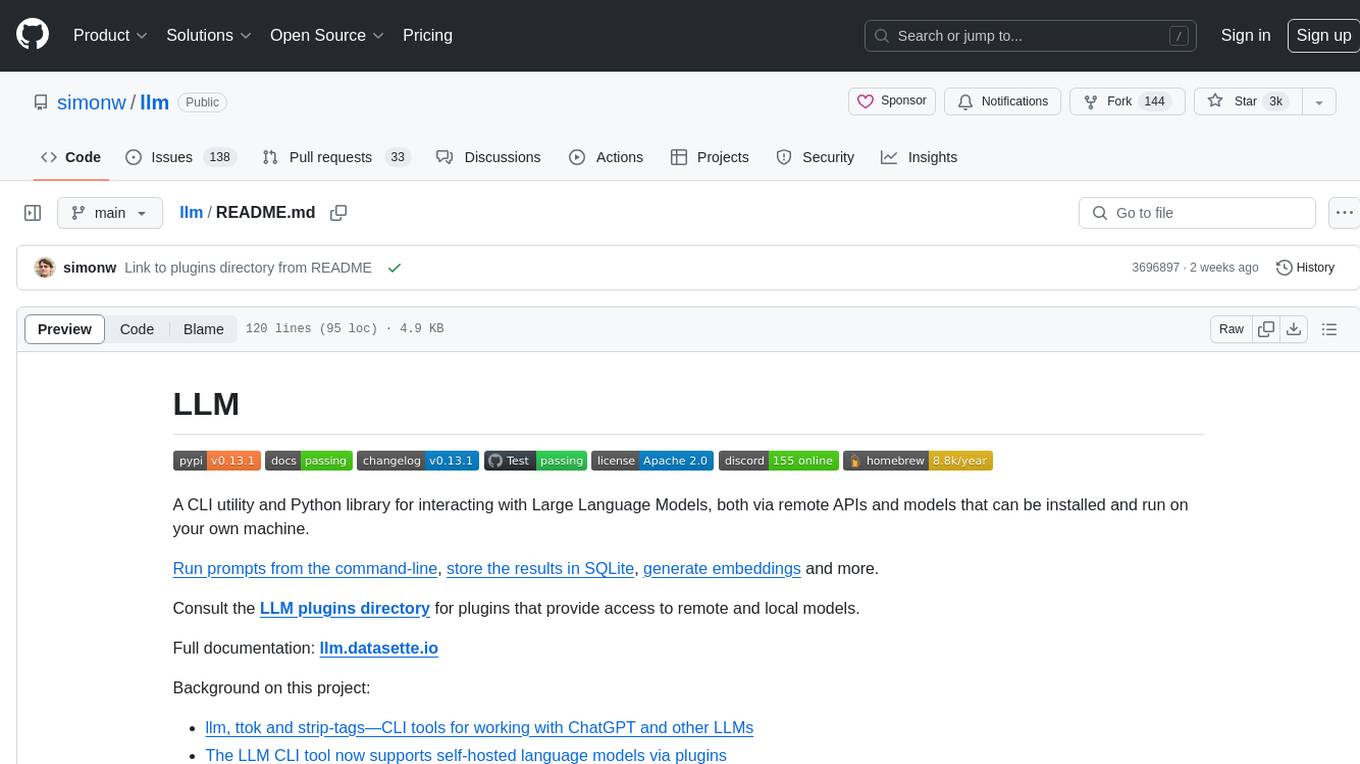

just-chat

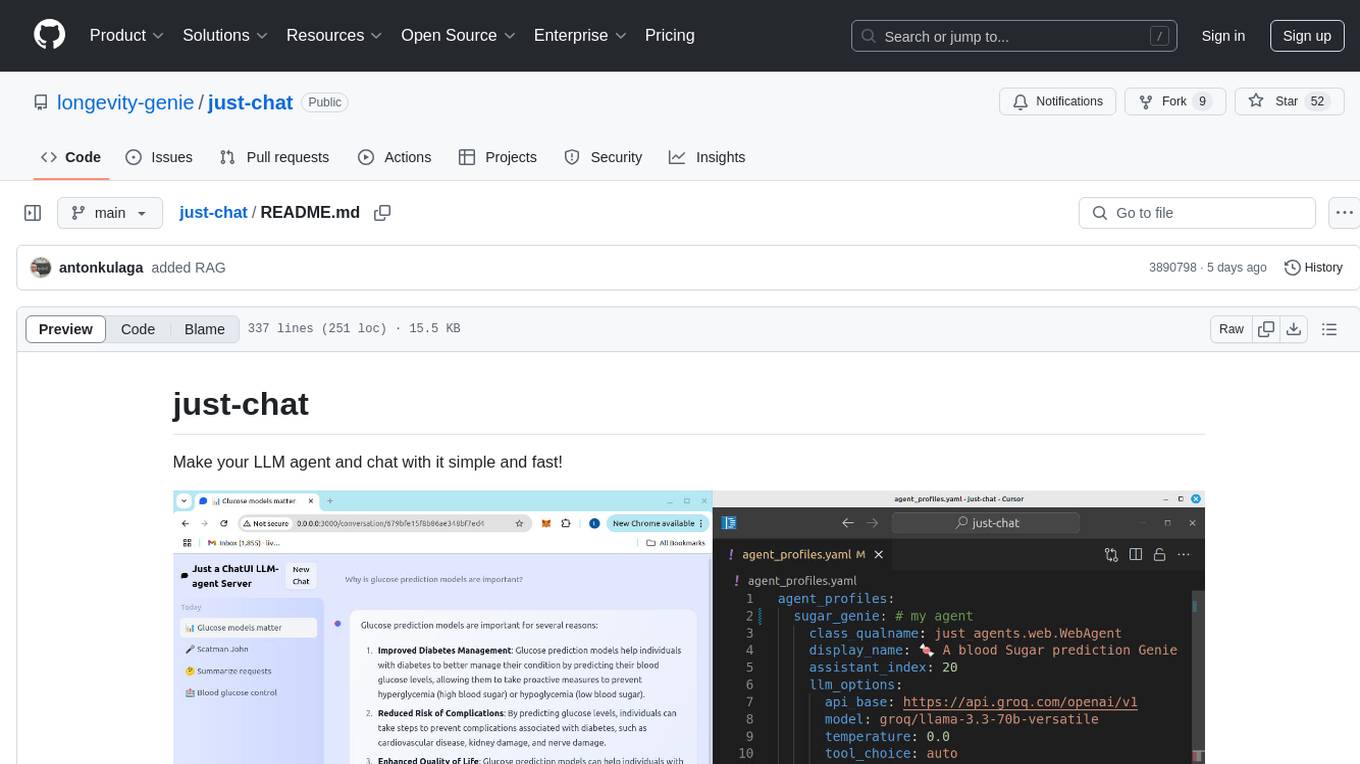

Make your LLM agent and chat with it simple and fast!

Stars: 52

Just-Chat is a containerized application that allows users to easily set up and chat with their own AI agent. Users can customize their AI assistant using a YAML file, add new capabilities with Python tools, and interact with the agent through a chat web interface. The tool supports various modern models, such as DeepSeek Reasoner, ChatGPT and LLAMA3.3. Users can also use semantic search with MeiliSearch to find and reference relevant information based on meaning. Just-Chat requires Docker or Podman and provides detailed installation instructions for both Linux and Windows users.

README:


Setting up your agent and your chat in a few clicks

Just clone the repository and run Docker Compose!

git clone git@github.com:longevity-genie/just-chat.git

cd just-chat

USER_ID=$(id -u) GROUP_ID=$(id -g) docker compose up

And the chat with your agent is ready to go! Open http://localhost:3000 in your browser and start chatting with your agent!

Note: the container is started with the user and group of the host machine to avoid permission issues. If you want to change this, modify the USER_ID and GROUP_ID variables in the docker-compose.yml file.
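If you launch Just-Chat from a Python script rather than a shell, the USER_ID/GROUP_ID prefix can be reproduced by passing those variables in the child process environment. This is a minimal sketch, assuming a POSIX host (os.getuid and os.getgid do not exist on Windows) and a repository cloned into a just-chat folder; the helper names compose_env and start_chat are hypothetical and not part of the project.

```python
import os
import subprocess


def compose_env() -> dict:
    """Return a copy of the current environment plus the host user and group ids.

    Mirrors the USER_ID=$(id -u) GROUP_ID=$(id -g) prefix of the shell command.
    os.getuid() and os.getgid() are only available on POSIX systems.
    """
    env = dict(os.environ)
    env["USER_ID"] = str(os.getuid())
    env["GROUP_ID"] = str(os.getgid())
    return env


def start_chat(project_dir: str = "just-chat") -> None:
    """Run `docker compose up` inside the cloned repository (hypothetical helper)."""
    subprocess.run(["docker", "compose", "up"], cwd=project_dir,
                   env=compose_env(), check=True)
```

Calling start_chat() blocks in the foreground, like running the command in a terminal, until you stop the containers with Ctrl+C.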

If you prefer Podman, or use an RPM-based distro where it is the default, you can use the alternative Podman setup:

git clone git@github.com:longevity-genie/just-chat.git

cd just-chat

podman-compose up

Unlike Docker, Podman is rootless by design and maps user and group IDs automatically. NB! Ubuntu 22.04 ships Podman v3, which is not fully compatible with this setup; assume Podman v4.9.3 or higher is required.
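Because the setup assumes Podman 4.9.3 or newer, a quick version check can save a confusing failure on older distributions. The sketch below parses the text printed by podman --version (for example "podman version 4.9.3"); the function names and the exact output format it expects are assumptions for illustration.

```python
import re
import subprocess

MIN_PODMAN = (4, 9, 3)  # minimum version suggested by this README


def parse_podman_version(output: str) -> tuple:
    """Extract (major, minor, patch) from text like 'podman version 4.9.3'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError(f"no version number found in {output!r}")
    return tuple(int(part) for part in match.groups())


def podman_is_recent_enough(output: str, minimum: tuple = MIN_PODMAN) -> bool:
    # Tuples compare element by element, so (5, 0, 0) > (4, 9, 3).
    return parse_podman_version(output) >= minimum


def installed_podman_ok() -> bool:
    """Check the real CLI; raises FileNotFoundError if podman is not installed."""
    result = subprocess.run(["podman", "--version"], capture_output=True,
                            text=True, check=True)
    return podman_is_recent_enough(result.stdout)
```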

You can customize your setup by:

- Editing chat_agent_profiles.yaml to customize your agent

- Adding tools to the /agent_tools directory to empower your agent

- Modifying docker-compose.yml for advanced settings (optional)
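As an illustration of the kind of function that could live in /agent_tools, here is a hypothetical glucose unit converter (a nod to the bundled GlucoseDAO data). It assumes tools are plain Python functions whose type hints and docstring describe them to the model, which is how just-agents generally exposes function calls; how a tool is registered for an agent in chat_agent_profiles.yaml is documented in the /agent_tools README, so treat this as a sketch rather than the project's exact convention.

```python
def mg_dl_to_mmol_l(glucose_mg_dl: float) -> float:
    """Convert a blood glucose reading from mg/dL to mmol/L.

    Args:
        glucose_mg_dl: Glucose concentration in milligrams per decilitre.

    Returns:
        The same concentration in millimoles per litre.
    """
    if glucose_mg_dl < 0:
        raise ValueError("glucose concentration cannot be negative")
    # Glucose has a molar mass of about 180.16 g/mol (mg/mmol) and 1 dL = 0.1 L,
    # so mmol/L = mg/dL * 10 / 180.16.
    return glucose_mg_dl * 10 / 180.16
```

Raising a clear error on invalid input gives the agent something it can explain to the user, instead of a silently wrong number.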

The only requirement is Docker (or Podman, both are supported)! We provide detailed installation instructions for both Linux and Windows in the Installation section. Also check the notes section for further information.

- 🚀 Start chatting with one command (docker compose up)

- 🤖 Customize your AI assistant using a YAML file (can be edited with a text editor)

- 🛠️ Add new capabilities with Python tools (can add additional functions and libraries)

- 🌐 Talk to your agent through a chat web interface at 0.0.0.0:3000

- 🐳 Run everything in Docker containers

- 📦 Works without Python or Node.js on your system

We use the just-agents library to initialize agents from YAML, so most modern models (DeepSeek Reasoner, ChatGPT, LLAMA3.3, etc.) are supported. However, you might need to add your own API keys to the environment variables. We provide a free Groq key by default, but it is heavily rate-limited. We recommend getting your own keys; Groq is a good place to start, as it is free and hosts many open-source models.
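Since models are addressed through LiteLLM-style names such as groq/llama-3.3-70b-versatile, you can often tell which key a model needs from its provider prefix. This sketch assumes the common PROVIDER_API_KEY naming (GROQ_API_KEY, DEEPSEEK_API_KEY, and so on) and treats unprefixed names as OpenAI models; some providers (Azure, for example) use different variables, so check the LiteLLM provider documentation before relying on it. The function names are hypothetical.

```python
def expected_key_name(model: str) -> str:
    """Guess the API-key variable for a LiteLLM-style 'provider/model' string.

    Assumption: keys follow the <PROVIDER>_API_KEY convention, and bare model
    names without a provider prefix are OpenAI models.
    """
    provider = model.split("/", 1)[0] if "/" in model else "openai"
    return provider.upper().replace("-", "_") + "_API_KEY"


def missing_keys(models, env) -> list:
    """Return the sorted key names that the given models need but env lacks."""
    needed = {expected_key_name(model) for model in models}
    return sorted(name for name in needed if not env.get(name))
```

For example, missing_keys(["groq/llama-3.3-70b-versatile"], os.environ) returns an empty list once GROQ_API_KEY is set.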

Podman is a safer container environment than Docker. If you prefer Podman or use Fedora or any other RPM-based Linux distribution, you can use it instead of Docker:

git clone https://github.com/winternewt/just-chat.git

cd just-chat

podman compose up

Unlike Docker, Podman is rootless by design and maps user and group IDs automatically.


- chat_agent_profiles.yaml - Configure your agents, their personalities and capabilities; example agents are provided.

- /agent_tools/ - Python tools to extend agent capabilities. Contains example tools and instructions for adding your own tools with custom dependencies.

- /data/ - Application data storage, if you want to let your agent work with additional data.

- docker-compose.yml - Container orchestration and service configuration.

- /env/ - Environment configuration files and settings.

- images/ - Images for the README.

- /logs/ - Application logs. We use the eliot library for logging.

- /scripts/ - Utility scripts, including Docker installation helpers.

- /volumes/ - Docker volume mounts for persistent storage.

Note: each folder contains an additional README file with more information about its contents!

Just-Chat is a containerized application. To run it, you can use either Docker or Podman. You can skip this section if you already have either of them installed. If you do not have a preference, we recommend Podman as a more modern and secure container engine.

Detailed Docker instructions:

Docker Installation on Linux

Refer to the official guides:

For Ubuntu users, you can review and use the provided convenience script:

./scripts/install_docker_ubuntu.sh

Or follow these manual steps:

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl

sudo install -m 0755 -d /etc/apt/keyrings

sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc

sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

To also get the standalone docker-compose command (writing to /usr/local/bin requires sudo):

sudo curl -SL https://github.com/docker/compose/releases/download/v2.32.4/docker-compose-linux-$(uname -m) -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -sf /usr/local/bin/docker-compose /usr/bin/docker-compose

Note: on many systems the command is docker compose (without the dash).
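Because the command is docker compose on some systems and docker-compose on others, scripts that wrap Just-Chat need to pick whichever one exists. A minimal sketch, assuming the Compose v2 plugin answers docker compose version successfully when it is installed; the detection functions are injectable so the logic can be tested without Docker, and all names are hypothetical.

```python
import shutil
import subprocess


def docker_compose_plugin_works() -> bool:
    """True if `docker compose version` runs successfully (Compose v2 plugin)."""
    try:
        subprocess.run(["docker", "compose", "version"],
                       capture_output=True, check=True)
        return True
    except (OSError, subprocess.CalledProcessError):
        # OSError covers a missing docker binary; CalledProcessError a missing plugin.
        return False


def compose_command(which=shutil.which, plugin_works=docker_compose_plugin_works) -> list:
    """Return the argv prefix to use: ["docker", "compose"] or ["docker-compose"]."""
    if which("docker") and plugin_works():
        return ["docker", "compose"]
    if which("docker-compose"):
        return ["docker-compose"]
    raise RuntimeError("neither 'docker compose' nor 'docker-compose' is available")
```

A wrapper script could then run subprocess.run(compose_command() + ["up", "-d"], check=True) regardless of which variant is installed.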

Docker Installation on Windows

- Windows 10 (Pro, Enterprise, Education) Version 1909 or later

- Windows 11 (any edition)

- WSL 2 (Windows Subsystem for Linux) enabled (recommended) - WSL Installation Guide

- Hyper-V enabled (if using Windows 10 Pro/Enterprise)

- At least 4GB of RAM (recommended)

- Download Docker Desktop

- Run the installer and follow the prompts

- Restart your PC

- Launch Docker Desktop

- Docker Compose is included with Docker Desktop

For detailed instructions and troubleshooting, see the official Windows installation guide.

If you prefer Podman, you can use the following instructions:

Podman Installation on Linux

For Ubuntu users (especially Ubuntu 24.04+):

# Install Podman

sudo apt-get update

sudo apt-get install -y podman

# Install Python3 and pip if not already installed

sudo apt-get install -y python3 python3-pip

# Install Podman Compose

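pip3 install podman-compose

Note: on Ubuntu 23.04 and later, pip may refuse system-wide installs with an "externally-managed-environment" error (PEP 668); installing the distribution's podman-compose package with apt, or using pipx, avoids this.

For legacy Ubuntu users (22.04 LTS): sadly, you will have to build Podman from source. Because the Go version in Ubuntu 22.04 LTS is outdated, you will first need to add a PPA repository and install a newer Go toolchain, along with a number of libraries: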

sudo add-apt-repository ppa:longsleep/golang-backports

sudo apt update

sudo sysctl kernel.unprivileged_userns_clone=1

sudo apt install git build-essential btrfs-progs gcc golang-go go-md2man iptables libassuan-dev libbtrfs-dev libc6-dev libdevmapper-dev libglib2.0-dev libgpgme-dev libgpg-error-dev libprotobuf-dev libprotobuf-c-dev libseccomp-dev libselinux1-dev libsystemd-dev make containernetworking-plugins pkg-config uidmap

sudo apt install runc # only if you don't have Docker installed

Clone the Podman repository and check out v4.9.3, the latest stable version shipped with Ubuntu 24.04 LTS:

git clone https://github.com/containers/podman.git

cd podman/

git checkout v4.9.3

make

sudo make install

podman --version

For other Linux distributions, refer to:

Podman Installation on Windows

- Windows 10/11

- WSL 2 enabled

- 4GB RAM (recommended)

- Download and install Podman Desktop

- Initialize Podman:

podman machine init

podman machine start

- Install Podman Compose:

pip3 install podman-compose

For detailed instructions, see the official Podman documentation.

git clone https://github.com/winternewt/just-chat.git

cd just-chat

USER_ID=$(id -u) GROUP_ID=$(id -g) docker compose up

Note: here we use USER_ID=$(id -u) GROUP_ID=$(id -g) to run as the current user instead of root; if that does not matter to you, you can simply run docker compose up. We also provide experimental start and stop scripts in bash (for Linux) and bat (for Windows).
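Containers can take a while to pull and start, so http://localhost:3000 may not answer immediately. The sketch below polls the URL with only the Python standard library until it responds or a timeout expires; the function name and the 60-second default are assumptions, not project settings.

```python
import time
import urllib.error
import urllib.request


def wait_for_http(url: str = "http://localhost:3000", timeout: float = 60.0,
                  interval: float = 1.0) -> bool:
    """Poll url until it answers or `timeout` seconds pass; True if it answered."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval):
                return True
        except urllib.error.HTTPError:
            # An error status (404, 500, ...) still means a server is listening.
            return True
        except OSError:
            # Connection refused, reset or timed out: the UI is not up yet.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

Any HTTP response, including an error status, counts as "up" here, because it proves the web server is accepting requests.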

Just-Chat provides semantic search using MeiliSearch, which allows your agent to find and reference relevant information in documents based on meaning rather than just keywords:

- After starting the application, open the API documentation at localhost:9000/docs

- Use the API to index markdown files (use the "Try it out" button):

  - Example: index the included GlucoseDAO markdown files into the "glucosedao" index by calling /index_markdown for the /app/data/glucosedao_markdown folder

- Enable semantic search in your agent:

  - Uncomment the search-related system prompt sections in chat_agent_profiles.yaml

  - Set the index to "glucosedao" (or your custom index name if you indexed other content)

Note: everything you copy to the ./data folder is available inside the container as /app/data/

This feature allows your agent to search and reference specific knowledge bases during conversations.
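Since ./data on the host appears as /app/data inside the container, the folder you pass to /index_markdown has to be the in-container path. This hypothetical helper translates a host path to that form and lists the markdown files that would be picked up; it assumes you run it from the repository root, next to the data folder.

```python
from pathlib import Path, PurePosixPath

# Where the host ./data folder appears inside the container.
CONTAINER_DATA_DIR = PurePosixPath("/app/data")


def to_container_path(host_path: str, host_data_dir: str = "data") -> str:
    """Translate a path under the host ./data folder to its in-container path."""
    host_root = Path(host_data_dir).resolve()
    # relative_to raises ValueError if host_path is outside ./data,
    # i.e. the file would not be visible inside the container at all.
    relative = Path(host_path).resolve().relative_to(host_root)
    return str(CONTAINER_DATA_DIR.joinpath(*relative.parts))


def markdown_files(folder: str) -> list:
    """List the .md files in a folder (recursively), sorted for stable output."""
    return sorted(str(path) for path in Path(folder).rglob("*.md"))
```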

- Be sure to run docker pull (or podman pull if you use Podman) from time to time, since containers are not always updated automatically when the image is referenced with :latest. An outdated image might even cause errors at runtime, so keep this in mind.

- After the application is started, you can access the chat interface at 0.0.0.0:3000.

- Key settings in docker-compose.yml (or podman-compose.yml if you use Podman):

  - UI Port: 0.0.0.0:3000 (under the huggingchat-ui service)

  - Agent Port: 127.0.0.1:8091:8091 (under the just-chat-ui-agents service)

  - MongoDB Port: 27017 (under the chat-mongo service)

  - Container image versions:

    - just-chat-ui-agents: ghcr.io/longevity-genie/just-agents/chat-ui-agents:main

    - chat-ui: ghcr.io/longevity-genie/chat-ui/chat-ui:sha-325df57

    - mongo: latest

- Troubleshooting container conflicts:

  - Check running containers: docker ps (or podman ps if you use Podman)

  - Stop conflicting containers: cd /path/to/container/directory, then run docker compose down

  - Note: depending on your system and installation, you might need to use docker-compose (with dash) instead of docker compose (without dash).

- Best practices for container management:

  - Always stop containers when you are done, using either:

    - docker compose down (or docker-compose down)

    - Ctrl+C followed by docker compose down

    This prevents port conflicts in future sessions.

  - To run in background mode, use: docker compose up -d

- To change the model used in chat_agent_profiles.yaml, see the model types defined in the just-agents library.
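Most container conflicts show up as ports that are already taken. This standard-library sketch checks the default host ports from the key settings listed above (3000 for the UI, 8091 for the agents API, 27017 for MongoDB) before you run docker compose up; it assumes those ports are published on the host as in the default docker-compose.yml, and the function names are hypothetical.

```python
import socket

# Default host ports from docker-compose.yml, as listed in the key settings.
JUST_CHAT_PORTS = {"chat UI": 3000, "agents API": 8091, "MongoDB": 27017}


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 0.5) -> bool:
    """Return True if something is already accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising on refusal.
        return sock.connect_ex((host, port)) == 0


def busy_ports(ports=JUST_CHAT_PORTS) -> dict:
    """Map service name to port for every port that is already taken."""
    return {name: port for name, port in ports.items() if port_in_use(port)}
```

If busy_ports() returns anything before you start Just-Chat, docker ps (or podman ps) will usually show which leftover containers hold those ports.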

The application uses environment variables to store API keys for various Language Model providers. A default configuration file is created under env/.env.keys during the initialization process. You can customize these keys to enable integrations with your preferred LLM providers.

- GROQ: A default API key is provided on the first run if no keys are present. However, this key is shared with other users and is rate-limited (you can get rate limit errors from time to time). We recommend getting your own key from Groq. For additional LLM providers and their respective key configurations, please refer to the LiteLLM Providers Documentation.

We provide MeiliSearch semantic search with the ability to add your own documents. You can use the corresponding REST API methods either via direct calls or through the default Swagger UI (just open just-chat-agents, which uses localhost:8091 by default). To parse your own PDF documents we use MISTRAL_OCR, so if you want to upload PDFs, please add MISTRAL_API_KEY to env/.env.keys. For auto-annotation, the free GROQ key may not be enough because of rate limits; please either provide a paid GROQ key or change the model in annotation_agent.
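To see at a glance which keys are still missing, env/.env.keys can be read as simple KEY=value lines. This is a sketch under stated assumptions: it does not handle the full dotenv syntax (multi-line values, export prefixes, inline comments), it treats commented-out keys as unset, and GROQ_API_KEY is assumed to be the variable name for the Groq key; the project's own scripts/init_env.py remains the reference.

```python
from pathlib import Path


def read_env_keys(text: str) -> dict:
    """Parse simple KEY=value lines; blank lines and # comments are ignored."""
    keys = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        name, value = line.split("=", 1)
        # Drop surrounding quotes, as in KEY="value".
        keys[name.strip()] = value.strip().strip("\"'")
    return keys


def missing_env_keys(required, keys: dict) -> list:
    """Return the required names that are absent or empty, in the given order."""
    return [name for name in required if not keys.get(name)]


def check_env_file(path: str = "env/.env.keys",
                   required=("GROQ_API_KEY", "MISTRAL_API_KEY")) -> list:
    """Read the keys file and report which required keys still need a value."""
    return missing_env_keys(required, read_env_keys(Path(path).read_text()))
```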

- By default, the application logs to the console and to timestamped files in the /logs directory.

- If you run the application in background mode, you can still review the console logs by running: docker compose logs -f just-chat-ui-agents

- Langfuse: uncomment and fill in your credentials in env/.env.keys to enable additional observability for LLM calls.

  - Note: Langfuse is not enabled by default.

- NB! docker compose down will flush the container logs, but application logs will still be available in the /logs directory unless you delete them manually.
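When reviewing the timestamped files in /logs, it helps to open the newest one and skim what happened. The sketch below assumes the files contain eliot's JSON-lines output with its usual fields (timestamp, message_type for messages, action_type and action_status for actions); the helper names are hypothetical, and lines that are not JSON objects are simply skipped.

```python
import json
from pathlib import Path


def newest_log_file(log_dir: str = "logs"):
    """Return the most recently modified file in log_dir, or None if there is none."""
    files = [path for path in Path(log_dir).iterdir() if path.is_file()]
    return max(files, key=lambda path: path.stat().st_mtime, default=None)


def summarize_eliot(lines):
    """Yield (timestamp, kind) for each JSON log entry; other lines are skipped.

    Assumes eliot's usual fields: "message_type" for log messages and
    "action_type" plus "action_status" for actions.
    """
    for line in lines:
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(entry, dict):
            continue
        if "message_type" in entry:
            kind = entry["message_type"]
        else:
            kind = f'{entry.get("action_type", "?")} ({entry.get("action_status", "?")})'
        yield entry.get("timestamp"), kind
```

For example, read newest_log_file().read_text().splitlines() and pass the result to summarize_eliot to print one line per logged event.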

- Editing the API Keys File: the API keys are stored in /app/env/.env.keys. You can update this file manually or run the initialization script at scripts/init_env.py to automatically add commented hints for missing keys.

- Using Environment Variables: when running the application via Docker, these keys are automatically loaded into the container's environment. Feel free to use other means to populate the environment variables, as long as the application can access them.

- Restart the Application: after updating the API keys, stop and start the containers to make sure the new keys are loaded:

  docker compose down

  docker compose up

Happy chatting!

This project is supported by:

HEALES - Healthy Life Extension Society

and

IBIMA - Institute for Biostatistics and Informatics in Medicine and Ageing Research


Alternative AI tools for just-chat

Similar Open Source Tools


vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.

aio-switch-updater

AIO-Switch-Updater is a Nintendo Switch homebrew app that allows users to download and update custom firmware, firmware files, cheat codes, and more. It supports Atmosphère, ReiNX, and SXOS on both unpatched and patched Switches. The app provides features like updating CFW with custom RCM payload, updating Hekate/payload, custom downloads, downloading firmwares and cheats, and various tools like rebooting to specific payload, changing color schemes, consulting cheat codes, and more. Users can contribute by submitting PRs and suggestions, and the app supports localization. It does not host or distribute any files and gives special thanks to contributors and supporters.

frontend

Nuclia frontend apps and libraries repository contains various frontend applications and libraries for the Nuclia platform. It includes components such as Dashboard, Widget, SDK, Sistema (design system), NucliaDB admin, CI/CD Deployment, and Maintenance page. The repository provides detailed instructions on installation, dependencies, and usage of these components for both Nuclia employees and external developers. It also covers deployment processes for different components and tools like ArgoCD for monitoring deployments and logs. The repository aims to facilitate the development, testing, and deployment of frontend applications within the Nuclia ecosystem.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO: * The `dags` directory in this repository contains some custom DAG definitions * Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl * The Data SRE team maintains a WTMO Developer Guide (behind SSO)

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, and more. It provides Interactive Broker connectivity via ib_async and includes major Python packages for statistical and time series analysis. The image is optimized for size, includes jedi language server, jupyterlab-lsp, and common command line utilities. Users can install new packages with sudo, leverage apt cache, and bring their own dot files and SSH keys. The tool is designed for ephemeral containers, ensuring data persistence and flexibility for quantitative analysis tasks.

jupyter-quant

Jupyter Quant is a dockerized environment tailored for quantitative research, equipped with essential tools like statsmodels, pymc, arch, py_vollib, zipline-reloaded, PyPortfolioOpt, numpy, pandas, sci-py, scikit-learn, yellowbricks, shap, optuna, ib_insync, Cython, Numba, bottleneck, numexpr, jedi language server, jupyterlab-lsp, black, isort, and more. It does not include conda/mamba and relies on pip for package installation. The image is optimized for size, includes common command line utilities, supports apt cache, and allows for the installation of additional packages. It is designed for ephemeral containers, ensuring data persistence, and offers volumes for data, configuration, and notebooks. Common tasks include setting up the server, managing configurations, setting passwords, listing installed packages, passing parameters to jupyter-lab, running commands in the container, building wheels outside the container, installing dotfiles and SSH keys, and creating SSH tunnels.

vasttools

This repository contains a collection of tools that can be used with vastai. The tools are free to use, modify and distribute. If you find this useful and wish to donate, you're welcome to send your donations to the following wallets. BTC 15qkQSYXP2BvpqJkbj2qsNFb6nd7FyVcou XMR 897VkA8sG6gh7yvrKrtvWningikPteojfSgGff3JAUs3cu7jxPDjhiAZRdcQSYPE2VGFVHAdirHqRZEpZsWyPiNK6XPQKAg RVN RSgWs9Co8nQeyPqQAAqHkHhc5ykXyoMDUp USDT(ETH ERC20) 0xa5955cf9fe7af53bcaa1d2404e2b17a1f28aac4f Paypal PayPal.Me/cryptolabsZA

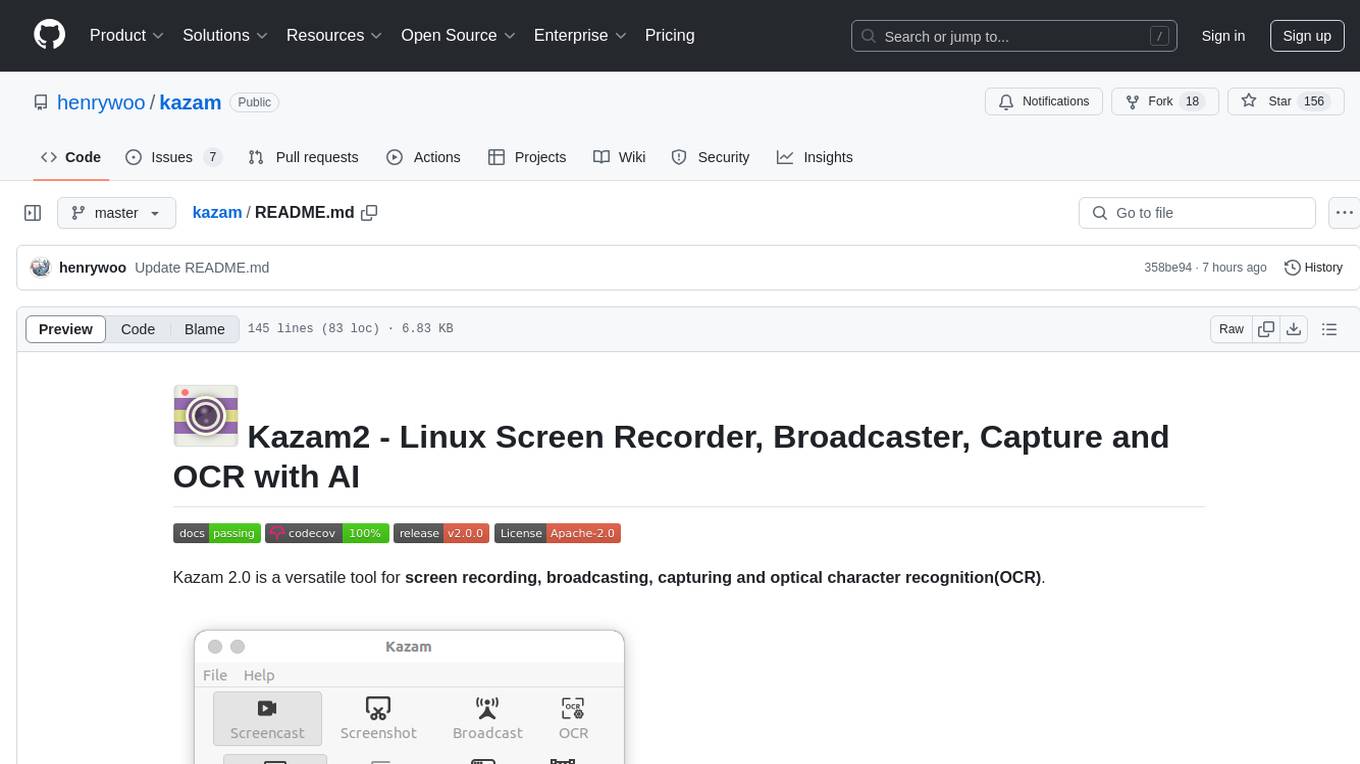

kazam

Kazam 2.0 is a versatile tool for screen recording, broadcasting, capturing, and optical character recognition (OCR). It allows users to capture screen content, broadcast live over the internet, extract text from captured content, record audio, and use a web camera for recording. The tool supports full screen, window, and area modes, and offers features like keyboard shortcuts, live broadcasting with Twitch and YouTube, and tips for recording quality. Users can install Kazam on Ubuntu and use it for various recording and broadcasting needs.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

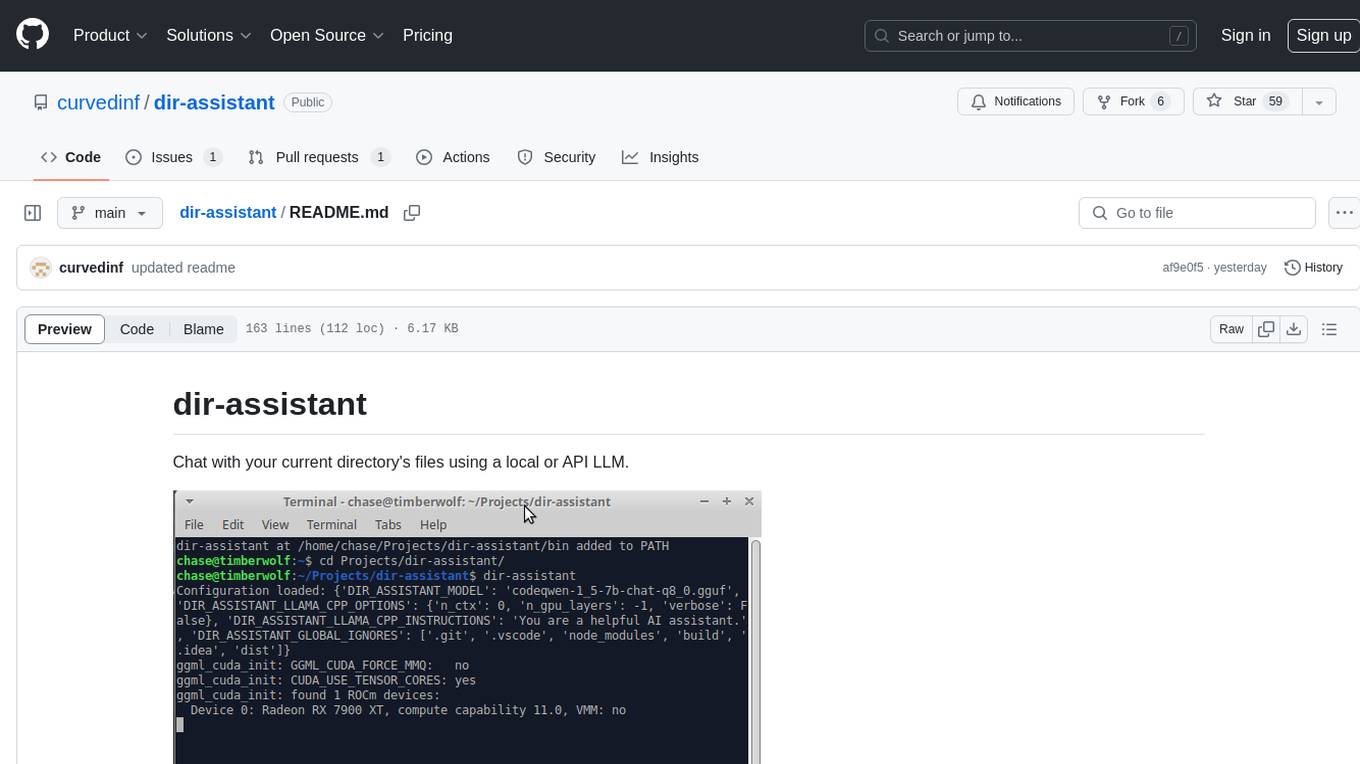

dir-assistant

Dir-assistant is a tool that allows users to interact with their current directory's files using local or API Language Models (LLMs). It supports various platforms and provides API support for major LLM APIs. Users can configure and customize their local LLMs and API LLMs using the tool. Dir-assistant also supports model downloads and configurations for efficient usage. It is designed to enhance file interaction and retrieval using advanced language models.

llm

LLM is a CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine. It allows users to run prompts from the command-line, store results in SQLite, generate embeddings, and more. The tool supports self-hosted language models via plugins and provides access to remote and local models. Users can install plugins to access models by different providers, including models that can be installed and run on their own device. LLM offers various options for running Mistral models in the terminal and enables users to start chat sessions with models. Additionally, users can use a system prompt to provide instructions for processing input to the tool.

For similar tasks


AIHub

AIHub is a client that integrates the capabilities of multiple large models, allowing users to quickly and easily build their own personalized AI assistants. It supports custom plugins for endless possibilities. The tool provides powerful AI capabilities, rich configuration options, customization of AI assistants for text and image conversations, AI drawing, installation of custom plugins, personal knowledge base building, AI calendar generation, support for AI mini programs, and ongoing development of additional features. Users can download the application package from the release section, resolve issues related to macOS app installation, and contribute ideas by submitting issues. The project development involves installation, development, and building processes for different operating systems.

neuron-ai

Neuron AI is a PHP framework that provides an Agent class for creating fully functional agents to perform tasks like analyzing text for SEO optimization. The framework manages advanced mechanisms such as memory, tools, and function calls. Users can extend the Agent class to create custom agents and interact with them to get responses based on the underlying LLM. Neuron AI aims to simplify the development of AI-powered applications by offering a structured framework with documentation and guidelines for contributions under the MIT license.

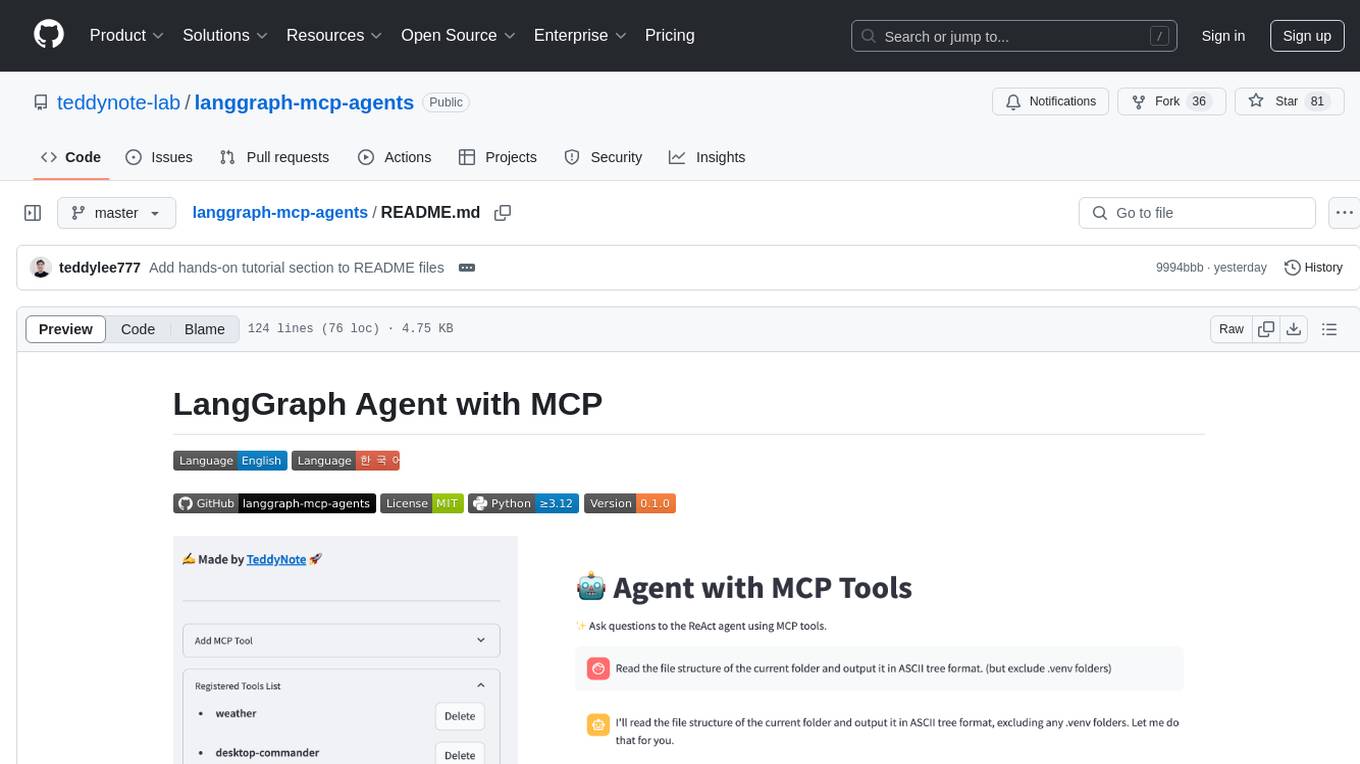

langgraph-mcp-agents

LangGraph Agent with MCP is a toolkit provided by LangChain AI that enables AI agents to interact with external tools and data sources through the Model Context Protocol (MCP). It offers a user-friendly interface for deploying ReAct agents to access various data sources and APIs through MCP tools. The toolkit includes features such as a Streamlit Interface for interaction, Tool Management for adding and configuring MCP tools dynamically, Streaming Responses in real-time, and Conversation History tracking.

Cerebr

Cerebr is an intelligent AI assistant browser extension designed to enhance work efficiency and learning experience. It integrates powerful AI capabilities from various sources to provide features such as smart sidebar, multiple API support, cross-browser API configuration synchronization, comprehensive Q&A support, elegant rendering, real-time response, theme switching, and more. With a minimalist design and focus on delivering a seamless, distraction-free browsing experience, Cerebr aims to be your second brain for deep reading and understanding.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.